TL;DR#

Motion in-betweening, crucial for animation, traditionally demands character-specific datasets. Existing methods fall short when data is scarce. This work addresses this limitation by introducing AnyMoLe, a method leveraging video diffusion models. AnyMoLe generates motion in-between frames for diverse characters without external data, overcoming the dependence on extensive character-specific datasets.

AnyMoLe employs a two-stage frame generation to enhance contextual understanding and introduces ICAdapt, a fine-tuning technique to bridge the gap between real-world and rendered character animations. Additionally, a “motion-video mimicking” optimization enables seamless motion generation for characters with arbitrary joint structures using 2D and 3D-aware features. This drastically reduces data dependency while maintaining realistic transitions.

Key Takeaways#

Why does it matter?#

This paper introduces a novel motion in-betweening technique applicable to diverse characters without extensive data. It overcomes data scarcity issues, offering new avenues for 3D character animation and video generation research by reducing reliance on manual processes and enabling more creativity.

Visual Insights#

🔼 This figure demonstrates the core capability of AnyMoLe. It shows how the system generates smooth and realistic intermediate motion frames between given context frames (surrounding the desired motion) and keyframes (defining the start and end poses). Importantly, this is achieved without the need for any character-specific training data, unlike previous methods. The figure visually highlights the input context frames and keyframes, and the output in-between frames generated by AnyMoLe.

read the caption

Figure 1: AnyMoLe generates in-between motion from context frames and keyframes without requiring external training data.

| Methods | Characters | Training data | Output |

|---|---|---|---|

| AnyMoLe | Arbitrary | None | 3D motion |

| TS [33] | Human | 3D motion Dataset | 3D motion |

| Deciwatch [53] | Human | video-3D poses Dataset | 3D poses |

🔼 This table compares AnyMoLe with other motion in-betweening methods, highlighting key differences in terms of the types of characters supported, the amount of training data required, and the type of output generated. AnyMoLe stands out by supporting arbitrary characters without needing external training data and producing 3D motion sequences.

read the caption

Table 1: Difference between AnyMoLe and baseline methods.

In-depth insights#

No Data In-Between#

The concept of ‘No Data In-Between’ is intriguing, suggesting a scenario where traditional interpolation or in-betweening techniques fail due to a lack of sufficient or reliable data points connecting the keyframes. This absence of intermediate information presents a significant challenge, particularly in domains like motion synthesis or animation. Overcoming this requires innovative approaches that go beyond simple linear or spline-based interpolation. The method must be able to infer plausible and coherent transitions even when there’s a considerable gap or discontinuity in the available data. Approaches to this challenge might involve leveraging prior knowledge, learning from related datasets, or using generative models to synthesize plausible intermediate states. The key is to move beyond merely connecting the dots and instead, creating a believable and contextually appropriate transition in the absence of explicit guidance.

Contextual Bridge#

The idea of a “Contextual Bridge” in the context of motion in-betweening with video diffusion models is fascinating. It highlights the need to seamlessly connect disparate elements, ensuring smooth transitions between keyframes. Such a bridge would address the inherent limitations of standard interpolation techniques, which often lack awareness of the broader scene dynamics. A good Contextual Bridge can involve domain adaptation techniques to reduce the gap between real-world video datasets and rendered scenes, which is seen in AnyMoLe with their ICAdapt. Also, it can be achieved with two stage video generation to guarantee the quality of transitions with fine tuning. Therefore, the successful motion in-betweening relies on well designed Contextual Bridge in all aspects of the model.

Mimicking Motion#

Motion mimicking aims to transfer movements from a source (e.g., video) to a target character or animation. The core idea is to have the target replicate the motion seen in the source, but with its own unique morphology and constraints. This often involves pose estimation, extracting skeletal data from the source and then retargeting it onto the target character. Challenges include handling differences in body proportions, joint structures, and physical capabilities. Techniques like inverse kinematics and motion capture data processing are crucial, as is dealing with potential data scarcity. Advanced methods use optimization techniques to ensure that the retargeted motion looks natural and avoids physically impossible poses. The goal is to create realistic and believable animation without manual keyframing.

Joint Estimation#

Joint estimation, especially in the context of character animation and motion in-betweening, is a critical and complex undertaking. The key idea behind joint estimation in character animation revolves around simultaneously estimating multiple parameters. In this context, it often involves estimating the 3D poses or joint angles of a character, given some form of input data such as images, videos, or sparse keyframes. Estimating these parameters jointly helps in enforcing consistency and coherence in the estimated motion, leading to more realistic and plausible animations. The challenges often include dealing with high-dimensional parameter spaces, noisy or incomplete input data, and the need for real-time performance. Incorporating prior knowledge about the character’s anatomy and motion dynamics can significantly improve the accuracy and robustness of joint estimation methods.

Beyond MOCAP#

The phrase “Beyond MOCAP” suggests a move past traditional motion capture techniques, implying exploration of alternative methods for creating realistic character animation. This could involve procedural animation, leveraging AI and machine learning, or using video diffusion models to generate plausible movements, even for characters difficult or impossible to capture with MOCAP. These methods aim to reduce reliance on expensive equipment, specialized environments and human actors. It could signify generating novel or fantastical movements beyond the scope of human motion, opening doors to new creative expression, and focusing on generating character-specific animations without the need for large, tailored datasets. Challenges include ensuring motion remains realistic and plausible, controlling the animation process, and bridging the gap between real-world motion and synthetic generation.

More visual insights#

More on figures

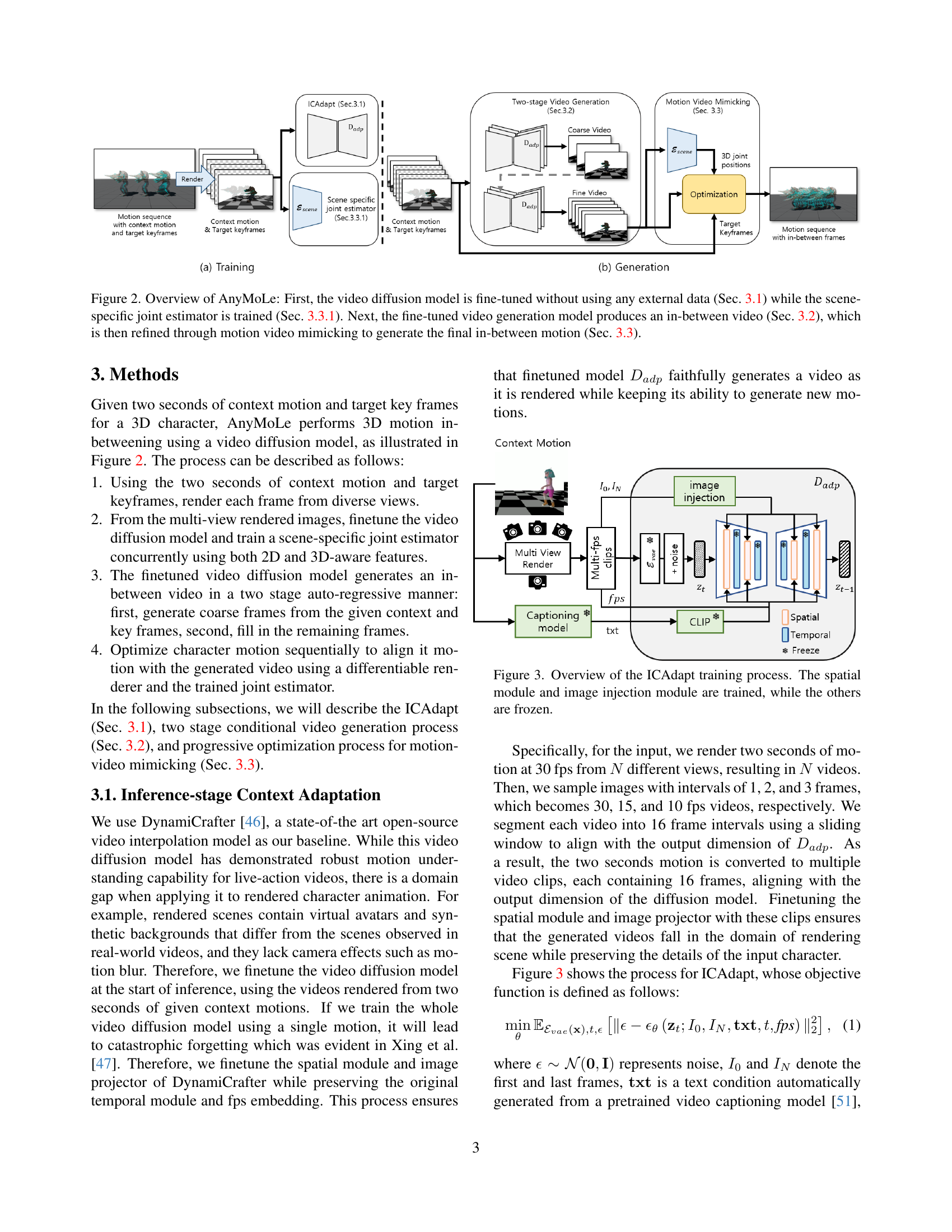

🔼 This figure illustrates the AnyMoLe framework’s three main stages. Initially, a video diffusion model is fine-tuned using only two seconds of context motion from the target character, and concurrently, a scene-specific joint estimator is trained. No external datasets are required for this initial fine-tuning stage. Second, the fine-tuned model generates an in-between video in two stages: coarse frame generation followed by refinement to fill in details. Third, the generated video is processed using motion video mimicking to optimize the character’s 3D motion and generate a final, smooth in-between motion sequence that closely matches the target keyframes.

read the caption

Figure 2: Overview of AnyMoLe: First, the video diffusion model is fine-tuned without using any external data (Sec. 3.1) while the scene-specific joint estimator is trained (Sec. 3.3.1). Next, the fine-tuned video generation model produces an in-between video (Sec. 3.2), which is then refined through motion video mimicking to generate the final in-between motion (Sec. 3.3).

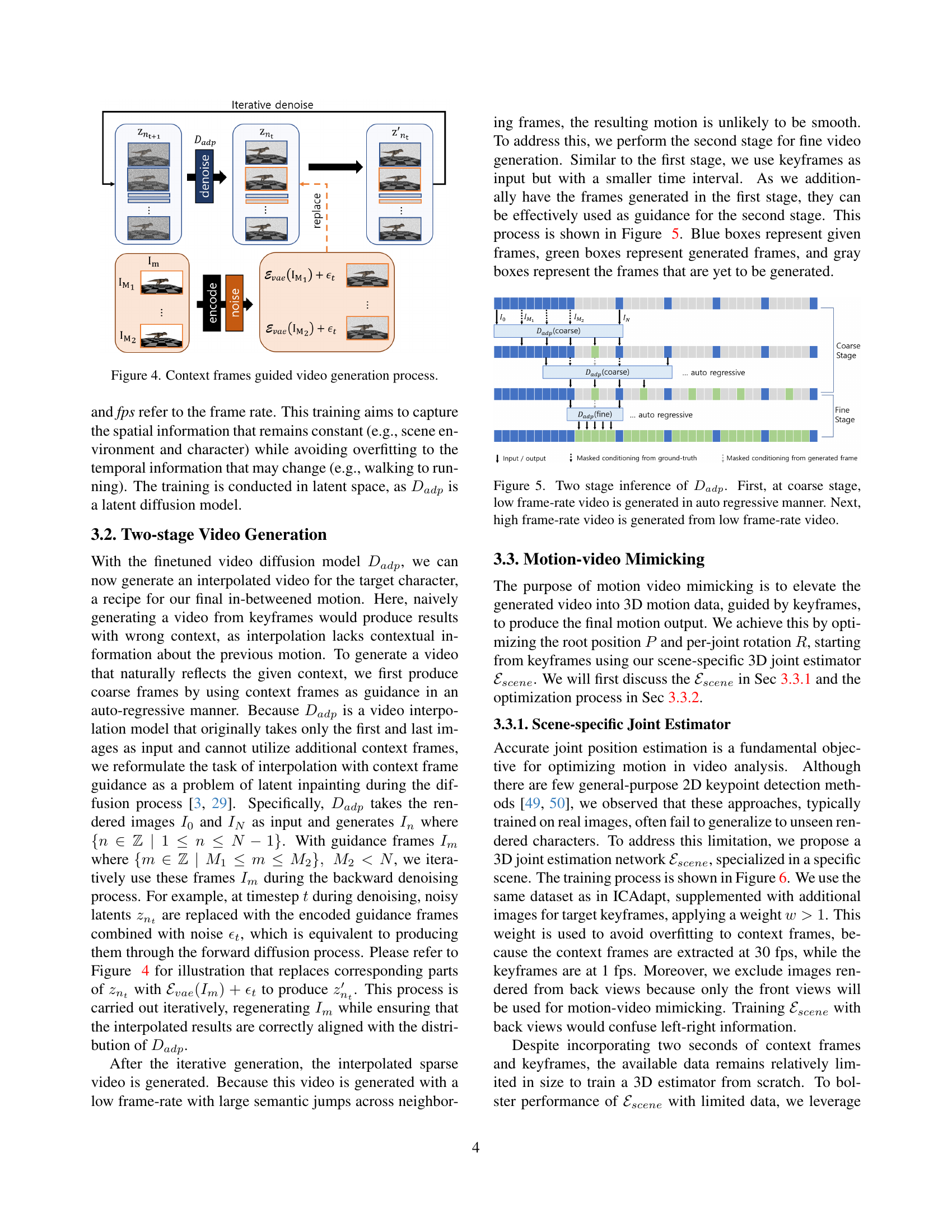

🔼 The figure illustrates the ICAdapt training process, a crucial component in AnyMoLe’s motion in-betweening method. ICAdapt addresses the domain gap between real-world and rendered video data by fine-tuning the video diffusion model. The process focuses on adapting the model to rendered character animations without disrupting its ability to generate realistic motions. Specifically, only the spatial module and image injection module of the DynamiCrafter video diffusion model are fine-tuned using short (2-second) clips of rendered context motion, ensuring that the model accurately represents the virtual characters’ appearance while preserving its learned motion dynamics. The temporal module and other components remain frozen during this fine-tuning phase.

read the caption

Figure 3: Overview of the ICAdapt training process. The spatial module and image injection module are trained, while the others are frozen.

🔼 This figure illustrates the two-stage video generation process within AnyMoLe. The first stage generates sparse frames to establish the motion structure using context frames as guidance within a latent inpainting framework. The second stage then refines these sparse frames by generating dense frames to fill in the details, leveraging the previously generated sparse frames as guidance. This two-stage approach ensures smooth and contextually aware video generation. The process involves iteratively denoising latent representations, replacing noisy parts with encoded guidance frames to incorporate contextual information.

read the caption

Figure 4: Context frames guided video generation process.

🔼 This figure illustrates the two-stage video generation process used in AnyMoLe. The first stage uses the video diffusion model (Dadp) to generate a low frame-rate video autoregressively, meaning it predicts frames sequentially based on previous frames and the input keyframes. This initial video establishes the overall motion structure. The second stage then takes this low frame-rate video, along with the keyframes, as input and uses Dadp to generate a higher frame-rate video, refining the detail and smoothness of the motion. The goal is to produce a more realistic and visually appealing motion sequence.

read the caption

Figure 5: Two stage inference of Dadpsubscript𝐷𝑎𝑑𝑝D_{adp}italic_D start_POSTSUBSCRIPT italic_a italic_d italic_p end_POSTSUBSCRIPT. First, at coarse stage, low frame-rate video is generated in auto regressive manner. Next, high frame-rate video is generated from low frame-rate video.

🔼 This figure details the training process for the scene-specific joint estimator, a key component of AnyMoLe. It starts by taking multi-view rendered images of the character’s motion as input. These images are processed by DINOv2, which extracts 2D semantic features, and FiT3D, which adds 3D structural information. These features are combined, decoded into heatmaps to estimate 2D joint positions, and then passed through a depth MLP to predict the depth of each joint, giving a 3D joint position. The training objective uses a Mean Squared Error (MSE) loss function to compare predicted 3D joints to ground truth 3D joints calculated from context frames and keyframes, aiming for precise 3D joint estimation.

read the caption

Figure 6: Overview of joint estimator training process.

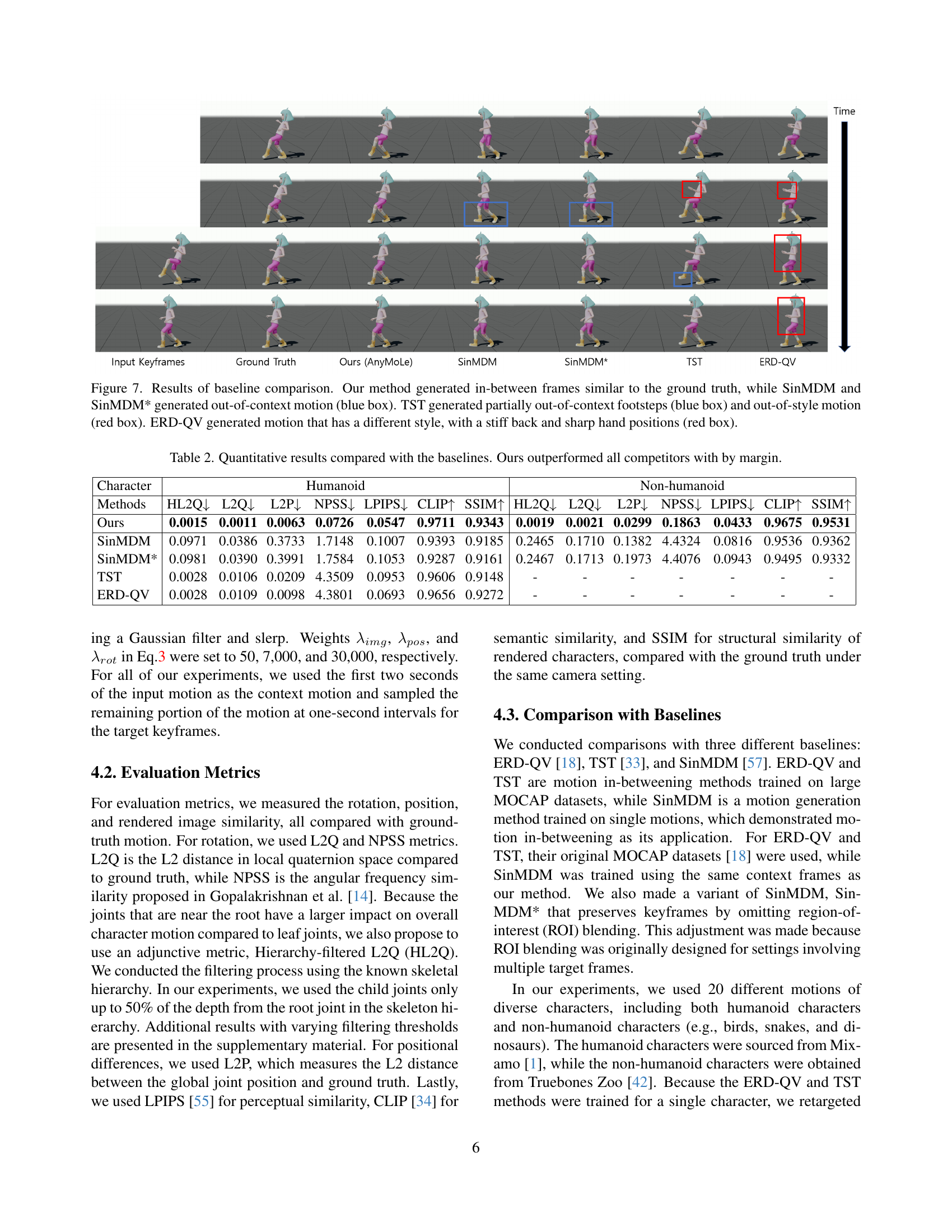

🔼 Figure 7 presents a comparison of motion in-betweening results between AnyMoLe and three baseline methods: SinMDM, SinMDM*, TST, and ERD-QV. The figure visually demonstrates the in-between frames generated by each method for the same input keyframes. AnyMoLe’s output closely matches the ground truth, showing smooth and natural transitions. In contrast, SinMDM and SinMDM* produce frames with significant motion errors, indicated by the blue boxes, which highlight portions of the generated motion that are clearly out of context. TST displays both partially out-of-context movements (blue box), such as illogical foot placement, and stylistic inconsistencies (red box), where the style of the generated motion differs from the original style of the keyframes. ERD-QV generates motions with a distinctly different style than the ground truth, characterized by a stiff back posture and exaggerated hand movements (red box). These visual differences highlight AnyMoLe’s superiority in generating realistic and contextually appropriate in-between frames compared to the baseline methods.

read the caption

Figure 7: Results of baseline comparison. Our method generated in-between frames similar to the ground truth, while SinMDM and SinMDM* generated out-of-context motion (blue box). TST generated partially out-of-context footsteps (blue box) and out-of-style motion (red box). ERD-QV generated motion that has a different style, with a stiff back and sharp hand positions (red box).

🔼 This ablation study analyzes the impact of key components in AnyMoLe’s video generation process. The leftmost column shows the input keyframes. The next three columns show the results of AnyMoLe’s video generation under different conditions: (1) the full model, (2) the model without ICAdapt (Inference-stage Context Adaptation), and (3) the model without the fine-stage video generation. The results demonstrate that using ICAdapt leads to consistent styles, while the fine stage improves frame rate and smoothness. Without ICAdapt, the generated video shows significant style inconsistencies, highlighted by a blue box indicating a noticeable style shift. Omitting the fine stage results in a low frame rate, causing frames to be nearly identical or to exhibit large jumps between frames.

read the caption

Figure 8: Ablation results on video generation. Without applying ICAdapt, each frame of the video exhibited inconsistencies, such as generating noticeable style shifts (blue box). Omitting the fine-stage process resulted in a low frame rate, making identical or significant jumps between frames.

🔼 Figure 9 demonstrates AnyMoLe’s capacity to generate intermediate frames for scenes involving multiple objects. It highlights the model’s ability to comprehend contextual relationships between objects and produce realistic and fluid movements, going beyond simple character animation to more complex multi-object interactions. The example shown illustrates two bouncing balls, showcasing the model’s extrapolation to multi-object scenarios.

read the caption

Figure 9: From understanding of the context, AnyMoLe can naturally generate in-between frames in a multi-object scenario.

More on tables

| Character | Humanoid | Non-humanoid | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Methods | HL2Q | L2Q | L2P | NPSS | LPIPS | CLIP | SSIM | HL2Q | L2Q | L2P | NPSS | LPIPS | CLIP | SSIM |

| Ours | 0.0015 | 0.0011 | 0.0063 | 0.0726 | 0.0547 | 0.9711 | 0.9343 | 0.0019 | 0.0021 | 0.0299 | 0.1863 | 0.0433 | 0.9675 | 0.9531 |

| SinMDM | 0.0971 | 0.0386 | 0.3733 | 1.7148 | 0.1007 | 0.9393 | 0.9185 | 0.2465 | 0.1710 | 0.1382 | 4.4324 | 0.0816 | 0.9536 | 0.9362 |

| SinMDM* | 0.0981 | 0.0390 | 0.3991 | 1.7584 | 0.1053 | 0.9287 | 0.9161 | 0.2467 | 0.1713 | 0.1973 | 4.4076 | 0.0943 | 0.9495 | 0.9332 |

| TST | 0.0028 | 0.0106 | 0.0209 | 4.3509 | 0.0953 | 0.9606 | 0.9148 | - | - | - | - | - | - | - |

| ERD-QV | 0.0028 | 0.0109 | 0.0098 | 4.3801 | 0.0693 | 0.9656 | 0.9272 | - | - | - | - | - | - | - |

🔼 This table presents a quantitative comparison of the proposed AnyMoLe method against several baseline methods for motion in-betweening. The comparison is done using various metrics, including L2 distance for quaternion and position (L2Q and L2P), Normalized Pose Similarity Score (NPSS), Learned Perceptual Image Patch Similarity (LPIPS), CLIP score, and Structural Similarity Index (SSIM). The results are broken down for humanoid and non-humanoid characters, showing that AnyMoLe significantly outperforms all baseline methods across all metrics and character types.

read the caption

Table 2: Quantitative results compared with the baselines. Ours outperformed all competitors with by margin.

| Methods | HL2Q | L2Q | L2P | NPSS | LPIPS | CLIP | SSIM |

|---|---|---|---|---|---|---|---|

| Ours | 0.00169 | 0.00158 | 0.01811 | 0.1295 | 0.04901 | 0.9693 | 0.9437 |

| w/o ICAdapt | 0.00210 | 0.00181 | 0.02640 | 0.1413 | 0.07115 | 0.9619 | 0.9340 |

| w/o fine stage | 0.00200 | 0.00171 | 0.02204 | 0.1345 | 0.06037 | 0.9653 | 0.9385 |

| w XPose | 0.00174 | 0.00167 | 0.01976 | 0.1288 | 0.06004 | 0.9678 | 0.9421 |

| w/o data select | 0.00799 | 0.00421 | 0.15460 | 0.2434 | 0.12735 | 0.9061 | 0.9211 |

🔼 This table presents a quantitative analysis of the ablation study conducted on the AnyMoLe model. It shows the impact of removing key components of the model, specifically the ICAdapt module, the fine-stage generation process, and the selection of training data. The results are measured using several metrics (HL2Q, L2Q, L2P, NPSS, LPIPS, CLIP, SSIM) to assess the quality of the generated motion across different configurations.

read the caption

Table 3: Quantitative results of ablation study.

| Character | Humanoid | Non-humanoid | ||||

|---|---|---|---|---|---|---|

| Methods | Similar | Faithful | Natural | Similar | Faithful | Natural |

| Ours | 60.12 | 63.10 | 64.88 | 90.48 | 92.46 | 91.67 |

| SinMDM | 13.10 | 14.29 | 7.74 | 3.97 | 3.17 | 3.57 |

| SinMDM* | 5.36 | 4.17 | 5.95 | 5.56 | 4.37 | 4.76 |

| TST | 16.07 | 12.50 | 16.67 | - | - | - |

| ERD-QV | 5.36 | 5.95 | 4.76 | - | - | - |

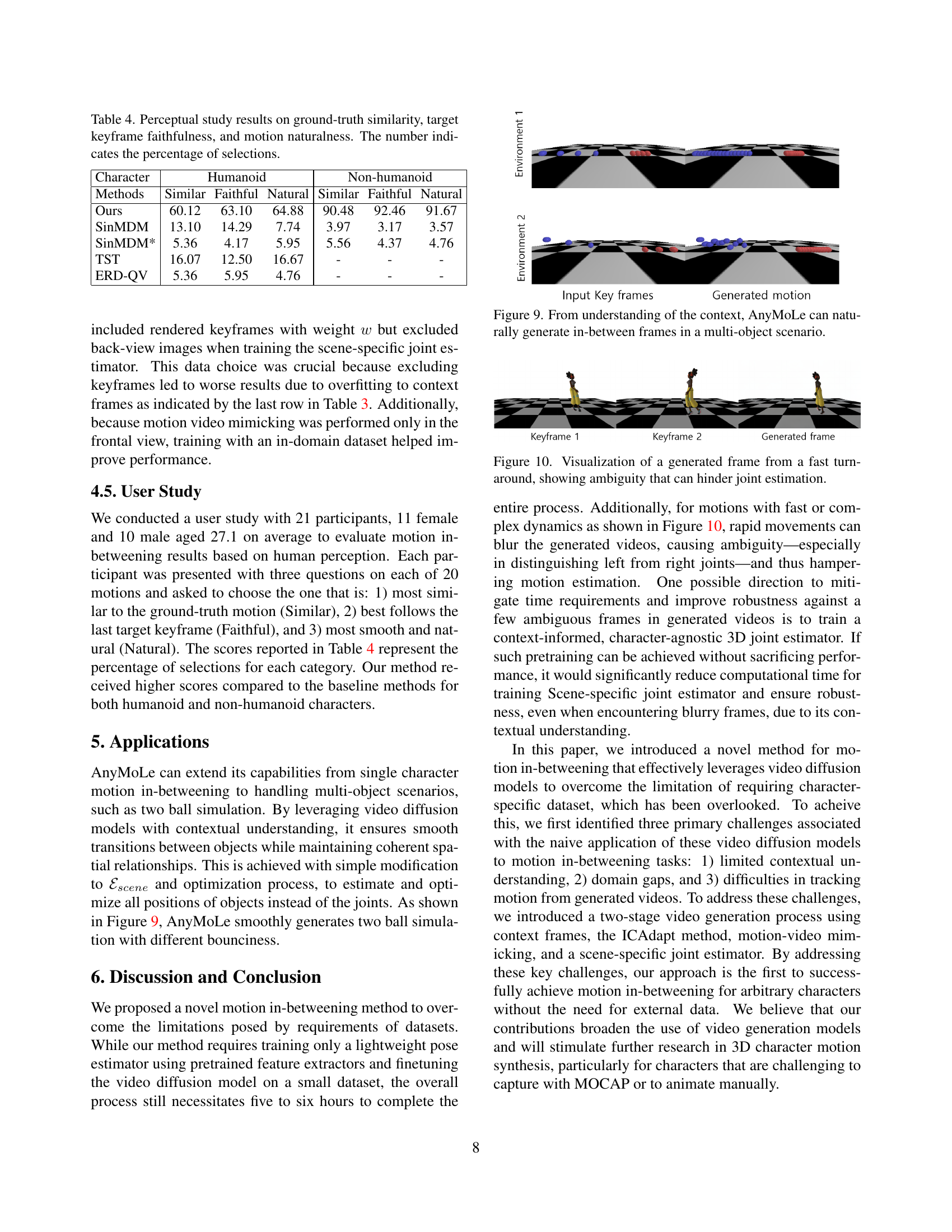

🔼 This table presents the results of a user study evaluating the perceived quality of motion in-betweening generated by AnyMoLe and several baseline methods. Three metrics were assessed: similarity to the ground truth motion, faithfulness to the target keyframes, and naturalness of the motion. For each metric, participants chose the method they perceived as best among AnyMoLe and the baselines (SinMDM, SinMDM*, TST, ERD-QV). The numbers in the table represent the percentage of participants selecting each method for each metric, providing a quantitative comparison of the user’s perception of the different generated motions. The study included both humanoid and non-humanoid character motions.

read the caption

Table 4: Perceptual study results on ground-truth similarity, target keyframe faithfulness, and motion naturalness. The number indicates the percentage of selections.

Full paper#