TL;DR#

Automated Speech Recognition (ASR) models like OpenAI’s Whisper have become essential for many applications, including translation and live transcription. However, issues arise due to inaccuracies in transcription along with increased latency and high computational demands. The researchers analyzed Whisper and two of its variants, focusing on their capabilities. Prior research explored methods to enhance Whisper’s performance, but the impact of quantization on model size and latency needed further exploring.

This study addresses the gap by evaluating the capabilities of Whisper and its variants along with defining quantization techniques for Whisper models. In addition, they examined performance of model in terms of word error rate, processing speed, and latency. The research summarizes qualitative and quantitative findings from two experimental evaluations, showing how quantization reduces latency by 19% and model size by 45%, while also preserving transcription accuracy.

Key Takeaways#

Why does it matter?#

This paper is important for researchers because it provides a comprehensive analysis of Whisper models, quantizatization methods, and experimental evaluations. By understanding the practical applications, researchers can better optimize model size, latency, and accuracy of ASR systems, and find deployment opportunities. This information is crucial for developing real-time applications, and improving accessibility.

Visual Insights#

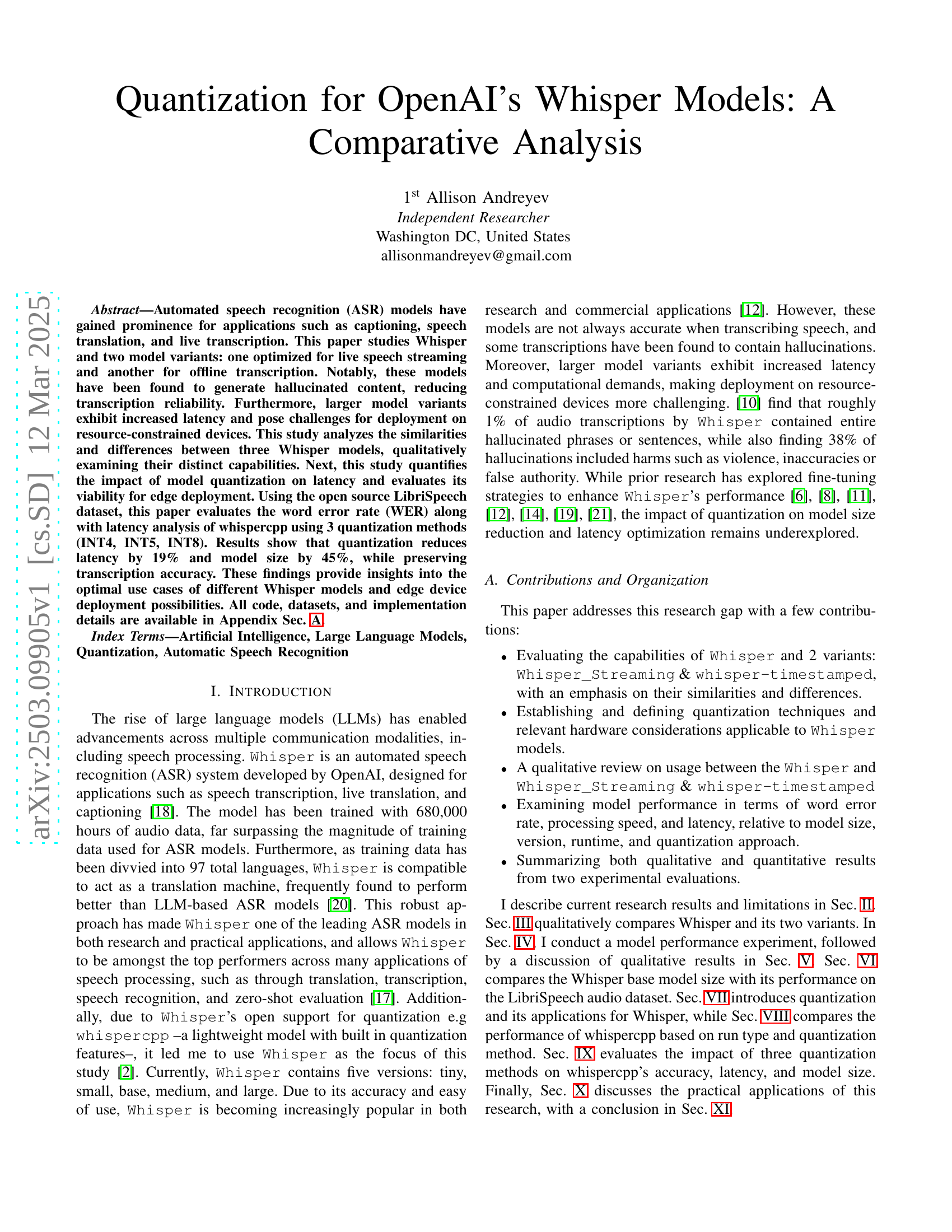

| Model Size | Clean Speech | Challenging Speech |

|---|---|---|

| Tiny | Quick output (¡ 10s), low GPU/CPU usage, inaccuracies with larger text or names, capitalization issues | Misses small background noises, e.g., “They worshiped” only “worship” heard |

| Small | 10-20s output, best capitalization, good timestamp details | Similar to Medium, but 2x faster |

| Medium | 20-40s output, similar accuracy to large model | N/A |

| Large | Long download (2GB), slow processing (up to a couple of minutes), punctuation and capitalization issues | Modifies structure to be grammatically correct while matching audio more closely |

🔼 This table presents a qualitative analysis of the Whisper speech recognition model’s performance across various sizes (tiny, small, medium, large) and speech difficulty levels (clean, challenging). For each model size, it describes observations regarding output speed (time taken for transcription), accuracy of capitalization and punctuation, the presence of any errors in transcription, and overall timestamp accuracy for different speech types.

read the caption

TABLE I: Qualitative Whisper Usage Experience on LibriSpeech Datasets Based on Model Size and Speech Difficulty

In-depth insights#

Quantization Impact#

Quantization’s impact on speech recognition models like Whisper is multifaceted, primarily concerning the trade-offs between model size, computational speed (latency), and transcription accuracy. Quantizing a model, typically by reducing the numerical precision of its weights (e.g., from 32-bit floating-point to 8-bit integer), can significantly shrink the model’s file size. This reduction enables easier deployment on resource-constrained devices like smartphones or embedded systems. Furthermore, smaller models generally exhibit faster inference times, leading to reduced latency in real-time applications like live captioning or speech translation. However, the crucial aspect is ensuring that quantization doesn’t significantly degrade the model’s accuracy. Excessive quantization can lead to information loss, causing a drop in transcription accuracy, measured by metrics like the Word Error Rate (WER). Therefore, the optimal quantization strategy involves finding a balance that maximizes model compression and speed while minimizing accuracy degradation. Certain hardware accelerators, like those found in modern CPUs and GPUs, are designed to efficiently perform computations with quantized models, further enhancing their performance. The viability of different quantization techniques is also dependent on the specific architecture of the ASR, in determining it’s quantization boundaries.

Whisper Variants#

When discussing Whisper variants, it’s crucial to acknowledge that OpenAI offers different versions to cater to diverse needs. These variants might include models optimized for speed (real-time transcription), accuracy (high-fidelity results), or specific hardware constraints (edge deployment). Evaluating trade-offs is important because larger models offer better accuracy but demand more resources. Another crucial factor is the ability to add timestamping. Variants with such features offer granularity but impact model size and processing time. Lastly, the capability to integrate with APIs is also an important consideration, offering opportunities for customization and seamless integration into applications, therefore, developers should consider these factors when choosing a model.

Accuracy Tradeoffs#

Accuracy tradeoffs in speech recognition models, particularly after quantization, are complex. Reducing model size and latency through quantization can impact transcription accuracy, potentially introducing errors or hallucinations. The choice of quantization method (e.g., INT4, INT5, INT8) presents a tradeoff. Lower precision formats (INT4) lead to smaller models and faster inference but may sacrifice accuracy compared to higher precision formats (INT8). The type of speech (clear vs. noisy) also influences accuracy, with challenging speech samples being more prone to errors after quantization. A balance between model size, speed, and accuracy must be considered based on the application’s requirements. For real-time transcription, lower latency may be prioritized over absolute accuracy, whereas for archival purposes, accuracy might be paramount. Fine-tuning strategies and hardware acceleration can help mitigate accuracy loss during quantization and optimize performance for specific use cases.

Hardware Support#

The section on “Hardware Support for Quantization” highlights the crucial role of hardware accelerators in optimizing the efficiency of quantized models, particularly for deployment on resource-limited devices. AMD and ARM CPUs support mixed-precision operations and 8-bit integer quantization, exemplified by AMD Zen 4 and ARM Neoverse architectures. Apple Silicon and NVIDIA GPUs enhance support for 8-bit integer quantization and tensor core optimization. Intel CPUs and Qualcomm GPUs optimize integer quantization and mixed-precision tasks. These hardware capabilities are essential for realizing the benefits of quantization, enabling faster and more efficient execution of AI models on a variety of platforms.

Future ASR Scaling#

Future ASR scaling presents a fascinating landscape, driven by the need for enhanced accuracy, efficiency, and real-time performance. As models like Whisper evolve, optimizing the trade-off between these factors becomes critical. Scaling necessitates exploring advanced quantization techniques beyond INT8, potentially leveraging mixed-precision strategies and hardware accelerators. Furthermore, distributed training and inference could enable handling larger datasets and more complex models. Future research should also focus on robustness to diverse acoustic environments and accents, along with addressing the challenge of hallucinations. Exploring novel architectures, such as attention mechanisms or transformers, may unlock further performance gains. Ultimately, the success of future ASR scaling hinges on a holistic approach that considers both algorithmic advancements and hardware optimization to make ASR accessible.

More visual insights#

More on tables

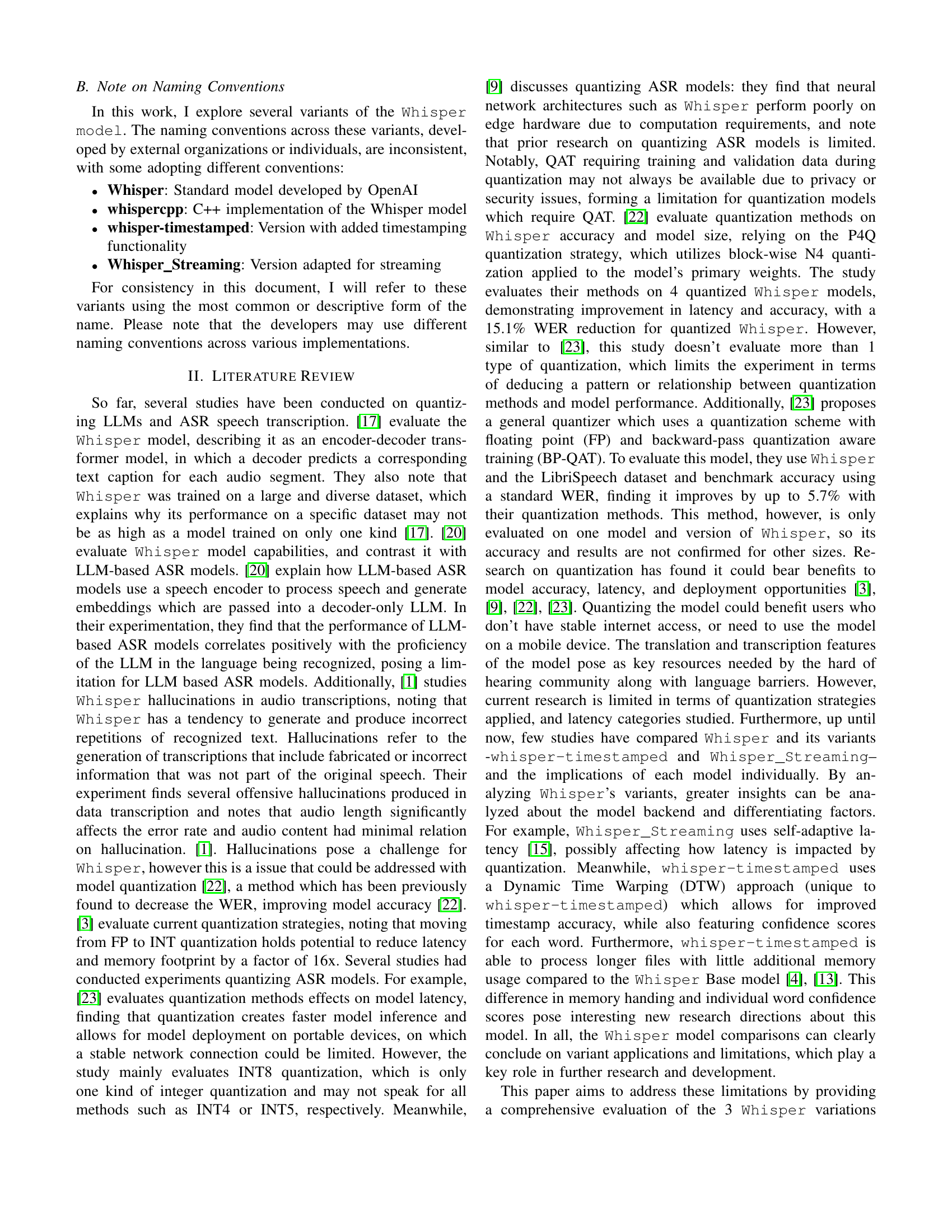

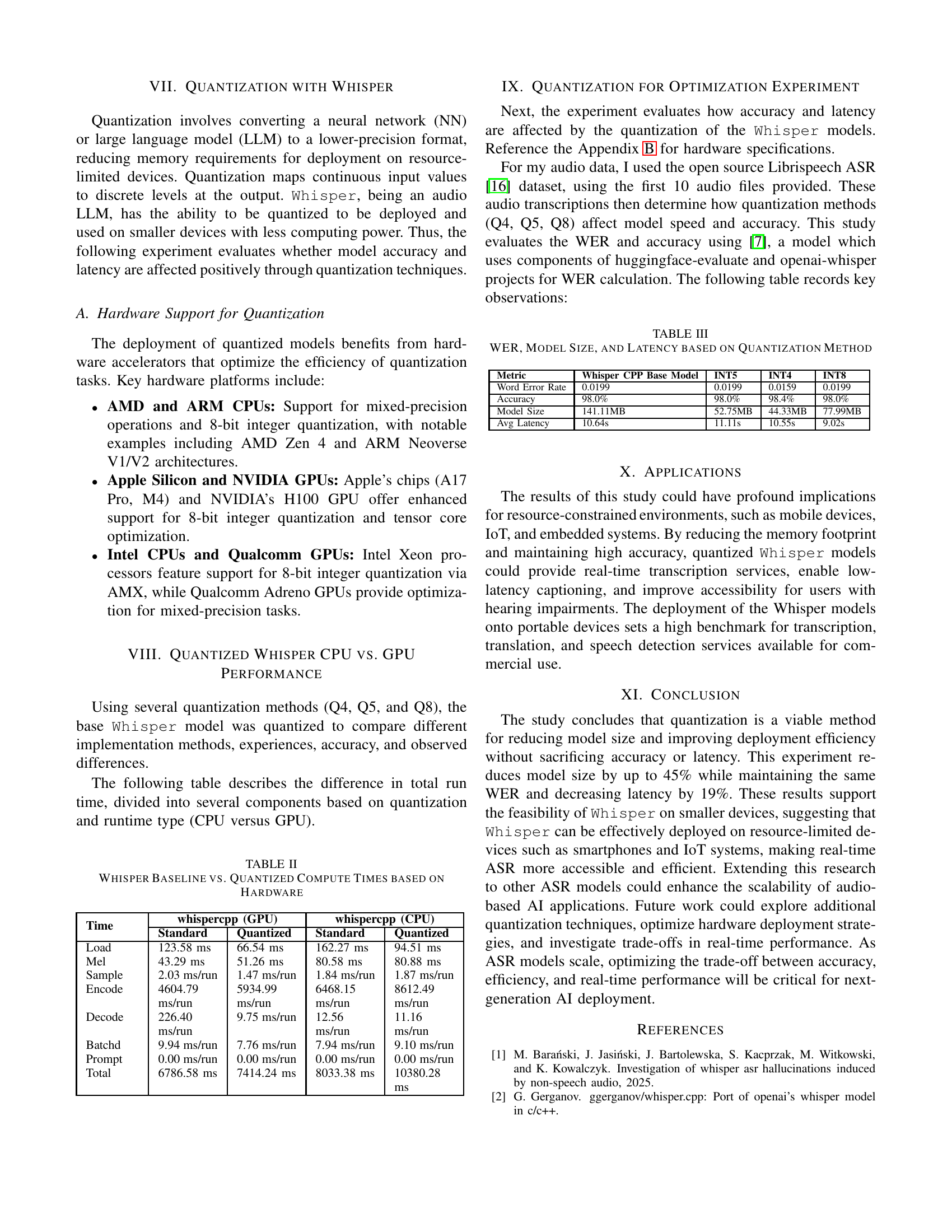

| Time | whispercpp (GPU) | whispercpp (CPU) | ||

|---|---|---|---|---|

| Standard | Quantized | Standard | Quantized | |

| Load | 123.58 ms | 66.54 ms | 162.27 ms | 94.51 ms |

| Mel | 43.29 ms | 51.26 ms | 80.58 ms | 80.88 ms |

| Sample | 2.03 ms/run | 1.47 ms/run | 1.84 ms/run | 1.87 ms/run |

| Encode | 4604.79 ms/run | 5934.99 ms/run | 6468.15 ms/run | 8612.49 ms/run |

| Decode | 226.40 ms/run | 9.75 ms/run | 12.56 ms/run | 11.16 ms/run |

| Batchd | 9.94 ms/run | 7.76 ms/run | 7.94 ms/run | 9.10 ms/run |

| Prompt | 0.00 ms/run | 0.00 ms/run | 0.00 ms/run | 0.00 ms/run |

| Total | 6786.58 ms | 7414.24 ms | 8033.38 ms | 10380.28 ms |

🔼 This table presents a comparison of computation times for the baseline Whisper model and its quantized versions, broken down by hardware (CPU vs. GPU) and processing stage (load, Mel, sample, encode, decode, batch, prompt). It shows the impact of quantization on different parts of the Whisper processing pipeline.

read the caption

TABLE II: Whisper Baseline vs. Quantized Compute Times based on Hardware

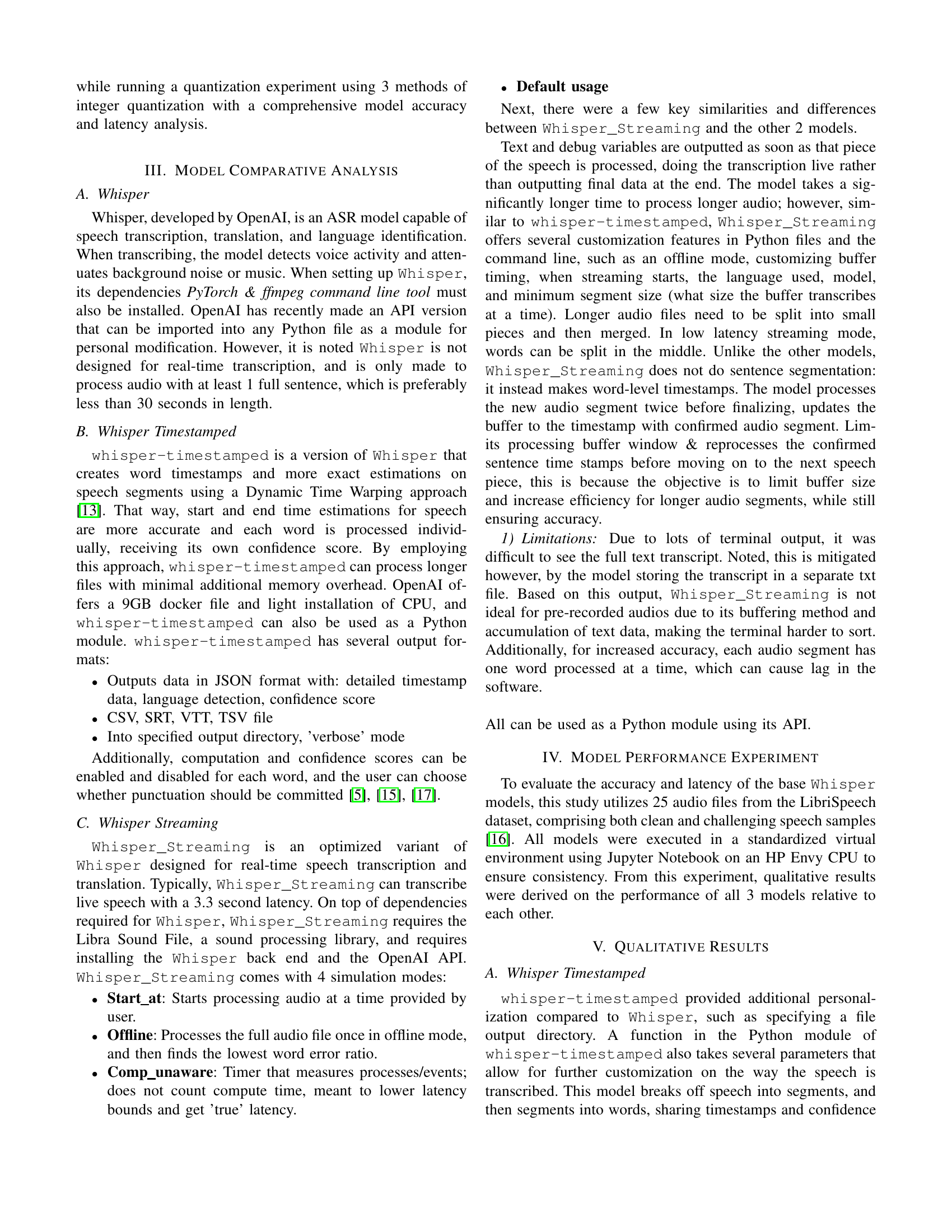

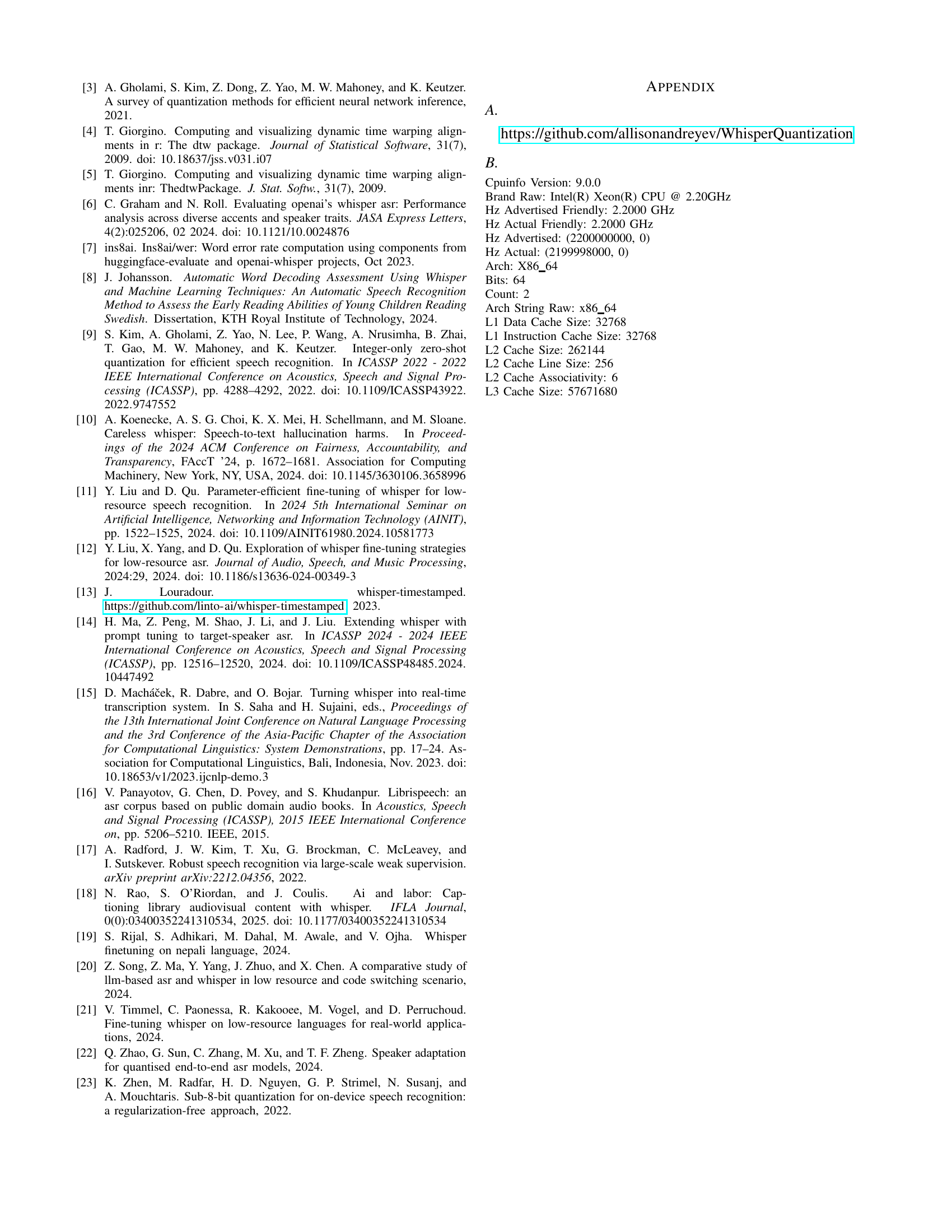

| Metric | Whisper CPP Base Model | INT5 | INT4 | INT8 |

| Word Error Rate | 0.0199 | 0.0199 | 0.0159 | 0.0199 |

| Accuracy | 98.0% | 98.0% | 98.4% | 98.0% |

| Model Size | 141.11MB | 52.75MB | 44.33MB | 77.99MB |

| Avg Latency | 10.64s | 11.11s | 10.55s | 9.02s |

🔼 This table presents a comparison of Word Error Rate (WER), model size, and average latency for different quantization methods applied to the Whisper speech recognition model. It shows how using INT4, INT5, and INT8 quantization affects the model’s performance and resource requirements. The baseline, unquantized model’s results are also included for comparison.

read the caption

TABLE III: WER, Model Size, and Latency based on Quantization Method

Full paper#