TL;DR#

Video-LLMs are advancing, but existing benchmarks mainly test object identification, overlooking their ability to reason about space, time, and causality. Models often rely on pre-trained associations rather than truly understanding how objects interact. This makes it difficult to assess if they truly “understand” videos, leading to inconsistencies. To address this, researchers introduce a new benchmark to evaluate Video-LLMs.

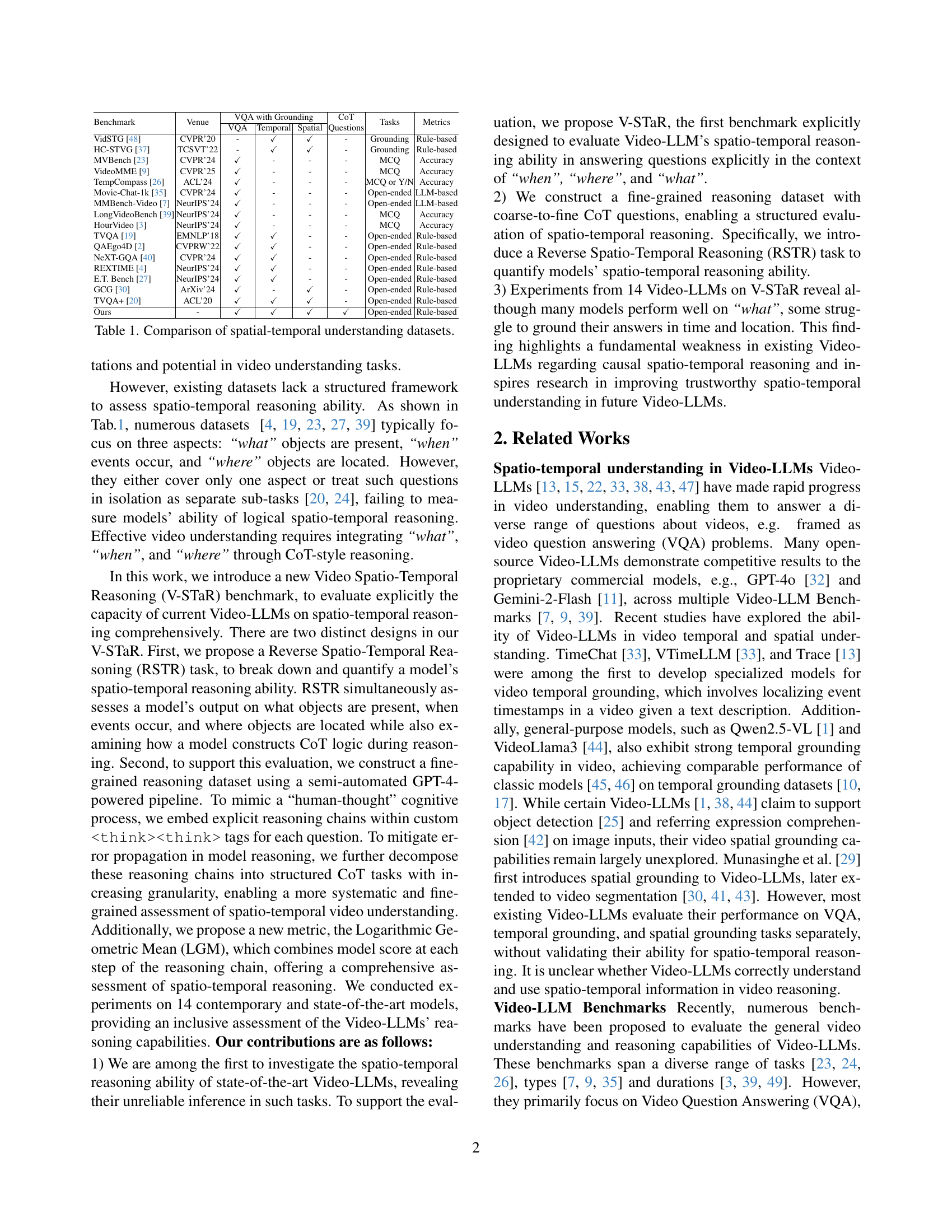

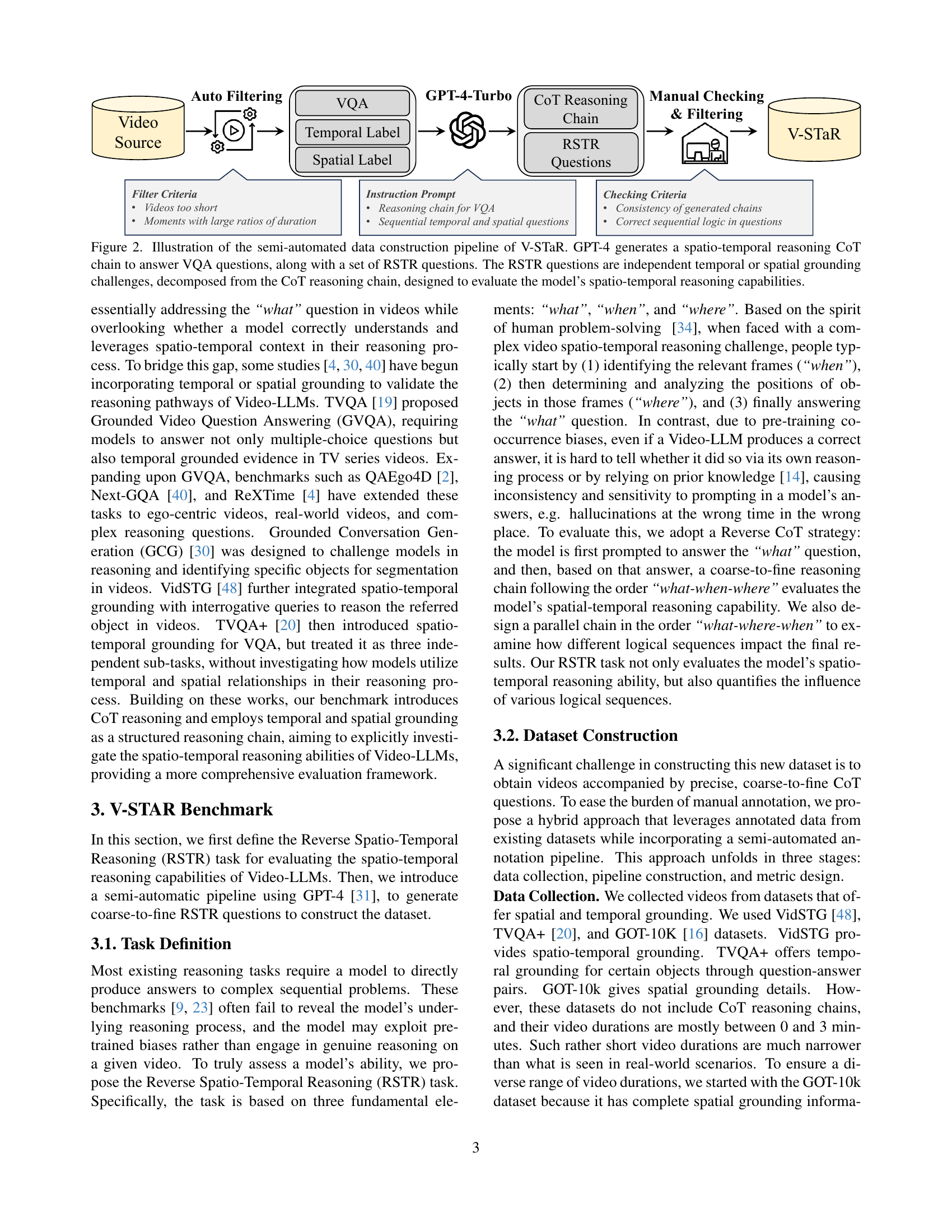

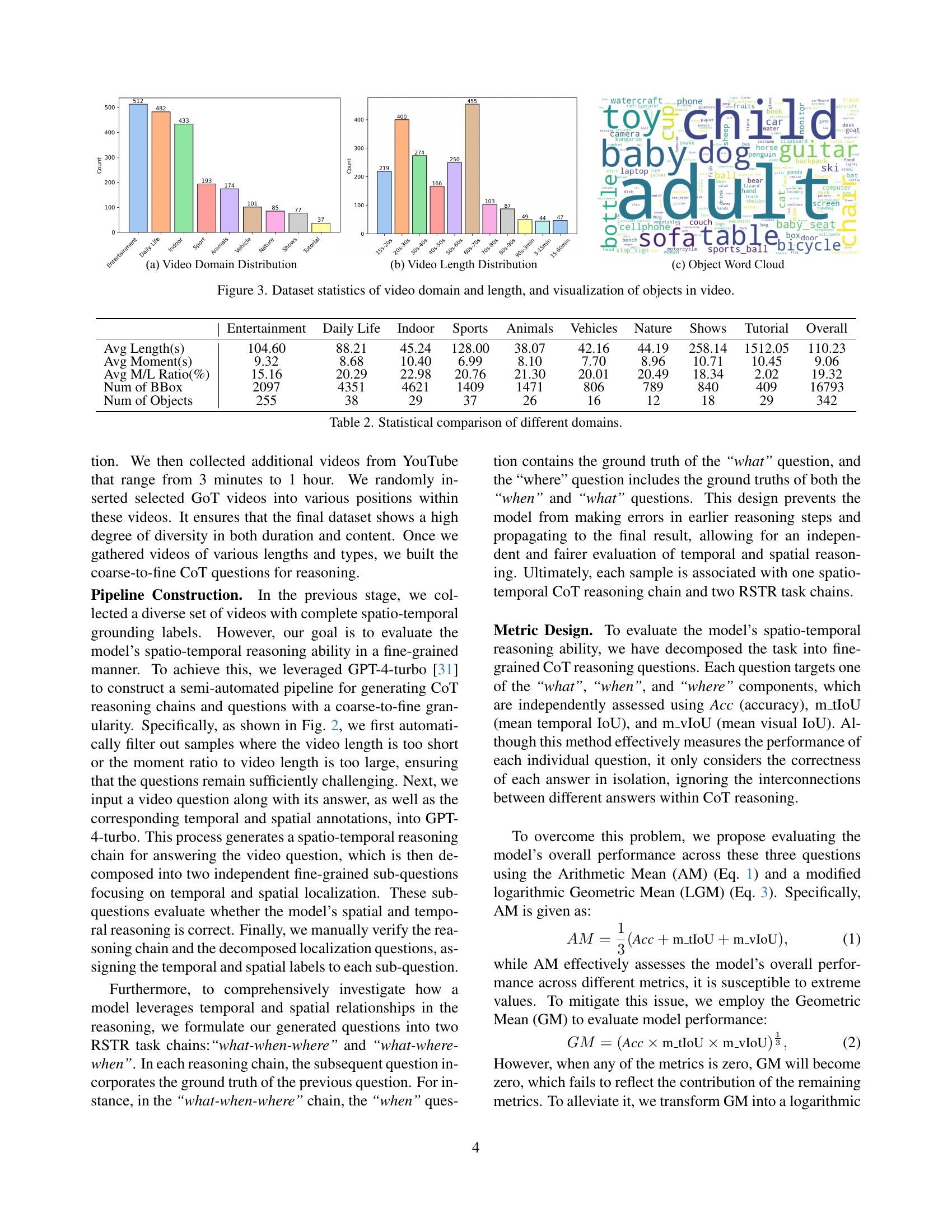

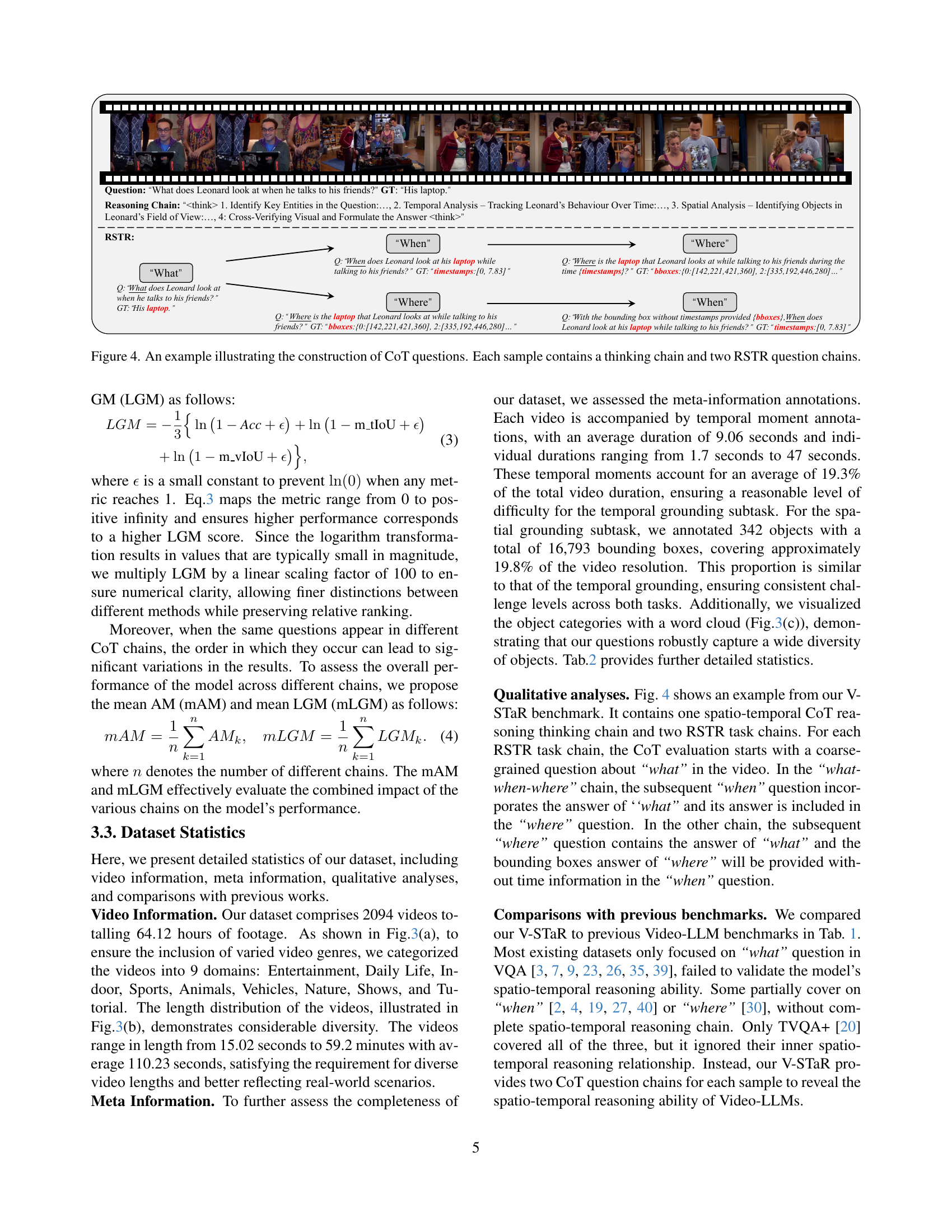

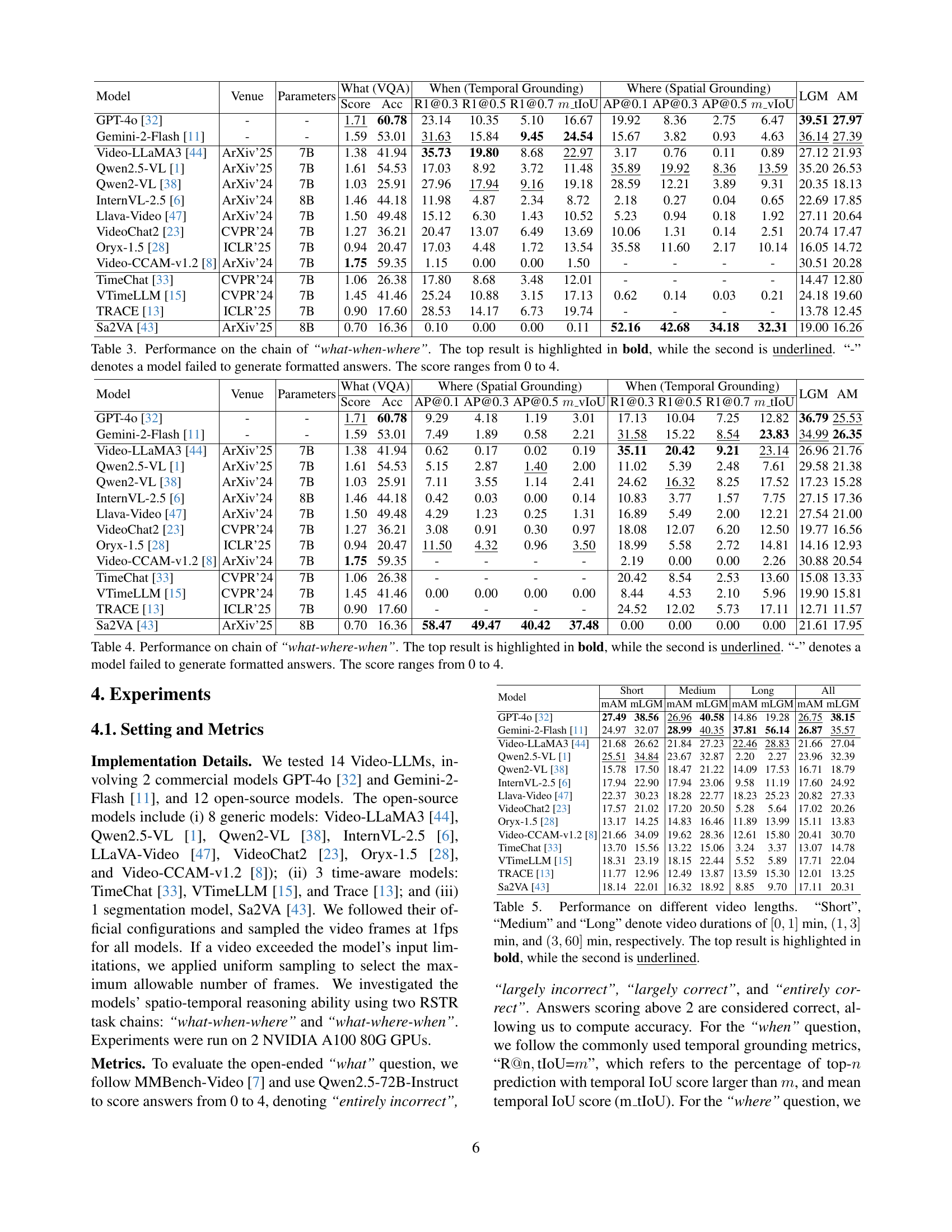

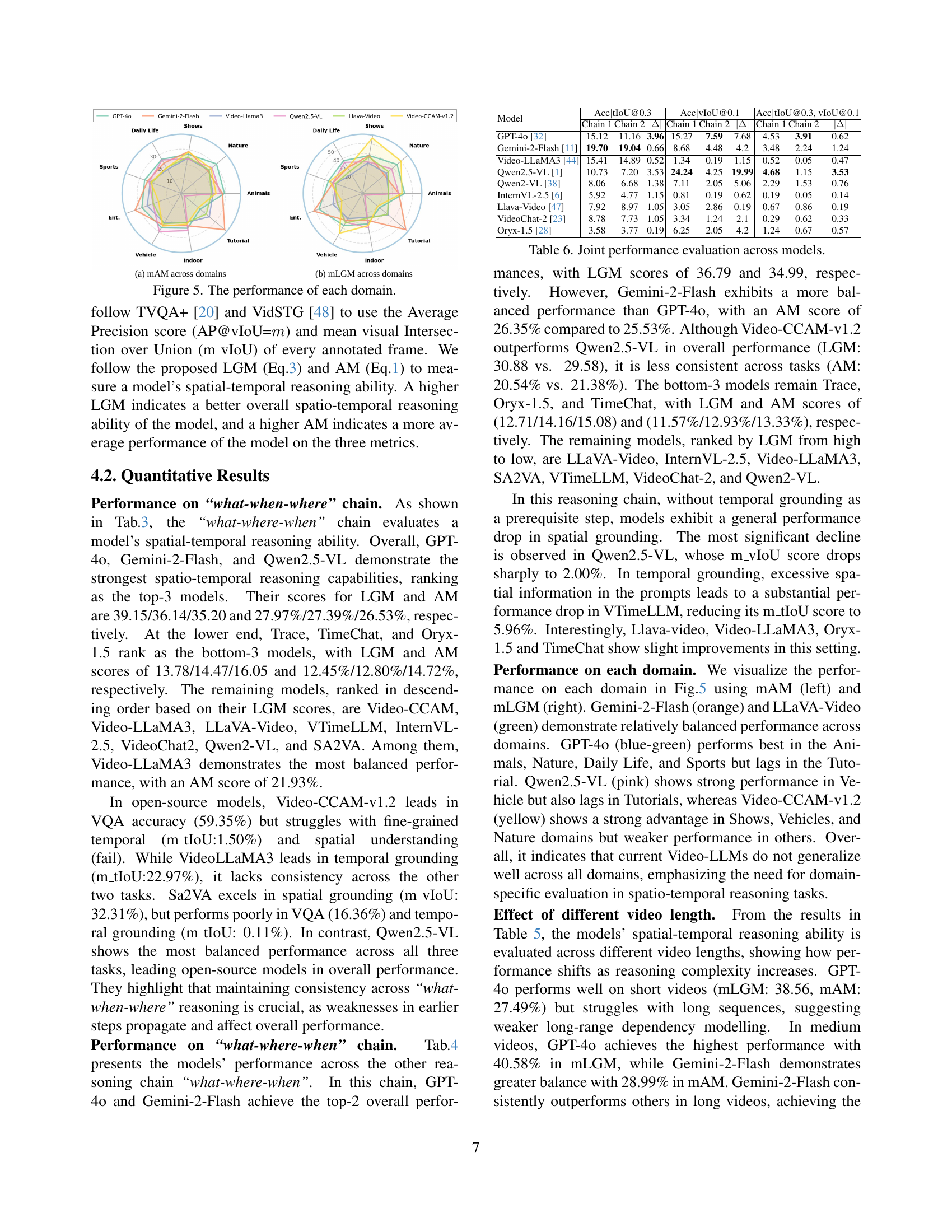

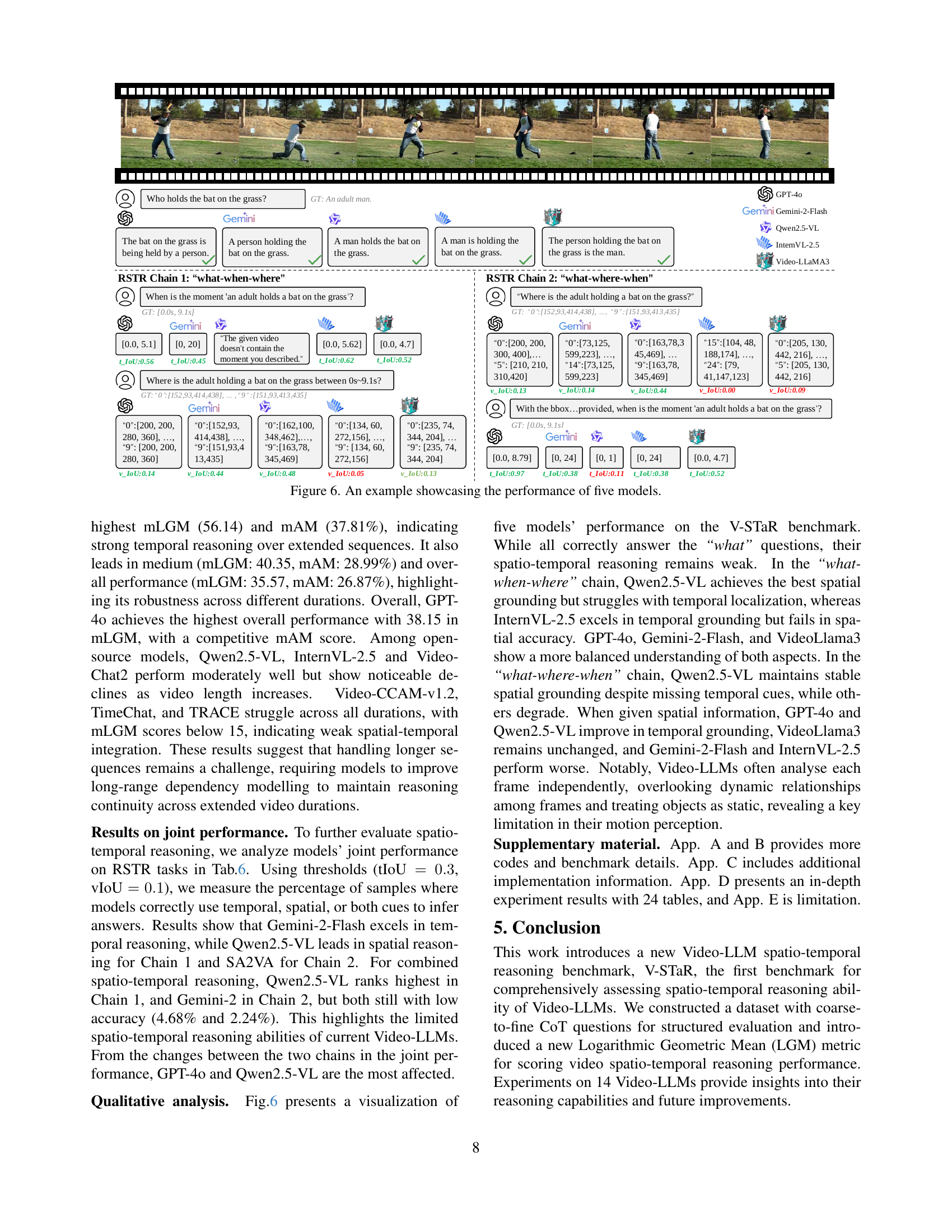

The new benchmark contains the Reverse Spatio-Temporal Reasoning task, which evaluates models on what objects are present, when events occur, and where objects are located, all while capturing the Chain-of-Thought logic. The authors built a dataset using a semi-automated pipeline that mimics human cognition. Experiments on 14 Video-LLMs expose critical weaknesses in current video models.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses the limitations of current video understanding benchmarks, which often fail to assess true spatio-temporal reasoning. By introducing V-STaR, the authors provide a more rigorous evaluation framework, highlighting gaps in existing Video-LLMs’ abilities. The benchmark and the proposed RSTR task open new avenues for developing more robust and consistent video reasoning models.

Visual Insights#

Full paper#