TL;DR#

Image captioning faces new challenges with the rise of VLMs, where models can generate longer descriptions. However, reliably evaluating such detailed captions is difficult because of the fine-grained details, diversity and lack of reliable benchmarks. This paper addresses the question of how well VLMs perform when compared to human captioning & how we can reliably assess the quality of the captions they generate. It also shows that current evaluation methods are unsuitable due to their inherent biases.

To tackle these issues, the authors introduce CapArena, an arena-style platform for evaluating VLMs via pairwise caption battles. It provides high-quality human preference votes to benchmark models. They show that leading models like GPT-4o can match or exceed human-level performance. The authors also introduce CapArena-Auto, a new automated benchmark for detailed captioning, achieving high correlation with human rankings at a low cost.

Key Takeaways#

Why does it matter?#

This work provides a crucial benchmark for evaluating detailed image captioning, a task becoming increasingly important. It reveals the limitations of existing metrics and open-source models, while also introducing a new automated benchmark. This paves the way for more accurate and efficient development of VLMs and deeper understanding in image content.

Visual Insights#

🔼 This figure presents the results of a pairwise comparison-based evaluation of various vision-language models (VLMs) on the task of detailed image captioning. The models are ranked according to their performance in head-to-head comparisons against human-generated captions. The ranking reveals that top-performing, commercial VLMs achieve performance on par with human annotators, demonstrating the advancement of this technology. Conversely, many open-source models lag significantly behind, indicating a considerable performance gap between commercial and open-source VLMs in this area.

read the caption

Figure 1: Model rankings from CapArena in detailed captioning. Top models are comparable to humans, while most open-source models lag behind.

| Image | Pairwise Caption Battle | Preference |

![[Uncaptioned image]](extracted/6280623/latex/images/pro_case2.jpg) | Caption1 (Qwen2-VL-72B): … The dog is holding a stick in its mouth, and the kitten is standing on its hind legs, reaching up to grab the stick. The kitten’s front paws are extended towards the stick, and its body is slightly arched as it tries to take the stick from the dog. … Caption2 (Human): … A gray and black kitten is leaping into the air at the dog’s face. It is facing the back at a right angle. Its right leg is extended out with its paw in front of the dog’s face. Its tail is down to the right in the air. … | Caption2 is better (more accurate and vivid description of the cat’s posture). |

![[Uncaptioned image]](extracted/6280623/latex/images/pro_case1.jpg) | Caption1 (GPT-4o): … The mural features a figure that appears to be inspired by traditional Asian art, possibly a deity or spiritual figure. The figure is depicted with a serene expression … Surrounding the figure is a halo-like glow in warm tones of yellow and orange, adding a sense of divinity or spirituality. Caption2 (LLama-3.2-90B): The image depicts a vibrant mural of a woman on the side of a building, surrounded by various objects and features. **Mural:** The mural is painted in bright colors, featuring a woman with long dark hair wearing a blue robe … | Caption1 is better (a spiritual figure is much more informative than a woman). |

🔼 This table showcases examples of pairwise comparisons used in the CapArena benchmark. Each comparison presents two captions describing the same image: one generated by a VLM and one by a human. Annotators judged which caption was better, providing feedback on accuracy and detail. Red highlights less accurate or less preferable phrases within the captions, while green highlights more accurate or preferable ones. The complete evaluation guidelines are described in Section 3.1, with additional examples provided in Table 6.

read the caption

Table 1: Examples of pairwise battles in CapArena. Red and green indicate less accurate and more preferable expressions, respectively. The evaluation guidelines are detailed in Section 3.1, and more examples are in Table 6.

In-depth insights#

Arena Style Eval#

Arena-style evaluation, drawing inspiration from LLM evaluations, marks a significant advancement. This approach involves pitting captions against each other in pairwise comparisons, judged by humans, to determine preference. It helps overcomes the subjectivity inherent in evaluating detailed descriptions. Human preferences are essential due to the nuanced understanding required. Pairwise comparisons provide a more direct ranking than scoring systems. This method allows for more granular comparisons, even when differences are subtle. The arena approach mimics real-world scenarios where relative quality matters more than absolute scores. It offers a comprehensive model performance assessment, highlighting comparative strengths and weaknesses. While the method relies on human annotations, the high inter-annotator agreement, ensures data reliability. Ultimately, arena-style evaluation provides a nuanced approach. It facilitates the identification of top-performing models and drives progress in image captioning.

VLM Caption Biases#

VLM caption biases are a crucial concern when evaluating image captioning models. Different VLMs may exhibit systematic tendencies to overemphasize or underestimate certain aspects of an image or favor particular descriptive styles. This can stem from biases present in the VLM’s training data, architectural limitations, or the evaluation metrics employed. For example, a VLM trained primarily on datasets with simple captions may struggle to capture the nuances of complex scenes, while another model might be inclined to generate overly verbose descriptions. Understanding these biases is essential for developing more robust and reliable evaluation methods and for ensuring that VLMs generate captions that are accurate, informative, and representative of the image content. Identifying model biases will allow the development community to create more fair and trustworthy models for caption generation.

Detailed Eval Era#

The “Detailed Eval Era” in image captioning signifies a shift towards more nuanced and comprehensive evaluation methodologies. Traditional metrics often fall short in capturing the richness and accuracy of the descriptions generated by modern Vision-Language Models (VLMs). This era necessitates metrics that can assess the fine-grained details, spatial relationships, and contextual understanding exhibited in captions. Furthermore, it involves addressing the biases inherent in existing evaluation methods and ensuring that the assessment aligns with human preferences. The focus moves beyond simple n-gram overlap to encompass semantic relevance and the ability to detect subtle errors or hallucinations in the generated text. Robust benchmarks with high-quality human annotations become crucial for reliably comparing different models and driving progress in detailed image understanding and description.

Automated Metrics?#

The question of automated metrics in image captioning is crucial, especially with the advent of VLMs generating detailed captions. Traditional metrics, designed for shorter captions, often fail to capture the nuances and fine-grained details present in longer descriptions. This necessitates the development of new, more robust automated evaluation methods. While some existing metrics like METEOR show promise at the caption level, they exhibit systematic biases across different models, leading to unreliable model rankings. Therefore, reliable automated metrics are essential for efficient benchmarking and iterative improvement of detailed captioning capabilities in VLMs. This ensures accurate assessment and reduces reliance on costly human evaluations. One promising solution lies in employing VLM-as-a-Judge, leveraging the capabilities of powerful VLMs to simulate human preferences and provide more consistent and accurate evaluations.

VLM Judge Insights#

VLM-as-a-Judge presents itself as a promising avenue for automated caption evaluation, offering notable advantages over traditional metrics. Its key strength lies in its ability to better discern fine-grained details and nuanced semantic alignment between images and captions. Moreover, reference descriptions enhances judgement by clarifying image details, further improving model level agreement. This approach suggests that reducing bias in automated metrics is crucial for accurate model assessment. While exhibiting strong consistency with human preferences, challenges remain in evaluating subtly different caption pairs, highlighting the need for improvements in fine-grained perception.

More visual insights#

More on figures

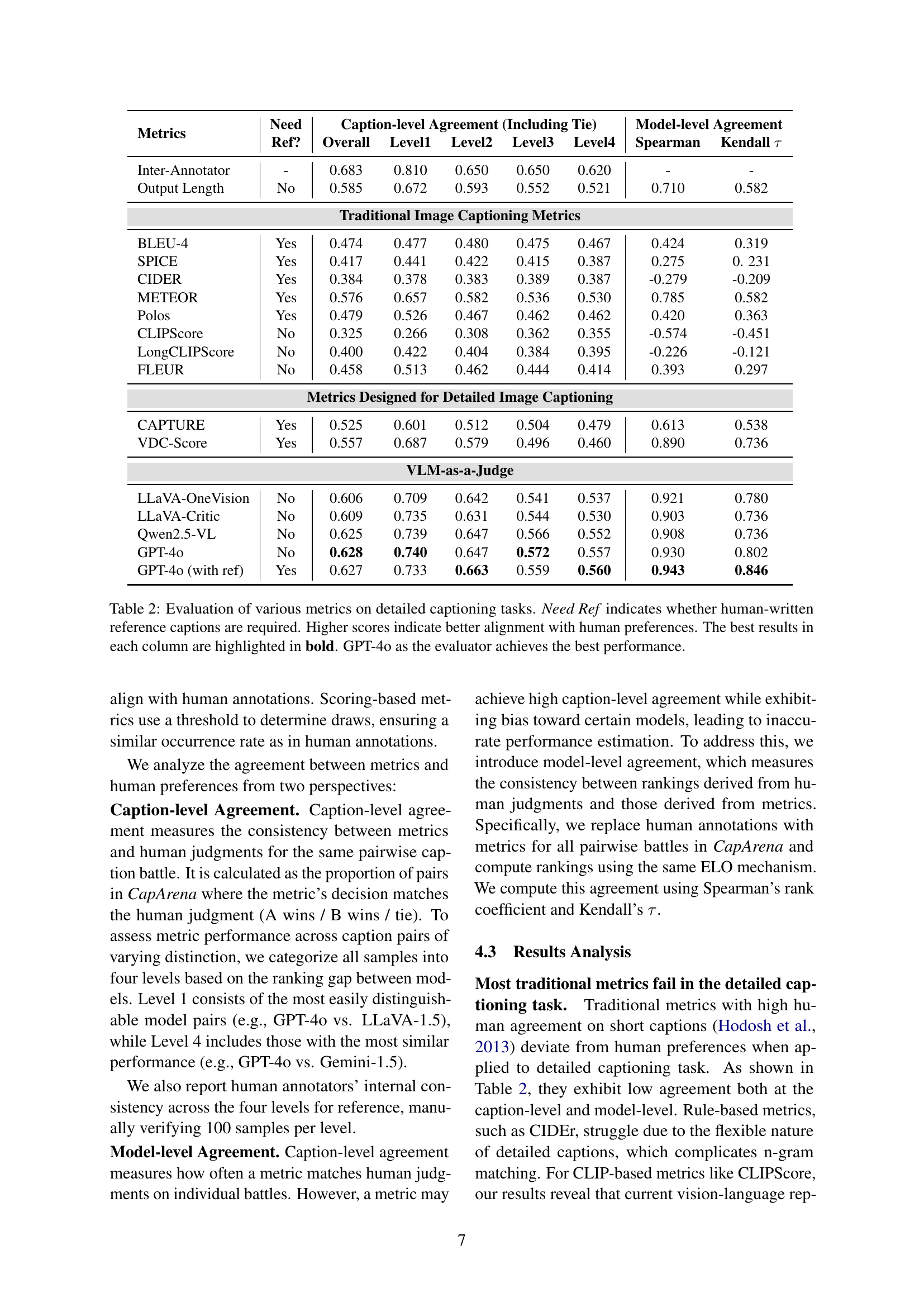

🔼 This figure shows the distribution of caption lengths generated by various Vision-Language Models (VLMs) and human annotators. The x-axis represents the caption length in words, and the y-axis represents the frequency of captions with that length. The distributions are shown as histograms, allowing for a visual comparison of how concise or verbose the different models tend to be in their image captioning. It helps to visualize whether a model produces relatively short or long captions and how that compares to human-generated captions.

read the caption

Figure 2: Caption length distribution of different VLMs.

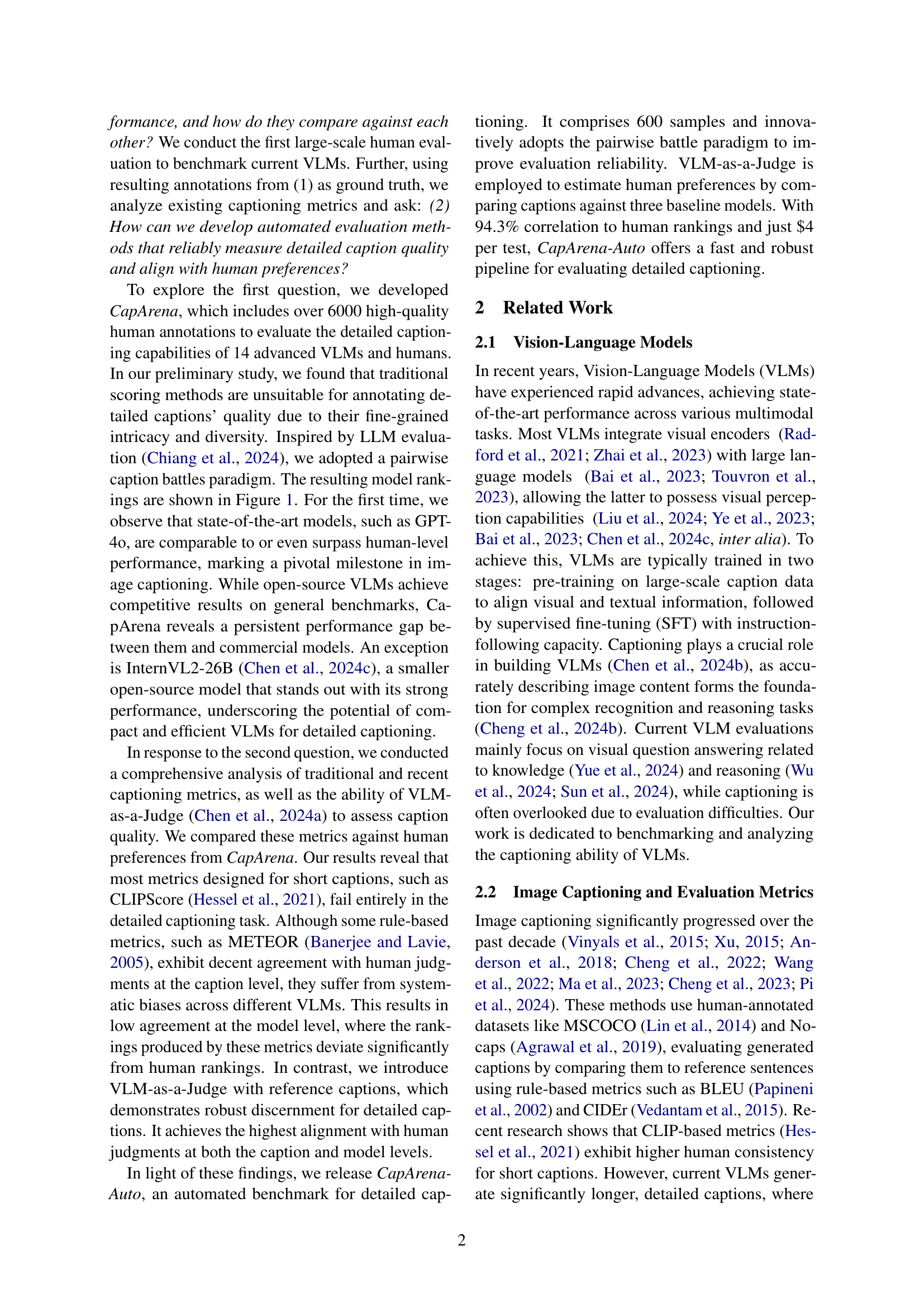

🔼 This figure visualizes the results of pairwise comparisons between different vision-language models (VLMs) in the CapArena benchmark. The heatmap displays the number of pairwise comparisons conducted between each model pair (battle counts), showing how often each model was pitted against every other model. The win rate matrix uses color intensity to represent each model’s win rate in its head-to-head battles against other models, providing a visual representation of the relative performance of the different VLMs.

read the caption

Figure 3: Battle counts and win rate matrix between models in CapArena.

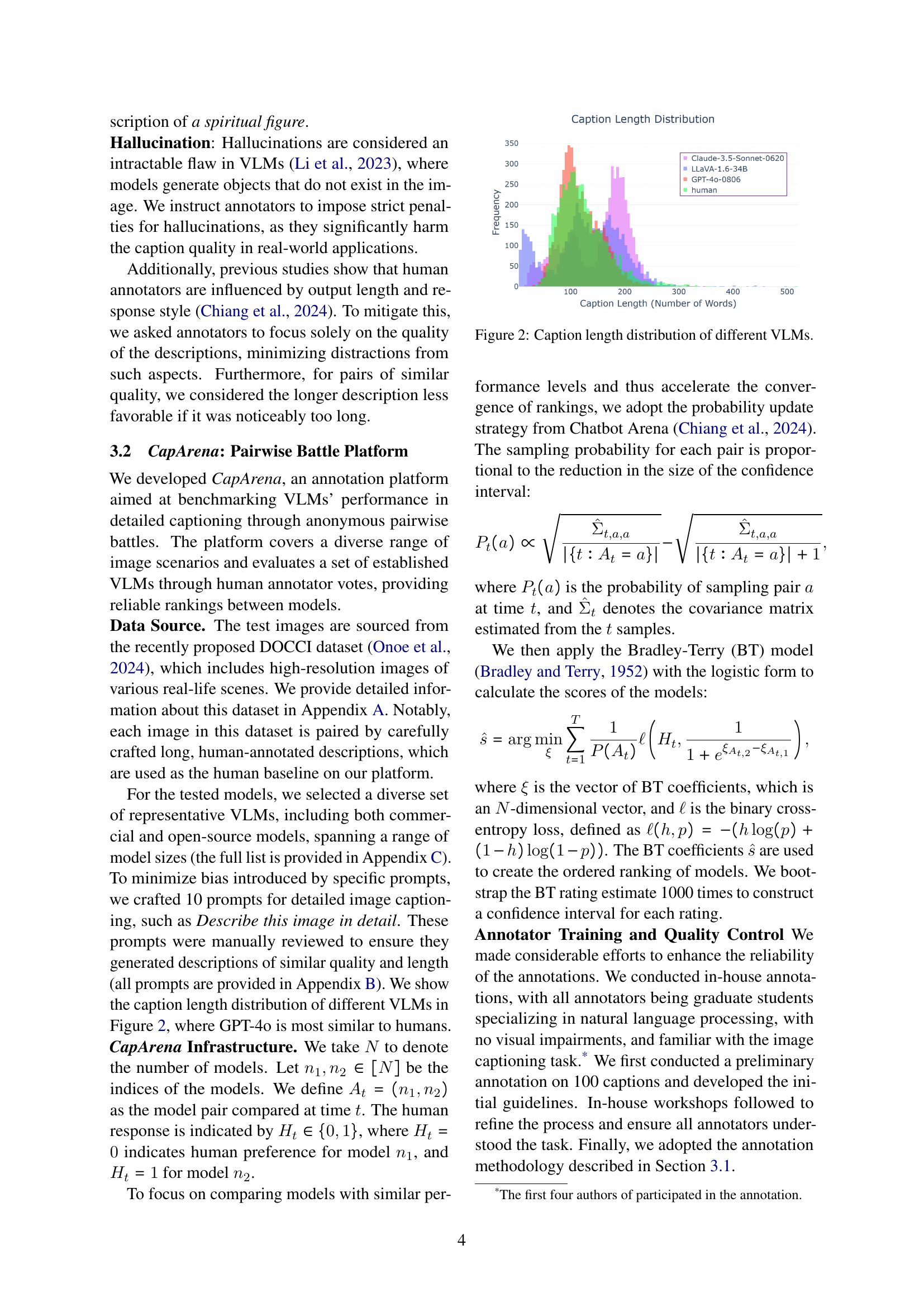

🔼 This figure displays the correlation between a model’s performance on standard vision-language benchmarks and its ranking in the CapArena benchmark specifically designed for detailed image captioning. The x-axis represents the scores from various vision-language benchmarks (MathVista, POPE, and VCR), while the y-axis shows the ranking in CapArena. Each point corresponds to a specific Vision-Language Model (VLM), and its size reflects the model’s parameter count. The plot reveals that strong performance on general vision-language benchmarks does not guarantee strong performance on detailed image captioning tasks.

read the caption

Figure 4: Correlation between vision-language benchmark scores and CapArena ranking. Models that perform well on general benchmarks do not necessarily excel in captioning. The size of each point represents the model size.

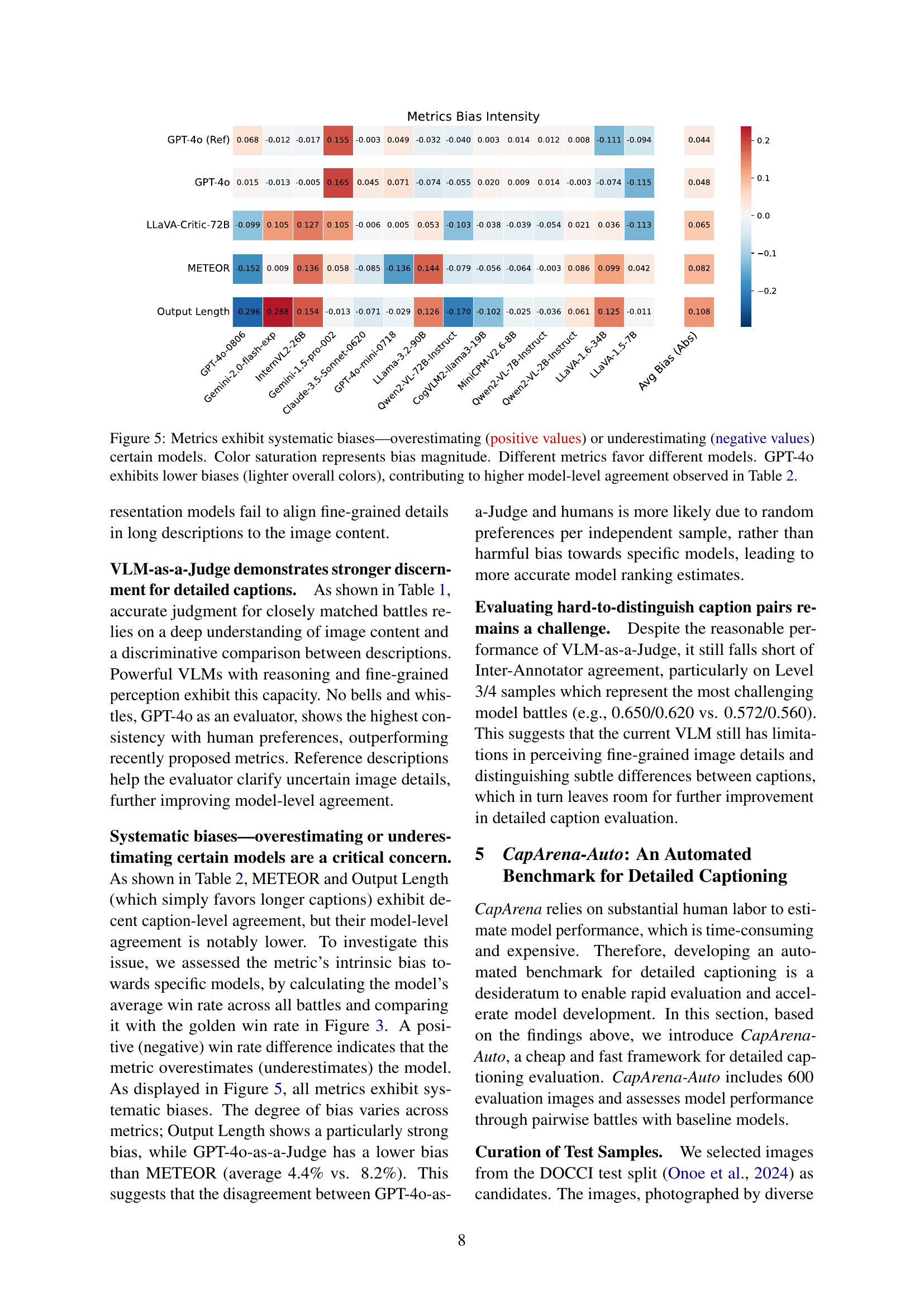

🔼 Figure 5 is a heatmap visualizing the systematic biases of various captioning metrics. Each row represents a model, and each column corresponds to a specific metric. The color intensity indicates the bias magnitude, with warmer colors indicating overestimation and cooler colors indicating underestimation of the model’s performance by that metric. The figure reveals that different metrics exhibit varying degrees of bias towards different models, highlighting the lack of consistency in metric evaluation. Notably, GPT-40 shows relatively low biases across most metrics, which contributes to its higher model-level agreement with human judgments, as shown in Table 2.

read the caption

Figure 5: Metrics exhibit systematic biases—overestimating (positive values) or underestimating (negative values) certain models. Color saturation represents bias magnitude. Different metrics favor different models. GPT-4o exhibits lower biases (lighter overall colors), contributing to higher model-level agreement observed in Table 2.

🔼 This figure shows two detailed captions generated by a Vision-Language Model (VLM) for the same image. The difference highlights how variations in prompt wording can significantly affect the generated caption’s level of detail, descriptive style, and the specific aspects of the image that are emphasized. The captions demonstrate the VLM’s ability to generate detailed and descriptive text based on different prompts. The image itself is not shown.

read the caption

Figure 6: Detailed caption generated with different prompt.

More on tables

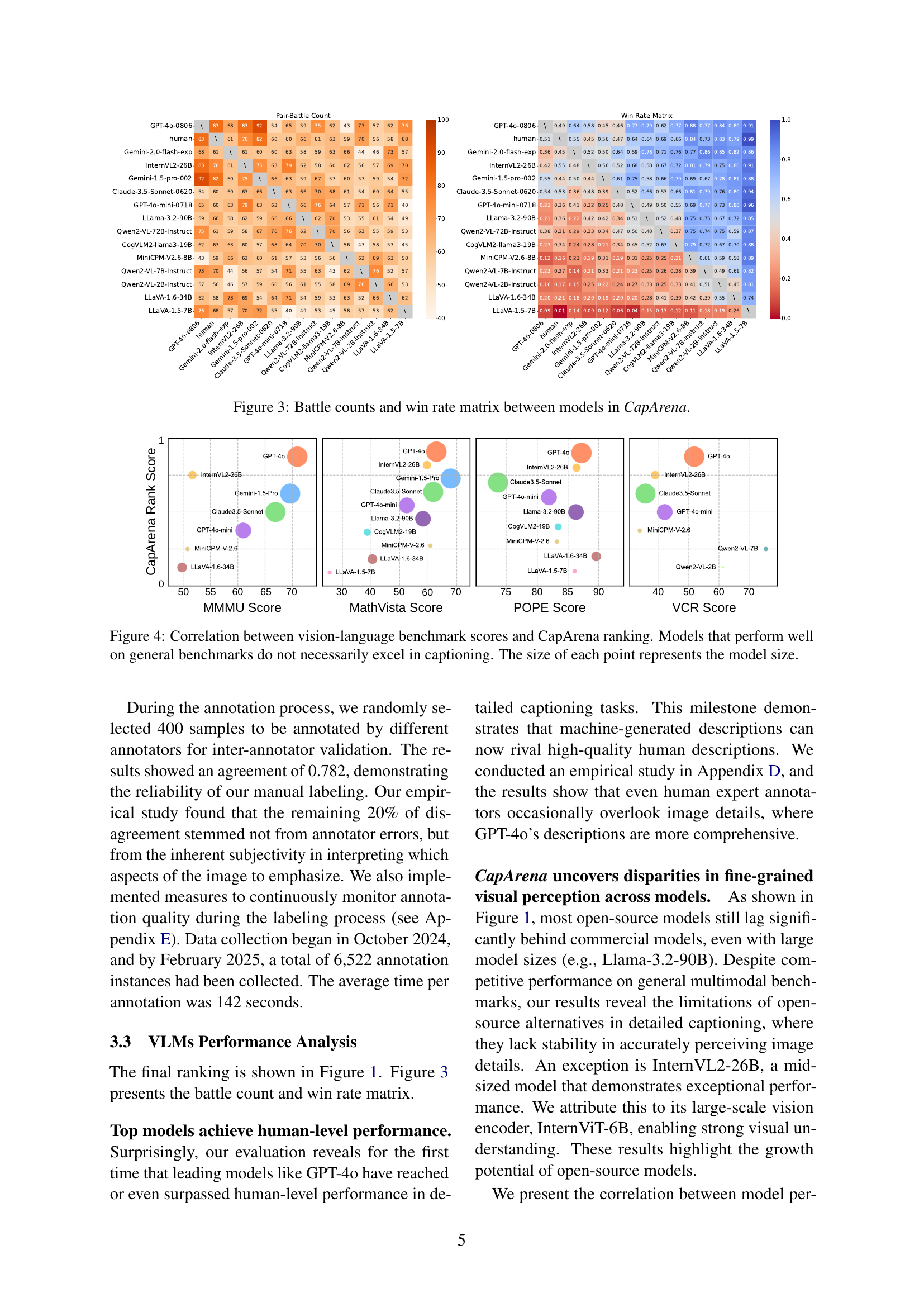

| Need | Caption-level Agreement (Including Tie) | Model-level Agreement | ||||||

| Metrics | Ref? | Overall | Level1 | Level2 | Level3 | Level4 | Spearman | Kendall |

| Inter-Annotator | - | 0.683 | 0.810 | 0.650 | 0.650 | 0.620 | - | - |

| Output Length | No | 0.585 | 0.672 | 0.593 | 0.552 | 0.521 | 0.710 | 0.582 |

| Traditional Image Captioning Metrics | ||||||||

| BLEU-4 | Yes | 0.474 | 0.477 | 0.480 | 0.475 | 0.467 | 0.424 | 0.319 |

| SPICE | Yes | 0.417 | 0.441 | 0.422 | 0.415 | 0.387 | 0.275 | 0. 231 |

| CIDER | Yes | 0.384 | 0.378 | 0.383 | 0.389 | 0.387 | -0.279 | -0.209 |

| METEOR | Yes | 0.576 | 0.657 | 0.582 | 0.536 | 0.530 | 0.785 | 0.582 |

| Polos | Yes | 0.479 | 0.526 | 0.467 | 0.462 | 0.462 | 0.420 | 0.363 |

| CLIPScore | No | 0.325 | 0.266 | 0.308 | 0.362 | 0.355 | -0.574 | -0.451 |

| LongCLIPScore | No | 0.400 | 0.422 | 0.404 | 0.384 | 0.395 | -0.226 | -0.121 |

| FLEUR | No | 0.458 | 0.513 | 0.462 | 0.444 | 0.414 | 0.393 | 0.297 |

| Metrics Designed for Detailed Image Captioning | ||||||||

| CAPTURE | Yes | 0.525 | 0.601 | 0.512 | 0.504 | 0.479 | 0.613 | 0.538 |

| VDC-Score | Yes | 0.557 | 0.687 | 0.579 | 0.496 | 0.460 | 0.890 | 0.736 |

| VLM-as-a-Judge | ||||||||

| LLaVA-OneVision | No | 0.606 | 0.709 | 0.642 | 0.541 | 0.537 | 0.921 | 0.780 |

| LLaVA-Critic | No | 0.609 | 0.735 | 0.631 | 0.544 | 0.530 | 0.903 | 0.736 |

| Qwen2.5-VL | No | 0.625 | 0.739 | 0.647 | 0.566 | 0.552 | 0.908 | 0.736 |

| GPT-4o | No | 0.628 | 0.740 | 0.647 | 0.572 | 0.557 | 0.930 | 0.802 |

| GPT-4o (with ref) | Yes | 0.627 | 0.733 | 0.663 | 0.559 | 0.560 | 0.943 | 0.846 |

🔼 This table presents a comprehensive evaluation of different captioning metrics on the task of detailed image captioning. It compares traditional metrics (like BLEU, CIDEr, METEOR), recently proposed metrics designed for detailed captions (like CAPTURE, VDC-Score), and the novel VLM-as-a-Judge approach. The evaluation considers both caption-level agreement (how well each metric agrees with human judgment on individual captions) and model-level agreement (how well metric rankings of different models correlate with human rankings). The table also notes whether a metric requires human-written reference captions. Higher scores indicate better alignment with human preferences. The results show that GPT-40, when used as an evaluator (VLM-as-a-Judge), significantly outperforms other methods.

read the caption

Table 2: Evaluation of various metrics on detailed captioning tasks. Need Ref indicates whether human-written reference captions are required. Higher scores indicate better alignment with human preferences. The best results in each column are highlighted in bold. GPT-4o as the evaluator achieves the best performance.

| Spearman | Kendall | |

| DOCCI (with BLEU-4) | 0.341 | 0.275 |

| DOCCI (with METEOR) | 0.859 | 0.648 |

| CAPTURE | 0.763 | 0.604 |

| CapArena-Auto | 0.943 | 0.824 |

🔼 Table 3 presents the correlation between CapArena-Auto, an automated benchmark for detailed image captioning, and the human-evaluated rankings from CapArena. The Spearman and Kendall’s Tau correlation coefficients are shown, indicating the strength of agreement between the automated and human evaluations. CapArena-Auto demonstrates the highest correlation with human judgments, signifying its effectiveness as a reliable and efficient alternative to human evaluation for this task.

read the caption

Table 3: Correlation between the automated benchmark and CapArena’s golden ranking. CapArena-Auto exhibits the highest alignment with human preferences.

| Prompts for Caption Generating |

| Describe this image in detail. |

| Generate a detailed caption for this image. |

| Explain the contents of this photo with specifics. |

| Create a rich and descriptive caption for this visual content. |

| Give this image a detailed image caption. |

| Write a detailed explanation of the scene depicted here. |

| Provide a detailed description of this photo. |

| Provide a thorough description of this image. |

| Describe this scene with specifics. |

| Provide a descriptive caption for this image. |

🔼 This table lists the ten different prompts used to generate detailed image captions for the CapArena benchmark. These prompts varied slightly in wording to encourage a range of descriptive responses from the various vision-language models being evaluated.

read the caption

Table 4: Prompts for detailed captioning.

| Image | Detailed Caption |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_03636.jpg) | human: A dog laying on it’s belly looking at the camera, The dog appears to be by a tree on a lawn of grass with dead leaves around the area. The dog has white and curly fur, It has a bone held by its paws, and has a red leash leading to the bottom of the image. The dog is looking directly at the camera and has its body shifted to the right. GPT-4o-0806: A fluffy, cream-colored puppy is resting against the trunk of a large tree in a grassy area. The puppy has a soft, curly coat and is holding a chew stick in its front paws. A red leash is attached to its collar, trailing across the grass. The ground is scattered with dry leaves, and the background shows part of a house with some garden elements. The puppy looks content and relaxed in its outdoor setting. |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_01540.jpg) | human: A medium three quarters frontal view of a white yacht on a dock near a body of water that is partially visible at the bottom of the view. Behind the yacht is a large blue building with a blue toned color on the right side of the view, and on its left side the color is gray. The building extends from the middle left of the view to the right side. At the top of the view the blue sky is visible behind large metal constructs and poles that stand above the yacht and nearby building. Between the metal columns and poles are large nets that extend across the upper portion of the view. Reflections of the white yahct are visible on the water at the bottom of the view. The yacht has blue accents around the bottom and has two different deck sections. GPT-4o-0806: The image depicts a large yacht docked at a marina. The yacht has a sleek, modern design with a white hull and a blue stripe near the waterline. The upper structure features a combination of white and wooden elements, giv- ing it a classic and elegant appearance . The main deck has wooden railings and a covered area with blue fabric, likely for protection against the elements. The yacht has multiple levels, with large windows on the lower deck and a spacious upper deck area, possibly for navigation and leisure. There are antennas and equipment on the top, indicating communication or navigation systems. In the background, there is a large building with a blue exterior and multiple windows, some of which display images or posters. The building is adjacent to the marina, with a walkway and railings visible alongside the water. Above the building, there are tall metal structures, possibly part of a nearby facility or infrastructure. The water is calm, reflecting parts of the yacht and the surrounding structures. The sky is clear, suggesting a sunny day. |

🔼 This table presents two examples where GPT-40’s image captioning outperforms human-generated captions. For each example, an image is shown along with the human-generated caption and the caption generated by GPT-40. The GPT-40 captions demonstrate a more detailed and comprehensive description of the image, including details that the human captions omitted. The examples highlight the ability of GPT-40 to surpass human-level performance in detailed image captioning, particularly in noticing and including fine-grained features.

read the caption

Table 5: Examples of GPT-4o perform better than human

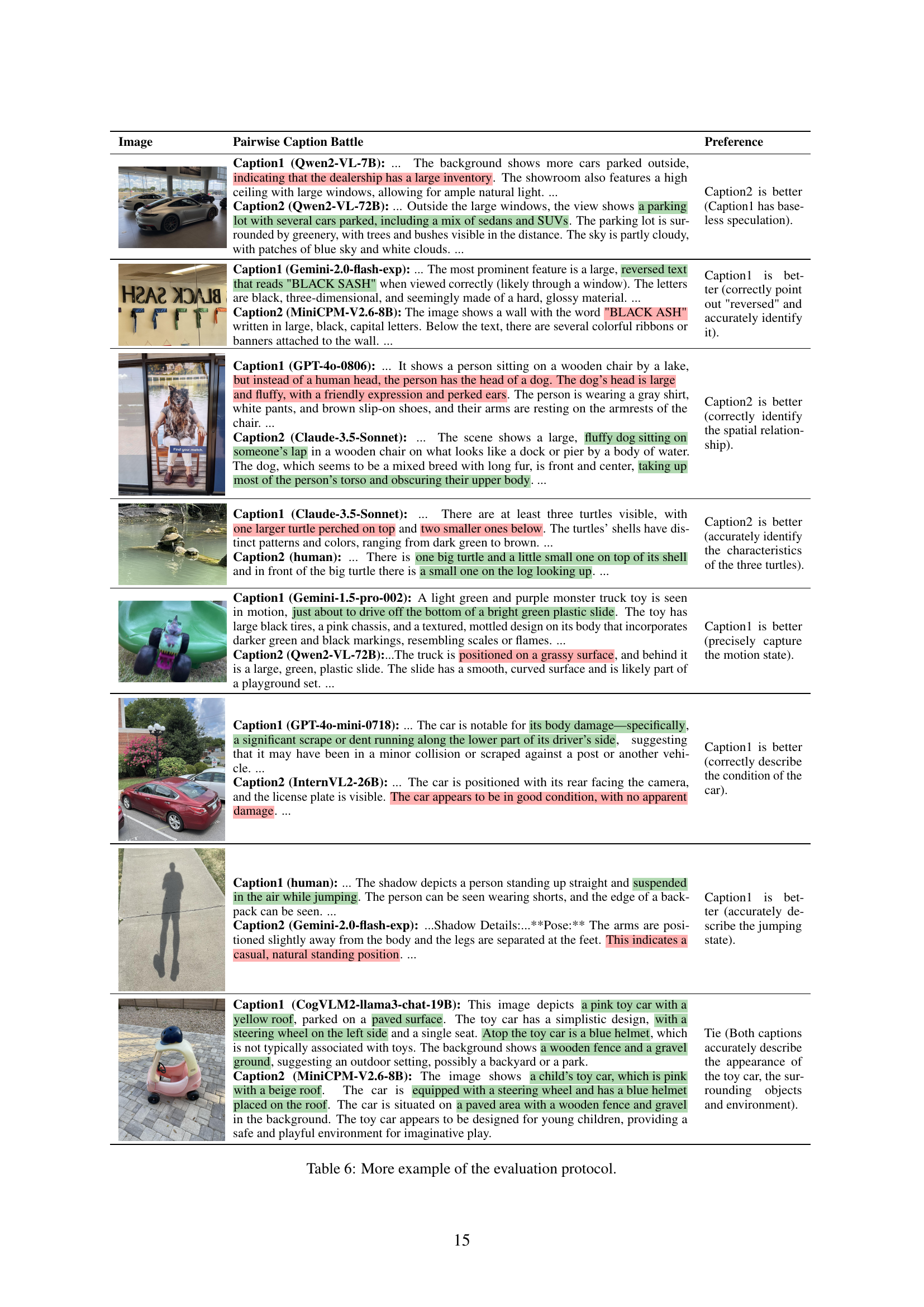

| Image | Pairwise Caption Battle | Preference |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_00727.jpg) | Caption1 (Qwen2-VL-7B): … The background shows more cars parked outside, indicating that the dealership has a large inventory. The showroom also features a high ceiling with large windows, allowing for ample natural light. … Caption2 (Qwen2-VL-72B): … Outside the large windows, the view shows a parking lot with several cars parked, including a mix of sedans and SUVs. The parking lot is surrounded by greenery, with trees and bushes visible in the distance. The sky is partly cloudy, with patches of blue sky and white clouds. … | Caption2 is better (Caption1 has baseless speculation). |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_00578.jpg) | Caption1 (Gemini-2.0-flash-exp): … The most prominent feature is a large, reversed text that reads "BLACK SASH" when viewed correctly (likely through a window). The letters are black, three-dimensional, and seemingly made of a hard, glossy material. … Caption2 (MiniCPM-V2.6-8B): The image shows a wall with the word "BLACK ASH" written in large, black, capital letters. Below the text, there are several colorful ribbons or banners attached to the wall. … | Caption1 is better (correctly point out "reversed" and accurately identify it). |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_03897.jpg) | Caption1 (GPT-4o-0806): … It shows a person sitting on a wooden chair by a lake, but instead of a human head, the person has the head of a dog. The dog’s head is large and fluffy, with a friendly expression and perked ears. The person is wearing a gray shirt, white pants, and brown slip-on shoes, and their arms are resting on the armrests of the chair. … Caption2 (Claude-3.5-Sonnet): … The scene shows a large, fluffy dog sitting on someone’s lap in a wooden chair on what looks like a dock or pier by a body of water. The dog, which seems to be a mixed breed with long fur, is front and center, taking up most of the person’s torso and obscuring their upper body. … | Caption2 is better (correctly identify the spatial relationship). |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_01562.jpg) | Caption1 (Claude-3.5-Sonnet): … There are at least three turtles visible, with one larger turtle perched on top and two smaller ones below. The turtles’ shells have distinct patterns and colors, ranging from dark green to brown. … Caption2 (human): … There is one big turtle and a little small one on top of its shell and in front of the big turtle there is a small one on the log looking up. … | Caption2 is better (accurately identify the characteristics of the three turtles). |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_04963.jpg) | Caption1 (Gemini-1.5-pro-002): A light green and purple monster truck toy is seen in motion, just about to drive off the bottom of a bright green plastic slide. The toy has large black tires, a pink chassis, and a textured, mottled design on its body that incorporates darker green and black markings, resembling scales or flames. … Caption2 (Qwen2-VL-72B):…The truck is positioned on a grassy surface, and behind it is a large, green, plastic slide. The slide has a smooth, curved surface and is likely part of a playground set. … | Caption1 is better (precisely capture the motion state). |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_01077.jpg) | Caption1 (GPT-4o-mini-0718): … The car is notable for its body damage—specifically, a significant scrape or dent running along the lower part of its driver’s side, suggesting that it may have been in a minor collision or scraped against a post or another vehicle. … Caption2 (InternVL2-26B): … The car is positioned with its rear facing the camera, and the license plate is visible. The car appears to be in good condition, with no apparent damage. … | Caption1 is better (correctly describe the condition of the car). |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_00038.jpg) | Caption1 (human): … The shadow depicts a person standing up straight and suspended in the air while jumping. The person can be seen wearing shorts, and the edge of a backpack can be seen. … Caption2 (Gemini-2.0-flash-exp): …Shadow Details:…**Pose:** The arms are positioned slightly away from the body and the legs are separated at the feet. This indicates a casual, natural standing position. … | Caption1 is better (accurately describe the jumping state). |

![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_02693.jpg) | Caption1 (CogVLM2-llama3-chat-19B): This image depicts a pink toy car with a yellow roof, parked on a paved surface. The toy car has a simplistic design, with a steering wheel on the left side and a single seat. Atop the toy car is a blue helmet, which is not typically associated with toys. The background shows a wooden fence and a gravel ground, suggesting an outdoor setting, possibly a backyard or a park. Caption2 (MiniCPM-V2.6-8B): The image shows a child’s toy car, which is pink with a beige roof. The car is equipped with a steering wheel and has a blue helmet placed on the roof. The car is situated on a paved area with a wooden fence and gravel in the background. The toy car appears to be designed for young children, providing a safe and playful environment for imaginative play. | Tie (Both captions accurately describe the appearance of the toy car, the surrounding objects and environment). |

🔼 This table presents additional examples illustrating the pairwise caption battle evaluation protocol used in the CapArena benchmark. Each row shows an image, two captions generated by different vision-language models (VLMs) or a human, and a human preference indicating which caption is better. The examples showcase diverse scenarios and highlight various aspects of caption quality, including precision, informativeness, and handling of unusual or complex image content.

read the caption

Table 6: More example of the evaluation protocol.

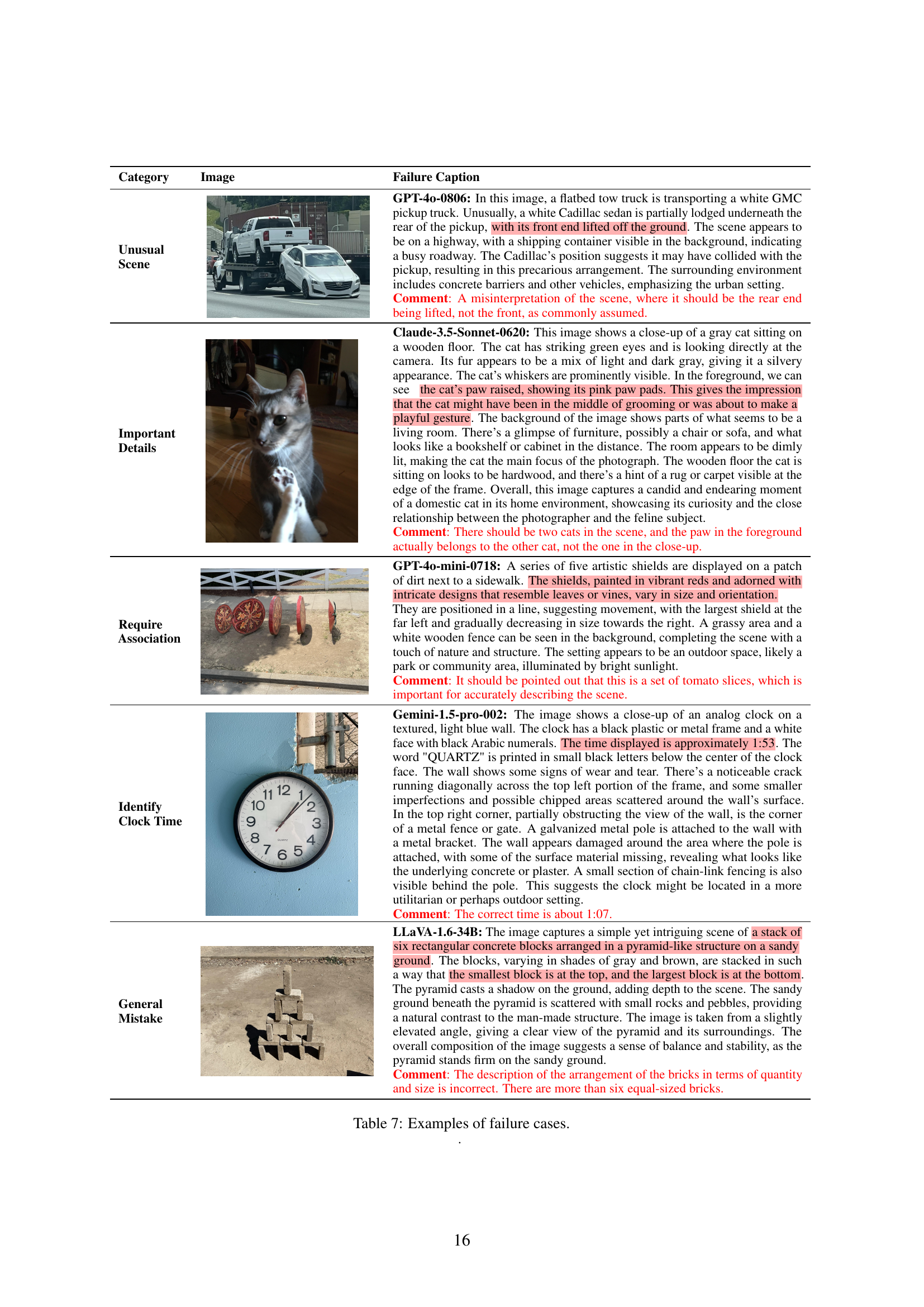

| Category | Image | Failure Caption |

| Unusual Scene | ![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_00222.jpg) | GPT-4o-0806: In this image, a flatbed tow truck is transporting a white GMC pickup truck. Unusually, a white Cadillac sedan is partially lodged underneath the rear of the pickup, with its front end lifted off the ground. The scene appears to be on a highway, with a shipping container visible in the background, indicating a busy roadway. The Cadillac’s position suggests it may have collided with the pickup, resulting in this precarious arrangement. The surrounding environment includes concrete barriers and other vehicles, emphasizing the urban setting. Comment: A misinterpretation of the scene, where it should be the rear end being lifted, not the front, as commonly assumed. |

| Important Details | ![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_02061.jpg) | Claude-3.5-Sonnet-0620: This image shows a close-up of a gray cat sitting on a wooden floor. The cat has striking green eyes and is looking directly at the camera. Its fur appears to be a mix of light and dark gray, giving it a silvery appearance. The cat’s whiskers are prominently visible. In the foreground, we can see the cat’s paw raised, showing its pink paw pads. This gives the impression that the cat might have been in the middle of grooming or was about to make a playful gesture. The background of the image shows parts of what seems to be a living room. There’s a glimpse of furniture, possibly a chair or sofa, and what looks like a bookshelf or cabinet in the distance. The room appears to be dimly lit, making the cat the main focus of the photograph. The wooden floor the cat is sitting on looks to be hardwood, and there’s a hint of a rug or carpet visible at the edge of the frame. Overall, this image captures a candid and endearing moment of a domestic cat in its home environment, showcasing its curiosity and the close relationship between the photographer and the feline subject. Comment: There should be two cats in the scene, and the paw in the foreground actually belongs to the other cat, not the one in the close-up. |

| Require Association | ![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_03283.jpg) | GPT-4o-mini-0718: A series of five artistic shields are displayed on a patch of dirt next to a sidewalk. The shields, painted in vibrant reds and adorned with intricate designs that resemble leaves or vines, vary in size and orientation. They are positioned in a line, suggesting movement, with the largest shield at the far left and gradually decreasing in size towards the right. A grassy area and a white wooden fence can be seen in the background, completing the scene with a touch of nature and structure. The setting appears to be an outdoor space, likely a park or community area, illuminated by bright sunlight. Comment: It should be pointed out that this is a set of tomato slices, which is important for accurately describing the scene. |

| Identify Clock Time | ![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_03557.jpg) | Gemini-1.5-pro-002: The image shows a close-up of an analog clock on a textured, light blue wall. The clock has a black plastic or metal frame and a white face with black Arabic numerals. The time displayed is approximately 1:53. The word "QUARTZ" is printed in small black letters below the center of the clock face. The wall shows some signs of wear and tear. There’s a noticeable crack running diagonally across the top left portion of the frame, and some smaller imperfections and possible chipped areas scattered around the wall’s surface. In the top right corner, partially obstructing the view of the wall, is the corner of a metal fence or gate. A galvanized metal pole is attached to the wall with a metal bracket. The wall appears damaged around the area where the pole is attached, with some of the surface material missing, revealing what looks like the underlying concrete or plaster. A small section of chain-link fencing is also visible behind the pole. This suggests the clock might be located in a more utilitarian or perhaps outdoor setting. Comment: The correct time is about 1:07. |

| General Mistake | ![[Uncaptioned image]](extracted/6280623/latex/images/examples/test_02766.jpg) | LLaVA-1.6-34B: The image captures a simple yet intriguing scene of a stack of six rectangular concrete blocks arranged in a pyramid-like structure on a sandy ground. The blocks, varying in shades of gray and brown, are stacked in such a way that the smallest block is at the top, and the largest block is at the bottom. The pyramid casts a shadow on the ground, adding depth to the scene. The sandy ground beneath the pyramid is scattered with small rocks and pebbles, providing a natural contrast to the man-made structure. The image is taken from a slightly elevated angle, giving a clear view of the pyramid and its surroundings. The overall composition of the image suggests a sense of balance and stability, as the pyramid stands firm on the sandy ground. Comment: The description of the arrangement of the bricks in terms of quantity and size is incorrect. There are more than six equal-sized bricks. |

🔼 This table showcases instances where various Vision-Language Models (VLMs) fail to generate accurate or complete image captions. Each row presents an image, the flawed caption generated by a specific VLM, and a comment explaining the nature of the error. The errors are categorized into four types: unusual scene misinterpretations, important detail omissions, difficulties with requiring associations between objects, and issues in identifying clock times or general factual mistakes. These examples highlight the challenges VLMs face in detailed and nuanced image understanding.

read the caption

Table 7: Examples of failure cases.

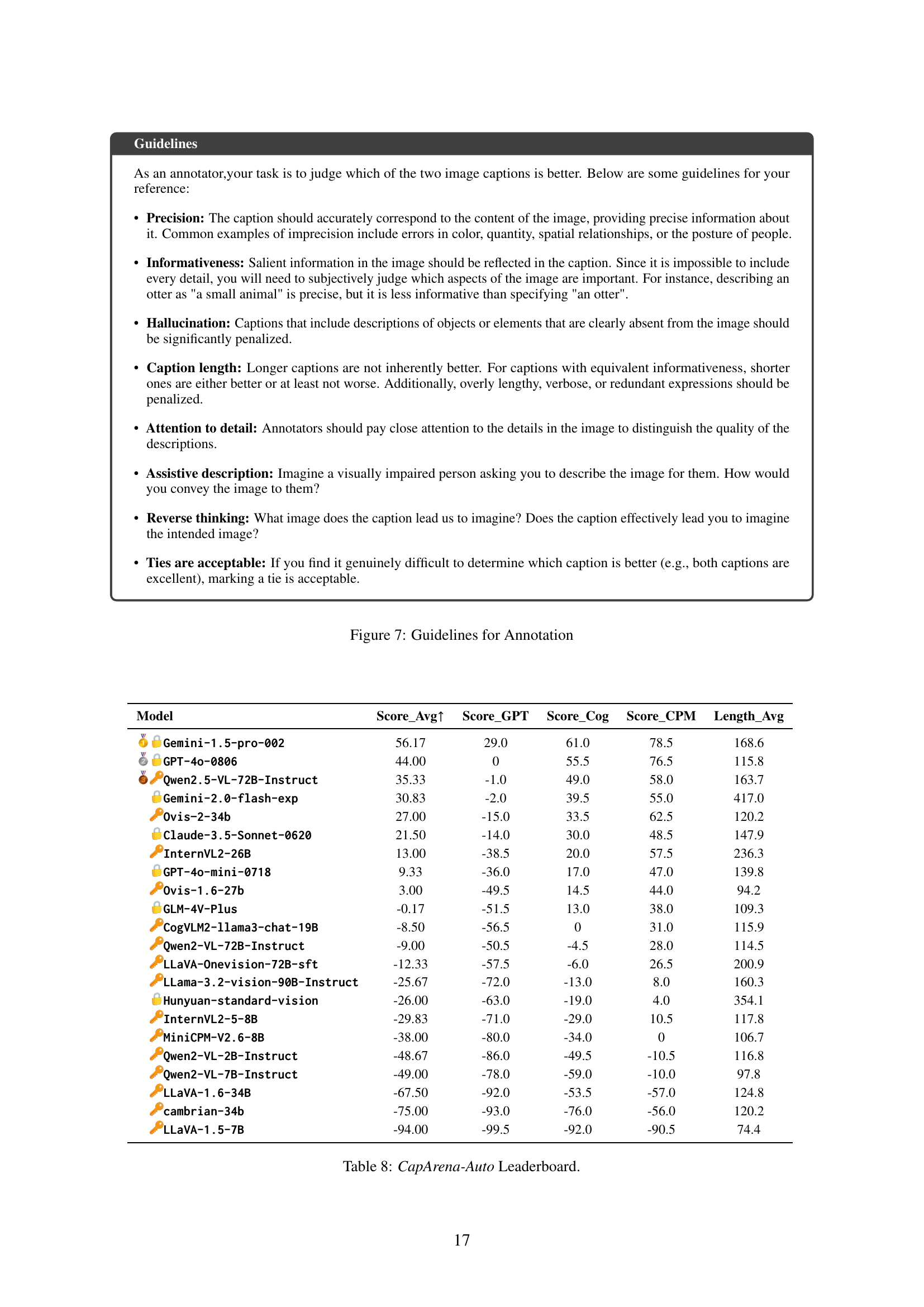

| Model | Score_Avg | Score_GPT | Score_Cog | Score_CPM | Length_Avg |

| 56.17 | 29.0 | 61.0 | 78.5 | 168.6 | |

| 44.00 | 0 | 55.5 | 76.5 | 115.8 | |

| 35.33 | -1.0 | 49.0 | 58.0 | 163.7 | |

| | 30.83 | -2.0 | 39.5 | 55.0 | 417.0 |

| | 27.00 | -15.0 | 33.5 | 62.5 | 120.2 |

| | 21.50 | -14.0 | 30.0 | 48.5 | 147.9 |

| | 13.00 | -38.5 | 20.0 | 57.5 | 236.3 |

| | 9.33 | -36.0 | 17.0 | 47.0 | 139.8 |

| | 3.00 | -49.5 | 14.5 | 44.0 | 94.2 |

| | -0.17 | -51.5 | 13.0 | 38.0 | 109.3 |

| | -8.50 | -56.5 | 0 | 31.0 | 115.9 |

| | -9.00 | -50.5 | -4.5 | 28.0 | 114.5 |

| | -12.33 | -57.5 | -6.0 | 26.5 | 200.9 |

| | -25.67 | -72.0 | -13.0 | 8.0 | 160.3 |

| | -26.00 | -63.0 | -19.0 | 4.0 | 354.1 |

| | -29.83 | -71.0 | -29.0 | 10.5 | 117.8 |

| | -38.00 | -80.0 | -34.0 | 0 | 106.7 |

| | -48.67 | -86.0 | -49.5 | -10.5 | 116.8 |

| | -49.00 | -78.0 | -59.0 | -10.0 | 97.8 |

| | -67.50 | -92.0 | -53.5 | -57.0 | 124.8 |

| | -75.00 | -93.0 | -76.0 | -56.0 | 120.2 |

| | -94.00 | -99.5 | -92.0 | -90.5 | 74.4 |

🔼 This table presents the leaderboard for CapArena-Auto, an automated benchmark for detailed image captioning. It shows the average scores achieved by various vision-language models (VLMs) on a set of 600 test images. The scores reflect the models’ performance in generating detailed and accurate image captions, compared against baseline models and assessed using a pairwise battle paradigm with a VLM acting as a judge. The table includes scores from GPT-4, CogVLM-19B, MiniCPM-8B, various open-source models, and the average caption length generated by each model.

read the caption

Table 8: CapArena-Auto Leaderboard.

Full paper#