TL;DR

Embodied AI urgently needs high-quality articulated objects, but existing methods for creating them fall short. Data-driven methods are limited by the scale and quality of their training data, and simulation-based methods struggle with fidelity and require heavy manual labor. To address this, the paper introduces a novel procedural pipeline that synthesizes large-scale, high-fidelity articulated objects.

The approach, Infinite Mobility, combines procedural generation with parts retrieved from annotated 3D datasets. User studies and quantitative evaluations show that it outperforms current state-of-the-art methods and rivals human-annotated datasets in physical properties and mesh quality. The synthetic data can also be used to train generative models, which is a next step toward further scaling.

Key Takeaways

Why does it matter?

This paper is important for researchers because it addresses the critical need for high-quality, scalable articulated object datasets in embodied AI. By offering a procedural generation pipeline that rivals existing datasets and generative models, this research opens new avenues for training and evaluating AI agents in complex, interactive environments. The approach’s capacity for creating diverse and realistic articulated objects can significantly advance research in robotics, simulation, and computer vision.

Visual Insights

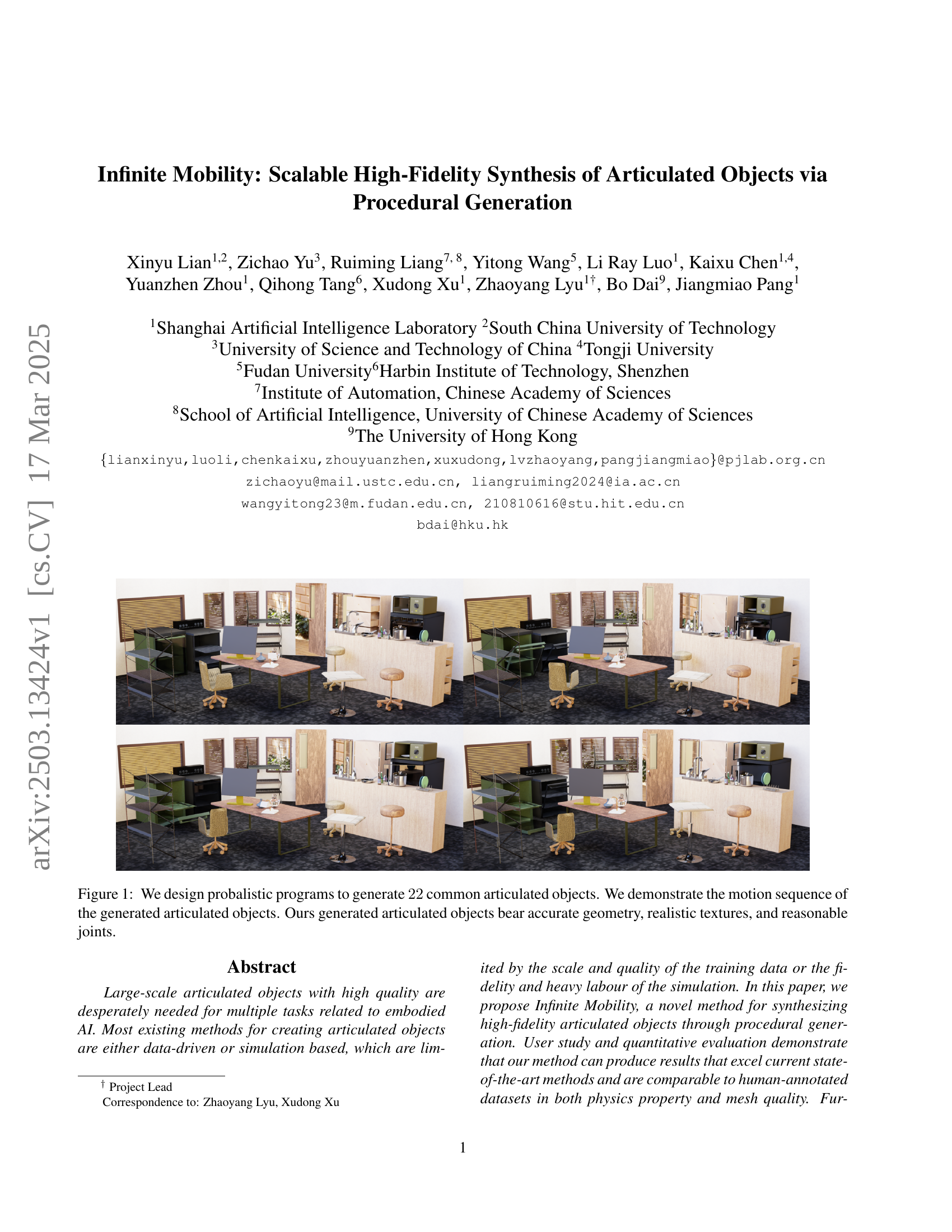

🔼 This figure showcases the results of a procedural generation approach for creating articulated objects. It displays a diverse set of 22 common kinds of articulated objects (e.g., chairs, tables, cabinets) generated with probabilistic programs, together with their motion sequences. The sequences demonstrate accurate geometry, realistic textures, and properly functioning joints, highlighting the system's ability to produce high-fidelity articulated object models.

Figure 1: We design probabilistic programs to generate 22 common articulated objects. We demonstrate the motion sequences of the generated articulated objects. Our generated articulated objects have accurate geometry, realistic textures, and reasonable joints.

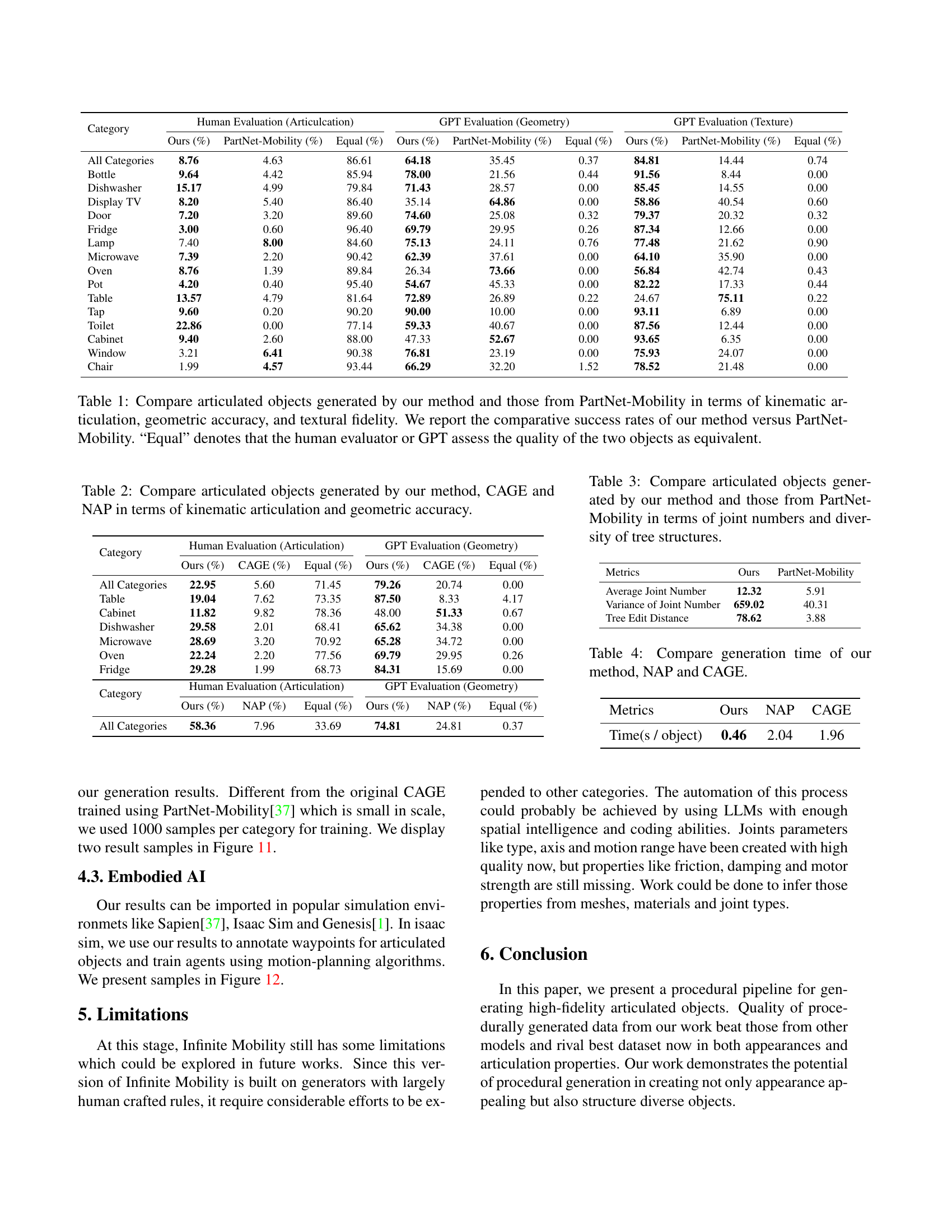

| Category | Articulation (Human): Ours (%) | Articulation (Human): PartNet-Mobility (%) | Articulation (Human): Equal (%) | Geometry (GPT): Ours (%) | Geometry (GPT): PartNet-Mobility (%) | Geometry (GPT): Equal (%) | Texture (GPT): Ours (%) | Texture (GPT): PartNet-Mobility (%) | Texture (GPT): Equal (%) |
|---|---|---|---|---|---|---|---|---|---|
| All Categories | 8.76 | 4.63 | 86.61 | 64.18 | 35.45 | 0.37 | 84.81 | 14.44 | 0.74 |
| Bottle | 9.64 | 4.42 | 85.94 | 78.00 | 21.56 | 0.44 | 91.56 | 8.44 | 0.00 |
| Dishwasher | 15.17 | 4.99 | 79.84 | 71.43 | 28.57 | 0.00 | 85.45 | 14.55 | 0.00 |
| Display TV | 8.20 | 5.40 | 86.40 | 35.14 | 64.86 | 0.00 | 58.86 | 40.54 | 0.60 |
| Door | 7.20 | 3.20 | 89.60 | 74.60 | 25.08 | 0.32 | 79.37 | 20.32 | 0.32 |
| Fridge | 3.00 | 0.60 | 96.40 | 69.79 | 29.95 | 0.26 | 87.34 | 12.66 | 0.00 |
| Lamp | 7.40 | 8.00 | 84.60 | 75.13 | 24.11 | 0.76 | 77.48 | 21.62 | 0.90 |
| Microwave | 7.39 | 2.20 | 90.42 | 62.39 | 37.61 | 0.00 | 64.10 | 35.90 | 0.00 |
| Oven | 8.76 | 1.39 | 89.84 | 26.34 | 73.66 | 0.00 | 56.84 | 42.74 | 0.43 |
| Pot | 4.20 | 0.40 | 95.40 | 54.67 | 45.33 | 0.00 | 82.22 | 17.33 | 0.44 |
| Table | 13.57 | 4.79 | 81.64 | 72.89 | 26.89 | 0.22 | 24.67 | 75.11 | 0.22 |
| Tap | 9.60 | 0.20 | 90.20 | 90.00 | 10.00 | 0.00 | 93.11 | 6.89 | 0.00 |
| Toilet | 22.86 | 0.00 | 77.14 | 59.33 | 40.67 | 0.00 | 87.56 | 12.44 | 0.00 |
| Cabinet | 9.40 | 2.60 | 88.00 | 47.33 | 52.67 | 0.00 | 93.65 | 6.35 | 0.00 |
| Window | 3.21 | 6.41 | 90.38 | 76.81 | 23.19 | 0.00 | 75.93 | 24.07 | 0.00 |
| Chair | 1.99 | 4.57 | 93.44 | 66.29 | 32.20 | 1.52 | 78.52 | 21.48 | 0.00 |

🔼 Table 1 compares the quality of articulated objects generated by the proposed method with those from the PartNet-Mobility dataset along three aspects: kinematic articulation (how plausibly the parts move), geometric accuracy (how precise the shapes are), and textural fidelity (how realistic the surface appearance is). Articulation was judged by human evaluators, while geometry and texture were judged by GPT. For each aspect, the table gives the percentage of comparisons in which the proposed method was preferred, PartNet-Mobility was preferred, or the two were rated equal.

Table 1: Comparison of articulated objects generated by our method and those from PartNet-Mobility in terms of kinematic articulation, geometric accuracy, and textural fidelity. We report the comparative success rates of our method versus PartNet-Mobility. “Equal” denotes that the human evaluator or GPT assessed the two objects as equivalent in quality.

In-depth insights

Procedural Generation

Procedural generation offers a powerful alternative to purely data-driven methods for creating articulated objects. Instead of relying on limited datasets or labor-intensive simulation setups, it uses algorithms and rules to synthesize objects. This makes it possible to build large-scale, diverse datasets with precise control over object properties. The key advantage is scalability: the generation process can be automated to produce a virtually unlimited number of unique objects. The challenge lies in designing rules that capture the complexity and realism of real-world articulated objects, so that the results have plausible geometry, realistic textures, and functional joints. This requires careful encoding of semantic rules and physical constraints to avoid unrealistic or physically unstable structures.
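To make the idea concrete, here is a minimal sketch of a probabilistic program for a single category. Everything in it is a hypothetical illustration rather than the paper's code: the `CabinetParams` fields, the sampling ranges, and the `MIN_DRAWER_HEIGHT` constraint are assumptions. It shows the two ingredients named above: random sampling, seeded so that each object is reproducible, and a semantic rule that constrains the samples.

```python
import random
from dataclasses import dataclass

MIN_DRAWER_HEIGHT = 0.15  # metres; hypothetical semantic constraint


@dataclass(frozen=True)
class CabinetParams:
    width: float
    depth: float
    height: float
    n_drawers: int
    drawer_height: float


def sample_cabinet(seed: int) -> CabinetParams:
    """Sample one cabinet from a hypothetical probabilistic program."""
    rng = random.Random(seed)  # a fixed seed reproduces the same object
    width = rng.uniform(0.4, 1.2)
    depth = rng.uniform(0.35, 0.6)
    height = rng.uniform(0.3, 1.0)
    # Semantic rule: every drawer must be at least MIN_DRAWER_HEIGHT tall,
    # so the available height bounds the number of drawers.
    max_drawers = max(1, int(height // MIN_DRAWER_HEIGHT))
    n_drawers = rng.randint(1, min(4, max_drawers))
    return CabinetParams(width, depth, height, n_drawers, height / n_drawers)


if __name__ == "__main__":
    for seed in range(3):
        print(sample_cabinet(seed))
```

Different seeds yield different cabinets that all satisfy the rule, so the number of objects grows with compute rather than with annotation effort.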

URDF pipelines

The paper has no section titled “URDF pipelines,” but its core methodology is a procedural generation pipeline that constructs URDF-like structures. The process starts with a tree-growing strategy that iteratively attaches new nodes (links) to existing ones, guided by semantic rules; this mirrors how URDF describes an articulated object as links connected by joints. A key advantage of the pipeline is its controlled nature: it offers precise control over geometry and semantics and therefore yields accurate physical properties, unlike methods that rely on noisy model inference. It also generates sophisticated articulation trees, producing object structures beyond those found in existing datasets. Mesh assembly seamlessly integrates procedural meshes with curated dataset assets, which ensures both the quality and the diversity of the final meshes. Physical plausibility is another central concern. Future work that infers friction, damping, and motor strength would be a helpful extension.
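The tree-growing strategy can be illustrated with a short breadth-first sketch. The rule table `RULES`, the part kinds, and the branching choices below are hypothetical stand-ins for the paper's category-specific rules; the point is only that each new node is attached to an existing node, with its kind and joint type chosen by a semantic rule rather than at random.

```python
import random
from dataclasses import dataclass, field

# Hypothetical semantic rules: which (child kind, joint type) pairs may be
# attached to each parent kind. Not taken from the paper.
RULES = {
    "cabinet_body": [("drawer", "prismatic"), ("door", "revolute"), ("shelf", "fixed")],
    "drawer": [("handle", "fixed")],
    "door": [("handle", "fixed")],
    "shelf": [],
    "handle": [],
}


@dataclass
class Node:
    name: str
    kind: str
    joint: str = "fixed"  # joint connecting this node to its parent
    children: list = field(default_factory=list)


def grow_tree(seed: int, max_nodes: int = 12) -> Node:
    """Grow an articulation tree breadth-first under the rules above."""
    rng = random.Random(seed)
    root = Node("base", "cabinet_body")
    frontier, count = [root], 1
    while frontier and count < max_nodes:
        parent = frontier.pop(0)
        options = RULES[parent.kind]
        if not options:
            continue  # leaf kinds accept no children
        n_children = rng.randint(1, 3) if parent.kind == "cabinet_body" else 1
        for _ in range(n_children):
            if count >= max_nodes:
                break
            kind, joint = rng.choice(options)
            child = Node(f"{kind}_{count}", kind, joint)
            parent.children.append(child)
            frontier.append(child)
            count += 1
    return root
```

Each node stores the joint that connects it to its parent, so the resulting tree maps directly onto URDF links (nodes) and joints (edges).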

Ensuring Fidelity

Ensuring fidelity in procedurally generated articulated objects is essential for their use in embodied AI and simulation. The paper addresses this challenge in several ways. First, category-specific growth strategies for articulation trees ensure realistic part attachments based on semantic rules, moving beyond purely random generation toward structures aligned with real-world objects. Second, geometry generation combines procedural mesh creation with dataset retrieval, offering both flexibility and quality. Third, procedural refinement adjusts retrieved meshes so that their connections are physically plausible, preventing parts from floating or intersecting. The same attention to physical plausibility covers collisions with the ground and insufficient gaps between parts. Quantitative and qualitative evaluations support these design choices: a human evaluation of motion structure shows the improvement in kinematic articulation, and quantitative metrics comparing the generated objects with existing datasets and state-of-the-art generative models measure the gains over other methods.
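The gap and intersection checks mentioned above can be approximated cheaply with axis-aligned bounding boxes (AABBs). The sketch below is an assumption, not the paper's procedure: it flags every pair of moving parts whose boxes are closer than a hypothetical minimum clearance `min_gap`. Because each mesh lies inside its box, the distance between two AABBs is a lower bound on the distance between the meshes, so a pair that passes is guaranteed to be far enough apart, while a flagged pair may be a false positive that a finer mesh-level check would clear.

```python
from itertools import combinations
from typing import Dict, List, NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


class AABB(NamedTuple):
    lo: Vec3  # minimum corner (x, y, z)
    hi: Vec3  # maximum corner (x, y, z)


def clearance(a: AABB, b: AABB) -> float:
    """Euclidean distance between two boxes; 0.0 if they touch or overlap."""
    sq = 0.0
    for i in range(3):
        gap = max(b.lo[i] - a.hi[i], a.lo[i] - b.hi[i], 0.0)
        sq += gap * gap
    return sq ** 0.5


def too_close(parts: Dict[str, AABB], min_gap: float) -> List[Tuple[str, str]]:
    """Return the pairs of moving parts whose clearance is below min_gap."""
    return [
        (n1, n2)
        for n1, n2 in combinations(sorted(parts), 2)
        if clearance(parts[n1], parts[n2]) < min_gap
    ]


drawers = {
    "drawer_0": AABB((0.0, 0.0, 0.00), (0.5, 0.4, 0.20)),
    "drawer_1": AABB((0.0, 0.0, 0.201), (0.5, 0.4, 0.40)),  # 1 mm above drawer_0
    "drawer_2": AABB((0.0, 0.0, 0.41), (0.5, 0.4, 0.60)),   # 10 mm above drawer_1
}
print(too_close(drawers, min_gap=0.002))  # [('drawer_0', 'drawer_1')]
```

In practice only pairs that move relative to each other (such as neighboring drawers) would be checked, since rigidly attached parts like a drawer and its handle are meant to touch.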

VLM Evaluation

Vision-language models (VLMs) are used to assess mesh quality in a scalable and consistent way. Following a recent evaluation paradigm, GPT-4V serves as the evaluator: it receives normal maps and RGB images of each mesh and rates its geometry and texture, which makes large-scale testing practical. Normal maps expose geometric detail, while RGB images expose textural fidelity, so together the two inputs cover both aspects of mesh quality. Compared with manual inspection, VLM-based rating makes the evaluation of generated 3D objects faster and more uniform across large numbers of samples. By adopting a standardized, scalable evaluation method, the study aims to provide a benchmark for future research in procedural generation.
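The percentages in Table 1 are pairwise preference rates. The minimal sketch below shows how per-comparison verdicts, whether from human raters or from a VLM judge, could be aggregated into such rates. The verdict labels `"ours"`, `"baseline"`, and `"equal"` and the helper name are hypothetical, and the prompt sent to the VLM is not modeled.

```python
from collections import Counter
from typing import Dict, Iterable

LABELS = ("ours", "baseline", "equal")


def preference_rates(verdicts: Iterable[str]) -> Dict[str, float]:
    """Turn one verdict per pairwise comparison into percentages."""
    counts = Counter(verdicts)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected verdicts: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no verdicts to aggregate")
    return {label: round(100.0 * counts[label] / total, 2) for label in LABELS}


print(preference_rates(["ours"] * 3 + ["baseline"] + ["equal"] * 4))
# {'ours': 37.5, 'baseline': 12.5, 'equal': 50.0}
```

Because the three rates for each criterion sum to 100 up to rounding, every row of Table 1 can be sanity-checked the same way.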

Scaling next step

The paper has no section titled “Scaling Next Step,” but several future directions follow from its content. A crucial next step is automating the procedural generation process: the system currently relies heavily on human-crafted rules, which limits how easily it scales to new object categories. Large language models (LLMs) with spatial reasoning and coding abilities could automate rule creation. In addition, while the joint parameters are of high quality, inferring physical properties such as friction and damping from meshes and materials would be beneficial. The authors already train generative models such as CAGE on the framework's synthetic data, so that articulated objects can be generated directly in a single shot. Reinforcement learning could further improve the realism of articulation and interaction. Finally, incorporating more intricate joint mechanisms and dynamic behaviors into the generative process could produce more compelling and realistic simulations of articulated objects.

More visual insights

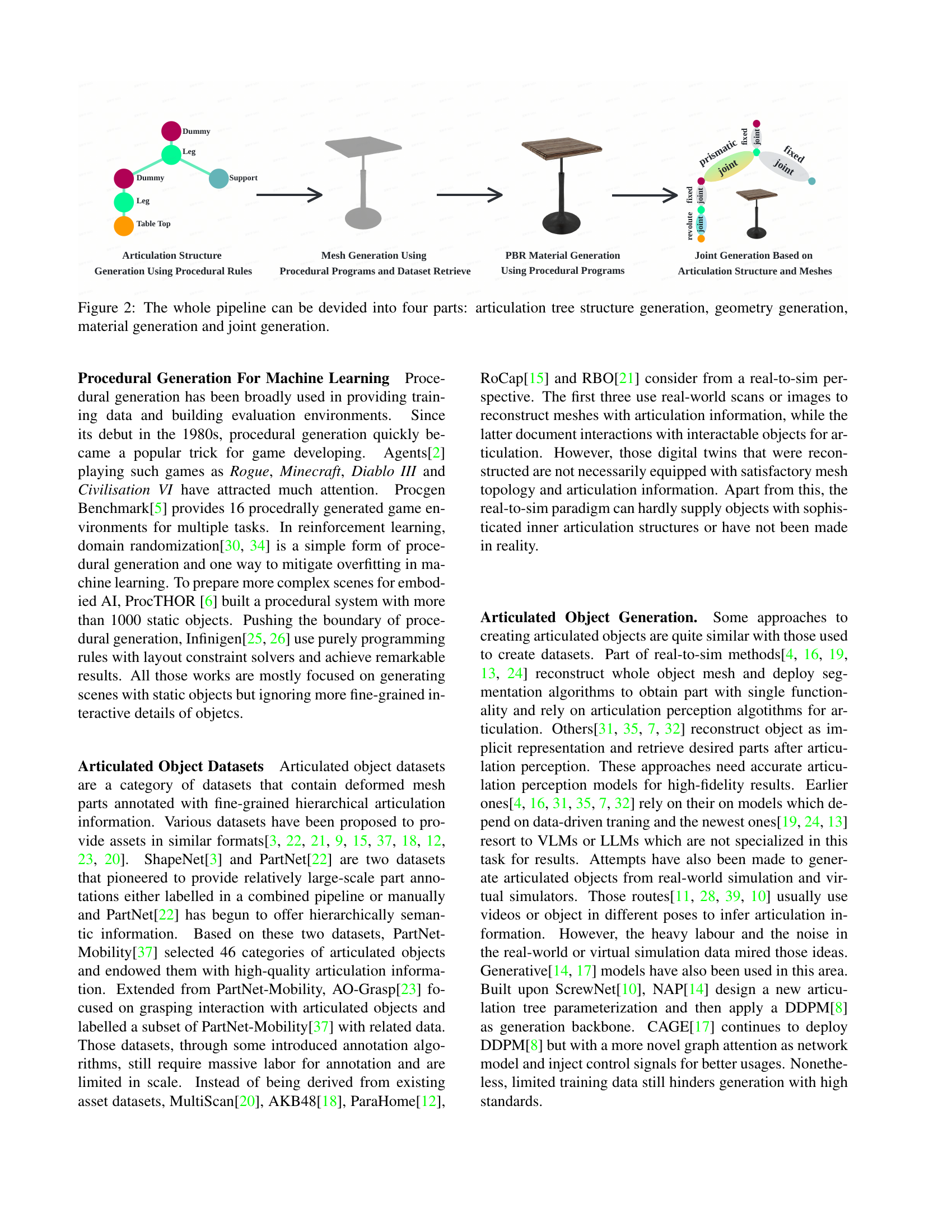

🔼 This figure illustrates the four main stages of the articulated object generation pipeline. First, an articulation tree structure is created, defining the hierarchical relationships between different parts of the object. Next, geometry is generated for each part, creating the 3D shapes. Then, materials are assigned to these shapes, adding realistic textures and appearance. Finally, joints are defined between parts based on the articulation tree, enabling the movement and interaction between them.

Figure 2: The whole pipeline can be divided into four parts: articulation tree structure generation, geometry generation, material generation, and joint generation.

🔼 This figure illustrates the structure of a Unified Robot Description Format (URDF) file, a standard format for representing articulated objects. Each component of an articulated object is represented as a “link,” depicted here as a textured mesh. Joints connect pairs of links, defining how the parts move relative to each other and establishing the overall articulation structure of the object.

Figure 3: Structure of the URDF file. Each link is a part of the object, which is represented as a textured mesh in our case. Each joint connects two links and describes the articulation structure between them.
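For readers unfamiliar with URDF, the sketch below writes a two-link object (a cabinet body and a hinged door) using Python's standard `xml.etree.ElementTree`. The element names (`robot`, `link`, `visual`, `geometry`, `mesh`, `joint`, `parent`, `child`, `origin`, `axis`, `limit`) follow the URDF format; the mesh paths, the joint values, and the `make_urdf` helper itself are hypothetical. A complete file would normally also give each link `collision` and `inertial` elements, which are omitted here for brevity.

```python
import xml.etree.ElementTree as ET


def _xyz(values):
    return " ".join(str(v) for v in values)


def make_urdf(name, links, joints):
    """Build a URDF string from (link_name, mesh_path) pairs and joint dicts."""
    robot = ET.Element("robot", name=name)
    for link_name, mesh_path in links:
        link = ET.SubElement(robot, "link", name=link_name)
        geometry = ET.SubElement(ET.SubElement(link, "visual"), "geometry")
        ET.SubElement(geometry, "mesh", filename=mesh_path)
    for j in joints:
        joint = ET.SubElement(robot, "joint", name=j["name"], type=j["type"])
        ET.SubElement(joint, "parent", link=j["parent"])
        ET.SubElement(joint, "child", link=j["child"])
        # Transform from the parent link frame to the joint (and child link) frame.
        ET.SubElement(joint, "origin", xyz=_xyz(j.get("xyz", (0, 0, 0))), rpy="0 0 0")
        if j["type"] != "fixed":
            ET.SubElement(joint, "axis", xyz=_xyz(j["axis"]))
        if j["type"] in ("revolute", "prismatic"):
            # URDF requires a <limit> (with effort and velocity) for these types.
            ET.SubElement(joint, "limit", lower=str(j["lower"]), upper=str(j["upper"]),
                          effort="10", velocity="1")
    return ET.tostring(robot, encoding="unicode")


urdf = make_urdf(
    "cabinet",
    links=[("body", "meshes/body.obj"), ("door", "meshes/door.obj")],
    joints=[{"name": "door_hinge", "type": "revolute", "parent": "body",
             "child": "door", "xyz": (0.3, 0.0, 0.0), "axis": (0, 0, 1),
             "lower": 0.0, "upper": 1.57}],
)
print(urdf)
```

Each textured part becomes a `link`, and each edge of the articulation tree becomes a `joint` naming its parent and child links, which is exactly the structure shown in the figure.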

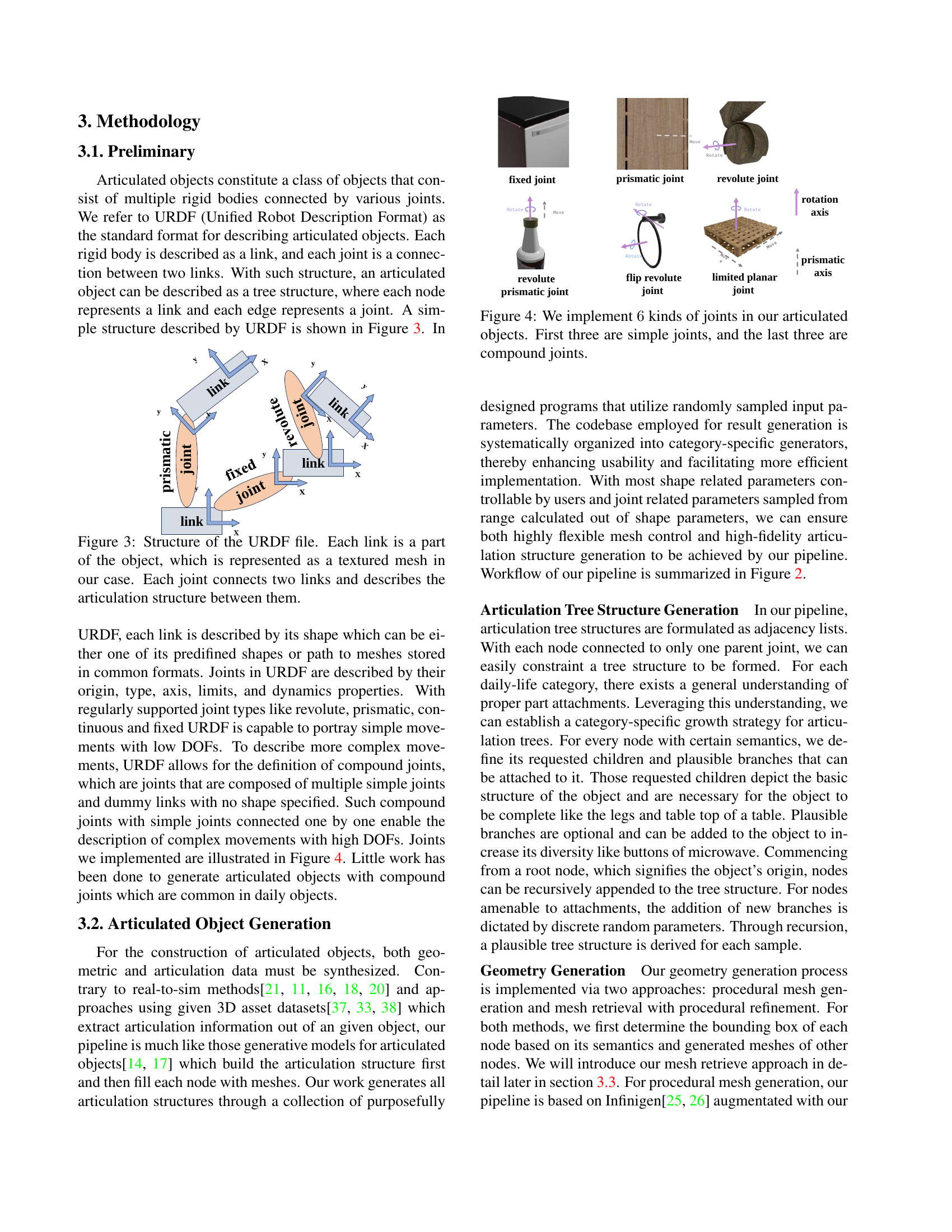

🔼 Figure 4 illustrates the six types of joints used in the construction of articulated objects within the paper. The first three depicted are simple joints: a fixed joint (immobile connection), a prismatic joint (linear motion along an axis), and a revolute joint (rotational motion around an axis). The latter three represent compound joints, which are combinations of simpler joint types to enable more complex movements. These include a flip revolute joint, a limited planar joint, and a combination of prismatic and revolute joints. The figure visually demonstrates the structure and range of motion for each joint type.

Figure 4: We implement 6 kinds of joints in our articulated objects. The first three are simple joints, and the last three are compound joints.
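The difference between simple and compound joints can be sketched with a small forward-kinematics helper. URDF natively offers single-degree-of-freedom types such as `fixed`, `revolute`, and `prismatic`, so this sketch assumes, without confirmation from the paper, that a compound joint is a chain of simple joints linked through intermediate (dummy) links; a limited planar joint, for instance, could be two limited prismatic joints in sequence. The dictionary layout of each joint is hypothetical. Each joint's axis is expressed in its parent frame at the zero configuration, so the child-side joint in a chain must be applied first.

```python
import math


def _rotate(p, axis, angle, pivot):
    """Rotate point p by `angle` radians about the unit `axis` through `pivot` (Rodrigues)."""
    v = [p[i] - pivot[i] for i in range(3)]
    k = axis
    c, s = math.cos(angle), math.sin(angle)
    dot = sum(k[i] * v[i] for i in range(3))
    cross = (k[1] * v[2] - k[2] * v[1],
             k[2] * v[0] - k[0] * v[2],
             k[0] * v[1] - k[1] * v[0])
    return tuple(pivot[i] + v[i] * c + cross[i] * s + k[i] * dot * (1 - c) for i in range(3))


def apply_joint(p, joint, q):
    """Map a point on the child part into the parent frame at joint position q."""
    kind = joint["type"]
    if kind == "fixed":
        return tuple(p)
    if kind == "compound":
        # The chain is listed parent -> child; the child-side joint acts first.
        for sub, q_sub in reversed(list(zip(joint["chain"], q))):
            p = apply_joint(p, sub, q_sub)
        return tuple(p)
    lower, upper = joint["limit"]
    q = min(max(q, lower), upper)  # clamp to the joint limits
    if kind == "revolute":
        return _rotate(p, joint["axis"], q, joint.get("pivot", (0.0, 0.0, 0.0)))
    if kind == "prismatic":
        return tuple(p[i] + joint["axis"][i] * q for i in range(3))
    raise ValueError(f"unsupported joint type: {kind}")


# A slider mounted on a turntable: rotate about z, then slide along the rotated x axis.
turntable_slider = {
    "type": "compound",
    "chain": [
        {"type": "revolute", "axis": (0.0, 0.0, 1.0), "limit": (0.0, math.pi)},
        {"type": "prismatic", "axis": (1.0, 0.0, 0.0), "limit": (0.0, 0.5)},
    ],
}
print(apply_joint((0.0, 0.0, 0.0), turntable_slider, (math.pi / 2, 0.4)))
# approximately (0.0, 0.4, 0.0)
```

Applying the chain in the wrong order would give (0.4, 0, 0) instead, because the slide would ignore the turntable's rotation; this ordering is why compound joints need more care than simple ones.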

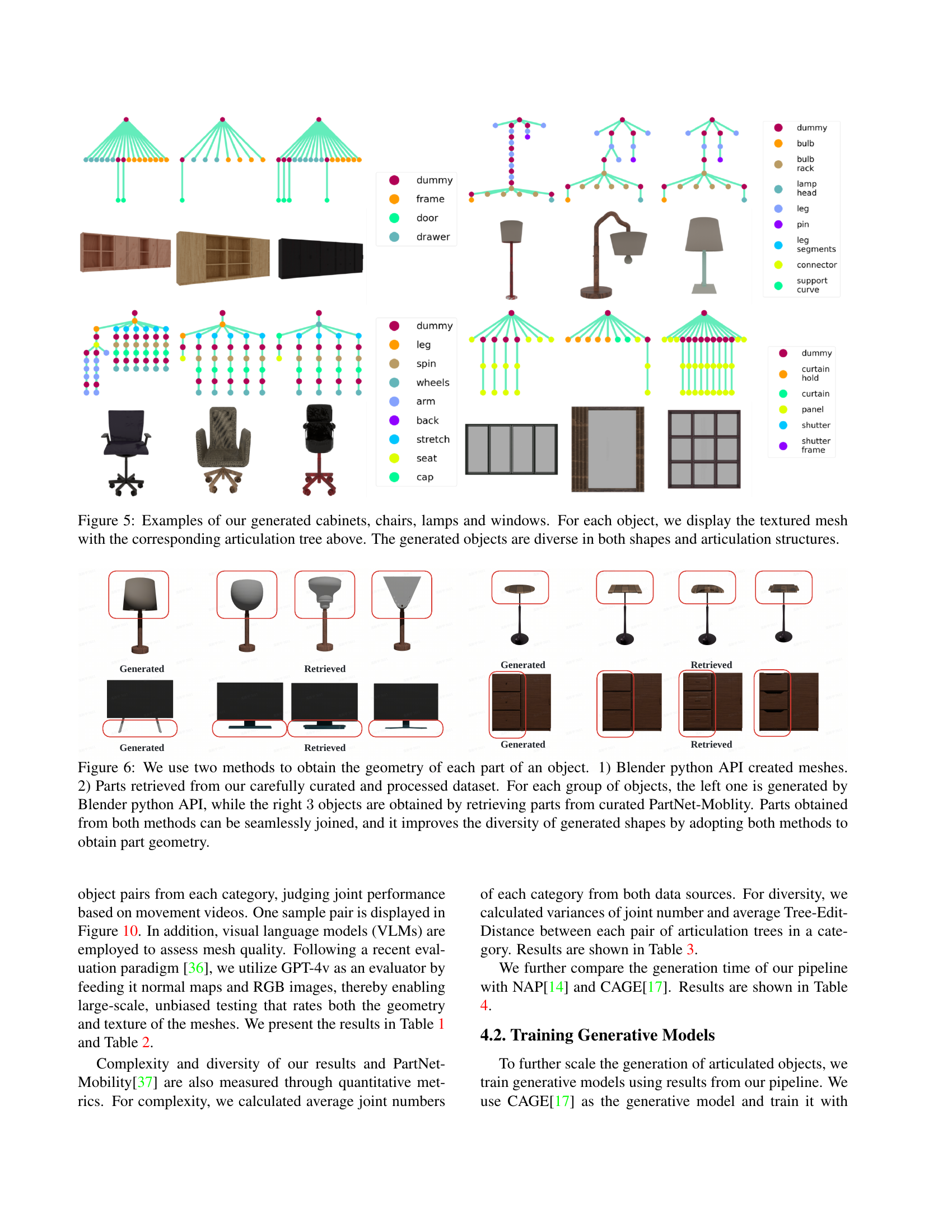

🔼 This figure showcases examples of procedurally generated articulated objects, including cabinets, chairs, lamps, and windows. Each object is presented with its textured 3D mesh and the corresponding articulation tree diagram displayed above it. The articulation tree visually represents the hierarchical structure of the object’s interconnected parts and joints. The figure highlights the diversity achieved in both the shapes and the articulation structures of the generated objects, demonstrating the versatility of the procedural generation method used.

Figure 5: Examples of our generated cabinets, chairs, lamps and windows. For each object, we display the textured mesh with the corresponding articulation tree above. The generated objects are diverse in both shapes and articulation structures.

🔼 Figure 6 illustrates the two approaches used for generating the geometry of object parts: 1) using Blender’s Python API to create meshes, and 2) retrieving pre-existing meshes from a curated dataset (PartNet-Mobility). The figure shows four groups of objects. In each group, the leftmost object’s parts are generated using the Blender API, while the three objects to its right utilize parts from the curated dataset. The seamless integration of parts from both methods is highlighted, demonstrating how combining these approaches enhances the diversity of generated shapes.

Figure 6: We use two methods to obtain the geometry of each part of an object: 1) meshes created with the Blender Python API, and 2) parts retrieved from our carefully curated and processed dataset. In each group of objects, the left one is generated with the Blender Python API, while the 3 objects on the right are obtained by retrieving parts from the curated PartNet-Mobility dataset. Parts obtained with both methods can be seamlessly joined, and adopting both methods improves the diversity of generated shapes.

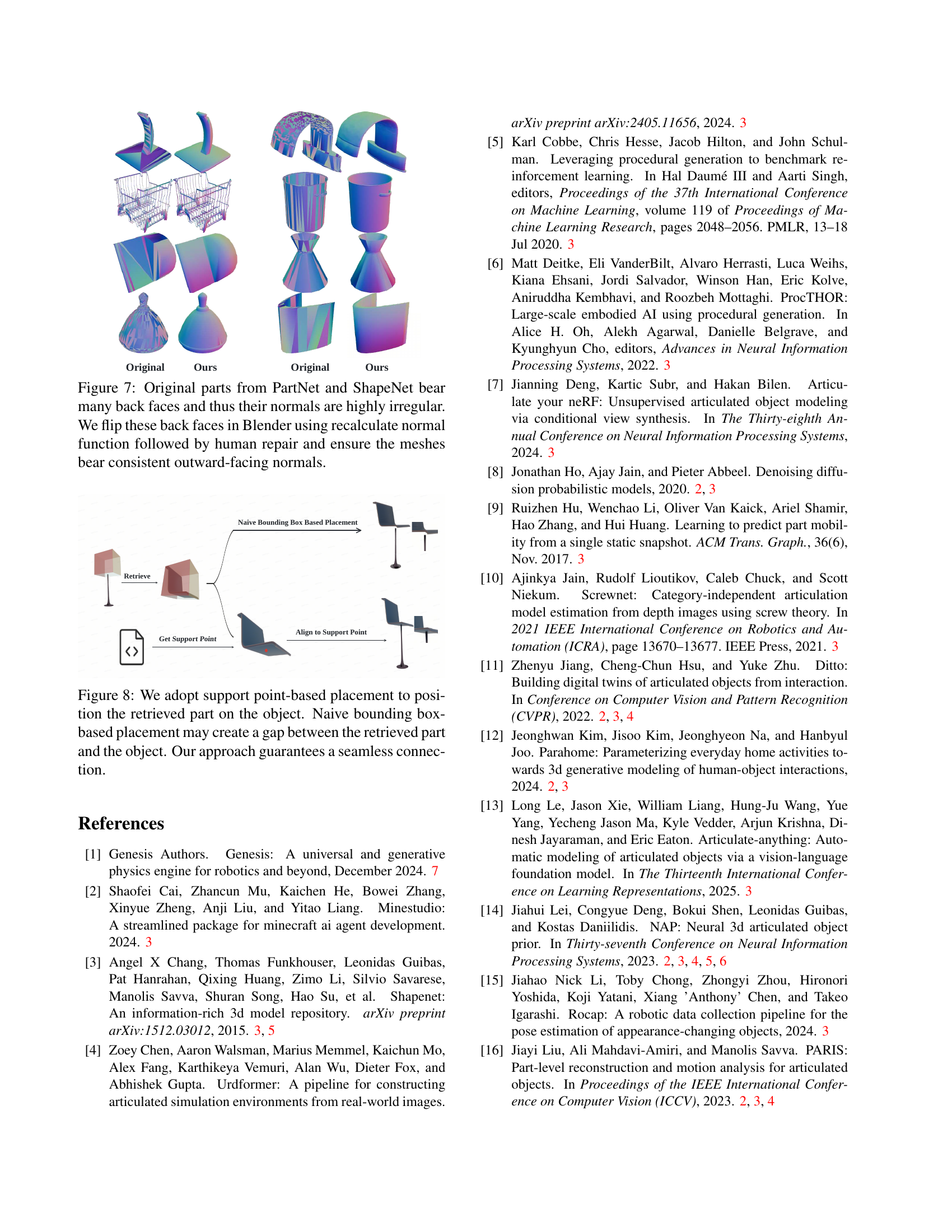

🔼 Figure 7 demonstrates the preprocessing step applied to 3D model datasets like PartNet and ShapeNet. These datasets often contain meshes with incorrectly oriented faces (back faces), leading to inconsistent and irregular surface normals. The image shows examples of original meshes from these datasets compared to the improved meshes after processing. This process involves using Blender software’s ‘recalculate normals’ function to correct the face orientation and flipping back faces. Following this automated step, manual human repair was performed to further refine the meshes and ensure all normals consistently point outwards, resulting in improved quality and suitability for downstream processing in the articulated object generation pipeline.

Figure 7: Original parts from PartNet and ShapeNet contain many back faces, so their normals are highly irregular. We flip these back faces in Blender using the Recalculate Normals function, followed by human repair, to ensure that the meshes have consistent outward-facing normals.
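The goal of this step, consistent outward-facing normals, can be checked with a short sketch. Assuming a closed triangle mesh whose faces already have mutually consistent winding, the sign of its signed volume (computed via the divergence theorem) tells whether the normals point outward; if the volume is negative, reversing every face's winding flips all normals. This covers only the global orientation. Blender's Recalculate Outside operator (`bpy.ops.mesh.normals_make_consistent(inside=False)`) also makes the winding consistent across faces, and open or non-manifold meshes are plausibly what the human repair pass addresses. The helper names below are hypothetical.

```python
def signed_volume(vertices, faces):
    """Signed volume of a closed triangle mesh; positive when normals face outward."""
    total = 0.0
    for a, b, c in faces:
        (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = vertices[a], vertices[b], vertices[c]
        # Scalar triple product v_a . (v_b x v_c) = 6 x volume of tetrahedron (origin, a, b, c).
        total += (x1 * (y2 * z3 - z2 * y3)
                  - y1 * (x2 * z3 - z2 * x3)
                  + z1 * (x2 * y3 - y2 * x3))
    return total / 6.0


def orient_outward(vertices, faces):
    """Flip the winding of every face if the mesh is oriented inside-out."""
    if signed_volume(vertices, faces) < 0:
        return [(a, c, b) for a, b, c in faces]  # swapping two indices reverses the normal
    return list(faces)


# Unit tetrahedron with every face wound inward (normals pointing into the solid).
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
inward = [(0, 1, 2), (0, 3, 1), (0, 2, 3), (1, 3, 2)]
fixed = orient_outward(verts, inward)
print(signed_volume(verts, inward), signed_volume(verts, fixed))
# -0.16666666666666666 0.16666666666666666
```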

🔼 This figure illustrates the improved mesh placement technique used in the paper. The standard method, using bounding boxes, often leaves gaps between parts of the assembled object, leading to unrealistic results. The proposed ‘support point-based placement’ method ensures a seamless connection between parts, enhancing the visual realism and physical plausibility of the generated 3D models. The figure directly compares the results of both methods.

Figure 8: We adopt support point-based placement to position the retrieved part on the object. Naive bounding box-based placement may create a gap between the retrieved part and the object. Our approach guarantees a seamless connection.
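The caption does not spell out how support points are chosen, so the following is only one plausible reading, with hypothetical helper names. Suppose a part must attach at a single target point, such as a mounting point on the object. Bounding-box placement snaps the box's bottom centre onto that point, but if the part is slanted or hollow there may be no geometry at the box centre, which leaves a gap. Support-point placement instead snaps the part's extreme vertex along the attachment direction (here straight down, -z) onto the target.

```python
import math


def bbox_offset(part, target):
    """Naive: translate so the bounding-box bottom centre lands on `target`."""
    xs, ys, zs = zip(*part)
    anchor = ((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2, min(zs))
    return tuple(t - a for t, a in zip(target, anchor))


def support_offset(part, target):
    """Translate so the lowest vertex (the support point along -z) lands on `target`."""
    anchor = min(part, key=lambda v: v[2])
    return tuple(t - a for t, a in zip(target, anchor))


def gap(part, offset, target):
    """Distance from the target point to the nearest vertex of the placed part."""
    return min(math.dist(tuple(v[i] + offset[i] for i in range(3)), target) for v in part)


# A slanted part whose lowest vertices lie off the bounding-box centre.
slanted = [(0.2, 0.0, 0.0), (0.25, 0.0, 0.0), (0.0, 0.0, 0.5), (0.05, 0.0, 0.5)]
target = (1.0, 0.5, 0.3)
print(gap(slanted, bbox_offset(slanted, target), target))     # about 0.075
print(gap(slanted, support_offset(slanted, target), target))  # about 0.0
```

With bounding-box placement the nearest vertex ends up 7.5 cm from the attachment point, whereas support-point placement puts a vertex exactly on it, which matches the seamless connection described in the caption.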

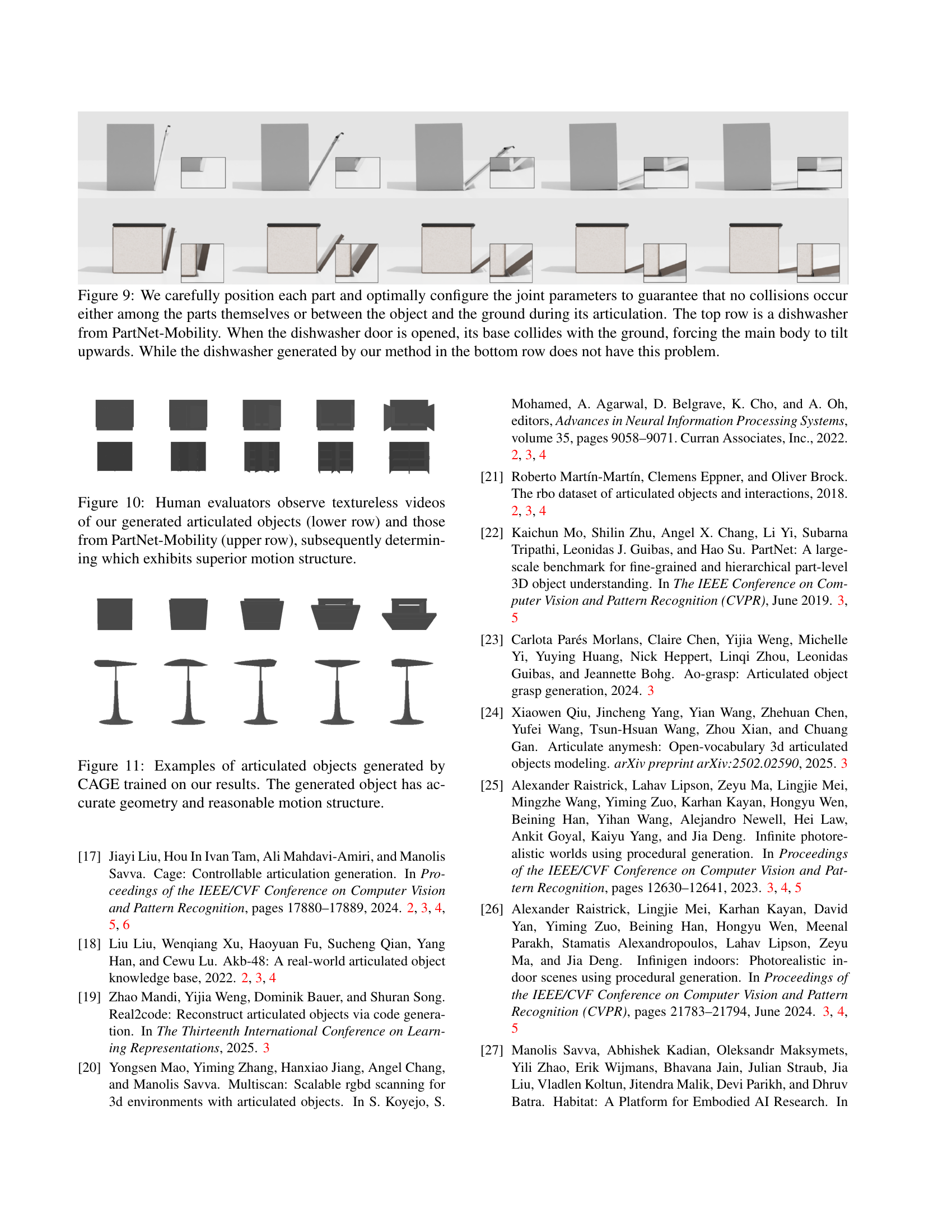

🔼 This figure demonstrates the importance of careful part placement and joint parameter configuration in articulated object generation to avoid collisions. The top row shows a dishwasher from the PartNet-Mobility dataset; when the door is opened, the base collides with the ground, causing the entire object to tilt. The bottom row shows a dishwasher generated by the method described in the paper, which avoids this problem due to improved part placement and joint parameters.

Figure 9: We carefully position each part and optimally configure the joint parameters to guarantee that no collisions occur during articulation, either among the parts themselves or between the object and the ground. The top row shows a dishwasher from PartNet-Mobility: when its door is opened, its base collides with the ground, forcing the main body to tilt upwards. The dishwasher generated by our method (bottom row) does not have this problem.
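A ground-collision check of this kind can be sketched by sweeping a joint through its range and tracking the lowest point of the moving part. The sketch assumes a dishwasher-style door modeled as a box hinged about a horizontal x-axis along its back-bottom edge, so that its thickness swings below the hinge line as it opens, and a ground plane at z = 0. The dimensions and the `door_box` helper are hypothetical. Sampling at discrete joint values can in principle miss a collision between samples, and the sketch checks only the door against the ground, not parts against each other.

```python
import math


def rotate_about_x(p, pivot, angle):
    """Rotate point p about the x-parallel axis through `pivot`."""
    y, z = p[1] - pivot[1], p[2] - pivot[2]
    c, s = math.cos(angle), math.sin(angle)
    return (p[0], pivot[1] + y * c - z * s, pivot[2] + y * s + z * c)


def lowest_point(vertices, pivot, lower, upper, steps=90):
    """Minimum z reached by any vertex while the joint sweeps [lower, upper]."""
    lowest = math.inf
    for k in range(steps + 1):
        q = lower + (upper - lower) * k / steps
        for v in vertices:
            lowest = min(lowest, rotate_about_x(v, pivot, q)[2])
    return lowest


def door_box(hinge_z, width=0.6, height=0.6, thickness=0.03):
    """Corners of a door panel in front of (y < 0) a hinge at y = 0, z = hinge_z."""
    return [(x, y, z)
            for x in (0.0, width)
            for y in (-thickness, 0.0)
            for z in (hinge_z, hinge_z + height)]


for hinge_z in (0.10, 0.02):
    low = lowest_point(door_box(hinge_z), (0.0, 0.0, hinge_z), 0.0, math.pi / 2)
    print(hinge_z, round(low, 3), "collides" if low < 0.0 else "clear")
```

With the hinge 10 cm above the ground, the fully opened door stays clear (its lowest point is about 7 cm up), while a hinge only 2 cm up lets the door dip about 1 cm below the ground, the kind of configuration that would make a simulated object tilt.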

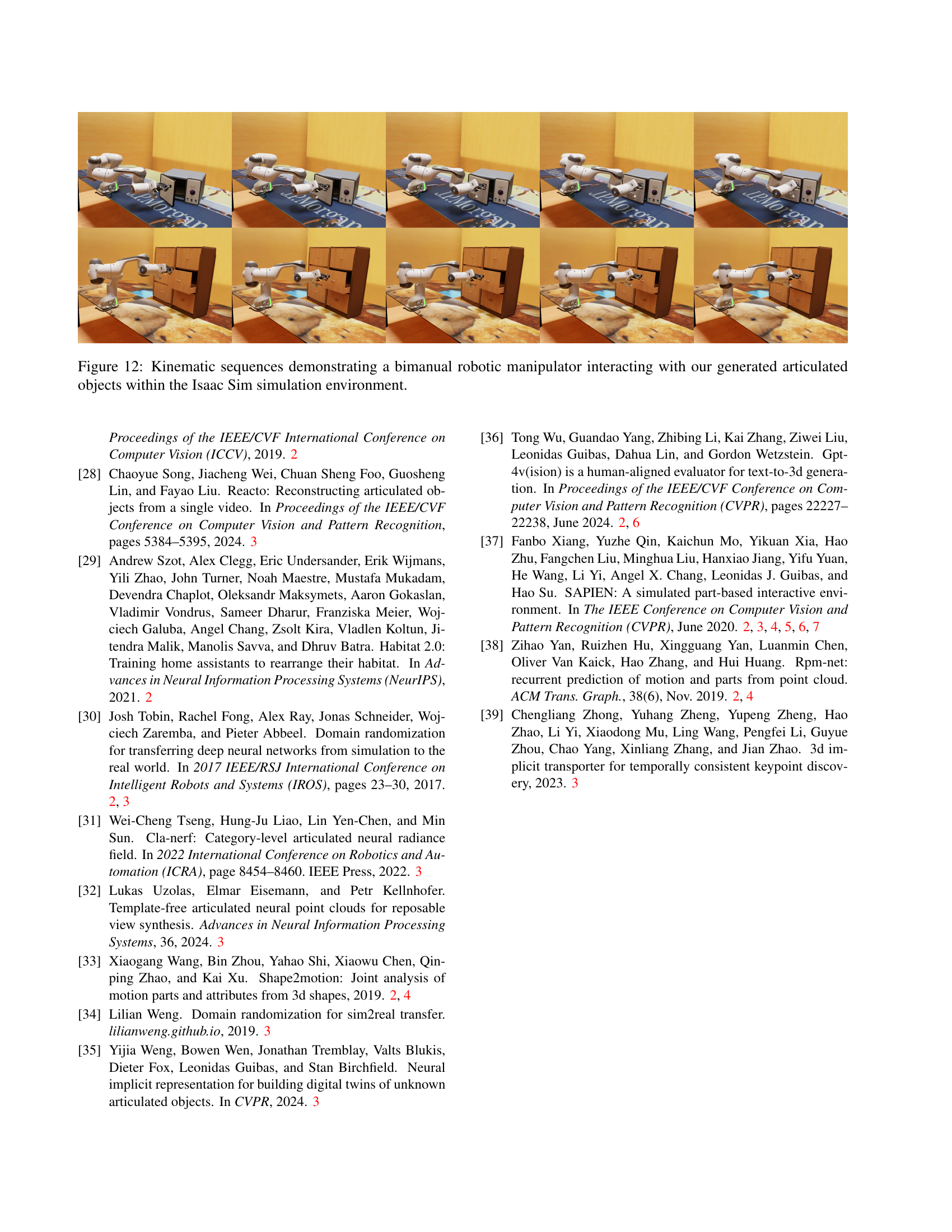

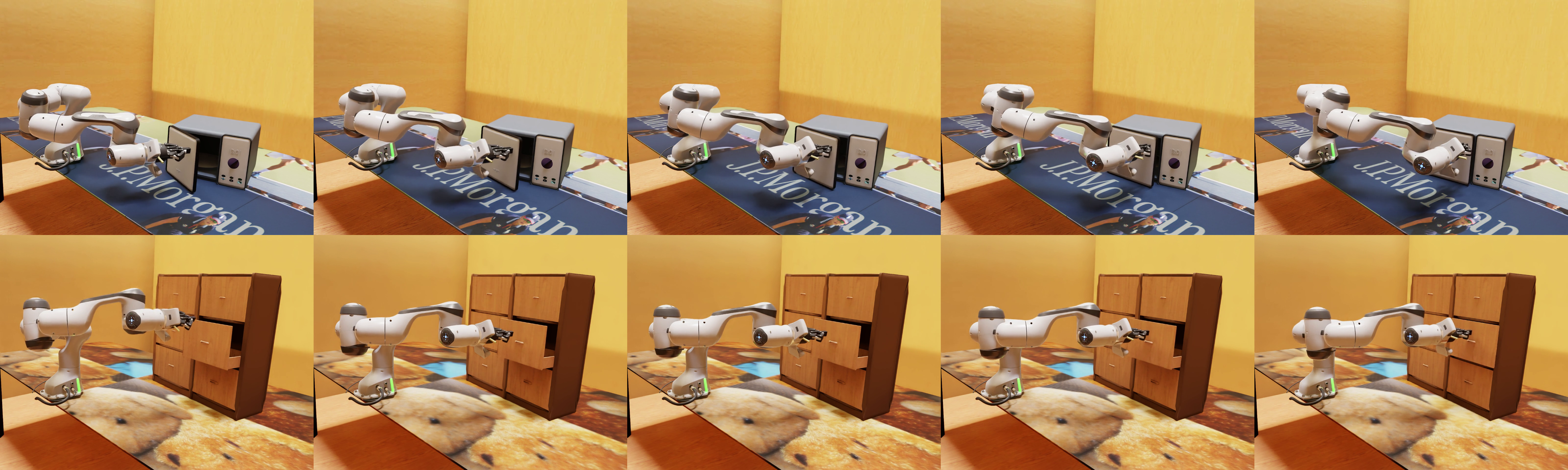

🔼 This figure shows a comparison of the motion quality of articulated objects generated by the proposed method and those from the PartNet-Mobility dataset. Human evaluators watched textureless videos of the objects in motion. The top row displays videos of objects from PartNet-Mobility, while the bottom row shows videos of objects generated by the Infinite Mobility method. The evaluators judged which set of objects demonstrated superior motion structure, based on the realism and fluidity of their movements.

Figure 10: Human evaluators observe textureless videos of our generated articulated objects (lower row) and those from PartNet-Mobility (upper row), subsequently determining which exhibits superior motion structure.

🔼 This figure showcases examples of articulated objects generated by the CAGE (Controllable Articulation Generation) model, trained on synthetic articulated objects produced by the Infinite Mobility method described in the paper. The generated objects have accurate geometry and physically plausible articulation, indicating that the synthetic data is useful for training generative models.

Figure 11: Examples of articulated objects generated by CAGE trained on our results. The generated objects have accurate geometry and reasonable motion structure.
