TL;DR#

Large language models (LLMs) have shown remarkable capabilities, but strong performance in specialized domains like mathematical reasoning and non-English languages often requires extensive training on massive datasets, leading to substantial computational costs. Furthermore, most multilingual LLMs are trained primarily on English-centric corpora, leading to a significant performance gap for other languages. Therefore, the conventional LLM training assumes that massive datasets are indispensable for strong performance, especially in complex reasoning tasks.

This paper investigates a contrasting approach: strategic fine-tuning on a small, high-quality, bilingual (English-French) dataset to enhance both reasoning capabilities and French language proficiency. The study introduces Pensez 7B, an LLM fine-tuned on a curated dataset of only 2,000 samples. The results demonstrate significant improvements in mathematical reasoning and French-specific tasks. Pensez 7B exhibits competitive performance on reasoning tasks and also outperforms knowledge retrieval with balanced proficiency. The researchers also release their dataset, training code, and fine-tuned model to encourage reproducibility and further investigation.

Key Takeaways#

Why does it matter?#

This paper is important for researchers because it challenges the traditional reliance on massive datasets for achieving strong reasoning performance in LLMs. By demonstrating the effectiveness of strategic fine-tuning on a small, high-quality dataset, the research opens new avenues for developing efficient, multilingual LLMs, particularly in resource-constrained scenarios. This approach enables broader accessibility and paves the way for more sustainable AI development.

Visual Insights#

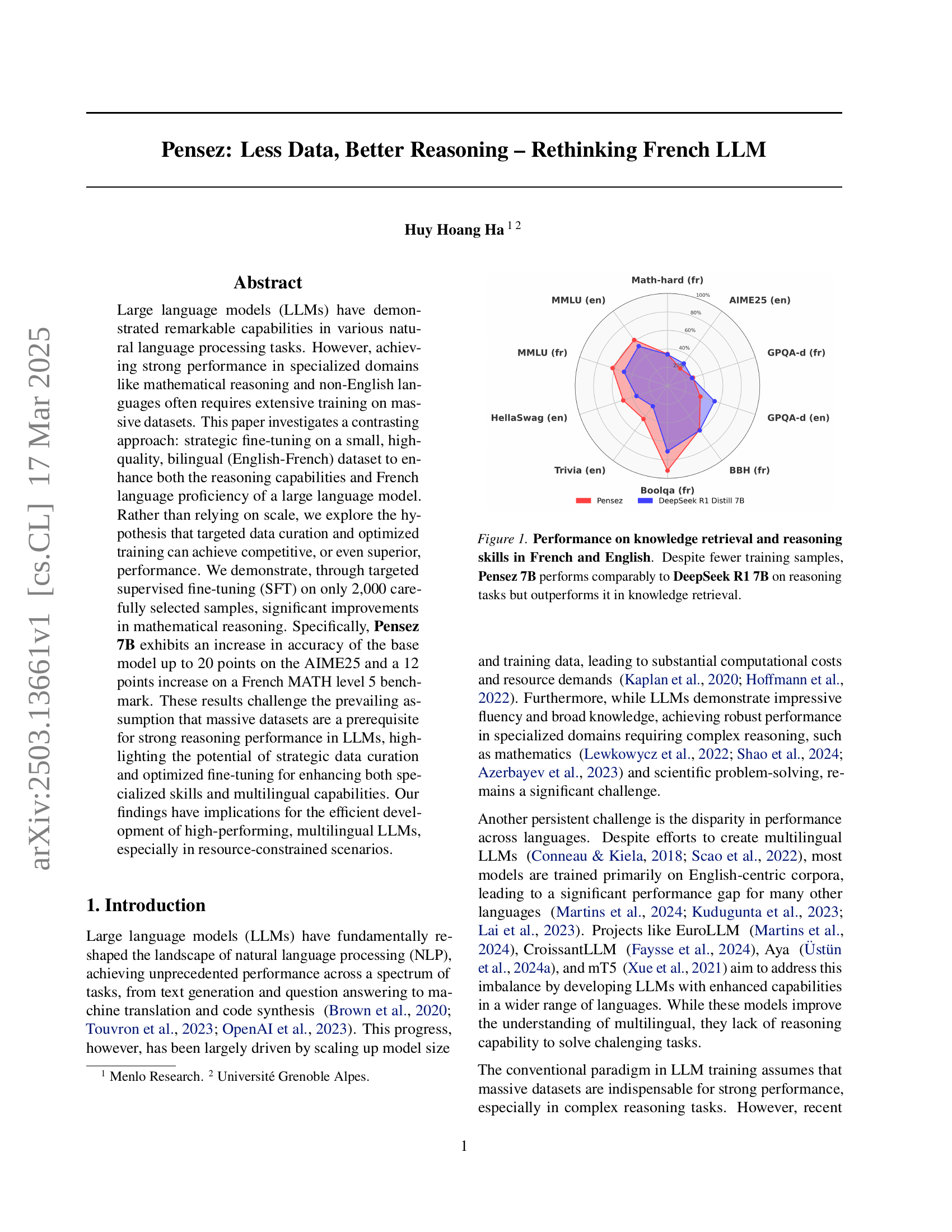

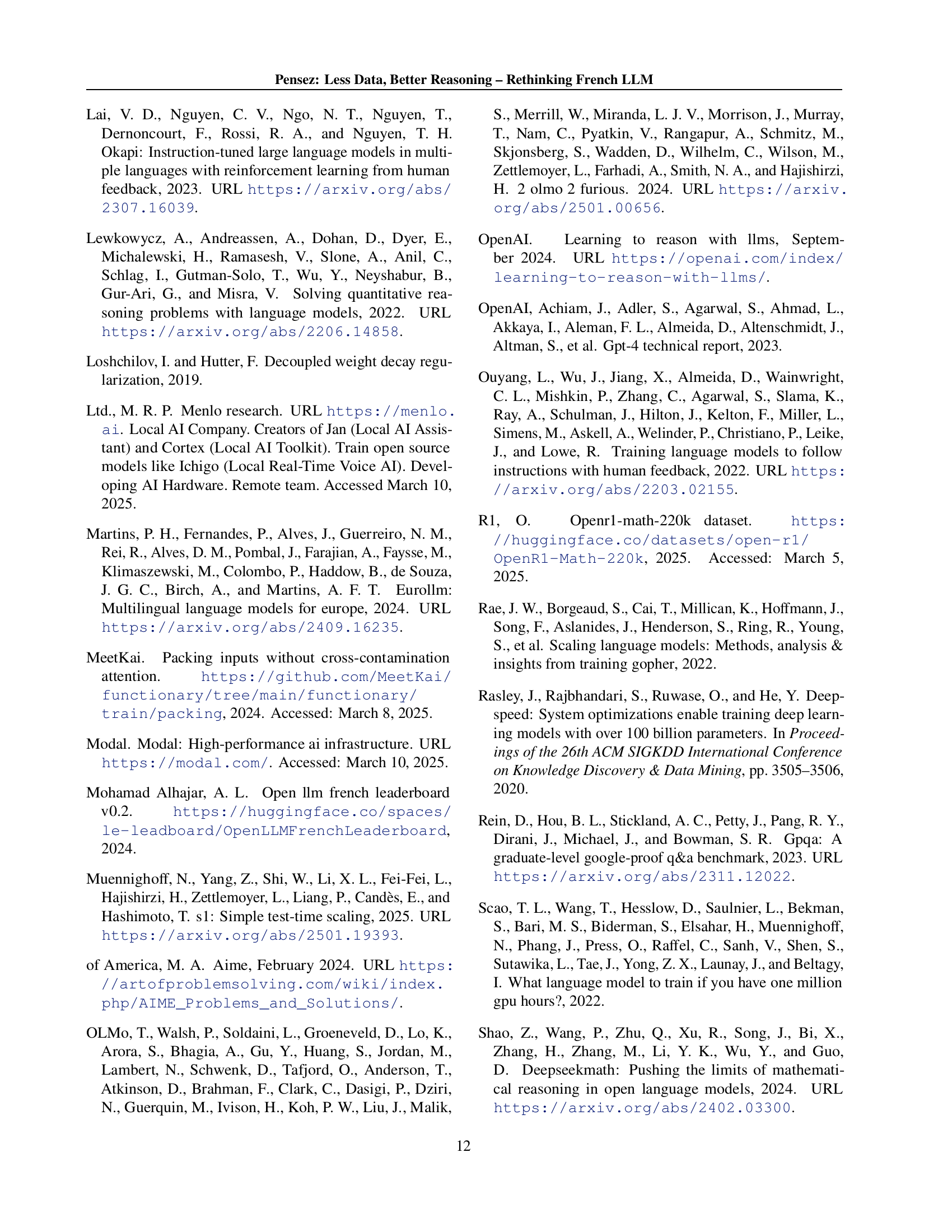

🔼 This figure displays a radar chart comparing the performance of various large language models (LLMs) on a range of knowledge retrieval and reasoning tasks in both English and French. The models compared include Pensez 7B, DeepSeek R1 7B, and others. Each axis represents a specific task, and the distance from the center reflects the model’s performance on that task (higher is better). The key takeaway is that despite being trained on significantly fewer samples than other models (as indicated in the figure and explicitly stated in the caption), Pensez 7B demonstrates comparable performance on reasoning tasks, and even surpasses other models in knowledge retrieval tasks.

read the caption

Figure 1: Performance on knowledge retrieval and reasoning skills in French and English. Despite fewer training samples, Pensez 7B performs comparably to DeepSeek R1 7B on reasoning tasks but outperforms it in knowledge retrieval.

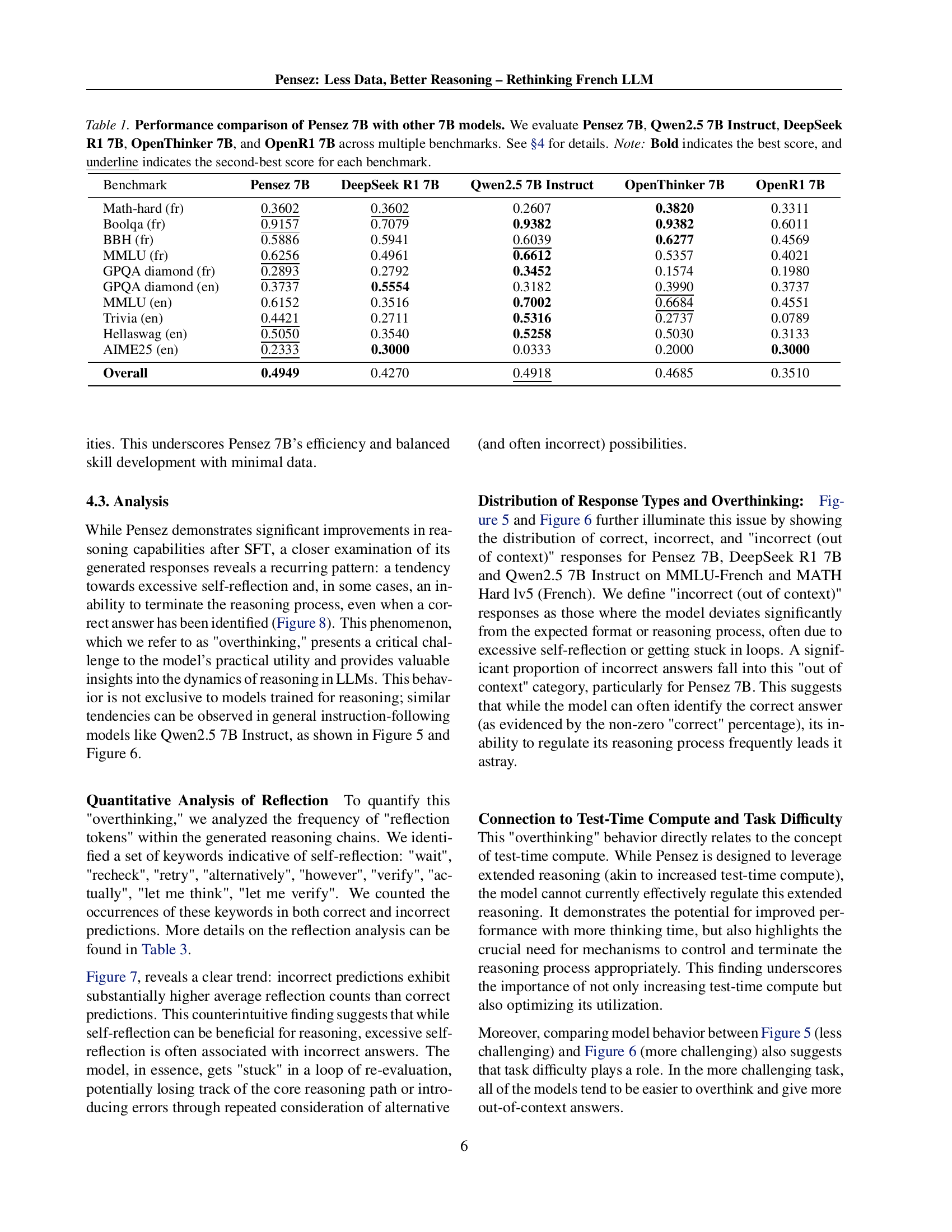

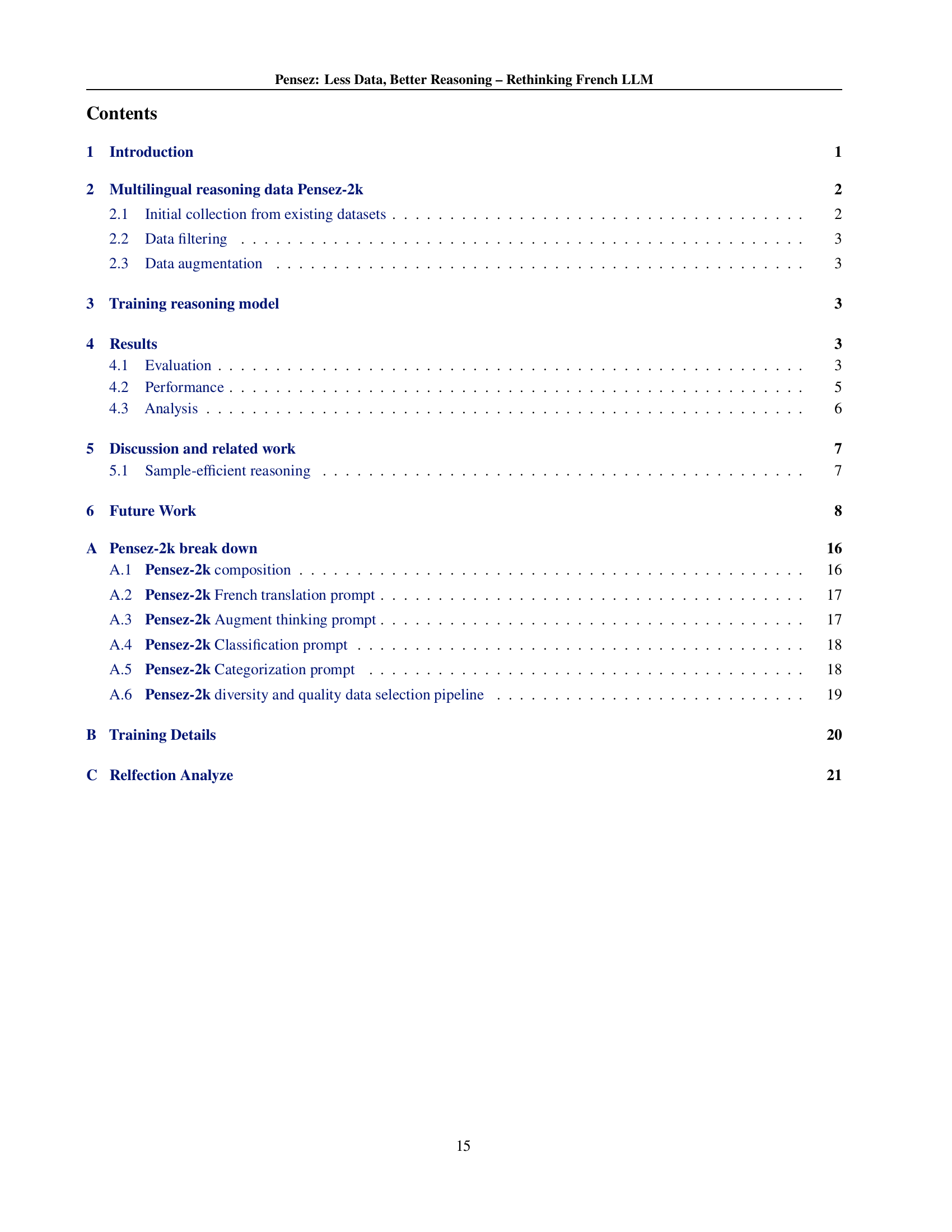

| Benchmark | Pensez 7B | DeepSeek R1 7B | Qwen2.5 7B Instruct | OpenThinker 7B | OpenR1 7B |

| Math-hard (fr) | 0.3602 | 0.3602 | 0.2607 | 0.3820 | 0.3311 |

| Boolqa (fr) | 0.9157 | 0.7079 | 0.9382 | 0.9382 | 0.6011 |

| BBH (fr) | 0.5886 | 0.5941 | 0.6039 | 0.6277 | 0.4569 |

| MMLU (fr) | 0.6256 | 0.4961 | 0.6612 | 0.5357 | 0.4021 |

| GPQA diamond (fr) | 0.2893 | 0.2792 | 0.3452 | 0.1574 | 0.1980 |

| GPQA diamond (en) | 0.3737 | 0.5554 | 0.3182 | 0.3990 | 0.3737 |

| MMLU (en) | 0.6152 | 0.3516 | 0.7002 | 0.6684 | 0.4551 |

| Trivia (en) | 0.4421 | 0.2711 | 0.5316 | 0.2737 | 0.0789 |

| Hellaswag (en) | 0.5050 | 0.3540 | 0.5258 | 0.5030 | 0.3133 |

| AIME25 (en) | 0.2333 | 0.3000 | 0.0333 | 0.2000 | 0.3000 |

| Overall | 0.4949 | 0.4270 | 0.4918 | 0.4685 | 0.3510 |

🔼 This table presents a performance comparison of five different 7B language models across various benchmark tasks. The models compared are Pensez 7B, Qwen2.5 7B Instruct, DeepSeek R1 7B, OpenThinker 7B, and OpenR1 7B. Benchmarks assess performance in both English and French, covering reasoning, knowledge retrieval, and multilingual capabilities. The scores are presented for each benchmark, highlighting the best (bold) and second-best (underlined) performing models. Refer to section 4 of the paper for detailed explanations of each benchmark.

read the caption

Table 1: Performance comparison of Pensez 7B with other 7B models. We evaluate Pensez 7B, Qwen2.5 7B Instruct, DeepSeek R1 7B, OpenThinker 7B, and OpenR1 7B across multiple benchmarks. See §4 for details. Note: Bold indicates the best score, and underline indicates the second-best score for each benchmark.

In-depth insights#

Bilingual LLM#

Bilingual LLMs present a fascinating challenge: bridging linguistic divides while maintaining performance. A key issue is data scarcity for many languages compared to English, potentially leading to performance disparities. The approach of strategic fine-tuning on smaller, high-quality bilingual datasets is promising, especially in resource-constrained settings. Balanced representation across languages is crucial, avoiding the English-centric bias common in many models. The inclusion of reasoning chains within the training data can enhance cross-lingual transfer of reasoning abilities. Further research is needed to explore techniques like cross-lingual transfer learning, data augmentation, and innovative training methodologies to develop truly robust and equitable multilingual LLMs.

Data Curation#

Data curation is crucial for enhancing the quality and reliability of datasets used in training LLMs. It involves strategies like filtering for length and language purity to ensure high-fidelity samples and augmentation to support advanced reasoning. This iterative refinement aims to create compact, high-quality datasets, which reduces computational demands while preserving linguistic robustness. By employing meticulous curation processes, we create balanced bilingual datasets capable of supporting reasoning tasks while improving the overall efficacy of training resources.

Pensez-2k data#

The paper introduces Pensez-2k, a small but high-quality, bilingual (English-French) dataset designed for targeted SFT. It prioritizes data quality, diversity, balanced bilingual representation and detailed reasoning chains. The dataset creation involves collecting data from reliable internet sources, filtering it based on length, language purity and diversity, and augmenting it through translation and reasoning chain generation. The aim is to demonstrate that strategic data curation can achieve competitive performance compared to models trained on larger datasets, challenging the assumption that massive datasets are indispensable for strong reasoning.

Overthinking?#

The idea of “overthinking” in LLMs is intriguing. It suggests that, beyond a certain point, increased computational effort or complexity in reasoning doesn’t necessarily translate to improved accuracy. Instead, it may lead to a counterproductive loop of re-evaluation, possibly derailing the core reasoning path. This concept aligns with the broader discussion on efficient resource allocation in AI, challenging the assumption that “more is always better”. Understanding and mitigating overthinking could lead to more streamlined, efficient reasoning strategies, enhancing the practical utility of LLMs. It underscore the importance of not only increasing test-time compute but also optimizing its utilization.

Future of LLM#

The future of LLMs points toward several exciting directions. Firstly, data efficiency will become paramount, moving beyond the current reliance on massive datasets. Strategic fine-tuning and data curation, as seen in the paper’s approach, will unlock significant performance gains, especially in resource-constrained scenarios and for specific languages. Secondly, multilingual capabilities will be enhanced, not just in terms of understanding different languages, but also in reasoning across them. Balancing bilingual training approaches can address the common performance gap. Thirdly, reasoning abilities will be further developed through techniques like reinforcement learning and increased test-time compute. LLMs will not only retrieve knowledge but also demonstrate sophisticated problem-solving skills. The challenge lies in regulating this extended reasoning to avoid overthinking. Finally, integration with external tools and expansion into reasoning-intensive fields like medicine hold immense potential. LLMs could become versatile agents capable of interacting with the real world and assisting in complex decision-making processes.

More visual insights#

More on figures

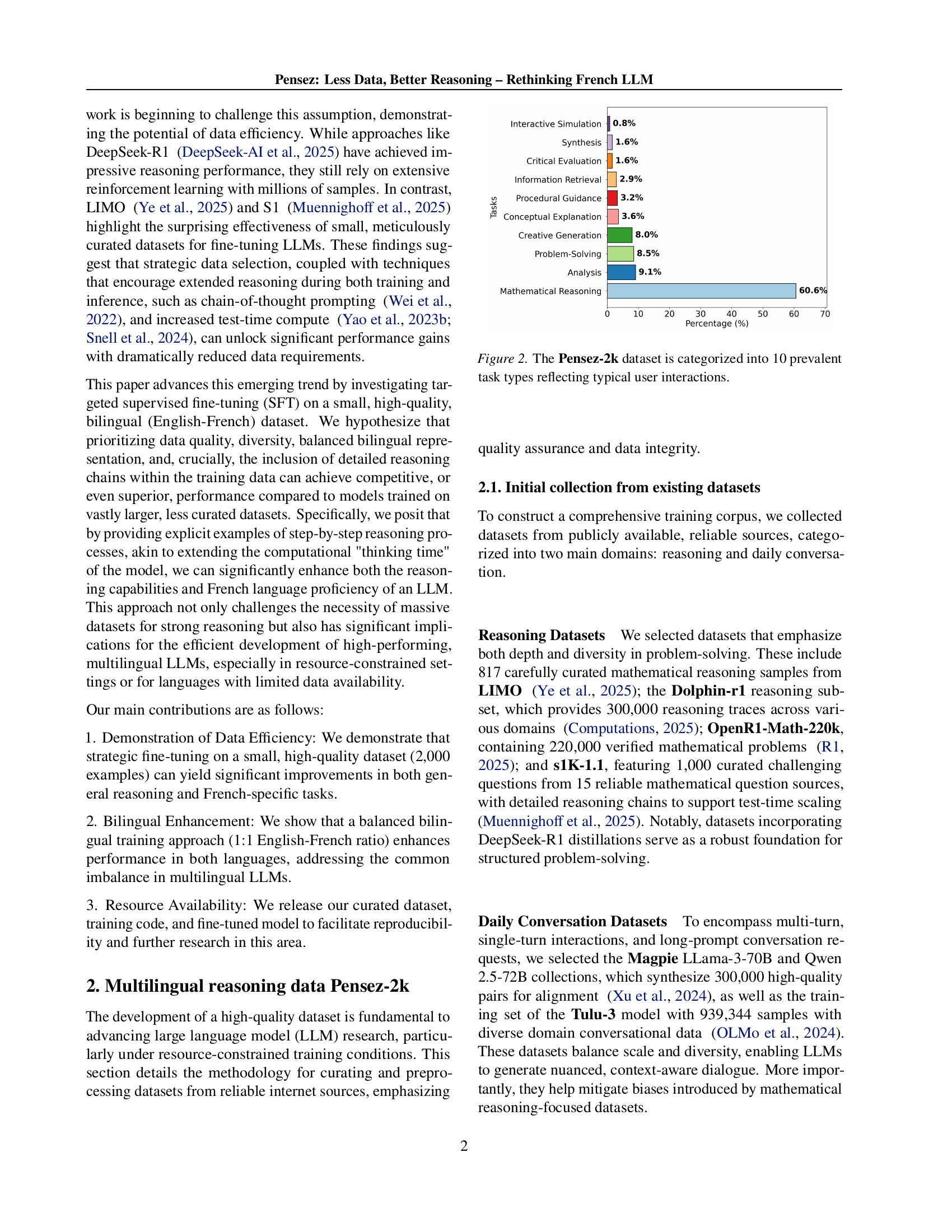

🔼 Figure 2 is a bar chart that visualizes the distribution of task types within the Pensez-2k dataset. The dataset is categorized into ten common interaction types, revealing the diversity of tasks. The chart illustrates the percentage of samples belonging to each category. This provides insights into the balance of task types included in the dataset and shows how the data is designed to represent the variety of interactions a large language model might encounter. The most frequent types of tasks are analysis (9.1%), mathematical reasoning (60.6%), and problem-solving (8.5%), while less prevalent categories such as interactive simulations, critical evaluation, and synthesis represent lower percentages of data.

read the caption

Figure 2: The Pensez-2k dataset is categorized into 10 prevalent task types reflecting typical user interactions.

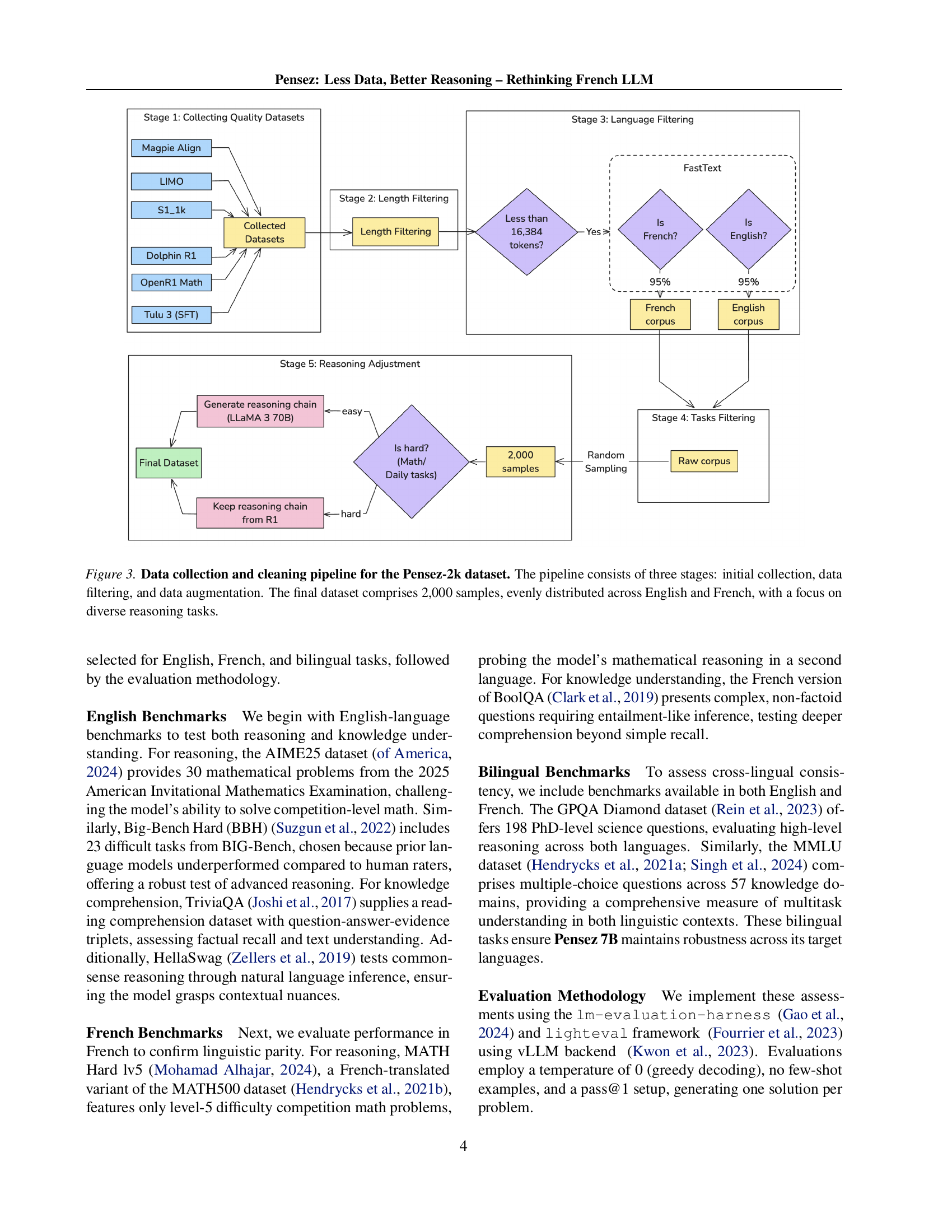

🔼 The figure illustrates the process of creating the Pensez-2k dataset, which is divided into three stages: data collection, data filtering, and data augmentation. The initial stage involves gathering various datasets from publicly available resources, categorized into reasoning and daily conversation datasets. The data filtering stage refines the initial dataset using several criteria, including length, language purity, and diversity, to ensure that all samples are of high quality and relevant to the target task. This stage aims to reduce redundancy and improve data quality. Finally, the data augmentation stage expands the dataset through various techniques, including bilingual (English-French) translation to enhance the model’s multilingual capabilities and reasoning chain augmentation to provide detailed reasoning steps within the training samples. The end result is a final dataset of 2000 samples (1000 in English and 1000 in French), carefully curated to support the development of a strong multilingual reasoning model. The process of selection emphasizes diversity across task types, including mathematical reasoning, to ensure the model’s reasoning abilities are well-rounded.

read the caption

Figure 3: Data collection and cleaning pipeline for the Pensez-2k dataset. The pipeline consists of three stages: initial collection, data filtering, and data augmentation. The final dataset comprises 2,000 samples, evenly distributed across English and French, with a focus on diverse reasoning tasks.

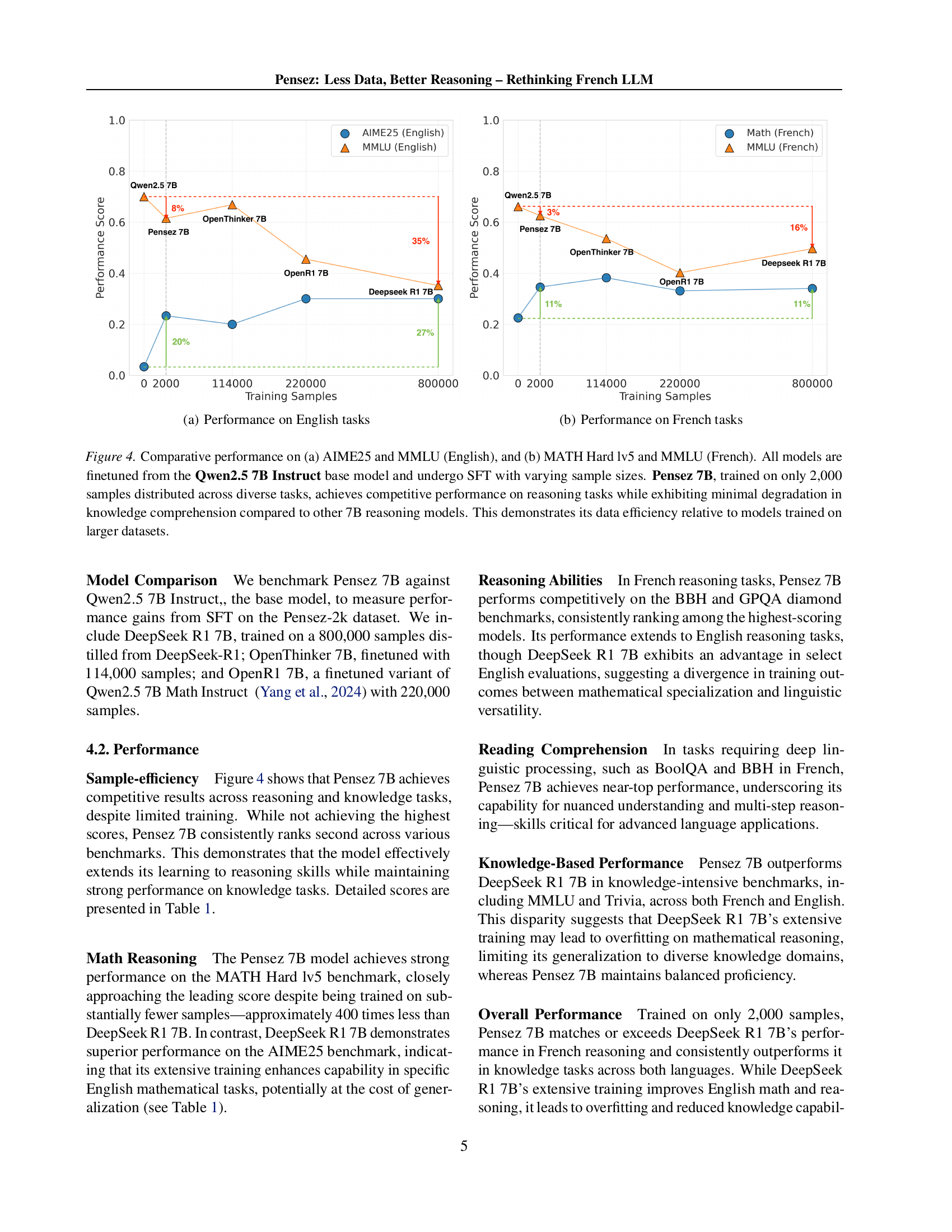

🔼 This figure displays the performance comparison of various language models across different English-language tasks. Specifically, it shows how the performance scores of Pensez 7B, Qwen2.5 7B, OpenThinker 7B, DeepSeek R1 7B, and OpenR1 7B vary depending on the size of the training dataset used. The x-axis represents the number of training samples, while the y-axis shows the reasoning performance scores. This visualization helps demonstrate the data efficiency of the Pensez model.

read the caption

(a) Performance on English tasks

🔼 This figure displays the performance of different language models on French-language tasks. It shows the relationship between the number of training samples used and the performance score achieved. The models compared include Pensez 7B, Qwen2.5 7B, OpenThinker 7B, OpenR1 7B, and DeepSeek R1 7B. The x-axis represents the number of training samples, while the y-axis represents the performance score. The plot helps visualize the data efficiency of Pensez 7B in comparison to other models trained on significantly larger datasets.

read the caption

(b) Performance on French tasks

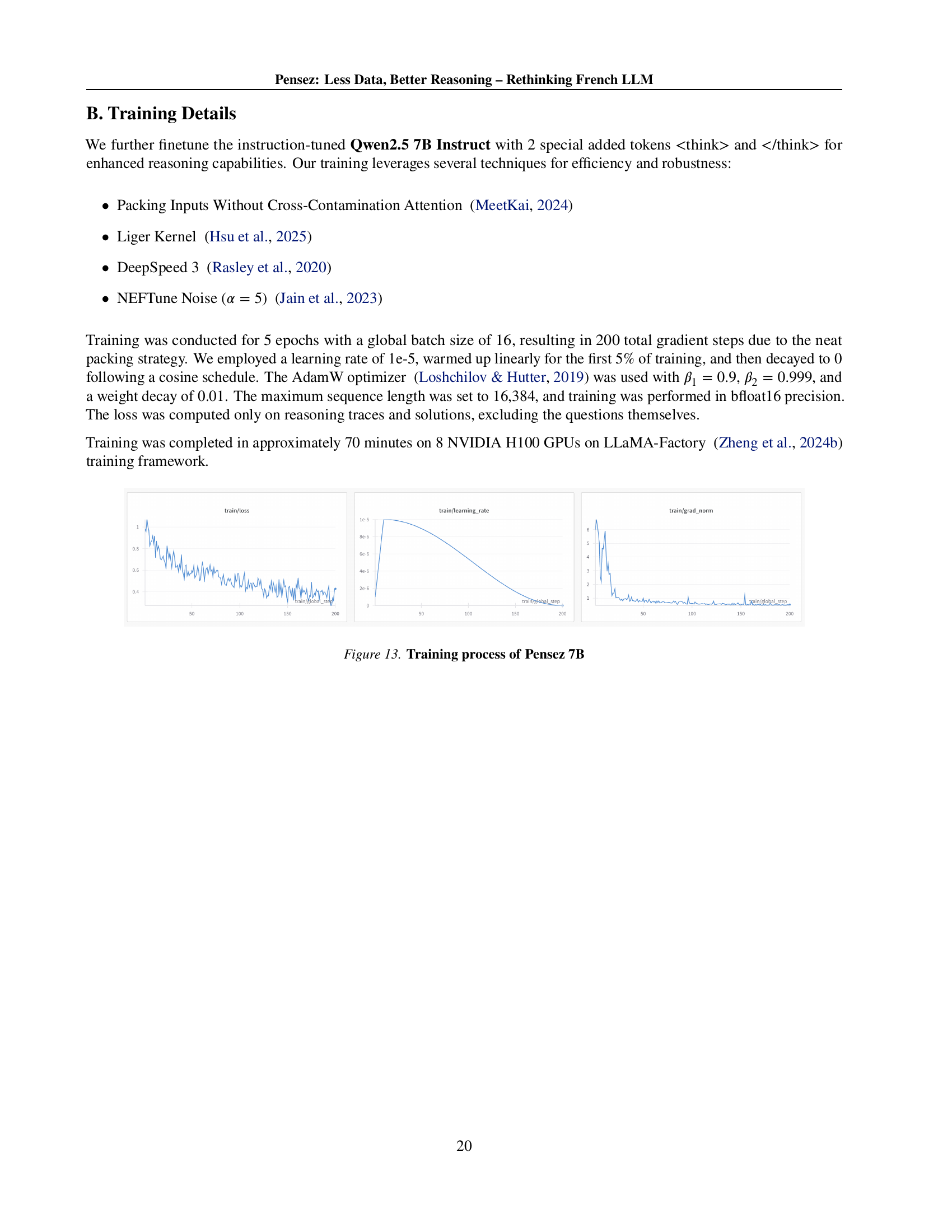

🔼 Figure 4 presents a comparative analysis of the performance of several language models, including Pensez 7B, on various benchmarks assessing reasoning and knowledge comprehension. The benchmarks include AIME25 and MMLU (English) in subfigure (a) and MATH Hard lv5 and MMLU (French) in subfigure (b). All models are fine-tuned from the same base model (Qwen2.5 7B Instruct) but using different training datasets of varying sizes. The key takeaway is that Pensez 7B, despite being trained on a significantly smaller dataset (only 2,000 samples), achieves competitive performance in reasoning tasks with minimal reduction in knowledge comprehension abilities when compared to larger models.

read the caption

Figure 4: Comparative performance on (a) AIME25 and MMLU (English), and (b) MATH Hard lv5 and MMLU (French). All models are finetuned from the Qwen2.5 7B Instruct base model and undergo SFT with varying sample sizes. Pensez 7B, trained on only 2,000 samples distributed across diverse tasks, achieves competitive performance on reasoning tasks while exhibiting minimal degradation in knowledge comprehension compared to other 7B reasoning models. This demonstrates its data efficiency relative to models trained on larger datasets.

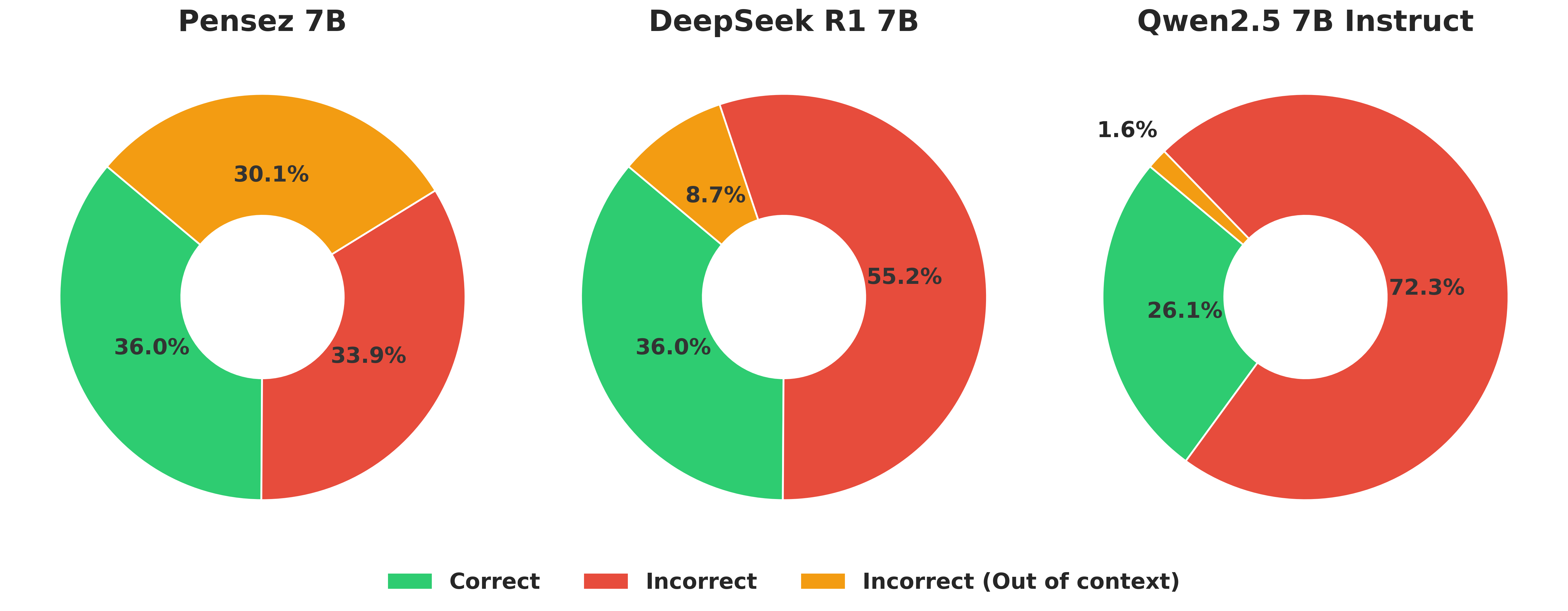

🔼 This figure presents a comparison of the performance of four different language models on the MMLU-French benchmark. The models compared are Pensez 7B, DeepSeek R1 7B, and Qwen2.5 7B Instruct. The chart uses a donut chart visualization to show the percentage of correct, incorrect, and incorrect (out of context) predictions for each model. The ‘incorrect (out of context)’ category signifies answers which are factually wrong but not necessarily because of reasoning failures; rather, the model may have strayed from the task or engaged in excessive self-reflection.

read the caption

Figure 5: Model Performance Comparison on MMLU-French.

🔼 This figure presents a comparison of model performance on the MATH Hard lv5 (French) benchmark. It shows the proportion of correct, incorrect, and incorrect-out-of-context responses for three models: Pensez 7B, DeepSeek R1 7B, and Qwen2.5 7B Instruct. The ‘incorrect-out-of-context’ category represents instances where the model’s responses deviated significantly from the expected format or reasoning process, often due to excessive self-reflection or getting ‘stuck’ in a loop. The figure highlights the different strengths and weaknesses of each model in mathematical reasoning tasks, particularly revealing Pensez 7B’s tendency toward overthinking.

read the caption

Figure 6: Model Performance Comparison on MATH Hard lv5 (French).

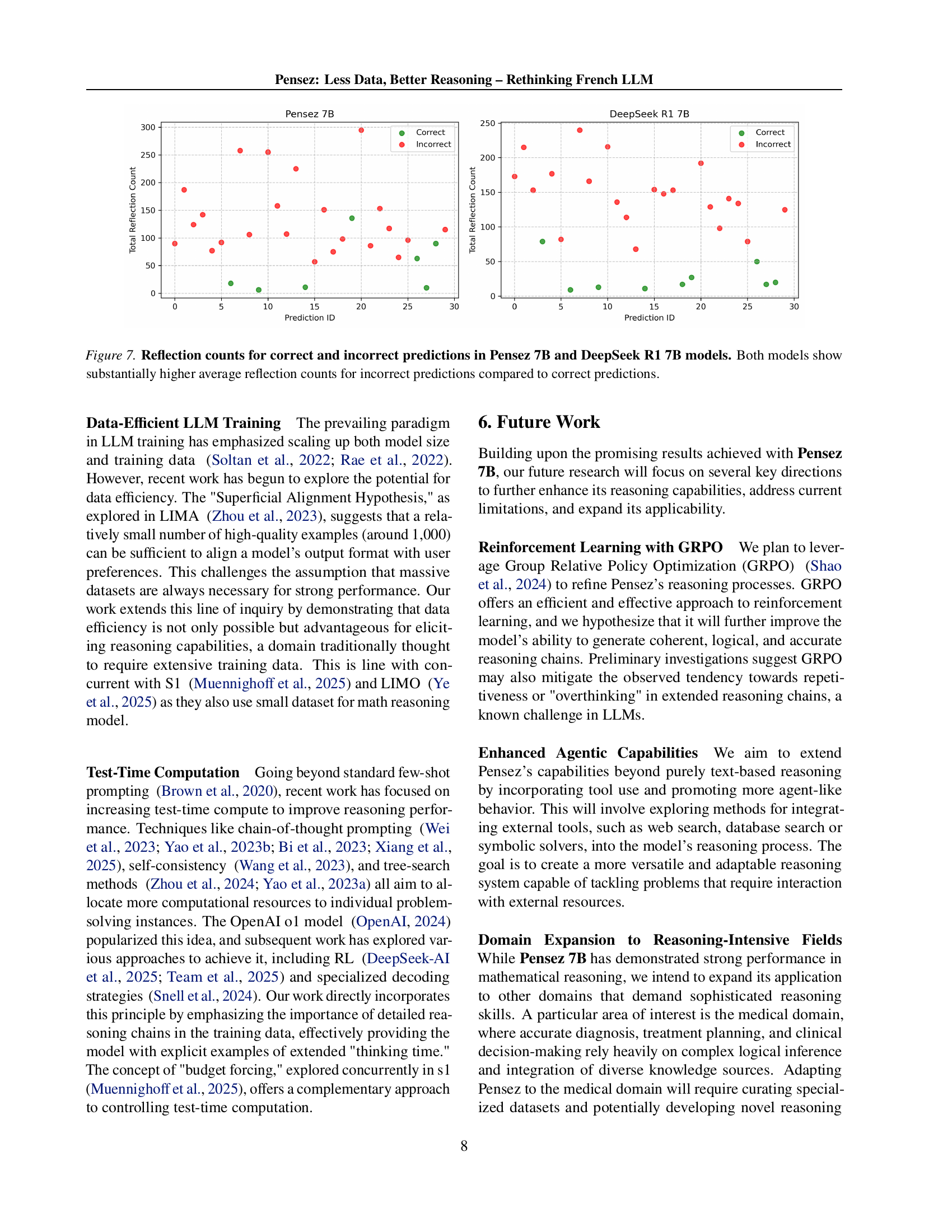

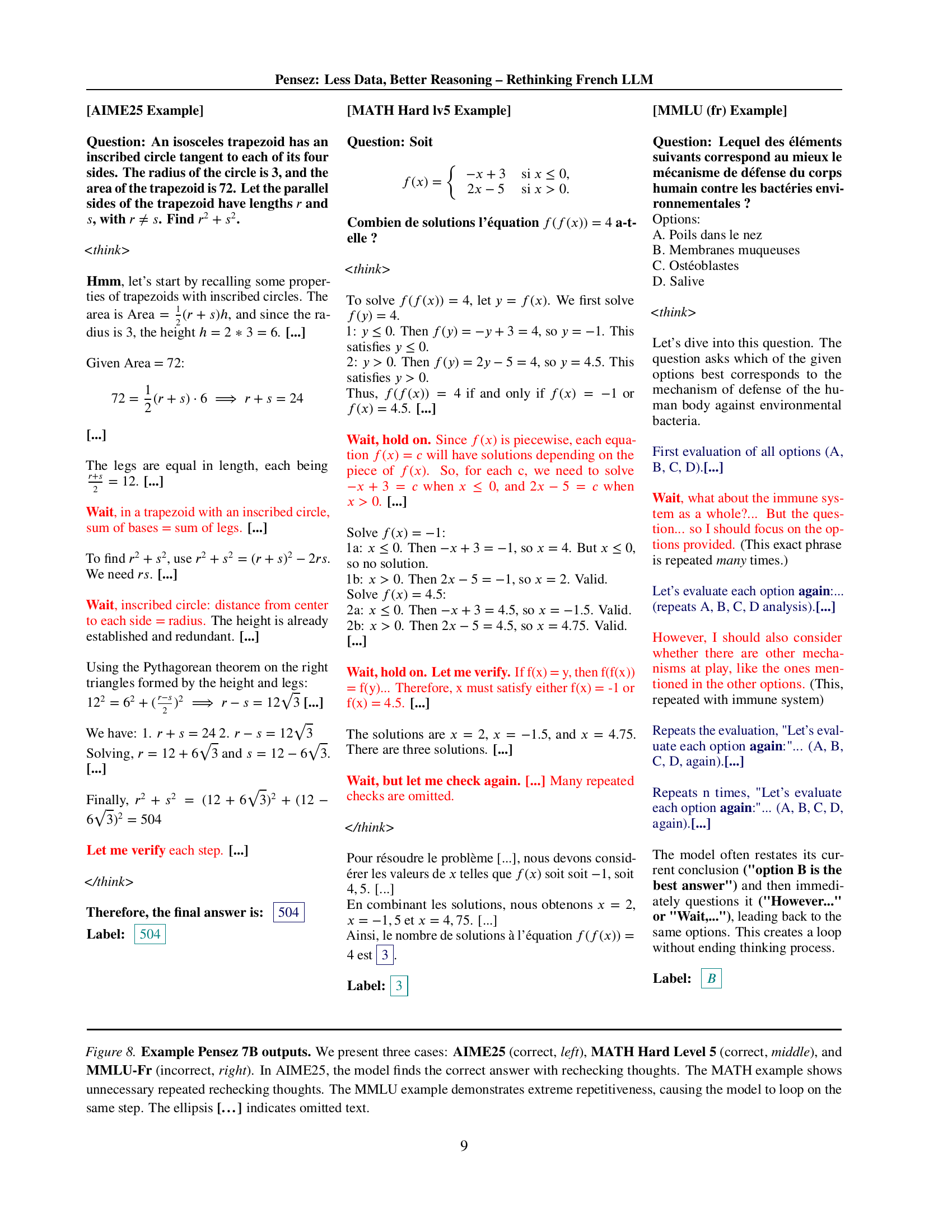

🔼 Figure 7 presents a comparative analysis of reflection counts in correct versus incorrect predictions generated by two language models: Pensez 7B and DeepSeek R1 7B. The x-axis represents individual predictions (prediction ID), while the y-axis displays the total count of reflection tokens. Reflection tokens are words or phrases indicating self-doubt or a reconsideration of the model’s reasoning process. The figure visually demonstrates a significant trend: both models exhibit substantially more reflection tokens in their incorrect predictions compared to their correct ones. This suggests that excessive self-reflection may be correlated with an increased likelihood of producing incorrect answers. The plot allows for a direct visual comparison of the two models’ tendencies toward overthinking.

read the caption

Figure 7: Reflection counts for correct and incorrect predictions in Pensez 7B and DeepSeek R1 7B models. Both models show substantially higher average reflection counts for incorrect predictions compared to correct predictions.

More on tables

| [AIME25 Example] Question: An isosceles trapezoid has an inscribed circle tangent to each of its four sides. The radius of the circle is 3, and the area of the trapezoid is 72. Let the parallel sides of the trapezoid have lengths and , with . Find . ¡think¿ Hmm, let’s start by recalling some properties of trapezoids with inscribed circles. The area is , and since the radius is 3, the height . […] Given Area = 72: […] The legs are equal in length, each being . […] Wait, in a trapezoid with an inscribed circle, sum of bases = sum of legs. […] To find , use . We need . […] Wait, inscribed circle: distance from center to each side = radius. The height is already established and redundant. […] Using the Pythagorean theorem on the right triangles formed by the height and legs: […] We have: 1. 2. Solving, and . […] Finally, Let me verify each step. […] ¡/think¿ Therefore, the final answer is: Label: | [MATH Hard lv5 Example] Question: Soit Combien de solutions l’équation a-t-elle ? ¡think¿ To solve , let . We first solve . 1: . Then , so . This satisfies . 2: . Then , so . This satisfies . Thus, if and only if or . […] Wait, hold on. Since is piecewise, each equation will have solutions depending on the piece of . So, for each c, we need to solve when , and when . […] Solve : 1a: . Then , so . But , so no solution. 1b: . Then , so . Valid. Solve : 2a: . Then , so . Valid. 2b: . Then , so . Valid. […] Wait, hold on. Let me verify. If f(x) = y, then f(f(x)) = f(y)… Therefore, x must satisfy either f(x) = -1 or f(x) = 4.5. […] The solutions are , , and . There are three solutions. […] Wait, but let me check again. […] Many repeated checks are omitted. ¡/think¿ Pour résoudre le problème […], nous devons considérer les valeurs de telles que soit soit , soit . […] En combinant les solutions, nous obtenons , et . […] Ainsi, le nombre de solutions à l’équation est . Label: | [MMLU (fr) Example] Question: Lequel des éléments suivants correspond au mieux le mécanisme de défense du corps humain contre les bactéries environnementales ? Options: A. Poils dans le nez B. Membranes muqueuses C. Ostéoblastes D. Salive ¡think¿ Let’s dive into this question. The question asks which of the given options best corresponds to the mechanism of defense of the human body against environmental bacteria. First evaluation of all options (A, B, C, D).[…] Wait, what about the immune system as a whole?… But the question… so I should focus on the options provided. (This exact phrase is repeated many times.) Let’s evaluate each option again:… (repeats A, B, C, D analysis).[…] However, I should also consider whether there are other mechanisms at play, like the ones mentioned in the other options. (This, repeated with immune system) Repeats the evaluation, ”Let’s evaluate each option again:”… (A, B, C, D, again).[…] Repeats n times, ”Let’s evaluate each option again:”… (A, B, C, D, again).[…] The model often restates its current conclusion (”option B is the best answer”) and then immediately questions it (”However…” or ”Wait,…”), leading back to the same options. This creates a loop without ending thinking process. Label: |

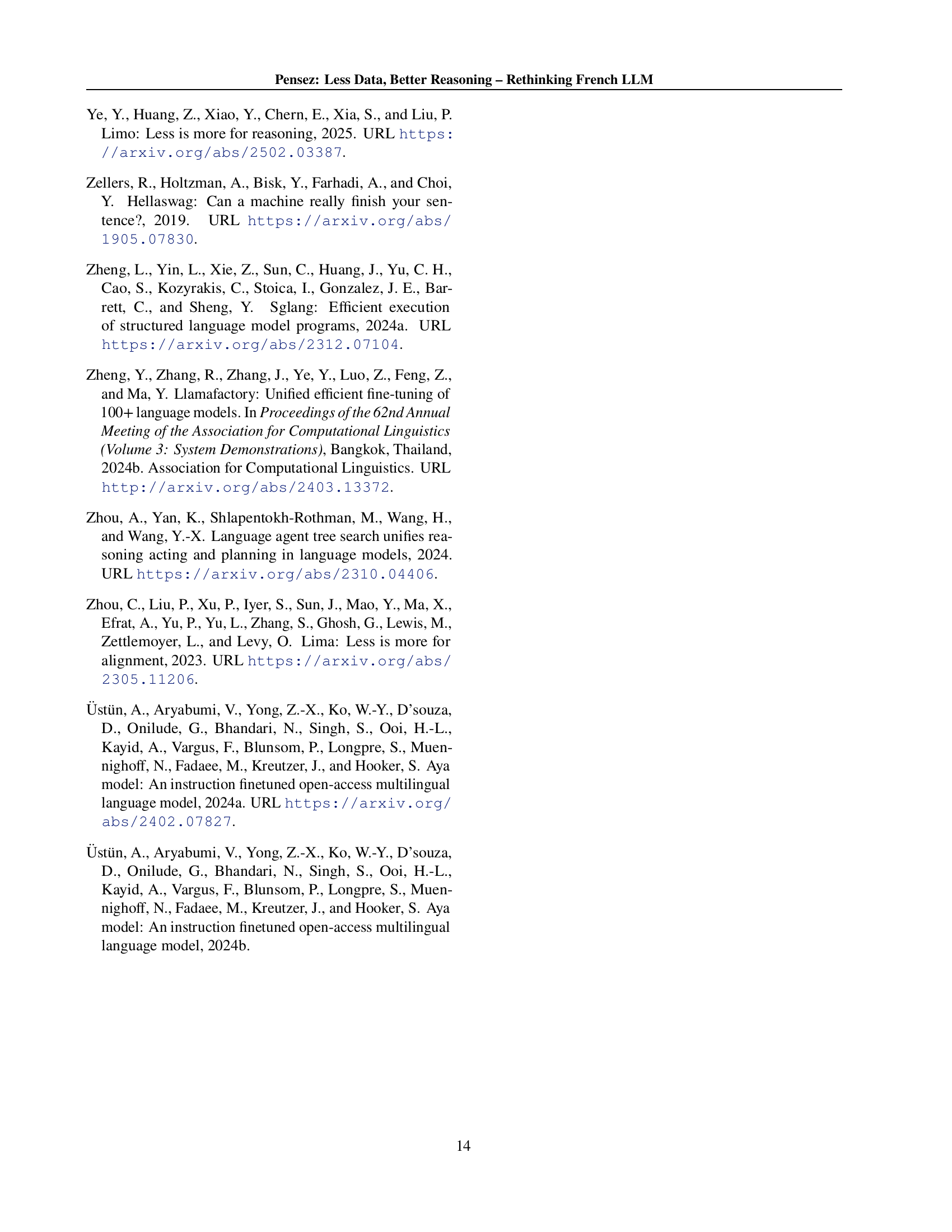

🔼 Table 2 shows the composition of the Pensez-2k dataset used to fine-tune the language model. It breaks down the dataset by language (English or French), the original source dataset (e.g., LIMO, OpenR1-Math, Magpie), the type of interaction (single-turn, multi-turn, or long context), and the task category (math reasoning or daily conversation). The table provides the number of samples and the total number of tokens for each subset.

read the caption

Table 2: Composition of the dataset. The dataset is broken down by language, source, type, and task.

| Language | Source | Type | #Samples | Total tokens |

| Math Reasoning | ||||

| English | LIMO | Single-turn | 700 | 4,596,147 |

| French | OpenR1 Math | Single-turn | 358 | 1,825,706 |

| French | S1.1K | Single-turn | 142 | 1,197,929 |

| French | Dolphin R1 | Single-turn | 200 | 799,873 |

| Daily Tasks | ||||

| English | Magpie Align | Single-turn | 179 | 270,614 |

| English | Tulu 3 (SFT) | Multi-turn | 91 | 388,958 |

| English | Tulu 3 (SFT) | Long context | 30 | 250,941 |

| French | Magpie Align | Single-turn | 88 | 115,321 |

| French | Tulu 3 (SFT) | Single-turn | 100 | 143,409 |

| French | Tulu 3 (SFT) | Multi-turn | 87 | 180,734 |

| French | Tulu 3 (SFT) | Long context | 25 | 159,883 |

| Pensez-2k | - | - | 2,000 | 9,967,320 |

🔼 This table details the frequency of self-reflection words used in both correct and incorrect reasoning outputs by the Pensez and DeepSeek models. It categorizes the occurrences of these words (‘wait’, ‘alternatively’, ‘however’, ‘actually’, ’let’, ‘verify’, ‘recheck’) into counts for correct and incorrect predictions, providing a total count for each word within each model. This allows for a quantitative analysis of the relationship between self-reflection and reasoning accuracy.

read the caption

Table 3: Top Reflection Types for Pensez and DeepSeek. The table shows the frequency of reflection types, categorized by correct and incorrect counts, along with the total occurrences for each model.

Full paper#