TL;DR#

Reasoning in LLMs relies on reinforcement learning, but key details are often hidden, hindering reproducibility. This paper addresses this by proposing the Decoupled Clip and Dynamic sampling Policy Optimization (DAPO) algorithm. The authors identified critical issues in naive GRPO baselines, such as entropy collapse and training instability, mirroring challenges faced by others trying to reproduce existing results.

To overcome these hurdles, the authors present DAPO and open-source a large-scale RL system. The system achieves state-of-the-art AIME scores using a Qwen2.5-32B model. They introduce four key techniques: Clip-Higher, Dynamic Sampling, Token-Level Policy Gradient Loss, and Overlong Reward Shaping. The training code, built on the verl framework, and a carefully curated dataset are also open-sourced.

Key Takeaways#

Why does it matter?#

This paper addresses the crucial need for reproducible research in LLM reinforcement learning. By open-sourcing DAPO, the authors empower researchers to replicate state-of-the-art results, explore new techniques, and accelerate progress in reasoning LLMs. The work fosters transparency and collaboration, driving innovation in this rapidly evolving field.

Visual Insights#

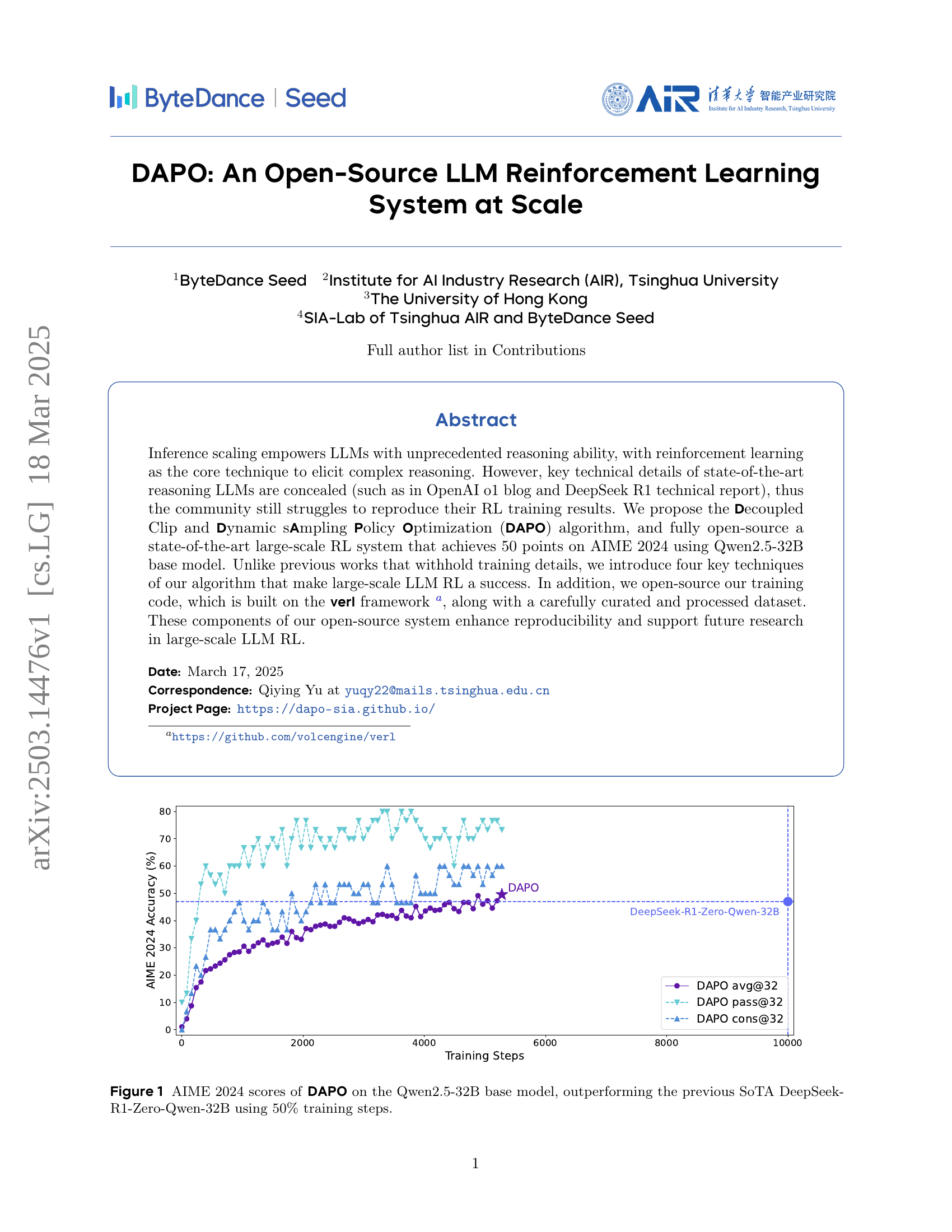

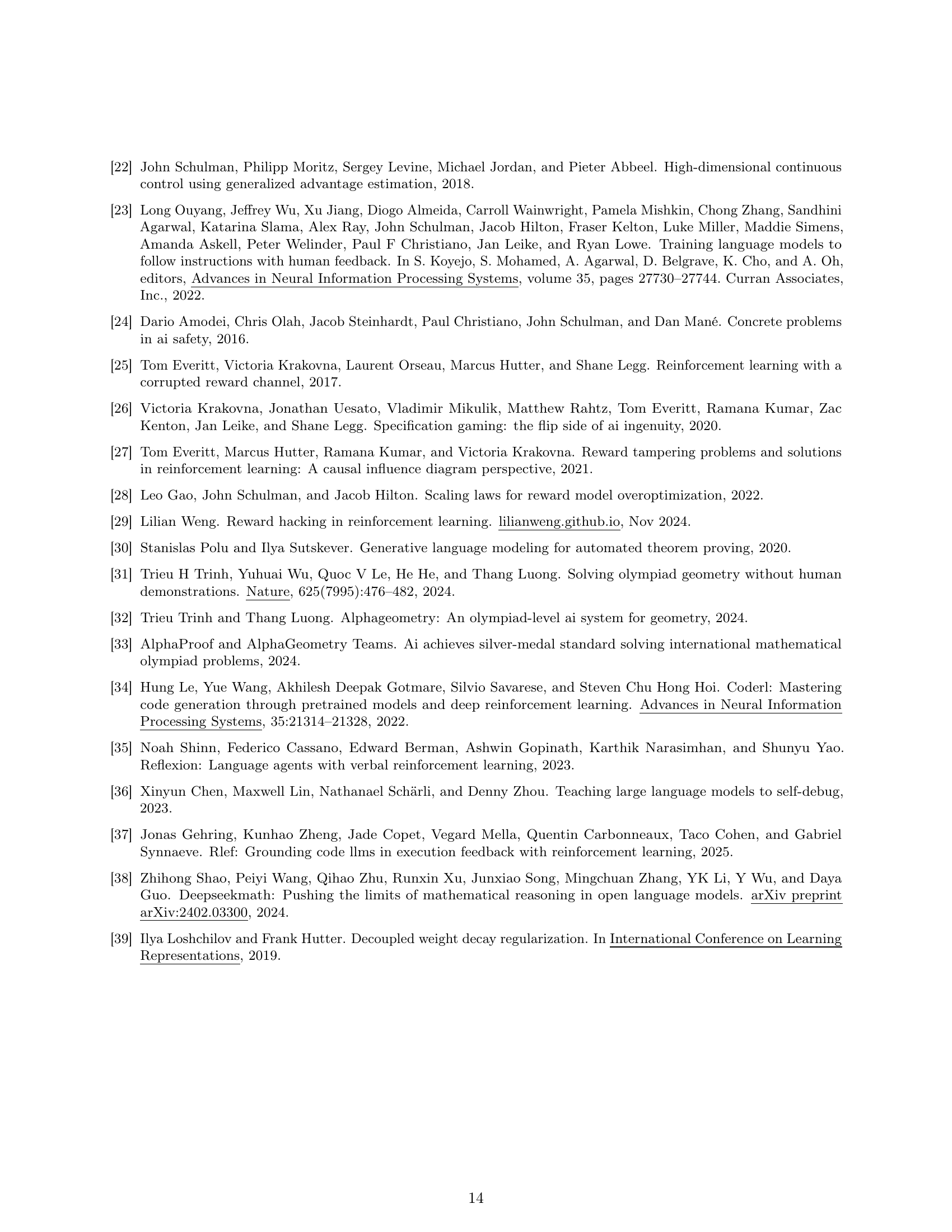

🔼 The figure shows the performance of the DAPO reinforcement learning algorithm on the AIME 2024 benchmark. The y-axis represents the accuracy score on AIME 2024, while the x-axis shows the number of training steps. The blue lines represent different metrics tracked by DAPO during training. The key takeaway is that DAPO, using the Qwen2.5-32B base language model, surpasses the prior state-of-the-art DeepSeek-R1-Zero-Qwen-32B model’s performance while using only 50% of the training steps. This demonstrates DAPO’s efficiency and effectiveness in improving the reasoning capabilities of large language models.

Figure 1: AIME 2024 scores of DAPO on the Qwen2.5-32B base model, outperforming the previous SoTA DeepSeek-R1-Zero-Qwen-32B using 50% training steps.

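| Algorithm 1 DAPO: Decoupled Clip and Dynamic sAmpling Policy Optimization |
| --- |
| Input initial policy model $\pi_\theta$; reward model $R$; task prompts $\mathcal{D}$; hyperparameters $\varepsilon_{\text{low}}, \varepsilon_{\text{high}}$ |
| 1: for step = 1,…,M do |
| 2: Sample a batch $\mathcal{D}_b$ from $\mathcal{D}$ |
| 3: Update the old policy model $\pi_{\theta_{\text{old}}} \leftarrow \pi_\theta$ |
| 4: Sample G outputs $\{o_i\}_{i=1}^{G} \sim \pi_{\theta_{\text{old}}}(\cdot \mid q)$ for each question $q \in \mathcal{D}_b$ |
| 5: Compute rewards $\{r_i\}_{i=1}^{G}$ for each sampled output $o_i$ by running $R$ |
| 6: Filter out $o_i$ and add the remaining to the dynamic sampling buffer (Dynamic Sampling Equation 11) |
| 7: if buffer size $n_b < N$: |
| 8: continue |
| 9: For each $o_i$ in the buffer, compute $\hat{A}_{i,t}$ for the t-th token of $o_i$ (Equation 9) |
| 10: for iteration = 1, …, $\mu$ do |
| 11: Update the policy model $\pi_\theta$ by maximizing the DAPO objective (Equation 8) |
| Output $\pi_\theta$ |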
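Step 9 of the listing computes a per-token advantage. A minimal sketch of that step follows, assuming the GRPO-style group normalization (each reward is centered and scaled within the group of G outputs sampled for one prompt, and every token of an output shares that output's value). The function name and the small `eps` guard against division by zero are assumptions made for this illustration and are not part of the listing.

```python
import numpy as np

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one prompt's group of sampled outputs.

    `eps` is an illustrative numerical guard (an assumption), so a group
    whose rewards are all identical yields zeros instead of NaN.
    """
    r = np.asarray(rewards, dtype=np.float64)
    return (r - r.mean()) / (r.std() + eps)

# Rewards for G = 4 outputs of one prompt: +1 if correct, -1 otherwise.
adv = group_relative_advantages([1.0, -1.0, -1.0, 1.0])

# A group where every output is correct carries no learning signal.
flat = group_relative_advantages([1.0, 1.0, 1.0, 1.0])
```

The second call shows why step 6 filters some groups out: when all rewards in a group are equal, every advantage is zero.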

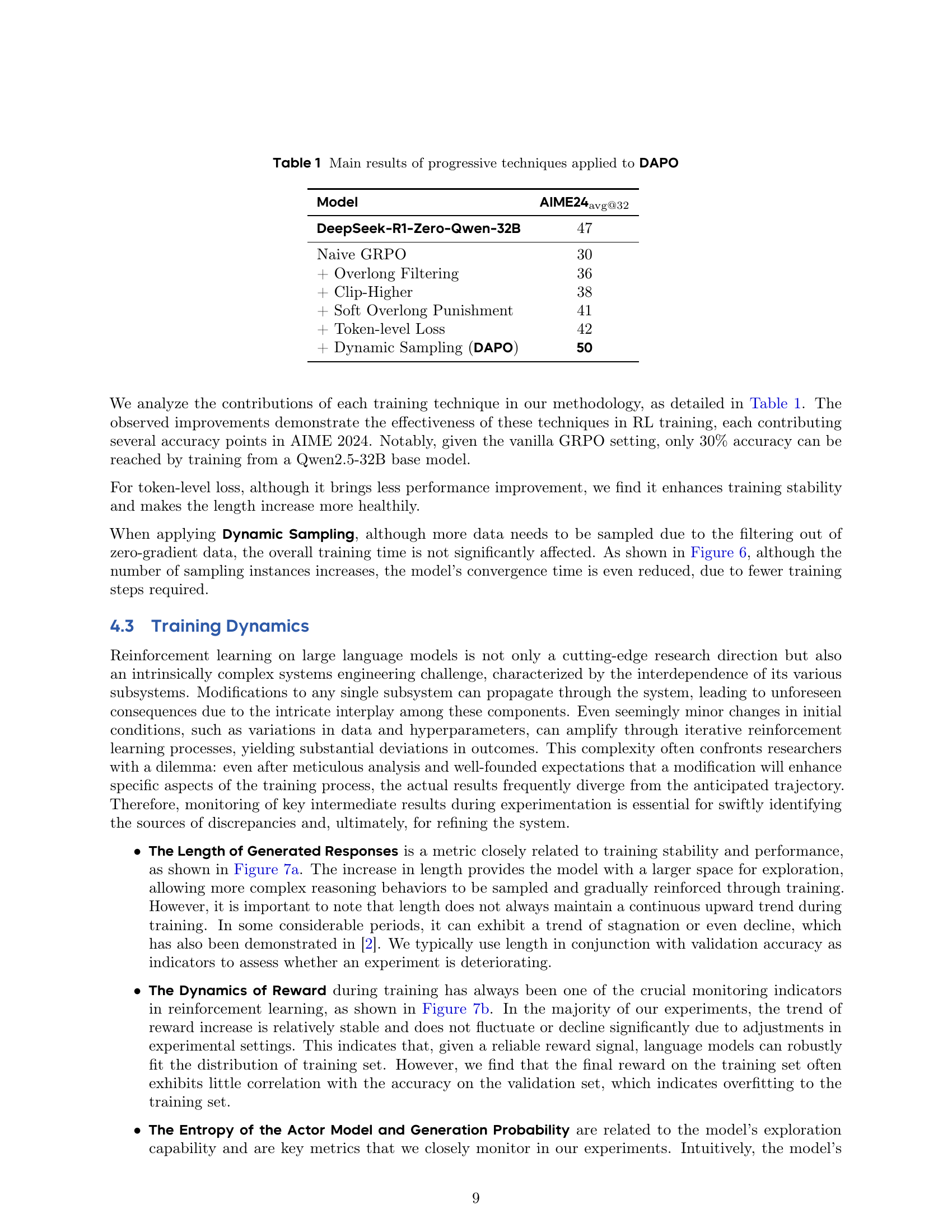

🔼 This table presents the results of progressively applying different techniques to the DAPO algorithm, showing the impact on the AIME 2024 accuracy. It starts with the baseline performance of a naive GRPO approach and then incrementally adds techniques like overlong filtering, clip-higher, soft overlong punishment, token-level loss, and finally dynamic sampling (the complete DAPO). Each row shows the improvement in accuracy obtained by incorporating another technique. The final row shows the DAPO model’s improved accuracy compared to previous state-of-the-art.

Table 1: Main results of progressive techniques applied to DAPO

In-depth insights#

LLM RL at Scale#

Scaling reinforcement learning to large language models presents both challenges and opportunities. It requires addressing sample efficiency, training stability, and reward design. Effective approaches balance exploration against exploitation and guard against reward hacking. Overcoming these hurdles lets LLMs reach stronger reasoning performance on complex tasks. It also calls for robust, openly available infrastructure and datasets, so that results can be reproduced by the wider community.

DAPO Algorithm#

The DAPO algorithm, as described in the paper, refines reinforcement learning for LLMs in complex reasoning scenarios. It addresses limitations of existing methods such as GRPO with four techniques, and the core idea seems to be enhancing exploration while maintaining training stability. Clip-Higher decouples the upper and lower clipping ranges and raises the upper one, which leaves more room for low-probability tokens to gain probability and counteracts entropy collapse. Dynamic Sampling filters out prompts whose sampled outputs are all correct or all incorrect, since these contribute no gradient, so that every batch carries an effective gradient signal. Token-Level Policy Gradient Loss averages the loss over all tokens in a batch rather than per sample, so that long sequences are weighted in proportion to their length and low-quality patterns in long samples are penalized. Overlong Reward Shaping reduces reward noise from truncated sequences by using a length-aware penalty.
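The techniques above can be read off a single objective. The following is a sketch in notation assumed here rather than quoted from this summary: $q$ is a prompt with reference answer $a$, $o_i$ is the $i$-th of $G$ sampled outputs, $R_i$ is its reward, and $\varepsilon_{\text{low}}, \varepsilon_{\text{high}}$ are the decoupled clip ranges.

```latex
\[
\mathcal{J}_{\text{DAPO}}(\theta) =
\mathbb{E}_{(q,a)\sim\mathcal{D},\ \{o_i\}_{i=1}^{G}\sim\pi_{\theta_{\text{old}}}(\cdot\mid q)}
\left[
\frac{1}{\sum_{i=1}^{G}|o_i|}
\sum_{i=1}^{G}\sum_{t=1}^{|o_i|}
\min\!\Big(
r_{i,t}(\theta)\,\hat{A}_{i,t},\;
\operatorname{clip}\big(r_{i,t}(\theta),\,1-\varepsilon_{\text{low}},\,1+\varepsilon_{\text{high}}\big)\,\hat{A}_{i,t}
\Big)
\right]
\]
\[
\text{s.t.}\quad 0 < \big|\{\, o_i : o_i \text{ is correct} \,\}\big| < G,
\qquad
r_{i,t}(\theta) = \frac{\pi_\theta(o_{i,t}\mid q, o_{i,<t})}{\pi_{\theta_{\text{old}}}(o_{i,t}\mid q, o_{i,<t})},
\qquad
\hat{A}_{i,t} = \frac{R_i - \operatorname{mean}(\{R_j\}_{j=1}^{G})}{\operatorname{std}(\{R_j\}_{j=1}^{G})}
\]
```

In this form, the separate $\varepsilon_{\text{low}}$ and $\varepsilon_{\text{high}}$ correspond to Clip-Higher, the constraint to Dynamic Sampling, and the normalization by the total token count $\sum_i |o_i|$ to the token-level loss. Overlong Reward Shaping acts on $R_i$ and so does not appear explicitly.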

Clip-Higher Insight#

The “Clip-Higher” strategy addresses entropy collapse in RL training of LLMs. Standard symmetric clipping caps how far a token’s probability can rise in a single update, which limits low-probability (exploratory) tokens far more than already-likely ones and so hinders exploration. Raising the upper clip bound while keeping the lower bound small gives low-probability tokens more room to grow. This promotes diversity in generated responses and prevents premature convergence to a near-deterministic policy. By decoupling the lower and upper clipping ranges, the exploration-exploitation trade-off is better managed: policy entropy stays higher, and the sampled responses remain diverse enough to support effective reasoning.
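A minimal sketch of the decoupled clipping described above, written as a per-token surrogate term in NumPy. The function name and the default values `eps_low=0.2` and `eps_high=0.28` are illustrative assumptions and do not come from the text of this summary.

```python
import numpy as np

def clipped_surrogate(ratio, advantage, eps_low=0.2, eps_high=0.28):
    """PPO-style clipped term with separate lower and upper clip ranges.

    ratio     : new-policy / old-policy probability of the sampled token
    advantage : advantage estimate for that token
    eps_low, eps_high : illustrative defaults (assumptions)
    """
    ratio = np.asarray(ratio, dtype=np.float64)
    advantage = np.asarray(advantage, dtype=np.float64)
    clipped = np.clip(ratio, 1.0 - eps_low, 1.0 + eps_high)
    # Taking the minimum keeps the pessimistic (lower) estimate.
    return np.minimum(ratio * advantage, clipped * advantage)

# A positive-advantage token whose probability rose by 25%.
symmetric = clipped_surrogate(1.25, 1.0, eps_low=0.2, eps_high=0.2)
decoupled = clipped_surrogate(1.25, 1.0)

# A negative-advantage token is still bounded by the lower range.
penalized = clipped_surrogate(0.5, -1.0)
```

With the symmetric range the first token is clipped at 1.2 and stops contributing gradient, while the wider upper range leaves it unclipped at 1.25. The lower range is unchanged, so the third case is clipped identically in both settings.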

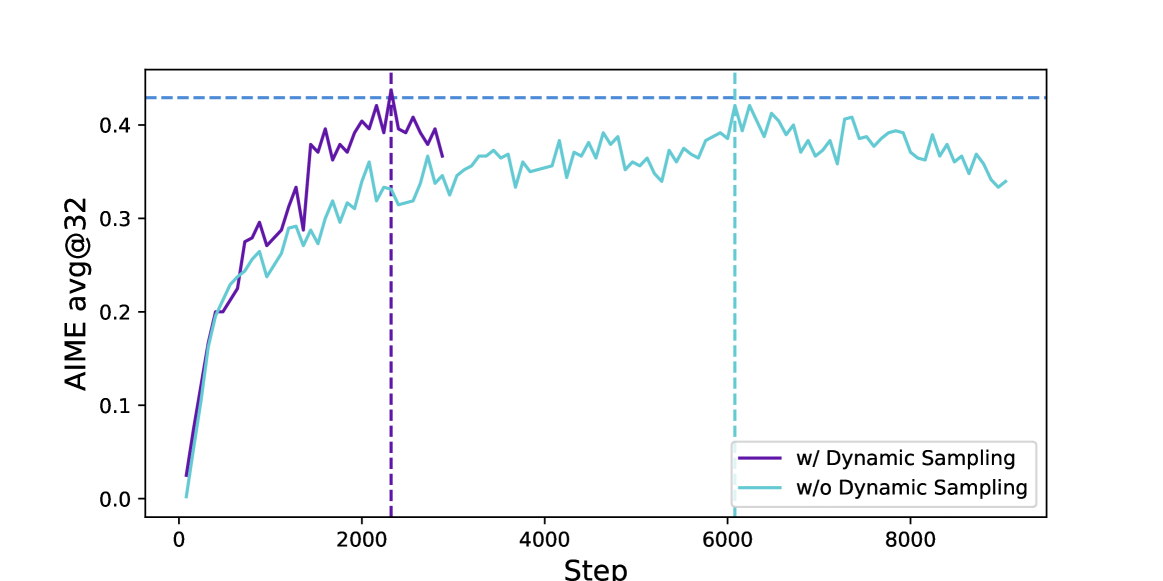

Dynamic Sampling#

The “Dynamic Sampling” method addresses a key challenge in reinforcement learning (RL) for large language models (LLMs): the gradient-decreasing problem that arises when all sampled outputs for a prompt receive the same reward. When every output for a prompt is correct (accuracy of 1), the group-relative advantages are all zero and the prompt contributes no gradient; the same holds when every output is wrong (accuracy of 0). Dynamic Sampling over-samples prompts and filters out those with an accuracy of exactly 1 or 0, so that each training batch is filled with prompts that still carry a learning signal. This counters the tendency of the effective gradient to shrink as the model masters more prompts, keeps the number of informative prompts per batch consistent, and helps the model avoid getting stuck in local optima.
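The filtering idea can be sketched in a few lines of plain Python. The function names, the dictionary layout, and the `max_rounds` safety limit are hypothetical choices for this illustration. The sketch keeps only prompts whose group of sampled outputs is neither all correct nor all incorrect, and keeps sampling until a buffer is full.

```python
def keep_informative_groups(groups):
    """Drop prompts whose sampled outputs are all correct or all wrong.

    groups: dict mapping a prompt id to a list of 0/1 correctness flags,
            one flag per sampled output (hypothetical layout).
    """
    kept = {}
    for prompt, flags in groups.items():
        n_correct = sum(flags)
        if 0 < n_correct < len(flags):
            kept[prompt] = flags
    return kept

def fill_buffer(sample_batch, target_size, max_rounds=100):
    """Call `sample_batch()` repeatedly until enough prompts are kept.

    `max_rounds` is an assumed safety limit so the loop always terminates.
    """
    buffer = {}
    for _ in range(max_rounds):
        buffer.update(keep_informative_groups(sample_batch()))
        if len(buffer) >= target_size:
            break
    return buffer

groups = {"q1": [1, 1, 1, 1], "q2": [0, 0, 0, 0], "q3": [1, 0, 0, 1]}
kept = keep_informative_groups(groups)
```

Here only `q3` survives, because `q1` and `q2` would produce zero advantages. The cost of this design is extra sampling: more prompts are generated than are trained on.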

Overlong Shaping#

Overlong Reward Shaping deals with responses that exceed the maximum generation length and are truncated. Naive truncation can have unintended consequences: assigning a punitive reward to every truncated sample adds reward noise, because a sound reasoning process may be penalized only for its length, and it creates an artificial reward discontinuity at the truncation point. Two strategies mitigate this. Overlong Filtering masks the loss of truncated samples, so that they neither reward nor penalize the policy and the learning signal stays more stable. Soft Overlong Punishment applies a length-aware penalty: a response incurs no penalty up to a threshold, then a penalty that grows with each additional token inside a buffer interval before the hard limit, which discourages excessive length while still allowing long reasoning. Together these techniques stabilize training and improve the model’s performance.
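One way to realize a length-aware penalty of this kind is a piecewise-linear function of the response length. The sketch below is an illustration under stated assumptions: the function name, the hard limit `max_len=20480`, and the buffer `cache_len=4096` are values chosen for the example, not taken from the text above.

```python
def soft_overlong_penalty(length, max_len=20480, cache_len=4096):
    """Length-aware penalty added to a response's reward.

    length    : number of generated tokens
    max_len   : hard generation limit (assumed value)
    cache_len : width of the soft-penalty interval (assumed value)
    """
    soft_start = max_len - cache_len
    if length <= soft_start:
        return 0.0  # short enough: no penalty
    if length <= max_len:
        # Falls linearly from 0 to -1 across the buffer interval.
        return (soft_start - length) / cache_len
    return -1.0  # beyond the hard limit: full penalty

penalties = [soft_overlong_penalty(n) for n in (16384, 18432, 20480, 30000)]
```

The linear ramp avoids a sudden jump in reward at the truncation point, so a response that is only slightly too long is penalized only slightly.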

More visual insights#

More on figures

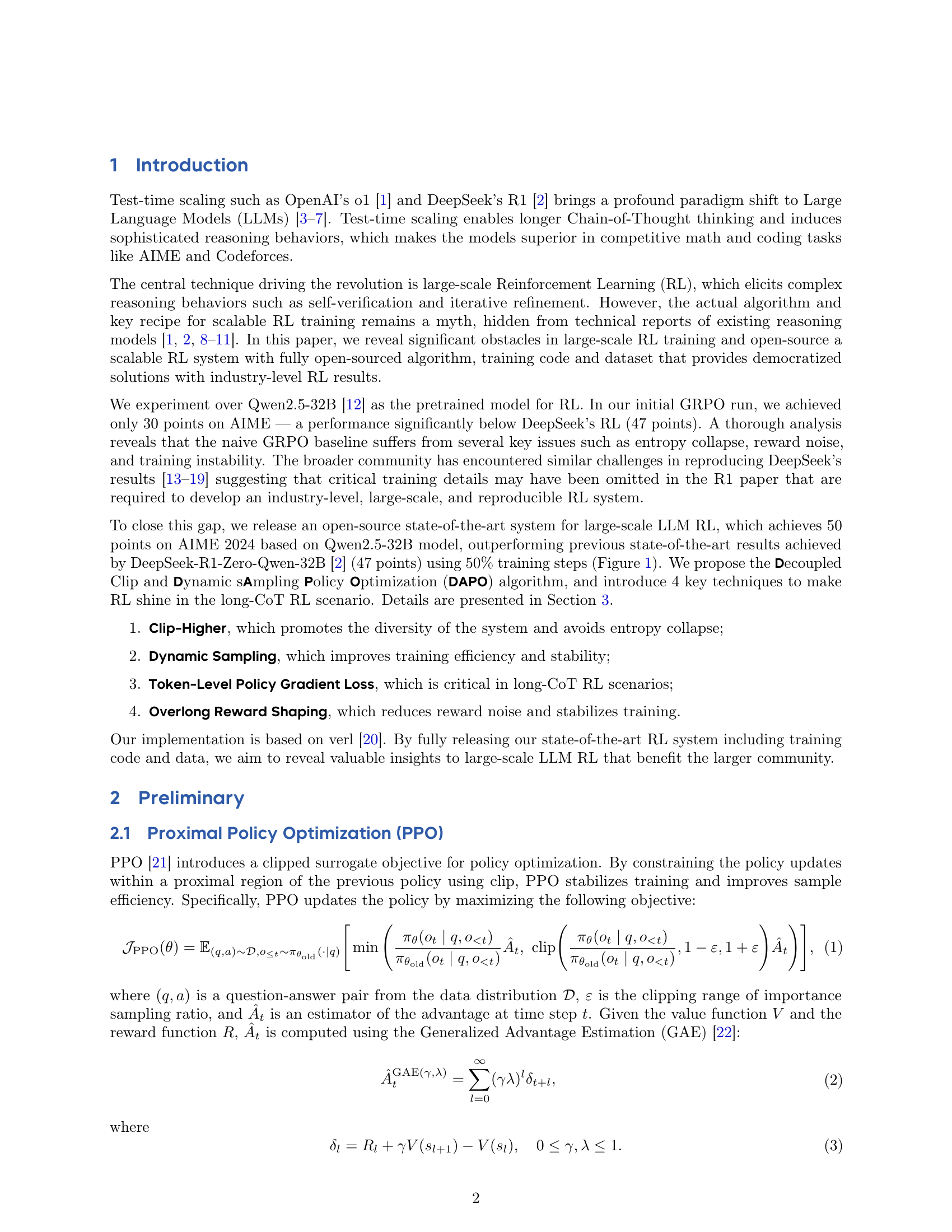

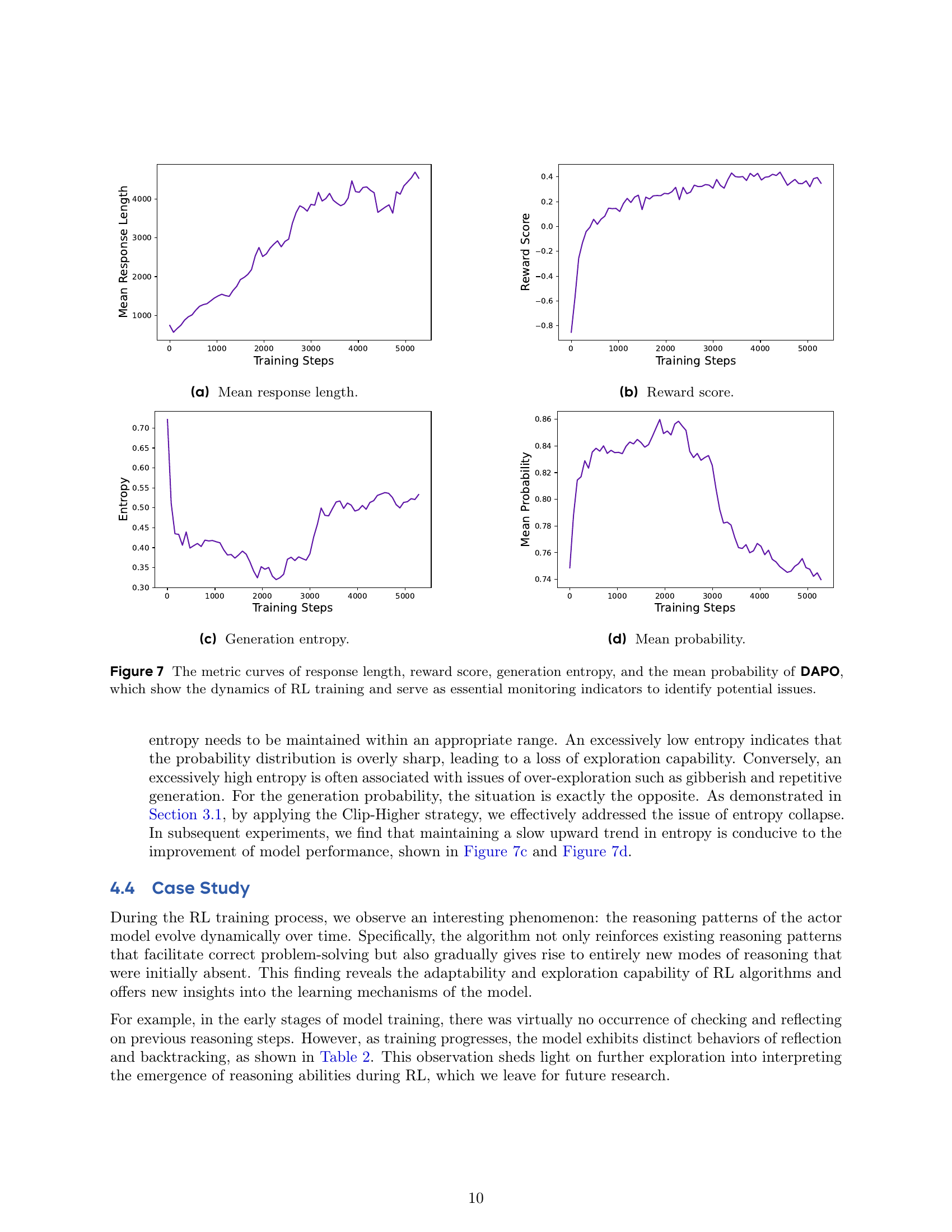

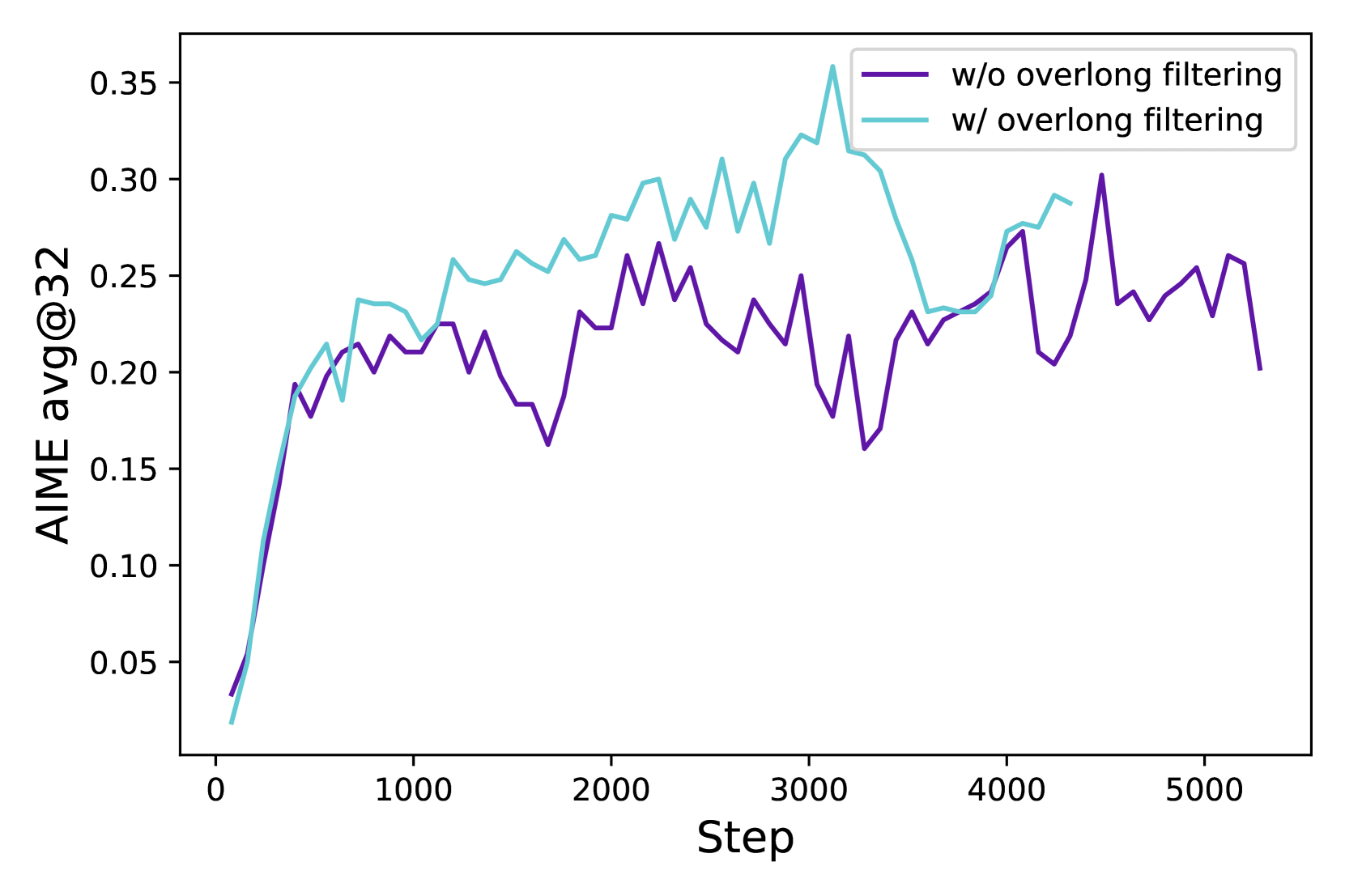

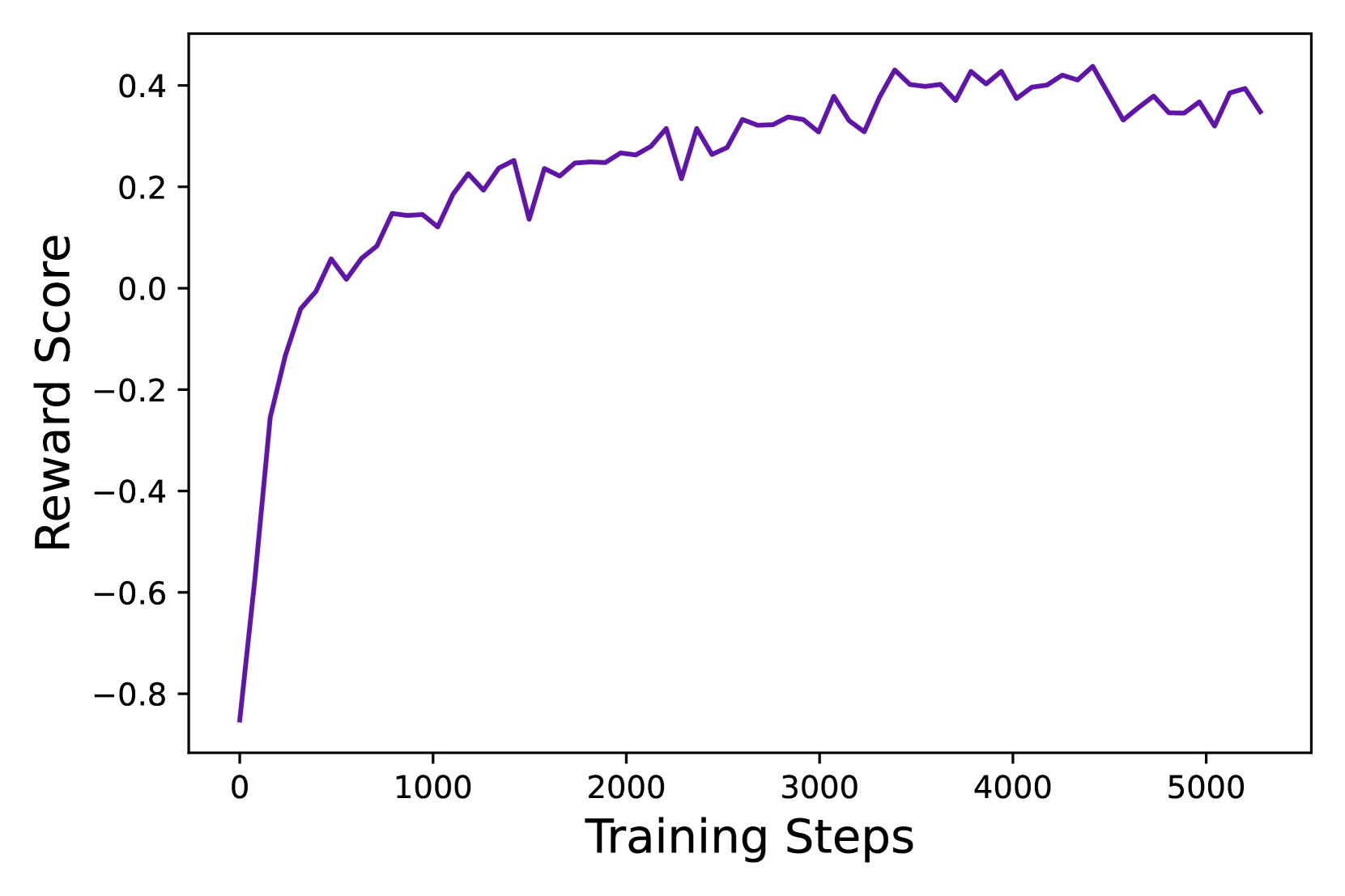

🔼 This figure shows the accuracy of the model on the AIME test set over the course of reinforcement learning training. It demonstrates how the model’s performance on AIME improves as the number of training steps increases. The x-axis represents the training steps and the y-axis represents the accuracy on AIME.

(a) Accuracies on AIME.

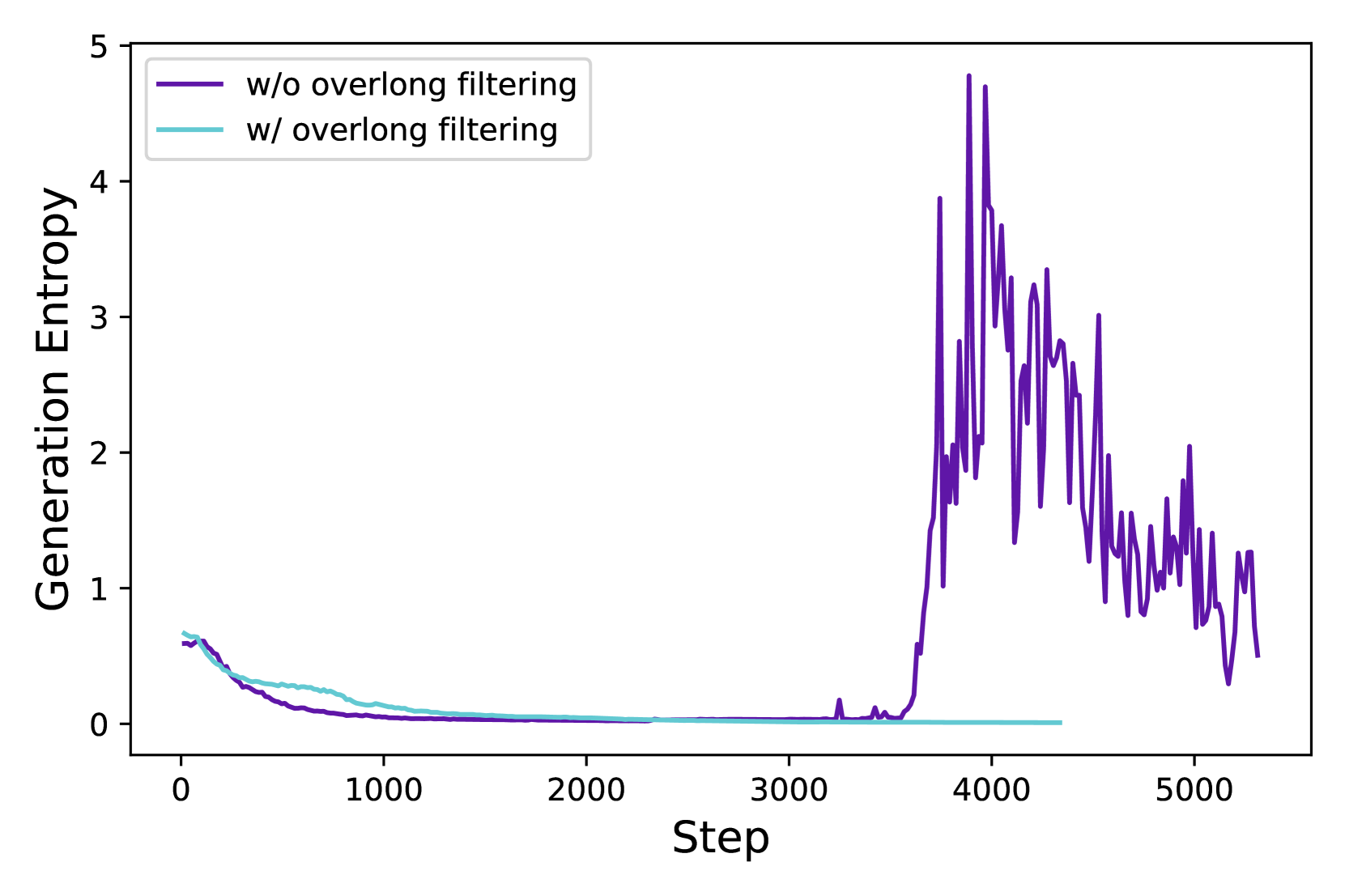

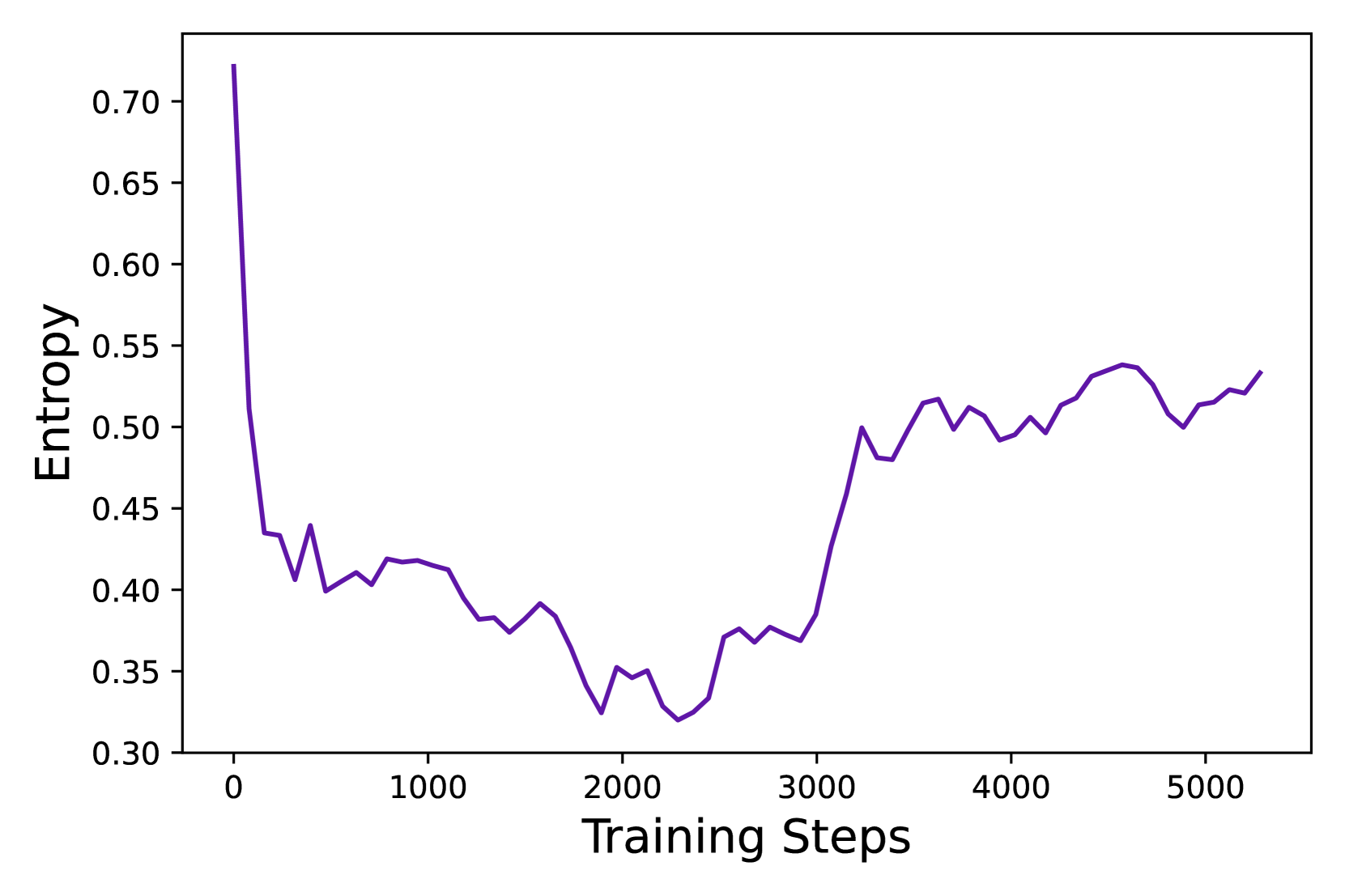

🔼 The figure shows the entropy of the actor model during the reinforcement learning training process. The entropy measures the uncertainty or randomness in the model’s probability distribution over actions. A high entropy indicates that the model explores different actions with roughly equal probability, while a low entropy indicates that the model is more deterministic and tends to favor a smaller subset of actions. This plot helps to visualize the exploration-exploitation balance during training. A sharp decrease in entropy might suggest premature convergence or an issue with the training process. In this case, the figure shows an entropy collapse phenomenon, which is often undesirable.

(b) Entropy of actor model.
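For readers who want to reproduce an entropy curve of this kind, the quantity can be computed from the per-token probability distributions. This is a minimal NumPy sketch: the function name and the clipping constant are assumptions, and real training code would typically compute this from logits on the accelerator.

```python
import numpy as np

def mean_token_entropy(probs):
    """Average Shannon entropy (in nats) over token positions.

    probs: array of shape (num_tokens, vocab_size), each row summing to 1.
    """
    p = np.asarray(probs, dtype=np.float64)
    p = np.clip(p, 1e-12, 1.0)  # avoid log(0); an illustrative guard
    return float(-(p * np.log(p)).sum(axis=-1).mean())

uniform = mean_token_entropy([[0.25, 0.25, 0.25, 0.25]])
peaked = mean_token_entropy([[0.97, 0.01, 0.01, 0.01]])
```

A uniform distribution over four tokens gives the maximum entropy, log 4, while the peaked distribution gives a much lower value, which is the regime that entropy collapse drives the policy toward.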

🔼 This figure displays two line graphs illustrating the impact of the Clip-Higher strategy on a reinforcement learning model during training. The left graph shows the accuracy on the AIME (American Invitational Mathematics Examination) test set, demonstrating improved performance with Clip-Higher. The right graph depicts the entropy of the model’s generated probability distribution, revealing that Clip-Higher prevents entropy collapse, a common problem that leads to poor performance in reinforcement learning. The comparison highlights how Clip-Higher enhances both accuracy and the diversity of the model’s output during training.

Figure 2: The accuracy on the AIME test set and the entropy of the actor model’s generated probabilities during the RL training process, both before and after applying Clip-Higher strategy.

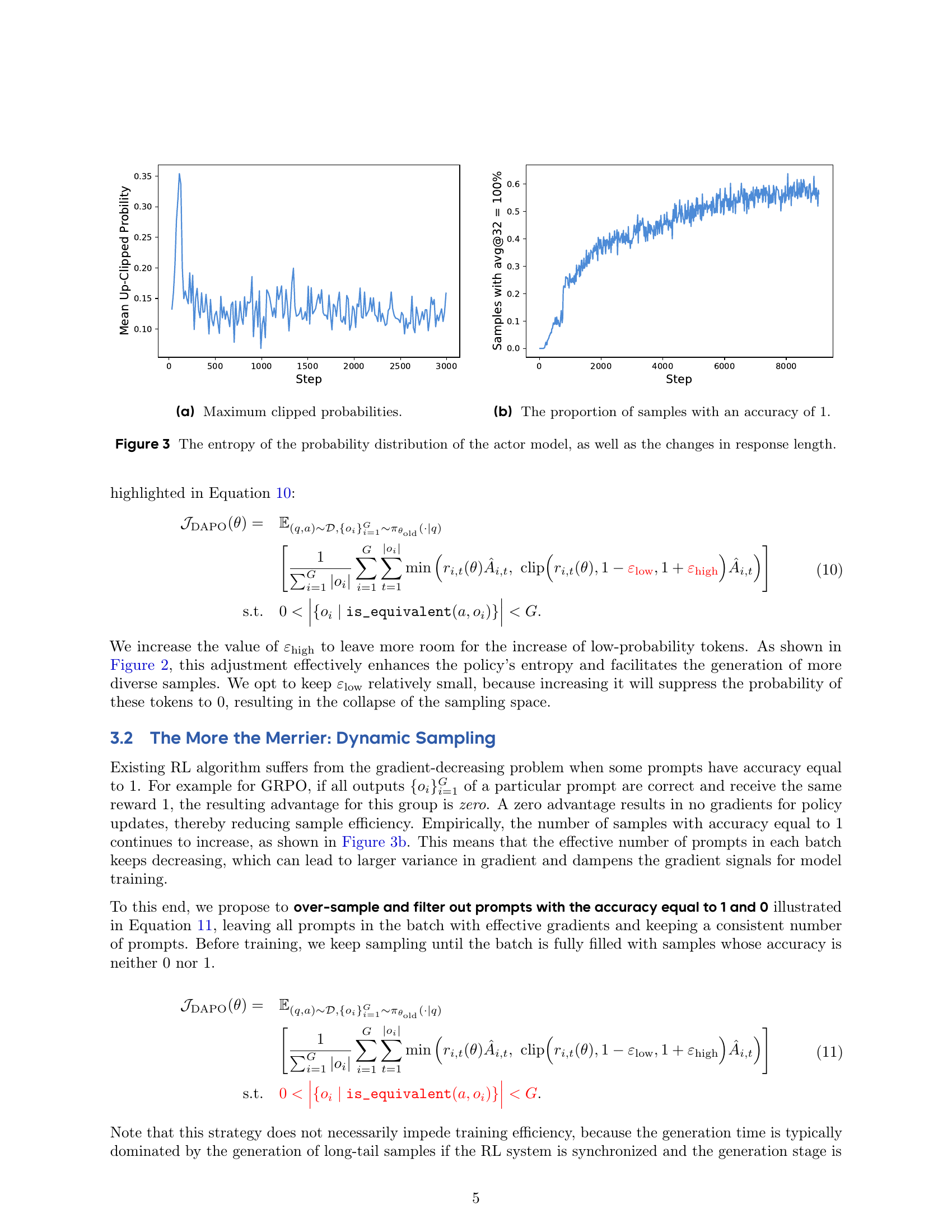

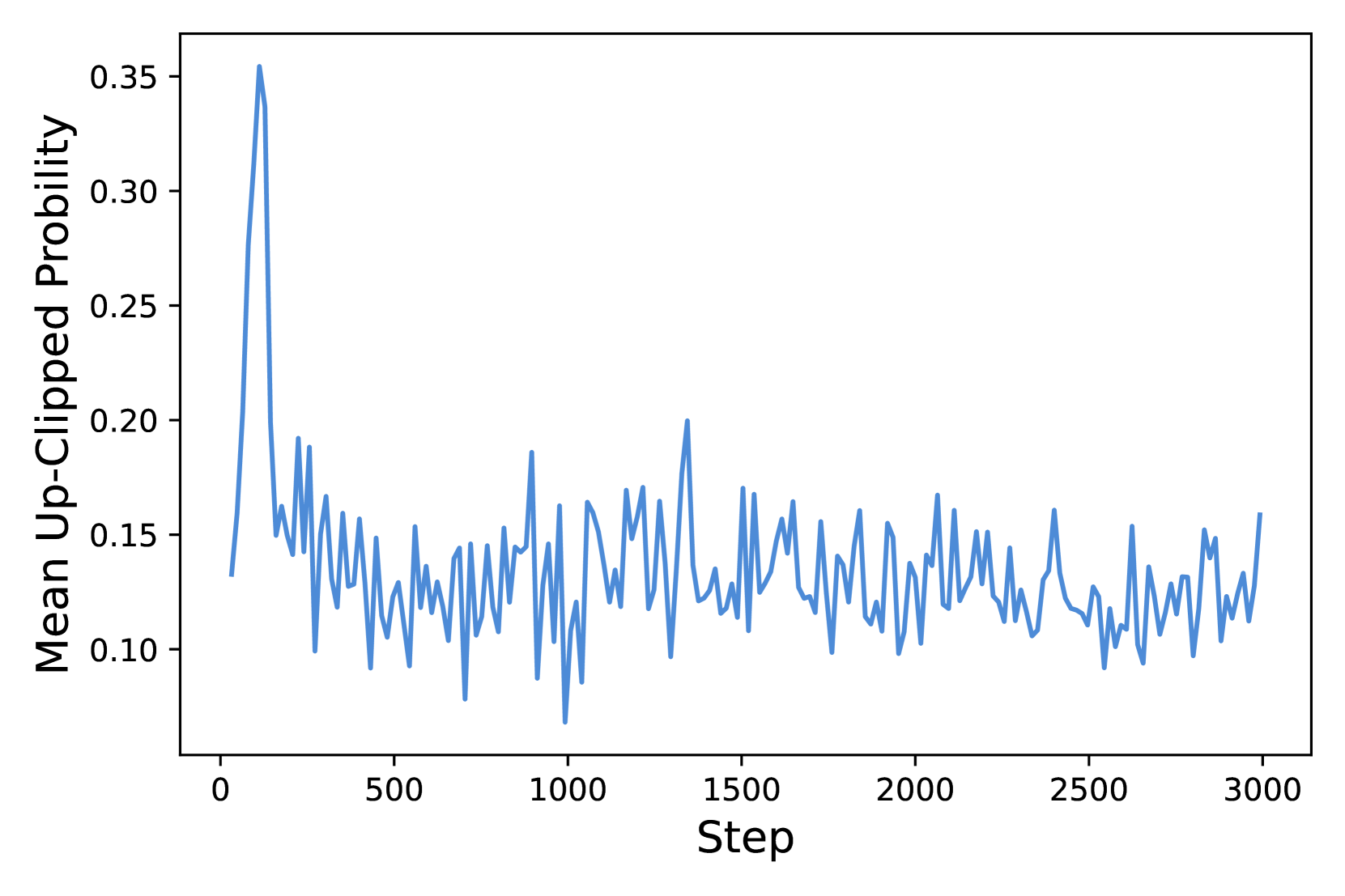

🔼 The figure shows the maximum probability of clipped tokens during training. The Clip-Higher strategy, discussed in the paper, modifies the clipping range of the importance sampling ratio in the policy optimization. This graph illustrates the effect of this modification on the probability distribution, revealing how the strategy impacts the exploration-exploitation trade-off during training, by decoupling the lower and upper bounds of the clipping range. It indirectly demonstrates the effect of the Clip-Higher method on the diversity of the model’s responses.

(a) Maximum clipped probabilities.

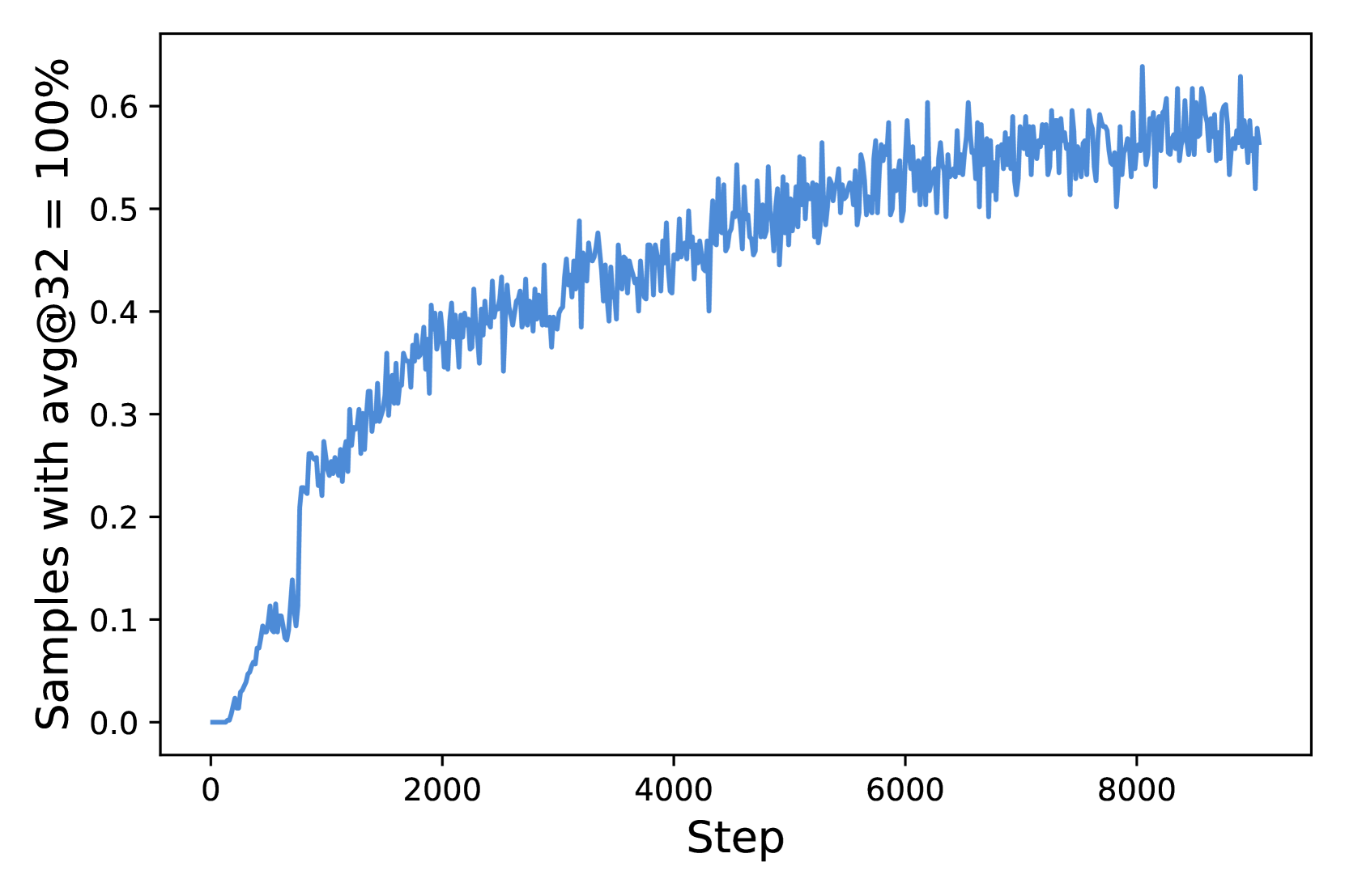

🔼 This figure shows the proportion of samples in each training batch with an accuracy of 1, that is, prompts for which every sampled output is correct. A rising proportion means that fewer prompts contribute to the gradient, since such prompts yield zero advantage. This is the problem that Dynamic Sampling is designed to address.

(b) The proportion of samples with an accuracy of 1.

🔼 This figure displays two sub-figures visualizing the training dynamics of the actor model in a reinforcement learning process. The left sub-figure shows the maximum clipped probabilities during training, indicating the diversity of the model’s output distribution. The right sub-figure presents the proportion of samples with 100% accuracy over training steps, illustrating how often the model produces perfect answers.

Figure 3: The entropy of the probability distribution of the actor model, as well as the changes in response length.

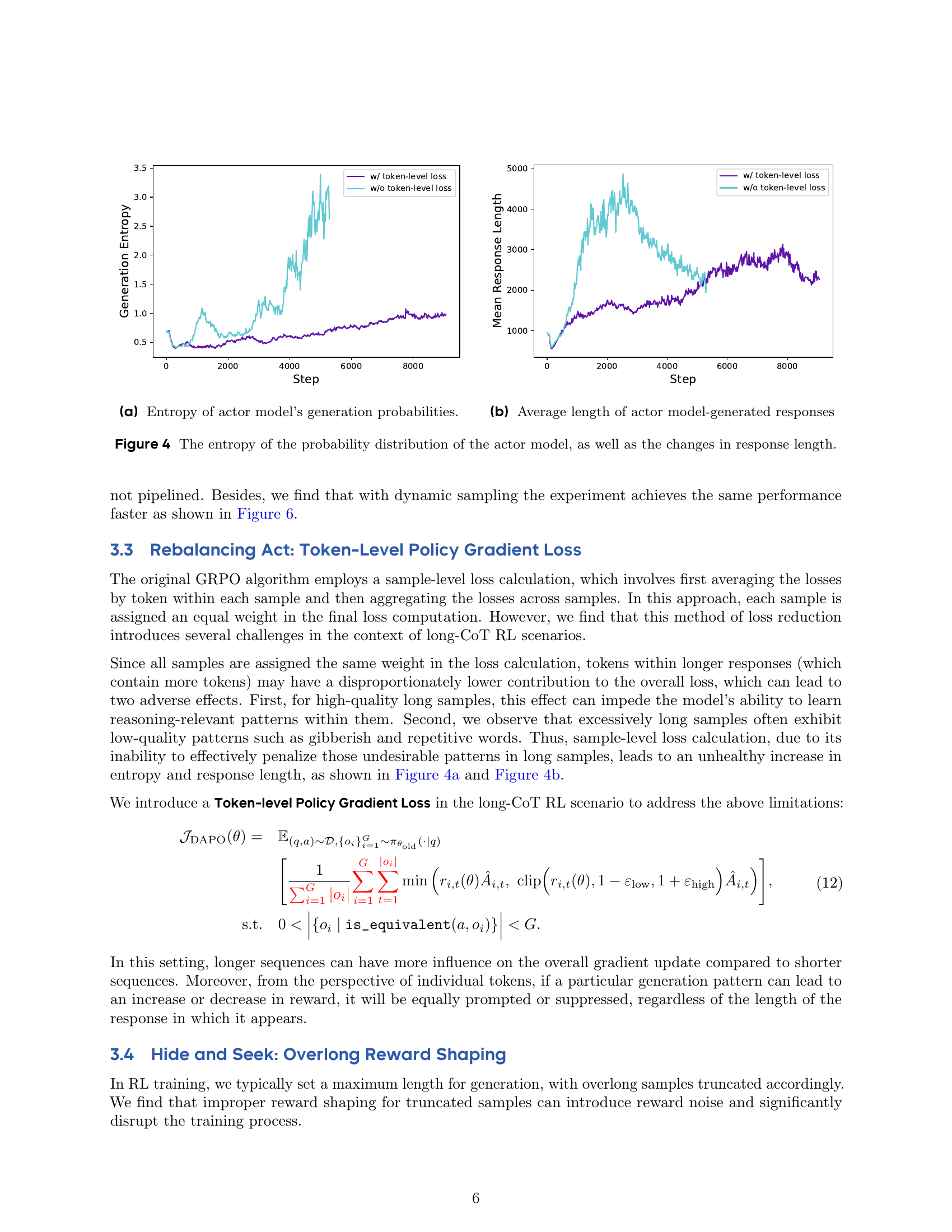

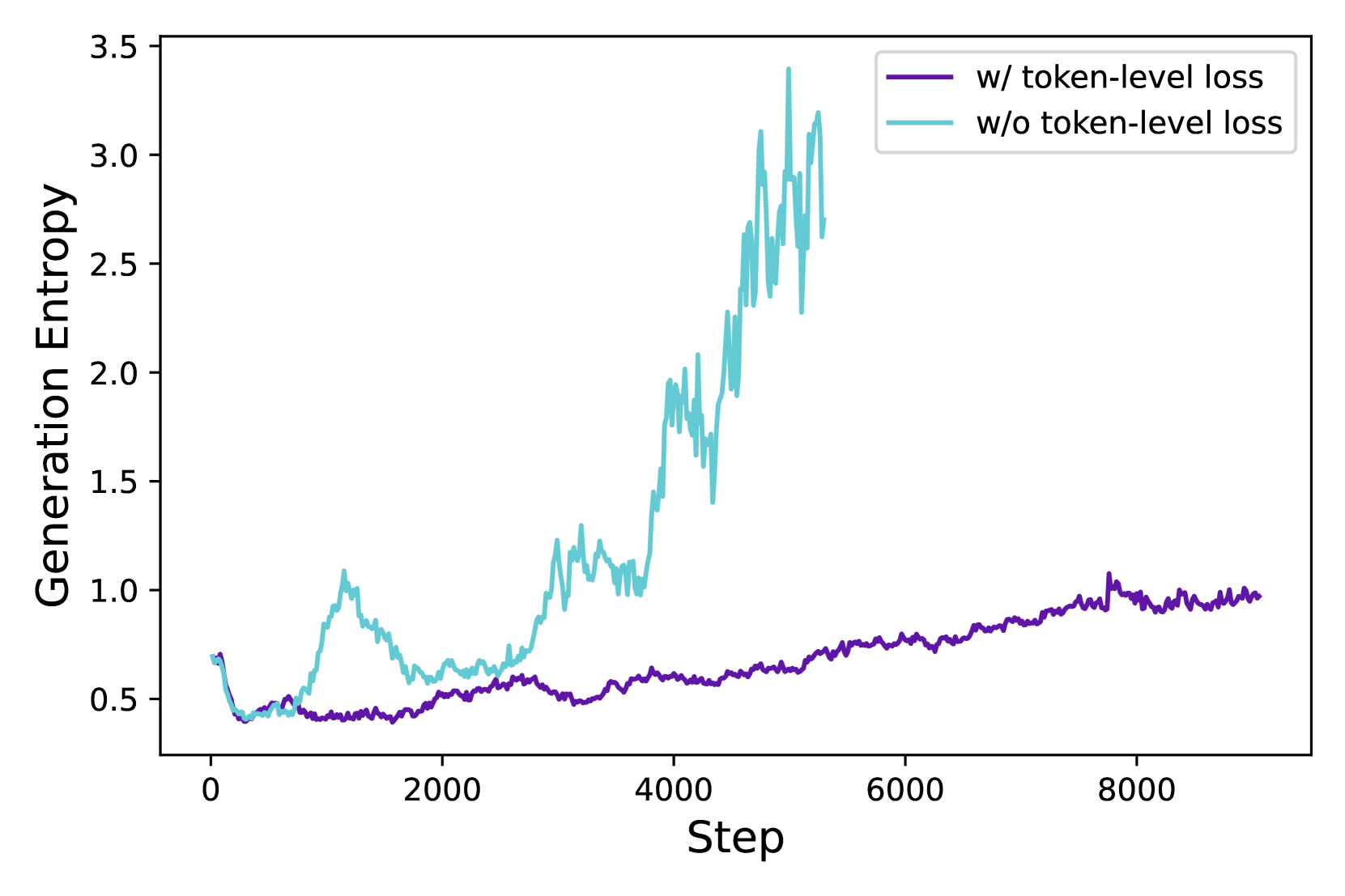

🔼 This figure shows a graph illustrating the change in entropy of the probability distribution of the actor model’s generated responses during the reinforcement learning process. High entropy indicates greater diversity in the model’s responses, while low entropy suggests less diverse and potentially repetitive outputs.

(a) Entropy of actor model’s generation probabilities.

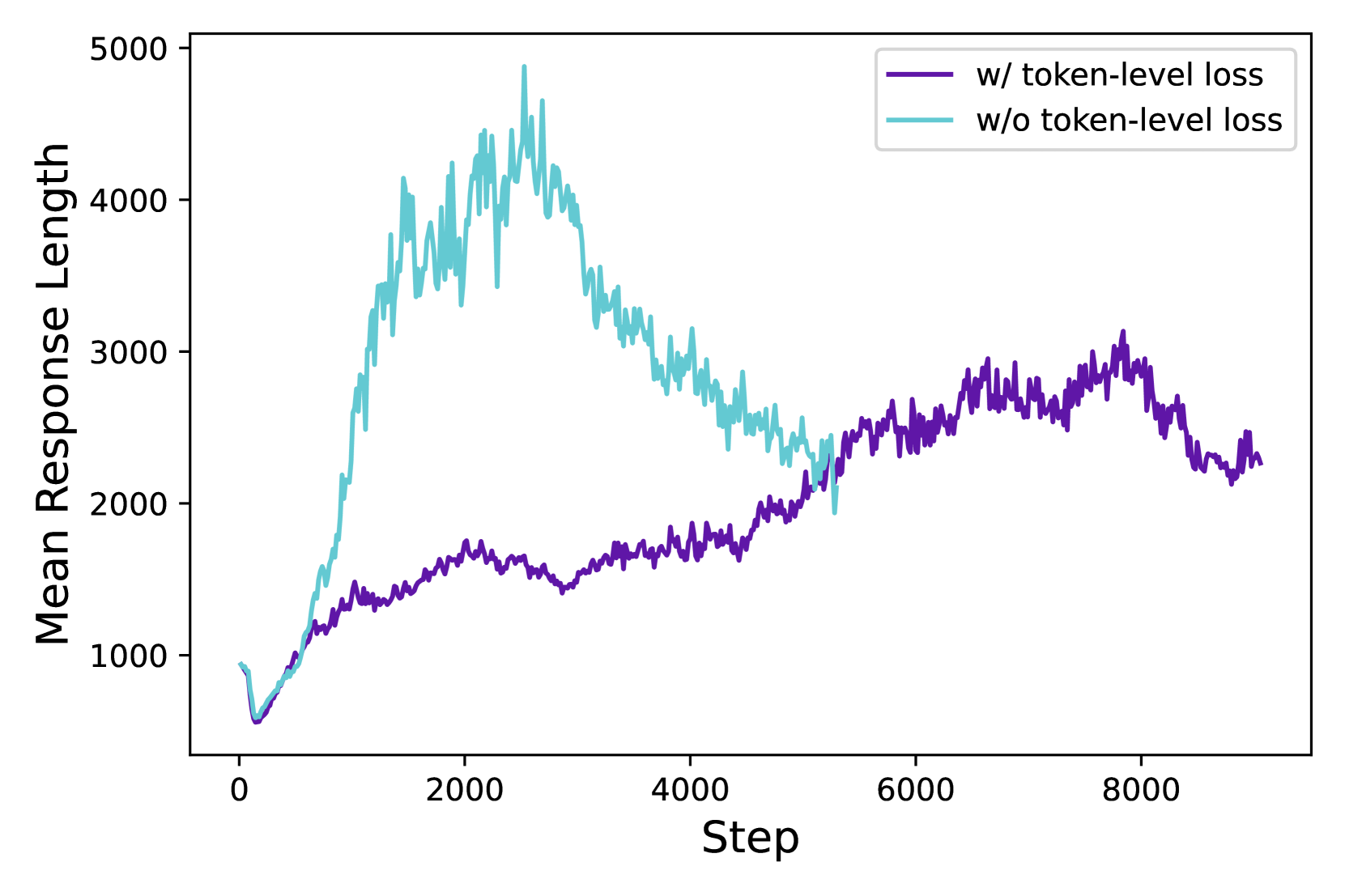

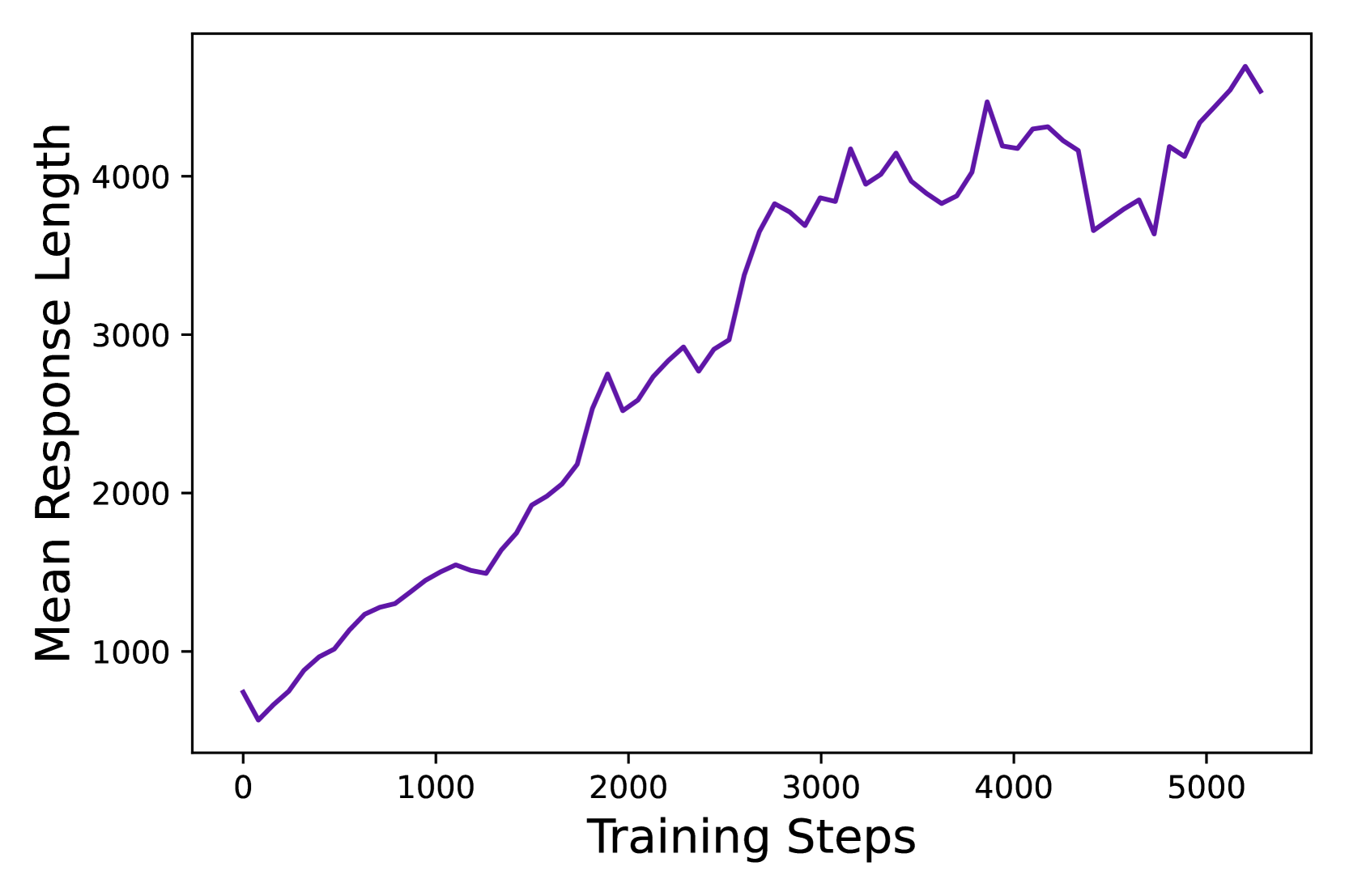

🔼 This figure shows the average length of the responses generated by the actor model during reinforcement learning training. The x-axis represents the training steps, and the y-axis represents the average response length in tokens or words. The plot visualizes how the average length of generated responses changes over the course of the training. It likely illustrates whether the model learns to generate longer, more comprehensive responses as it progresses through training.

(b) Average length of actor model-generated responses

🔼 Figure 4 presents two line graphs illustrating the training dynamics of a reinforcement learning model for large language models. The left graph shows the entropy of the actor model’s probability distribution over training steps. High entropy indicates greater exploration and diversity in the model’s responses, while low entropy suggests a more deterministic and potentially less effective model. The right graph displays the average response length generated by the model over the same training steps. This length is important because it relates to the complexity of reasoning involved. Together, these graphs provide insights into how the model’s exploration-exploitation balance and response complexity evolve during training, indicating the model’s learning progress and potential issues.

Figure 4: The entropy of the probability distribution of the actor model, as well as the changes in response length.

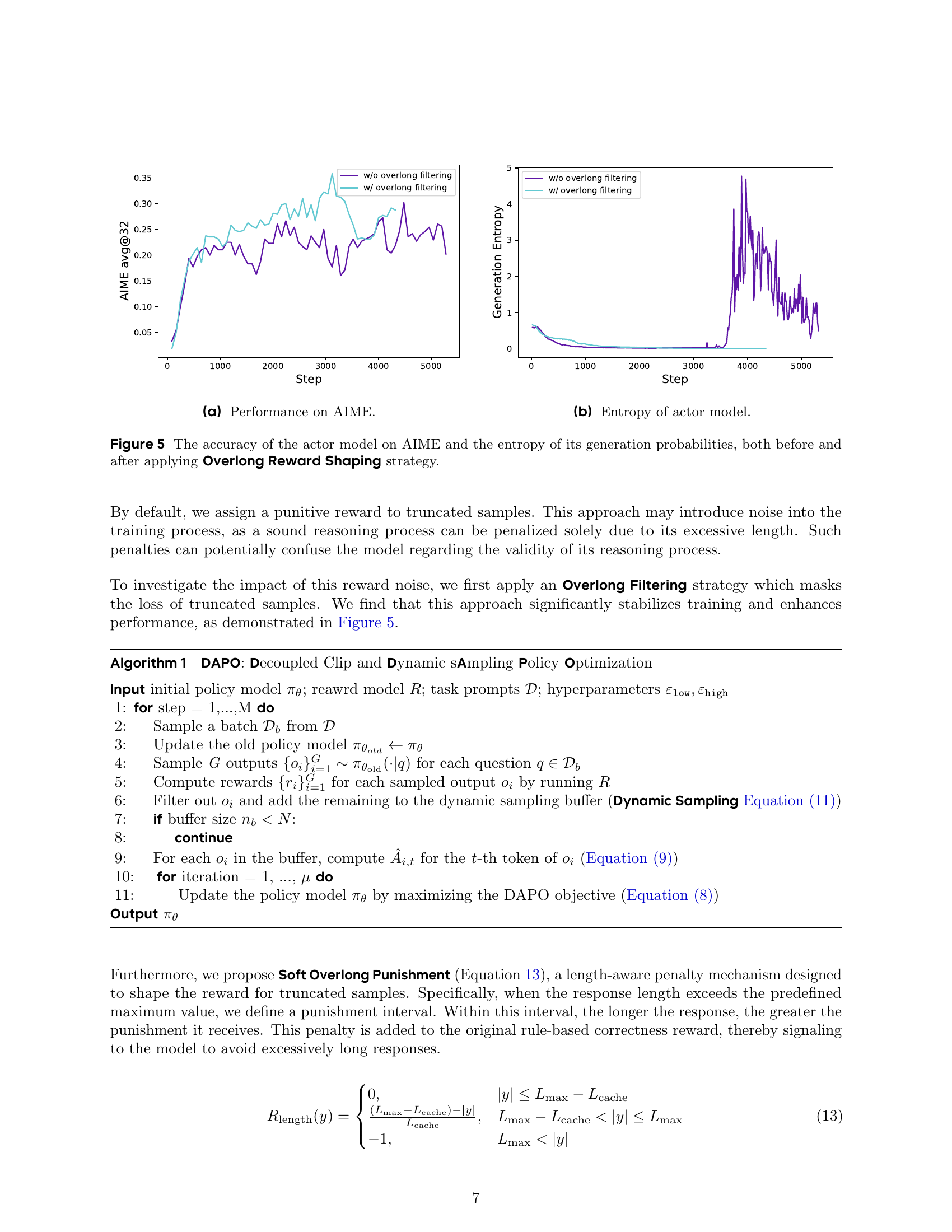

🔼 This figure shows the average accuracy scores achieved by the DAPO algorithm on the AIME 2024 benchmark during the reinforcement learning training process. The x-axis represents the training steps, and the y-axis represents the average accuracy (avg@32) across multiple evaluations. The plot displays how the accuracy of the model improves over the course of training.

(a) Performance on AIME.

🔼 The figure shows the entropy of the actor model during reinforcement learning training. Entropy measures the uncertainty or randomness in the model’s predicted probability distribution. High entropy indicates the model is exploring diverse actions, while low entropy suggests the model is becoming deterministic and less exploratory. This plot helps analyze if the model is learning effectively or getting stuck in a suboptimal solution due to premature convergence.

(b) Entropy of actor model.

🔼 Figure 5 displays the performance of the actor model (the part of the reinforcement learning model that selects actions) on the AIME (American Invitational Mathematics Examination) benchmark and the entropy of its generated probabilities. Two scenarios are presented: one without the ‘Overlong Reward Shaping’ strategy and one with it. The strategy adjusts rewards for excessively long generated responses, aiming to prevent the model from producing overly long, and potentially less accurate, responses. The figure illustrates how this strategy influences both the model’s accuracy on AIME and the randomness of its responses (measured by entropy), which allows the impact of Overlong Reward Shaping on accuracy and output diversity to be compared.

Figure 5: The accuracy of the actor model on AIME and the entropy of its generation probabilities, both before and after applying Overlong Reward Shaping strategy.

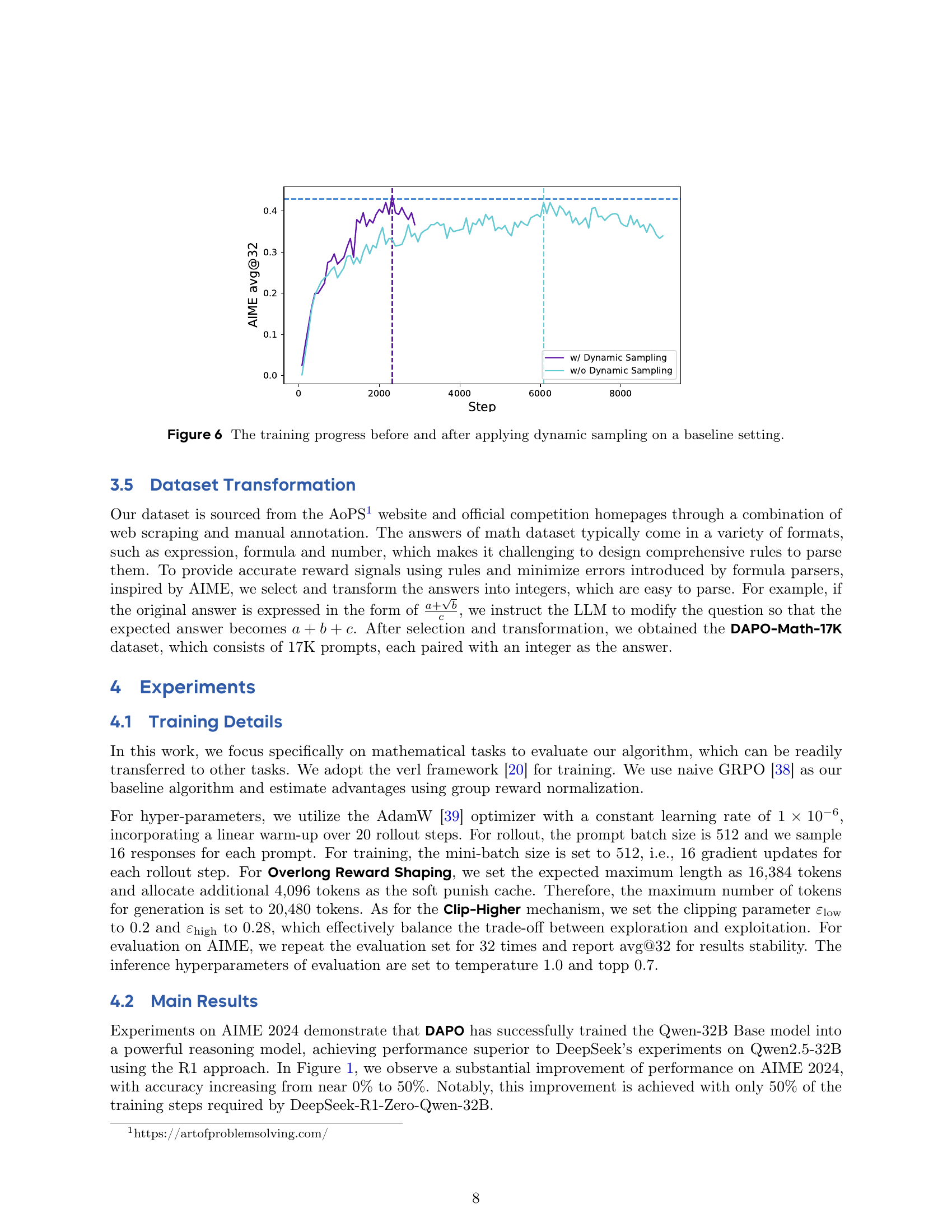

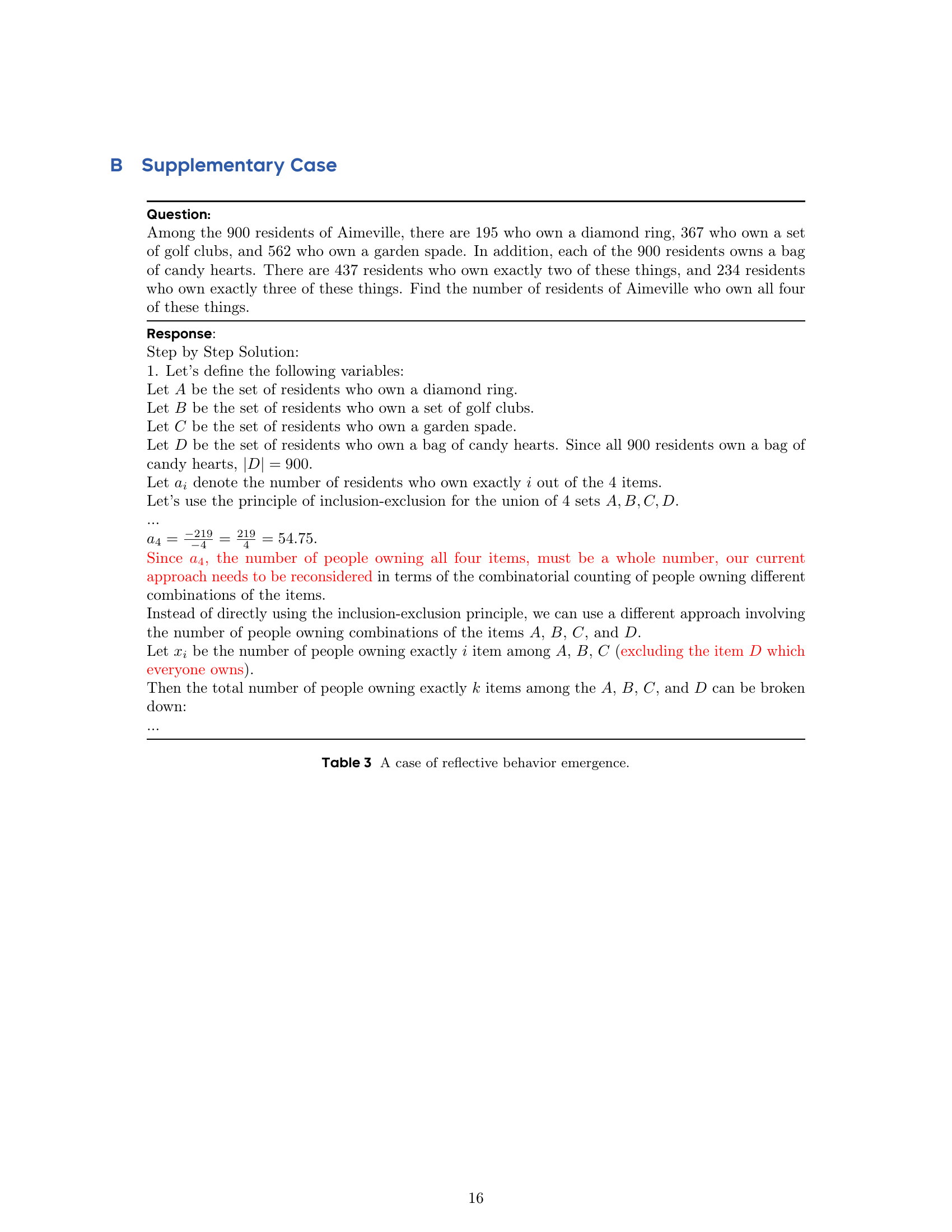

🔼 This figure displays the training progress curves for a baseline setting of the reinforcement learning algorithm, before and after applying dynamic sampling. The x-axis represents the training steps, and the y-axis shows a performance metric, likely AIME avg@32, indicating how well the model performs on the AIME 2024 benchmark over the course of training. The curves illustrate the effect of dynamic sampling on the learning trajectory: with dynamic sampling, the curve appears to rise faster and more smoothly, suggesting quicker improvement and more stable training.

Figure 6: The training progress before and after applying dynamic sampling on a baseline setting.

More on tables

| Model | AIME 2024 (avg@32) |
| --- | --- |
| DeepSeek-R1-Zero-Qwen-32B | 47 |
| Naive GRPO | 30 |
| + Overlong Filtering | 36 |
| + Clip-Higher | 38 |
| + Soft Overlong Punishment | 41 |
| + Token-level Loss | 42 |
| + Dynamic Sampling (DAPO) | 50 |

🔼 This table presents the performance of the Decoupled Clip and Dynamic sampling Policy Optimization (DAPO) algorithm on the AIME 2024 benchmark. It shows the incremental improvements in accuracy achieved by progressively adding key techniques to a baseline Group Relative Policy Optimization (GRPO) approach. The techniques include Overlong Filtering, Clip-Higher, Soft Overlong Punishment, Token-level Loss, and finally Dynamic Sampling, culminating in the DAPO algorithm. The table highlights the contribution of each technique to the overall accuracy improvement.

Table 1: Main results of progressive techniques applied to DAPO
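The incremental gains implied by Table 1 can be worked out directly from its rows. The short sketch below only does that arithmetic on the scores listed in the table; the variable names are illustrative.

```python
# (technique, AIME 2024 score) rows copied from Table 1, in the order applied.
rows = [
    ("Naive GRPO", 30),
    ("+ Overlong Filtering", 36),
    ("+ Clip-Higher", 38),
    ("+ Soft Overlong Punishment", 41),
    ("+ Token-level Loss", 42),
    ("+ Dynamic Sampling (DAPO)", 50),
]

# Gain of each row over the row directly above it.
gains = {
    name: score - prev
    for (name, score), (_, prev) in zip(rows[1:], rows[:-1])
}
total_gain = rows[-1][1] - rows[0][1]
```

The gains come out to 6, 2, 3, 1, and 8 points, for a total of 20 points over naive GRPO. Because the techniques are stacked in a fixed order, these differences show the effect of each addition given the earlier ones, not an independent contribution.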

| Question: |

| Given that the base of the tetrahedron is an equilateral triangle, the projection of point on the face is the orthocenter of , the dihedral angle is , and , find the volume of the tetrahedron. The answer is in the form , so give the value of . |

| Response: |

| Let us consider the given problem: We need to determine … |

| … |

| Next, let have coordinates . Since , the distance formula gives us , so we can write . Now, remember that is the orthogonal projection of |

| … |

| However, wait a moment, let’s rethink about the dihedral angle involving planes in a more thoughtful geometric way. Consider the plane , which lies entirely on the xy coordinate plane (as ). Let the plane . The point projected perpendicularly to plane lands on . The line … |

| … |

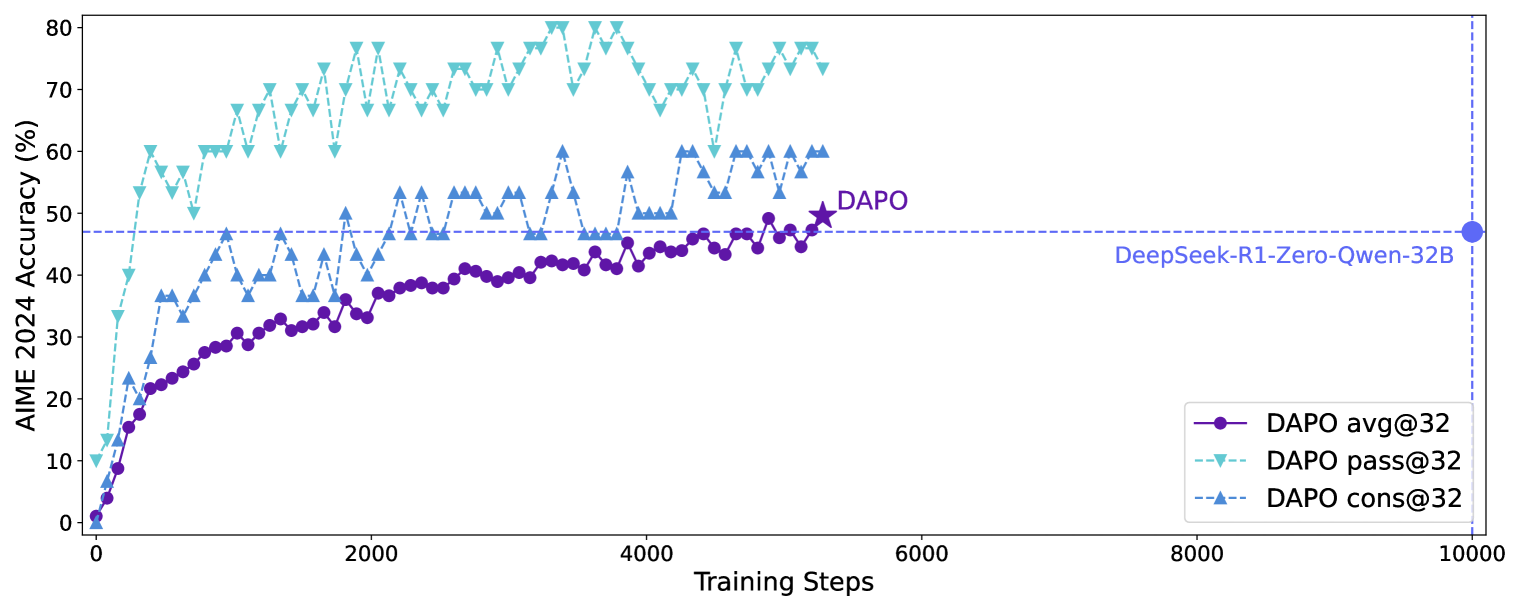

🔼 This table showcases examples of reasoning patterns exhibited by the language model during reinforcement learning. It demonstrates the emergence of reflective behaviors, such as checking and re-evaluating previous steps in the reasoning process, that develop over the course of training. The examples highlight how the model’s problem-solving approach evolves from a more straightforward, linear process to a more sophisticated approach involving self-correction and critical evaluation.

Table 2: Emergence of Reflective Behavior in Reinforcement Learning

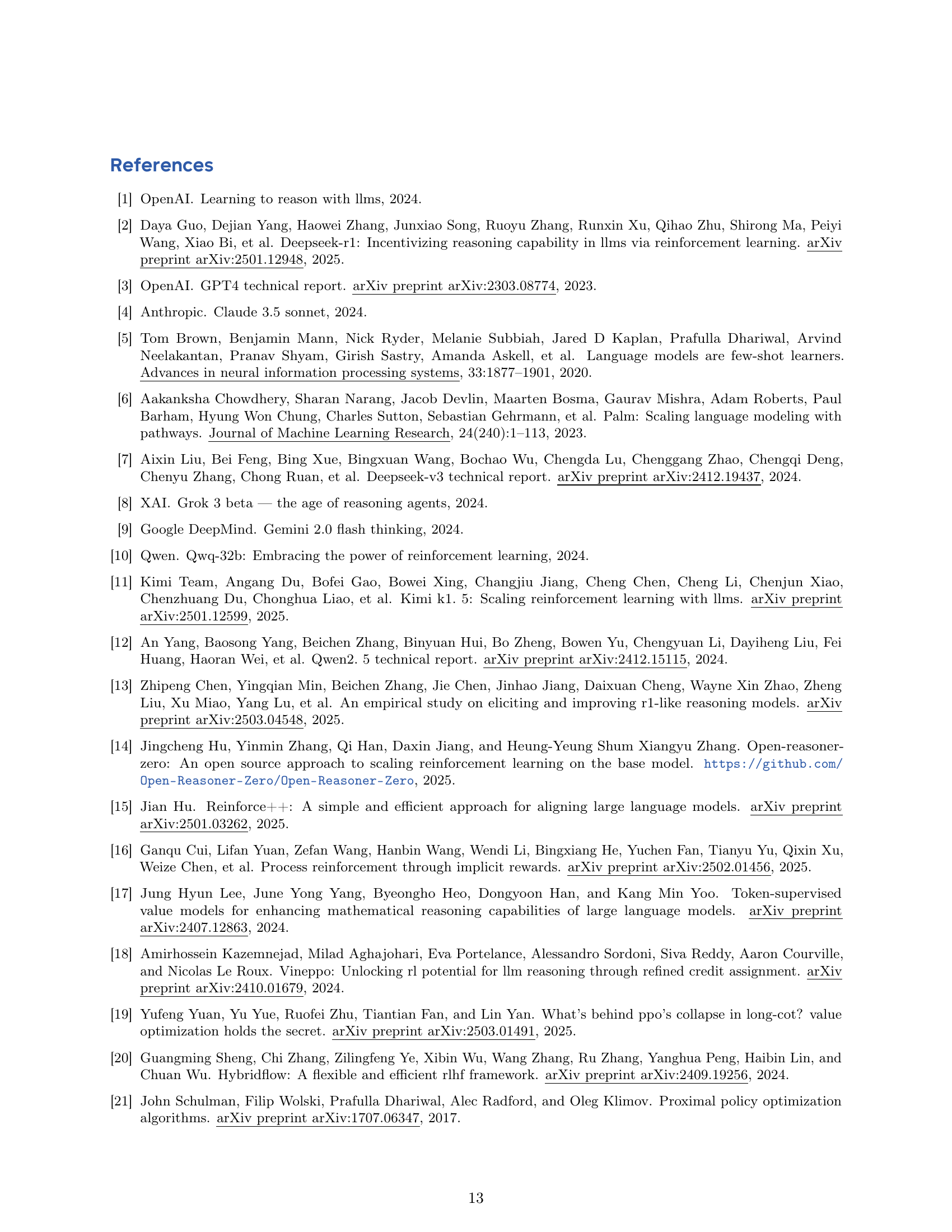

| Question: |

| Among the 900 residents of Aimeville, there are 195 who own a diamond ring, 367 who own a set of golf clubs, and 562 who own a garden spade. In addition, each of the 900 residents owns a bag of candy hearts. There are 437 residents who own exactly two of these things, and 234 residents who own exactly three of these things. Find the number of residents of Aimeville who own all four of these things. |

| Response: |

| Step by Step Solution: |

| 1. Let’s define the following variables: |

| Let be the set of residents who own a diamond ring. |

| Let be the set of residents who own a set of golf clubs. |

| Let be the set of residents who own a garden spade. |

| Let be the set of residents who own a bag of candy hearts. Since all 900 residents own a bag of candy hearts, . |

| Let denote the number of residents who own exactly out of the 4 items. |

| Let’s use the principle of inclusion-exclusion for the union of 4 sets . |

| … |

| . |

| Since , the number of people owning all four items, must be a whole number, our current approach needs to be reconsidered in terms of the combinatorial counting of people owning different combinations of the items. Instead of directly using the inclusion-exclusion principle, we can use a different approach involving the number of people owning combinations of the items , , , and . |

| Let be the number of people owning exactly item among , , (excluding the item which everyone owns). |

| Then the total number of people owning exactly items among the , , , and can be broken down: |

| … |

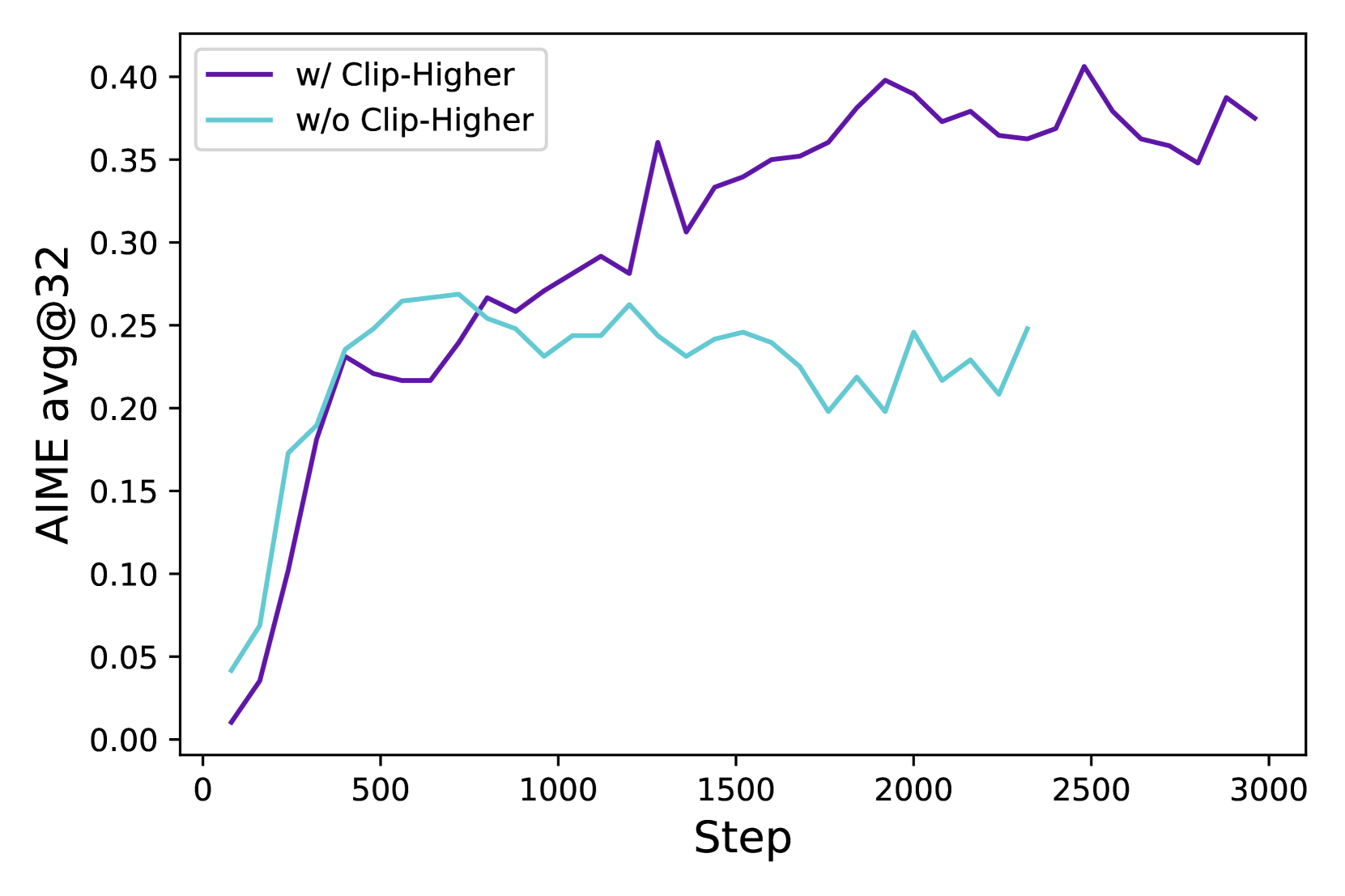

🔼 This table illustrates the emergence of reflective reasoning in a large language model (LLM) during reinforcement learning. In the early stages of training, the model’s reasoning is straightforward and lacks self-reflection. As training progresses, the model begins to exhibit more sophisticated reasoning, including checking and correcting its earlier steps. The example is a mathematical reasoning problem in which the model notices that an intermediate result cannot be right and reconsiders its approach.

Table 3: A case of reflective behavior emergence.

Full paper#