TL;DR#

Large language models (LLMs) typically need massive computational resources and datasets. This limits accessibility for resource-constrained settings. It is important to investigate the potential of reinforcement learning (RL) to improve reasoning in smaller LLMs. The study will focus on a 1.5B parameter model under strict constraints. Training is done on 4 NVIDIA A40 GPUs within 24 hours. The study aims to addresses the question of whether LLM can be elevated using an RL-based approach.

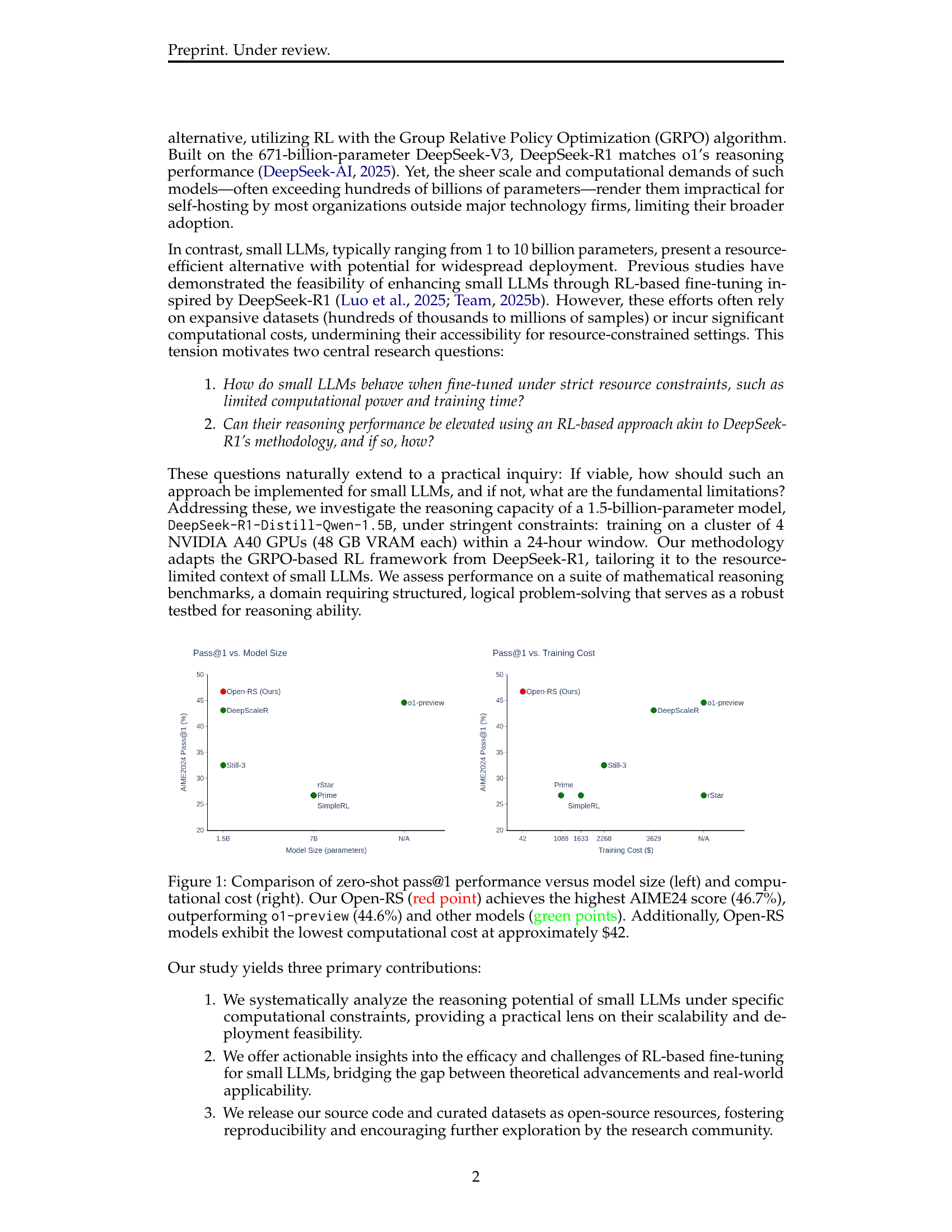

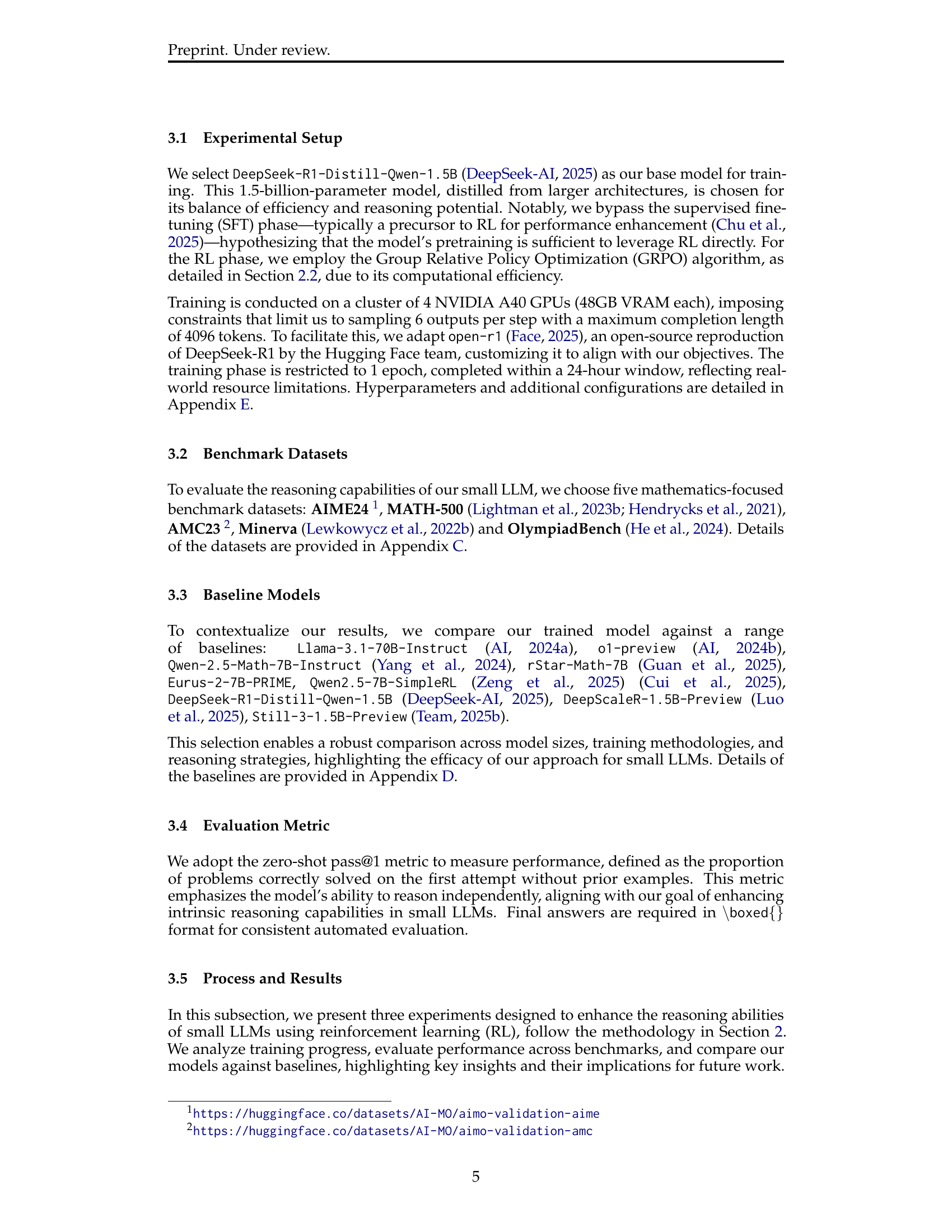

The paper adapts the Group Relative Policy Optimization (GRPO) algorithm and curates a compact, high-quality mathematical reasoning dataset. This enables three experiments to explore model behavior and performance. The findings show reasoning gains using only 7,000 samples at a low training cost. The code and datasets are released as open-source resources. The Open-RS models outperform other models. For example, the Open-RS3 achieves the highest AIME24 score, surpassing o1-preview.

Key Takeaways#

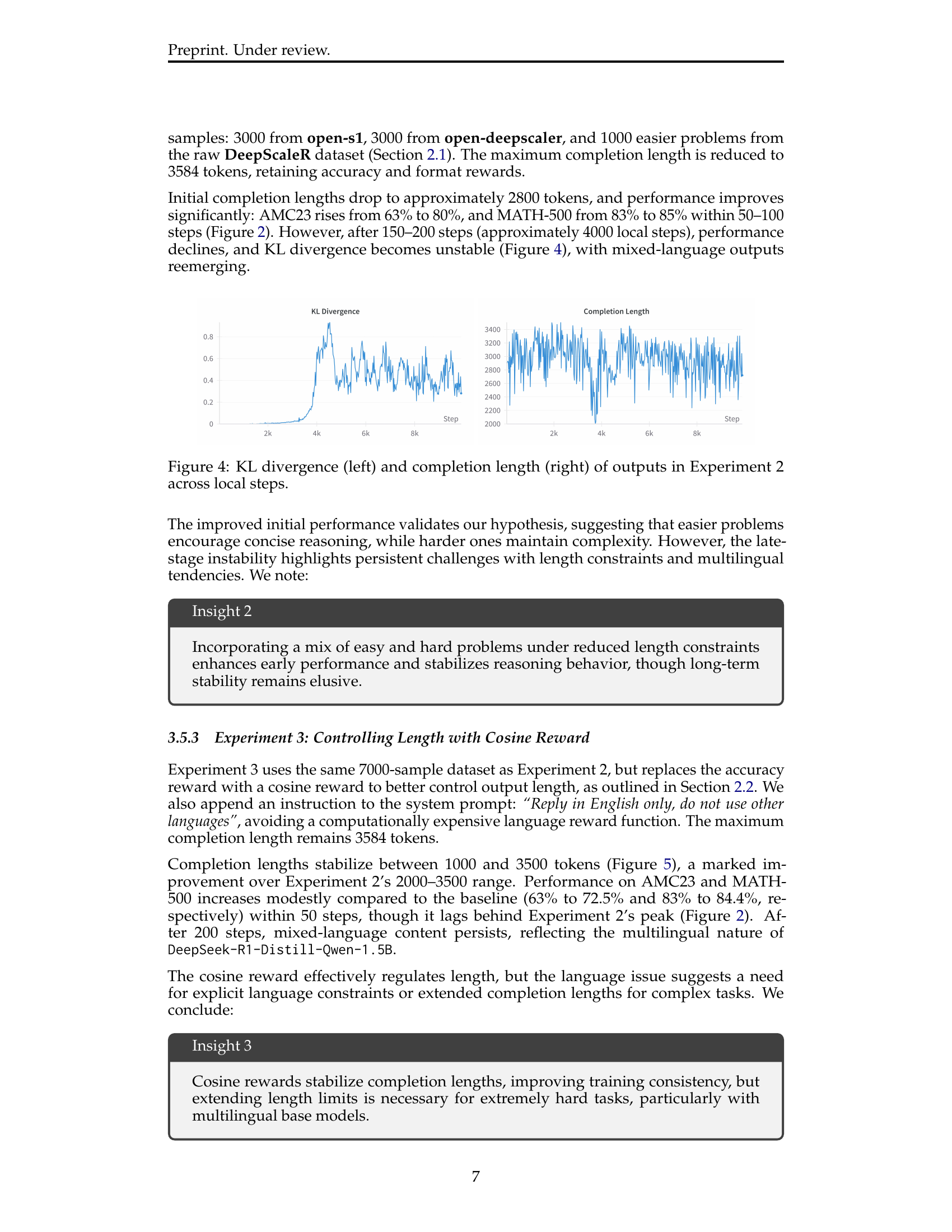

Why does it matter?#

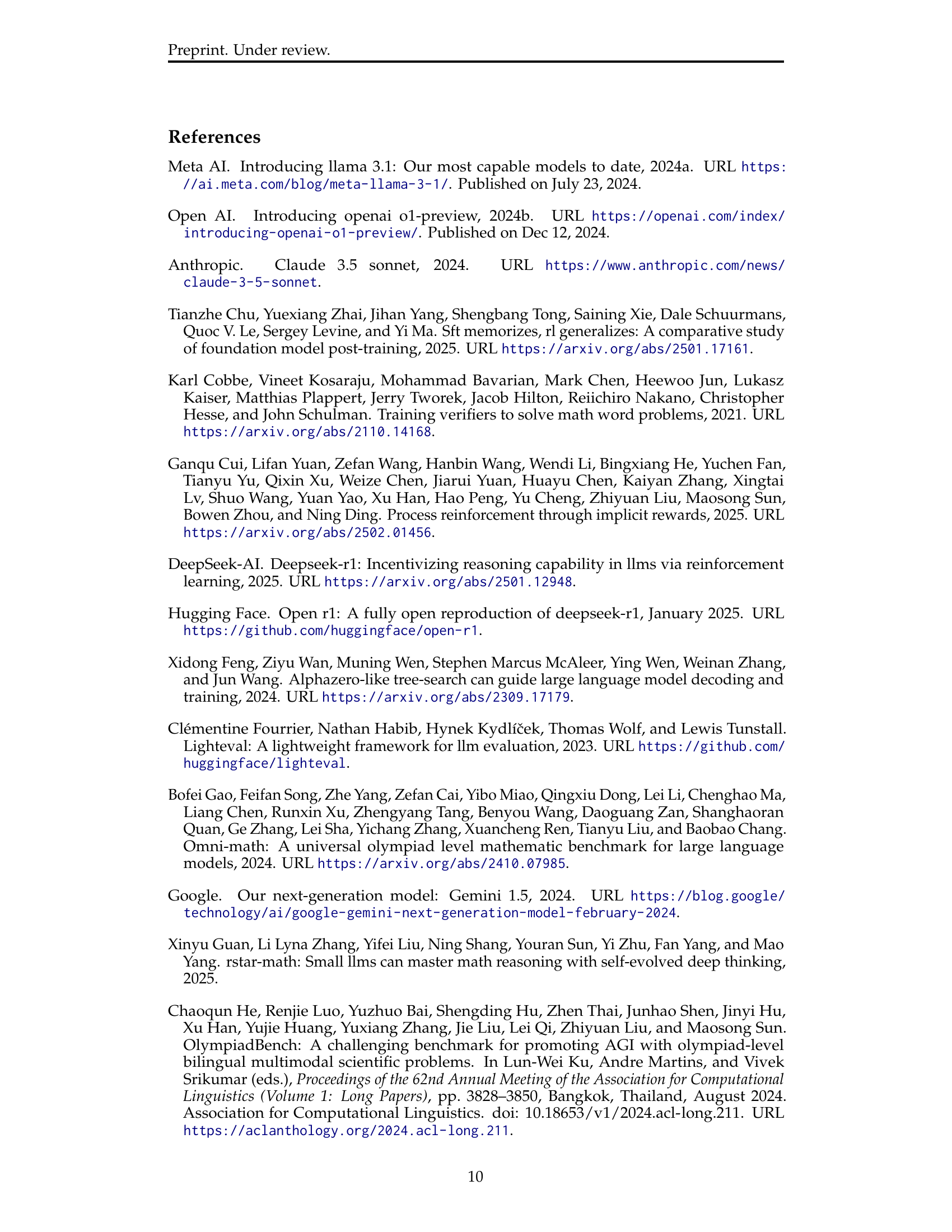

This research offers a cost-effective alternative to scaling LLMs. It provides insights into trade-offs and lays the foundation for scalable, reasoning-capable LLMs in resource-limited environments. By releasing code/datasets as open-source resources, it fosters reproducibility and further exploration by the research community.

Visual Insights#

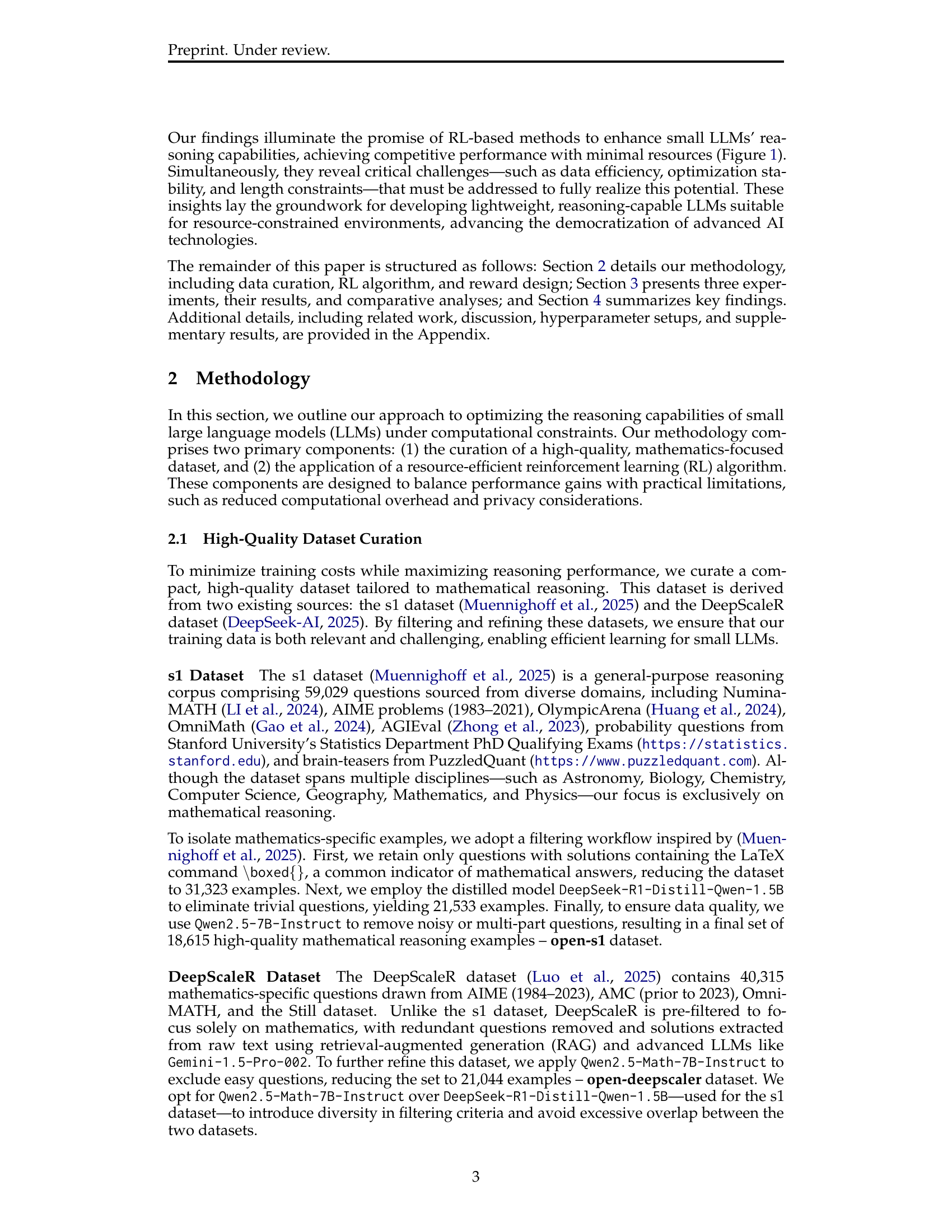

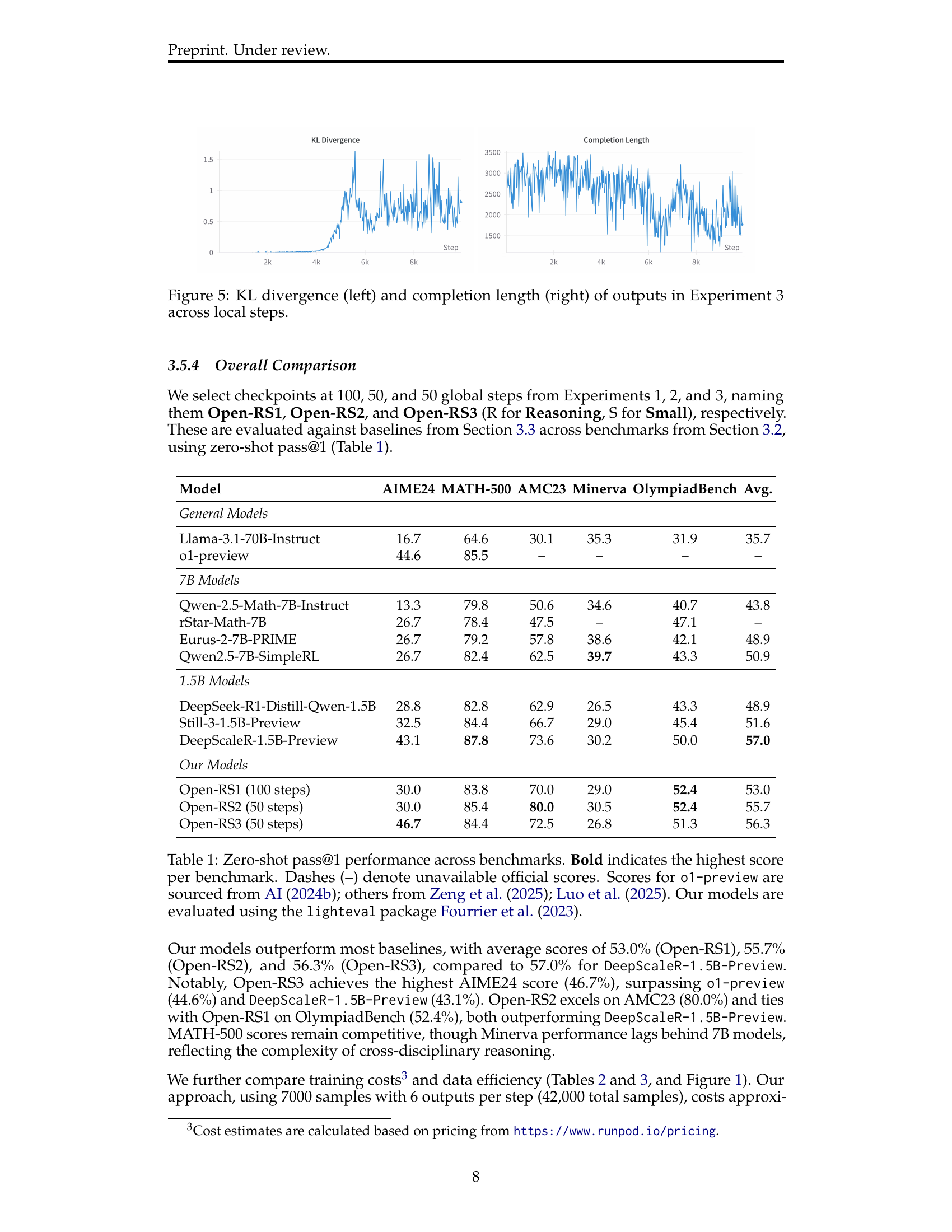

| Model | AIME24 | MATH-500 | AMC23 | Minerva | OlympiadBench | Avg. |

| General Models | ||||||

| Llama-3.1-70B-Instruct | 16.7 | 64.6 | 30.1 | 35.3 | 31.9 | 35.7 |

| o1-preview | 44.6 | 85.5 | – | – | – | – |

| 7B Models | ||||||

| Qwen-2.5-Math-7B-Instruct | 13.3 | 79.8 | 50.6 | 34.6 | 40.7 | 43.8 |

| rStar-Math-7B | 26.7 | 78.4 | 47.5 | – | 47.1 | – |

| Eurus-2-7B-PRIME | 26.7 | 79.2 | 57.8 | 38.6 | 42.1 | 48.9 |

| Qwen2.5-7B-SimpleRL | 26.7 | 82.4 | 62.5 | 39.7 | 43.3 | 50.9 |

| 1.5B Models | ||||||

| DeepSeek-R1-Distill-Qwen-1.5B | 28.8 | 82.8 | 62.9 | 26.5 | 43.3 | 48.9 |

| Still-3-1.5B-Preview | 32.5 | 84.4 | 66.7 | 29.0 | 45.4 | 51.6 |

| DeepScaleR-1.5B-Preview | 43.1 | 87.8 | 73.6 | 30.2 | 50.0 | 57.0 |

| Our Models | ||||||

| Open-RS1 (100 steps) | 30.0 | 83.8 | 70.0 | 29.0 | 52.4 | 53.0 |

| Open-RS2 (50 steps) | 30.0 | 85.4 | 80.0 | 30.5 | 52.4 | 55.7 |

| Open-RS3 (50 steps) | 46.7 | 84.4 | 72.5 | 26.8 | 51.3 | 56.3 |

🔼 This table presents a comparison of zero-shot performance across multiple mathematics reasoning benchmarks. Zero-shot means the models were not given any examples before evaluation. Pass@1 refers to the percentage of problems solved correctly on the first try. The table compares the performance of several different large language models (LLMs), including the authors’ models (Open-RS1, Open-RS2, and Open-RS3) and various baselines. The best result for each benchmark is highlighted in bold. Note that some official scores were unavailable for some baselines, indicated by dashes. The table indicates the source of the scores used for comparison.

read the caption

Table 1: Zero-shot pass@1 performance across benchmarks. Bold indicates the highest score per benchmark. Dashes (–) denote unavailable official scores. Scores for o1-preview are sourced from AI (2024b); others from Zeng et al. (2025); Luo et al. (2025). Our models are evaluated using the lighteval package Fourrier et al. (2023).

In-depth insights#

RL’s Efficiency#

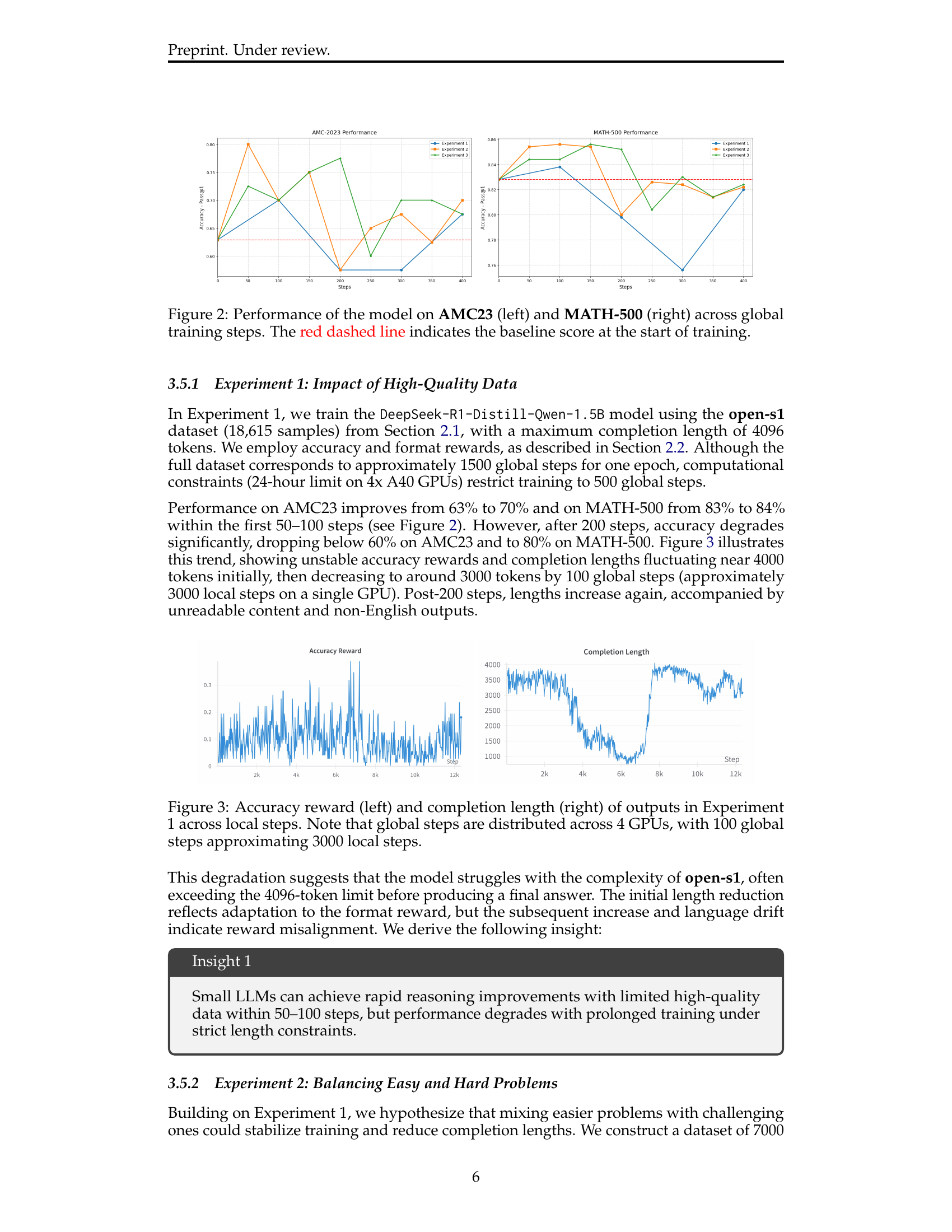

RL’s efficiency in this context centers on achieving substantial reasoning gains with minimal resources. The paper demonstrates that even under strict computational constraints, small LLMs can exhibit rapid improvement. This contrasts with traditional approaches that demand extensive datasets and computational power. The authors highlight the importance of a compact, high-quality dataset curated for mathematical reasoning, enabling efficient learning. Furthermore, the adaptation of the GRPO algorithm, designed to reduce computational overhead by eliminating the need for a separate critic model, contributes to resource efficiency. However, the study also reveals challenges, such as optimization instability and length constraints, that emerge with prolonged training, suggesting a trade-off between efficiency and sustained performance.

GRPO Adaptation#

Adapting GRPO for smaller LLMs involves key considerations. Resource constraints necessitate a balance between exploration and exploitation, with careful tuning of hyperparameters like clipping range and KL penalty. GRPO’s inherent efficiency, eliminating the need for a separate critic model, makes it suitable, but the group size ‘G’ must be optimized to ensure sufficient baseline estimation without excessive computational overhead. Reward design is critical; a rule-based system balancing correctness, efficiency, and structural clarity is preferable to resource-intensive neural reward models. Additionally, techniques like reward shaping and curriculum learning could further enhance the adaptation process for better optimization.

Data’s Impact#

While the paper does not explicitly have a heading called “Data’s Impact,” one can infer the significance of data throughout the study. High-quality data is crucial for effective training, especially in resource-constrained scenarios. The study shows that smaller, well-curated datasets tailored to mathematical reasoning can be surprisingly effective in improving the performance of small LLMs. It’s highlighted that mixing ’easy’ and ‘hard’ examples can improve the learning dynamics. However, data alone is not sufficient; there are trade-offs with model size and optimization strategies. Length constraints during training also influence the reasoning abilities of LLMs, implying careful consideration is needed to find the right balance between data quantity, quality, and training methodologies. The cost-effectiveness of the RL-based fine-tuning suggests that with thoughtfully designed data curation, one can achieve comparable, and in some cases superior, results to larger models trained with more extensive resources.

Length Limits#

Length limits present a multifaceted challenge in training language models, particularly smaller ones via reinforcement learning. Constrained generation length, as observed, can prematurely truncate reasoning processes, hindering performance on complex tasks needing extended chains of thought. Balancing generation length is crucial; too short, and solutions are cut off, while excessive lengths can lead to instability and irrelevant content. Optimal length must align with task complexity. Length constraints likely interact with data complexity; simpler datasets may necessitate shorter generations, while harder ones demand expanded limits. The use of cosine reward is a promising strategy to regulate completion lengths, however, extending length limits are necessary for extremely hard tasks, particularly with multilingual base models. Future solutions might involve multi-stage length schedules that adjust generation length dynamically. Further research should explore balancing solution completeness with the risk of instability during prolonged generation.

Scaling Small LLMs#

Scaling small LLMs presents a resource-efficient alternative to large models. The paper investigates the potential of Reinforcement Learning (RL) to improve reasoning in small LLMs under strict constraints, aiming to balance performance gains with limitations such as reduced computational overhead. A critical aspect is data curation focusing on high-quality datasets tailored to mathematical reasoning, which can minimize training costs. RL-based fine-tuning enables the models to refine decision-making processes by optimizing for task-specific rewards. Overcoming challenges like data efficiency, optimization stability, and length constraints is crucial. The study demonstrates that small LLMs can achieve competitive reasoning performance. A key finding is the potential of minimal resources to significantly enhance reasoning, thus promoting the democratization of advanced AI. The emphasis is on scalable, reasoning-capable models.

More visual insights#

More on tables

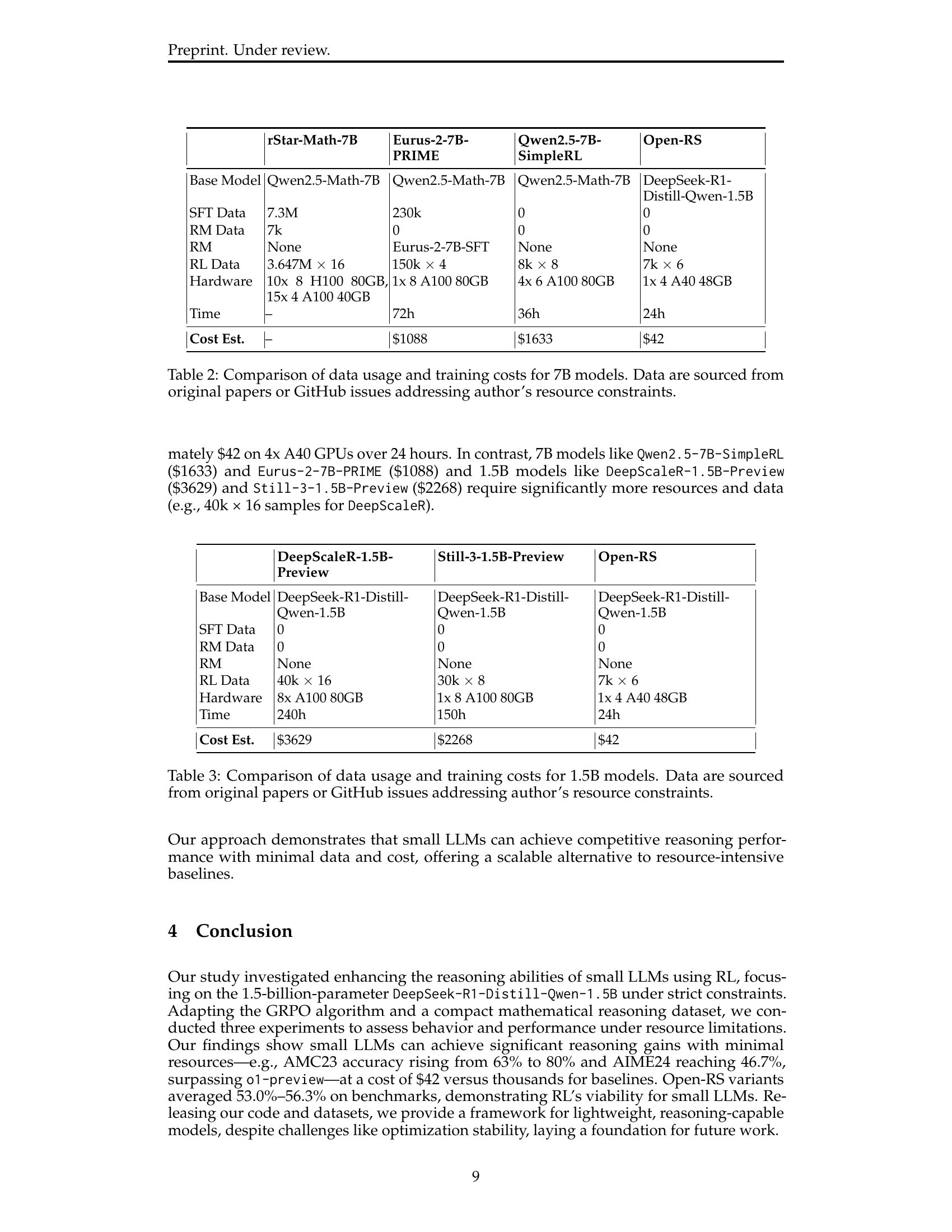

| rStar-Math-7B | Eurus-2-7B-PRIME | Qwen2.5-7B-SimpleRL | Open-RS | |

|---|---|---|---|---|

| Base Model | Qwen2.5-Math-7B | Qwen2.5-Math-7B | Qwen2.5-Math-7B | DeepSeek-R1-Distill-Qwen-1.5B |

| SFT Data | 7.3M | 230k | 0 | 0 |

| RM Data | 7k | 0 | 0 | 0 |

| RM | None | Eurus-2-7B-SFT | None | None |

| RL Data | 3.647M 16 | 150k 4 | 8k 8 | 7k 6 |

| Hardware | 10x 8 H100 80GB, 15x 4 A100 40GB | 1x 8 A100 80GB | 4x 6 A100 80GB | 1x 4 A40 48GB |

| Time | – | 72h | 36h | 24h |

| Cost Est. | – | $1088 | $1633 | $42 |

🔼 This table compares the resource requirements and costs associated with training several 7-billion parameter language models. It specifically highlights the differences in data used (supervision data, reinforcement learning data), the hardware used for training, the training time, and the estimated cost. The data for the models are either taken directly from the papers where the models were introduced or from publicly available GitHub issues where authors discuss training specifics and resource constraints.

read the caption

Table 2: Comparison of data usage and training costs for 7B models. Data are sourced from original papers or GitHub issues addressing author’s resource constraints.

| DeepScaleR-1.5B-Preview | Still-3-1.5B-Preview | Open-RS | |

|---|---|---|---|

| Base Model | DeepSeek-R1-Distill-Qwen-1.5B | DeepSeek-R1-Distill-Qwen-1.5B | DeepSeek-R1-Distill-Qwen-1.5B |

| SFT Data | 0 | 0 | 0 |

| RM Data | 0 | 0 | 0 |

| RM | None | None | None |

| RL Data | 40k 16 | 30k 8 | 7k 6 |

| Hardware | 8x A100 80GB | 1x 8 A100 80GB | 1x 4 A40 48GB |

| Time | 240h | 150h | 24h |

| Cost Est. | $3629 | $2268 | $42 |

🔼 This table compares the resource requirements (data usage and training costs) for training three different 1.5-billion parameter language models. It highlights the significant differences in computational cost and dataset size between existing models and the model proposed in this paper (Open-RS). The comparison is made to demonstrate the efficiency gains achieved by the proposed method, especially considering the constraints of limited resources.

read the caption

Table 3: Comparison of data usage and training costs for 1.5B models. Data are sourced from original papers or GitHub issues addressing author’s resource constraints.

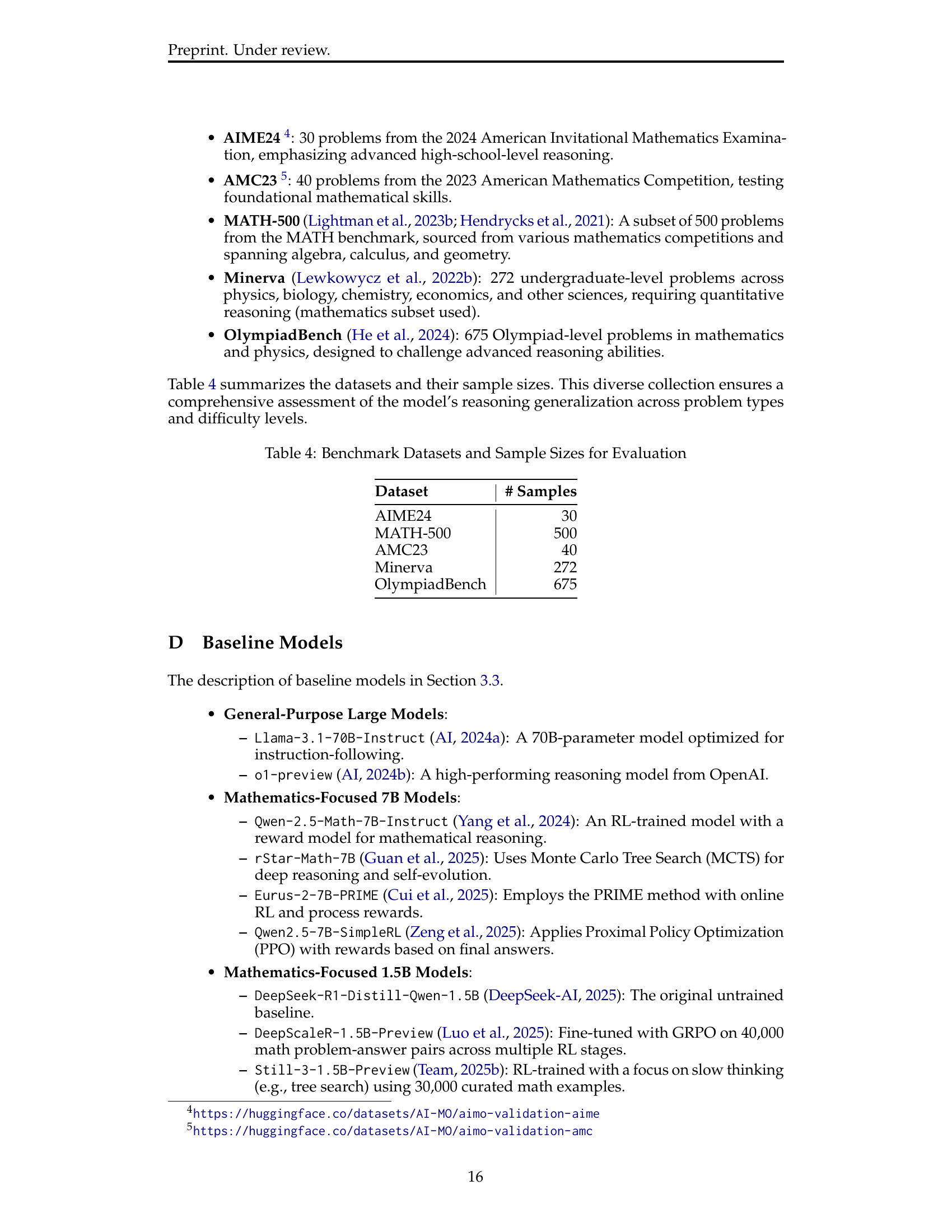

| Dataset | # Samples |

|---|---|

| AIME24 | 30 |

| MATH-500 | 500 |

| AMC23 | 40 |

| Minerva | 272 |

| OlympiadBench | 675 |

🔼 This table lists the benchmark datasets used to evaluate the reasoning capabilities of the models. It includes the dataset name, and the number of samples in each dataset. These datasets represent a diverse range of mathematical reasoning challenges, including problems from various competitions, academic sources, and other domains, varying in difficulty and problem type. The purpose is to provide a comprehensive evaluation of model performance across different problem characteristics and difficulty levels.

read the caption

Table 4: Benchmark Datasets and Sample Sizes for Evaluation

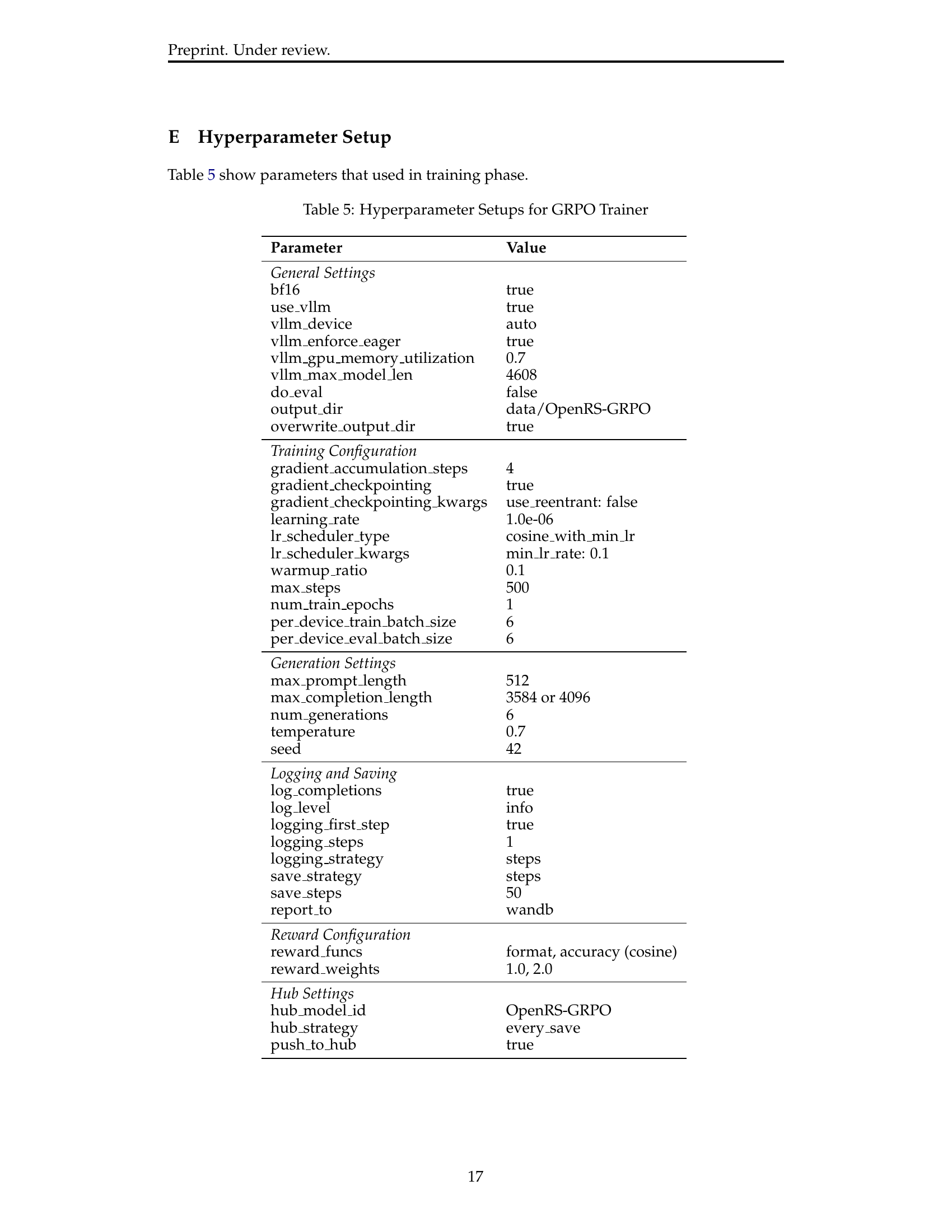

| Parameter | Value |

|---|---|

| General Settings | |

| bf16 | true |

| use_vllm | true |

| vllm_device | auto |

| vllm_enforce_eager | true |

| vllm_gpu_memory_utilization | 0.7 |

| vllm_max_model_len | 4608 |

| do_eval | false |

| output_dir | data/OpenRS-GRPO |

| overwrite_output_dir | true |

| Training Configuration | |

| gradient_accumulation_steps | 4 |

| gradient_checkpointing | true |

| gradient_checkpointing_kwargs | use_reentrant: false |

| learning_rate | 1.0e-06 |

| lr_scheduler_type | cosine_with_min_lr |

| lr_scheduler_kwargs | min_lr_rate: 0.1 |

| warmup_ratio | 0.1 |

| max_steps | 500 |

| num_train_epochs | 1 |

| per_device_train_batch_size | 6 |

| per_device_eval_batch_size | 6 |

| Generation Settings | |

| max_prompt_length | 512 |

| max_completion_length | 3584 or 4096 |

| num_generations | 6 |

| temperature | 0.7 |

| seed | 42 |

| Logging and Saving | |

| log_completions | true |

| log_level | info |

| logging_first_step | true |

| logging_steps | 1 |

| logging_strategy | steps |

| save_strategy | steps |

| save_steps | 50 |

| report_to | wandb |

| Reward Configuration | |

| reward_funcs | format, accuracy (cosine) |

| reward_weights | 1.0, 2.0 |

| Hub Settings | |

| hub_model_id | OpenRS-GRPO |

| hub_strategy | every_save |

| push_to_hub | true |

🔼 This table details the hyperparameters used to configure the Group Relative Policy Optimization (GRPO) trainer during the reinforcement learning process. It’s divided into sections for general settings (overall training parameters), training configuration (optimizer specifics), generation settings (parameters controlling text generation), logging and saving settings (frequency of logging and model checkpoints), and reward configuration (weighting of different reward components). Each parameter’s value is specified, offering a clear view of the training setup.

read the caption

Table 5: Hyperparameter Setups for GRPO Trainer

Full paper#