TL;DR#

Video Large Language Models (ViLLMs) struggle with identity-aware comprehension, limiting their applicability in personalized scenarios. Existing methods are limited to static image-based understanding and cannot model dynamic visual cues present in videos. This paper addresses the limitation by proposing a one-shot learning framework that enables subject-aware question answering from a single video for each subject.

The paper introduces an automated augmentation pipeline that synthesizes identity-preserving positive samples and retrieves hard negatives from existing video corpora, generating a diverse training dataset with four QA types. It proposes a ReLU Routing MoH attention mechanism, alongside two novel objectives: Smooth Proximity Regularization and Head Activation Enhancement. The paper presents a two-stage training strategy, transitioning from image pre-training to video fine-tuning. The model is evaluated on diverse datasets, demonstrating its superiority in personalized feature understanding.

Key Takeaways#

Why does it matter?#

This paper is important for researchers as it introduces a novel framework for personalized video understanding. It addresses the limitations of current ViLLMs and opens new avenues for research in identity-aware video analysis and personalized AI applications. The publicly released dataset facilitates further research in this area.

Visual Insights#

🔼 Figure 1 showcases PVChat’s capacity for one-shot learning. Two example queries are presented. Each query involves a video featuring specific individuals (

and in the examples). PVChat successfully answers questions requiring personalized information (understanding of the specific individuals’ actions and activities) gleaned from a single reference video. In contrast, other state-of-the-art models (VideoLLaMA2 and InternVideo2) fail to accurately answer these personalized questions, highlighting PVChat’s unique capabilities in personalized video comprehension. read the caption

Figure 1: Examples of PVChat’s ability with one-shot learning (e.g.,and ). PVChat can answer questions about the personalized information correctly while other models [5, 50] fail.

| Model Type | Acc | BLEU | BS | ES | DC |

|---|---|---|---|---|---|

| InternVideo2 [50] | 0.342 | 0.046 | 0.875 | 3.041 | 1.812 |

| VideoLLaMA2 [5] | 0.470 | 0.082 | 0.890 | 3.012 | 3.301 |

| PVChat (Ours) | 0.901 | 0.562 | 0.952 | 4.940 | 4.201 |

🔼 This table presents a quantitative comparison of the proposed PVChat model against two state-of-the-art (SOTA) video large language models (ViLLMs): InternVideo2 and VideoLLaMA2. The comparison is based on five evaluation metrics: Accuracy (Acc), Bilingual Evaluation Understudy (BLEU), BERTscore (BS), Entity Specificity (ES), and Descriptive Completeness (DC). Higher scores indicate better performance. The results demonstrate that PVChat significantly outperforms both SOTA models across all five metrics, highlighting its superior performance in personalized video understanding.

read the caption

Table 1: Quantitative evaluation of our method against state-of-the-art methods [50, 5]. Compared with these SOTA models, our model exhibits superior performance across five metrics.

In-depth insights#

One-Shot ViLLM#

The concept of a ‘One-Shot ViLLM’ is intriguing, suggesting the ability to personalize video understanding with minimal data. Such a model would need to efficiently extract and encode subject-specific features from a single video, differentiating them from general knowledge. Challenges include overfitting to the limited data, robustly handling variations in appearance and context, and generalizing to novel situations. Success would hinge on sophisticated techniques like meta-learning, few-shot adaptation, or generative augmentation to create diverse training examples from the single reference video. This contrasts standard ViLLMs that rely on massive datasets and struggle with personalized scenarios that require distinguishing specific individuals. A One-Shot ViLLM has potential for applications in healthcare and smart homes.

ReMoH Attention#

Based on the context, ReMoH attention likely refers to a novel attention mechanism that leverages a ReLU Routing Mixture-of-Heads approach. This suggests a departure from traditional softmax-based or top-k head selection methods, opting instead for a ReLU-driven dynamic routing strategy. The core idea is to enhance the model’s ability to learn domain-specific information, which in this case, focuses on personalized video understanding with limited data. This ReLU router is designed to offer greater flexibility and differentiability in the head selection process, aiming to improve learning and reduce computational redundancy by enabling the model to selectively activate specific “expert” heads relevant to the input. This approach probably involves using a ReLU function to modulate the output of different expert heads, allowing for smoother and more scalable training compared to traditional MoH implementations. The method balances attention heads, mitigating gradient issues.

Video Augment#

While ‘Video Augment’ isn’t explicitly mentioned, the paper heavily focuses on data augmentation techniques, crucial for one-shot learning in video understanding. The core idea revolves around synthesizing personalized video data to overcome the scarcity of individual-specific video content. They employ a pipeline for generating identity-preserving positive samples using techniques like facial attribute extraction, demographic categorization, and high-fidelity video synthesis with tools like ConsisID and PhotoMaker. Crucially, they also retrieve hard negative samples with similar faces to enhance discrimination. This involves facial retrieval from large datasets like Laion-Face-5B and CelebV-HQ, followed by video synthesis. This addresses a critical limitation: the tendency of models to focus solely on positives, resulting in poor generalization and difficulty in distinguishing individuals. The generation of diverse video clips with varying scenes, actions, and contexts, all while maintaining identity consistency, is essential for training a robust personalized ViLLM. By generating QA pairs, the augmented video data further enhanced personalized feature learning. This synthetic data generation helps the model learn individual characteristics and perform subject-specific question answering.

Dataset Diversity#

Based on the supplementary material, the dataset appears to cover a range of scenarios, including Friends(6), Good Doctor(5), Ne Zha(2), doctor(3), patient(3), Big Bang(6)), suggesting an attempt to incorporate diversity in terms of character types, media formats (TV series, anime), and possibly even levels of realism. This variability is crucial for training a model to generalize well and avoid biases toward specific contexts. Moreover, it’s implied that the prompts used for data augmentation are designed to account for different ages and genders. This attention to demographic representation is a positive step towards creating a more inclusive and unbiased personalized video chat system, ensuring it performs reliably across various user demographics. The variety in prompts, spanning different scenarios and demographic factors, helps the model learn more robust associations between visual cues and personalized information, ultimately leading to improved question-answering performance and a better user experience. The detail is helpful, however the exact details are still vague.

Personalized QA#

Personalized Question Answering (QA) is a challenging task that requires understanding the context of a question and the individual it is referring to. This goes beyond traditional QA, which focuses on general knowledge. In personalized QA, the system must be able to access and reason about specific information related to a particular person. This requires the ability to identify the individual, understand their preferences, and use this information to answer the question accurately. This task is particularly relevant in scenarios such as personalized assistants, healthcare, and education, where the ability to tailor responses to individual needs is crucial. Personalized QA is a complex problem that requires a combination of techniques, including natural language processing, knowledge representation, and reasoning. Developing effective personalized QA systems is essential for building intelligent and helpful AI applications that can truly understand and respond to individual needs, going far beyond what generic models can deliver. One-shot learning is very relevant for this QA, since obtaining many data for a person may violate privacy issues.

More visual insights#

More on figures

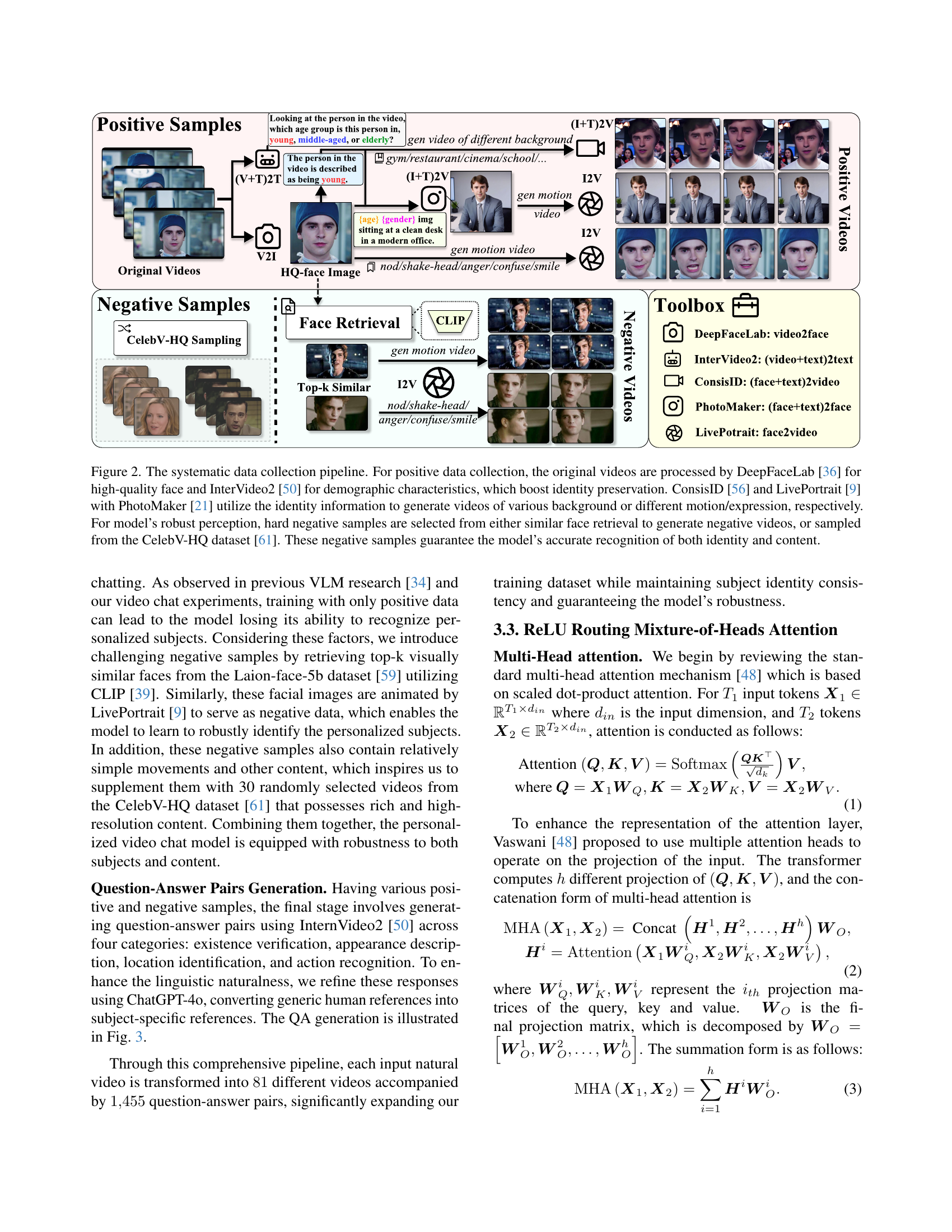

🔼 This figure illustrates the process of data augmentation for training a personalized video chat model. Starting with original videos, high-quality facial images are extracted using DeepFaceLab. InternVideo2 analyzes these images to determine demographic information (age, gender, etc.), which is crucial for identity preservation. This demographic information, along with the extracted face, is then used by ConsisID and PhotoMaker to synthesize new videos with the same identity but different backgrounds and actions (positive samples). Hard negative samples are generated by retrieving similar faces from Laion-Face-5B and CelebV-HQ, creating a diverse dataset with varying visual similarities to the positive samples. This ensures the model learns to distinguish between genuine and similar-looking individuals, improving accuracy and robustness.

read the caption

Figure 2: The systematic data collection pipeline. For positive data collection, the original videos are processed by DeepFaceLab [36] for high-quality face and InterVideo2 [50] for demographic characteristics, which boost identity preservation. ConsisID [56] and LivePortrait [9] with PhotoMaker [21] utilize the identity information to generate videos of various background or different motion/expression, respectively. For model’s robust perception, hard negative samples are selected from either similar face retrieval to generate negative videos, or sampled from the CelebV-HQ dataset [61]. These negative samples guarantee the model’s accurate recognition of both identity and content.

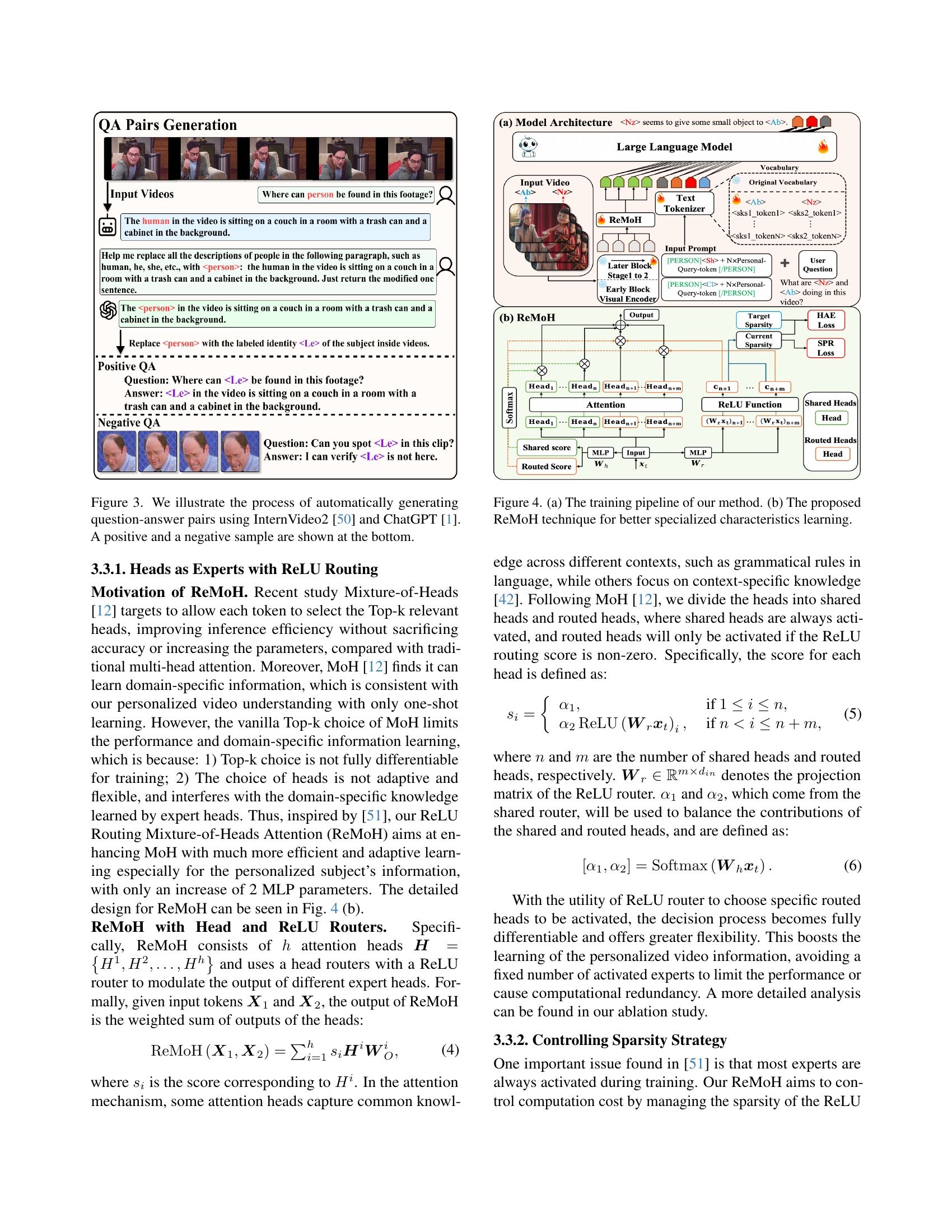

🔼 This figure details the automated pipeline for generating question-answer pairs used in training the PVChat model. InternVideo2 [50], a video question answering model, initially generates the question-answer pairs. ChatGPT [1] then refines these pairs, ensuring natural language and consistency. The bottom of the figure shows examples of both a positive (correctly answered) and a negative (incorrectly answered) question-answer pair, illustrating the types of data used in model training.

read the caption

Figure 3: We illustrate the process of automatically generating question-answer pairs using InternVideo2 [50] and ChatGPT [1]. A positive and a negative sample are shown at the bottom.

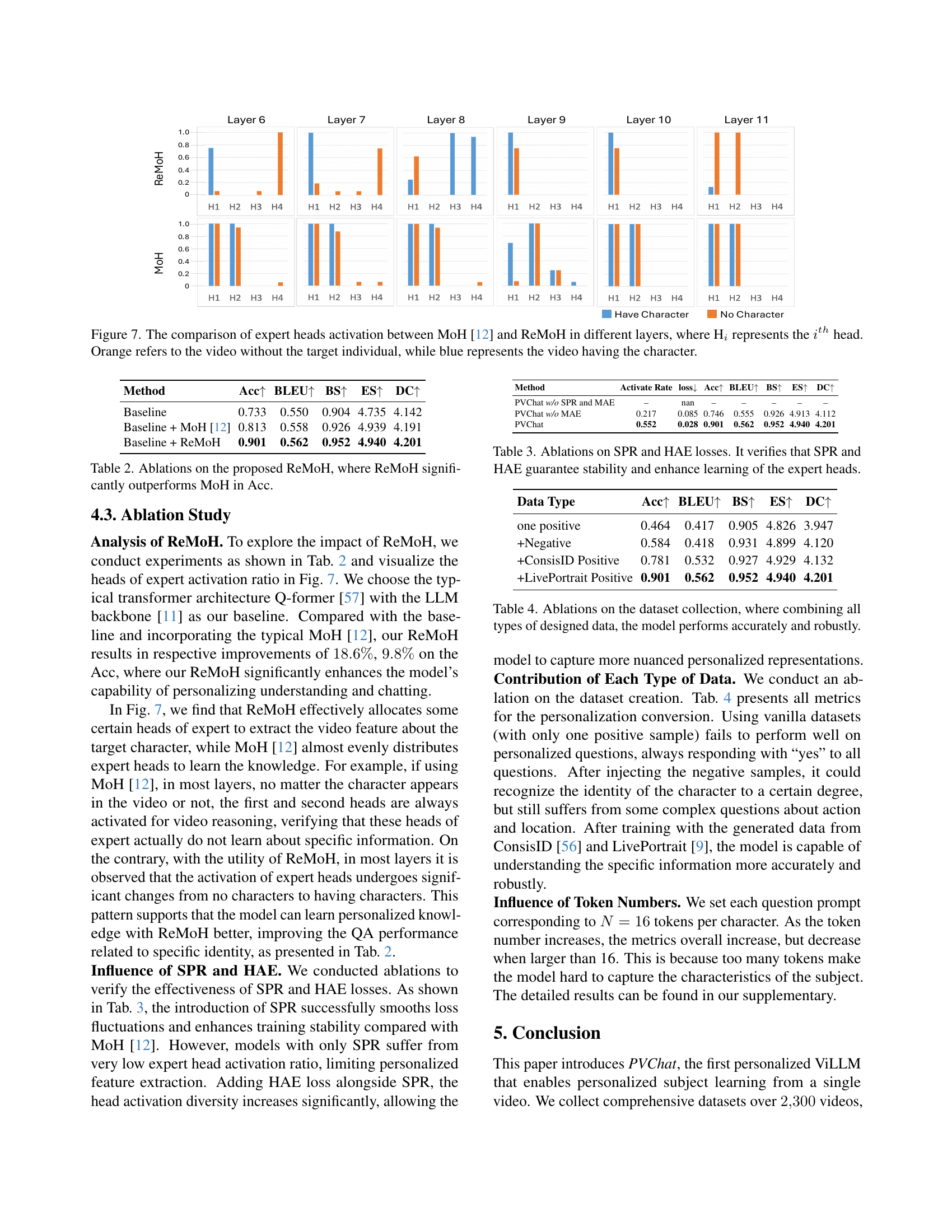

🔼 Figure 4 illustrates two key aspects of the PVChat model. (a) presents the overall training pipeline, showcasing a two-stage process: initial image-based training for static feature learning followed by video-based fine-tuning to incorporate dynamic aspects and refine subject-specific understanding. (b) details the ReLU Routing Mixture-of-Heads (ReMoH) attention mechanism, a core component of the model designed for efficient and adaptive learning of personalized features. ReMoH is depicted as a routing system that dynamically selects relevant attention heads, enhancing model performance and understanding of individual characteristics.

read the caption

Figure 4: (a) The training pipeline of our method. (b) The proposed ReMoH technique for better specialized characteristics learning.

🔼 Figure 5 presents a comparison of PVChat’s performance against two other state-of-the-art video LLMs (InternVideo2 and VideoLLaMA2) on personalized video question answering. The figure showcases two examples. In the left example, PVChat correctly identifies and describes a person’s condition from a single video, while the other models fail. The right example demonstrates PVChat’s ability to answer questions about two individuals in a scene, achieving success where the other models fail to even detect the presence of both individuals. This highlights PVChat’s superior ability to learn personalized characteristics from only a single reference video.

read the caption

Figure 5: Examples of PVChat’s ability with a learned video (e.g., a man namedand another man named ). PVChat can recognize and answer questions about the personalized concept in various scenarios, such as medical scenarios (left) and TV series (right).

🔼 This figure illustrates the hierarchical structure of the prompt library used in the PVChat model. The library is organized into four levels: gender, age, scenario, and specific descriptions. Different prompts are generated based on the specific combination of these four factors to ensure that the model receives detailed and contextually relevant information about the target subject in each video. This approach allows for diverse and accurate training data, especially important for personalized video understanding.

read the caption

Figure 6: The hierarchical structure of our prompt library, which is carefully divided into four levels, such as gender, age, and scenarios, and provides different descriptions according to the specific subject.

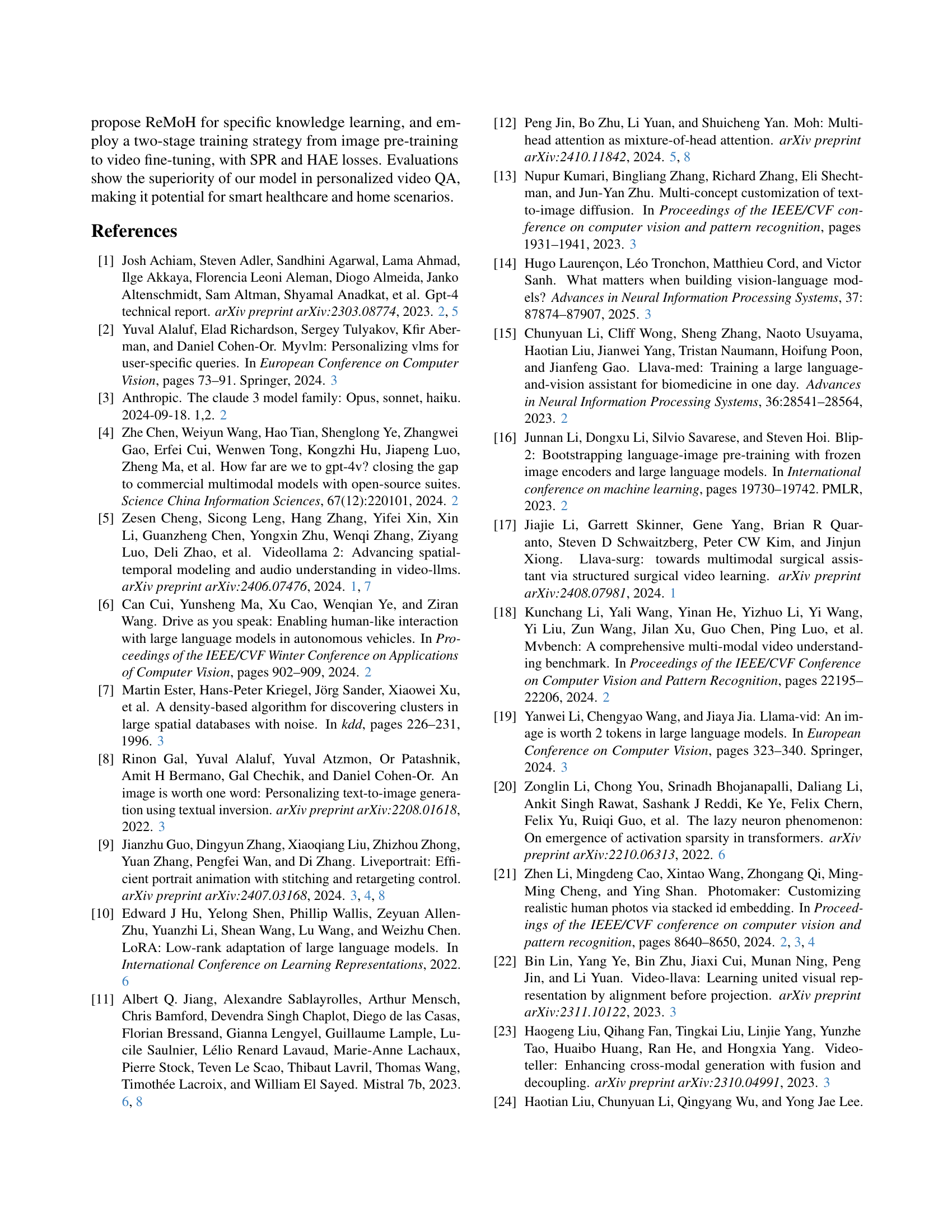

🔼 Figure 7 presents a comparison of expert head activation between the standard Mixture-of-Heads (MoH) attention mechanism and the proposed ReLU Routing Mixture-of-Heads (ReMoH) mechanism. The figure displays the activation of multiple attention heads (Hi) across different layers of a neural network. The activation levels are shown for two conditions: videos containing the target character (blue bars) and videos without the target character (orange bars). This visualization helps to demonstrate how the ReMoH mechanism focuses the attention of specific heads on relevant features related to the target character compared to the MoH approach.

read the caption

Figure 7: The comparison of expert heads activation between MoH [12] and ReMoH in different layers, where HisubscriptH𝑖\text{H}_{i}H start_POSTSUBSCRIPT italic_i end_POSTSUBSCRIPT represents the ithsuperscript𝑖𝑡ℎi^{th}italic_i start_POSTSUPERSCRIPT italic_t italic_h end_POSTSUPERSCRIPT head. Orange refers to the video without the target individual, while blue represents the video having the character.

🔼 This figure demonstrates PVChat’s ability to answer personalized questions about individuals in videos using only one example video of that person. The left side shows a single-person evaluation where the model correctly identifies the activity of person Nz. The right side shows a multi-person evaluation where the model identifies the activity of individuals Nz and Ab. The results show that PVChat outperforms other ViLLMs in personalized video understanding.

read the caption

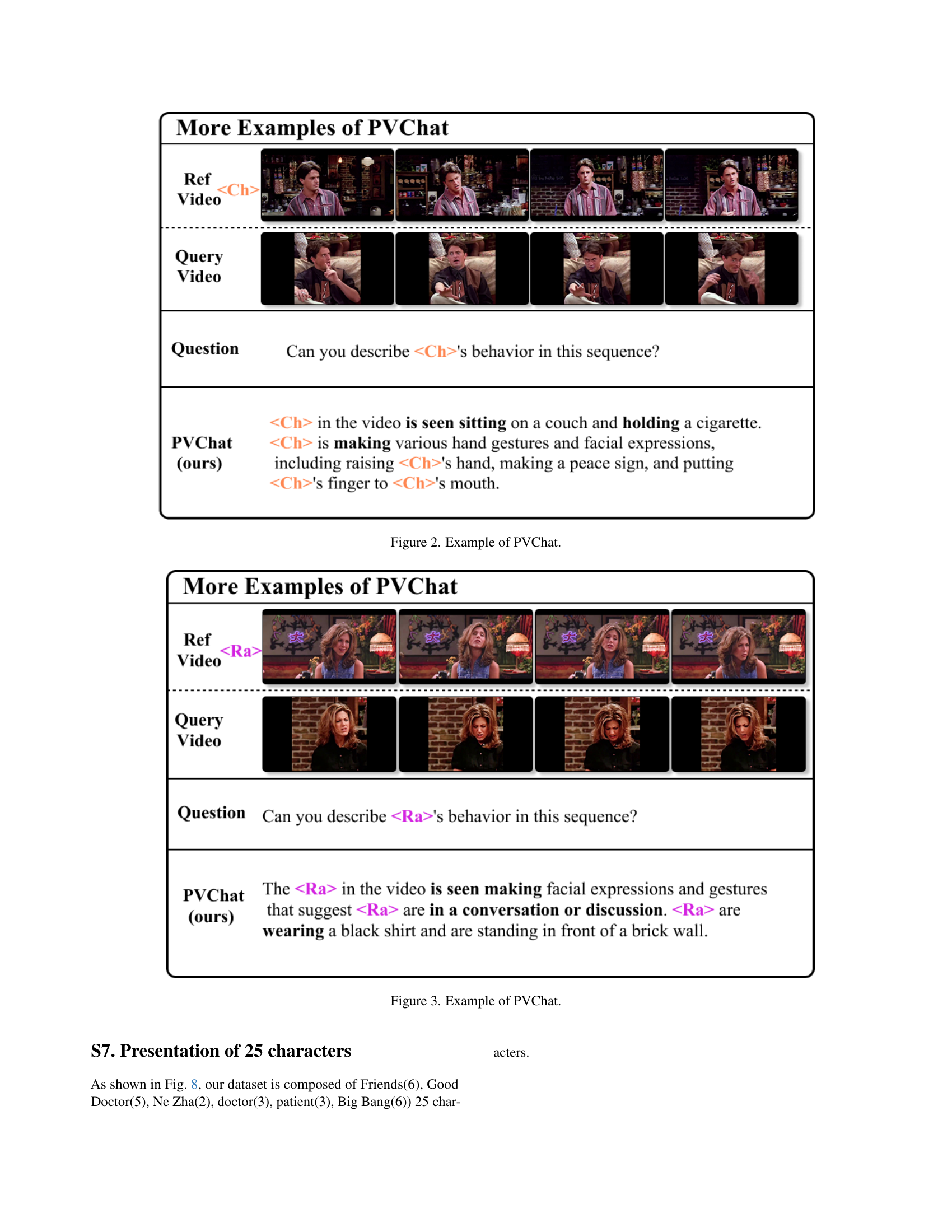

Figure 1: Example of PVChat.

🔼 This figure shows an example of PVChat’s ability to perform personalized video understanding. It demonstrates the system’s capability to answer questions about a specific individual’s actions and behavior in a video clip, even when the video contains multiple individuals or complex actions. The example highlights the model’s ability to go beyond general video understanding and provide precise, identity-aware answers.

read the caption

Figure 2: Example of PVChat.

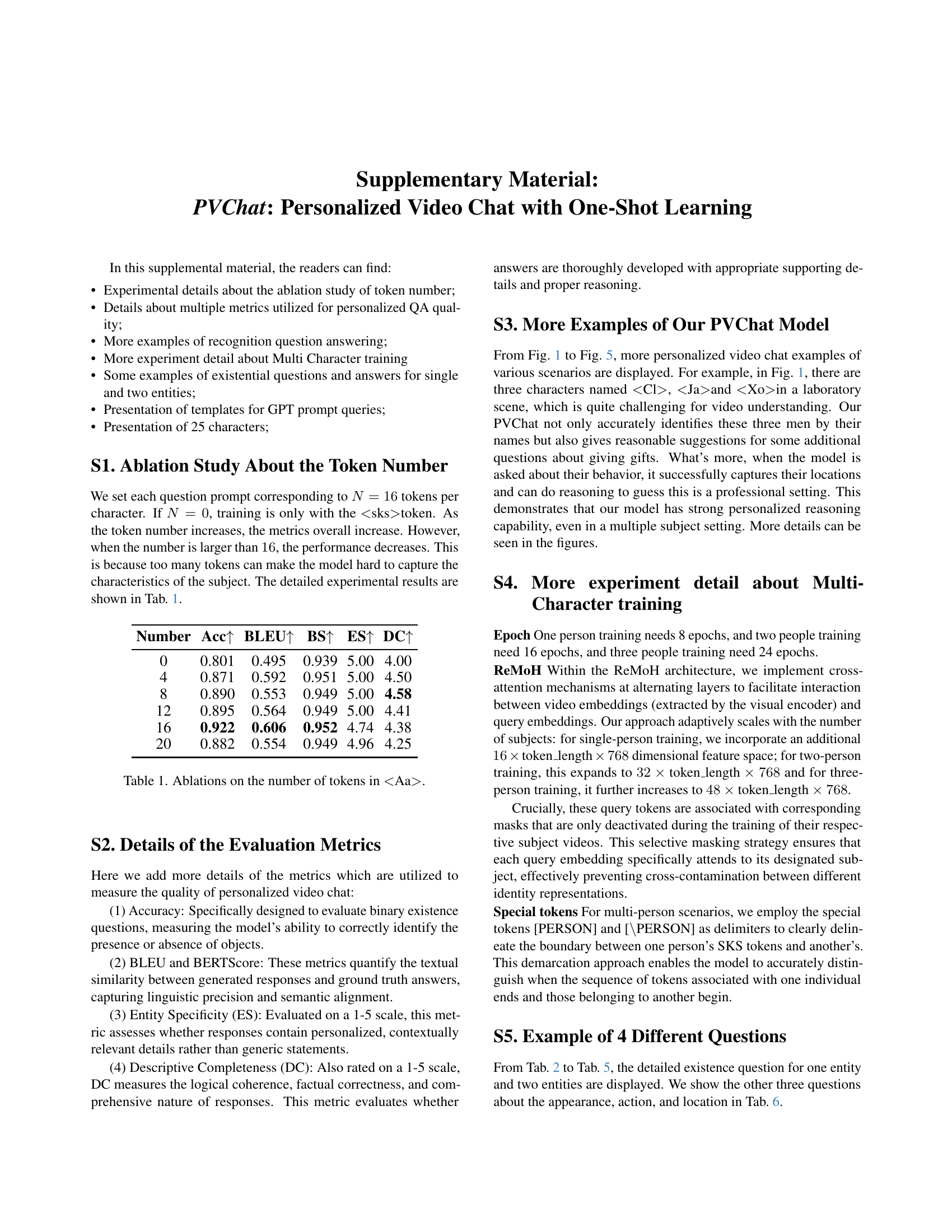

🔼 This figure showcases PVChat’s ability to answer personalized video questions using only one reference video. The example shows three different queries related to a single video involving three characters (

, , ). PVChat successfully identifies and provides information about all three characters, including their potential gift preferences and an in-depth description of their actions in the scene, demonstrating subject-aware question answering capability. The figure highlights the model’s superiority compared to other models that struggle with such personalized video understanding tasks. read the caption

Figure 3: Example of PVChat.

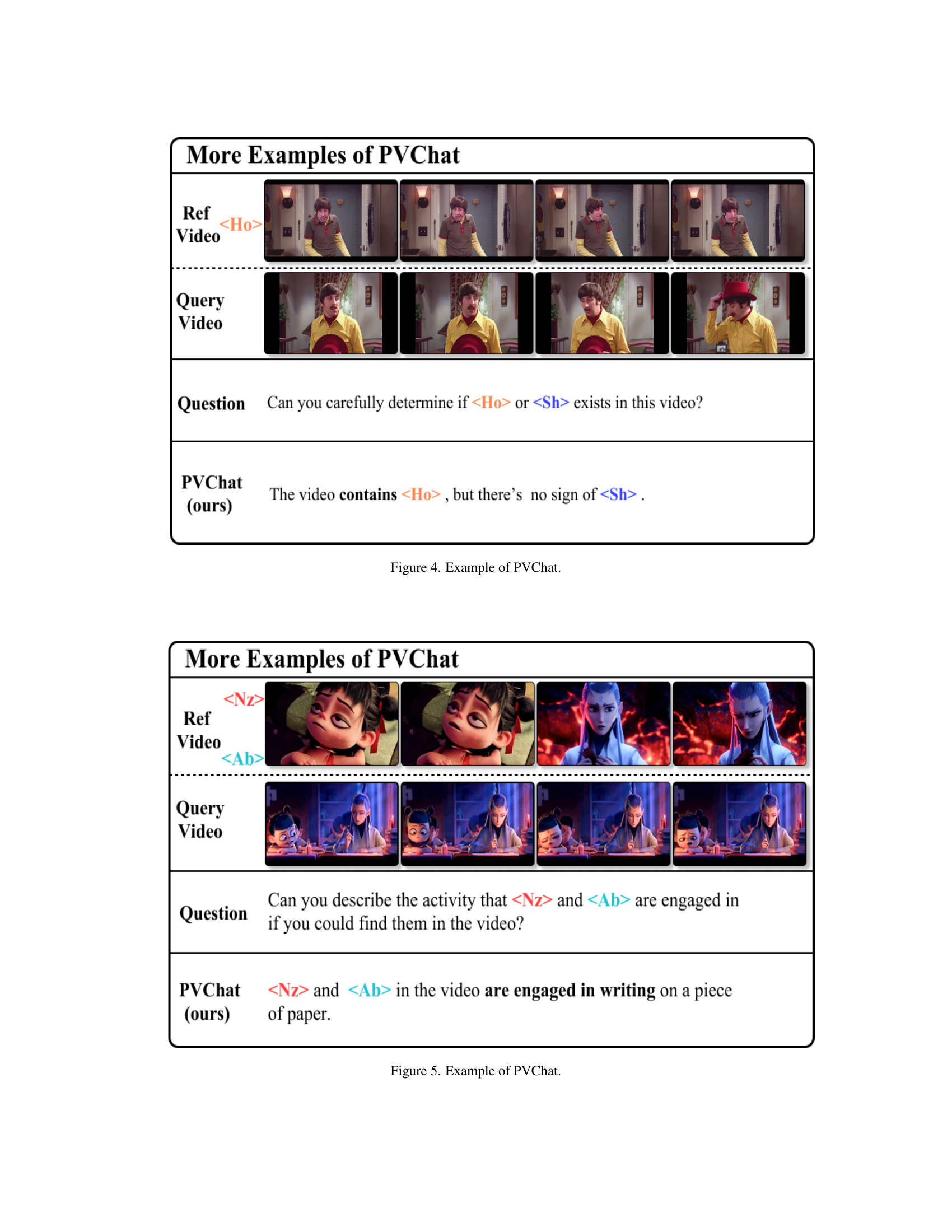

🔼 This figure showcases PVChat’s ability to answer complex questions about individuals in videos, even with only one-shot learning. It presents a comparison between PVChat and other state-of-the-art video LLMs on a question-answering task. In the example shown, PVChat accurately identifies and describes the actions of individuals in the video, while the others struggle.

read the caption

Figure 4: Example of PVChat.

🔼 This figure showcases PVChat’s ability to answer personalized questions about individuals in videos. The example demonstrates correct identification and detailed description of actions and appearances even after only seeing a single reference video of each subject. It highlights the model’s ability to handle both single-person and multi-person scenarios.

read the caption

Figure 5: Example of PVChat.

🔼 This figure shows the prompts used in the data augmentation pipeline. First, a video is fed into InternVideo2, a model that extracts textual descriptions of the video content. The output text is then fed to ChatGPT-4, which refines the text and replaces all pronouns with the designated character identifier. The refined caption is used to help generate training data.

read the caption

Figure 6: Prompt for Internvideo and GPT query

More on tables

| Method | Acc | BLEU | BS | ES | DC |

|---|---|---|---|---|---|

| Baseline | 0.733 | 0.550 | 0.904 | 4.735 | 4.142 |

| Baseline + MoH [12] | 0.813 | 0.558 | 0.926 | 4.939 | 4.191 |

| Baseline + ReMoH | 0.901 | 0.562 | 0.952 | 4.940 | 4.201 |

🔼 This table presents the results of ablation studies comparing the performance of three different models: a baseline model, a model incorporating the Mixture-of-Heads (MoH) attention mechanism, and a model using the proposed ReLU Routing Mixture-of-Heads (ReMoH) attention mechanism. The comparison focuses on the accuracy (Acc) metric, demonstrating a significant improvement achieved by ReMoH compared to both the baseline and the standard MoH approach. The table shows that ReMoH enhances the model’s ability to accurately perform personalized video understanding.

read the caption

Table 2: Ablations on the proposed ReMoH, where ReMoH significantly outperforms MoH in Acc.

| Method | Activate Rate | loss | Acc | BLEU | BS | ES | DC |

|---|---|---|---|---|---|---|---|

| PVChat w/o SPR and MAE | – | nan | – | – | – | – | – |

| PVChat w/o MAE | 0.217 | 0.085 | 0.746 | 0.555 | 0.926 | 4.913 | 4.112 |

| PVChat | 0.552 | 0.028 | 0.901 | 0.562 | 0.952 | 4.940 | 4.201 |

🔼 This ablation study investigates the impact of Smooth Proximity Regularization (SPR) and Head Activation Enhancement (HAE) on the model’s performance. It shows how these loss functions contribute to training stability and the effective learning of expert heads within the ReLU Routing Mixture-of-Heads (ReMoH) attention mechanism. The table likely compares different model variants: a baseline without SPR and HAE, one with only SPR, and the full model with both SPR and HAE. The results demonstrate the effectiveness of the proposed loss functions in achieving better performance metrics.

read the caption

Table 3: Ablations on SPR and HAE losses. It verifies that SPR and HAE guarantee stability and enhance learning of the expert heads.

| Data Type | Acc | BLEU | BS | ES | DC |

|---|---|---|---|---|---|

| one positive | 0.464 | 0.417 | 0.905 | 4.826 | 3.947 |

| +Negative | 0.584 | 0.418 | 0.931 | 4.899 | 4.120 |

| +ConsisID Positive | 0.781 | 0.532 | 0.927 | 4.929 | 4.132 |

| +LivePortrait Positive | 0.901 | 0.562 | 0.952 | 4.940 | 4.201 |

🔼 This ablation study investigates the impact of different data augmentation strategies on the performance of the PVChat model. Specifically, it examines the effect of using only positive samples, positive samples with hard negative samples from CelebV-HQ, positive samples generated using ConsisID, and finally, the combination of all these data types. The results demonstrate that a diverse and comprehensive dataset, combining identity-preserving positive samples with hard negative samples, leads to more robust and accurate performance by the PVChat model. This highlights the crucial role of high-quality data augmentation in achieving effective one-shot learning for personalized video understanding.

read the caption

Table 4: Ablations on the dataset collection, where combining all types of designed data, the model performs accurately and robustly.

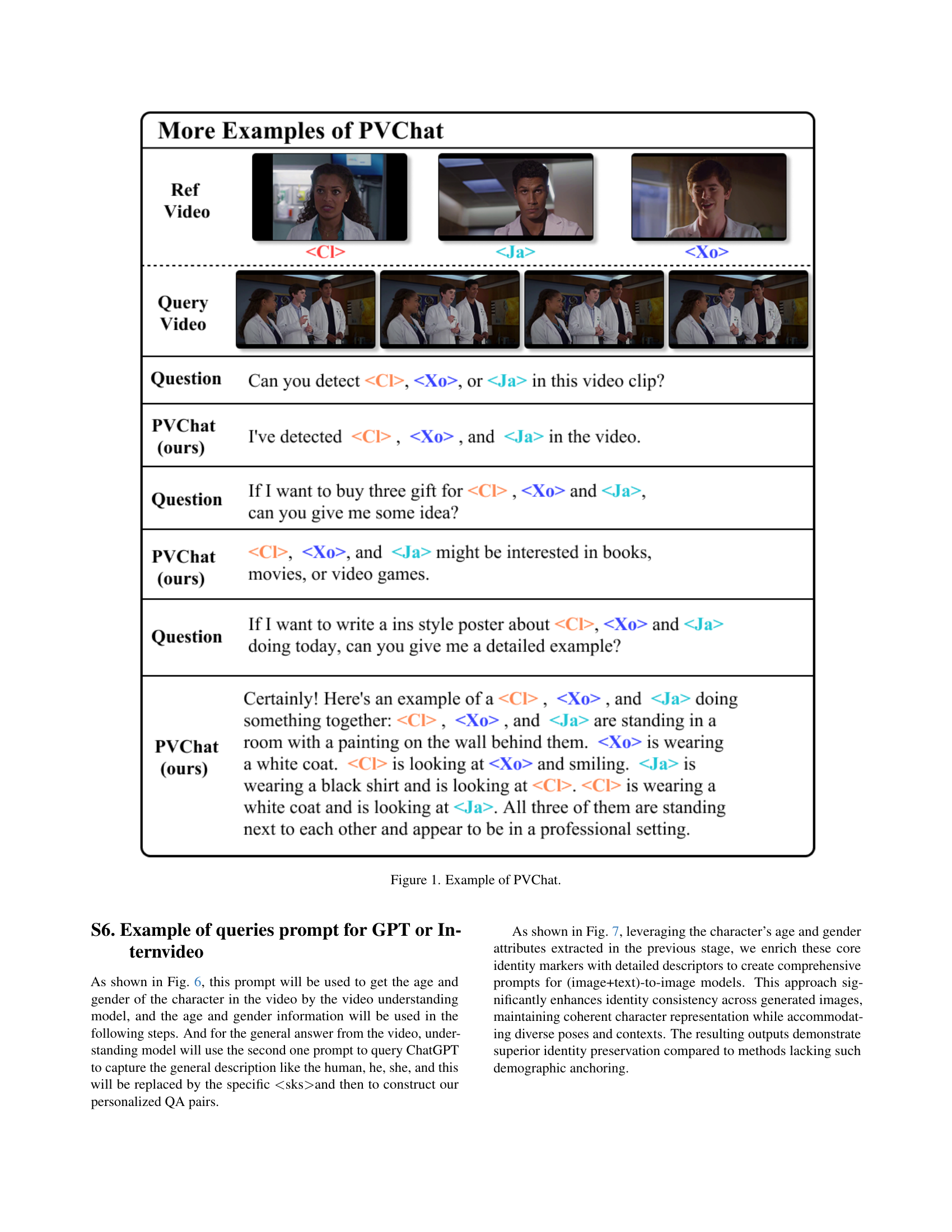

| Number | Acc | BLEU | BS | ES | DC |

|---|---|---|---|---|---|

| 0 | 0.801 | 0.495 | 0.939 | 5.00 | 4.00 |

| 4 | 0.871 | 0.592 | 0.951 | 5.00 | 4.50 |

| 8 | 0.890 | 0.553 | 0.949 | 5.00 | 4.58 |

| 12 | 0.895 | 0.564 | 0.949 | 5.00 | 4.41 |

| 16 | 0.922 | 0.606 | 0.952 | 4.74 | 4.38 |

| 20 | 0.882 | 0.554 | 0.949 | 4.96 | 4.25 |

🔼 This table presents the results of an ablation study investigating the impact of varying the number of tokens used in the prompt for the model. Specifically, it examines the performance of the model (measured by Accuracy, BLEU score, BERTScore, Entity Specificity, and Descriptive Completeness) when processing video data related to subject

using different numbers of tokens (0, 4, 8, 12, 16, 20) in the input prompt. The goal is to determine the optimal number of tokens that balances model performance and efficiency. read the caption

Table 1: Ablations on the number of tokens in.

| Index | Question | Yes Answer |

|---|---|---|

| 1 | Is there any trace of <sks> in this footage? | Yes, <sks> is in this video. |

| 2 | Can you detect <sks> in this video clip? | I can confirm that <sks> appears. |

| 3 | Does <sks> show up anywhere in this recording? | <sks> is present in this recording. |

| 4 | Is <sks> visible in this video? | The video contains <sks>. |

| 5 | Could you verify if <sks> is here? | I’ve identified <sks>. |

| 6 | Does this footage include <sks>? | <sks> is shown in this video. |

| 7 | Can you spot <sks> in this clip? | Yes, <sks> appears here. |

| 8 | Is <sks> present in this video? | I can verify that <sks> is present. |

| 9 | Does <sks> appear in this footage? | The footage shows <sks>. |

| 10 | Can you tell if <sks> is shown here? | <sks> is in this video clip. |

| 11 | Is <sks> in this video segment? | I’ve detected <sks>. |

| 12 | Can you confirm <sks>’s presence? | Yes, <sks> is featured. |

| 13 | Does this clip contain <sks>? | The video includes <sks>. |

| 14 | Is <sks> featured in this recording? | I can see <sks>. |

| 15 | Can you find <sks> in this video? | <sks> is definitely here. |

| 16 | Is <sks> shown in any frame? | Yes, I’ve found <sks>. |

| 17 | Does this video show <sks>? | This video shows <sks>. |

| 18 | Is <sks> visible anywhere? | <sks> is visible. |

| 19 | Can you see <sks>? | Yes, <sks> has been captured. |

| 20 | Is <sks> in this video? | The video clearly shows <sks>. |

| 21 | Can you recognize <sks>? | I’ve spotted <sks>. |

| 22 | Does <sks> appear at all? | <sks> appears in this video. |

| 23 | Is <sks> recorded here? | Yes, this footage contains <sks>. |

| 24 | Can you identify <sks>? | I can recognize <sks>. |

| 25 | Is <sks> present? | <sks> is clearly visible. |

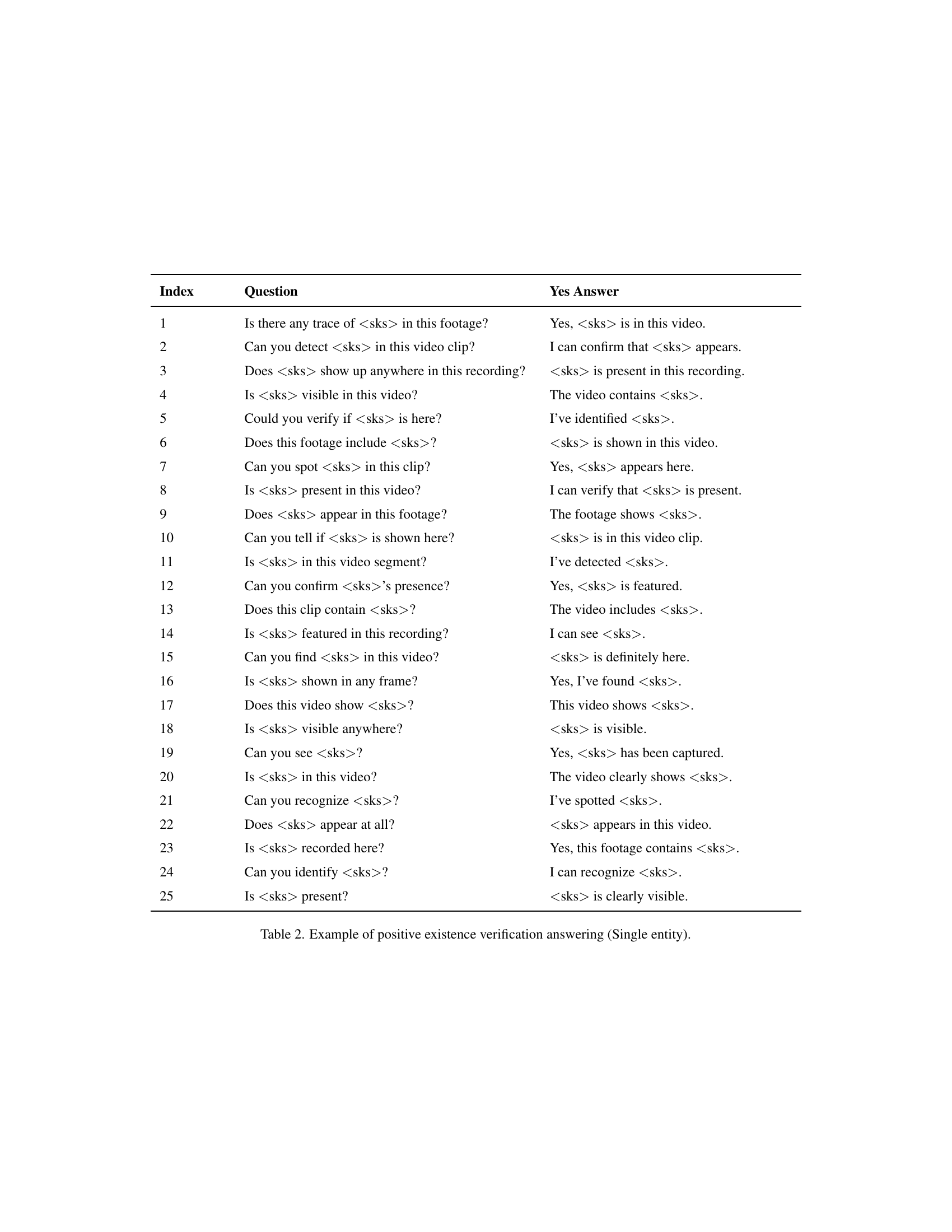

🔼 This table presents 25 example questions and their corresponding positive answers related to verifying the existence of a single entity (person) within a video. Each question is phrased differently to explore variations in natural language, ensuring the model is robust to diverse queries. The answers consistently confirm the presence of the specified entity.

read the caption

Table 2: Example of positive existence verification answering (Single entity).

| Index | Question | No Answer |

|---|---|---|

| 1 | Is there any trace of <sks> in this footage? | No, <sks> is not in this video. |

| 2 | Can you detect <sks> in this video clip? | I cannot detect <sks>. |

| 3 | Does <sks> show up anywhere in this recording? | This video does not contain <sks>. |

| 4 | Is <sks> visible in this video? | <sks> is not shown. |

| 5 | Could you verify if <sks> is here? | There is no sign of <sks>. |

| 6 | Does this footage include <sks>? | <sks> does not appear. |

| 7 | Can you spot <sks> in this clip? | I can confirm <sks> is not here. |

| 8 | Is <sks> present in this video? | The footage does not include <sks>. |

| 9 | Does <sks> appear in this footage? | There’s no evidence of <sks>. |

| 10 | Can you tell if <sks> is shown here? | <sks> is not in this video. |

| 11 | Is <sks> in this video segment? | I’ve checked, <sks> is not present. |

| 12 | Can you confirm <sks>’s presence? | This video does not show <sks>. |

| 13 | Does this clip contain <sks>? | I see no sign of <sks>. |

| 14 | Is <sks> featured in this recording? | <sks> is absent. |

| 15 | Can you find <sks> in this video? | The video does not show <sks>. |

| 16 | Is <sks> shown in any frame? | I cannot find <sks>. |

| 17 | Does this video show <sks>? | <sks> is not visible. |

| 18 | Is <sks> visible anywhere? | I can verify <sks> is not here. |

| 19 | Can you see <sks>? | The video has no <sks>. |

| 20 | Is <sks> in this video? | <sks> does not exist in this video. |

| 21 | Can you recognize <sks>? | I find no trace of <sks>. |

| 22 | Does <sks> appear at all? | This clip does not contain <sks>. |

| 23 | Is <sks> recorded here? | <sks> is not present. |

| 24 | Can you identify <sks>? | I cannot identify <sks>. |

| 25 | Is <sks> present? | There is no <sks> here. |

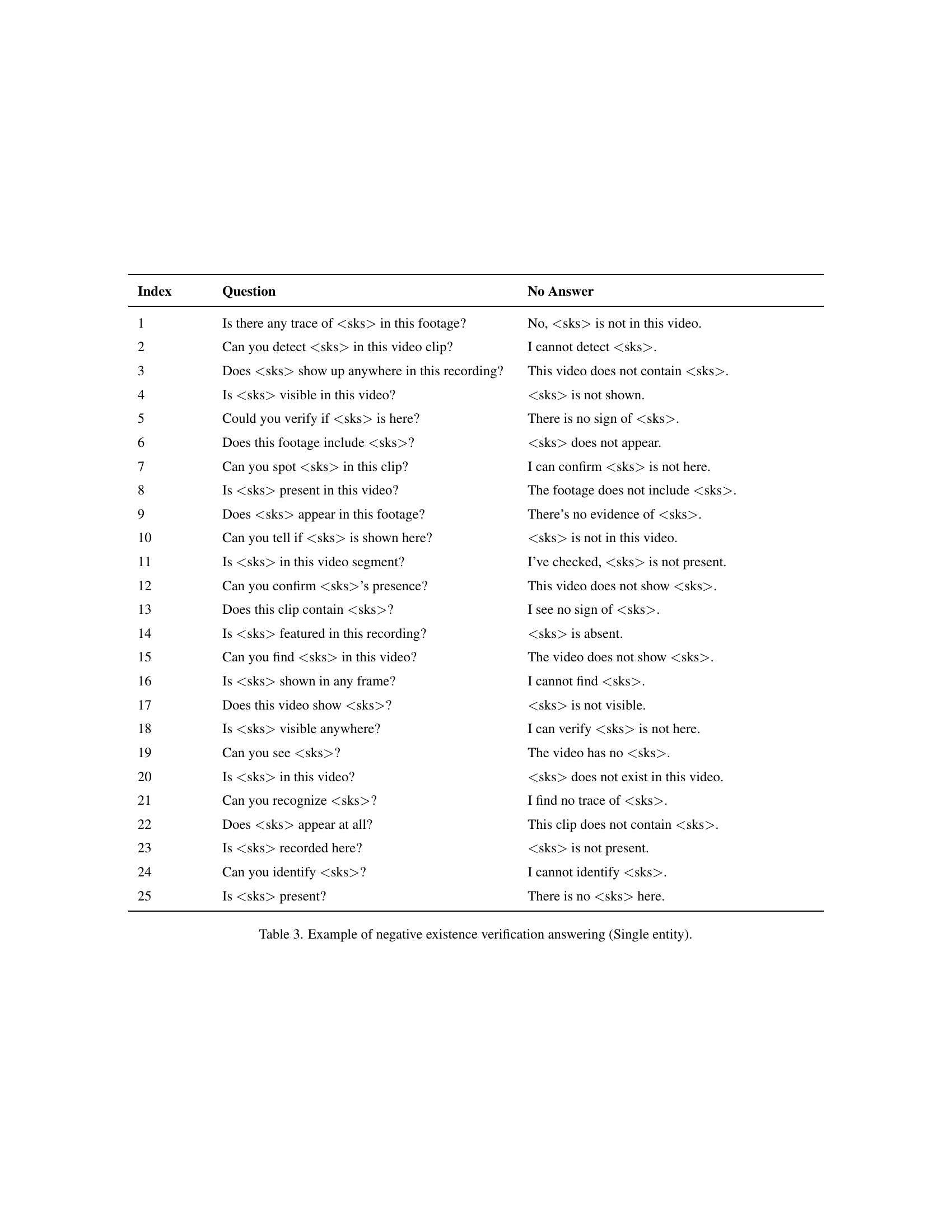

🔼 This table presents 25 examples of questions and answers related to the absence of a single person in a video. Each question asks if a specific individual is present or visible in the video clip. The answers are consistently ’no,’ indicating that the person is not found in the video.

read the caption

Table 3: Example of negative existence verification answering (Single entity).

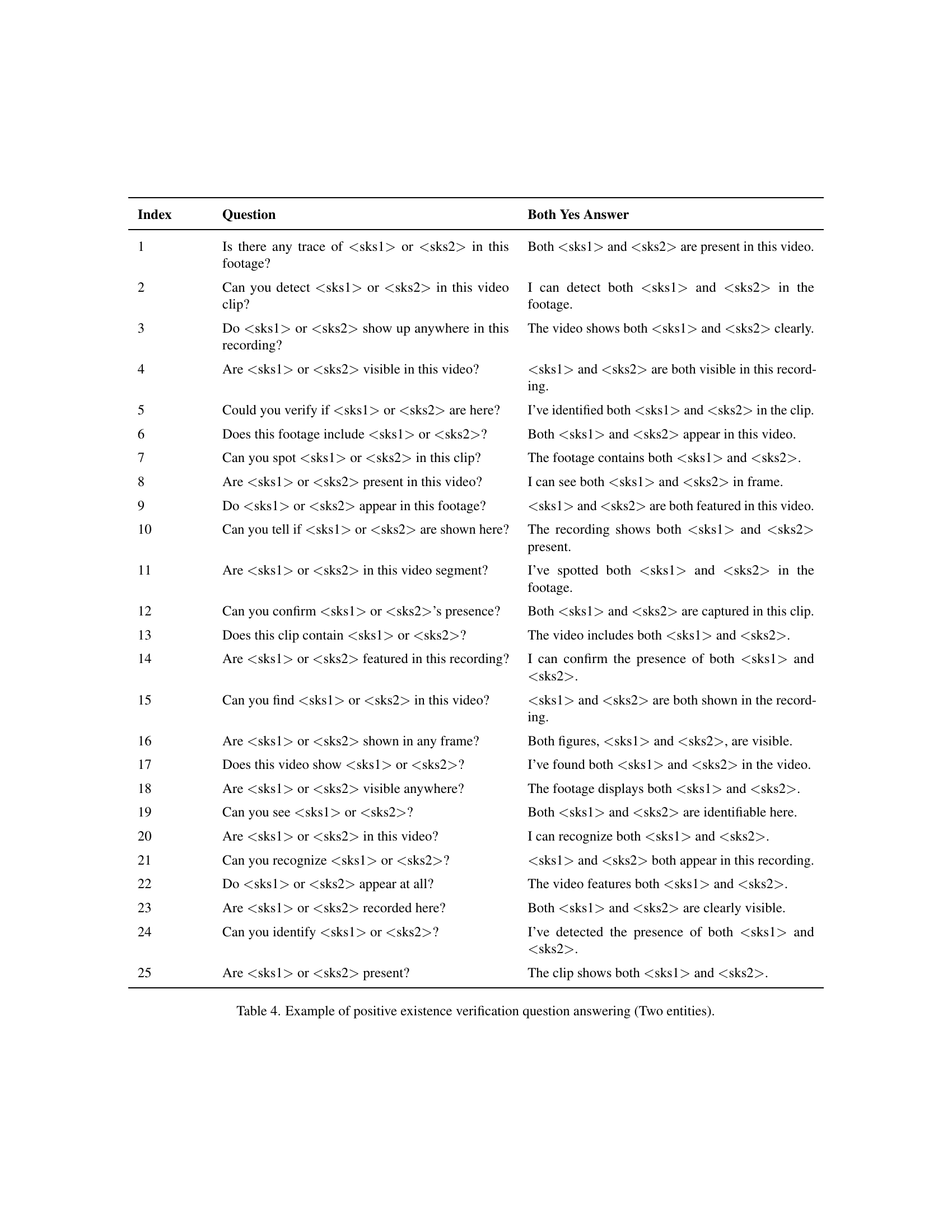

| Index | Question | Both Yes Answer |

|---|---|---|

| 1 | Is there any trace of <sks1> or <sks2> in this footage? | Both <sks1> and <sks2> are present in this video. |

| 2 | Can you detect <sks1> or <sks2> in this video clip? | I can detect both <sks1> and <sks2> in the footage. |

| 3 | Do <sks1> or <sks2> show up anywhere in this recording? | The video shows both <sks1> and <sks2> clearly. |

| 4 | Are <sks1> or <sks2> visible in this video? | <sks1> and <sks2> are both visible in this recording. |

| 5 | Could you verify if <sks1> or <sks2> are here? | I’ve identified both <sks1> and <sks2> in the clip. |

| 6 | Does this footage include <sks1> or <sks2>? | Both <sks1> and <sks2> appear in this video. |

| 7 | Can you spot <sks1> or <sks2> in this clip? | The footage contains both <sks1> and <sks2>. |

| 8 | Are <sks1> or <sks2> present in this video? | I can see both <sks1> and <sks2> in frame. |

| 9 | Do <sks1> or <sks2> appear in this footage? | <sks1> and <sks2> are both featured in this video. |

| 10 | Can you tell if <sks1> or <sks2> are shown here? | The recording shows both <sks1> and <sks2> present. |

| 11 | Are <sks1> or <sks2> in this video segment? | I’ve spotted both <sks1> and <sks2> in the footage. |

| 12 | Can you confirm <sks1> or <sks2>’s presence? | Both <sks1> and <sks2> are captured in this clip. |

| 13 | Does this clip contain <sks1> or <sks2>? | The video includes both <sks1> and <sks2>. |

| 14 | Are <sks1> or <sks2> featured in this recording? | I can confirm the presence of both <sks1> and <sks2>. |

| 15 | Can you find <sks1> or <sks2> in this video? | <sks1> and <sks2> are both shown in the recording. |

| 16 | Are <sks1> or <sks2> shown in any frame? | Both figures, <sks1> and <sks2>, are visible. |

| 17 | Does this video show <sks1> or <sks2>? | I’ve found both <sks1> and <sks2> in the video. |

| 18 | Are <sks1> or <sks2> visible anywhere? | The footage displays both <sks1> and <sks2>. |

| 19 | Can you see <sks1> or <sks2>? | Both <sks1> and <sks2> are identifiable here. |

| 20 | Are <sks1> or <sks2> in this video? | I can recognize both <sks1> and <sks2>. |

| 21 | Can you recognize <sks1> or <sks2>? | <sks1> and <sks2> both appear in this recording. |

| 22 | Do <sks1> or <sks2> appear at all? | The video features both <sks1> and <sks2>. |

| 23 | Are <sks1> or <sks2> recorded here? | Both <sks1> and <sks2> are clearly visible. |

| 24 | Can you identify <sks1> or <sks2>? | I’ve detected the presence of both <sks1> and <sks2>. |

| 25 | Are <sks1> or <sks2> present? | The clip shows both <sks1> and <sks2>. |

🔼 This table presents example question-answer pairs demonstrating positive existence verification in a video with two subjects. The questions aim to confirm the presence of either or both entities within the video. Each row represents a different question phrasing, while the answers confirm the existence of one or both subjects as indicated in the question.

read the caption

Table 4: Example of positive existence verification question answering (Two entities).

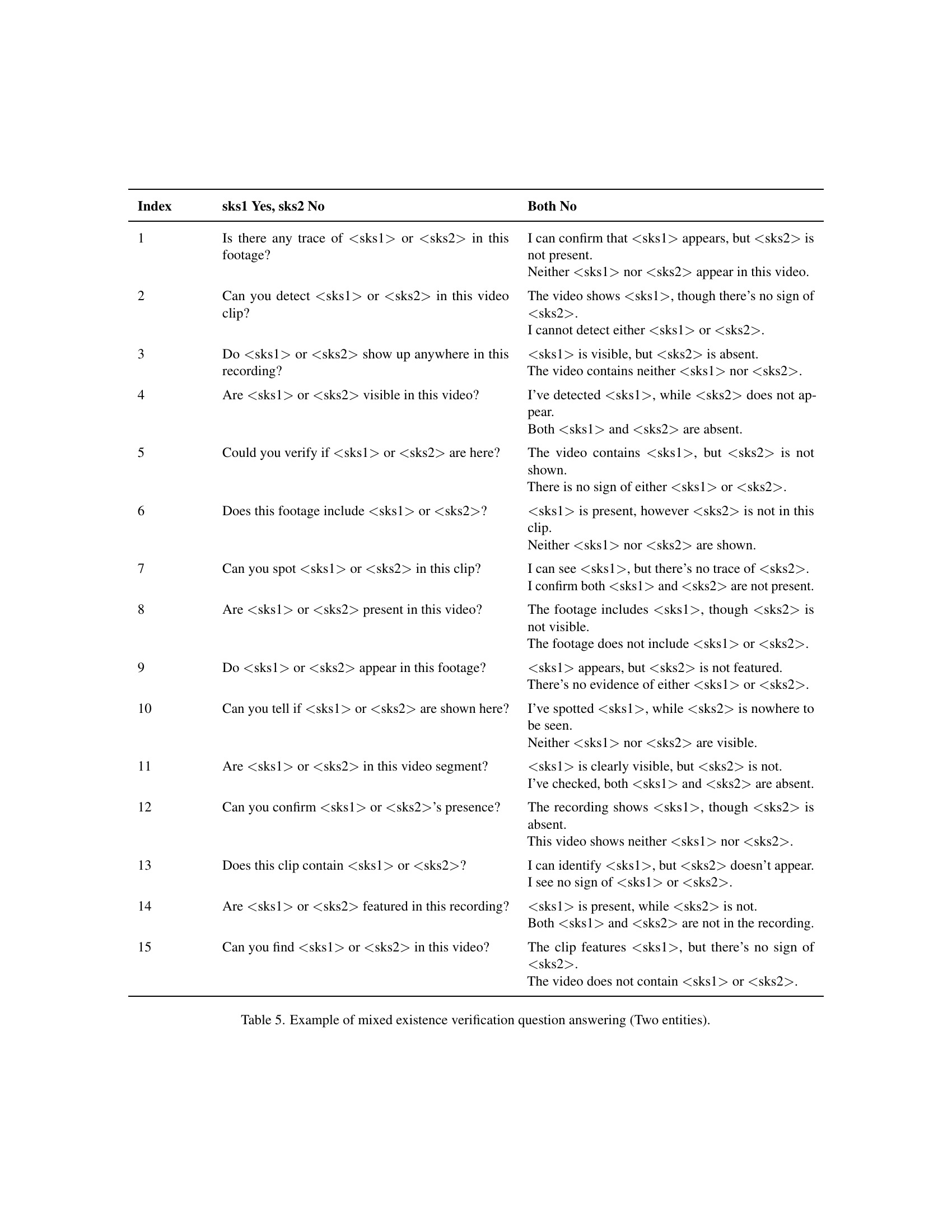

| Index | sks1 Yes, sks2 No | Both No |

|---|---|---|

| 1 | Is there any trace of <sks1> or <sks2> in this footage? | I can confirm that <sks1> appears, but <sks2> is not present. Neither <sks1> nor <sks2> appear in this video. |

| 2 | Can you detect <sks1> or <sks2> in this video clip? | The video shows <sks1>, though there’s no sign of <sks2>. I cannot detect either <sks1> or <sks2>. |

| 3 | Do <sks1> or <sks2> show up anywhere in this recording? | <sks1> is visible, but <sks2> is absent. The video contains neither <sks1> nor <sks2>. |

| 4 | Are <sks1> or <sks2> visible in this video? | I’ve detected <sks1>, while <sks2> does not appear. Both <sks1> and <sks2> are absent. |

| 5 | Could you verify if <sks1> or <sks2> are here? | The video contains <sks1>, but <sks2> is not shown. There is no sign of either <sks1> or <sks2>. |

| 6 | Does this footage include <sks1> or <sks2>? | <sks1> is present, however <sks2> is not in this clip. Neither <sks1> nor <sks2> are shown. |

| 7 | Can you spot <sks1> or <sks2> in this clip? | I can see <sks1>, but there’s no trace of <sks2>. I confirm both <sks1> and <sks2> are not present. |

| 8 | Are <sks1> or <sks2> present in this video? | The footage includes <sks1>, though <sks2> is not visible. The footage does not include <sks1> or <sks2>. |

| 9 | Do <sks1> or <sks2> appear in this footage? | <sks1> appears, but <sks2> is not featured. There’s no evidence of either <sks1> or <sks2>. |

| 10 | Can you tell if <sks1> or <sks2> are shown here? | I’ve spotted <sks1>, while <sks2> is nowhere to be seen. Neither <sks1> nor <sks2> are visible. |

| 11 | Are <sks1> or <sks2> in this video segment? | <sks1> is clearly visible, but <sks2> is not. I’ve checked, both <sks1> and <sks2> are absent. |

| 12 | Can you confirm <sks1> or <sks2>’s presence? | The recording shows <sks1>, though <sks2> is absent. This video shows neither <sks1> nor <sks2>. |

| 13 | Does this clip contain <sks1> or <sks2>? | I can identify <sks1>, but <sks2> doesn’t appear. I see no sign of <sks1> or <sks2>. |

| 14 | Are <sks1> or <sks2> featured in this recording? | <sks1> is present, while <sks2> is not. Both <sks1> and <sks2> are not in the recording. |

| 15 | Can you find <sks1> or <sks2> in this video? | The clip features <sks1>, but there’s no sign of <sks2>. The video does not contain <sks1> or <sks2>. |

🔼 This table presents example questions and answers for a mixed existence verification task in a video involving two entities. The questions aim to determine whether either or both of the two specified entities are present in the video. The answers show various responses, demonstrating situations where one entity is present, both entities are present, or neither entity is present. This illustrates the model’s ability to handle nuanced queries about the existence of multiple individuals in a video.

read the caption

Table 5: Example of mixed existence verification question answering (Two entities).

| Index | Question |

|---|---|

| 1 | What activity is <sks> engaged in during this video? |

| 2 | Could you describe what <sks> is doing in this footage? |

| 3 | What specific actions can you observe <sks> performing in this recording? |

| 4 | What movements or actions does <sks> perform here? |

| 5 | Can you describe <sks>’s behavior in this sequence? |

| 6 | What is <sks> wearing in this video? |

| 7 | Could you describe <sks>’s outfit in this footage? |

| 8 | What color and style of clothing is <sks> dressed in? |

| 9 | How would you describe <sks>’s appearance and attire? |

| 10 | What notable features can you see in <sks>’s clothing? |

| 11 | Where is <sks> positioned in this video? |

| 12 | What color and style of clothing is <sks> dressed in? |

| 13 | Can you describe <sks>’s location relative to others? |

| 14 | Which part of the scene does <sks> appear in? |

| 15 | How does <sks>’s position change throughout the video? |

| 16 | Where can <sks> be found in this footage? |

🔼 This table presents example questions and answers related to negative existence verification in a single-entity scenario. The questions aim to determine whether a specific person, referred to as ‘

’, is present in a video. Each question is phrased differently to explore various ways of asking the same question, and the answers consistently confirm that the individual is not in the video clip. read the caption

Table 6: Example of negative existence verification question answering (Single entity).

Full paper#