TL;DR

Traditional game development is constrained in both creativity and cost: content is predetermined, and producing it requires substantial resources. Current game engines also struggle to deliver personalized content tailored to individual players' preferences. Addressing these issues calls for approaches that reduce costs while enabling diverse, adaptive gaming experiences.

This research introduces Interactive Generative Video (IGV) as the core of Generative Game Engines (GGE), enabling limitless content generation for next-generation gaming. The paper details GGE’s core modules: Generation, Control, Memory, Dynamics, and Intelligence. A hierarchical maturity roadmap (L0-L4) guides its evolution, envisioning a future where AI-powered generative systems reshape how games are made and experienced.

Key Takeaways

Why does it matter?

This paper introduces the Generative Game Engine (GGE), powered by Interactive Generative Video, offering a new paradigm for game development. It could revolutionize content creation by lowering development costs, fostering innovation and opening avenues for AI-driven game experiences and personalized content generation.

Visual Insights

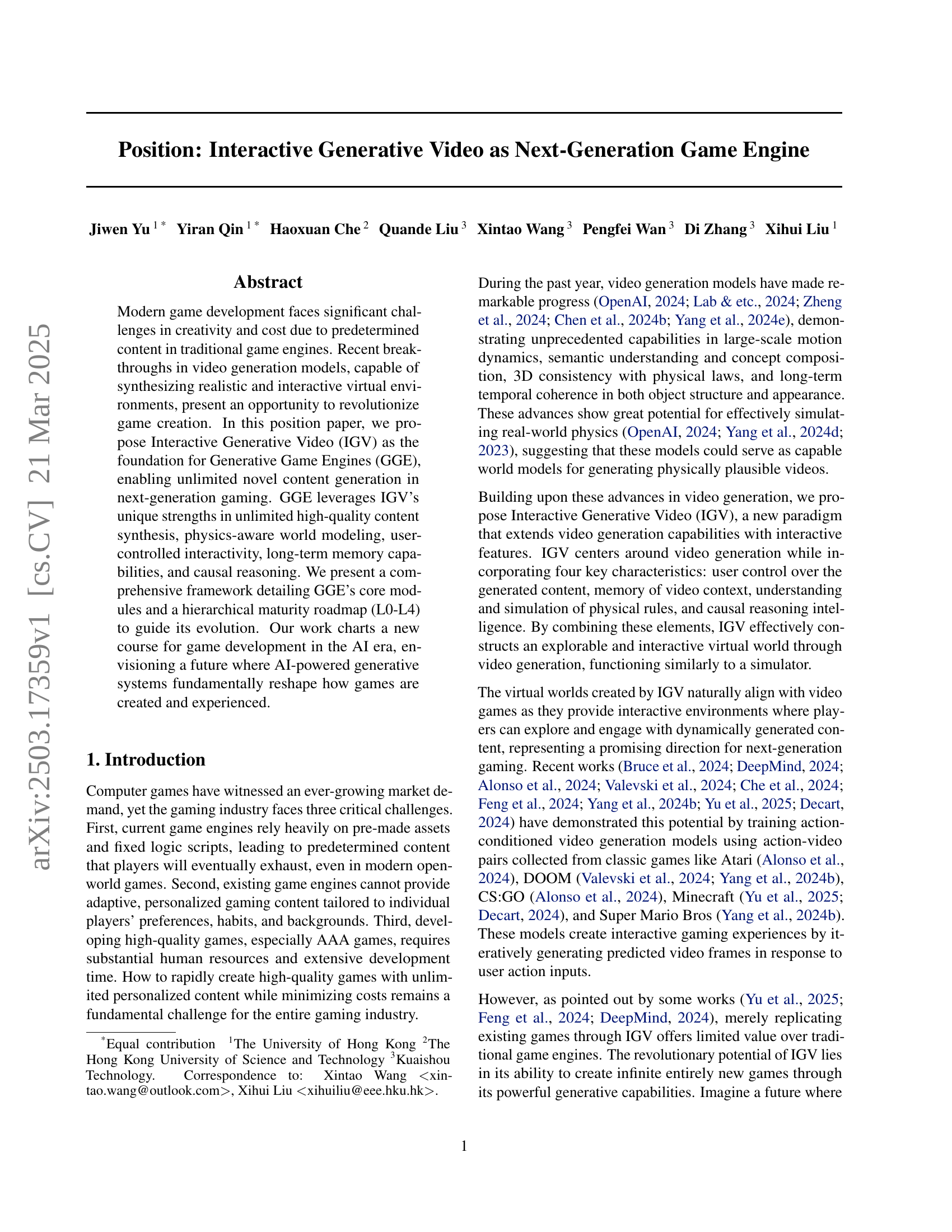

Figure 1 demonstrates GameFactory’s capacity to generalize learned action controls from Minecraft to various open-world settings. The figure showcases examples from the GameFactory homepage depicting diverse environments where the learned control mechanisms successfully enable agent navigation and interaction.

Figure 1: Demonstration of GameFactory (Yu et al., 2025)’s ability to generalize action control capabilities learned from Minecraft data to open-domain scenarios. Examples from its homepage showcase various generalized environments where the learned control mechanisms have been successfully applied.

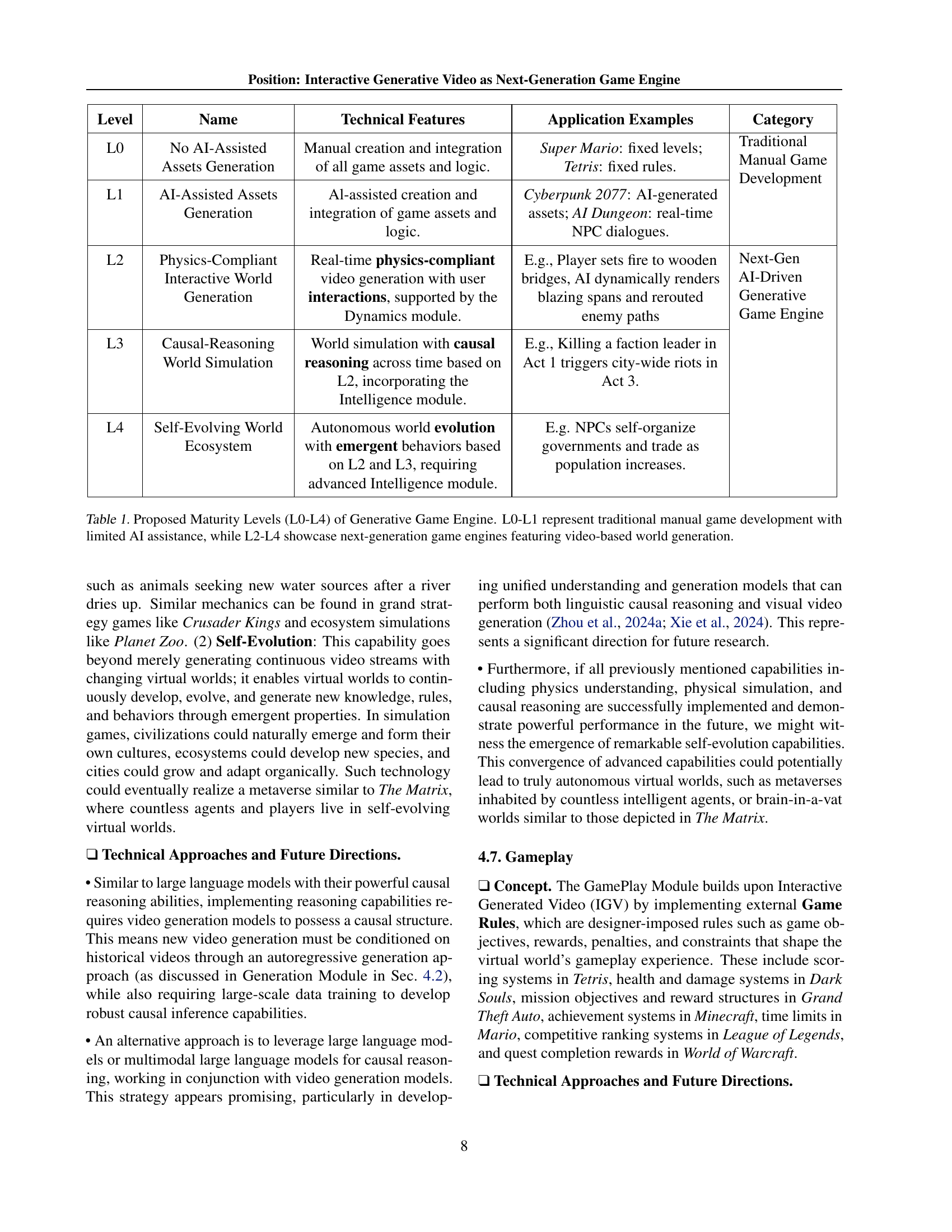

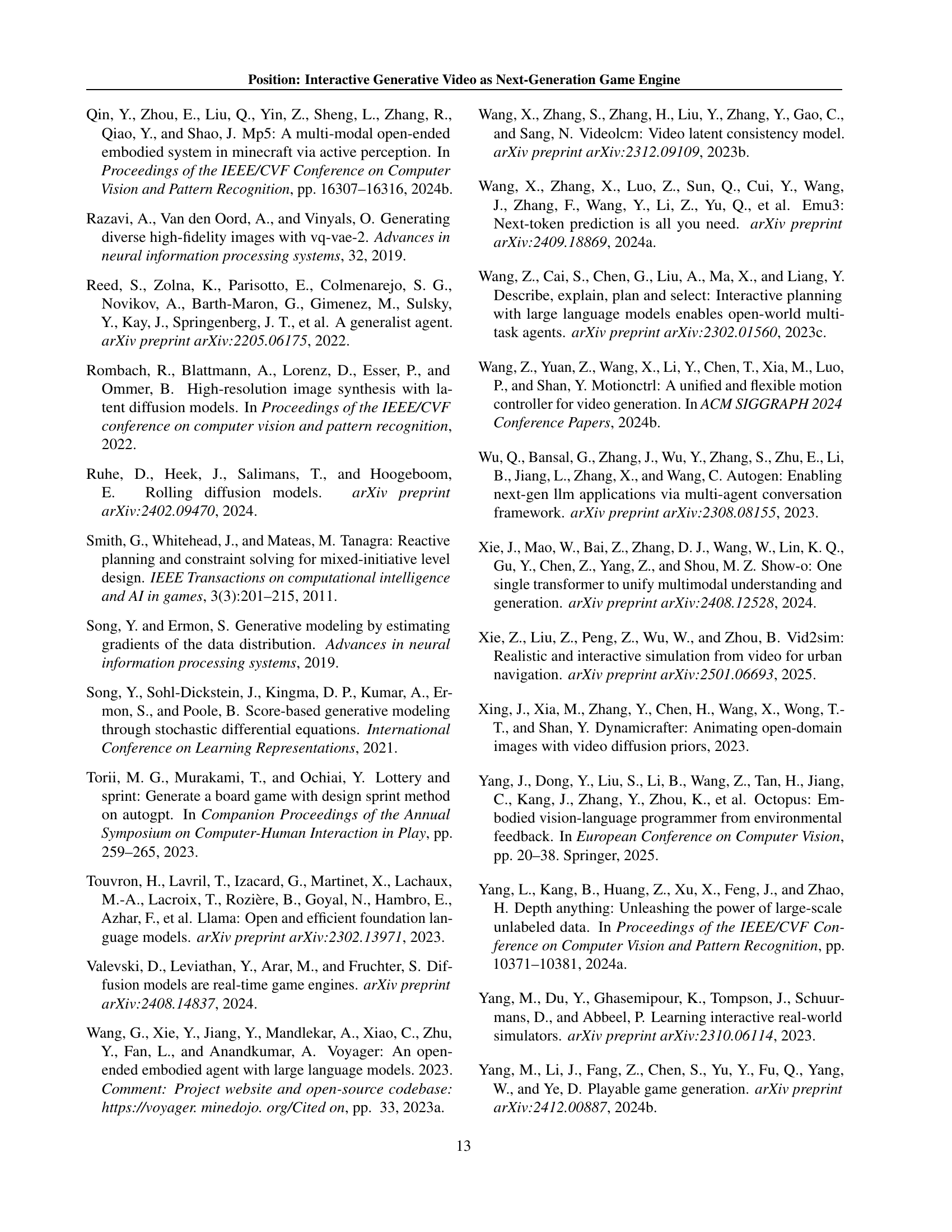

| Level | Name | Technical Features | Application Examples | Category |
|---|---|---|---|---|
| L0 | No AI-Assisted Assets Generation | Manual creation and integration of all game assets and logic. | Super Mario: fixed levels; Tetris: fixed rules. | Traditional Manual Game Development |
| L1 | AI-Assisted Assets Generation | AI-assisted creation and integration of game assets and logic. | Cyberpunk 2077: AI-generated assets; AI Dungeon: real-time NPC dialogues. | Traditional Manual Game Development |
| L2 | Physics-Compliant Interactive World Generation | Real-time physics-compliant video generation with user interactions, supported by the Dynamics module. | E.g., a player sets fire to wooden bridges; the AI dynamically renders blazing spans and rerouted enemy paths. | Next-Gen AI-Driven Generative Game Engine |
| L3 | Causal-Reasoning World Simulation | World simulation with causal reasoning across time based on L2, incorporating the Intelligence module. | E.g., killing a faction leader in Act 1 triggers city-wide riots in Act 3. | Next-Gen AI-Driven Generative Game Engine |
| L4 | Self-Evolving World Ecosystem | Autonomous world evolution with emergent behaviors based on L2 and L3, requiring an advanced Intelligence module. | E.g., NPCs self-organize governments and trade as the population grows. | Next-Gen AI-Driven Generative Game Engine |

This table outlines a five-level maturity model (L0-L4) for Generative Game Engines (GGEs), illustrating the progression from traditional game development to AI-driven video generation. Levels L0 and L1 represent traditional manual game development with minimal AI assistance. Levels L2, L3, and L4 showcase next-generation GGEs that leverage video-based world generation, progressing from physics-compliant worlds to causal reasoning and ultimately self-evolving ecosystems.

Table 1: Proposed Maturity Levels (L0-L4) of Generative Game Engine. L0-L1 represent traditional manual game development with limited AI assistance, while L2-L4 showcase next-generation game engines featuring video-based world generation.

In-depth insights

IGV for GGE Core

Interactive Generative Video (IGV) is well suited to serve as the core of Generative Game Engines (GGE) because it goes beyond conventional video generation: it offers user control, memory of prior context, physics-aware simulation, and causal reasoning. These are exactly the capabilities that dynamic, interactive game environments require. Traditional game engines rely on pre-made assets, which caps the amount and variety of content; IGV instead generates novel content on the fly, in principle without limit. Physics simulation makes interactions within the game world more realistic and immersive, and user control turns passive video into gameplay. Long-term memory and causal reasoning further enable complex narratives and emergent gameplay. Finally, the large body of existing video game footage can serve as training data for these models.
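To make the "interactive" part concrete, the loop below is a minimal sketch of how an IGV model could be driven frame by frame: each new frame is predicted from a bounded window of recent frames plus the player's current action. `toy_generator` is a hypothetical stand-in for a learned video model (it merely shifts pixel values), and `play` and `context_len` are illustrative names, not APIs from the paper.

```python
from collections import deque
from typing import Callable, Deque, List

Frame = List[float]  # stand-in for an image; a real model would use tensors
Generator = Callable[[List[Frame], str], Frame]


def toy_generator(context: List[Frame], action: str) -> Frame:
    """Hypothetical stand-in for a learned video model.

    It "predicts" the next frame by shifting the most recent frame
    by an offset that depends on the player's action.
    """
    offsets = {"left": -1.0, "right": 1.0}
    shift = offsets.get(action, 0.0)  # unknown actions leave the frame unchanged
    return [pixel + shift for pixel in context[-1]]


def play(generator: Generator, first_frame: Frame, actions: List[str],
         context_len: int = 4) -> List[Frame]:
    """Autoregressively generate one frame per player action."""
    # Only the most recent `context_len` frames are fed back to the model,
    # mimicking the limited context window of real video generators.
    context: Deque[Frame] = deque([first_frame], maxlen=context_len)
    frames = [first_frame]
    for action in actions:
        next_frame = generator(list(context), action)
        context.append(next_frame)
        frames.append(next_frame)
    return frames


frames = play(toy_generator, [0.0, 0.0], ["right", "right", "left"])
print(frames[-1])  # [1.0, 1.0]
```

The bounded `deque` is the key design choice here: anything older than the window is forgotten, which is precisely the consistency gap a dedicated Memory module is meant to close.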

Physics Learning

While the paper does not explicitly focus on “physics learning”, its Dynamics module and its discussion of physics-aware world modeling are highly relevant. If Interactive Generative Video (IGV) can simulate physical laws and interactions, it opens prospects for game-based physics education: players could learn about gravity, momentum, and collisions through interactive gameplay in a dynamically generated environment. The physics tuning described for the Dynamics module could also let learners vary physical constants and observe their effects. By visualizing complex physical phenomena, IGV could offer a more intuitive and engaging approach than traditional teaching methods. Realizing this potential depends on generating physical simulations that are both accurate and diverse. Evaluation benchmarks for the physical accuracy of generated videos, as the paper suggests, would also be essential for establishing IGV's reliability as a learning tool, and the connection between causal reasoning and physics needs to be strengthened.
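One simple way a physical-accuracy benchmark could score a generated clip is to track an object dropped from rest and compare its per-frame height against ideal free fall, y(t) = y0 - g t^2 / 2. The sketch below assumes the heights have already been extracted in metres by some tracker (outside its scope); `free_fall_rmse` is a hypothetical helper, not a benchmark defined in the paper.

```python
import math
from typing import Sequence


def free_fall_rmse(heights: Sequence[float], fps: float, g: float = 9.81) -> float:
    """Root-mean-square error between tracked heights and ideal free fall.

    Assumes heights[0] is the release height, the object starts at rest,
    and frames are evenly spaced at `fps` frames per second.
    """
    if not heights:
        raise ValueError("need at least one tracked height")
    y0 = heights[0]
    squared_errors = []
    for i, y in enumerate(heights):
        t = i / fps
        expected = y0 - 0.5 * g * t * t  # y(t) = y0 - g t^2 / 2
        squared_errors.append((y - expected) ** 2)
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# A clip whose object falls as if gravity were only 4 m/s^2 scores poorly.
floaty = [10 - 0.5 * 4.0 * (i / 10) ** 2 for i in range(10)]
print(round(free_fall_rmse(floaty, fps=10), 2))
```

A lower score means more physically plausible motion; a full benchmark would need many object types and interactions, not just a single falling body.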

GGE Framework

The proposed GGE framework consists of five core modules. Generation provides the fundamental video synthesis, while Control handles user interaction. Memory preserves historical context for consistency, and Dynamics models physical rules. The Intelligence module adds causal reasoning, so the world behaves plausibly over time. In addition, GGE includes a Gameplay module that implements external rules such as designer-imposed rewards or penalties, objectives, and constraints. Together, these components create dynamic and immersive gaming experiences.
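The division of labour among the modules can be sketched as a single game tick that passes a shared world state through each of them in turn. Everything here is a hypothetical toy: `WorldState`, the module functions, and the `GOAL` objective are illustrative names; the "physics" is one line of kinematics; Generation renders a text string rather than a video frame; and Intelligence is omitted for brevity. In an actual GGE these roles would be filled by learned models rather than hand-written rules.

```python
from dataclasses import dataclass, field
from typing import List

GOAL = 3.0  # hypothetical designer-imposed objective (Gameplay module)


@dataclass
class WorldState:
    position: float = 0.0
    velocity: float = 0.0
    history: List[str] = field(default_factory=list)  # Memory: past actions
    reward: int = 0


def control(state: WorldState, action: str) -> None:
    # Control: translate a player input into a movement command.
    state.velocity = {"forward": 1.0, "back": -1.0}.get(action, 0.0)


def dynamics(state: WorldState, dt: float = 1.0) -> None:
    # Dynamics: apply a (trivial) physical rule.
    state.position += state.velocity * dt


def memory(state: WorldState, action: str) -> None:
    # Memory: keep the interaction history for later consistency.
    state.history.append(action)


def gameplay(state: WorldState) -> None:
    # Gameplay: external rule that rewards reaching the goal.
    if state.position >= GOAL:
        state.reward = 1


def generation(state: WorldState) -> str:
    # Generation: stand-in renderer producing a text "frame".
    return f"pos={state.position:.1f} reward={state.reward}"


def step(state: WorldState, action: str) -> str:
    """One game tick: Control -> Dynamics -> Memory -> Gameplay -> Generation."""
    control(state, action)
    dynamics(state)
    memory(state, action)
    gameplay(state)
    return generation(state)


state = WorldState()
for action in ["forward", "forward", "forward"]:
    print(step(state, action))
# pos=1.0 reward=0
# pos=2.0 reward=0
# pos=3.0 reward=1
```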

GGE Evolution

The evolution of Generative Game Engines (GGEs) is a staged progression toward increasingly sophisticated and autonomous game creation. The initial stage (L0-L1) mirrors traditional game development: it relies heavily on manual asset creation, AI serves primarily as a tool to accelerate content production, and the game structure remains predefined. The real shift occurs at L2, with the emergence of physics-compliant, interactive worlds powered by video generation. This stage marks a move away from fixed content toward dynamically generated environments shaped by player actions. L3 adds causal reasoning, so the game world responds to player choices with long-term consequences and can generate complex storylines. The final and most advanced stage, L4, envisions self-evolving game worlds in which rules and behaviors emerge autonomously, producing metaverse-like virtual ecosystems populated by intelligent agents. This staged evolution provides a roadmap and highlights key research areas: stronger physical understanding, improved reasoning capabilities, and more sophisticated AI agents.

IGV Limitations

IGV (Interactive Generative Video) is currently limited in several ways. First, models lack sufficient physical understanding, which makes realistic game environments difficult to create: object trajectories, velocities, and interactions are often inaccurate. Second, scene consistency remains a challenge; even simple camera movements can cause dramatic, unrealistic scene changes that break spatial continuity. Third, current IGV models have limited logical reasoning capabilities, which hinders complex narrative progression. They act more as rendering engines than as models of the deeper logic of a game environment. Overcoming these limitations will likely require combining video models with LLMs for more robust understanding, together with high-quality training data, before IGV can function effectively as GGE's core technology.
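The scene-consistency problem suggests a simple diagnostic: move the camera away and then back to the same pose, and compare the two views, which should match if the world was remembered. The sketch below assumes frames are flattened grayscale images with values in [0, 1] and scores them by mean absolute difference; `loop_closure_error`, `is_consistent`, and the 0.05 threshold are hypothetical choices, and legitimately changing content (weather, moving characters) would need to be masked out first.

```python
from typing import Sequence


def loop_closure_error(before: Sequence[float], after: Sequence[float]) -> float:
    """Mean absolute pixel difference between views taken before and after a camera round trip."""
    if not before or len(before) != len(after):
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(a - b) for a, b in zip(before, after)) / len(before)


def is_consistent(before: Sequence[float], after: Sequence[float],
                  threshold: float = 0.05) -> bool:
    # Hypothetical threshold: small differences are tolerated as generation noise.
    return loop_closure_error(before, after) <= threshold


view_start = [0.2, 0.4, 0.6]
view_returned = [0.9, 0.1, 0.6]  # the scene "changed" while the camera looked away
print(is_consistent(view_start, view_start))     # True
print(is_consistent(view_start, view_returned))  # False
```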

More visual insights

Figure 2 showcases the physics simulation capabilities of advanced video generation models. The top half displays examples from NVIDIA’s Cosmos (2025) model, illustrating its ability to generate realistic simulations across various domains. These include scenarios featuring robots interacting with objects, self-driving cars navigating complex environments, manufacturing processes, and everyday activities in home settings. All examples demonstrate the model’s understanding and accurate depiction of physical laws. The bottom half presents examples of human motion sequences generated by the Kling model, further highlighting the capacity of these models to accurately simulate complex physical interactions.

Figure 2: Physics-aware generation capabilities of video models. Top: Examples from Cosmos (NVIDIA, 2025) demonstrating physical understanding in diverse scenarios including robotics, autonomous driving, manufacturing, and home environments. Bottom: Human motion examples generated by Kling.

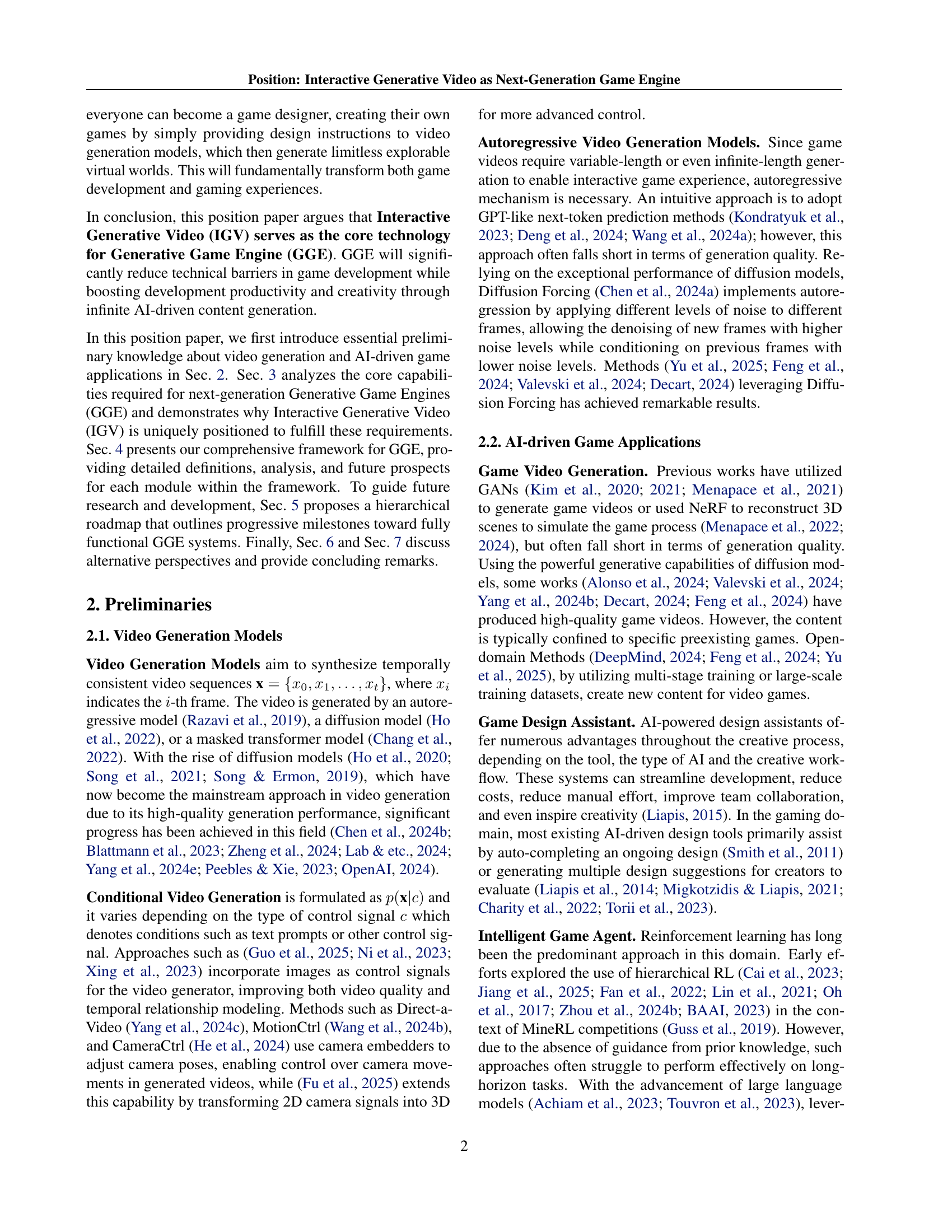

This figure illustrates the proposed framework for a Generative Game Engine (GGE). Part (a) shows a diagram of the GGE architecture, highlighting the interactions between its five core modules: Generation, Control, Memory, Dynamics, and Intelligence, and an additional GamePlay module. Part (b) provides a table listing the technical keywords associated with each module. Examples of how each module’s capabilities are applied in various games are displayed in gray boxes, with further details discussed in the paper.

Figure 3: Proposed framework of Generative Game Engine (GGE). (a) Framework of GGE shows the architecture and interactions between modules. (b) Technical keywords of each module. The game examples shown in gray boxes demonstrate typical applications of each module’s capabilities, with detailed analysis provided in the main text.

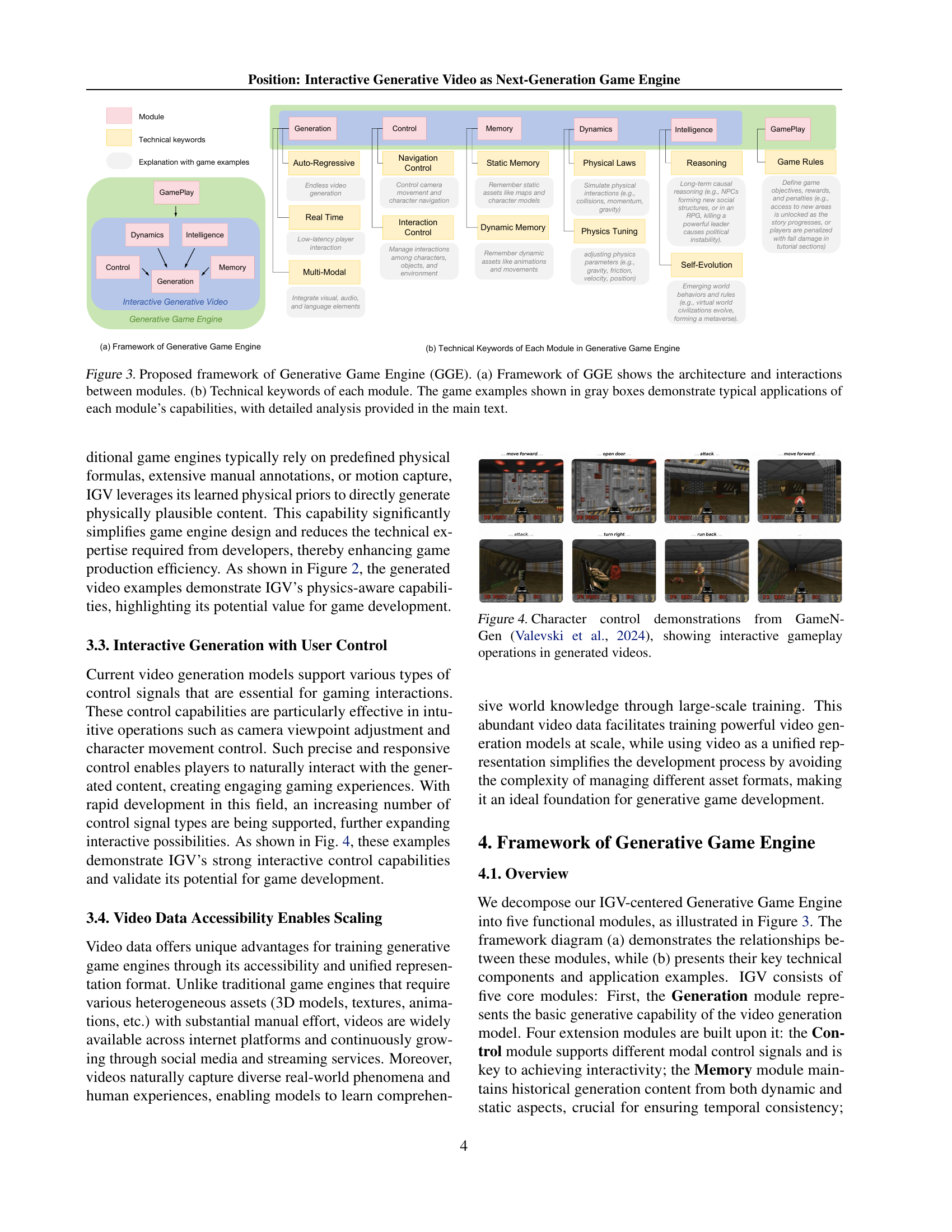

Figure 4 showcases examples from the GameNGen model (Valevski et al., 2024) demonstrating interactive gameplay. The figure visually represents how users can control character actions within videos generated by the model, illustrating the model’s ability to produce dynamic and responsive game environments.

Figure 4: Character control demonstrations from GameNGen (Valevski et al., 2024), showing interactive gameplay operations in generated videos.

This figure illustrates the Control module within the Interactive Generative Video (IGV) framework. The Control module manages player interactions within the game environment and is divided into two main aspects:

1. Navigation Control: lets players move through the game’s virtual world, for example by moving the camera, moving a character, or adjusting the player’s viewpoint.
2. Interaction Control: lets players act on objects and elements within the game environment, for example picking up items, manipulating or breaking objects, or interacting with characters.

The diagram visually represents these control mechanisms.

Figure 5: The Control module of IGV manages player control through two aspects: Navigation Control and Interaction Control.
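Both kinds of control ultimately have to become a conditioning signal the video model can consume. A minimal sketch, assuming navigation is expressed as continuous movement and camera values and interaction as one choice from a fixed vocabulary, concatenates the two into a single vector. The `PlayerInput` fields and the `INTERACTIONS` list are hypothetical; the paper does not prescribe an encoding.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical interaction vocabulary; a real game would define its own.
INTERACTIONS = ["none", "pick_up", "break", "talk"]


@dataclass
class PlayerInput:
    move_x: float = 0.0  # navigation: strafe left/right
    move_z: float = 0.0  # navigation: forward/back
    yaw: float = 0.0     # navigation: camera rotation in radians
    interaction: Optional[str] = None  # interaction: at most one action per frame


def encode(inp: PlayerInput) -> List[float]:
    """Concatenate continuous navigation values with a one-hot interaction code."""
    navigation = [inp.move_x, inp.move_z, inp.yaw]
    name = inp.interaction or "none"
    if name not in INTERACTIONS:
        raise ValueError(f"unknown interaction: {name}")
    one_hot = [1.0 if candidate == name else 0.0 for candidate in INTERACTIONS]
    return navigation + one_hot


print(encode(PlayerInput(move_z=1.0, interaction="pick_up")))
# [0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0]
```

Keeping navigation continuous and interaction discrete mirrors the two aspects named in Figure 5; a learned model could equally embed interactions instead of one-hot coding them.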

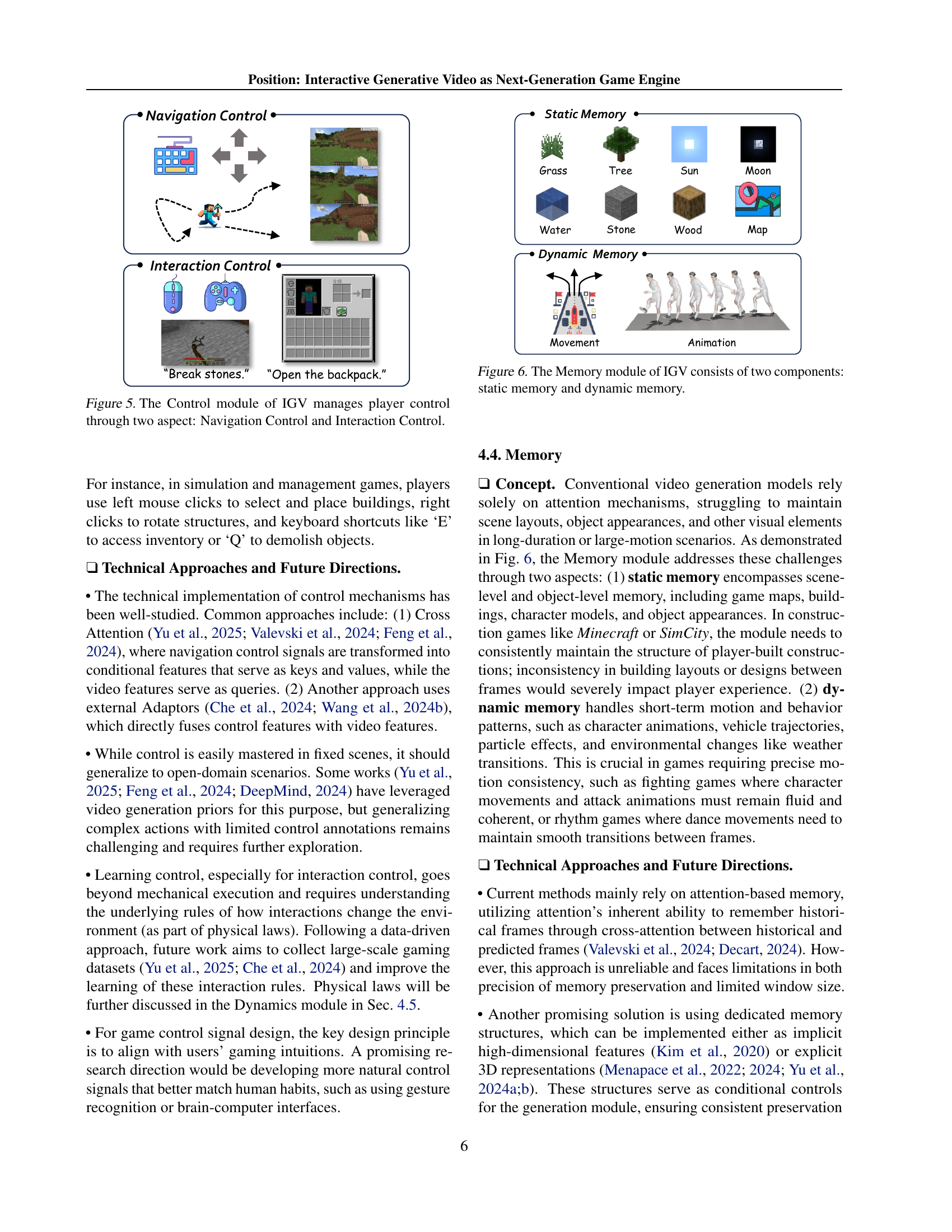

The figure illustrates the Memory module within the Interactive Generative Video (IGV) framework. This module is crucial for maintaining consistency in generated videos over time. It’s divided into two main components: static memory and dynamic memory. Static memory stores persistent elements of the game world, such as maps, building structures, and character models. Dynamic memory tracks temporary elements and changes within the game, including character animations, movement, and environmental effects like weather changes. The interplay between static and dynamic memory ensures that generated videos remain coherent and consistent despite changes in game states.

Figure 6: The Memory module of IGV consists of two components: static memory and dynamic memory.
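The static/dynamic split maps naturally onto two stores with different lifetimes. The sketch below assumes a grid-indexed map for static content (kept indefinitely) and a list of time-stamped events for dynamic content (dropped once they expire). `WorldMemory` and its methods are hypothetical names for illustration, not the paper's design, and a learned model would store latent features rather than strings.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Cell = Tuple[int, int]  # hypothetical grid coordinate in the game world


@dataclass
class WorldMemory:
    # Static memory: persistent layout (maps, buildings), never expires.
    static: Dict[Cell, str] = field(default_factory=dict)
    # Dynamic memory: (event, expires_at) pairs, e.g. weather or animations.
    # Kept global rather than per cell for simplicity.
    dynamic: List[Tuple[str, int]] = field(default_factory=list)

    def remember_static(self, cell: Cell, content: str) -> None:
        self.static[cell] = content

    def remember_dynamic(self, event: str, now: int, ttl: int) -> None:
        self.dynamic.append((event, now + ttl))

    def recall(self, cell: Cell, now: int) -> Dict[str, object]:
        """Return what the generator should stay consistent with at `cell` and time `now`."""
        # Prune dynamic entries whose lifetime has ended.
        self.dynamic = [(e, t) for e, t in self.dynamic if t > now]
        return {"static": self.static.get(cell), "dynamic": [e for e, _ in self.dynamic]}


memory = WorldMemory()
memory.remember_static((0, 0), "castle")
memory.remember_dynamic("rain", now=0, ttl=5)
print(memory.recall((0, 0), now=3))   # {'static': 'castle', 'dynamic': ['rain']}
print(memory.recall((0, 0), now=10))  # {'static': 'castle', 'dynamic': []}
```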

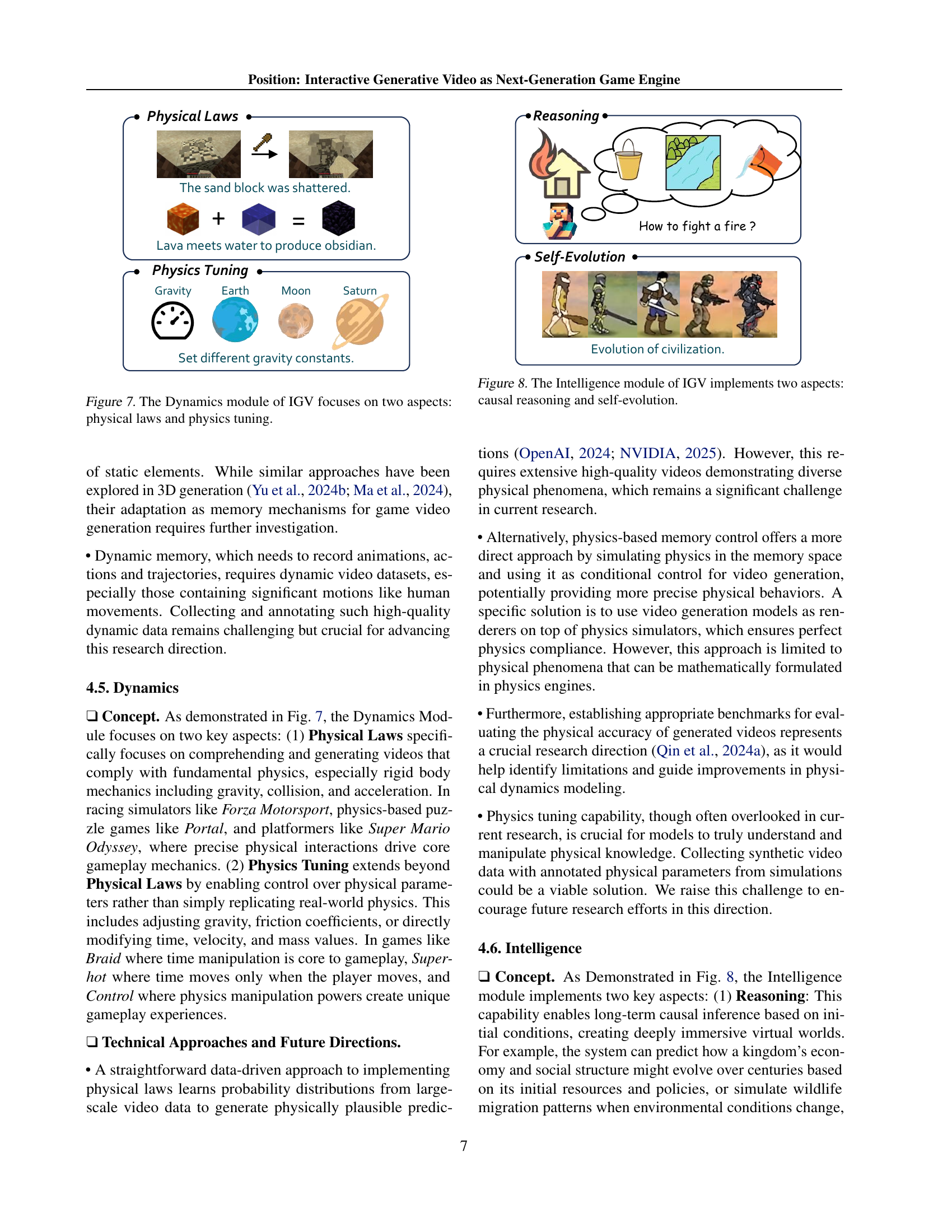

The figure illustrates the Dynamics module within the Interactive Generative Video (IGV) framework. This module is responsible for ensuring that the generated videos adhere to the laws of physics. It achieves this through two key approaches: (1) the simulation of fundamental physical laws (like gravity, collisions, and momentum) which govern the interactions within the simulated world; (2) the ability to adjust or ’tune’ physics parameters (such as gravity strength or friction) to achieve desired gameplay effects, thus creating flexibility in how the physics within the game world are implemented. The image visually depicts these concepts.

Figure 7: The Dynamics module of IGV focuses on two aspects: physical laws and physics tuning.
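Physics tuning amounts to exposing the constants of the simulated laws as parameters. The sketch below drops an object from rest and integrates its fall with semi-implicit Euler steps; `PhysicsParams`, its linear-drag `friction` term, and `simulate_fall` are hypothetical choices made for illustration. With default Earth gravity, the result should land close to the analytic value sqrt(2h/g).

```python
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    gravity: float = 9.81  # downward acceleration in m/s^2 (Earth default)
    friction: float = 0.0  # hypothetical linear drag coefficient, in 1/s


def simulate_fall(height: float, params: PhysicsParams, dt: float = 0.01) -> float:
    """Return the time (s) for an object dropped from rest at `height` (m) to hit the ground."""
    if params.gravity <= 0:
        raise ValueError("gravity must be positive, or the object never lands")
    y, v, t = height, 0.0, 0.0  # v is the downward speed
    while y > 0.0:
        # Semi-implicit Euler: update velocity first, then position with the new velocity.
        v += (params.gravity - params.friction * v) * dt
        y -= v * dt
        t += dt
    return t


earth = simulate_fall(10.0, PhysicsParams())
moon = simulate_fall(10.0, PhysicsParams(gravity=1.62))  # tuned "low gravity" world
print(f"earth {earth:.2f}s, moon {moon:.2f}s")
```

A game designer would tune `gravity` or `friction` for feel (floaty jumps, slippery ice) while the integration rule, the "physical law", stays fixed.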

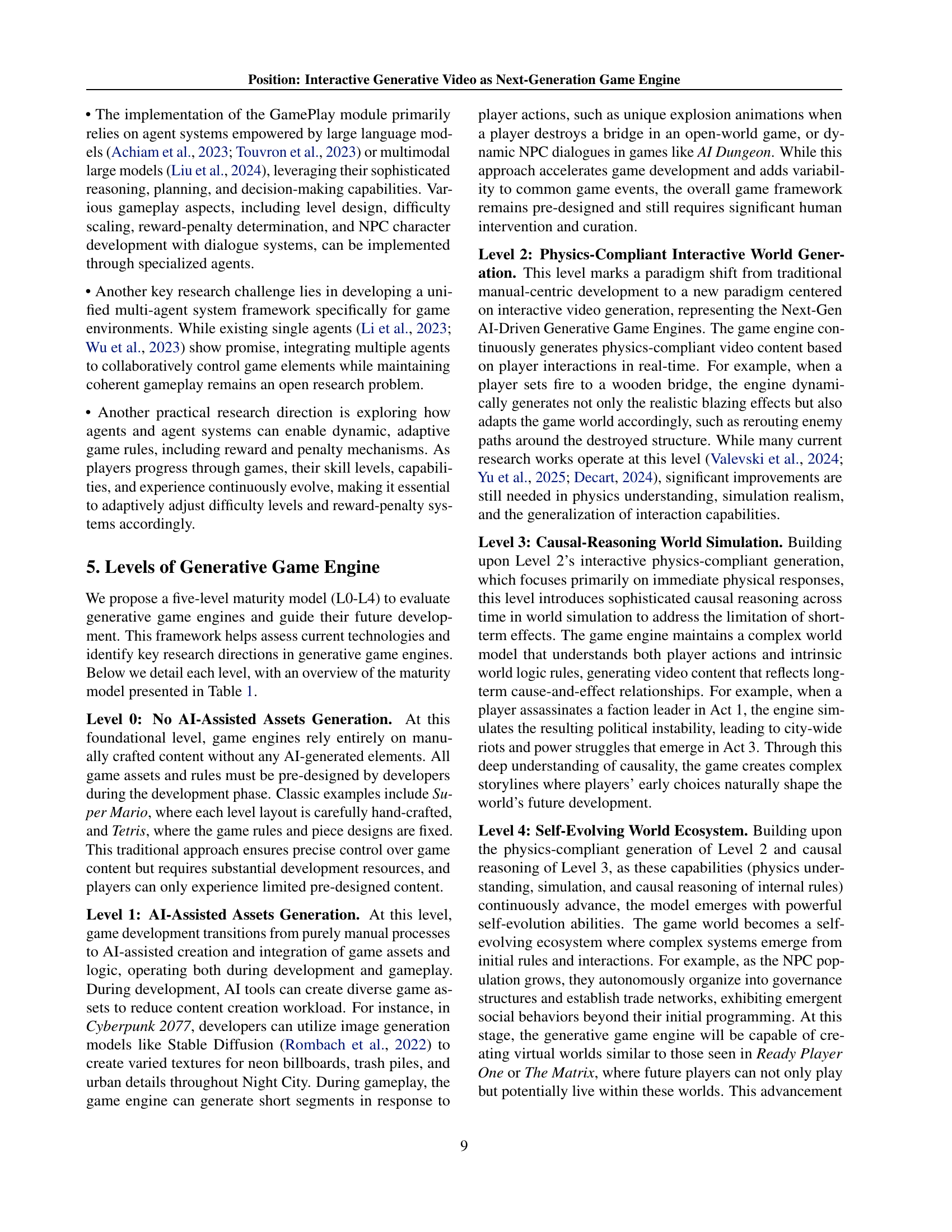

The Intelligence module within the proposed Interactive Generative Video (IGV) framework is responsible for two key functionalities: causal reasoning and self-evolution. Causal reasoning allows the model to understand and predict long-term consequences within the simulated environment. This means the system can anticipate how changes made by players (or the system itself) will affect the world over time. Self-evolution refers to the model’s ability to create and evolve dynamic rules and behaviors within the simulated world. This could result in the emergence of new civilizations, evolving ecosystems, or other unforeseen events that were not explicitly programmed.

Figure 8: The Intelligence module of IGV implements two aspects: causal reasoning and self-evolution.
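Long-term causal consequences, such as Table 1's example of a faction leader killed in Act 1 triggering city-wide riots in Act 3, can be illustrated with a delayed cause-effect lookup over an event log. This is a hand-written stand-in assuming rules of the form (cause, effect, delay); the paper envisions the Intelligence module learning such relations rather than having them scripted, and `CausalRule` and `CausalWorld` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class CausalRule:
    cause: str
    effect: str
    delay: int  # number of acts between the cause and its effect


class CausalWorld:
    def __init__(self, rules: List[CausalRule]) -> None:
        self.rules = rules
        self.log: List[Tuple[int, str]] = []  # (act, event) pairs in order of occurrence

    def record(self, act: int, event: str) -> None:
        self.log.append((act, event))

    def consequences(self, act: int) -> Set[str]:
        """Effects that come due in `act`, given everything recorded so far."""
        return {
            rule.effect
            for logged_act, event in self.log
            for rule in self.rules
            if rule.cause == event and logged_act + rule.delay == act
        }


world = CausalWorld([CausalRule("faction_leader_killed", "city_wide_riots", delay=2)])
world.record(1, "faction_leader_killed")
print(world.consequences(2))  # set()
print(world.consequences(3))  # {'city_wide_riots'}
```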
