TL;DR#

Large Language Models’ (LLMs) rapid evolution requires efficient KV cache management due to increasing context window sizes. Existing methods for KV Cache compression either remove less important tokens or reduce token precision, often struggling with accurate importance identification and facing performance bottlenecks or mispredictions. The paper addresses these shortcomings by observing that attention spikes follow a log distribution, becoming sparser farther from the current position.

To address these issues, the paper introduces LogQuant, which significantly improves accuracy through better token preservation. Because it ignores the absolute positions of KV cache entries, quantization and dequantization are faster. Benchmarks show a 25% throughput increase and a 60% larger batch size without extra memory. On complex tasks such as Math and Code Completion, accuracy improves by 40-200% at the same compression ratio, surpassing KiVi and H2O. LogQuant integrates with the Python transformers library.

Key Takeaways#

Why does it matter?#

This paper introduces LogQuant, an innovative 2-bit quantization technique for KV caches in LLMs, offering superior accuracy and efficiency. It addresses the critical challenge of balancing memory savings and performance, paving the way for more practical deployment of large models, especially in resource-constrained environments. The findings open new avenues for optimizing LLM inference and enhancing performance across various tasks.

Visual Insights#

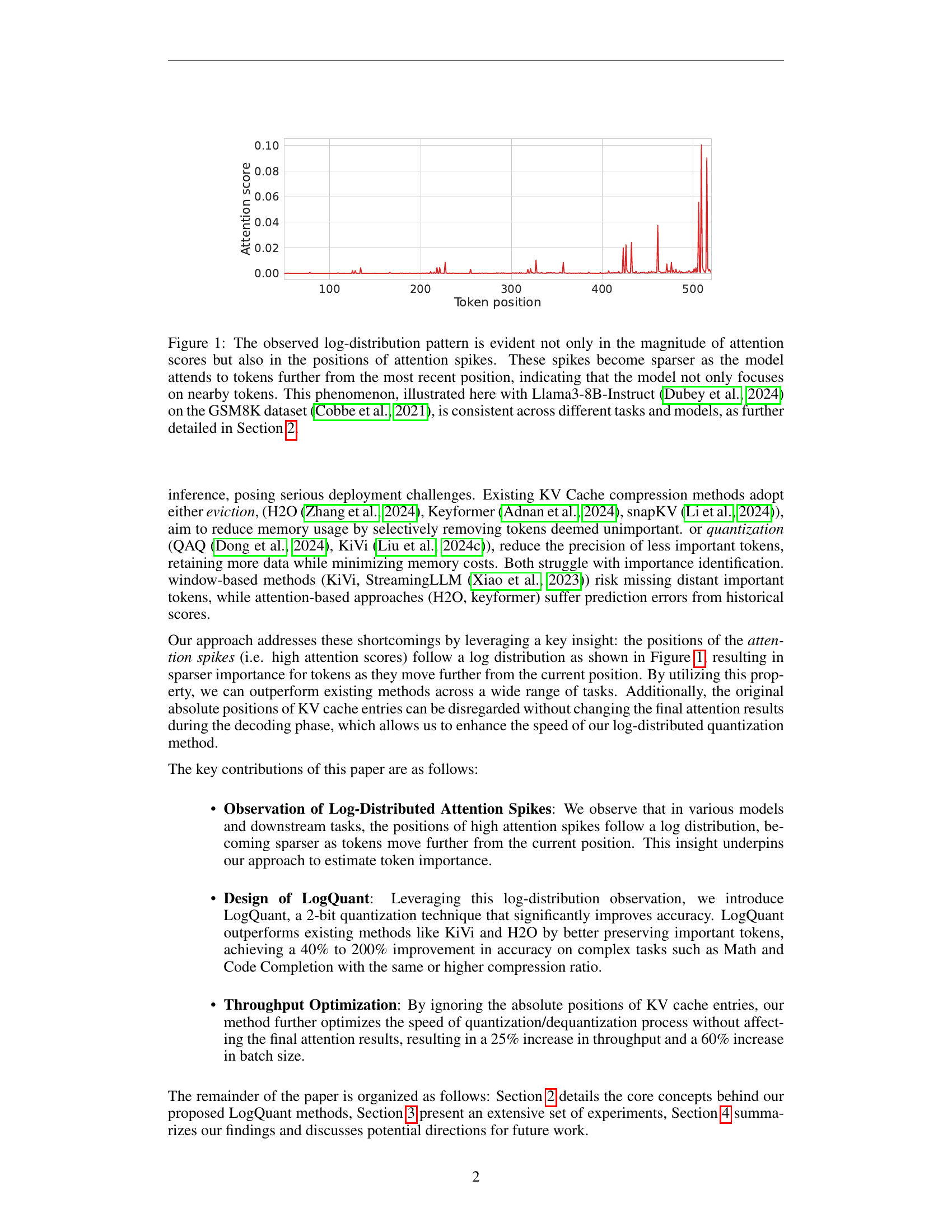

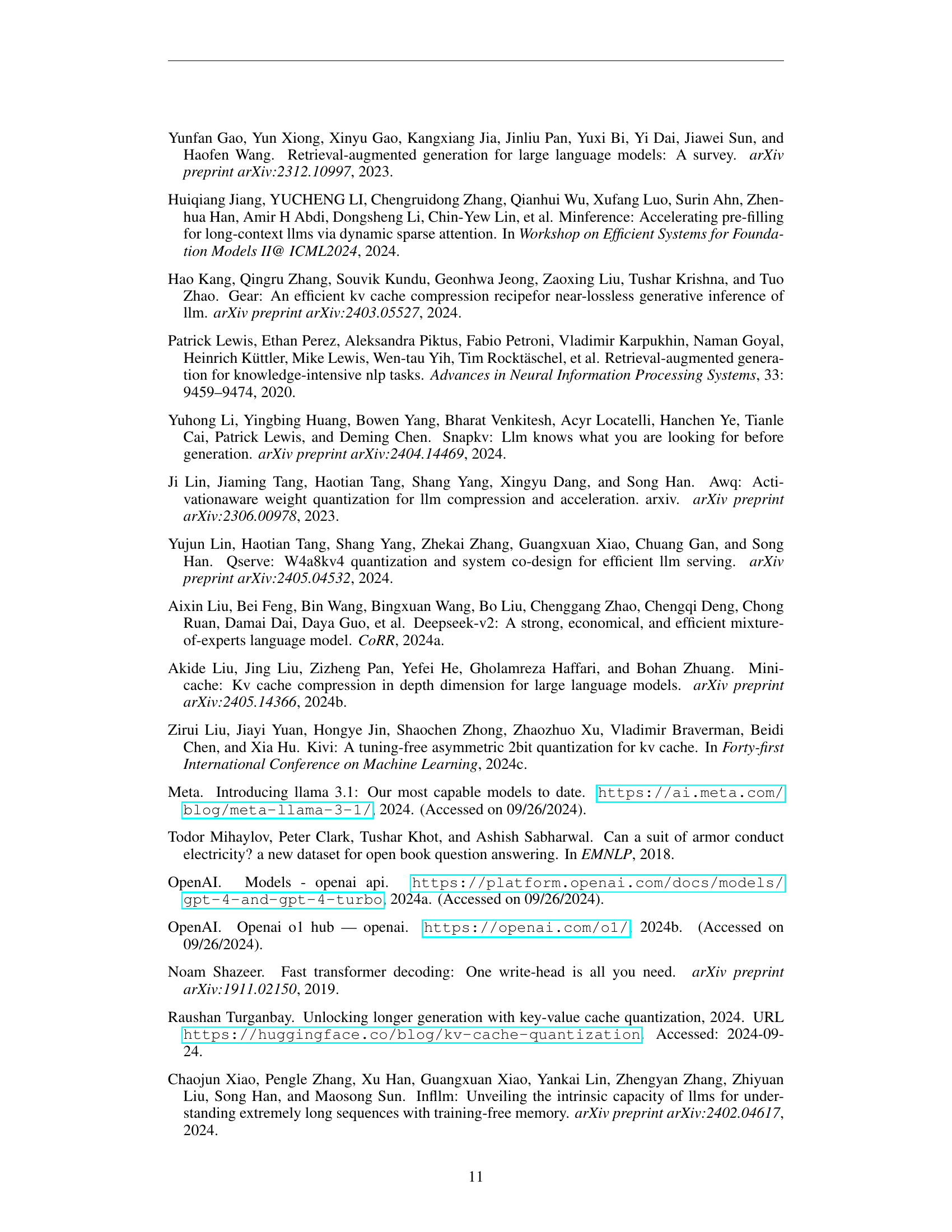

🔼 This figure displays a graph showing the distribution of attention scores across different token positions. The x-axis represents the token position, and the y-axis represents the attention score. The graph shows that the attention scores follow a log-distribution pattern, with higher scores concentrated near the most recent token position and gradually decreasing as the distance from the most recent token increases. The figure illustrates this phenomenon using the Llama3-8B-Instruct model and the GSM8K dataset. The observation is consistent across different models and tasks, and it forms the basis of the LogQuant algorithm for efficiently compressing KV cache in LLMs. The log-distribution means the model’s attention is more focused on recent tokens.

Figure 1: The observed log-distribution pattern is evident not only in the magnitude of attention scores but also in the positions of attention spikes. These spikes become sparser as the model attends to tokens further from the most recent position, indicating that the model not only focuses on nearby tokens. This phenomenon, illustrated here with Llama3-8B-Instruct (Dubey et al., 2024) on the GSM8K dataset (Cobbe et al., 2021), is consistent across different tasks and models, as further detailed in Section 2.

| Model | Baseline (BF16) | KiVi (4-bit) | KiVi (2-bit) | KiVi (2-bit) + Sink (BF16) | ΔSink |
|---|---|---|---|---|---|
| Llama3.1-8B-Instruct | 71.41 | 67.24 | 18.04 | 18.49 | +0.45 |
| Qwen1.5-7B-Chat | 57.24 | 52.27 | 39.80 | 39.42 | -0.38 |

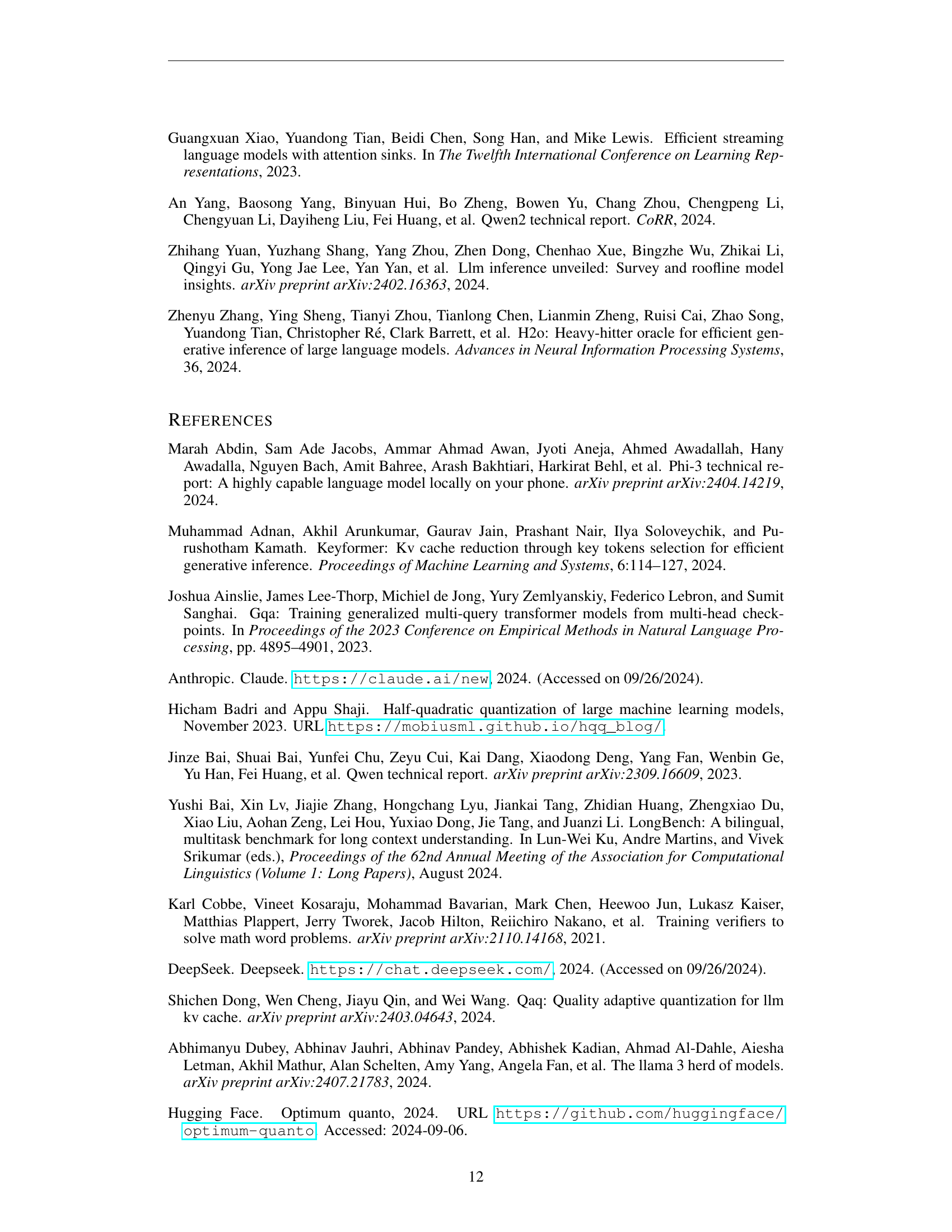

🔼 This table investigates the effect of preserving the first two tokens (referred to as ‘sink tokens’) at original precision (BF16, not quantized) during 2-bit quantization of the KV cache. The experiment uses the GSM8K dataset and reports final answer accuracy for the BF16 baseline, KiVi at 4-bit and 2-bit, and KiVi at 2-bit with the two sink tokens kept at original precision. The difference in accuracy between the last two configurations (ΔSink) shows the impact of retaining the sink tokens. Both 2-bit configurations keep the most recent 128 tokens at original precision.

Table 1: Impact of retaining the first two tokens (referred to as “Sink”) at original precision. The final answer accuracy results on GSM8K (Cobbe et al., 2021) are presented. We present the improvement as $\Delta_{\text{Sink}}$. Both methods maintain the recent 128 tokens at original precision.
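The setup behind Table 1 can be pictured as a precision mask over token positions. The following is a minimal sketch, not the authors' code: the function name `full_precision_mask` and its parameters are hypothetical, with defaults taken from the caption (2 sink tokens, 128 recent tokens).

```python
import numpy as np

def full_precision_mask(seq_len, n_sink=2, n_recent=128):
    """Return a boolean mask: True where a token stays at original precision.

    Hypothetical helper for illustration. Sink tokens are the first
    `n_sink` positions; recent tokens are the last `n_recent` positions.
    Everything else would be quantized to 2 bits.
    """
    mask = np.zeros(seq_len, dtype=bool)
    mask[:n_sink] = True                          # sink tokens
    mask[max(seq_len - n_recent, 0):] = True      # recent window
    return mask
```

For a 1000-token context this marks 130 positions as full precision and leaves the remaining 870 for quantization.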

In-depth insights#

LogQuant Intro#

The paper introduces LogQuant, a novel 2-bit quantization technique designed to optimize the KV cache in LLMs, addressing memory limitations in long-context scenarios. LogQuant employs a log-distributed approach, selectively compressing the KV cache across the entire context based on the observation that attention spikes follow a log distribution. That is, attention spikes become sparser as the model attends to tokens further from the most recent position. This strategy contrasts with previous methods that assume later tokens are more important or attempt to predict important tokens based on earlier attention patterns, which often leads to performance bottlenecks or mispredictions. The log-based filtering mechanism enables LogQuant to preserve performance while enhancing throughput by 25% and boosting batch size by 60% without increasing memory consumption. Most importantly, it improves accuracy on challenging tasks by 40-200% at the same compression ratio, outperforming comparable techniques. LogQuant integrates with the transformers library, and the implementation is available on GitHub.

Log-Spike Aware#

The concept of ‘Log-Spike Aware’ hints at a system that intelligently identifies and manages data spikes exhibiting a logarithmic distribution. In the context of KV cache optimization, this could mean recognizing that certain data points or memory locations, when plotted on a logarithmic scale, show sudden, significant increases in activity or importance. Log-Spike Aware quantization may involve dynamically allocating more resources or applying finer-grained quantization to these spikes, ensuring high accuracy is maintained for critical information. This method likely leverages the observation that spikes are sparser farther away from the current token. A ‘Log-Spike Aware’ system could proactively adjust its quantization strategy based on the predicted or observed log-spike distribution, preventing bottlenecks and improving overall efficiency by reserving full precision to important spikes. It could selectively filter less valuable spikes by applying a log-based mechanism while improving LLM inference and performance.

Quant vs Evict#

Quantization versus eviction presents a fundamental trade-off in KV cache compression for LLMs. Quantization reduces the precision of token representations, offering memory savings while retaining all tokens, but potentially introducing inaccuracies due to the lower bit-depth. Eviction, on the other hand, discards tokens entirely, leading to a smaller cache size but potentially losing crucial context. The choice hinges on the sensitivity of the LLM to precision loss versus contextual information loss. Quantization can be less disruptive, as it preserves the overall structure of the attention mechanism, while eviction can drastically alter the attention distribution, particularly with softmax normalization. Effective strategies must consider the model architecture, task requirements, and desired compression ratio to optimize for accuracy and efficiency. LogQuant takes the quantization route, retaining every token in order to maintain accuracy.
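The trade-off can be made concrete with a small NumPy experiment that measures the L1 distance between the original attention distribution and the distribution after each kind of compression. This is an illustrative sketch under stated assumptions: the per-token min-max 2-bit quantizer and the names `quantize_2bit` and `attention_l1_errors` are hypothetical stand-ins, not the quantizer or evaluation code used in the paper.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def quantize_2bit(x):
    """Per-token asymmetric min-max quantization to 4 levels, then dequantize.

    Assumed scheme for illustration only.
    """
    lo = x.min(axis=-1, keepdims=True)
    hi = x.max(axis=-1, keepdims=True)
    scale = (hi - lo) / 3.0                      # 2 bits -> levels 0..3
    scale = np.where(scale == 0, 1.0, scale)     # guard constant rows
    codes = np.clip(np.round((x - lo) / scale), 0, 3)
    return codes * scale + lo

def attention_l1_errors(query, keys, compress_idx):
    """Return (eviction L1 error, quantization L1 error, original mass on compress_idx)."""
    d = query.shape[0]
    original = softmax(keys @ query / np.sqrt(d))

    # Eviction: drop the selected tokens; softmax renormalizes over the rest.
    keep = np.ones(len(keys), dtype=bool)
    keep[compress_idx] = False
    evicted = np.zeros_like(original)
    evicted[keep] = softmax(keys[keep] @ query / np.sqrt(d))

    # Quantization: keep every token, but store the selected keys in 2 bits.
    q_keys = keys.copy()
    q_keys[compress_idx] = quantize_2bit(keys[compress_idx])
    quantized = softmax(q_keys @ query / np.sqrt(d))

    evict_err = np.abs(original - evicted).sum()
    quant_err = np.abs(original - quantized).sum()
    return evict_err, quant_err, original[compress_idx].sum()
```

With renormalization, the eviction error is exactly twice the attention mass of the evicted tokens, so evicting a token that later receives an attention spike is costly. The quantization error instead depends on how much the rounded keys shift the scores.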

Pos-Agnostic Calc#

‘Pos-Agnostic Calc’ refers to attention computation that does not depend on where an entry sits in the KV cache. Positional encodings remain essential for a transformer to understand sequential data; what is ignored is only the storage order of cache entries. Positional agnosticism is applied to the KV cache entries, improving memory locality and speeding up inference without altering attention outputs. This is achieved by concatenating high-precision tokens with quantized ones, disregarding their original order. Because the attention output is a weighted sum over key-value pairs, it is invariant to the order in which those pairs are stored, so reordering the KV cache does not change the final result while reducing the cost of quantization and dequantization.
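The claim that reordering the cache leaves attention outputs unchanged can be checked directly. The sketch below assumes a single decoding query attending to all cached tokens, with any positional information already baked into the keys before caching; `reorder_cache` is a hypothetical helper, not part of LogQuant's API.

```python
import numpy as np

def attention(query, keys, values):
    """Single-query softmax attention over cached key-value pairs."""
    scores = keys @ query / np.sqrt(query.shape[0])
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ values

def reorder_cache(keys, values, full_precision_idx):
    """Group full-precision entries first, the rest after, ignoring original order.

    Keys and values are permuted together, so each pair stays matched.
    """
    n = keys.shape[0]
    is_full = np.zeros(n, dtype=bool)
    is_full[full_precision_idx] = True
    order = np.concatenate([np.flatnonzero(is_full), np.flatnonzero(~is_full)])
    return keys[order], values[order]
```

Calling `attention` on the original cache and on the output of `reorder_cache` gives the same vector up to floating-point rounding, because the softmax-weighted sum runs over the same set of key-value pairs.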

Future:Op Fusion#

Operator fusion presents a promising avenue for future research in optimizing large language model (LLM) inference. By combining multiple operations into a single kernel, we can reduce memory traffic and overhead, leading to significant performance gains. This is especially beneficial for KV cache quantization, where dequantization operations can be fused with attention calculations. Exploring different fusion strategies, such as horizontal and vertical fusion, and developing specialized fusion kernels for quantized data types are worthwhile directions. Furthermore, investigating dynamic fusion techniques that adaptively fuse operations based on the input data and hardware characteristics could lead to even greater efficiency. Addressing challenges like kernel complexity and hardware compatibility is crucial for realizing the full potential of operator fusion.
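To illustrate what fusing dequantization with attention would compute, the sketch below contrasts an unfused path, which materializes all dequantized keys first, with a blockwise path that dequantizes one block at a time and accumulates the result with a running softmax. It is a NumPy emulation of the arithmetic only, not a GPU kernel, and the cache layout (`k_codes`, `k_scale`, `k_zero`) is an assumption made for this example.

```python
import numpy as np

def dequant(codes, scale, zero):
    """Map integer codes back to floats: code * scale + zero (assumed layout)."""
    return codes.astype(np.float32) * scale + zero

def attention_unfused(query, k_codes, k_scale, k_zero, values):
    keys = dequant(k_codes, k_scale, k_zero)       # full key matrix in memory
    scores = keys @ query / np.sqrt(query.size)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ values

def attention_fused(query, k_codes, k_scale, k_zero, values, block=4):
    """Dequantize and attend block by block, never holding all keys at once."""
    running_max = -np.inf
    denom = 0.0
    acc = np.zeros(values.shape[1], dtype=np.float64)
    for start in range(0, k_codes.shape[0], block):
        end = start + block
        keys = dequant(k_codes[start:end], k_scale[start:end], k_zero[start:end])
        scores = keys @ query / np.sqrt(query.size)
        new_max = max(running_max, scores.max())
        correction = np.exp(running_max - new_max)  # rescale earlier partial sums
        probs = np.exp(scores - new_max)
        denom = denom * correction + probs.sum()
        acc = acc * correction + probs @ values[start:end]
        running_max = new_max
    return acc / denom
```

Both functions return the same output up to rounding; the blockwise version shows why a fused kernel can avoid writing the dequantized keys back to memory.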

More visual insights#

More on figures

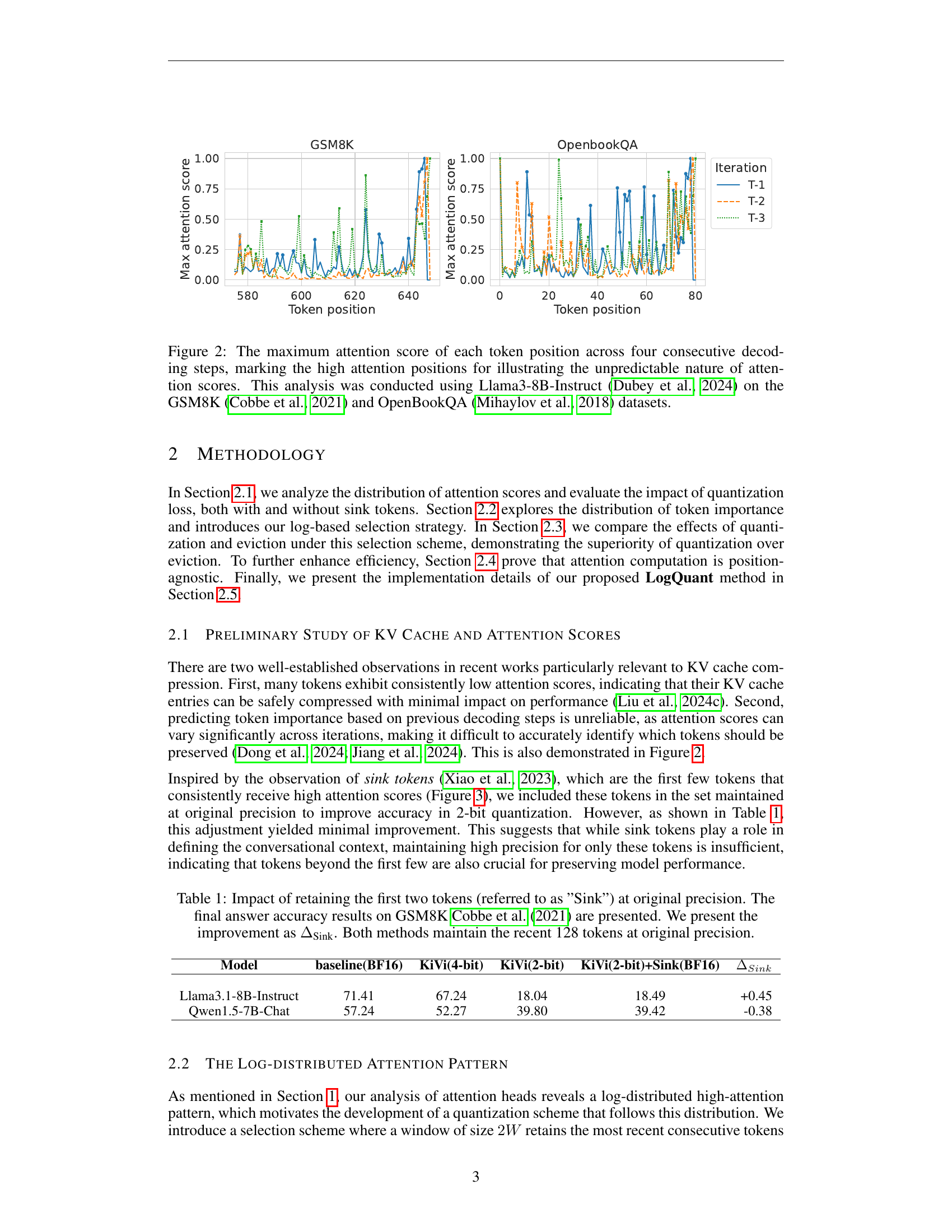

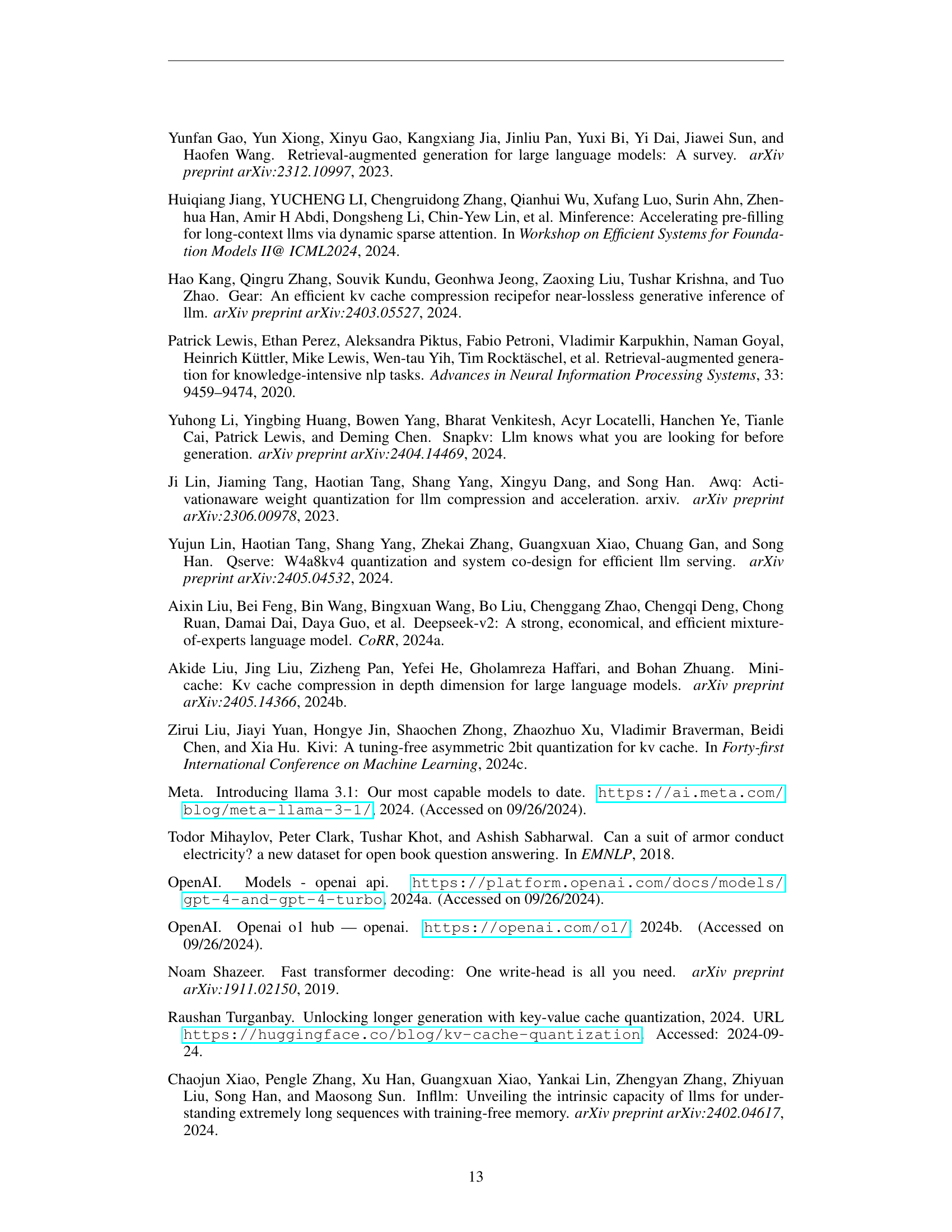

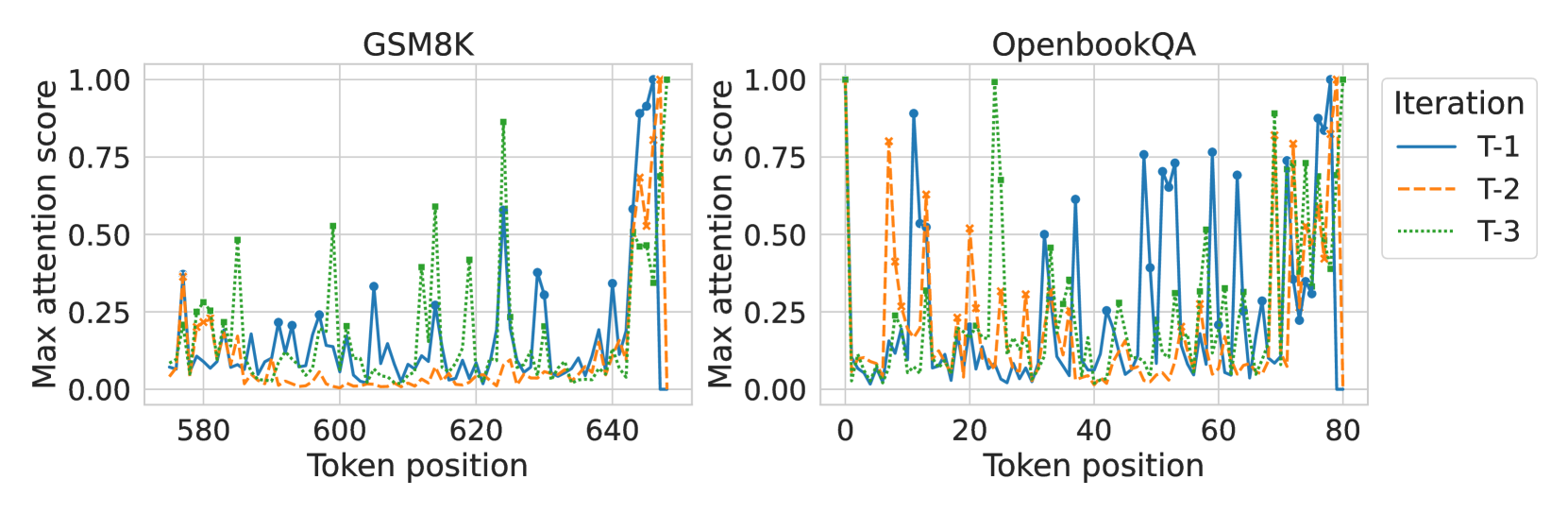

🔼 Figure 2 visualizes the unpredictable nature of attention weights in LLMs over time. It displays the maximum attention score for each token position across four consecutive decoding steps, using the Llama3-8B-Instruct model on both GSM8K and OpenBookQA datasets. The unpredictable fluctuations highlight the challenges in accurately predicting important tokens for efficient memory management, especially when considering compression techniques.

Figure 2: The maximum attention score of each token position across four consecutive decoding steps, marking the high attention positions for illustrating the unpredictable nature of attention scores. This analysis was conducted using Llama3-8B-Instruct (Dubey et al., 2024) on the GSM8K (Cobbe et al., 2021) and OpenBookQA (Mihaylov et al., 2018) datasets.

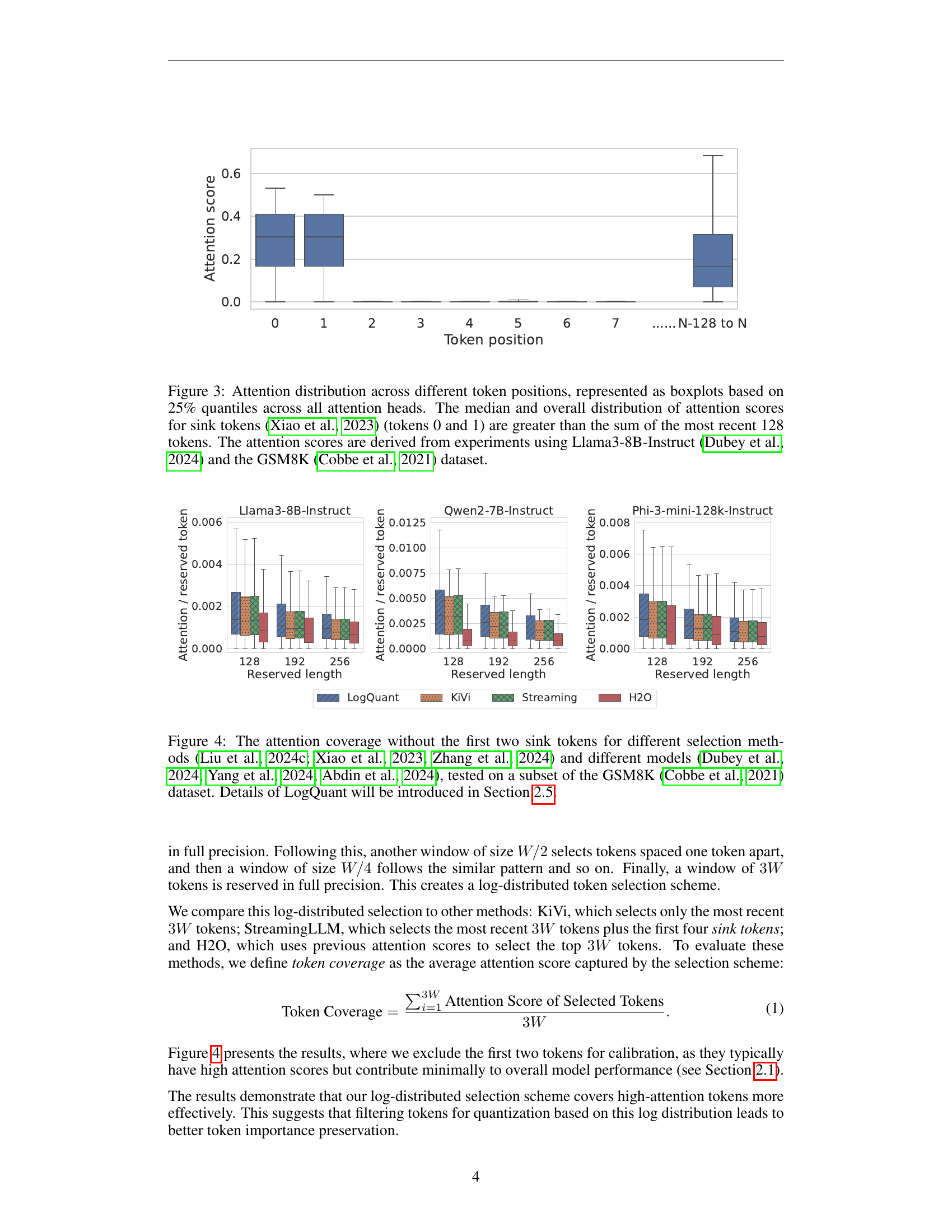

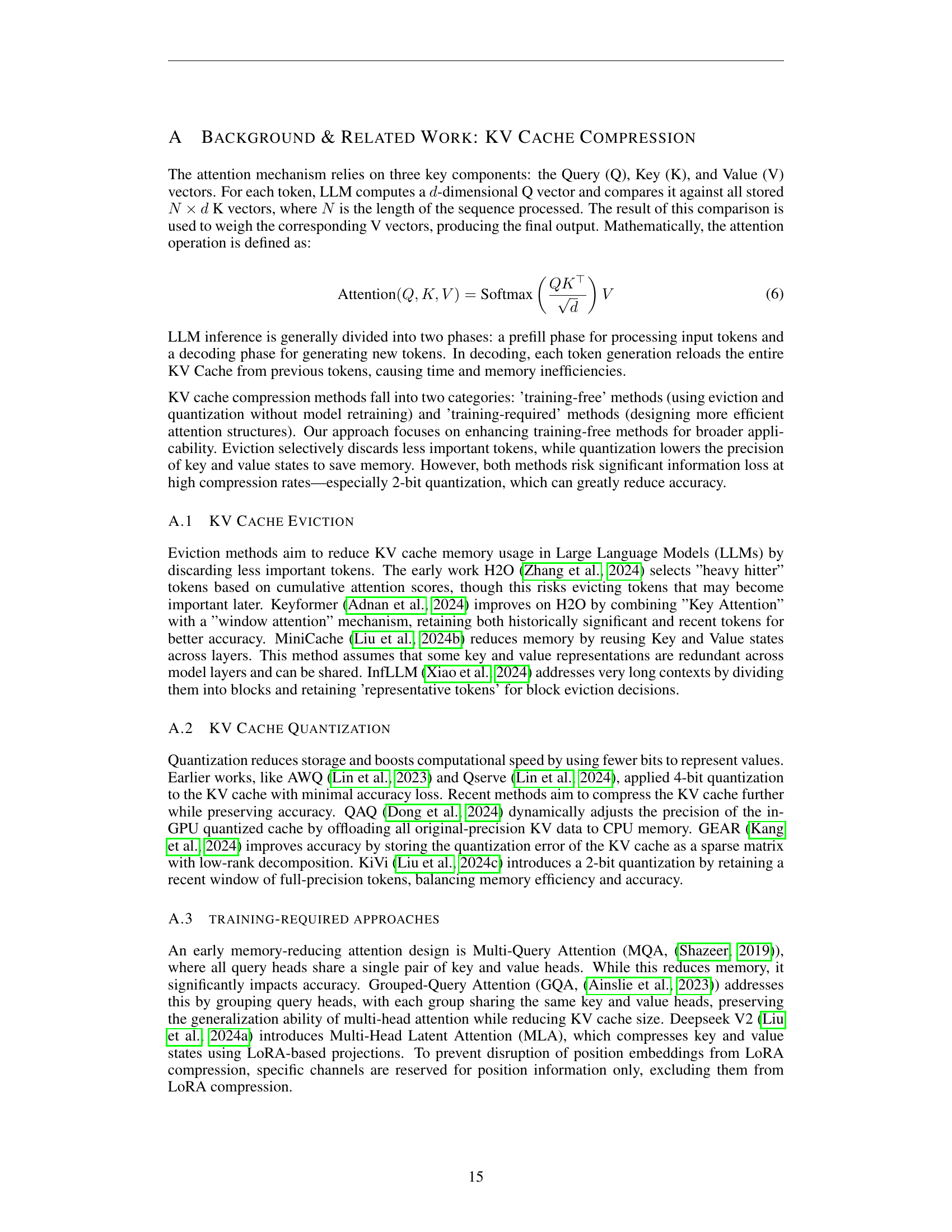

🔼 Figure 3 illustrates the distribution of attention scores across different token positions within the context window of a large language model. Boxplots summarize the attention scores across all attention heads, showing the median and interquartile range (25th and 75th percentiles) for each token position. The figure highlights that the attention scores for the first two tokens (tokens 0 and 1, referred to as ‘sink tokens’) have a higher median and overall distribution than the sum of the scores of the most recent 128 tokens. This observation shows that the earliest tokens, not only the most recent ones, can carry substantial attention weight. The data is derived from experiments using the Llama3-8B-Instruct model on the GSM8K dataset.

Figure 3: Attention distribution across different token positions, represented as boxplots based on 25% quantiles across all attention heads. The median and overall distribution of attention scores for sink tokens (Xiao et al., 2023) (tokens 0 and 1) are greater than the sum of the most recent 128 tokens. The attention scores are derived from experiments using Llama3-8B-Instruct (Dubey et al., 2024) and the GSM8K (Cobbe et al., 2021) dataset.

🔼 Figure 4 compares the effectiveness of different token selection methods for compressing the key-value (KV) cache in large language models (LLMs). It shows the attention coverage achieved by four methods: LogQuant, KiVi, Streaming, and H2O. The comparison spans several LLMs (Llama3-8B-Instruct, Qwen-2-7B-Instruct, Phi-3-mini-128k-Instruct) and uses a subset of the GSM8K dataset. The x-axis represents the length of the reserved portion of the KV cache, while the y-axis shows the average attention scores captured by each selection method. The figure demonstrates that LogQuant achieves better attention coverage than the other methods, indicating its superior ability to select and retain important tokens while reducing memory usage. The first two sink tokens (tokens with consistently high attention scores) are excluded from the analysis to focus on the relative performance of the selection methods.

Figure 4: The attention coverage without the first two sink tokens for different selection methods (Liu et al., 2024c; Xiao et al., 2023; Zhang et al., 2024) and different models (Dubey et al., 2024; Yang et al., 2024; Abdin et al., 2024), tested on a subset of the GSM8K (Cobbe et al., 2021) dataset. Details of LogQuant will be introduced in Section 2.5.
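One plausible way to compute an attention-coverage number like the one plotted in Figure 4 is sketched below. The normalization is an assumption made for this example: coverage is taken as the share of non-sink attention mass that falls on the reserved positions. The paper may define it differently, and `attention_coverage` is a hypothetical name.

```python
import numpy as np

def attention_coverage(attn, reserved, n_sink=2):
    """Fraction of non-sink attention mass captured by the reserved positions.

    attn:     1-D array of attention scores for one query (sums to 1).
    reserved: positions a selection method keeps at full precision.
    n_sink:   leading sink tokens excluded from the comparison.
    """
    attn = np.asarray(attn, dtype=np.float64)
    mask = np.zeros(attn.shape[0], dtype=bool)
    mask[list(reserved)] = True
    mask[:n_sink] = False                 # sinks never count toward coverage
    return attn[mask].sum() / attn[n_sink:].sum()
```

A selection method with higher coverage at the same reserved length keeps more of the attention mass at full precision.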

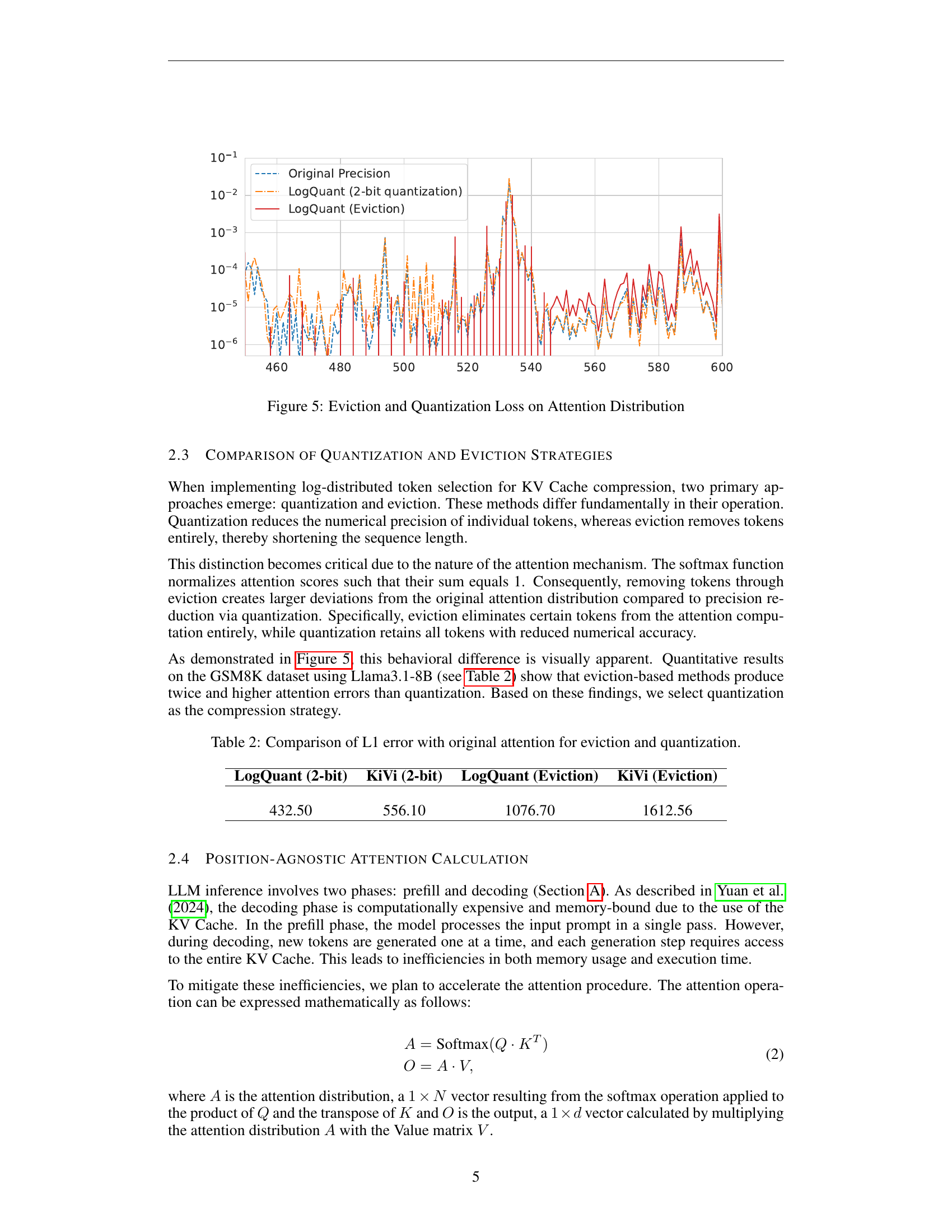

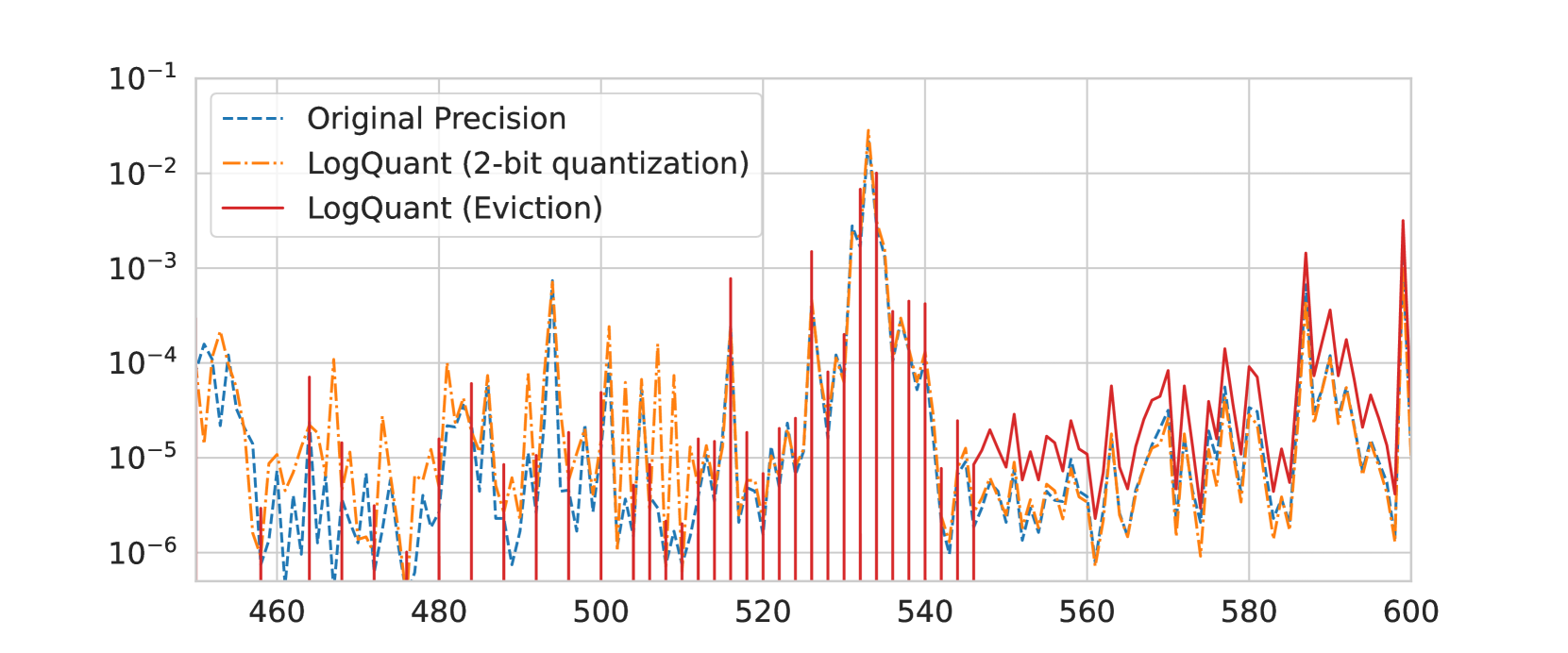

🔼 This figure compares the effects of two different KV Cache compression strategies: quantization and eviction. It demonstrates that using quantization to reduce the numerical precision of tokens instead of removing them entirely (eviction) leads to significantly less distortion of the attention distribution. The plot visualizes the L1 error between the original attention distribution and the distributions after compression using both methods.

Figure 5: Eviction and Quantization Loss on Attention Distribution

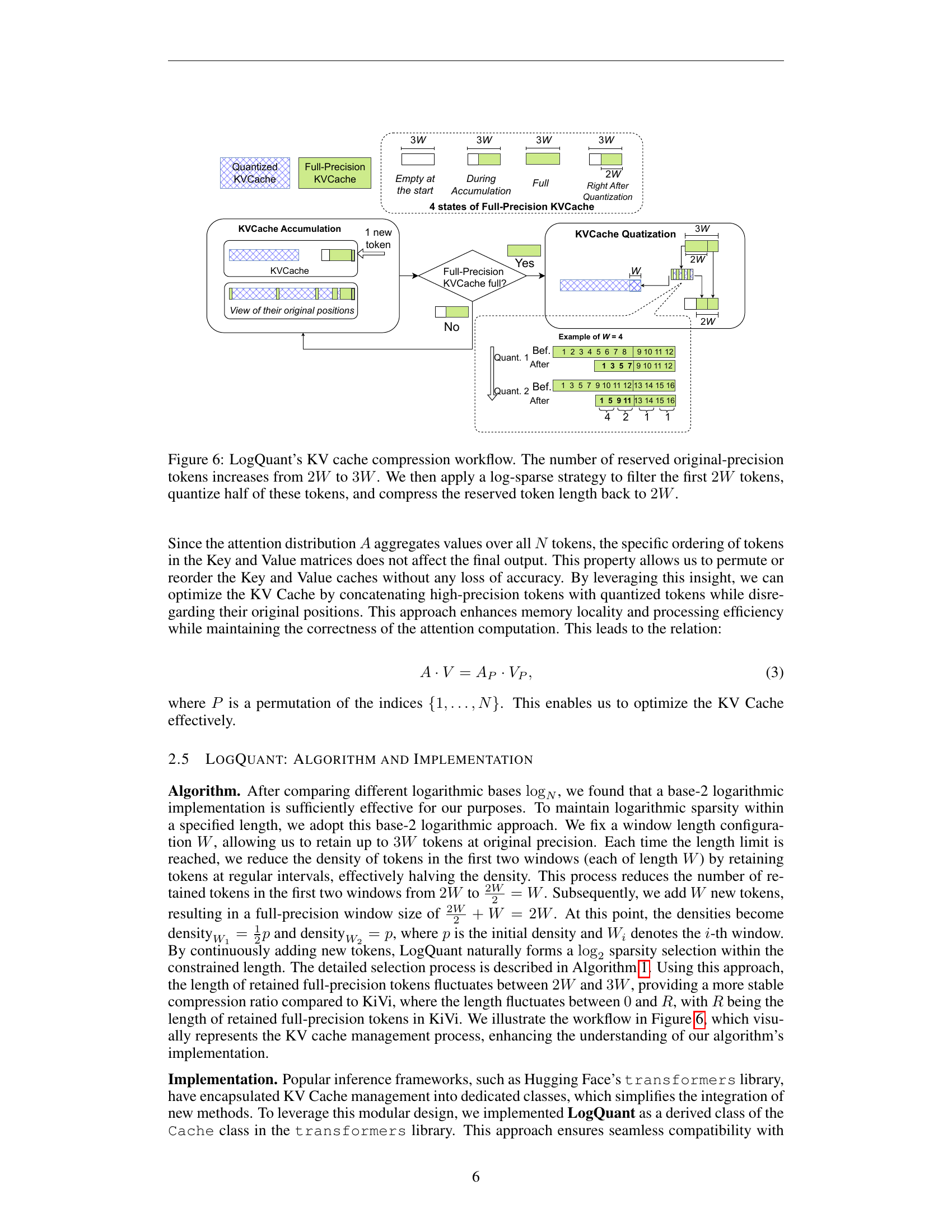

🔼 LogQuant’s KV cache compression workflow is illustrated. As new tokens arrive, the number of tokens kept at full precision grows from 2W to 3W. A log-sparse filtering strategy is then applied to the first 2W tokens, and half of them are quantized. This brings the reserved token length back down to 2W, and the cycle repeats as decoding continues.

Figure 6: LogQuant’s KV cache compression workflow. The number of reserved original-precision tokens increases from $2W$ to $3W$. We then apply a log-sparse strategy to filter the first $2W$ tokens, quantize half of these tokens, and compress the reserved token length back to $2W$.
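The cycle in Figure 6 can be simulated on token positions alone. The sketch below is a reading of the caption, not the authors' implementation: it assumes that "quantize half of these tokens" means keeping every other token of the first 2W at full precision and quantizing the rest. The function names and the `window` parameter (W) are hypothetical.

```python
def compress_step(reserved, window):
    """Apply one compression step once 3W tokens are reserved.

    reserved: positions currently held at full precision, oldest first.
    Returns (new reserved list, positions sent to quantization).
    """
    if len(reserved) < 3 * window:
        return reserved, []
    head, tail = reserved[:2 * window], reserved[2 * window:]
    kept = head[::2]            # assumed filter: keep every other token
    to_quantize = head[1::2]    # the other half is quantized
    return kept + tail, to_quantize

def simulate_decoding(n_tokens, window):
    """Feed tokens one at a time and track which stay at full precision."""
    reserved, quantized = [], []
    for position in range(n_tokens):
        reserved.append(position)
        reserved, newly_quantized = compress_step(reserved, window)
        quantized.extend(newly_quantized)
    return reserved, quantized
```

With `window=4`, after 16 tokens the reserved positions are `[0, 4, 8, 10, 12, 13, 14, 15]`: dense near the most recent token and progressively sparser further back, which is the log-like spacing the method relies on.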

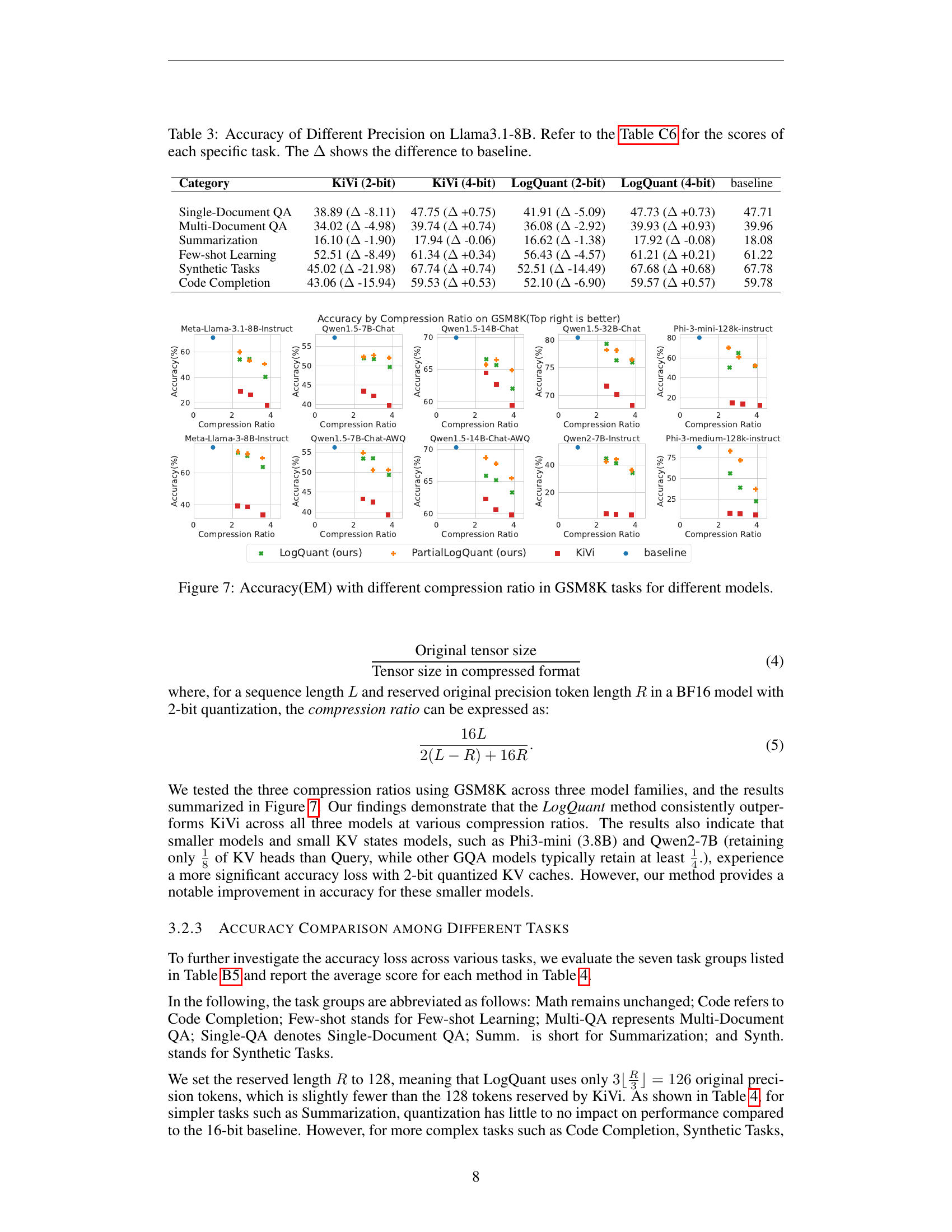

🔼 This figure displays the accuracy (exact match) results on the GSM8K dataset for various language models using different compression ratios. It visualizes the performance trade-off between compression and accuracy for different models and compression strategies, allowing for a comparison of the effectiveness of LogQuant relative to other approaches.

Figure 7: Accuracy(EM) with different compression ratio in GSM8K tasks for different models.

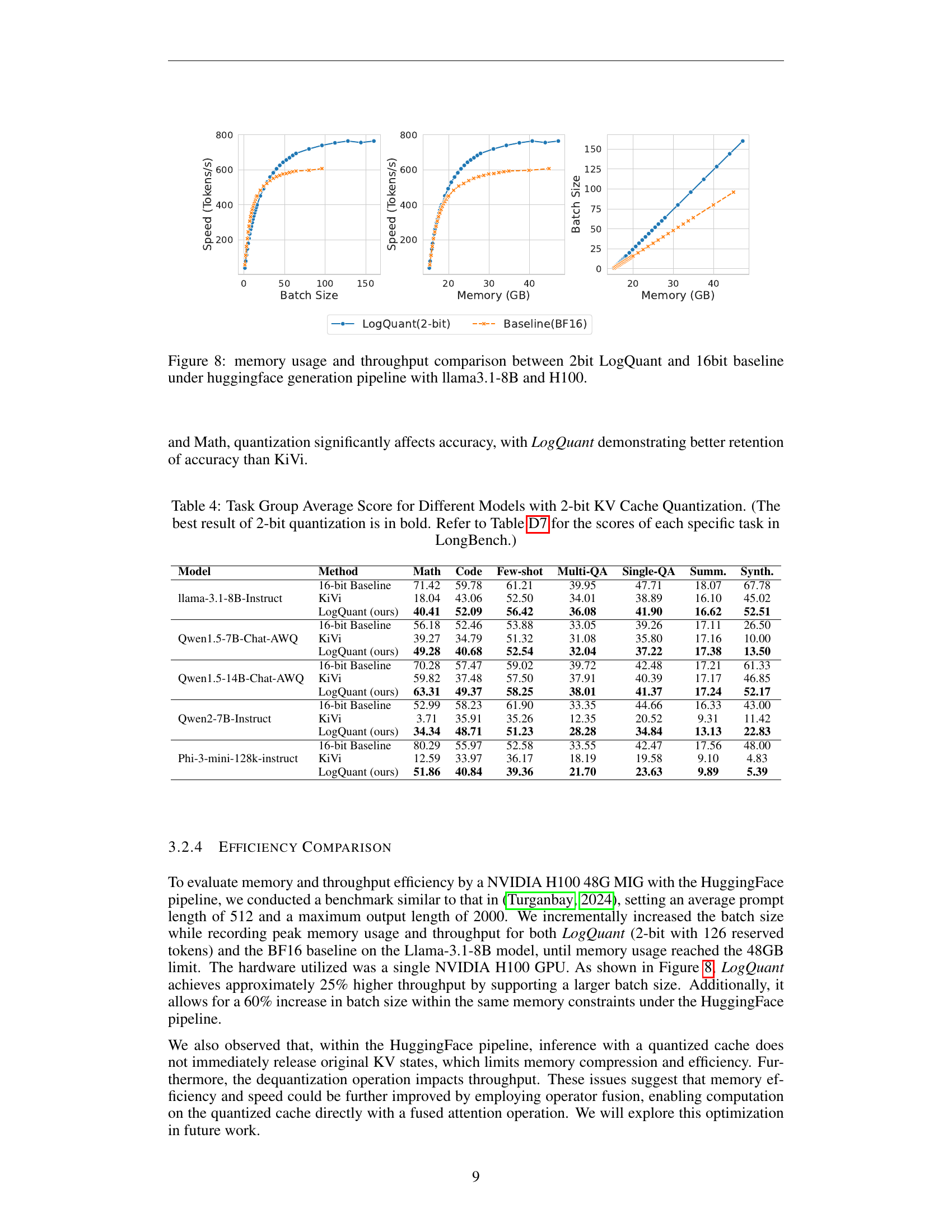

🔼 Figure 8 illustrates a comparison of memory usage and throughput between LogQuant with 2-bit quantization and a 16-bit baseline. The experiment used the Hugging Face generation pipeline, the Llama 3.1-8B model, and an NVIDIA H100 GPU. The graph shows how throughput and memory consumption change as the batch size increases for both methods. This helps to demonstrate the memory efficiency and performance gains achieved by LogQuant.

Figure 8: memory usage and throughput comparison between 2bit LogQuant and 16bit baseline under huggingface generation pipeline with llama3.1-8B and H100.
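A back-of-the-envelope calculation shows where the memory headroom in Figure 8 comes from. The sketch below counts only the raw key and value tensors. The model dimensions (32 layers, 8 KV heads, head dimension 128) are my understanding of the public Llama 3.1 8B configuration and should be treated as an assumption, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(n_tokens, n_layers, n_kv_heads, head_dim, bits, batch_size=1):
    """Raw KV cache size in bytes: keys plus values, no quantization metadata."""
    elements = 2 * n_layers * n_kv_heads * head_dim * n_tokens * batch_size
    return elements * bits // 8

# Assumed configuration for Llama 3.1 8B (grouped-query attention).
LLAMA31_8B = dict(n_layers=32, n_kv_heads=8, head_dim=128)

per_token_16bit = kv_cache_bytes(1, bits=16, **LLAMA31_8B)   # 131072 bytes (128 KiB)
per_token_2bit = kv_cache_bytes(1, bits=2, **LLAMA31_8B)     # 16384 bytes (16 KiB)
```

The raw ratio is 8x, but a real 2-bit cache also stores scales and zero points and keeps the reserved tokens at original precision, so the achievable saving is smaller than this upper bound.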

More on tables

| LogQuant (2-bit) | KiVi (2-bit) | LogQuant (Eviction) | KiVi (Eviction) |
|---|---|---|---|
| 432.50 | 556.10 | 1076.70 | 1612.56 |

🔼 This table compares the L1 error, a measure of difference between the original attention scores and those obtained after applying either eviction or quantization techniques. It shows how much the attention distribution is altered by each method, indicating the potential impact on model accuracy. Lower L1 error values suggest better preservation of the original attention distribution.

Table 2: Comparison of L1 error with original attention for eviction and quantization.

| Category | KiVi (2-bit) | KiVi (4-bit) | LogQuant (2-bit) | LogQuant (4-bit) | Baseline |
|---|---|---|---|---|---|
| Single-Document QA | 38.89 (-8.11) | 47.75 (+0.75) | 41.91 (-5.09) | 47.73 (+0.73) | 47.71 |
| Multi-Document QA | 34.02 (-4.98) | 39.74 (+0.74) | 36.08 (-2.92) | 39.93 (+0.93) | 39.96 |
| Summarization | 16.10 (-1.90) | 17.94 (-0.06) | 16.62 (-1.38) | 17.92 (-0.08) | 18.08 |
| Few-shot Learning | 52.51 (-8.49) | 61.34 (+0.34) | 56.43 (-4.57) | 61.21 (+0.21) | 61.22 |
| Synthetic Tasks | 45.02 (-21.98) | 67.74 (+0.74) | 52.51 (-14.49) | 67.68 (+0.68) | 67.78 |
| Code Completion | 43.06 (-15.94) | 59.53 (+0.53) | 52.10 (-6.90) | 59.57 (+0.57) | 59.78 |

🔼 This table compares the accuracy achieved at different bit precisions (2-bit and 4-bit) for both the KiVi and LogQuant quantization methods against the baseline accuracy (original precision) on the Llama3.1-8B model across task categories. The Δ value in parentheses next to each score indicates the difference from the baseline. For detailed per-task accuracy scores, refer to Table C6.

Table 3: Accuracy of Different Precision on Llama3.1-8B. Refer to Table C6 for the scores of each specific task. The $\Delta$ shows the difference from the baseline.

| Model | Method | Math | Code | Few-shot | Multi-QA | Single-QA | Summ. | Synth. |
|---|---|---|---|---|---|---|---|---|
| llama-3.1-8B-Instruct | 16-bit Baseline | 71.42 | 59.78 | 61.21 | 39.95 | 47.71 | 18.07 | 67.78 |
| | KiVi | 18.04 | 43.06 | 52.50 | 34.01 | 38.89 | 16.10 | 45.02 |
| | LogQuant (ours) | 40.41 | 52.09 | 56.42 | 36.08 | 41.90 | 16.62 | 52.51 |
| Qwen1.5-7B-Chat-AWQ | 16-bit Baseline | 56.18 | 52.46 | 53.88 | 33.05 | 39.26 | 17.11 | 26.50 |
| | KiVi | 39.27 | 34.79 | 51.32 | 31.08 | 35.80 | 17.16 | 10.00 |
| | LogQuant (ours) | 49.28 | 40.68 | 52.54 | 32.04 | 37.22 | 17.38 | 13.50 |
| Qwen1.5-14B-Chat-AWQ | 16-bit Baseline | 70.28 | 57.47 | 59.02 | 39.72 | 42.48 | 17.21 | 61.33 |
| | KiVi | 59.82 | 37.48 | 57.50 | 37.91 | 40.39 | 17.17 | 46.85 |
| | LogQuant (ours) | 63.31 | 49.37 | 58.25 | 38.01 | 41.37 | 17.24 | 52.17 |
| Qwen2-7B-Instruct | 16-bit Baseline | 52.99 | 58.23 | 61.90 | 33.35 | 44.66 | 16.33 | 43.00 |
| | KiVi | 3.71 | 35.91 | 35.26 | 12.35 | 20.52 | 9.31 | 11.42 |
| | LogQuant (ours) | 34.34 | 48.71 | 51.23 | 28.28 | 34.84 | 13.13 | 22.83 |
| Phi-3-mini-128k-instruct | 16-bit Baseline | 80.29 | 55.97 | 52.58 | 33.55 | 42.47 | 17.56 | 48.00 |
| | KiVi | 12.59 | 33.97 | 36.17 | 18.19 | 19.58 | 9.10 | 4.83 |
| | LogQuant (ours) | 51.86 | 40.84 | 39.36 | 21.70 | 23.63 | 9.89 | 5.39 |

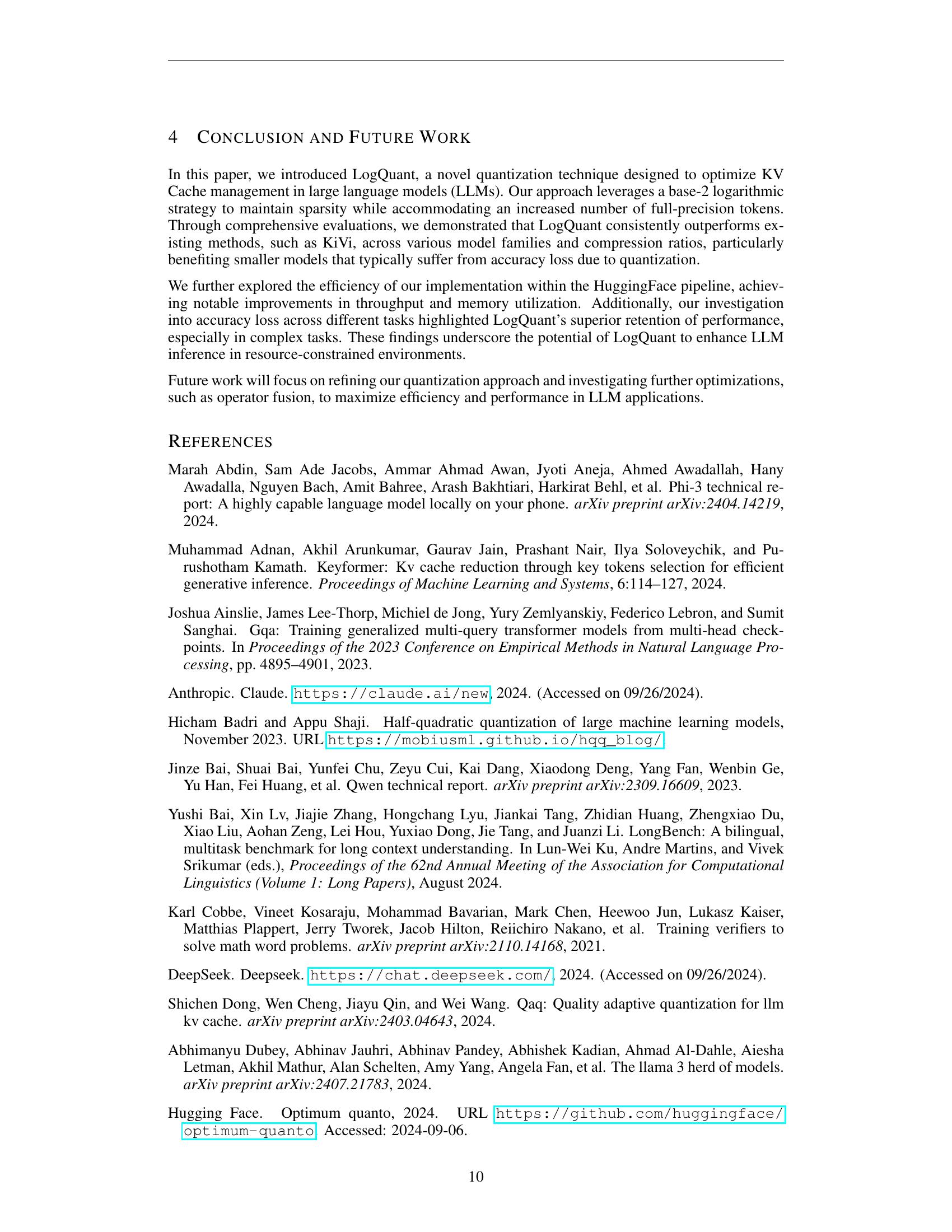

🔼 This table presents the average performance across seven task groups (Math, Code, Few-shot, Multi-QA, Single-QA, Summarization, and Synthetic) for five LLMs (llama-3.1-8B-Instruct, Qwen1.5-7B-Chat-AWQ, Qwen1.5-14B-Chat-AWQ, Qwen2-7B-Instruct, and Phi-3-mini-128k-instruct) using 2-bit quantization for the KV cache. It compares the accuracy of LogQuant against KiVi and a 16-bit baseline. The best result for each model and task using 2-bit quantization is highlighted in bold. Detailed results for each individual task within each group can be found in Table D7.

Table 4: Task Group Average Score for Different Models with 2-bit KV Cache Quantization. (The best result of 2-bit quantization is in bold. Refer to Table D7 for the scores of each specific task in LongBench.)

| Task Group | Dataset | Avg len | Metric | Language | #data |
|---|---|---|---|---|---|
| Math | GSM8K | 240 | Accuracy (EM) | English | 1319 |
| Single-Document QA | NarrativeQA | 18,409 | F1 | English | 200 |
| | Qasper | 3,619 | F1 | English | 200 |
| | MultiFieldQA-en | 4,559 | F1 | English | 150 |
| | MultiFieldQA-zh | 6,701 | F1 | Chinese | 200 |
| Multi-Document QA | HotpotQA | 9,151 | F1 | English | 200 |
| | 2WikiMultihopQA | 4,887 | F1 | English | 200 |
| | MuSiQue | 11,214 | F1 | English | 200 |
| | DuReader | 15,768 | Rouge-L | Chinese | 200 |
| Summarization | GovReport | 8,734 | Rouge-L | English | 200 |
| | QMSum | 10,614 | Rouge-L | English | 200 |
| | MultiNews | 2,113 | Rouge-L | English | 200 |
| | VCSUM | 15,380 | Rouge-L | Chinese | 200 |
| Few-shot Learning | TREC | 5,177 | Accuracy (CLS) | English | 200 |
| | TriviaQA | 8,209 | F1 | English | 200 |
| | SAMSum | 6,258 | Rouge-L | English | 200 |
| | LSHT | 22,337 | Accuracy (CLS) | Chinese | 200 |
| Synthetic Task | PassageCount | 11,141 | Accuracy (EM) | English | 200 |
| | PassageRetrieval-en | 9,289 | Accuracy (EM) | English | 200 |
| | PassageRetrieval-zh | 6,745 | Accuracy (EM) | Chinese | 200 |
| Code Completion | LCC | 1,235 | Edit Sim | Python/C#/Java | 500 |
| | RepoBench-P | 4,206 | Edit Sim | Python/Java | 500 |

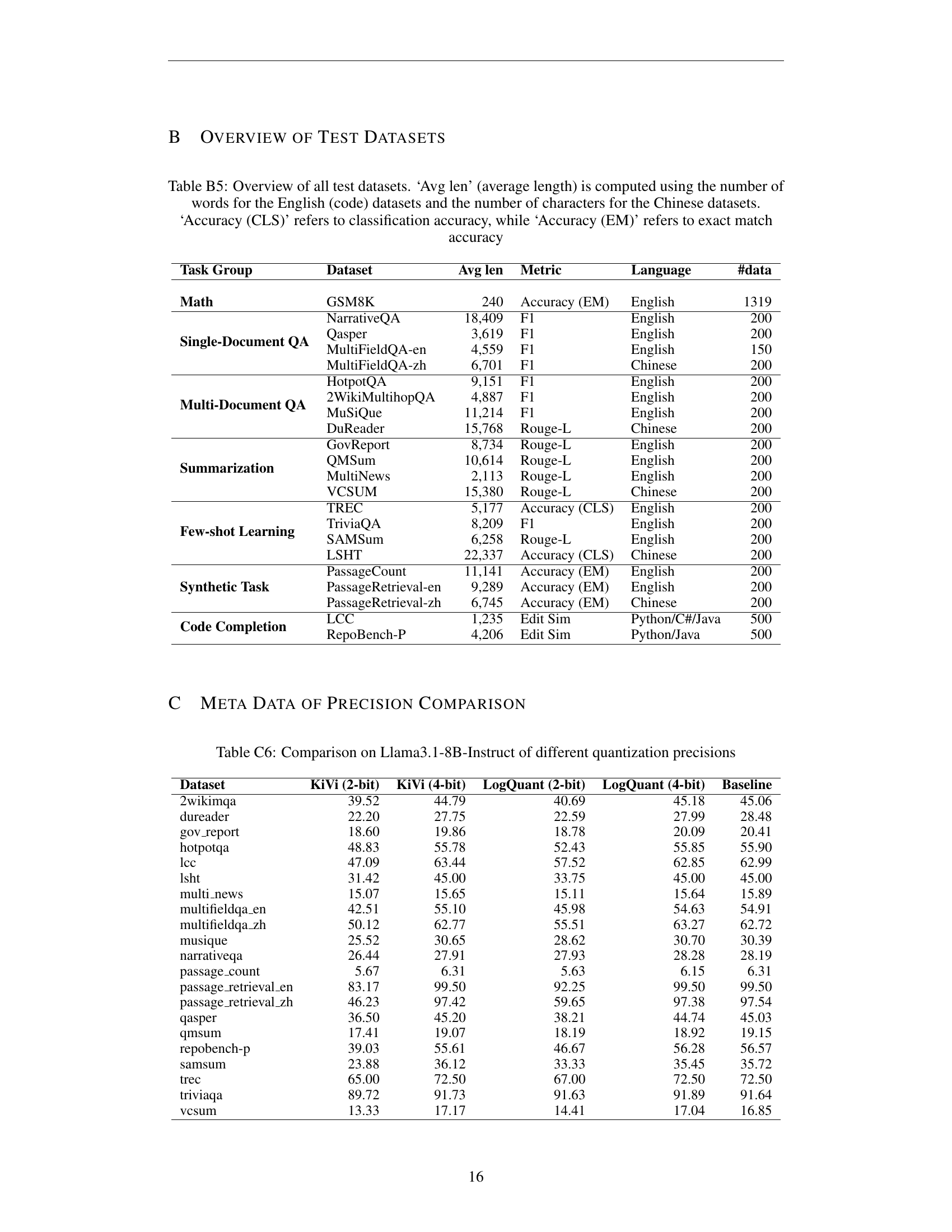

🔼 Table B5 presents a comprehensive overview of the datasets used for evaluating the performance of the proposed model. For each dataset, the table provides the task group it belongs to (e.g., Math, Single-Document QA, etc.), the dataset name, the average length of the data points (calculated as the number of words for English datasets and the number of characters for Chinese datasets), the evaluation metric used (e.g., Accuracy (EM) for Exact Match accuracy, Accuracy (CLS) for classification accuracy, F1 score, Rouge-L score), the language of the dataset (English or Chinese), and the total number of data samples.

Table B5: Overview of all test datasets. ‘Avg len’ (average length) is computed using the number of words for the English (code) datasets and the number of characters for the Chinese datasets. ‘Accuracy (CLS)’ refers to classification accuracy, while ‘Accuracy (EM)’ refers to exact match accuracy

| Dataset | KiVi (2-bit) | KiVi (4-bit) | LogQuant (2-bit) | LogQuant (4-bit) | Baseline |
|---|---|---|---|---|---|
| 2wikimqa | 39.52 | 44.79 | 40.69 | 45.18 | 45.06 |
| dureader | 22.20 | 27.75 | 22.59 | 27.99 | 28.48 |
| gov_report | 18.60 | 19.86 | 18.78 | 20.09 | 20.41 |
| hotpotqa | 48.83 | 55.78 | 52.43 | 55.85 | 55.90 |
| lcc | 47.09 | 63.44 | 57.52 | 62.85 | 62.99 |
| lsht | 31.42 | 45.00 | 33.75 | 45.00 | 45.00 |
| multi_news | 15.07 | 15.65 | 15.11 | 15.64 | 15.89 |
| multifieldqa_en | 42.51 | 55.10 | 45.98 | 54.63 | 54.91 |
| multifieldqa_zh | 50.12 | 62.77 | 55.51 | 63.27 | 62.72 |
| musique | 25.52 | 30.65 | 28.62 | 30.70 | 30.39 |
| narrativeqa | 26.44 | 27.91 | 27.93 | 28.28 | 28.19 |
| passage_count | 5.67 | 6.31 | 5.63 | 6.15 | 6.31 |
| passage_retrieval_en | 83.17 | 99.50 | 92.25 | 99.50 | 99.50 |
| passage_retrieval_zh | 46.23 | 97.42 | 59.65 | 97.38 | 97.54 |
| qasper | 36.50 | 45.20 | 38.21 | 44.74 | 45.03 |
| qmsum | 17.41 | 19.07 | 18.19 | 18.92 | 19.15 |
| repobench-p | 39.03 | 55.61 | 46.67 | 56.28 | 56.57 |
| samsum | 23.88 | 36.12 | 33.33 | 35.45 | 35.72 |
| trec | 65.00 | 72.50 | 67.00 | 72.50 | 72.50 |
| triviaqa | 89.72 | 91.73 | 91.63 | 91.89 | 91.64 |
| vcsum | 13.33 | 17.17 | 14.41 | 17.04 | 16.85 |

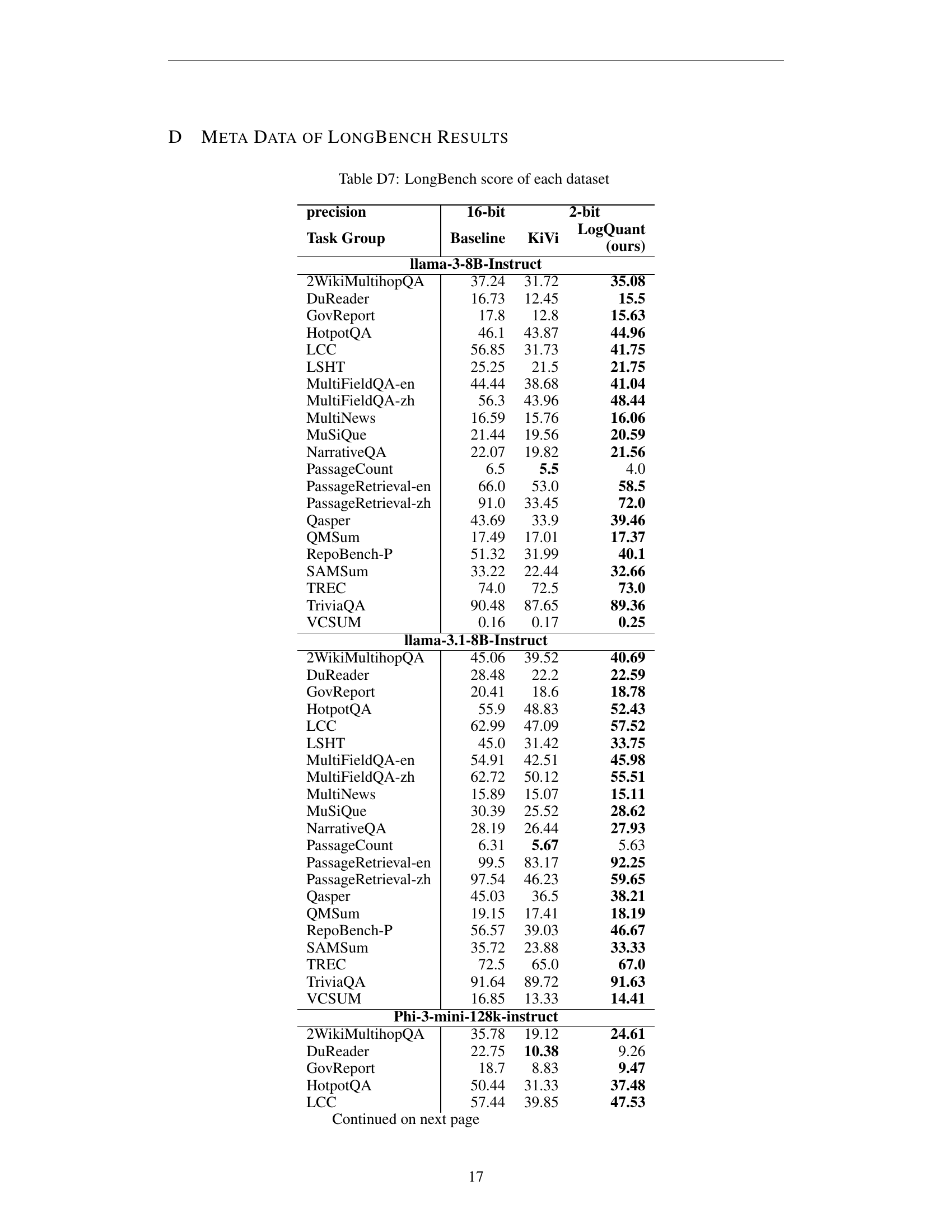

🔼 This table presents a comparison of the accuracy achieved by different quantization methods (KiVi and LogQuant) on the Llama3.1-8B-Instruct model across various datasets. It shows the performance of both methods using 2-bit and 4-bit quantization, comparing them against a baseline of original precision (16-bit). The results highlight the trade-off between compression ratio and accuracy, indicating how much accuracy is lost when using lower precision quantization. The datasets included are diverse, encompassing various natural language tasks.

Table C6: Comparison on Llama3.1-8B-Instruct of different quantization precisions

| Dataset | Baseline (16-bit) | KiVi (2-bit) | LogQuant (2-bit, ours) |
|---|---|---|---|

| llama-3-8B-Instruct | |||

| 2WikiMultihopQA | 37.24 | 31.72 | 35.08 |

| DuReader | 16.73 | 12.45 | 15.5 |

| GovReport | 17.8 | 12.8 | 15.63 |

| HotpotQA | 46.1 | 43.87 | 44.96 |

| LCC | 56.85 | 31.73 | 41.75 |

| LSHT | 25.25 | 21.5 | 21.75 |

| MultiFieldQA-en | 44.44 | 38.68 | 41.04 |

| MultiFieldQA-zh | 56.3 | 43.96 | 48.44 |

| MultiNews | 16.59 | 15.76 | 16.06 |

| MuSiQue | 21.44 | 19.56 | 20.59 |

| NarrativeQA | 22.07 | 19.82 | 21.56 |

| PassageCount | 6.5 | 5.5 | 4.0 |

| PassageRetrieval-en | 66.0 | 53.0 | 58.5 |

| PassageRetrieval-zh | 91.0 | 33.45 | 72.0 |

| Qasper | 43.69 | 33.9 | 39.46 |

| QMSum | 17.49 | 17.01 | 17.37 |

| RepoBench-P | 51.32 | 31.99 | 40.1 |

| SAMSum | 33.22 | 22.44 | 32.66 |

| TREC | 74.0 | 72.5 | 73.0 |

| TriviaQA | 90.48 | 87.65 | 89.36 |

| VCSUM | 0.16 | 0.17 | 0.25 |

| llama-3.1-8B-Instruct | |||

| 2WikiMultihopQA | 45.06 | 39.52 | 40.69 |

| DuReader | 28.48 | 22.2 | 22.59 |

| GovReport | 20.41 | 18.6 | 18.78 |

| HotpotQA | 55.9 | 48.83 | 52.43 |

| LCC | 62.99 | 47.09 | 57.52 |

| LSHT | 45.0 | 31.42 | 33.75 |

| MultiFieldQA-en | 54.91 | 42.51 | 45.98 |

| MultiFieldQA-zh | 62.72 | 50.12 | 55.51 |

| MultiNews | 15.89 | 15.07 | 15.11 |

| MuSiQue | 30.39 | 25.52 | 28.62 |

| NarrativeQA | 28.19 | 26.44 | 27.93 |

| PassageCount | 6.31 | 5.67 | 5.63 |

| PassageRetrieval-en | 99.5 | 83.17 | 92.25 |

| PassageRetrieval-zh | 97.54 | 46.23 | 59.65 |

| Qasper | 45.03 | 36.5 | 38.21 |

| QMSum | 19.15 | 17.41 | 18.19 |

| RepoBench-P | 56.57 | 39.03 | 46.67 |

| SAMSum | 35.72 | 23.88 | 33.33 |

| TREC | 72.5 | 65.0 | 67.0 |

| TriviaQA | 91.64 | 89.72 | 91.63 |

| VCSUM | 16.85 | 13.33 | 14.41 |

| Phi-3-mini-128k-instruct | |||

| 2WikiMultihopQA | 35.78 | 19.12 | 24.61 |

| DuReader | 22.75 | 10.38 | 9.26 |

| GovReport | 18.7 | 8.83 | 9.47 |

| HotpotQA | 50.44 | 31.33 | 37.48 |

| LCC | 57.44 | 39.85 | 47.53 |

| LSHT | 27.25 | 14.25 | 13.75 |

| MultiFieldQA-en | 54.9 | 29.04 | 34.91 |

| MultiFieldQA-zh | 52.09 | 8.16 | 12.32 |

| MultiNews | 15.52 | 12.72 | 13.33 |

| MuSiQue | 25.23 | 11.92 | 15.46 |

| NarrativeQA | 23.28 | 15.34 | 17.37 |

| PassageCount | 3.0 | 2.25 | 4.5 |

| PassageRetrieval-en | 82.5 | 11.0 | 9.68 |

| PassageRetrieval-zh | 58.5 | 1.25 | 2.0 |

| Qasper | 39.6 | 25.78 | 29.91 |

| QMSum | 17.97 | 5.88 | 7.04 |

| RepoBench-P | 54.49 | 28.09 | 34.16 |

| SAMSum | 30.62 | 9.23 | 13.03 |

| TREC | 66.0 | 59.5 | 62.5 |

| TriviaQA | 86.43 | 61.72 | 68.15 |

| VCSUM | 18.04 | 8.97 | 9.74 |

| Qwen1.5-14B-Chat-AWQ | |||

| 2WikiMultihopQA | 44.81 | 44.35 | 44.39 |

| DuReader | 26.02 | 23.34 | 23.28 |

| GovReport | 16.31 | 16.23 | 16.25 |

| HotpotQA | 55.67 | 53.69 | 53.9 |

| LCC | 56.69 | 36.94 | 50.95 |

| LSHT | 37.0 | 32.5 | 34.5 |

| MultiFieldQA-en | 48.36 | 44.75 | 45.68 |

| MultiFieldQA-zh | 60.35 | 58.54 | 59.43 |

| MultiNews | 14.95 | 15.01 | 14.94 |

| MuSiQue | 32.38 | 30.25 | 30.45 |

| NarrativeQA | 22.26 | 21.73 | 22.83 |

| PassageCount | 1.0 | 2.55 | 2.0 |

| PassageRetrieval-en | 94.5 | 71.0 | 80.0 |

| PassageRetrieval-zh | 88.5 | 67.0 | 74.5 |

| Qasper | 38.93 | 36.56 | 37.54 |

| QMSum | 18.16 | 18.03 | 18.13 |

| RepoBench-P | 58.25 | 38.03 | 47.79 |

| SAMSum | 32.95 | 32.69 | 33.34 |

| TREC | 77.5 | 76.5 | 77.5 |

| TriviaQA | 88.63 | 88.32 | 87.66 |

| VCSUM | 19.41 | 19.42 | 19.65 |

| Qwen1.5-7B-Chat | |||

| 2WikiMultihopQA | 32.8 | 31.83 | 32.14 |

| DuReader | 25.96 | 22.64 | 24.06 |

| GovReport | 16.66 | 15.57 | 15.84 |

| HotpotQA | 48.11 | 47.37 | 48.91 |

| LCC | 58.17 | 45.87 | 53.77 |

| LSHT | 28.0 | 24.0 | 24.5 |

| MultiFieldQA-en | 47.14 | 42.26 | 43.72 |

| MultiFieldQA-zh | 53.4 | 50.18 | 51.68 |

| MultiNews | 15.02 | 15.0 | 14.92 |

| MuSiQue | 26.74 | 25.88 | 27.09 |

| NarrativeQA | 20.06 | 19.02 | 20.06 |

| PassageCount | 1.0 | 0.5 | 0.0 |

| PassageRetrieval-en | 40.5 | 20.0 | 24.0 |

| PassageRetrieval-zh | 59.0 | 18.25 | 29.0 |

| Qasper | 39.84 | 37.19 | 37.28 |

| QMSum | 18.25 | 17.59 | 18.18 |

| RepoBench-P | 45.46 | 26.33 | 30.76 |

| SAMSum | 33.01 | 29.7 | 33.31 |

| TREC | 70.5 | 69.5 | 67.5 |

| TriviaQA | 86.76 | 86.51 | 87.37 |

| VCSUM | 17.98 | 19.15 | 19.34 |

| Qwen1.5-7B-Chat-AWQ | |||

| 2WikiMultihopQA | 32.43 | 30.82 | 33.46 |

| DuReader | 25.84 | 23.1 | 24.36 |

| GovReport | 16.98 | 16.31 | 16.65 |

| HotpotQA | 47.77 | 47.17 | 46.0 |

| LCC | 57.98 | 44.56 | 52.33 |

| LSHT | 29.0 | 25.5 | 27.0 |

| MultiFieldQA-en | 46.72 | 42.87 | 45.85 |

| MultiFieldQA-zh | 50.97 | 45.51 | 46.73 |

| MultiNews | 14.97 | 15.04 | 15.16 |

| MuSiQue | 26.18 | 23.23 | 24.36 |

| NarrativeQA | 20.93 | 19.58 | 20.14 |

| PassageCount | 0.5 | 0.0 | 0.0 |

| PassageRetrieval-en | 30.5 | 16.0 | 18.5 |

| PassageRetrieval-zh | 48.5 | 14.0 | 22.0 |

| Qasper | 38.45 | 35.27 | 36.16 |

| QMSum | 17.85 | 17.34 | 17.77 |

| RepoBench-P | 46.95 | 25.02 | 29.03 |

| SAMSum | 31.98 | 28.3 | 32.06 |

| TREC | 67.0 | 65.0 | 63.5 |

| TriviaQA | 87.56 | 86.48 | 87.61 |

| VCSUM | 18.66 | 19.95 | 19.96 |

| Qwen2-7B-Instruct | |||

| 2WikiMultihopQA | 44.15 | 11.33 | 40.12 |

| DuReader | 19.22 | 13.08 | 15.01 |

| GovReport | 18.09 | 10.82 | 16.07 |

| HotpotQA | 44.3 | 17.39 | 39.92 |

| LCC | 57.72 | 36.63 | 51.46 |

| LSHT | 44.0 | 23.0 | 26.25 |

| MultiFieldQA-en | 46.89 | 21.97 | 36.42 |

| MultiFieldQA-zh | 61.48 | 33.67 | 47.57 |

| MultiNews | 15.58 | 8.53 | 13.6 |

| MuSiQue | 25.71 | 7.58 | 18.07 |

| NarrativeQA | 24.43 | 5.29 | 18.43 |

| PassageCount | 5.0 | 5.5 | 5.5 |

| PassageRetrieval-en | 69.0 | 19.25 | 33.5 |

| PassageRetrieval-zh | 55.0 | 9.5 | 29.5 |

| Qasper | 45.82 | 21.16 | 36.94 |

| QMSum | 17.92 | 9.08 | 12.25 |

| RepoBench-P | 58.74 | 35.18 | 45.95 |

| SAMSum | 35.94 | 18.23 | 28.03 |

| TREC | 78.0 | 58.25 | 68.0 |

| TriviaQA | 89.66 | 41.56 | 82.63 |

| VCSUM | 13.74 | 8.82 | 10.58 |

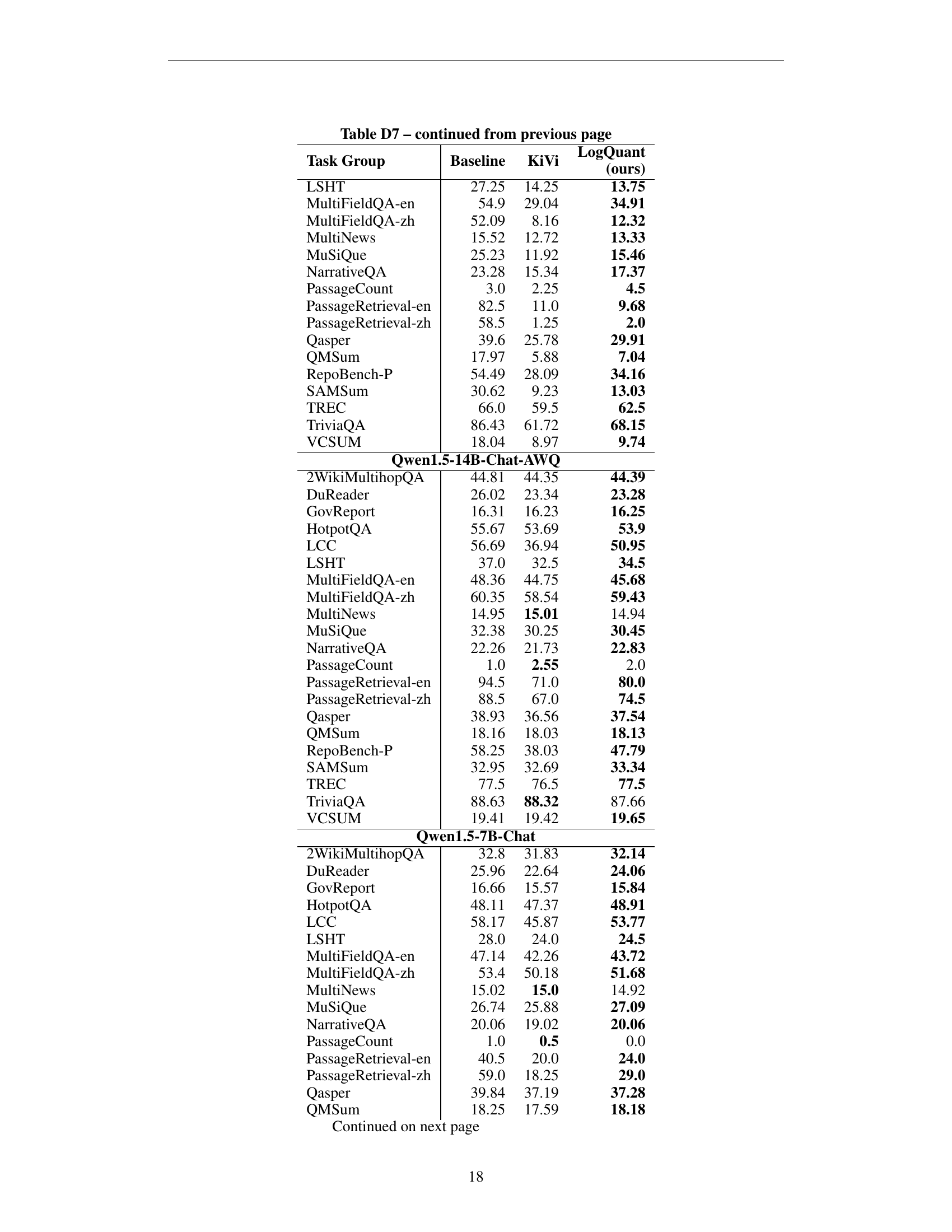

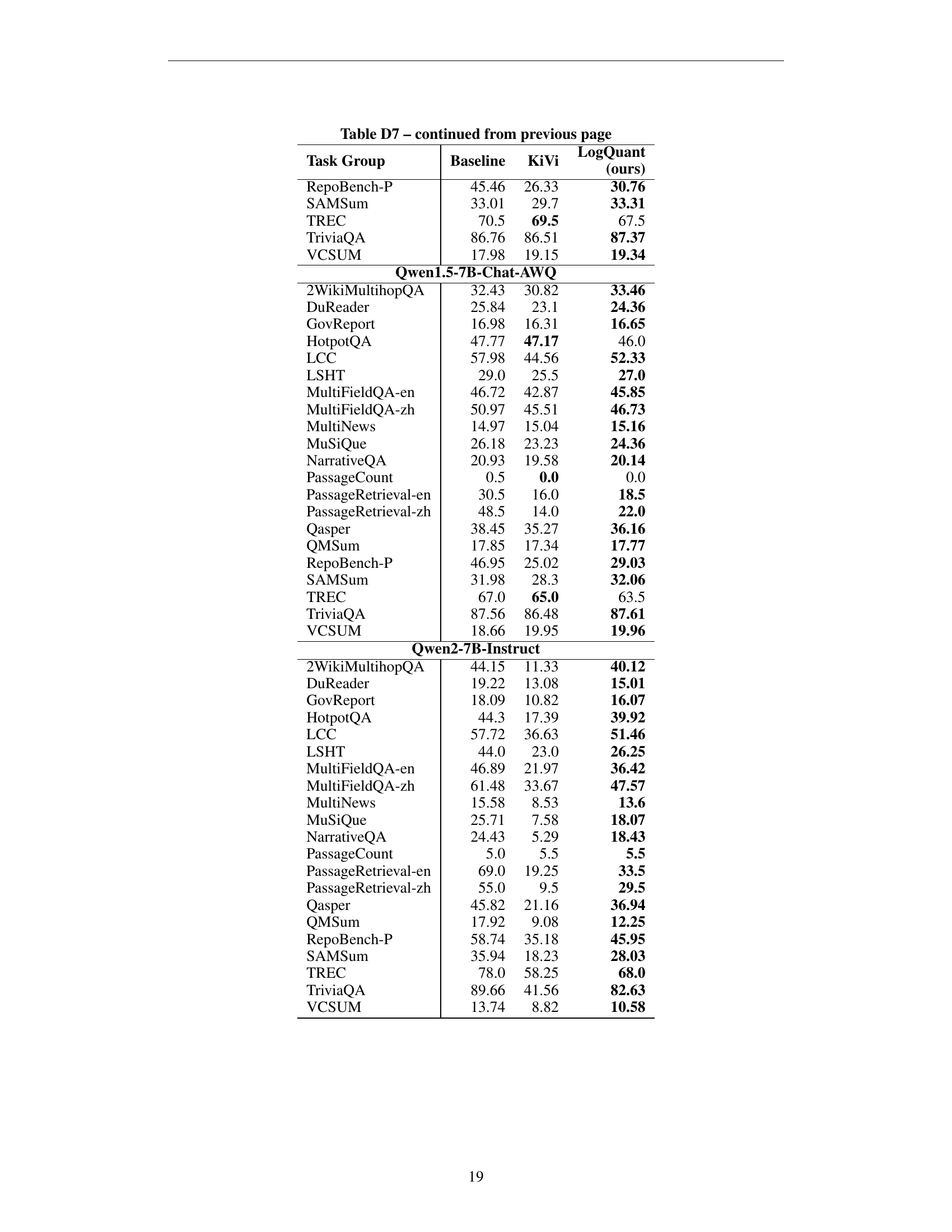

🔼 This table presents the performance of seven models (llama-3-8B-Instruct, llama-3.1-8B-Instruct, Phi-3-mini-128k-instruct, Qwen1.5-14B-Chat-AWQ, Qwen1.5-7B-Chat, Qwen1.5-7B-Chat-AWQ, and Qwen2-7B-Instruct) on each dataset of the LongBench benchmark, spanning Single- and Multi-Document QA, Summarization, Few-shot Learning, Synthetic Tasks, and Code Completion. It shows the score achieved with the 16-bit baseline, KiVi’s 2-bit quantization, and LogQuant’s 2-bit quantization for each model on each dataset. This allows a comparison of the accuracy loss introduced by each quantization technique against the full-precision baseline.

Table D7: LongBench score of each dataset

Full paper#