TL;DR#

Diffusion models are used to generate images/videos by adding noise and iteratively removing it. Conditional diffusion models allow to steer the generation based on conditions (e.g. text prompts). Classifier-Free Guidance (CFG) is a technique to train conditional diffusion models but fine-tuning diffusion models with CFG can degrade the quality of the unconditional noise prediction, which then lowers the conditional image generation quality.

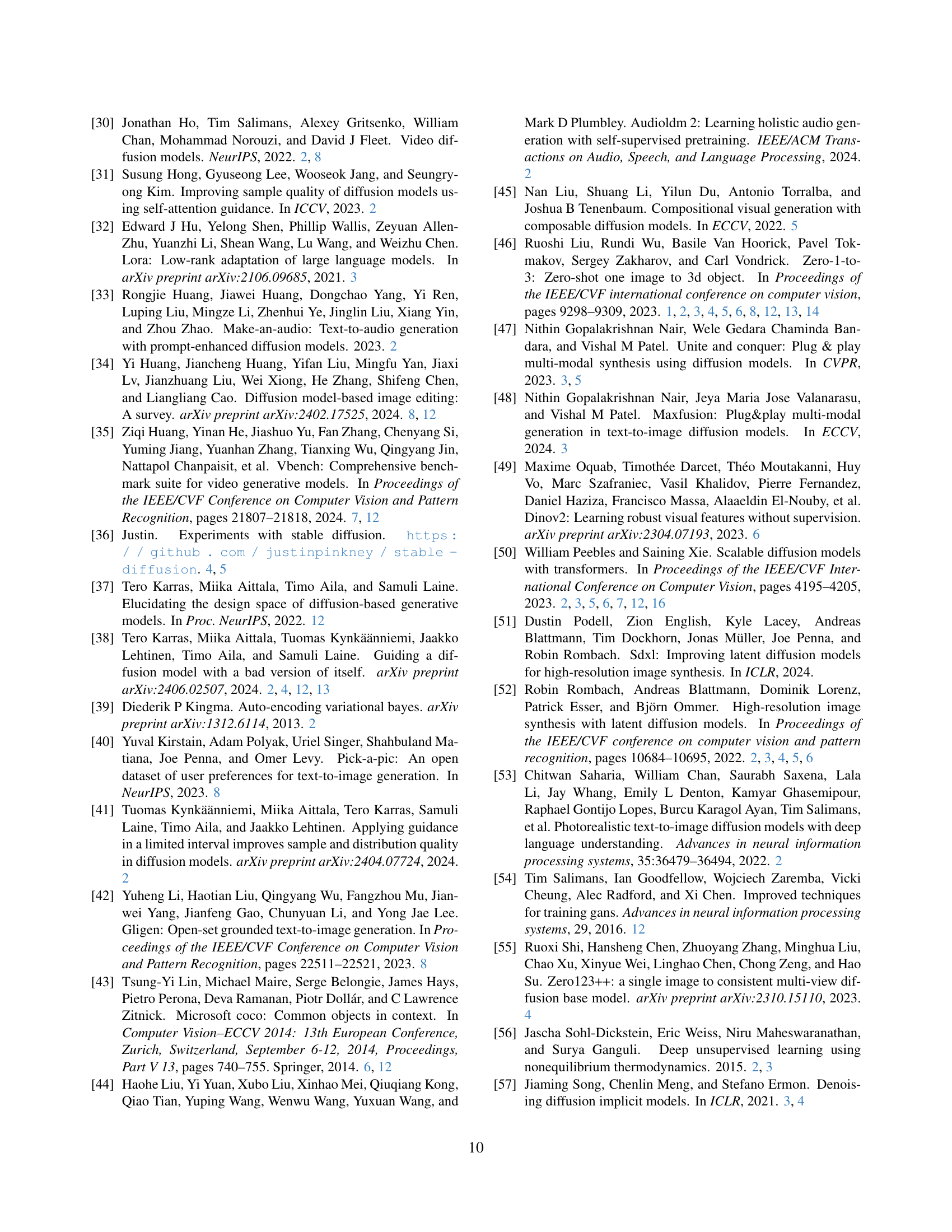

This paper shows that replacing the unconditional noise prediction from a fine-tuned diffusion model with that of a base model improves the generation quality. Surprisingly, the base model does not have to be the one the fine-tuned model branched out from but can be any diffusion model with high generation quality. The approach can be applied to image and video generation tasks based on CFG such as Zero-1-to-3, Versatile Diffusion, and DynamiCrafter.

Key Takeaways#

Why does it matter?#

This paper is important because it tackles a key problem in conditional diffusion models, improving generation quality and offering a training-free solution. It is highly relevant to the diffusion model research and opens new avenues for enhancing conditional image and video generation.

Visual Insights#

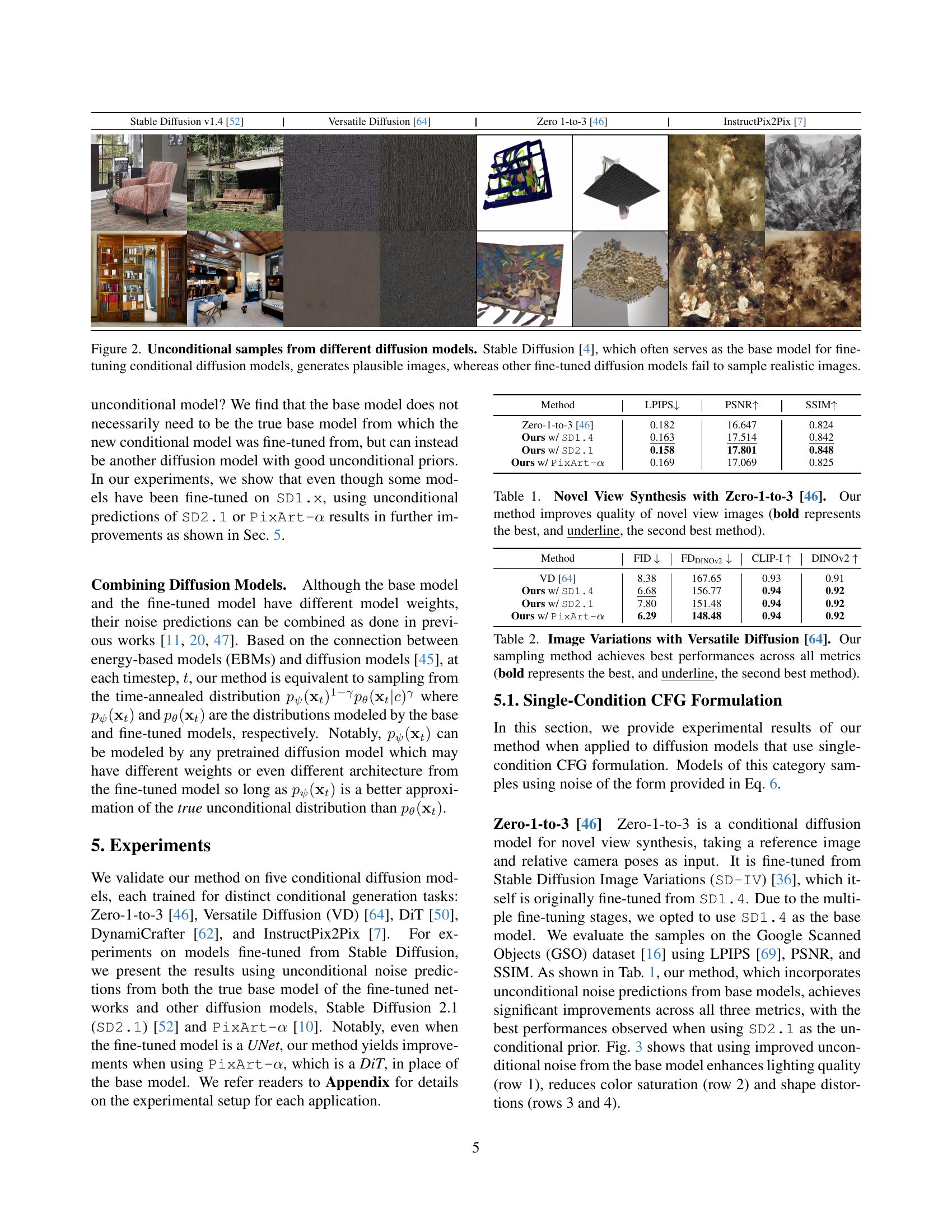

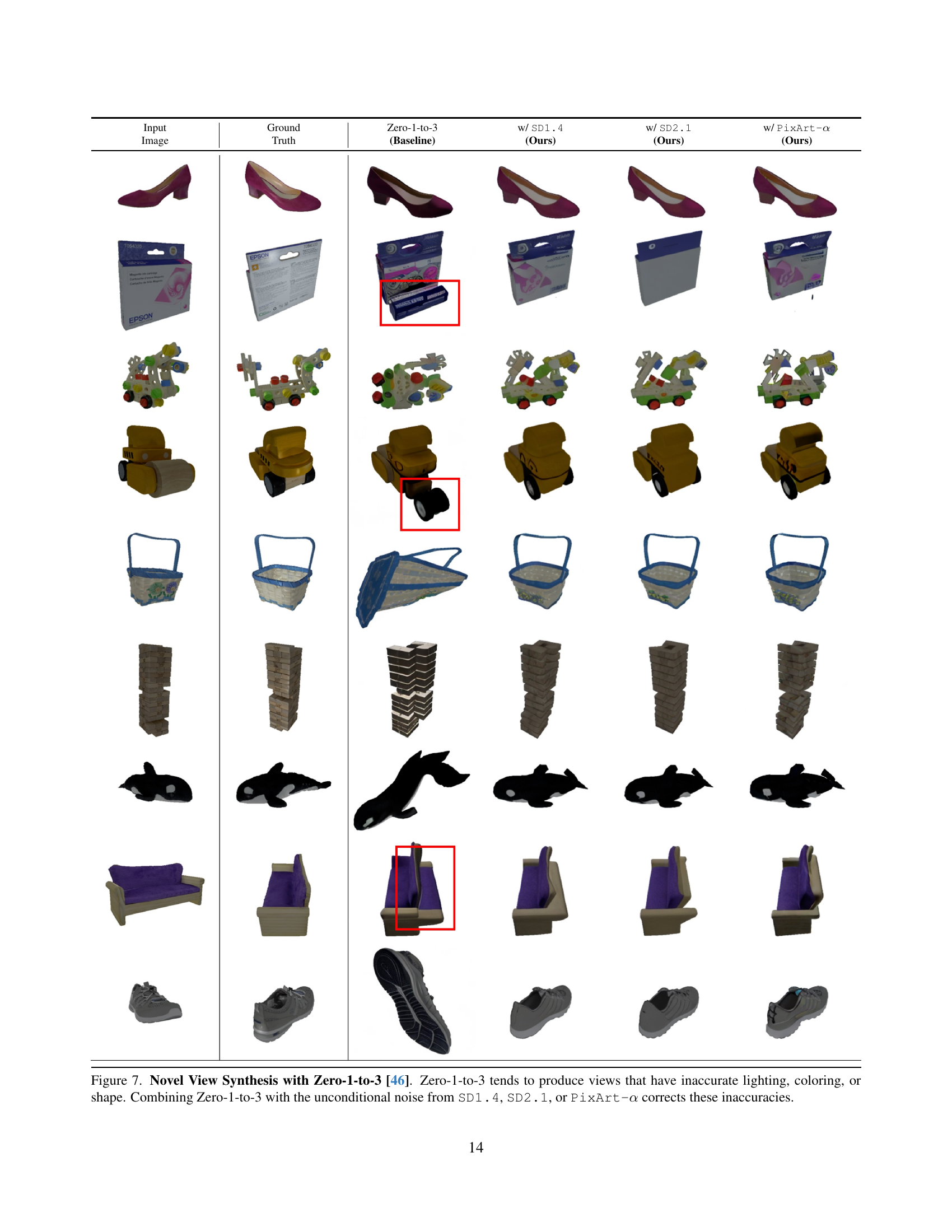

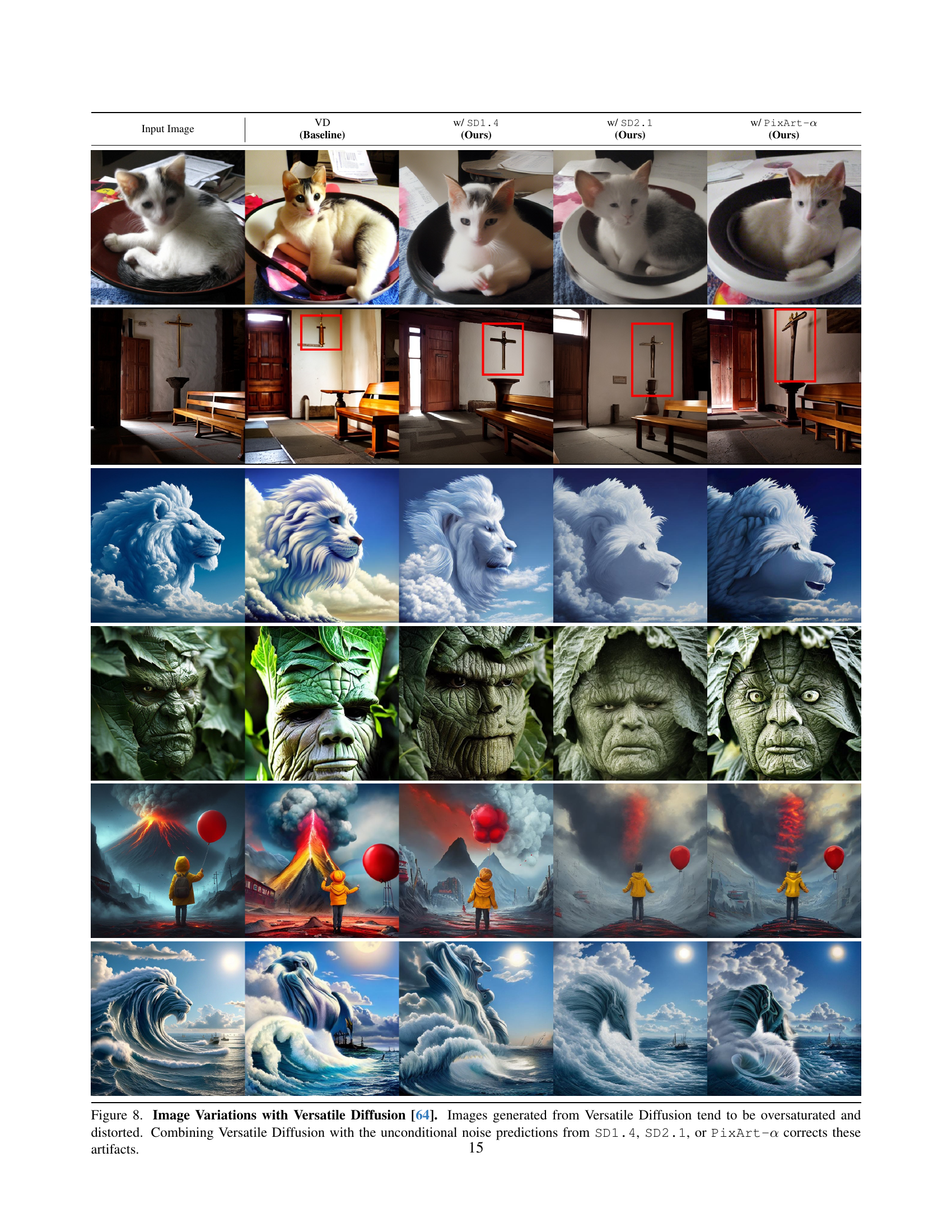

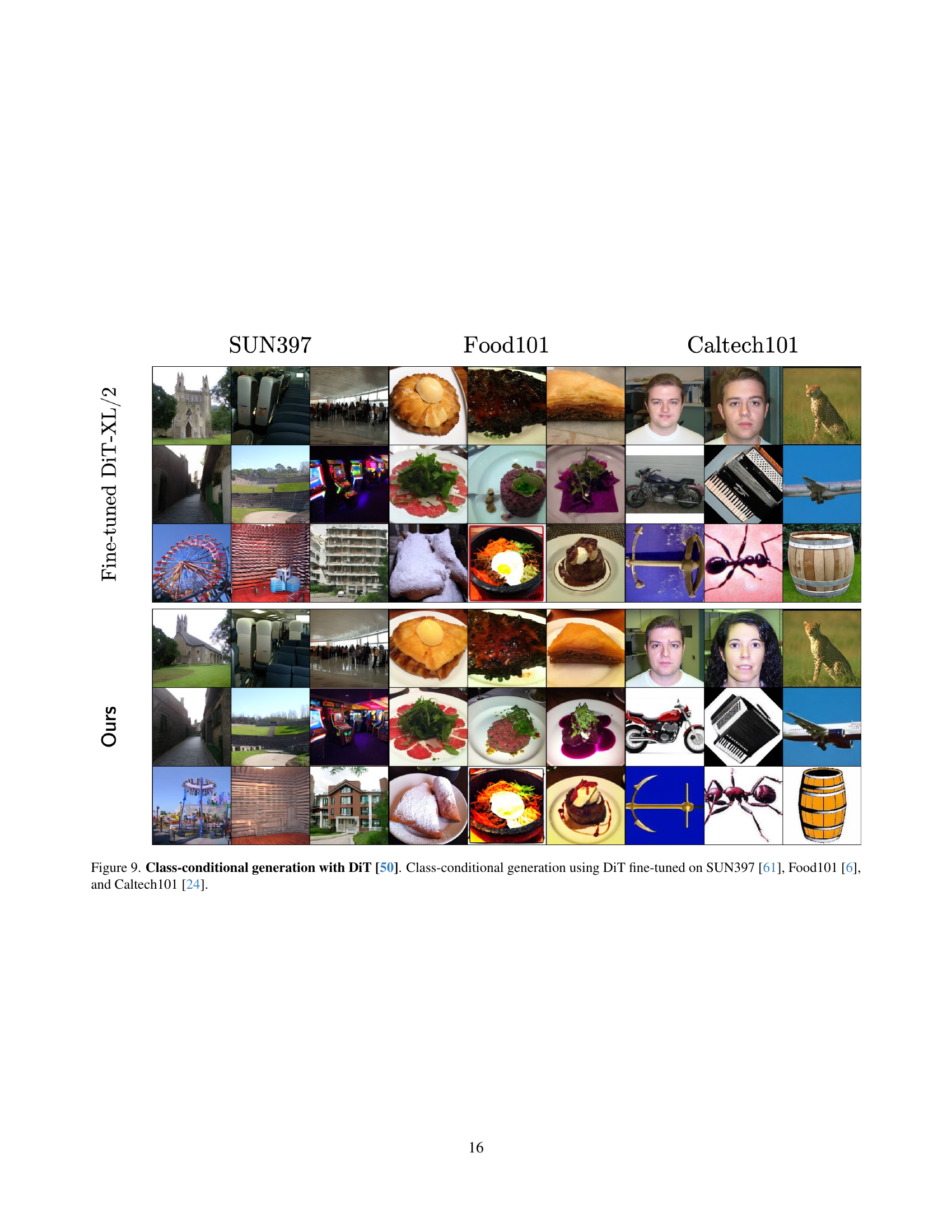

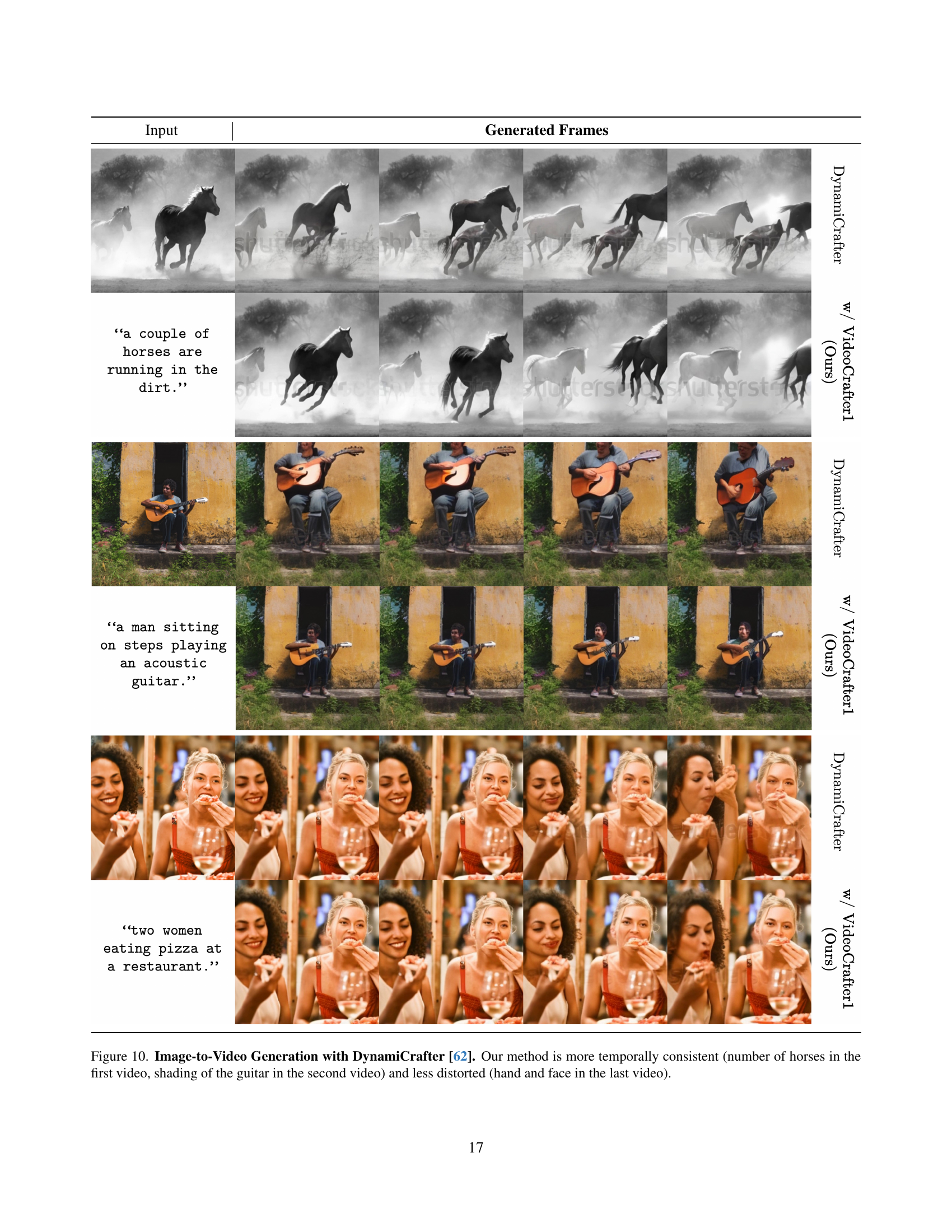

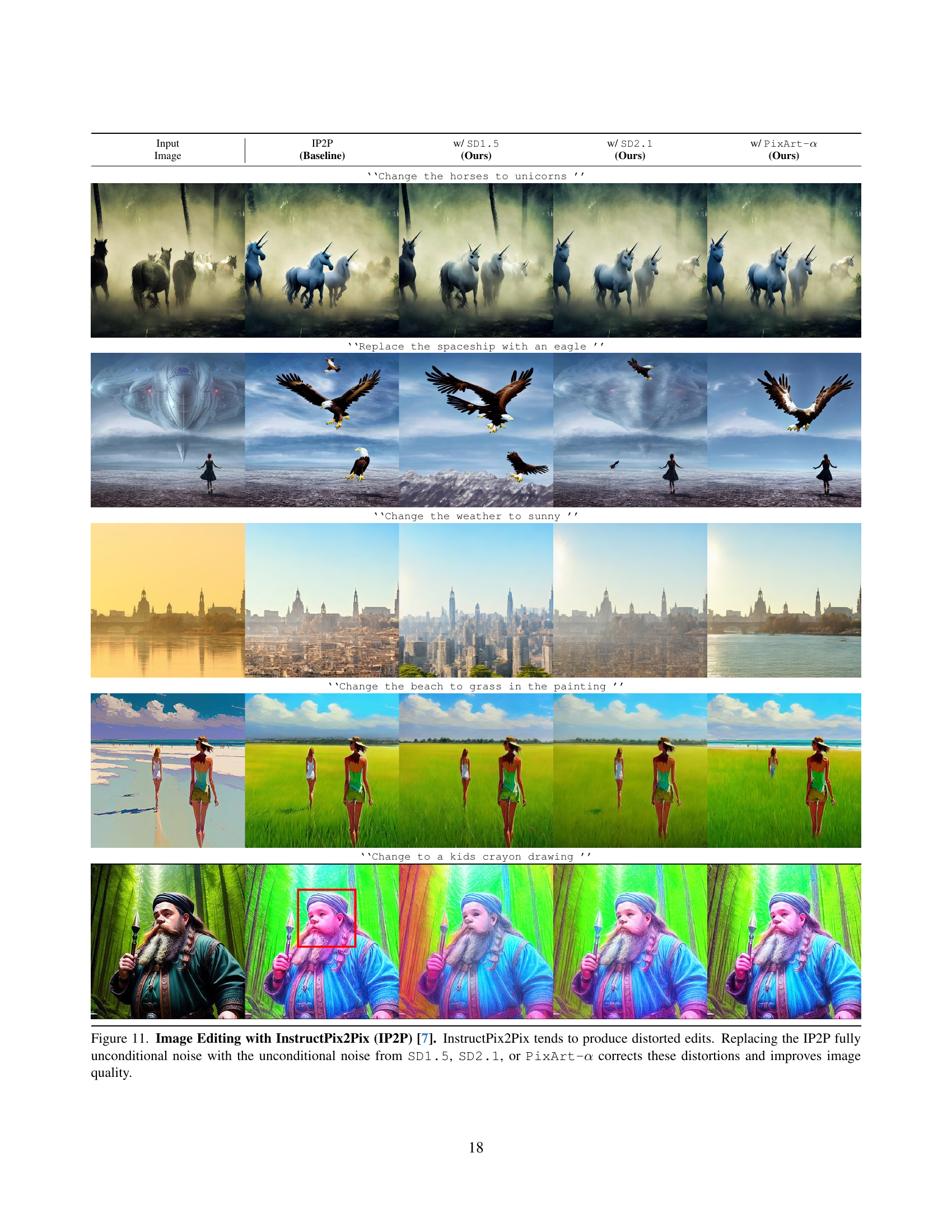

🔼 Figure 1 showcases how leveraging a strong unconditional prior improves the conditional generation capabilities of fine-tuned diffusion models. Fine-tuning often diminishes the quality of a model’s unconditional generation, which negatively impacts performance when using techniques like Classifier-Free Guidance (CFG). This figure demonstrates that incorporating a richer unconditional prior from a separate, well-trained diffusion model significantly enhances the results of conditional generation tasks. Examples across various models (Zero-1-to-3, Versatile Diffusion, InstructPix2Pix, and DynamiCrafter) illustrate this improvement.

read the caption

Figure 1: Unconditional Priors Matter in CFG-Based Conditional Generation. Fine-tuned conditional diffusion models often show drastic degradation in their unconditional priors, adversely affecting conditional generation when using techniques such as CFG [28]. We demonstrate that leveraging a diffusion model with a richer unconditional prior and combining its unconditional noise prediction with the conditional noise prediction from the fine-tuned model can lead to substantial improvements in conditional generation quality. This is demonstrated across diverse conditional diffusion models including Zero-1-to-3 [46], Versatile Diffusion [64], InstructPix2Pix [7], and DynamiCrafter [62].

Full paper#