TL;DR#

LLM agents, powered by large language models, are intelligent entities that can perceive environments, reason about goals, and execute actions. Unlike traditional AI, they actively engage through continuous learning & adaptation, marking a technological leap & reimagining human-machine relationships. However, challenges remain to construct high-quality multi-agent system. Therefore, existing research can be fragmented and lack of organized taxonomy, while others examine components separately.

To address these challenges, this survey systematically deconstructs LLM agent systems through construction, collaboration, and evolution. It offers a comprehensive perspective on how agents are built, interact, and evolve, while addressing evaluation, tools, real-world challenges, and applications. The study highlights fundamental connections between agent design principles and emergent behaviors, providing a unified architectural view and identifying promising research directions. The collection is available in github.

Key Takeaways#

Why does it matter?#

This survey is important for researchers to navigate the rapidly evolving landscape of LLM agents. It provides a structured taxonomy for understanding agent architectures, identifies key challenges, and suggests directions for future research. The survey could inspire researchers to develop more robust, reliable, and ethically aligned agent systems.

Visual Insights#

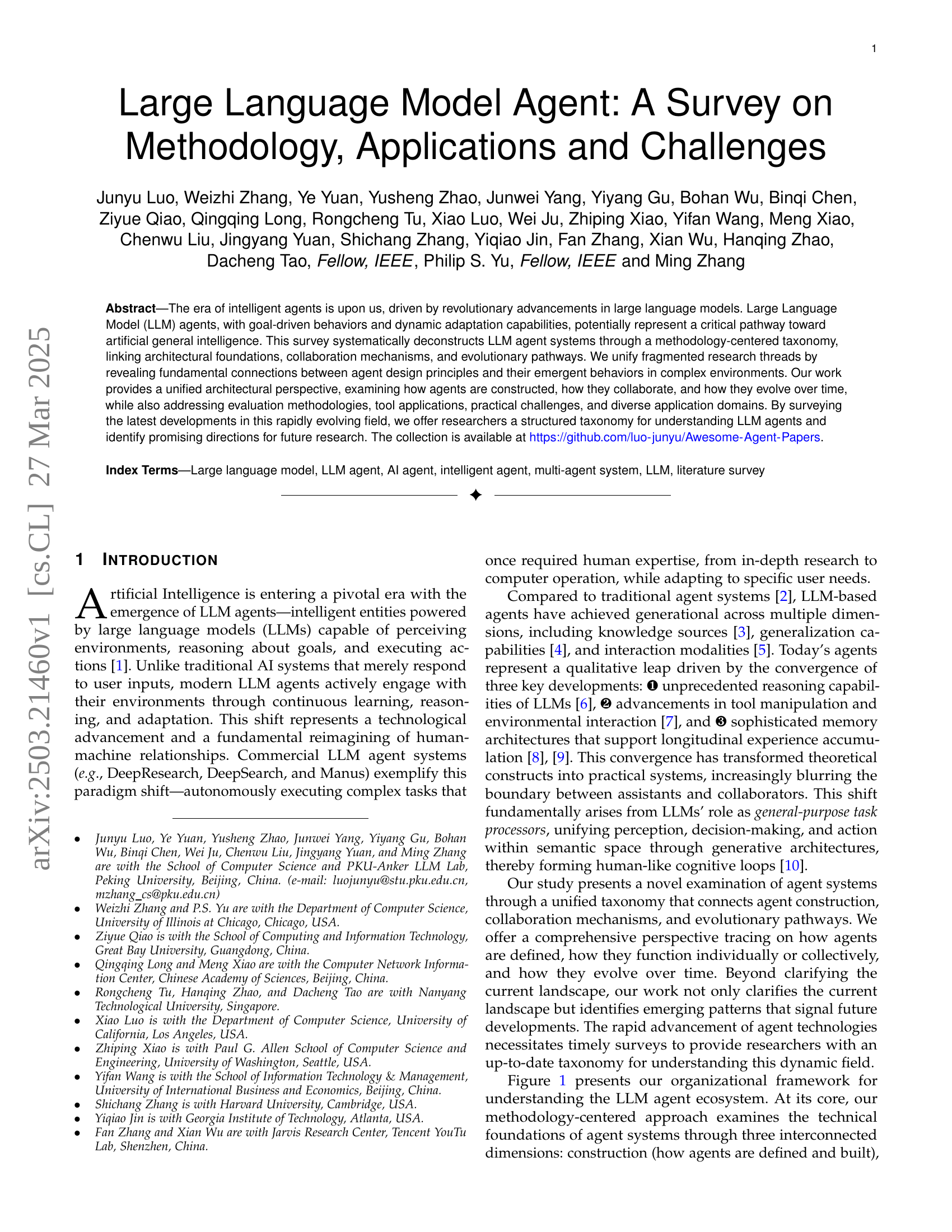

🔼 This figure presents a comprehensive overview of the Large Language Model (LLM) agent ecosystem. It’s structured around four interconnected dimensions: Agent Methodology (construction, collaboration, and evolution), Evaluation and Tools (benchmarks, assessment frameworks, development tools), Real-World Issues (security, privacy, and social impact), and Applications (diverse domains of LLM agent deployment). This framework helps in understanding the entire lifecycle of modern LLM-based agent systems, from their initial design and development to their real-world application and the challenges they present.

read the caption

Figure 1: An overview of the LLM agent ecosystem organized into four interconnected dimensions: ❶ Agent Methodology, covering the foundational aspects of construction, collaboration, and evolution; ❷ Evaluation and Tools, presenting benchmarks, assessment frameworks, and development tools; ❸ Real-World Issues, addressing critical concerns around security, privacy, and social impact; and ❹ Applications, highlighting diverse domains where LLM agents are being deployed. We provide a structured framework for understanding the complete lifecycle of modern LLM-based agent systems.

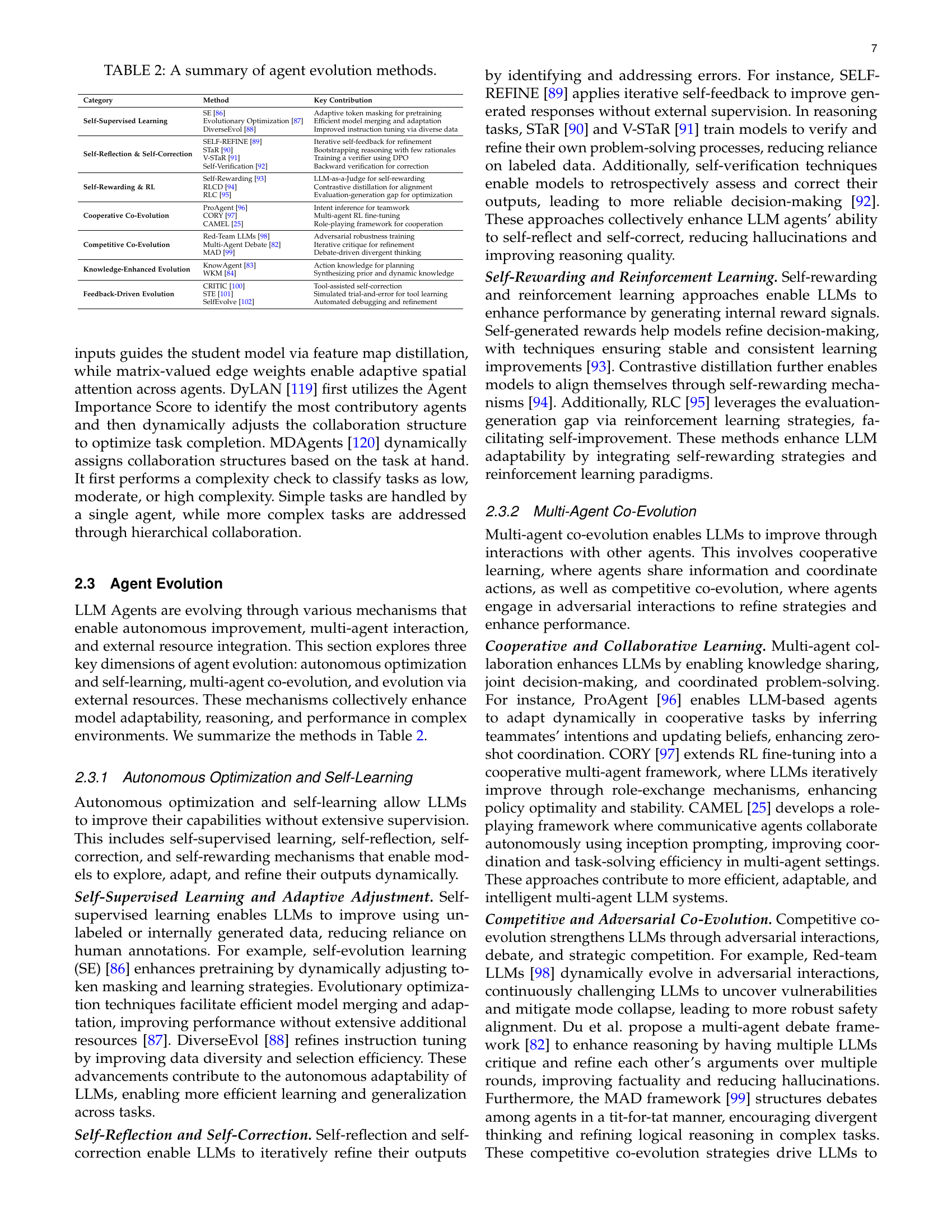

| Category | Method | Key Contribution |

| Centralized Control | Coscientist [73] | Human-centralized experimental control |

| LLM-Blender [74] | Cross-attention response fusion | |

| MetaGPT [27] | Role-specialized workflow management | |

| AutoAct [75] | Triple-agent task differentiation | |

| Meta-Prompting [76] | Meta-prompt task decomposition | |

| WJudge [77] | Weak-discriminator validation | |

| Decentralized Collaboration | MedAgents [78] | Expert voting consensus |

| ReConcile [79] | Multi-agent answer refinement | |

| METAL [115] | Domain-specific revision agents | |

| DS-Agent [116] | Database-driven revision | |

| MAD [80] | Structured anti-degeneration protocols | |

| MADR [81] | Verifiable fact-checking critiques | |

| MDebate [82] | Stubborn-collaborative consensus | |

| AutoGen [26] | Group-chat iterative debates | |

| Hybrid Architecture | CAMEL [25] | Grouped role-play coordination |

| AFlow [29] | Three-tier hybrid planning | |

| EoT [117] | Multi-topology collaboration patterns | |

| DiscoGraph [118] | Pose-aware distillation | |

| DyLAN [119] | Importance-aware topology | |

| MDAgents [120] | Complexity-aware routing |

🔼 This table categorizes and summarizes various Large Language Model (LLM) agent collaboration methods, contrasting centralized control, decentralized collaboration, and hybrid approaches. Each method is listed with a key contribution, illustrating the different ways LLM agents can interact and work together to achieve a shared goal.

read the caption

TABLE I: A summary of agent collaboration methods.

In-depth insights#

Agent Lifecycle#

While the provided paper doesn’t explicitly use the term ‘Agent Lifecycle,’ its content allows us to infer the key stages. The construction phase defines the agent’s architecture, integrating memory, planning, and action execution. Collaboration dictates interaction with other agents or humans, using centralized, decentralized, or hybrid approaches. Finally, evolution focuses on adaptation through self-learning, multi-agent co-evolution, or external knowledge incorporation. This lifecycle underscores the dynamic nature of LLM agents, moving beyond static systems to entities that learn, adapt, and improve over time. Evaluation at every stage is critical.

RAG as Memory#

RAG (Retrieval-Augmented Generation) as memory enhances LLMs by integrating external knowledge, overcoming training data limitations. This paradigm encompasses static knowledge grounding via text corpora or knowledge graphs, interactive retrieval that uses agent dialogues for external queries, and reasoning-integrated retrieval, exemplified by interleaving step-by-step reasoning with dynamic knowledge acquisition. Advanced methods like KG-RAR construct task-specific subgraphs, and DeepRAG balances parametric knowledge with external evidence. These architectures maintain contextual relevance and are critical for scalable memory systems.

Multi-Agent Collab#

Multi-agent collaboration enables LLMs to extend problem-solving beyond individual reasoning. Effective collaboration leverages distributed intelligence, coordinates actions, and refines decisions through multi-agent interactions. Centralized architectures employ a hierarchical coordination mechanism where a central controller organizes agent activities through task allocation and decision integration, while other sub-agents can only communicate with the controller. In decentralized architectures, collaboration enables direct node-to-node interaction through self-organizing protocols. Finally, hybrid architectures strategically combine centralized coordination and decentralized collaboration to balance controllability with flexibility and adapt to heterogeneous task requirements.

Dataset Genesis#

Dataset genesis in LLM agent research focuses on how datasets are created and utilized. This involves exploring methodologies for constructing datasets that effectively train and evaluate LLM agents. A core aspect is the creation of diverse datasets covering various tasks and environments. The method involves the creation of new datasets by multiple agents. Constructing datasets with high-quality labels and annotations is a key challenge, which involves the creation of custom tools. Efficient dataset management practices are crucial to ensure scalability and accessibility. These methods are employed to create realistic testing scenarios to enhance agent robustness. Datasets are also actively curated to improve agent adaptability. Data collection and synthesis is also crucial, to have higher fidelity and trustworthiness for the agents in use.

LLM Privacy#

LLM Privacy is a pressing concern. The inherent memory capabilities of LLMs, while enabling sophisticated interactions, also create vulnerabilities. Data breaches can expose sensitive information learned during training or interaction. Mitigating strategies are vital, focusing on techniques like differential privacy to inject noise during training, thereby obscuring individual data points. Another approach is knowledge distillation, which transfers learned representations from a private model to a public one, minimizing the risk of memorization. Moreover, strict data governance policies and user controls are essential to manage access and retention. The goal is to establish a balance between functionality and responsible handling of private data.

More visual insights#

More on figures

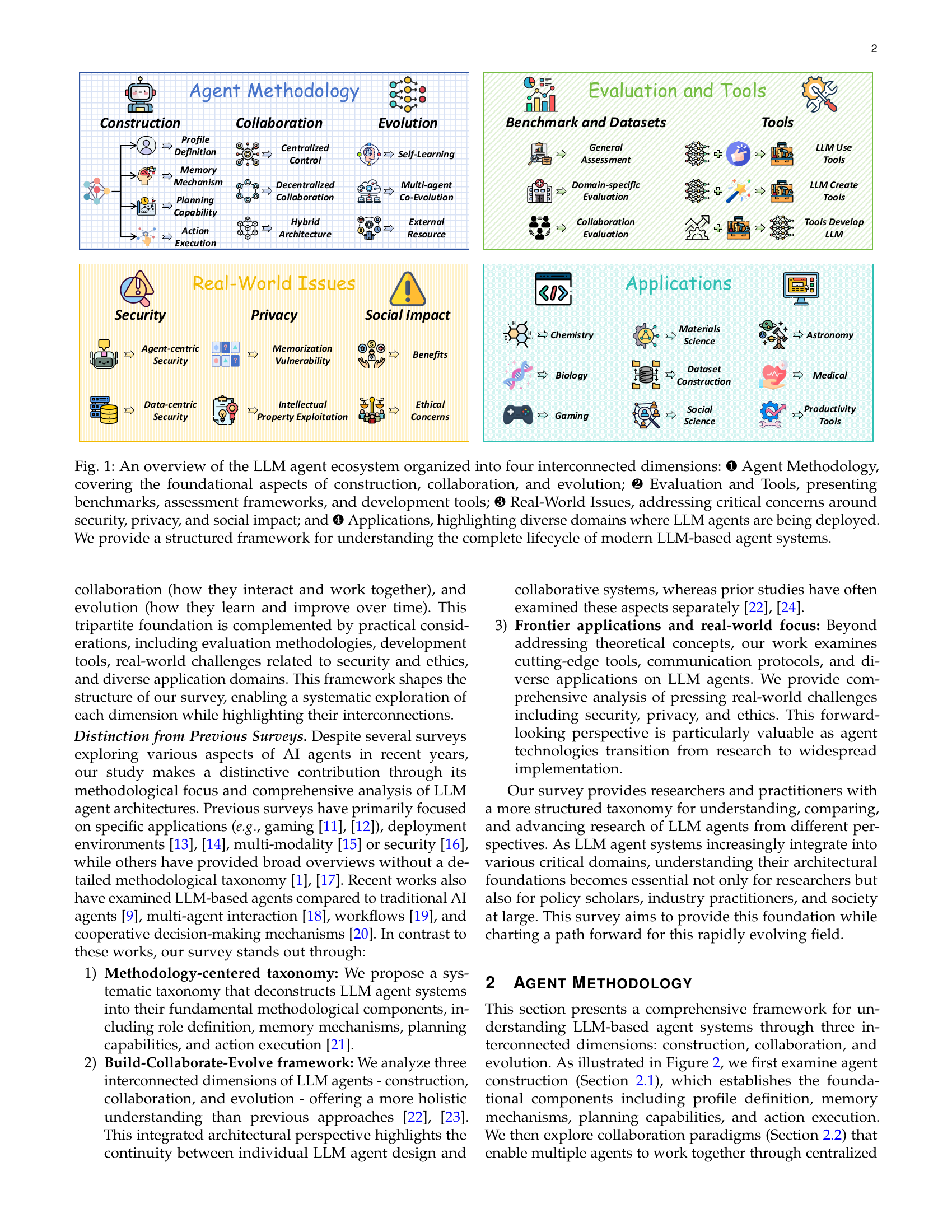

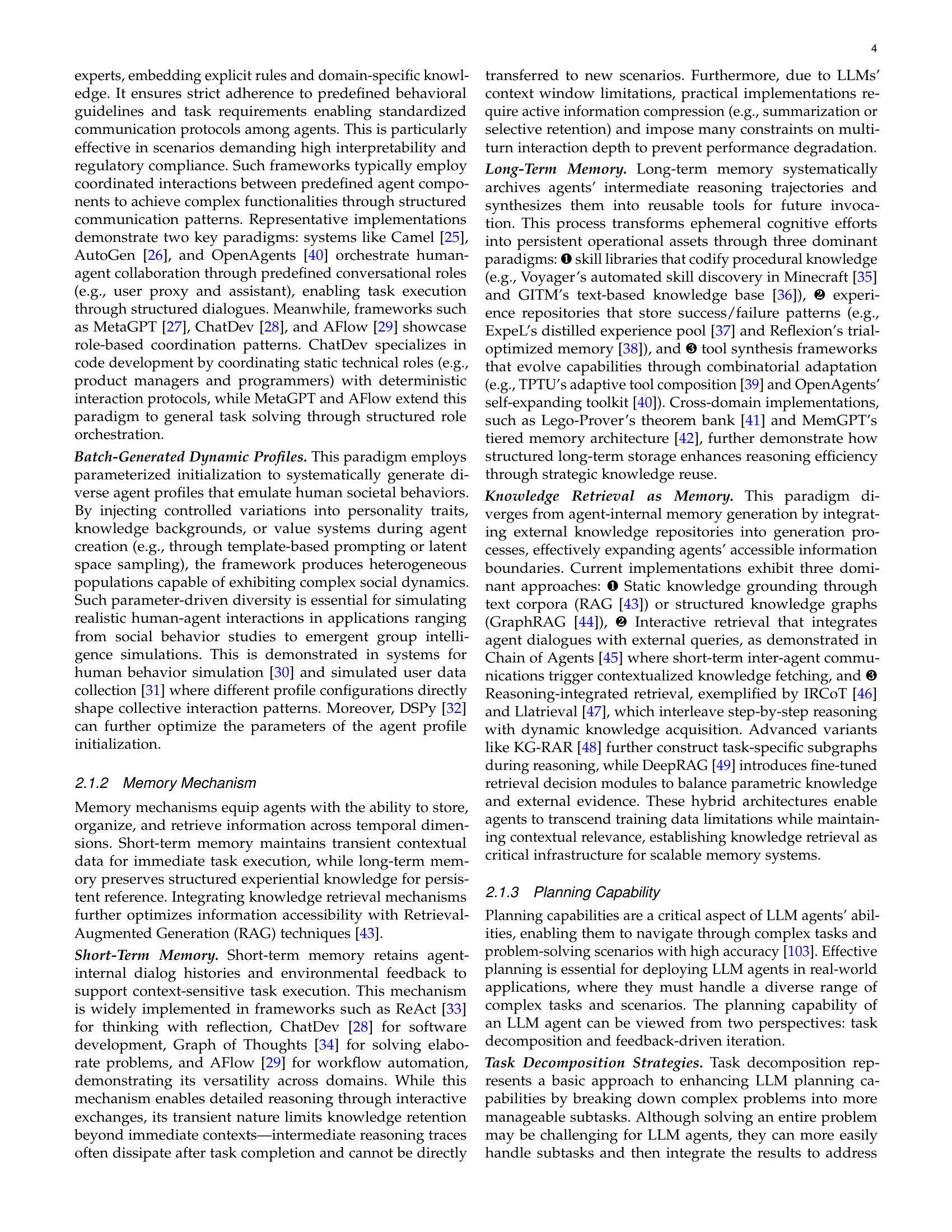

🔼 This figure presents a taxonomy that categorizes the methodologies used in creating large language model (LLM) agents. It’s structured into three main sections: Agent Construction, Agent Collaboration, and Agent Evolution. Each section further breaks down into sub-categories detailing different approaches and techniques used within each stage of agent development. This taxonomy helps to illustrate the different paths researchers and developers take when designing LLM agents, from basic profile definitions to sophisticated multi-agent collaboration mechanisms and strategies for long-term adaptation and improvement.

read the caption

Figure 2: A taxonomy of large language model agent methodologies.

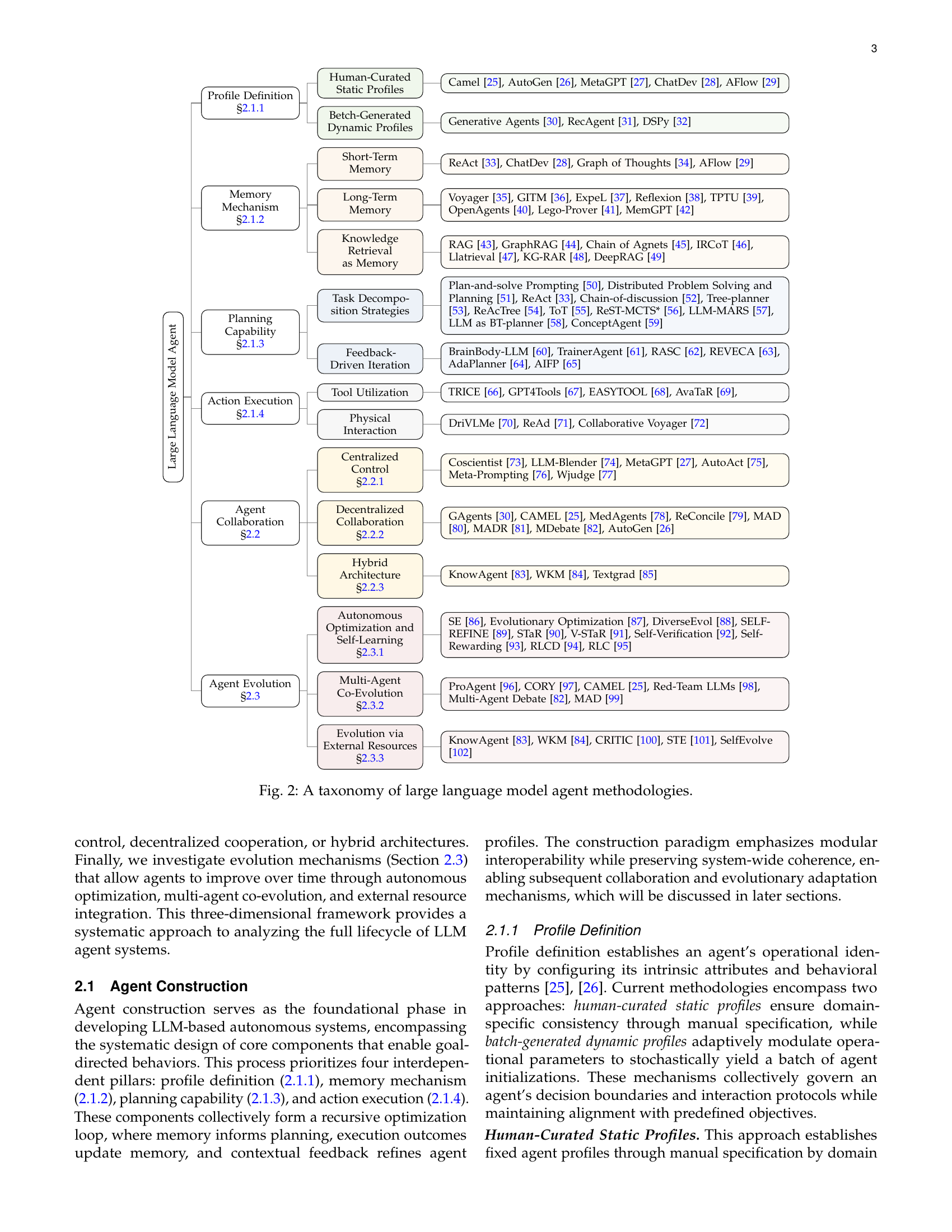

🔼 Figure 3 provides a comprehensive overview of the evaluation methods and tools used for Large Language Model (LLM) agents. The figure is divided into two main sections. The left section categorizes various evaluation frameworks based on their scope and focus, including general assessment, domain-specific evaluations, and collaboration-based evaluations. This helps researchers and practitioners understand the different aspects of LLM agent performance and choose the most suitable methods for their specific needs. The right section showcases the various types of tools involved in the LLM agent ecosystem. These include tools utilized by LLM agents during task execution, tools created by LLM agents to extend functionality, and tools required for deploying, managing, and maintaining LLM agents in practical applications.

read the caption

Figure 3: An overview of evaluation benchmarks and tools for LLM agents. The left side shows various evaluation frameworks categorized by general assessment, domain-specific evaluation, and collaboration evaluation. The right side illustrates tools used by LLM agents, tools created by agents, and tools for deploying agents.

More on tables

| Category | Method | Key Contribution |

| Self-Supervised Learning | SE [86] | Adaptive token masking for pretraining |

| Evolutionary Optimization [87] | Efficient model merging and adaptation | |

| DiverseEvol [88] | Improved instruction tuning via diverse data | |

| Self-Reflection & Self-Correction | SELF-REFINE [89] | Iterative self-feedback for refinement |

| STaR [90] | Bootstrapping reasoning with few rationales | |

| V-STaR [91] | Training a verifier using DPO | |

| Self-Verification [92] | Backward verification for correction | |

| Self-Rewarding & RL | Self-Rewarding [93] | LLM-as-a-Judge for self-rewarding |

| RLCD [94] | Contrastive distillation for alignment | |

| RLC [95] | Evaluation-generation gap for optimization | |

| Cooperative Co-Evolution | ProAgent [96] | Intent inference for teamwork |

| CORY [97] | Multi-agent RL fine-tuning | |

| CAMEL [25] | Role-playing framework for cooperation | |

| Competitive Co-Evolution | Red-Team LLMs [98] | Adversarial robustness training |

| Multi-Agent Debate [82] | Iterative critique for refinement | |

| MAD [99] | Debate-driven divergent thinking | |

| Knowledge-Enhanced Evolution | KnowAgent [83] | Action knowledge for planning |

| WKM [84] | Synthesizing prior and dynamic knowledge | |

| Feedback-Driven Evolution | CRITIC [100] | Tool-assisted self-correction |

| STE [101] | Simulated trial-and-error for tool learning | |

| SelfEvolve [102] | Automated debugging and refinement |

🔼 This table provides a comprehensive summary of different agent evolution methods categorized by their approach (such as self-supervised learning, self-reflection, and co-evolution). For each method, it lists the key contributions and provides a reference to the relevant research paper. This allows readers to easily compare various techniques used for enhancing LLM agents’ capabilities over time.

read the caption

TABLE II: A summary of agent evolution methods.

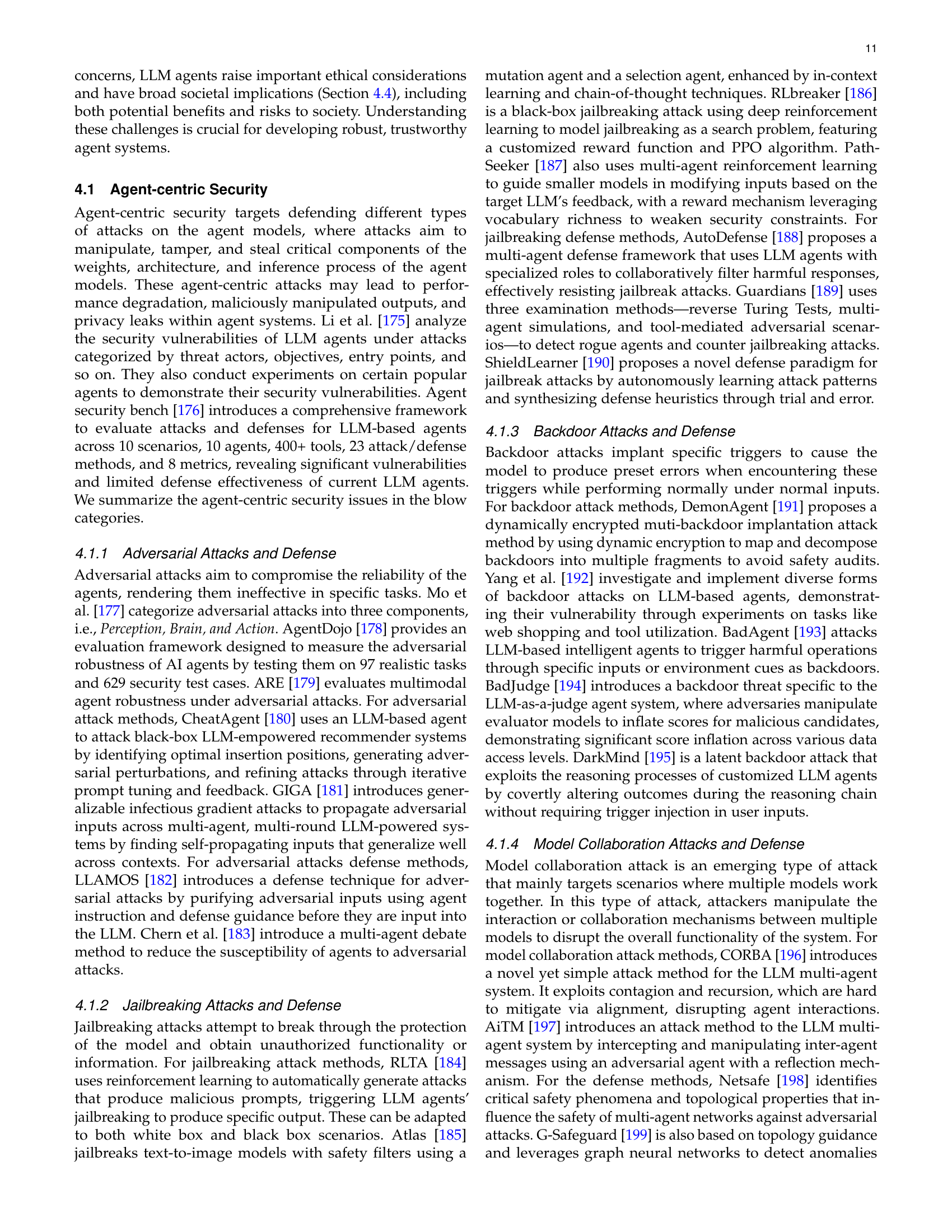

| Reference | Description |

|---|---|

| Adversarial Attacks and Defense | |

| Mo et al. [177] | Attack: Adversarial attack benchmark |

| AgentDojo [178] | Attack: Adversarial attack framework |

| ARE [179] | Attack: Adversarial attack evaluation for multimodal agents |

| GIGA [181] | Attack: Generalizable infectious gradient attacks |

| CheatAgent [180] | Attack: Adversarial attack agent for recommender systems |

| LLAMOS [182] | Defense: Purifying adversarial attack input |

| Chern et al. [183] | Defense: Defense via multi-agent debate |

| Jailbreaking Attacks and Defense | |

| RLTA [184] | Attack: Produce jailbreaking prompts via reinforcement learning |

| Atlas [185] | Attack: Jailbreaks text-to-image models with safety filters |

| RLbreaker [186] | Attack: Model jailbreaking as a search problem |

| PathSeeker [187] | Attack: Use multi-agent reinforcement learning to jailbreak |

| AutoDefense [188] | Defense: Multi-agent defense to filter harmful responses |

| Guardians [189] | Defense: Detect rogue agents to counter jailbreaking attacks. |

| ShieldLearner [190] | Defense: Learn attack jailbreaking patterns. |

| Backdoor Attacks and Defense | |

| DemonAgent [191] | Attack: Encrypted muti-backdoor implantation attack |

| Yang et al. [192] | Attack: Backdoor attacks evaluations on LLM-based agents |

| BadAgent [193] | Attack: Inputs or environment cues as backdoors |

| BadJudge [194] | Attack: Backdoor to the LLM-as-a-judge agent system |

| DarkMind [195] | Attack: latent backdoor attack to customized LLM agents |

| Agent Collaboration Attacks and Defense | |

| CORBA [196] | Attack: Multi-agent attack via multi-agent |

| AiTM [197] | Attack: Intercepte and manipulate inter-agent messages |

| Netsafe [198] | Defense: Identify critical safety phenomena in multi-agent networks |

| G-Safeguard [199] | Defense: leverages graph neural networks to detect anomalies |

| Trustagent [200] | Defense: Agent constitution in task planning. |

| PsySafe [201] | Defense: Mitigate safety risks via agent psychology |

🔼 This table provides a comprehensive summary of various agent-centric attacks and their corresponding defenses in Large Language Model (LLM) agents. It categorizes attacks by type (Adversarial, Jailbreaking, Backdoor, Model Collaboration) and includes the specific method used for the attack, a description of that attack, and a reference to the source publication. For each attack, the table may also include information about defenses against it.

read the caption

TABLE III: Summary of agent-centric attacks and defense in LLM agents.

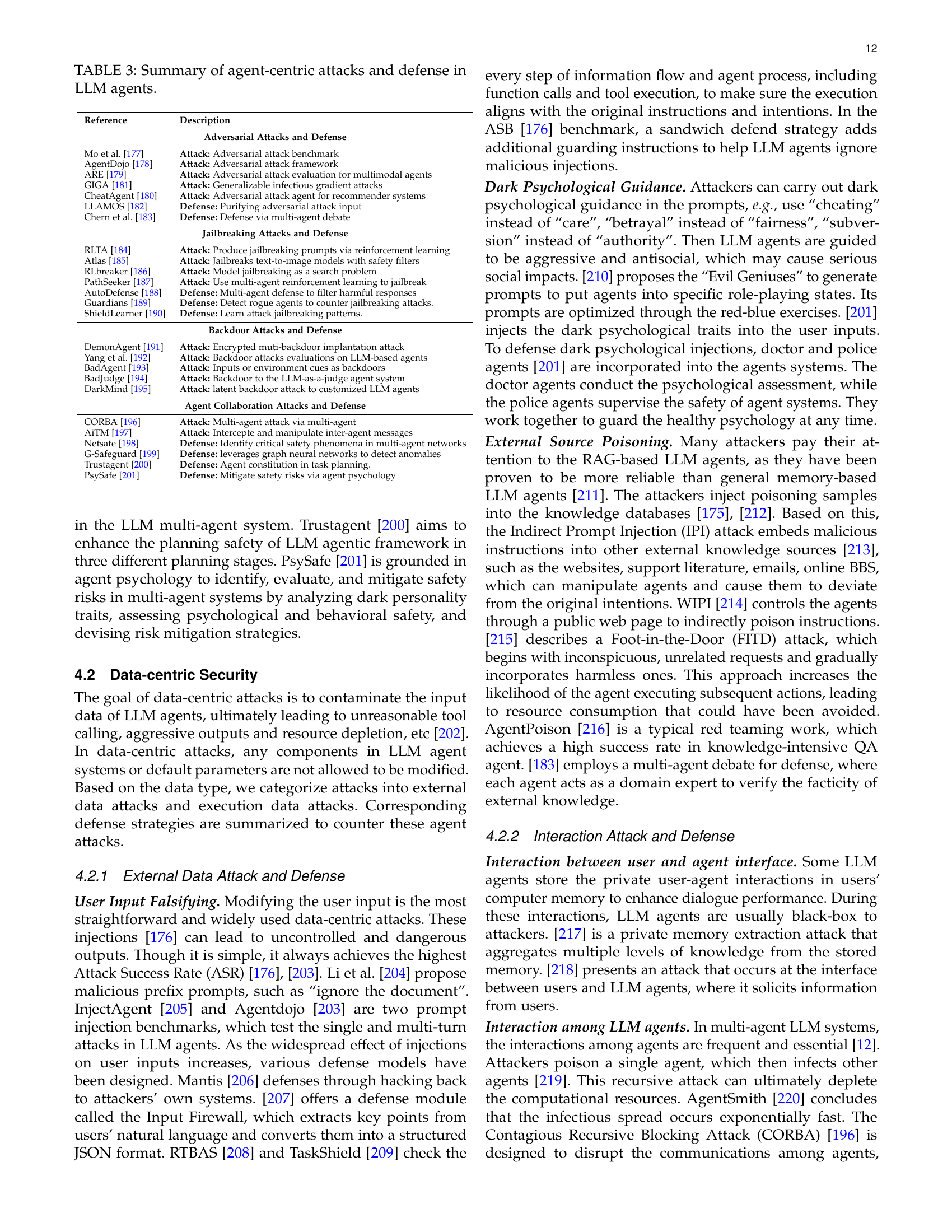

| Reference | Description |

|---|---|

| External Data Attacks and Security | |

| Li et al. [204] | Attack: Malicious prefix injection |

| Psysafe [201] | Attack: A dark psychological injection benchmark |

| Tian et al. [210] | Attack: Guide agents into specific role-playing states |

| InjectAgent [205] | Attack: A prompting injection benchmark |

| Agentdojo [203] | Attack: A user injection benchmark |

| AgentPoison [216] | Attack: Poisoning samples in knowledge databases |

| Nakash et al.[215] | Attack: Indirect prompt injection through FITD attack |

| WIPI [214] | Attack: control agents through a public web page |

| ASB [176] | Attack: A multi-type attack benchmark |

| AgentHarm [223] | Attack: A multi-type attack benchmark |

| Mantis [206] | Defense: Hacking back to attackers |

| Chern et al.[183] | Defense: Employ multi-agent debate to verify external knowledge |

| RTBAS [208] | Defense: Check every step of agent information flow |

| TaskShield [209] | Defense: Check every step of agent process |

| Zhang et al. [201] | Defense: Doctor and police agents guard the healthy psychology |

| Interaction Attacks and Security | |

| Wang et al. [217] | Attack: Private memory extraction attack |

| CORBA [196] | Attack: Disrupt the communications among agents |

| AgentSmith [220] | Attack: Poison one agent to infectious other agents |

| Lee et al. [221] | Attack: Conduct injections to self-replicate among agents |

| He et al. [197] | Attack: Inject semantic disruptions to agent communications |

| BlockAgents [222] | Defense: Incorporate blockchain and PoT against byzantine attacks |

| Abdelnabi et al. [207] | Defense: A multi-layer agent firewall |

🔼 This table summarizes various data-centric attacks and defense mechanisms targeting Large Language Model (LLM) agents. Data-centric attacks focus on manipulating the input data provided to the LLM agents to cause undesirable outputs or behaviors, rather than directly targeting the model’s internal structure. The table categorizes these attacks based on their approach (external data falsification vs. interaction attacks), and also includes defenses against each type of attack.

read the caption

TABLE IV: Summary of data-centric attack and defense in LLM agents.

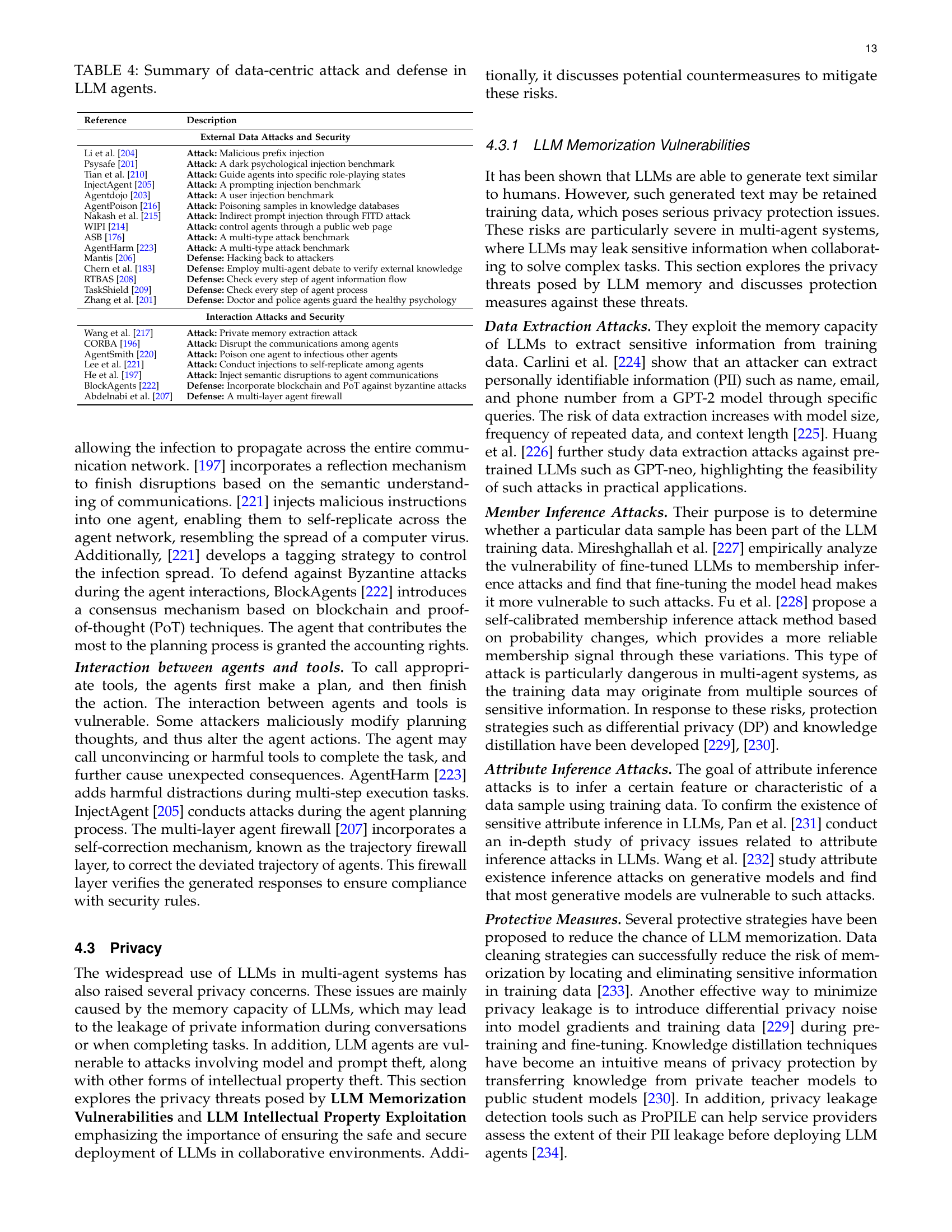

| Reference | Description |

|---|---|

| LM Memorization Vulnerabilities | |

| Carlini et al. [224] | Attack: Data Extraction |

| Huang et al. [226] | Attack: Data Extraction on Pretrained LLMs |

| Mireshghallah et al. [227] | Attack: Membership Inference on Fine-Tuned LLMs |

| Fu et al. [228] | Attack: Self-Calibrated Membership Inference |

| Pan et al. [231] | Attack: Attribute Inference in General-Purpose LLMs |

| Wang et al. [232] | Attack: Property Existence Inference in Generative Models |

| Kandpal et al. [233] | Defense: Data Sanitization to Mitigate Memorization |

| Hoory et al. [229] | Defense: Differential Privacy for Pre-Trained LLMs |

| Kang et al. [230] | Defense: Knowledge Distillation for Privacy Preservation |

| Kim et al. [234] | Defense: Privacy Leakage Assessment Tool |

| LM Intellectual Property Exploitation | |

| Krishna et al. [235] | Attack: Model Stealing via Query APIs |

| Naseh et al. [236] | Attack: Stealing Decoding Algorithms of LLMs |

| Li et al. [237] | Attack: Extracting Specialized Code Abilities from LLMs |

| Shen et al. [240] | Attack: Prompt Stealing in Text-to-Image Models |

| Sha et al. [241] | Attack: Prompt Stealing in LLMs |

| Hui et al. [242] | Attack: Closed-Box Prompt Extraction |

| Kirchenbauer et al. [238] | Defense: Model Watermarking for IP Protection |

| Lin et al. [239] | Defense: Blockchain for IP Verification |

🔼 This table summarizes various privacy threats associated with Large Language Model (LLM) agents and the corresponding countermeasures. It categorizes privacy threats into two main areas: LLM Memorization Vulnerabilities (data extraction attacks, membership inference attacks, attribute inference attacks) and LLM Intellectual Property Exploitation (model stealing attacks, prompt stealing attacks). For each type of threat, the table lists specific attack methods and relevant references to research papers, along with countermeasures to mitigate these privacy concerns. The countermeasures include techniques like data sanitization, differential privacy, knowledge distillation, model watermarking, and blockchain-based IP protection.

read the caption

TABLE V: Summary of privacy threats and countermeasures in LLM agents.

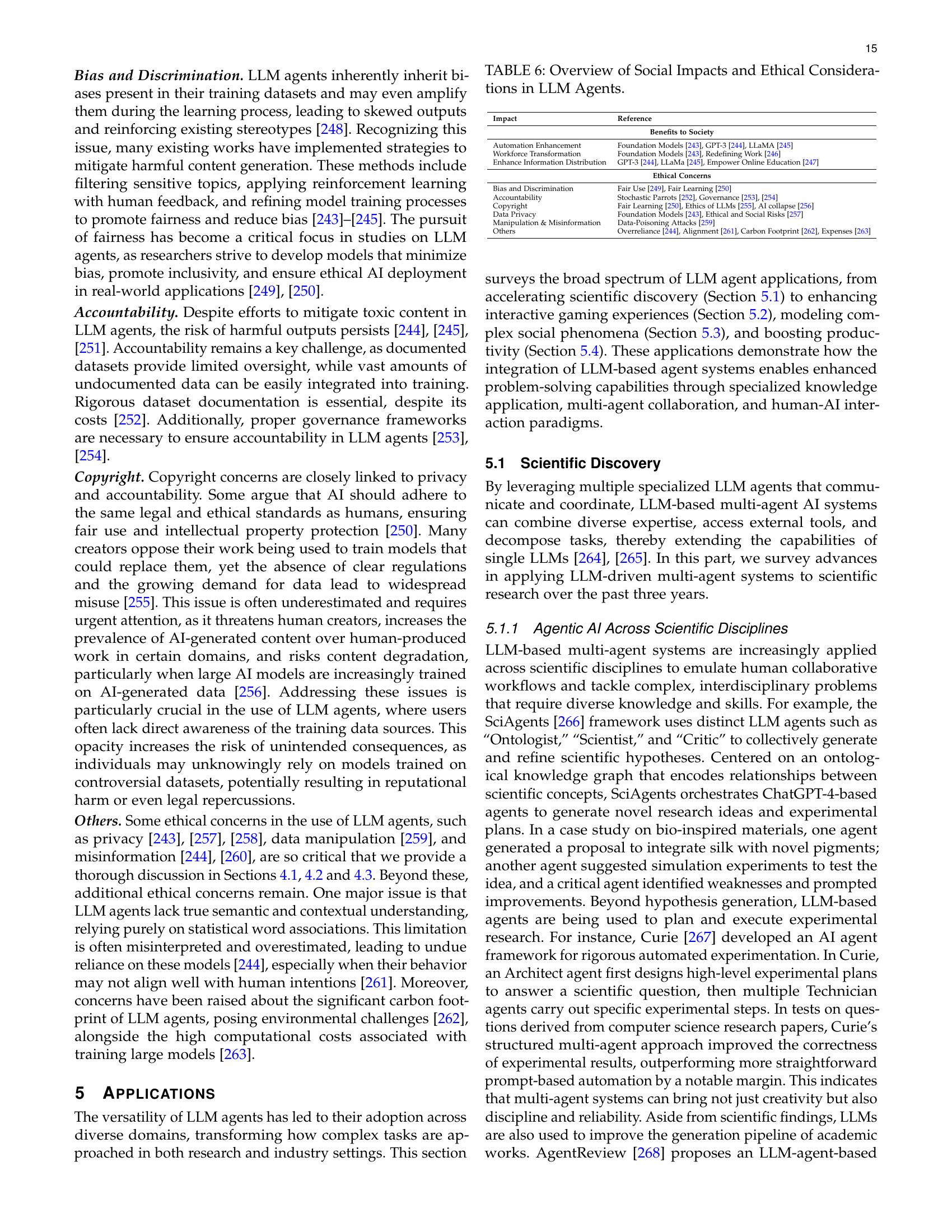

| Impact | Reference |

|---|---|

| Benefits to Society | |

| Automation Enhancement | Foundation Models [243], GPT-3 [244], LLaMA [245] |

| Workforce Transformation | Foundation Models [243], Redefining Work [246] |

| Enhance Information Distribution | GPT-3 [244], LLaMa [245], Empower Online Education [247] |

| Ethical Concerns | |

| Bias and Discrimination | Fair Use [249], Fair Learning [250] |

| Accountability | Stochastic Parrots [252], Governance [253, 254] |

| Copyright | Fair Learning [250], Ethics of LLMs [255], AI collapse [256] |

| Data Privacy | Foundation Models [243], Ethical and Social Risks [257] |

| Manipulation & Misinformation | Data-Poisoning Attacks [259] |

| Others | Overreliance [244], Alignment [261], Carbon Footprint [262], Expenses [263] |

🔼 This table presents a comprehensive overview of the societal impacts and ethical considerations associated with the use of Large Language Model (LLM) agents. It categorizes the effects into benefits and ethical concerns, providing specific examples and references for each category. The benefits include automation enhancement, workforce transformation, and improved information distribution. The ethical concerns encompass bias and discrimination, accountability issues, copyright implications, data privacy risks, potential for manipulation and misinformation, and other emerging concerns. This detailed breakdown helps to provide a balanced perspective on the significant influence of LLM agents on society.

read the caption

TABLE VI: Overview of Social Impacts and Ethical Considerations in LLM Agents.

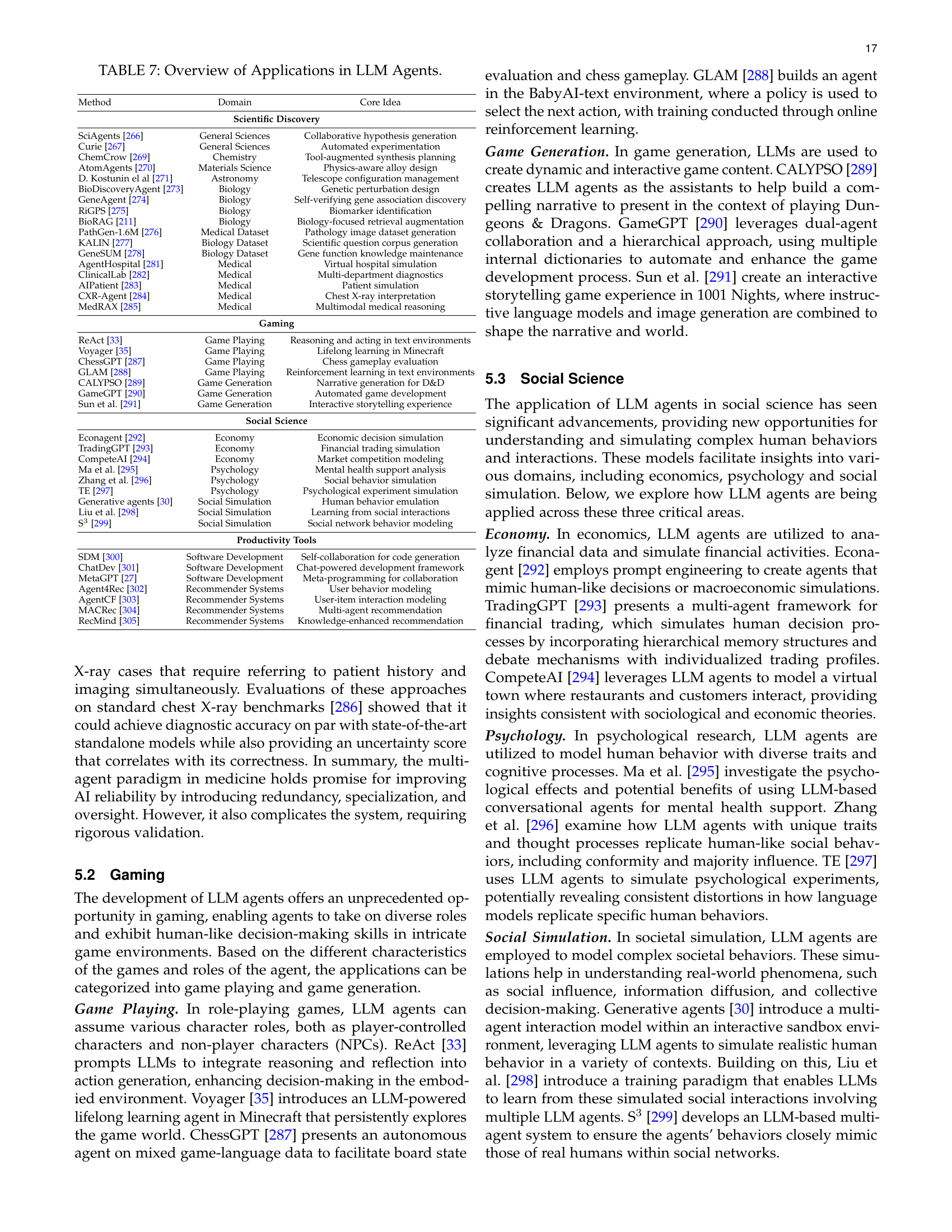

| Method | Domain | Core Idea |

|---|---|---|

| Scientific Discovery | ||

| SciAgents [266] | General Sciences | Collaborative hypothesis generation |

| Curie [267] | General Sciences | Automated experimentation |

| ChemCrow [269] | Chemistry | Tool-augmented synthesis planning |

| AtomAgents [270] | Materials Science | Physics-aware alloy design |

| D. Kostunin el al [271] | Astronomy | Telescope configuration management |

| BioDiscoveryAgent [273] | Biology | Genetic perturbation design |

| GeneAgent [274] | Biology | Self-verifying gene association discovery |

| RiGPS [275] | Biology | Biomarker identification |

| BioRAG [211] | Biology | Biology-focused retrieval augmentation |

| PathGen-1.6M [276] | Medical Dataset | Pathology image dataset generation |

| KALIN [277] | Biology Dataset | Scientific question corpus generation |

| GeneSUM [278] | Biology Dataset | Gene function knowledge maintenance |

| AgentHospital [281] | Medical | Virtual hospital simulation |

| ClinicalLab [282] | Medical | Multi-department diagnostics |

| AIPatient [283] | Medical | Patient simulation |

| CXR-Agent [284] | Medical | Chest X-ray interpretation |

| MedRAX [285] | Medical | Multimodal medical reasoning |

| Gaming | ||

| ReAct [33] | Game Playing | Reasoning and acting in text environments |

| Voyager [35] | Game Playing | Lifelong learning in Minecraft |

| ChessGPT [287] | Game Playing | Chess gameplay evaluation |

| GLAM [288] | Game Playing | Reinforcement learning in text environments |

| CALYPSO [289] | Game Generation | Narrative generation for D&D |

| GameGPT [290] | Game Generation | Automated game development |

| Sun et al. [291] | Game Generation | Interactive storytelling experience |

| Social Science | ||

| Econagent [292] | Economy | Economic decision simulation |

| TradingGPT [293] | Economy | Financial trading simulation |

| CompeteAI [294] | Economy | Market competition modeling |

| Ma et al. [295] | Psychology | Mental health support analysis |

| Zhang et al. [296] | Psychology | Social behavior simulation |

| TE [297] | Psychology | Psychological experiment simulation |

| Generative agents [30] | Social Simulation | Human behavior emulation |

| Liu et al. [298] | Social Simulation | Learning from social interactions |

| S3 [299] | Social Simulation | Social network behavior modeling |

| Productivity Tools | ||

| SDM [300] | Software Development | Self-collaboration for code generation |

| ChatDev [301] | Software Development | Chat-powered development framework |

| MetaGPT [27] | Software Development | Meta-programming for collaboration |

| Agent4Rec [302] | Recommender Systems | User behavior modeling |

| AgentCF [303] | Recommender Systems | User-item interaction modeling |

| MACRec [304] | Recommender Systems | Multi-agent recommendation |

| RecMind [305] | Recommender Systems | Knowledge-enhanced recommendation |

🔼 This table presents a comprehensive overview of various real-world applications of Large Language Model (LLM) agents across diverse domains. It categorizes applications by field (e.g., scientific discovery, gaming, social sciences, productivity tools) and details the core ideas and methodologies behind each example. This offers a broad perspective on the versatility and potential impact of LLM agents in various sectors.

read the caption

TABLE VII: Overview of Applications in LLM Agents.

Full paper#