TL;DR

Large Reasoning Models (LRMs) show strong reasoning abilities but rely primarily on parametric knowledge, which limits their factual accuracy. Recent RL-based LRMs with retrieval capabilities suffer from overthinking and lack robustness in their reasoning. Addressing this calls for a factuality-enhanced reasoning model that explores diverse queries without excessive iterations. Such a model should also avoid the weaknesses of prompt-based approaches, such as unreliable generation of the special tokens that trigger retrieval.

To this end, ReaRAG uses an LRM to generate deliberate thinking and then select an action from a predefined action space (Search or Finish). Search queries are executed against a RAG engine, and the returned observations guide later steps until the model chooses Finish. A novel data construction framework with an upper bound on reasoning chain length is used to improve retrieval robustness. Experiments show substantial performance gains, particularly on multi-hop QA benchmarks.
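The loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' Algorithm 2. The function `rearag_infer`, the `policy` callable (a stand-in for the fine-tuned LRM), the `rag_engine` callable, and the `max_steps` default are all hypothetical. The action dictionaries mirror the format visible in the case studies later in this summary. A real policy would condition on the thoughts, actions, and observations stored in `history`. The scripted policy in the example only replays a fixed plan.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical action format, mirroring the case studies:
#   {"function": "search", "parameters": {"query": "..."}}
#   {"function": "finish", "parameters": {"answer": "..."}}
Action = dict


@dataclass
class Step:
    thought: str
    action: Action
    observation: Optional[str] = None  # stays None for the final Finish step


def rearag_infer(
    question: str,
    policy: Callable[[str, list], tuple],  # (question, history) -> (thought, action)
    rag_engine: Callable[[str], str],      # search query -> observation text
    max_steps: int = 10,                   # assumed cap, not the paper's value
) -> tuple[Optional[str], list[Step]]:
    """Repeat Thought -> Action -> Observation until the policy chooses Finish."""
    history: list[Step] = []
    for _ in range(max_steps):
        thought, action = policy(question, history)
        step = Step(thought, action)
        history.append(step)
        name = action.get("function")
        if name == "finish":
            return action["parameters"]["answer"], history
        if name != "search":
            raise ValueError(f"action outside the action space: {name!r}")
        # The RAG engine's observation is visible to the policy at the next step.
        step.observation = rag_engine(action["parameters"]["query"])
    return None, history  # step budget exhausted without a Finish action


# Toy usage: a scripted policy replays a shortened version of Case Study 3.
KB = {
    "Where was William W. Blair born?": "William W. Blair was born in Holley, New York.",
    "Which county is Holley, New York in?": "Holley, New York is in Orleans County.",
}
PLAN = [
    ("First find his birthplace.",
     {"function": "search", "parameters": {"query": "Where was William W. Blair born?"}}),
    ("Next find the county of Holley.",
     {"function": "search", "parameters": {"query": "Which county is Holley, New York in?"}}),
    ("That answers the question.",
     {"function": "finish", "parameters": {"answer": "Orleans County"}}),
]

answer, trace = rearag_infer(
    "In what county is William W. Blair's birthplace located?",
    policy=lambda question, history: PLAN[len(history)],
    rag_engine=lambda query: KB.get(query, "No helpful information found."),
)
print(answer, len(trace))  # Orleans County 3
```

The `max_steps` guard reflects the idea of bounding chain length. When the guard is hit, this sketch returns `None` instead of guessing an answer. That behavior is a design choice of the example, not something the summary specifies.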

Key Takeaways

Why does it matter?

This paper matters to researchers because it introduces ReaRAG, a framework that enhances the factuality of LRMs for question answering. Its knowledge-guided reasoning addresses overthinking in RL-based models and improves the integration of reasoning with RAG. The results open new directions for integrating reasoning into RAG.

Visual Insights

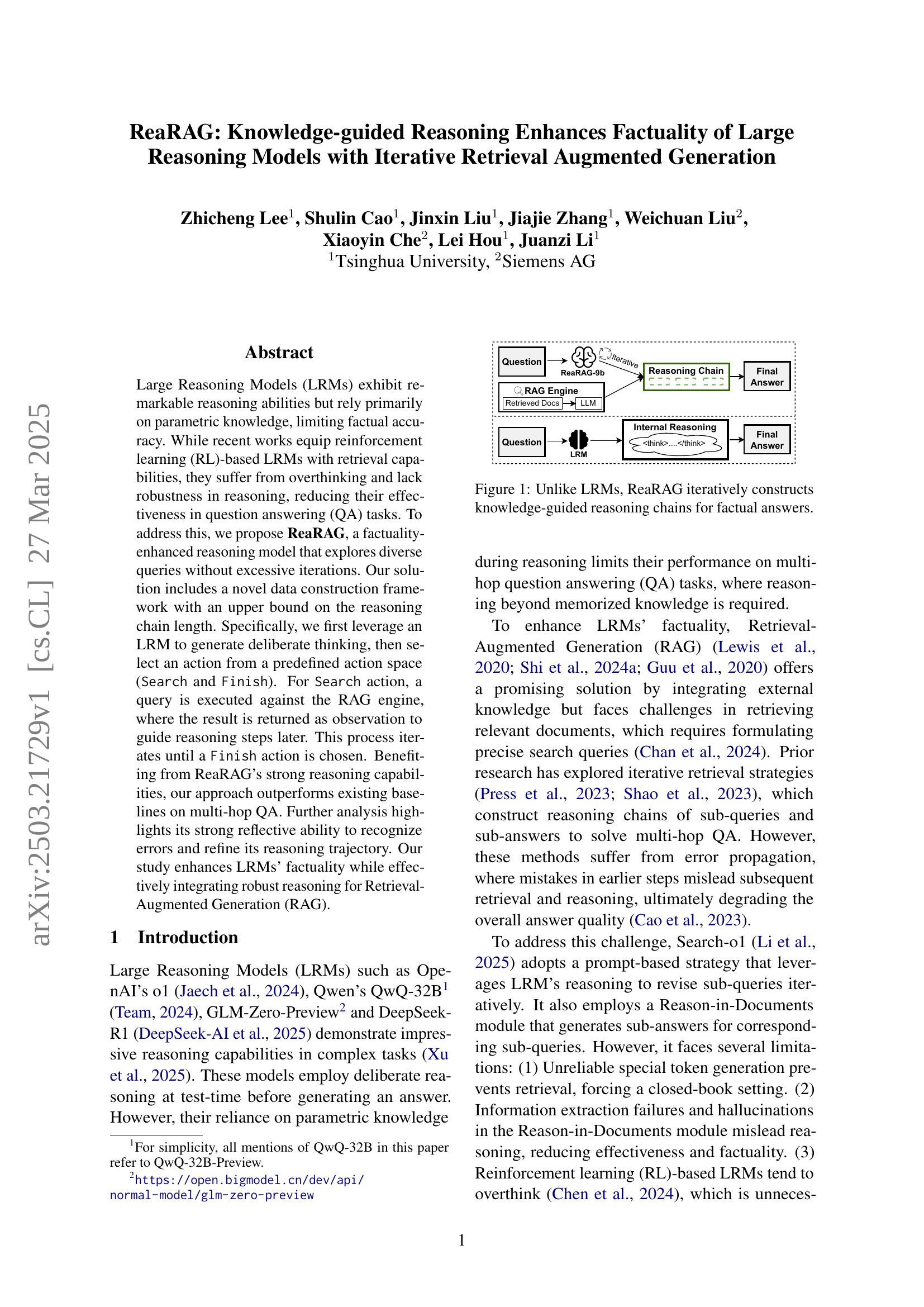

This figure illustrates the key difference between standard Large Reasoning Models (LRMs) and the proposed ReaRAG model. LRMs generate answers based solely on their internal knowledge, which can limit factual accuracy, particularly for complex, multi-hop questions. ReaRAG, by contrast, takes an iterative retrieval-augmented generation (RAG) approach. An LRM generates a reasoning chain, and within that chain it issues queries to a RAG engine that retrieves external knowledge. This process repeats until a final answer is produced. The diagram shows how ReaRAG builds its answer by incorporating external information, which improves factual accuracy over the single-pass approach of LRMs.


Figure 1: Unlike LRMs, ReaRAG iteratively constructs knowledge-guided reasoning chains for factual answers.

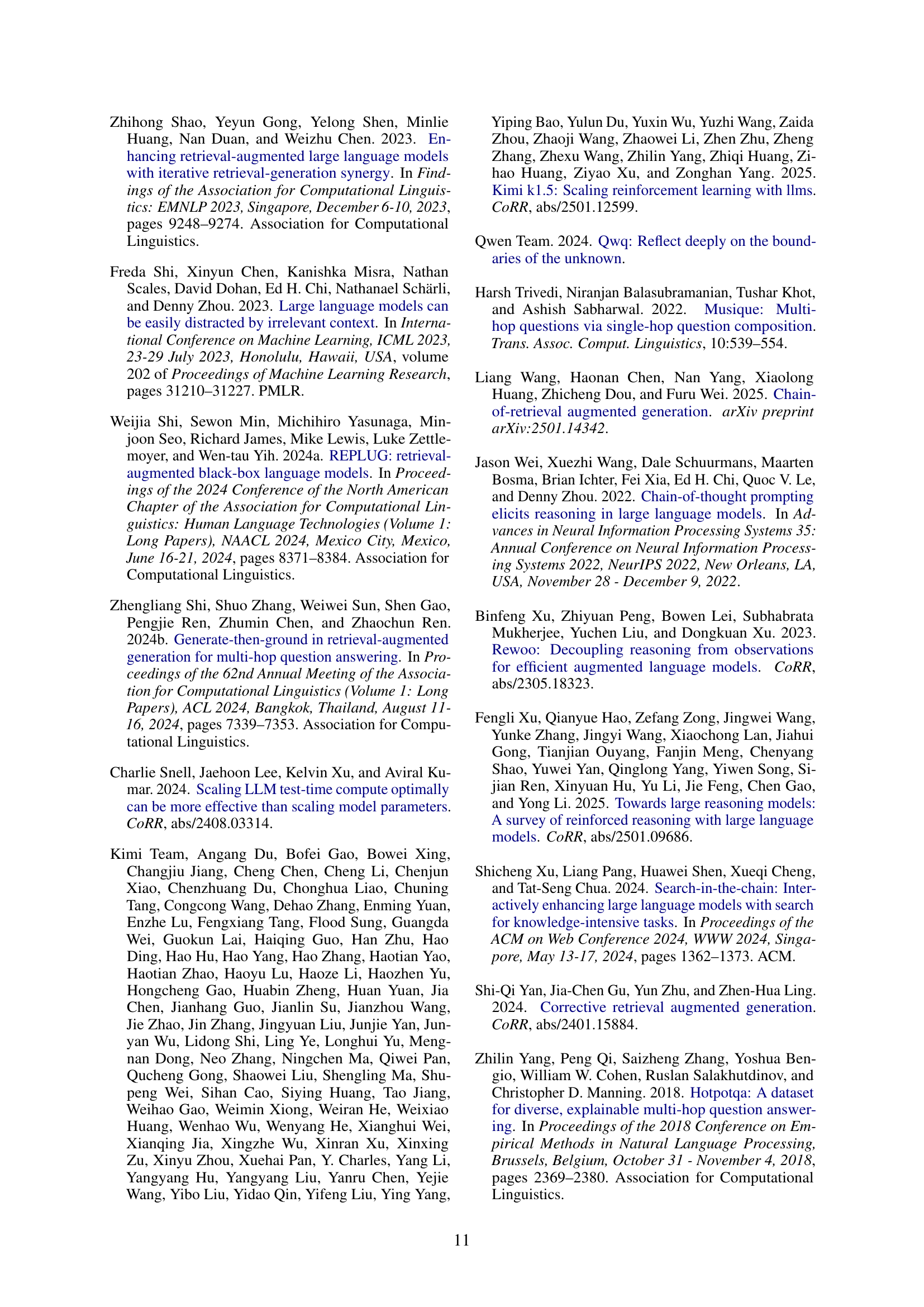

MuSiQue, HotpotQA, and IIRC are multi-hop benchmarks; NQ is single-hop.

| Category | Model | MuSiQue $\text{ACC}_L$ | MuSiQue EM | HotpotQA $\text{ACC}_L$ | HotpotQA EM | IIRC $\text{ACC}_L$ | IIRC EM | NQ $\text{ACC}_L$ | NQ EM |
|---|---|---|---|---|---|---|---|---|---|
| In-context | GLM-4-9B(128k) | 23.50 | 15.00 | 58.00 | 47.00 | 20.50 | 18.00 | 45.50 | 26.00 |
| In-context | GLM-4-32B(128k) | 33.50 | 17.00 | 65.50 | 50.00 | 25.00 | 16.00 | 52.50 | 24.00 |
| Vanilla RAG | GLM-4-9B(128k) | 25.50 | 14.00 | 68.00 | 52.00 | 28.25 | 23.00 | 49.00 | 32.00 |
| Vanilla RAG | GLM-4-32B(128k) | 29.00 | 17.00 | 67.50 | 52.00 | 28.25 | 17.00 | 53.00 | 39.00 |
| Vanilla RAG | QwQ-32B | 36.00 | 20.00 | 67.00 | 47.00 | 38.25 | 32.00 | 48.00 | 26.00 |
| Advanced RAG | Self-RAG(Llama2-7b) | 24.00 | 13.00 | 45.50 | 31.00 | 25.00 | 13.00 | 40.00 | 28.00 |
| Advanced RAG | SearChain(GPT-4o) | 51.50 | 33.00 | 69.00 | 49.00 | 40.50 | 20.50 | 54.00 | 25.00 |
| Advanced RAG | Search-o1(QwQ-32B) | 40.50 | 32.00 | 55.50 | 38.00 | 32.25 | 27.00 | 43.00 | 28.00 |
| Ours | ReaRAG-9B | 66.00 | 40.00 | 75.50 | 56.00 | 42.75 | 29.00 | 52.00 | 25.00 |

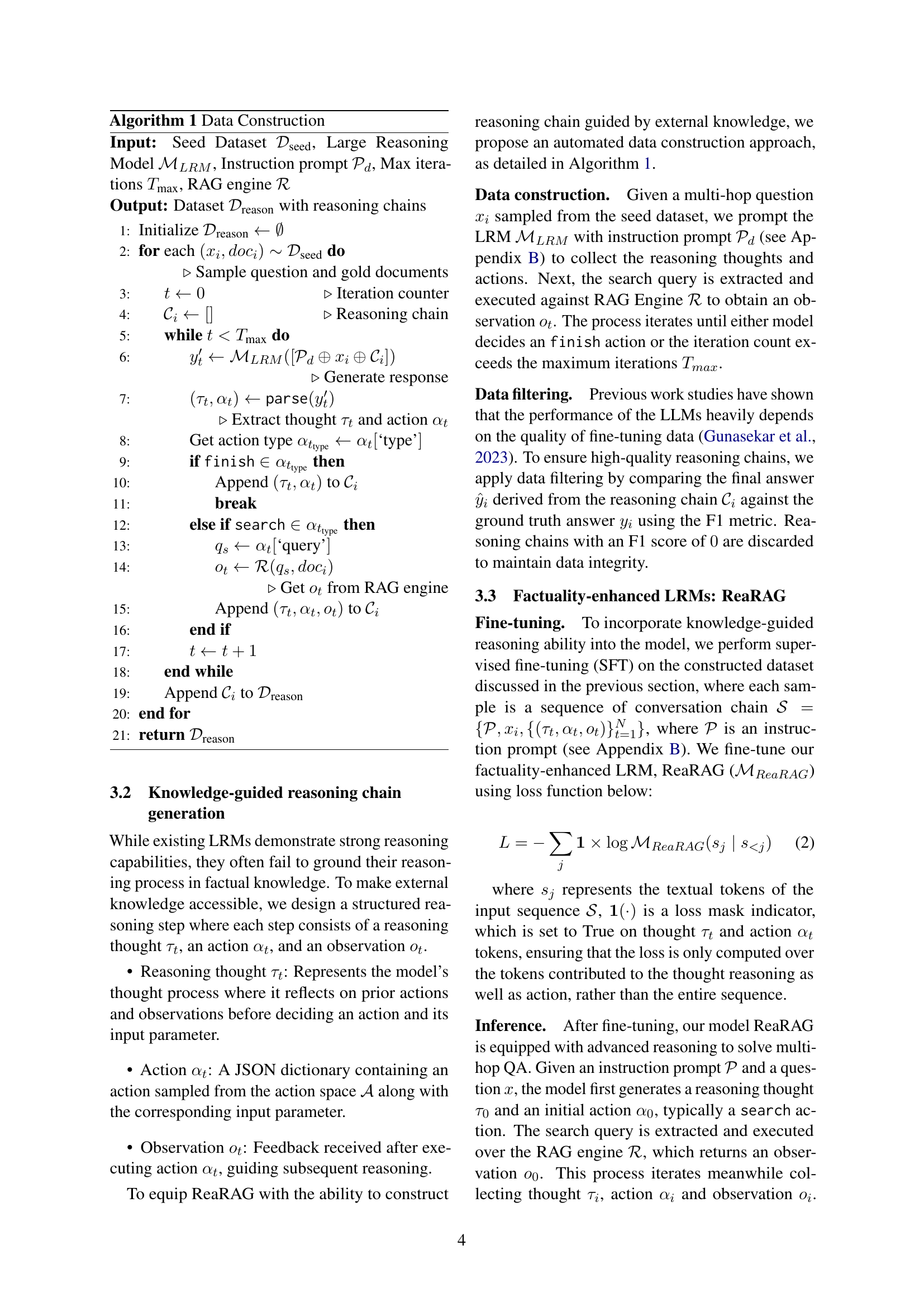

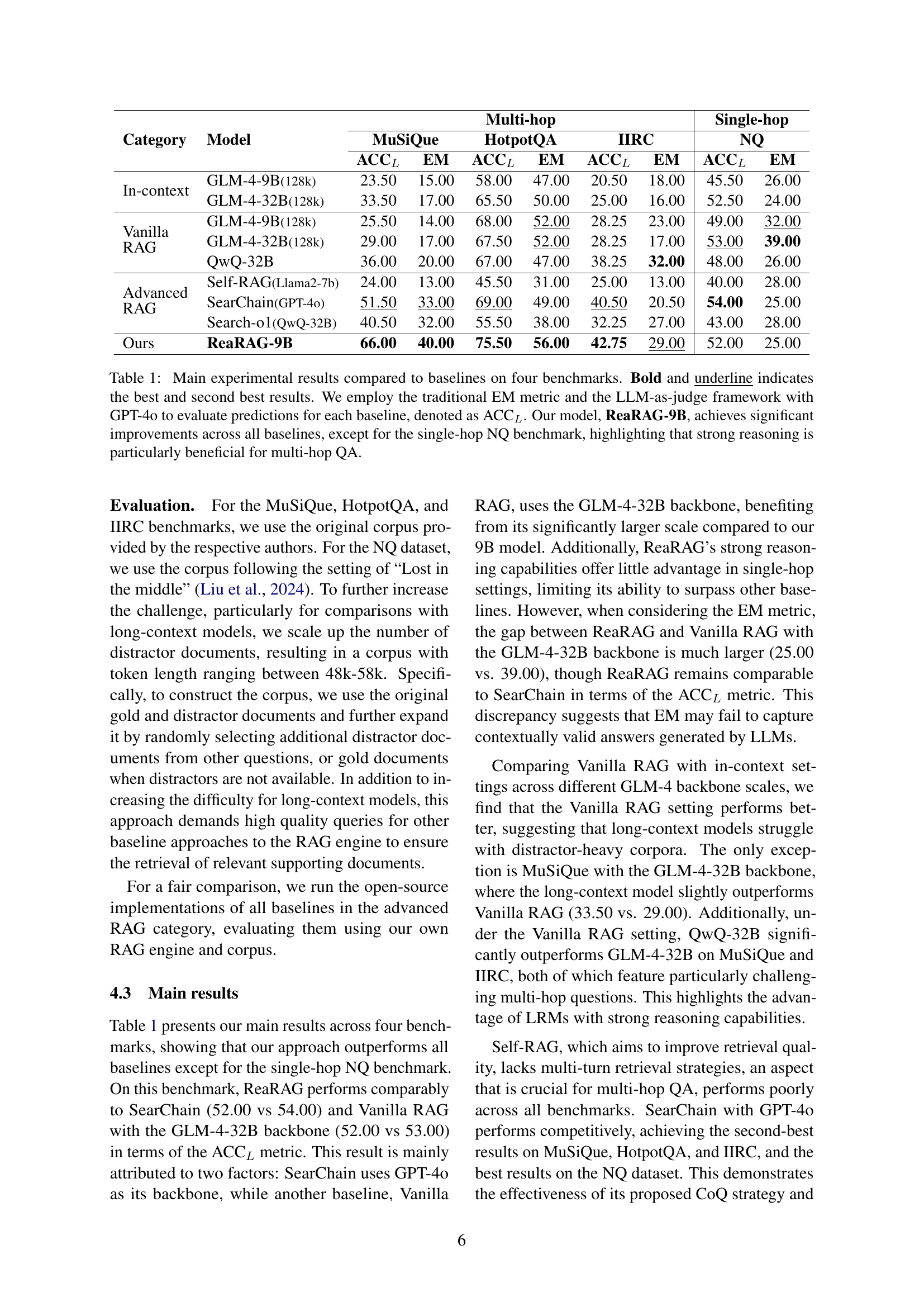

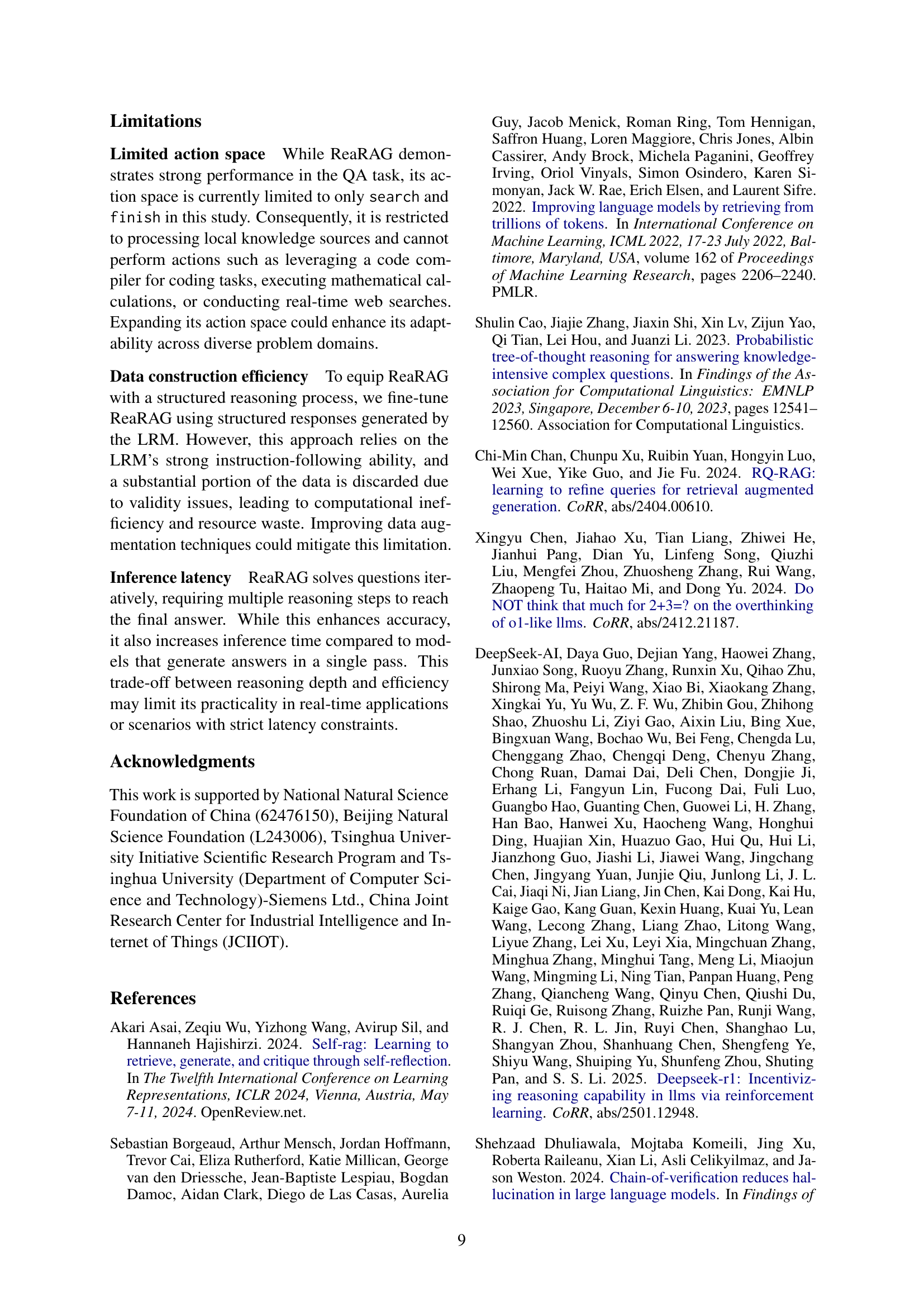

Table 1 compares ReaRAG-9B with a range of baselines on four question answering (QA) benchmarks: MuSiQue, HotpotQA, IIRC, and NQ. The benchmarks are grouped into multi-hop (MuSiQue, HotpotQA, IIRC) and single-hop (NQ) tasks, so that reasoning can be assessed at different levels of complexity. The table reports two metrics. Exact Match (EM) checks whether the predicted answer exactly matches the ground truth. LLM-as-judge accuracy ($\text{ACC}_L$) uses GPT-4o to judge whether the answer is correct. ReaRAG-9B achieves the best $\text{ACC}_L$ on all three multi-hop benchmarks and the best EM on MuSiQue and HotpotQA, which shows the effectiveness of its iterative retrieval and knowledge-guided reasoning. On the single-hop NQ benchmark, however, it does not surpass the strongest baselines. This indicates that its strengths lie mainly in more complex reasoning tasks. In the original table, the best and second-best results for each benchmark and metric are highlighted.


Table 1: Main experimental results compared to baselines on four benchmarks. Bold and underline indicate the best and second-best results. We employ the traditional EM metric and the LLM-as-judge framework with GPT-4o (denoted as $\text{ACC}_L$) to evaluate predictions for each baseline. Our model, ReaRAG-9B, achieves significant improvements over all baselines except on the single-hop NQ benchmark, highlighting that strong reasoning is particularly beneficial for multi-hop QA.
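For reference, the conventional SQuAD-style Exact Match normalizes both strings before comparing them. It lowercases the text, strips punctuation and the articles *a/an/the*, and collapses whitespace. The summary does not say which normalization the authors applied, so treat this sketch as an assumption. The helper names are hypothetical.

```python
import re
import string


def normalize_answer(text: str) -> str:
    """Lowercase, strip punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, ground_truths: list[str]) -> float:
    """1.0 if the normalized prediction equals any normalized ground truth."""
    pred = normalize_answer(prediction)
    return float(any(pred == normalize_answer(gt) for gt in ground_truths))


def em_percent(predictions: list[str], references: list[list[str]]) -> float:
    """Corpus-level EM as a percentage, the scale used in Table 1."""
    scores = [exact_match(p, refs) for p, refs in zip(predictions, references)]
    return 100.0 * sum(scores) / len(scores)


print(exact_match("Orleans County", ["Orleans County"]))          # 1.0
print(exact_match("Exeter College, Oxford", ["Exeter College"]))  # 0.0
```

Under this definition, ReaRAG's Case Study 1 answer "Exeter College, Oxford" scores 0 against the ground truth "Exeter College", even though it is correct. Strict matching of this kind is one reason an LLM judge is useful alongside EM.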

In-depth insights

RAG: Factuality

Enhancing factuality through RAG is a central theme of the paper, which addresses the limitations of LRMs that rely on parametric knowledge. The paper proposes ReaRAG to improve factual accuracy through knowledge-guided reasoning while mitigating overthinking, which can hurt performance on QA tasks. Retrieval robustness is another key concern. Because ReaRAG reasons iteratively, it can correct errors and refine its reasoning along the way. The paper emphasizes integrating strong reasoning with external knowledge.

ReaRAG: Knowledge

ReaRAG's premise is that knowledge-guided reasoning enhances factuality. It targets the limitations of LRMs that rely primarily on parametric knowledge, which hinders factual accuracy. Recent RL-based LRMs are also prone to overthinking and unstable reasoning. ReaRAG enhances factuality while exploring diverse queries efficiently, and it is trained with a novel data construction framework that bounds reasoning chain length. At each step, the model first generates deliberate thinking and then selects an action (Search or Finish). A Search action queries the RAG engine, and the resulting observation guides the next step. The results show strong reflection: the model recognizes errors and refines its reasoning trajectory.
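The case studies below show ReaRAG emitting each action as a Python-style dict literal, for example `{'function': 'search', 'parameters': {'query': ...}}`. A minimal, hypothetical parser that validates such strings against the two-action space could look like the following. `parse_action` and `ACTION_SPACE` are illustrative names and are not part of the paper's code. A strict check like this turns malformed actions into explicit errors rather than silent retrieval failures.

```python
import ast

# Hypothetical action space: each action name maps to its one required parameter.
ACTION_SPACE = {"search": "query", "finish": "answer"}


def parse_action(raw: str) -> tuple[str, str]:
    """Parse a dict-literal action string and validate it against ACTION_SPACE."""
    try:
        # literal_eval accepts Python literals (including single-quoted keys)
        # without executing arbitrary code.
        action = ast.literal_eval(raw.strip())
    except (ValueError, SyntaxError, TypeError) as exc:
        raise ValueError(f"unparseable action: {raw!r}") from exc
    if not isinstance(action, dict):
        raise ValueError("an action must be a dict")
    name = action.get("function")
    params = action.get("parameters")
    if not isinstance(name, str) or name not in ACTION_SPACE:
        raise ValueError(f"action outside the action space: {name!r}")
    if not isinstance(params, dict):
        raise ValueError("'parameters' must be a dict")
    key = ACTION_SPACE[name]
    value = params.get(key)
    if not isinstance(value, str) or not value.strip():
        raise ValueError(f"{name!r} requires a non-empty {key!r} parameter")
    return name, value


print(parse_action("{'function': 'search', 'parameters': {'query': 'Who is part of The Bruce Lee Band?'}}"))
# ('search', 'Who is part of The Bruce Lee Band?')
```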

RL Overthinking

Overthinking in RL-based reasoning models describes models trained with reinforcement learning that take excessive or redundant reasoning steps despite strong reasoning capabilities. It is especially common in tasks like multi-hop question answering. It shows up as unnecessary searches, repeated verification of facts already established, or exploration of irrelevant information paths. All of these reduce the efficiency and effectiveness of the reasoning process. This excessive deliberation often stems from a lack of robustness in integrating external knowledge, which leads the model to rely too heavily on parametric knowledge or to explore too many candidate solutions. Mitigation strategies include fine-tuning on datasets with controlled reasoning chain lengths, reflecting before acting, and mechanisms that terminate reasoning at the right moment.
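To make the overthinking pattern concrete, here are two simple diagnostics one might compute over a reasoning trace. The first counts how many search queries repeat an earlier one. The second counts how many searches are issued after the gold answer has already appeared in an observation, which is the situation the green highlights in Tables 7 and 8 mark. Both functions are hypothetical illustrations and are not metrics defined in the paper. Substring matching is a deliberately crude stand-in for answer matching.

```python
def duplicate_queries(queries: list[str]) -> int:
    """Count queries that repeat an earlier one (case- and whitespace-insensitive)."""
    seen: set[str] = set()
    duplicates = 0
    for query in queries:
        key = " ".join(query.lower().split())
        if key in seen:
            duplicates += 1
        seen.add(key)
    return duplicates


def searches_after_answer(observations: list[str], gold: str) -> int:
    """Count searches issued after the gold answer first appears in an observation."""
    gold = gold.lower()
    for i, observation in enumerate(observations):
        if gold in observation.lower():
            return len(observations) - i - 1
    return 0  # the answer never surfaced, so no search is counted as redundant


# The first four Search-o1 queries shown in Case Study 3.
print(duplicate_queries([
    "Holley, New York county",
    "Holley, New York county",
    "Holley, New York",
    "New York state places named Holley",
]))  # 1

# Abbreviated search results shown in Case Study 4 (elided ones omitted).
search_o1 = [
    "... His employer, the German Aerospace Center, is headquartered in Cologne, Germany.",
    "The German Aerospace Center (DLR) is headquartered in Cologne, Germany.",
    "Ulrich Walter's employer is the German Aerospace Center (DLR), headquartered in Cologne, Germany.",
    "No helpful information found.",
    "Ulrich Walter's employer, the German Aerospace Center (DLR), is headquartered in Cologne, Germany.",
]
rearag = [
    "Ulrich Walter is a German astronaut who worked at the German Aerospace Center.",
    "The German Aerospace Center (DLR) is headquartered in Cologne, Germany.",
    "I cannot provide real-time information ...",
]
print(searches_after_answer(search_o1, "Cologne"), searches_after_answer(rearag, "Cologne"))  # 4 1
```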

Data: Reason Chain

Generating high-quality reasoning chains is crucial. The paper's automated data construction approach (Algorithm 1) builds these chains through an iterative process of thought, action, and observation, so that fine-tuned ReaRAG inherits the reasoning abilities of the LRM that produced them. Data filtering is a critical step that ensures only high-quality chains are used for fine-tuning. The final answer derived from each chain is compared to the ground-truth answer using a metric such as the F1 score, and chains with low F1 scores are discarded. The remaining chains are used to fine-tune ReaRAG. The construction also caps the maximum length of reasoning chains, which prevents endless reasoning loops. Together, these automatically constructed chains equip the model with knowledge-guided reasoning that enhances factuality.
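The filtering step can be sketched as follows, assuming the common SQuAD-style token-level F1. The summary does not give the authors' F1 threshold, the exact form of the length bound, or the chain format. The values of `min_f1` and `max_steps` and the dict layout below are therefore hypothetical placeholders. For simplicity, the length bound is applied here as a filter. A construction loop could instead stop generating once it reaches the bound.

```python
import re
import string
from collections import Counter


def normalize_tokens(text: str) -> list[str]:
    """SQuAD-style normalization: lowercase, drop punctuation and articles, split."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def f1_score(prediction: str, ground_truth: str) -> float:
    """Token-level F1 between a predicted answer and the ground truth."""
    pred, gold = normalize_tokens(prediction), normalize_tokens(ground_truth)
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def filter_chains(chains: list[dict], min_f1: float = 0.5, max_steps: int = 10) -> list[dict]:
    """Keep chains within the length bound whose final answer scores >= min_f1.

    Hypothetical chain format: {"steps": [...], "answer": str, "gold": str}.
    The default thresholds are placeholders, not values from the paper.
    """
    return [
        chain
        for chain in chains
        if len(chain["steps"]) <= max_steps
        and f1_score(chain["answer"], chain["gold"]) >= min_f1
    ]


print(round(f1_score("Exeter College, Oxford", "Exeter College"), 2))  # 0.8
```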

Limited Actions#

The paper acknowledges a significant limitation of its current implementation: ReaRAG's action space is restricted to 'search' and 'finish' actions. This constraint limits the model's ability to handle diverse problem-solving scenarios. By design, it cannot use external tools such as code interpreters or retrieve real-time data from the web, and dynamic tasks often require both. Expanding the action space would make the model more adaptable and versatile across a broader range of tasks. ReaRAG could then tackle more complex problems by drawing on resources beyond its current capabilities.

More visual insights

More on figures

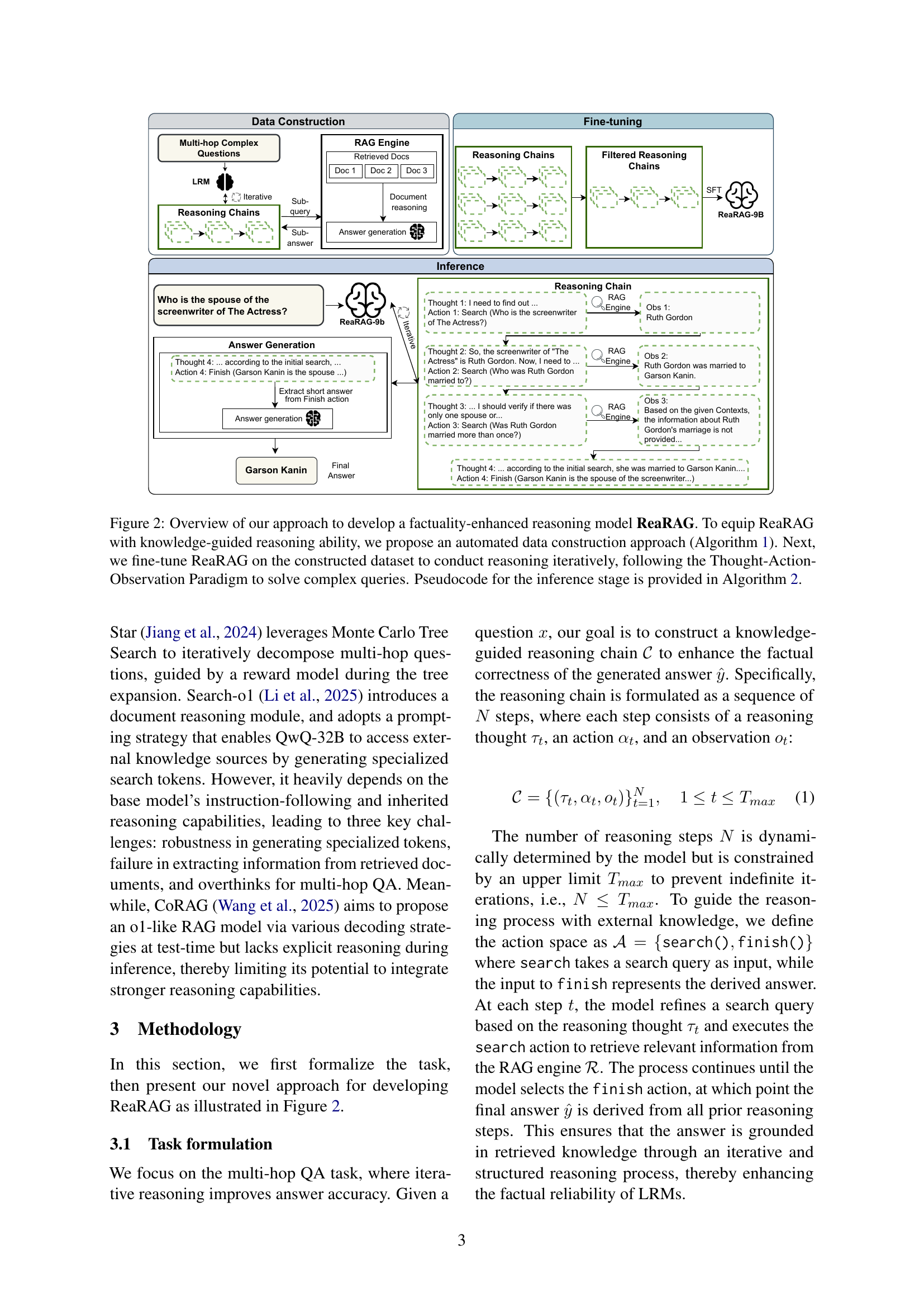

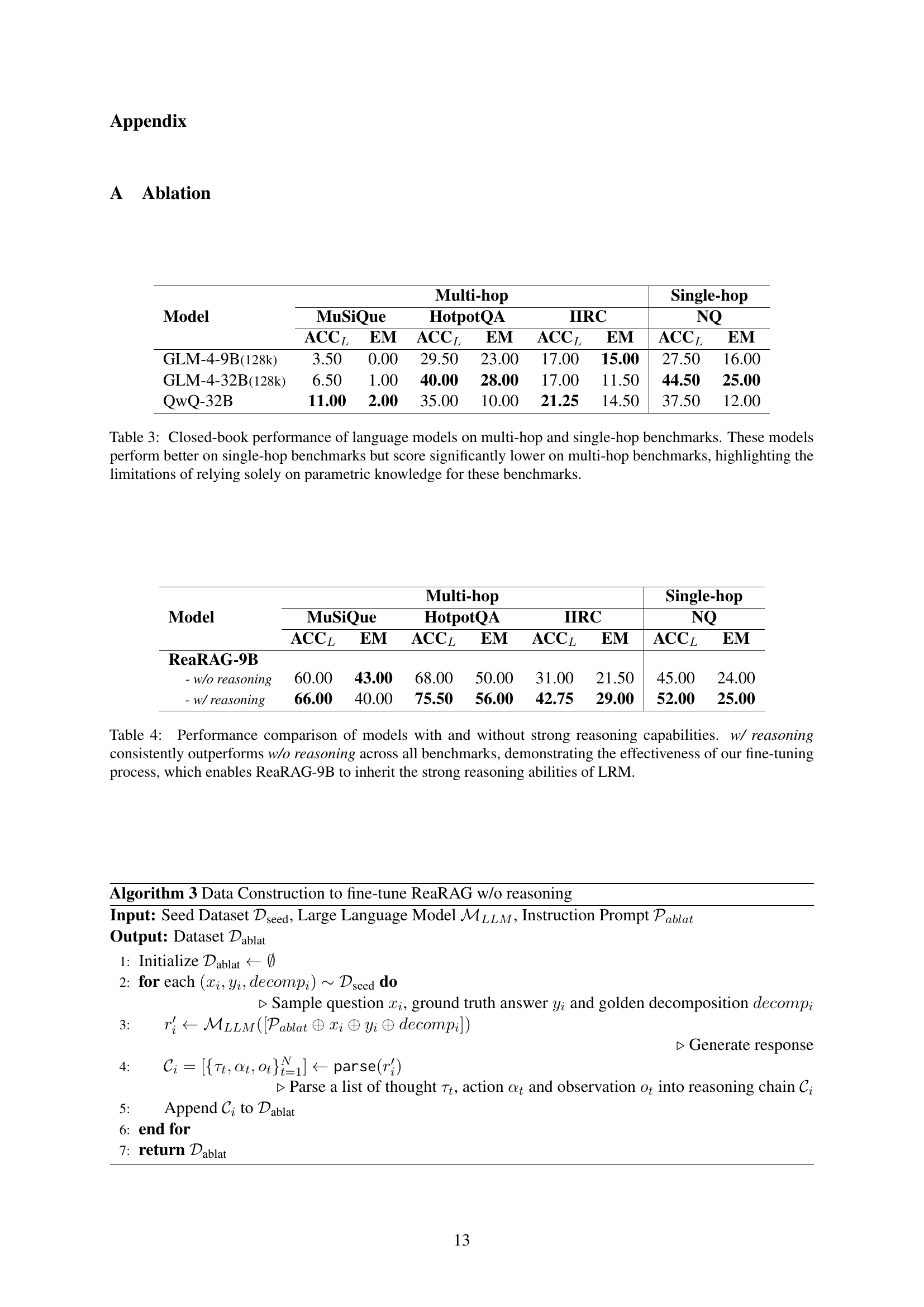

Figure 2 illustrates ReaRAG, a factuality-enhanced reasoning model, and the process used to develop it. An automated data construction approach (Algorithm 1) first creates a dataset of knowledge-guided reasoning chains, and this dataset is then used to fine-tune ReaRAG. At inference time, ReaRAG solves complex queries iteratively with the Thought-Action-Observation paradigm. It generates a thought, performs an action (either searching for external knowledge or finishing with a final answer), observes the outcome, and repeats until it reaches a final answer. Algorithm 2 provides pseudocode for the inference stage.


Figure 2: Overview of our approach to develop a factuality-enhanced reasoning model ReaRAG. To equip ReaRAG with knowledge-guided reasoning ability, we propose an automated data construction approach (Algorithm 1). Next, we fine-tune ReaRAG on the constructed dataset to conduct reasoning iteratively, following the Thought-Action-Observation Paradigm to solve complex queries. Pseudocode for the inference stage is provided in Algorithm 2.

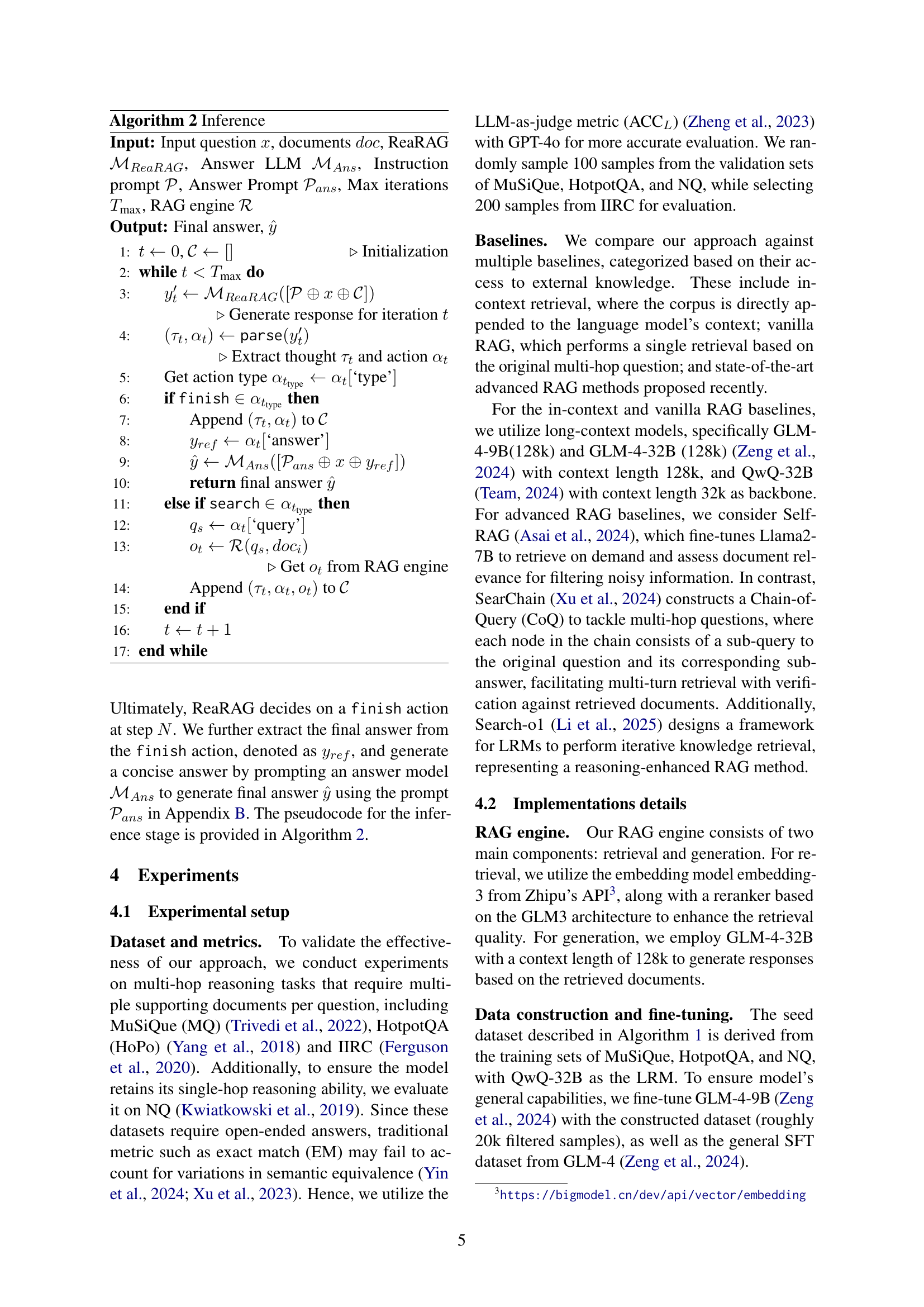

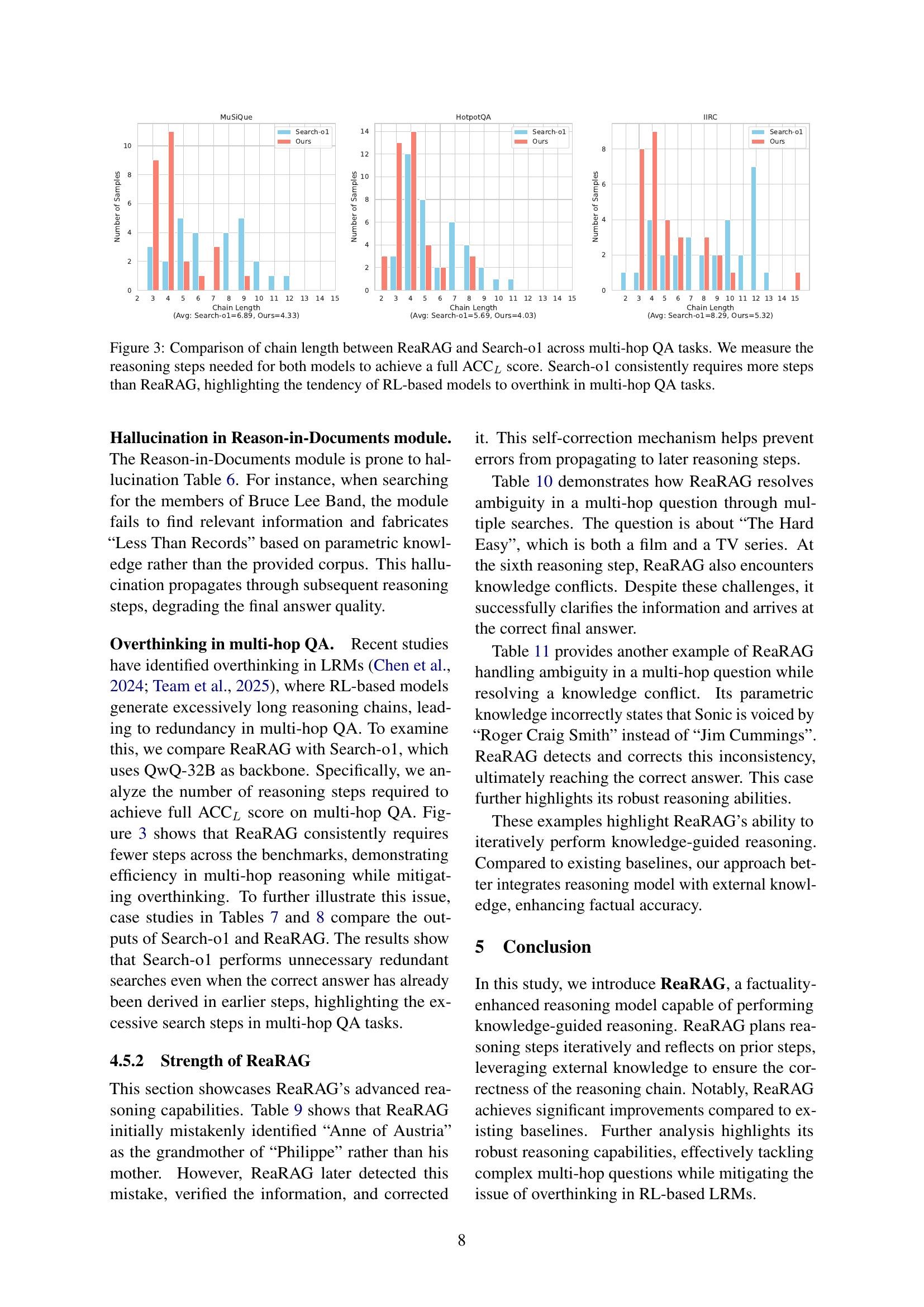

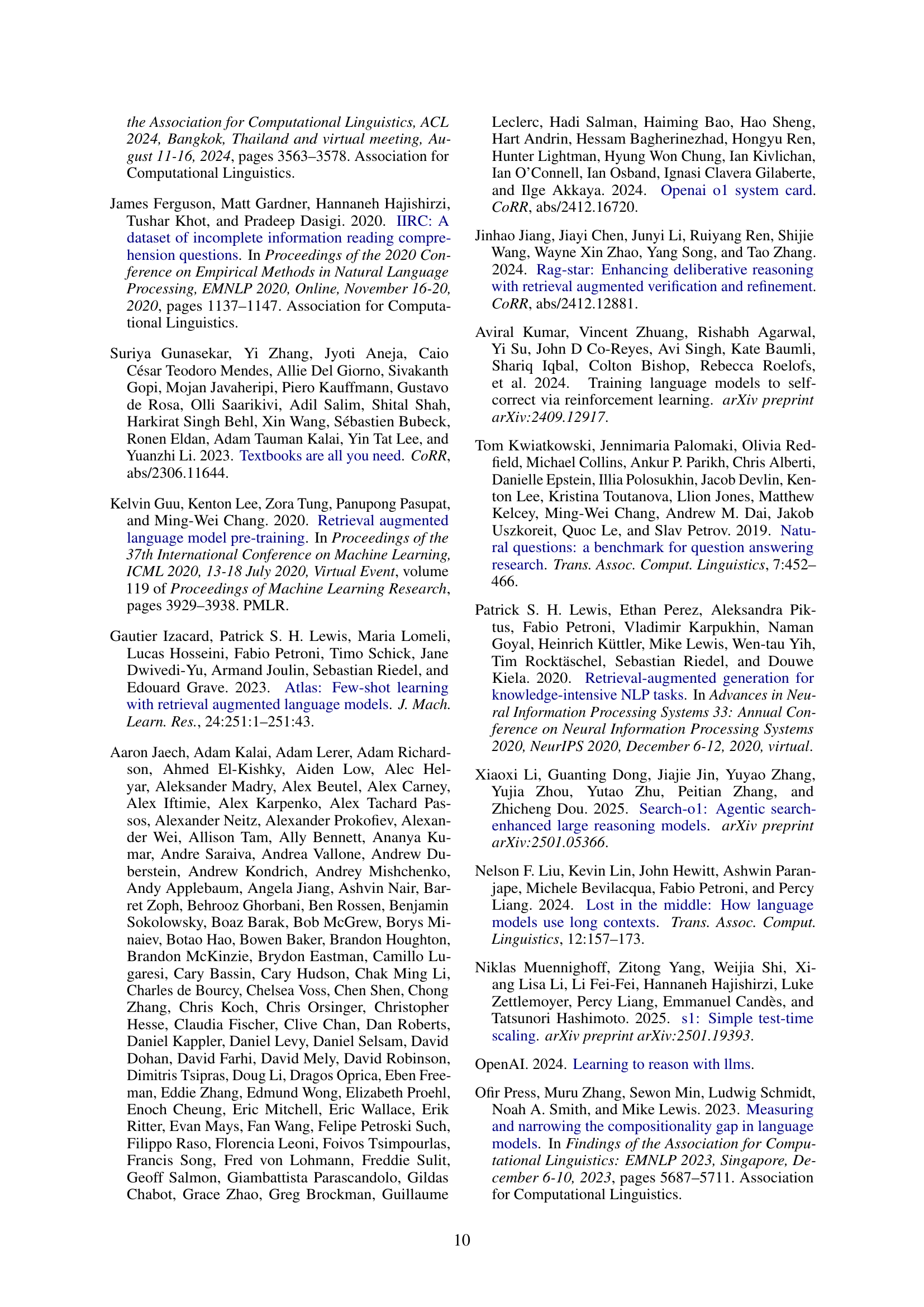

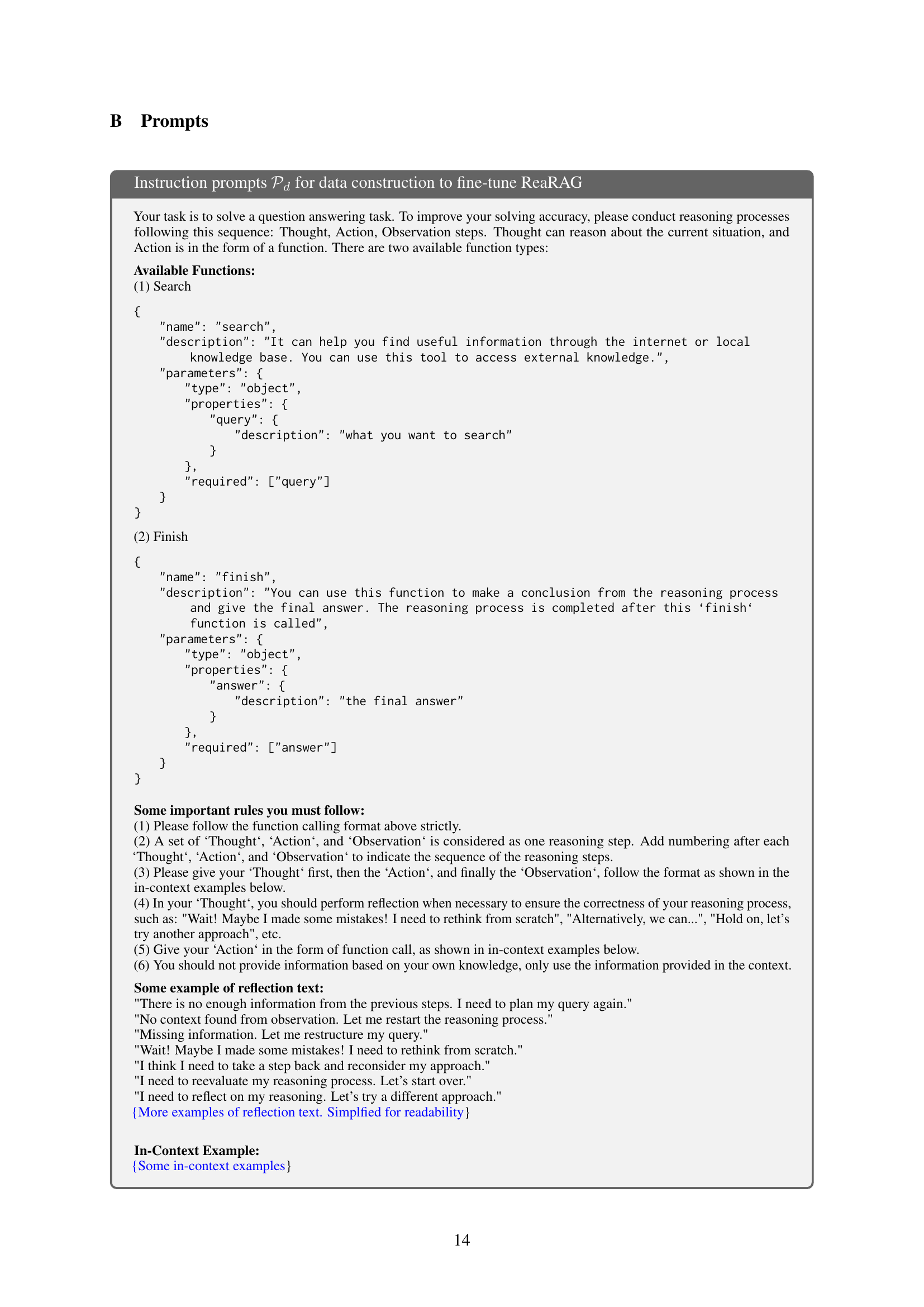

This figure compares the number of reasoning steps ReaRAG and Search-o1 take to reach a full $\text{ACC}_L$ score (LLM-as-judge accuracy) on multi-hop question answering tasks. The x-axis shows chain length (the number of reasoning steps), and the y-axis shows the number of instances at each chain length. Bars are grouped by model and dataset, and each model's average chain length is shown. The key finding is that Search-o1 consistently needs more reasoning steps than ReaRAG. This reflects the tendency of RL-based models to overthink on complex reasoning tasks.


Figure 3: Comparison of chain length between ReaRAG and Search-o1 across multi-hop QA tasks. We measure the reasoning steps needed for both models to achieve a full $\text{ACC}_L$ score. Search-o1 consistently requires more steps than ReaRAG, highlighting the tendency of RL-based models to overthink in multi-hop QA tasks.
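The measurement behind Figure 3 can be approximated as follows. Keep only the instances that received a full $\text{ACC}_L$ score, then build a histogram of their chain lengths and compute the average. The summary does not specify how a "reasoning step" is counted. Taking each record's step count as given is therefore an assumption, and so are the `records` format and the toy data.

```python
from collections import Counter
from statistics import mean


def chain_length_stats(records: list[tuple[int, float]]) -> tuple[Counter, float]:
    """Histogram and mean of chain lengths over instances with a full ACC_L score.

    Each record is (number_of_reasoning_steps, acc_l), where acc_l is the
    LLM-judge score for that instance (assumed to be 1.0 when fully correct).
    """
    lengths = [steps for steps, acc_l in records if acc_l == 1.0]
    if not lengths:
        return Counter(), 0.0
    return Counter(lengths), float(mean(lengths))


# Toy records, not data from the paper.
histogram, average = chain_length_stats([(2, 1.0), (3, 1.0), (3, 0.5), (4, 1.0), (6, 0.0)])
print(sorted(histogram.items()), average)  # [(2, 1), (3, 1), (4, 1)] 3.0
```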

More on tables

| | MQ (multi-hop) | HoPo (multi-hop) | IIRC (multi-hop) | NQ (single-hop) |
|---|---|---|---|---|
| Invalid rate (%) | 19.00 | 28.00 | 23.00 | 25.00 |

Table 2 presents the percentage of instances where the QwQ-32B model failed to generate the necessary special tokens required by the Search-o1 method for retrieving information. These failures resulted in a breakdown of the retrieval process within Search-o1, highlighting a key weakness of that approach. The table shows the invalid generation rates for special tokens broken down by dataset: MuSiQue, HotpotQA, IIRC, and NQ. Higher percentages indicate a greater frequency of retrieval failures due to token generation issues. This directly impacts the performance of Search-o1, since it relies on these tokens for effective retrieval.


Table 2: Invalid generation rates of special tokens in QwQ-32B, leading to retrieval failures in Search-o1.
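Table 2 does not spell out what counts as an invalid generation. One plausible check, sketched here under that assumption, treats a generation as invalid when its `<|begin_search_query|>` and `<|end_search_query|>` tokens are unbalanced or enclose an empty query. Either failure would prevent a query from being extracted for retrieval. The token strings come from the Search-o1 traces in the case studies. The validity rule and the function names are hypothetical.

```python
import re

# Special tokens as they appear in the Search-o1 traces in this summary.
BEGIN, END = "<|begin_search_query|>", "<|end_search_query|>"
QUERY_RE = re.compile(re.escape(BEGIN) + r"(.*?)" + re.escape(END), re.DOTALL)


def extract_queries(generation: str) -> list[str]:
    """Return the stripped text between each well-formed BEGIN/END pair."""
    return [query.strip() for query in QUERY_RE.findall(generation)]


def is_valid(generation: str) -> bool:
    """Assumed validity rule: tokens are balanced and every query is non-empty."""
    queries = extract_queries(generation)
    balanced = generation.count(BEGIN) == generation.count(END) == len(queries)
    return balanced and all(queries)


def invalid_rate(generations: list[str]) -> float:
    """Percentage of generations that violate the assumed rule."""
    return 100.0 * sum(not is_valid(g) for g in generations) / len(generations)


ok = f"{BEGIN}Hannibal and Scipio book{END}"
unclosed = f"{BEGIN}Hannibal and Scipio book"  # END token never emitted
print(is_valid(ok), is_valid(unclosed), invalid_rate([ok, unclosed, ok, ok]))  # True False 25.0
```

Under this rule, a generation that never issues a search counts as valid, because it contains no malformed tokens.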

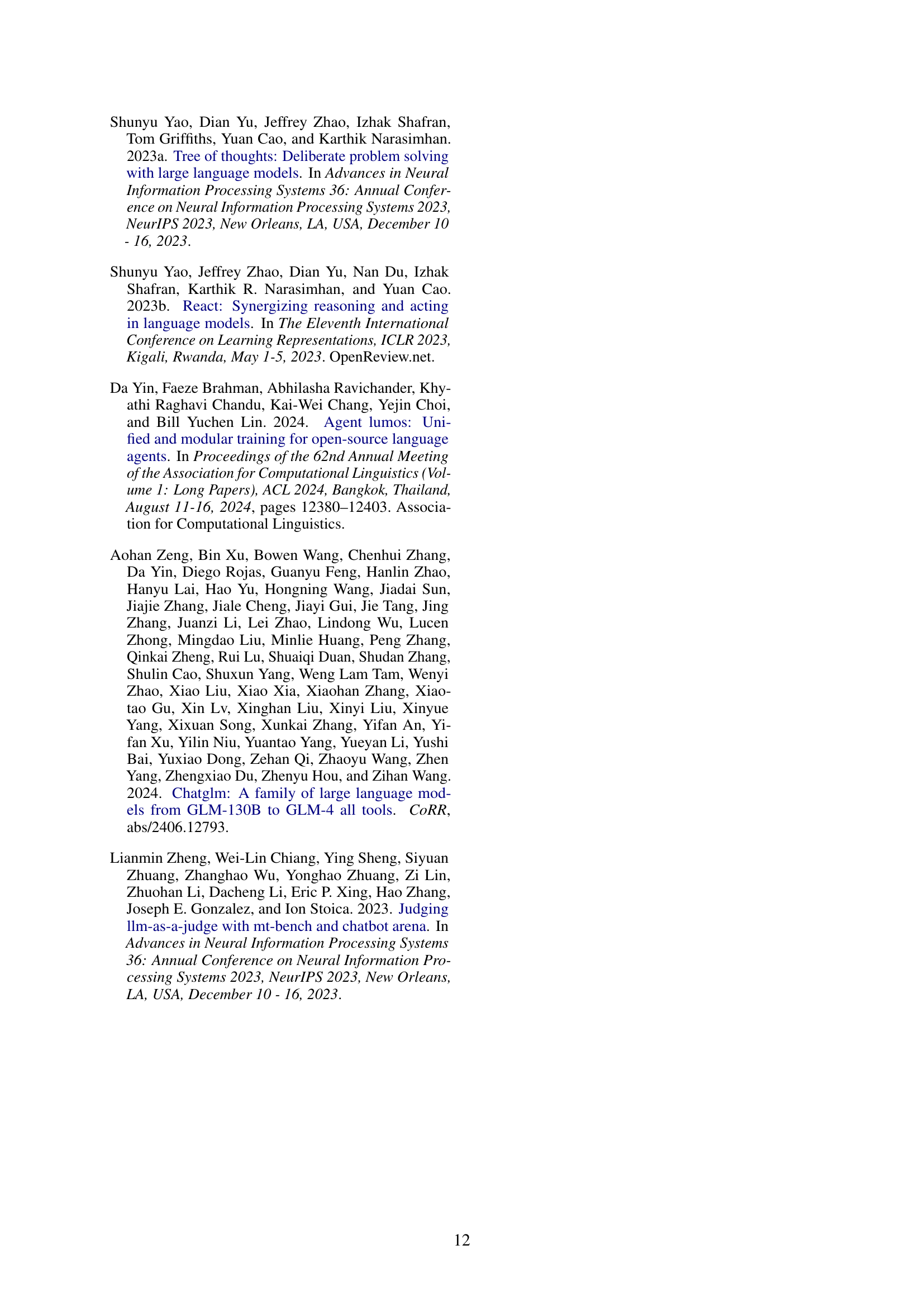

Multi-hop: MuSiQue, HotpotQA, IIRC; single-hop: NQ.

| Model | MuSiQue $\text{ACC}_L$ | MuSiQue EM | HotpotQA $\text{ACC}_L$ | HotpotQA EM | IIRC $\text{ACC}_L$ | IIRC EM | NQ $\text{ACC}_L$ | NQ EM |
|---|---|---|---|---|---|---|---|---|
| GLM-4-9B(128k) | 3.50 | 0.00 | 29.50 | 23.00 | 17.00 | 15.00 | 27.50 | 16.00 |
| GLM-4-32B(128k) | 6.50 | 1.00 | 40.00 | 28.00 | 17.00 | 11.50 | 44.50 | 25.00 |
| QwQ-32B | 11.00 | 2.00 | 35.00 | 10.00 | 21.25 | 14.50 | 37.50 | 12.00 |

Table 3 reports the closed-book performance of several language models on single-hop and multi-hop question answering benchmarks, where the models cannot access external knowledge. The models achieve moderate scores on the single-hop NQ benchmark, but their accuracy drops sharply on multi-hop benchmarks such as MuSiQue, where EM is at most 2.00. This highlights a critical limitation of relying solely on parametric knowledge (the knowledge encoded in the model's weights) for complex, multi-step reasoning. It also supports the argument that accessing and effectively using external knowledge is crucial for multi-hop question answering.


Table 3: Closed-book performance of language models on multi-hop and single-hop benchmarks. These models perform better on single-hop benchmarks but score significantly lower on multi-hop benchmarks, highlighting the limitations of relying solely on parametric knowledge for these benchmarks.

Multi-hop: MuSiQue, HotpotQA, IIRC; single-hop: NQ.

| Model | MuSiQue $\text{ACC}_L$ | MuSiQue EM | HotpotQA $\text{ACC}_L$ | HotpotQA EM | IIRC $\text{ACC}_L$ | IIRC EM | NQ $\text{ACC}_L$ | NQ EM |
|---|---|---|---|---|---|---|---|---|
| ReaRAG-9B w/o reasoning | 60.00 | 43.00 | 68.00 | 50.00 | 31.00 | 21.50 | 45.00 | 24.00 |
| ReaRAG-9B w/ reasoning | 66.00 | 40.00 | 75.50 | 56.00 | 42.75 | 29.00 | 52.00 | 25.00 |

This table compares ReaRAG-9B fine-tuned with and without strong reasoning capabilities. Through fine-tuning, the 'w/ reasoning' variant inherits the strong reasoning abilities of an LRM, while the 'w/o reasoning' variant does not. The comparison covers four benchmarks: the multi-hop MuSiQue, HotpotQA, and IIRC, and the single-hop NQ. The 'w/ reasoning' variant achieves higher $\text{ACC}_L$ on every benchmark and higher EM on all except MuSiQue (40.00 vs. 43.00). This highlights the effectiveness of the fine-tuning process used to integrate robust reasoning into ReaRAG-9B.


Table 4: Performance comparison of models with and without strong reasoning capabilities. w/ reasoning consistently outperforms w/o reasoning across all benchmarks, demonstrating the effectiveness of our fine-tuning process, which enables ReaRAG-9B to inherit the strong reasoning abilities of LRM.

| # Case Study 1: Where was the author of Hannibal and Scipio educated at? |

|---|

| Ground truth: Exeter College |

| Search-o1 |

| Alright, I’ve got this question: … Simplified here. |

| So, I’ll do a search for "Hannibal and Scipio book." |

| <|begin_search_query|>Hannibal and Scipio book<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|> |

| Hmm, that didn’t turn up anything. Maybe the book has a different title. Let me think differently. |

| … Simplified here. Search-o1's reasoning diverges, so it is unable to find related information. |

| Only search queries and results are listed below for simplicity. |

| <|begin_search_query|>Punic Wars book<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|> |

| <|begin_search_query|>Hannibal vs. Scipio book<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|> |

| <|begin_search_query|>Adrian Goldsworthy books<|end_search_query|> |

| <|begin_search_result|>No helpful information found.**Thoughts**After carefully reviewing the three web pages provided, it is evident that none of them … Simplified here.<|end_search_result|> |

| <|begin_search_query|>Robert F. Pennell education<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|> |

| … Simplified here. Search-o1 tries many unrelated search queries, demonstrating divergent reasoning. |

| I’ve reached the maximum number of searches. Based on the information I have, I need to make an educated guess.… Simplified here. The maximum number of searches is reached. |

| Search-o1 tries to make a guess when there is not enough information to answer the multi-hop question. |

| Considering that Oxford and Cambridge are traditional centers for classical studies in the UK, I’ll go with Oxford. |

| Therefore, my answer is: |

| Oxford |

| ReaRAG-9B |

| Thought 1: So I need to find out where the author of "Hannibal" and "Scipio" was educated. First, I need to know who the author is. |

| Action 1: {'function': 'search', 'parameters': {'query': 'Who is the author of Hannibal and Scipio?'}} |

| Observation 1: The author of "Hannibal and Scipio" is Thomas Nabbes. |

| Thought 2: Okay, now that I know the author is Thomas Nabbes, I need to find out where he was educated. |

| Action 2: {'function': 'search', 'parameters': {'query': 'Where was Thomas Nabbes educated?'}} |

| Observation 2: Thomas Nabbes was educated at Exeter College, Oxford. |

| Thought 3: Great, so Thomas Nabbes studied at Exeter College in Oxford. I should make sure that this the correct information. |

| Action 3: {'function': 'search', 'parameters': {'query': 'Confirm the educational background of Thomas Nabbes'}} |

| Observation 3: Thomas Nabbes was educated at Exeter College, Oxford in 1621. He left the university without taking a degree. |

| Thought 4: So, he studied at Exeter College in Oxford, but didn’t finish his degree. That’s interesting. I think this is the information I need. |

| Action 4: {'function': 'finish', 'parameters': {'answer': 'Thomas Nabbes was educated at Exeter College, Oxford.'}} |

| Final Answer: Exeter College, Oxford |

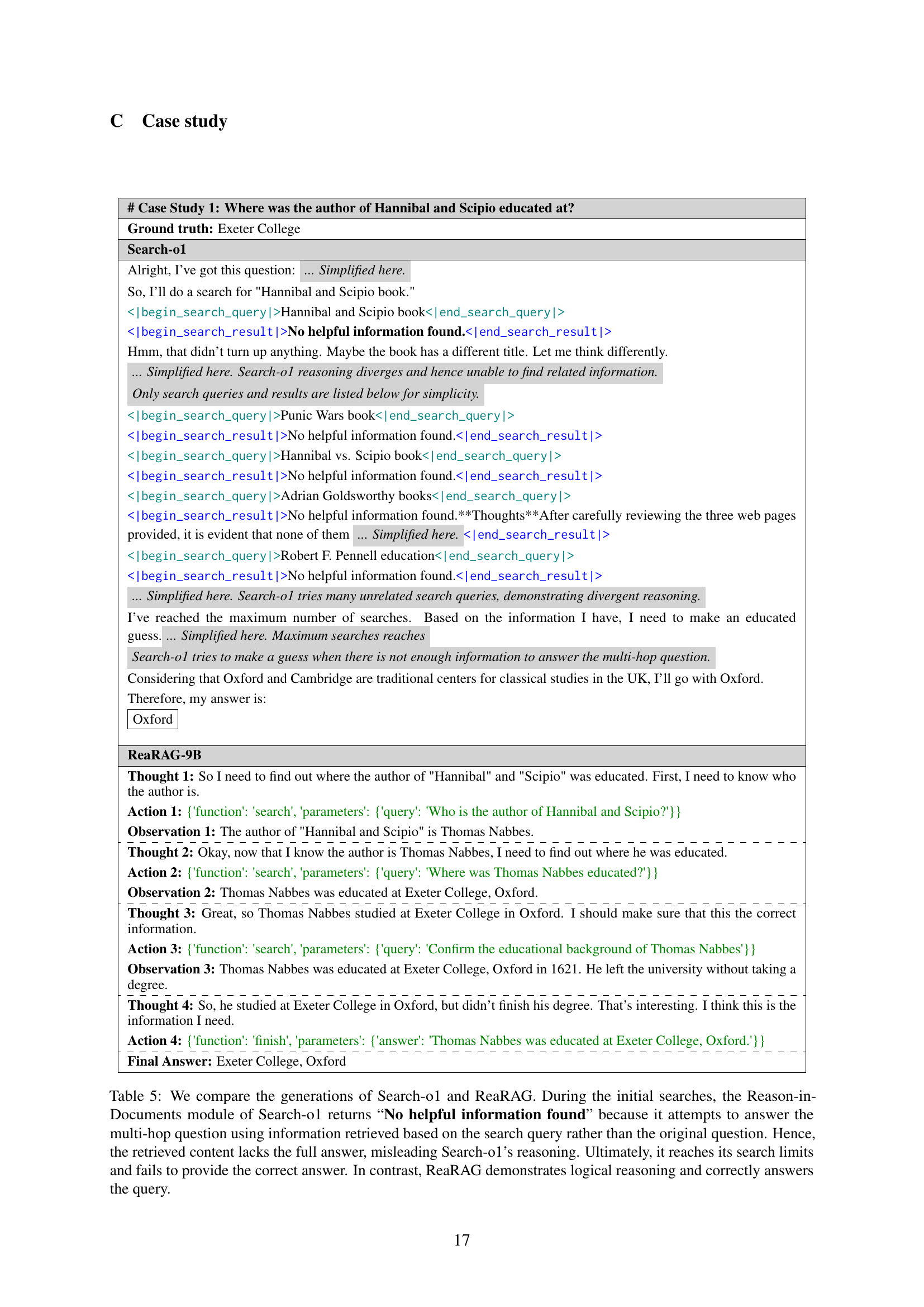

This table compares the generations of Search-o1 and ReaRAG on a multi-hop question. Search-o1 relies on a Reason-in-Documents module that tries to answer the original multi-hop question using only the documents retrieved for the current search query. Those documents lack the full answer, so the module returns "No helpful information found." This misleads Search-o1's reasoning and leads to failure. ReaRAG, in contrast, uses iterative, knowledge-guided reasoning and retrieval to reach the correct answer.


Table 5: We compare the generations of Search-o1 and ReaRAG. During the initial searches, the Reason-in-Documents module of Search-o1 returns “No helpful information found” because it attempts to answer the multi-hop question using information retrieved based on the search query rather than the original question. Hence, the retrieved content lacks the full answer, misleading Search-o1’s reasoning. Ultimately, it reaches its search limits and fails to provide the correct answer. In contrast, ReaRAG demonstrates logical reasoning and correctly answers the query.

| # Case Study 2: What record label did the person who is part of The Bruce Lee Band start? |

|---|

| Ground truth: Asian Man Records |

| Search-o1 |

| Alright, so I need to find out what record label the person who is part of The Bruce Lee Band … Simplified here. |

| So, I’ll do a search for "The Bruce Lee Band." |

| <|begin_search_query|>The Bruce Lee Band<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|> |

| Hmm, that’s strange. Maybe the band name is different or not well-known. Perhaps I should try searching for "Bruce Lee tribute band" or something like that. |

| … Simplified here. Search-o1 tries different searches, but Reason-in-Documents returns “No helpful information found.” |

| Only search queries and search results are listed here for simplicity. |

| <|begin_search_query|>Bruce Lee tribute band<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|> |

| <|begin_search_query|>Bruce Lee band<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|> |

| … Simplified here. |

| <|begin_search_query|>The Bruce Lee Band members<|end_search_query|> |

| <|begin_search_result|>Less Than Records, started by a member of Less Than Jake, who is part of The Bruce Lee Band.**Explanation**Based on the information from Web Page 1, The Bruce Lee Band features Less Than Jake as part of its backing band. Less Than Jake is known for starting their own record label, Less Than Records. … Simplified here. Search-o1 fabricates the name "Less Than Records" |

| <|begin_search_query|>Less Than Jake record label<|end_search_query|> |

| <|begin_search_result|>No helpful information found.**Explanation**After reviewing the three web pages provided for the search query "Less Than Jake record label," none of them contained specific information about which member of Less Than Jake started Less Than Records. … Simplified here.<|end_search_result|> |

| … Simplified here.Less Than Records was started by a member of Less Than Jake, who is also part of The Bruce Lee Band.Therefore, the record label started by the person who is part of The Bruce Lee Band is Less Than Records. |

| Final Answer |

| Less Than Records |

| ReaRAG-9B |

| Thought 1: I need to find out which record label the person who is part of The Bruce Lee Band started. First, I should figure out who is part of The Bruce Lee Band. |

| Action 1: {'function': 'search', 'parameters': {'query': 'Who is part of The Bruce Lee Band?'}} |

| Observation 1: Mike Park and his backing band, which has included Less Than Jake and the Rx Bandits, are part of The Bruce Lee Band. |

| Thought 2: So, Mike Park is involved with The Bruce Lee Band. I need to find out if he started a record label. |

| Action 2: {'function': 'search', 'parameters': {'query': 'Did Mike Park start a record label?'}} |

| Observation 2: Yes, Mike Park started a record label. |

| Thought 3: Great, now I need to find out the name of the record label he started. |

| Action 3: {'function': 'search', 'parameters': {'query': 'What record label did Mike Park start?'}} |

| Observation 3: Mike Park started the record label Asian Man Records. |

| Thought 4: So, Asian Man Records is the label Mike Park started. But I should make sure that this is correct and see if there’s any other relevant information. |

| Action 4: {'function': 'search', 'parameters': {'query': 'Confirm if Asian Man Records was started by Mike Park.'}} |

| Observation 4: Yes, Asian Man Records was started by Mike Park. |

| Thought 5: Alright, I have confirmed that Mike Park started Asian Man Records. Since he is part of The Bruce Lee Band, this should be the answer. |

| Action 5: {'function': 'finish', 'parameters': {'answer': 'Asian Man Records'}} |

| Final Answer: Asian Man Records |

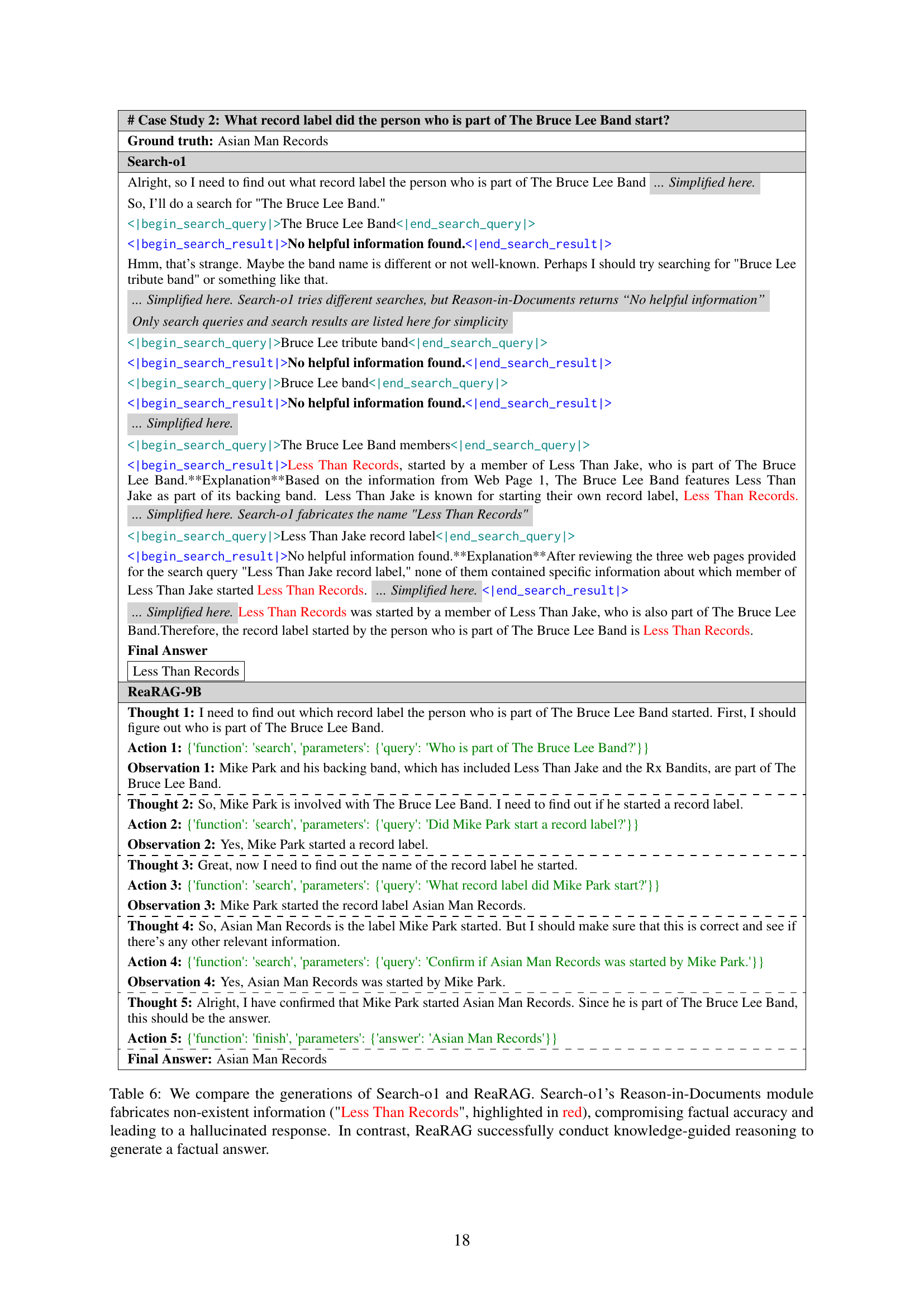

This table compares the generations of Search-o1 and ReaRAG on a multi-hop question. Search-o1's Reason-in-Documents module generates answers from retrieved documents. In this example, however, it hallucinates a non-existent record label ('Less Than Records'), which leads to an incorrect answer. ReaRAG, in contrast, answers accurately through knowledge-guided reasoning, iteratively refining its search queries and integrating external knowledge.


Table 6: We compare the generations of Search-o1 and ReaRAG. Search-o1’s Reason-in-Documents module fabricates non-existent information ('Less Than Records', highlighted in red), compromising factual accuracy and leading to a hallucinated response. In contrast, ReaRAG successfully conducts knowledge-guided reasoning to generate a factual answer.

| # Case Study 3: In what county is William W. Blair’s birthplace located? |

|---|

| Ground truth: Orleans County |

| Search-o1 |

| … Simplified here. Maybe I can search for "Holley, New York county" to find out which county it’s part of. |

| <|begin_search_query|>Holley, New York county<|end_search_query|> |

| <|begin_search_result|>Holley is located in Orleans County, New York.<|end_search_result|> |

| … Simplified here. But wait, let me double-check to make sure that Holley is indeed in Orleans County. Sometimes place names can be ambiguous, and there might be multiple Holleys in different counties or states. |

| Let me search for "Holley, New York county" again to confirm. |

| <|begin_search_query|>Holley, New York county<|end_search_query|> |

| <|begin_search_result|>You have searched this query. Please refer to previous results.<|end_search_result|> |

| Alright, since the previous search already indicated that Holley is in Orleans County, New York, and no other information contradicts that, I can confidently say that William W. Blair was born in Orleans County. |

| However, to be thorough, perhaps I should verify if there are any other places named Holley in New York or elsewhere that might be confused with the one where William W. Blair was born. |

| Let me search for "Holley, New York" to see if there’s only one such place. |

| <|begin_search_query|>Holley, New York<|end_search_query|> |

| <|begin_search_result|>Holley is a village … Simplified here. <|end_search_result|> |

| But just to cover all bases, maybe there’s another Holley in New York state that I should consider. Let me search for "New York state places named Holley" to see if there are multiple locations with that name. |

| <|begin_search_query|>New York state places named Holley<|end_search_query|> |

| <|begin_search_result|>Holley is a village … Simplified here.<|end_search_result|> |

| Search-o1 continues to execute excessive and unnecessary searches. Only search queries are listed here for simplicity. |

| <|begin_search_query|>William W. Blair historian<|end_search_query|> |

| <|begin_search_query|>William W. Blair Latter Day Saints<|end_search_query|> |

| <|begin_search_query|>William W. Blair birth certificate Orleans County<|end_search_query|> |

| <|begin_search_query|>William W. Blair biography<|end_search_query|> |

| Perfect, so this biography confirms that he was born in Holley, Orleans County, New York. This aligns with all the previous information I’ve found. |

| Therefore, I can confidently say that William W. Blair’s birthplace is located in Orleans County. … Simplified here. |

| Final Answer |

| Orleans County |

| ReaRAG-9B |

| Thought 1: I need to find out in which county William W. Blair was born. First, I should figure out where he was born, meaning his birthplace city or town. |

| Action 1: {'function': 'search', 'parameters': {'query': 'Where was William W. Blair born? '}} |

| Observation 1: William W. Blair was born in Holley, New York. |

| Thought 2: Okay, so now I know that his birthplace is Holley, New York. Next, I need to determine which county Holley is located in. |

| Action 2: {'function': 'search', 'parameters': {'query': 'Which county is Holley, New York in?'}} |

| Observation 2: Holley, New York is in Orleans County. |

| Thought 3: So, if Holley is in Orleans County, then that should be the answer. But I should double-check if there’s any ambiguity or if there are multiple places named Holley in New York. |

| Action 3: {'function': 'search', 'parameters': {'query': 'Are there multiple Holleys in New York State?'}} |

| Observation 3: Based on the provided context, there is only one Holley mentioned, which is in Orleans County, New York. The context does not provide information about other Holleys in New York State. Therefore, based on the given context, I cannot confirm whether there are multiple Holleys in New York State. |

| Thought 4: Given that, and considering the information I have, I think it’s safe to conclude that William W. Blair’s birthplace, Holley, is in Orleans County, New York. |

| Action 4: {'function': 'finish', 'parameters': {'answer': 'Orleans County'}} |

| Final Answer: Orleans County |

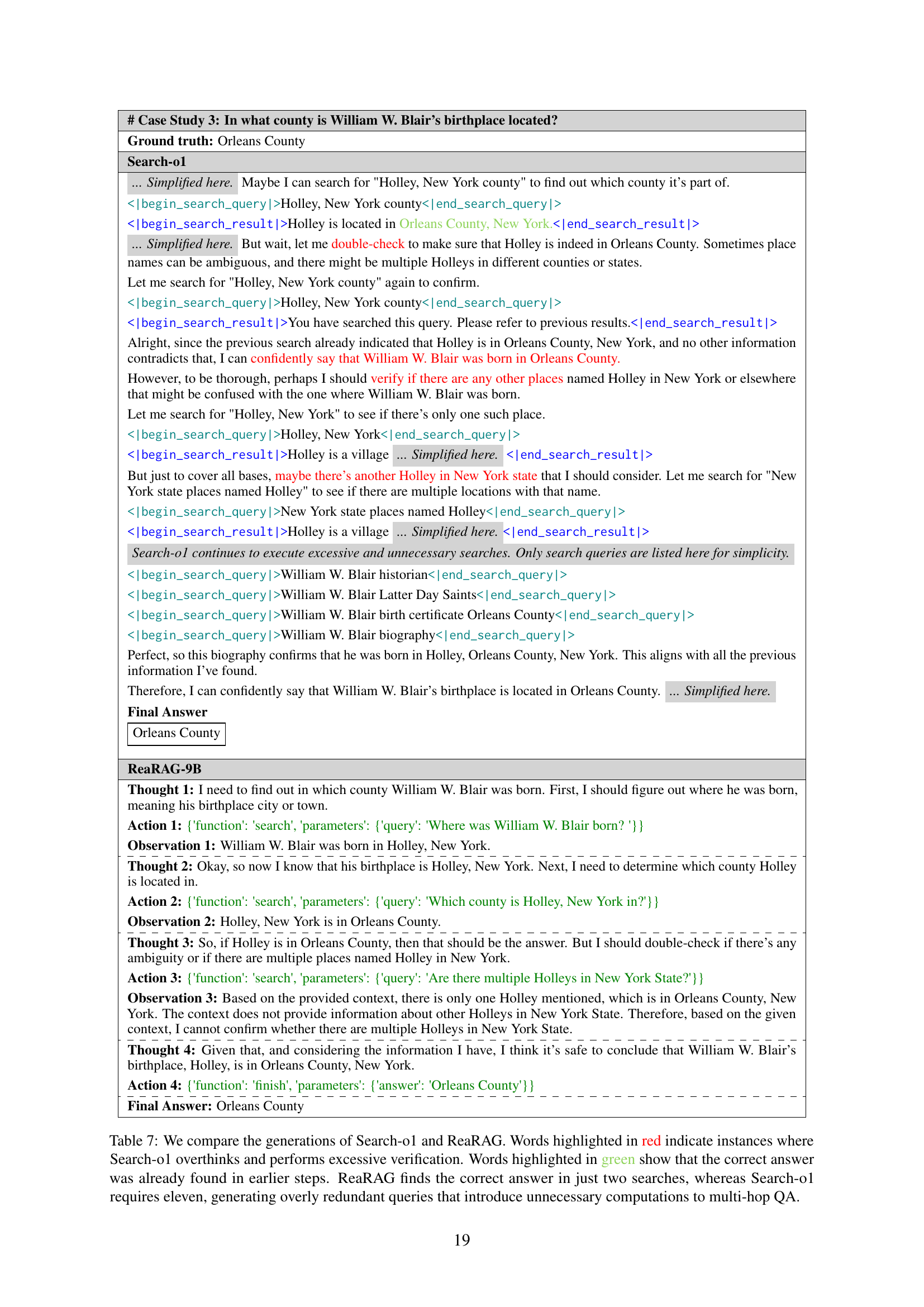

This table compares the generations of Search-o1 and ReaRAG on a multi-hop question. Search-o1 overthinks, issuing far more queries than necessary even after it has obtained the correct answer, and these redundant queries are highlighted in red. Green highlights mark where Search-o1 already had the information needed to answer but kept searching. ReaRAG, in contrast, finds the correct answer with far fewer queries, demonstrating better efficiency and lower computational cost.


Table 7: We compare the generations of Search-o1 and ReaRAG. Words highlighted in red indicate instances where Search-o1 overthinks and performs excessive verification. Words highlighted in green show that the correct answer was already found in earlier steps. ReaRAG finds the correct answer in just two searches, whereas Search-o1 requires eleven, generating overly redundant queries that introduce unnecessary computations to multi-hop QA.

| # Case Study 4: Where is Ulrich Walter’s employer headquartered? |

|---|

| Ground truth: Cologne |

| Search-o1 |

| Alright, I have this question: "Where is Ulrich Walter’s employer … Simplified here. |

| <|begin_search_query|>Who is Ulrich Walter?<|end_search_query|> |

| <|begin_search_result|>Ulrich Walter is a German astronaut who was selected in 1987 to join the German astronaut team and trained at the German Aerospace Center (DLR). His employer, the German Aerospace Center, is headquartered in Cologne, Germany.<|end_search_result|> |

| Hmm, according to this search result, Ulrich Walter is a German astronaut who was selected in 1987 and trained at the German Aerospace Center (DLR). It also says that his employer, the DLR, is headquartered in Cologne, Germany. |

| … Simplified here. <|begin_search_query|>Headquarters of German Aerospace Center<|end_search_query|> |

| <|begin_search_result|>The German Aerospace Center (DLR) is headquartered in Cologne, Germany.<|end_search_result|> |

| So, according to this, the DLR is headquartered in Cologne, Germany. |

| But just to be thorough, let me see if there is any other information about Ulrich Walter’s employer. |

| <|begin_search_query|>Ulrich Walter workplace<|end_search_query|> |

| <|begin_search_result|>Ulrich Walter’s employer is the German Aerospace Center (DLR), headquartered in Cologne, Germany.<|end_search_result|> |

| … Simplified here. |

| I should probably confirm if Ulrich Walter is still active or if he has retired. … Simplified here. |

| <|begin_search_query|>Ulrich Walter retirement<|end_search_query|> |

| <|begin_search_result|>No helpful information found.<|end_search_result|>… Simplified here. |

| Alternatively, perhaps he has taken up an academic position at a university. |

| <|begin_search_query|>Ulrich Walter university affiliation<|end_search_query|> |

| <|begin_search_result|>Ulrich Walter’s employer, the German Aerospace Center (DLR), is headquartered in Cologne, Germany.<|end_search_result|> |

| … Simplified here.Maybe I should look for his professional profile or LinkedIn page to see his current employer. |

| <|begin_search_query|>Ulrich Walter LinkedIn<|end_search_query|> |

| … Simplified here. the answer to the question "Where is Ulrich Walter’s employer headquartered?" is Cologne, Germany. |

| Final Answer |

| Cologne, Germany |

| ReaRAG-9B |

| Thought 1: I need to find out where Ulrich Walter’s employer is headquartered. First, I should figure out who Ulrich Walter is and where he works. |

| Action 1: {'function': 'search', 'parameters': {'query': 'Who is Ulrich Walter and where does he work?'}} |

| Observation 1: Ulrich Walter is a German astronaut who worked at the German Aerospace Center. |

| Thought 2: So, Ulrich Walter works at the German Aerospace Center. Now, I need to find out where this center is headquartered. |

| Action 2: {'function': 'search', 'parameters': {'query': 'Where is the German Aerospace Center headquartered?'}} |

| Observation 2: The German Aerospace Center (DLR) is headquartered in Cologne, Germany. |

| Thought 3: Alright, so if DLR is headquartered in Cologne, Germany, then that should be the answer. But maybe I should double-check if Ulrich Walter works there still or if there’s any update. |

| Action 3: {'function': 'search', 'parameters': {'query': 'Is Ulrich Walter still working at DLR?'}} |

| Observation 3: I cannot provide real-time information or updates on whether Ulrich Walter is still working at the German Aerospace Center (DLR) since my training data only goes up until 2023. To get the most current information on Ulrich Walter’s employment status, you would need to check the latest sources or contact the German Aerospace Center directly. |

| Thought 4: Given that, I should probably proceed with the information I have, which is that Ulrich Walter works at DLR, which is headquartered in Cologne, Germany. |

| Action 4: {'function': 'finish', 'parameters': {'answer': 'Cologne, Germany'}} |

| Final Answer: Cologne, Germany |

🔼 Table 8 presents a detailed comparison of the reasoning processes Search-o1 and ReaRAG use to answer a multi-hop question. It highlights Search-o1's tendency toward overthinking: Search-o1 issues eight search queries, many of them redundant because the correct answer had already been found in earlier steps (highlighted in green). In contrast, ReaRAG reaches the correct answer in only three searches. Search-o1's excessive queries are highlighted in red, underscoring the unnecessary computation it spends on multi-hop question answering. The comparison illustrates ReaRAG's ability to integrate external knowledge into its reasoning more efficiently.


Table 8: We compare the generations of Search-o1 and ReaRAG. Words highlighted in red indicate instances where Search-o1 overthinks and performs excessive verification. Words highlighted in green show that the correct answer was already found in earlier steps. As shown above, ReaRAG arrives at the correct answer in just three searches, while Search-o1 requires eight, producing excessive queries that are redundant in multi-hop QA.
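The search counts in the caption can be tallied mechanically from the raw traces. The sketch below is a minimal illustration, assuming each trace is a plain string in one of the two formats shown in Table 8: Search-o1 wraps every query in `<|begin_search_query|>` / `<|end_search_query|>` tokens, and ReaRAG writes one `Action N: {...}` line per step as a Python-literal dict. The function names are hypothetical and are not part of either system's released code.

```python
import ast
import re

# Search-o1 marks each query with special tokens (format taken from the Table 8 trace).
SEARCH_O1_QUERY = re.compile(
    r"<\|begin_search_query\|>(.*?)<\|end_search_query\|>", re.DOTALL
)
# ReaRAG writes one "Action N: {...}" per step; the dict sits on a single line.
REARAG_ACTION = re.compile(r"Action \d+:\s*(\{.*\})")


def search_o1_queries(trace: str) -> list:
    """Return the queries Search-o1 issued, in order."""
    return [q.strip() for q in SEARCH_O1_QUERY.findall(trace)]


def rearag_actions(trace: str) -> list:
    """Parse each ReaRAG action dict (single-quoted, so not valid JSON)."""
    return [ast.literal_eval(m) for m in REARAG_ACTION.findall(trace)]


def count_searches(actions: list) -> int:
    """Count 'search' actions; 'finish' ends the chain and is not a search."""
    return sum(1 for a in actions if a["function"] == "search")


# The ReaRAG-9B actions from Table 8, copied verbatim.
trace = """
Action 1: {'function': 'search', 'parameters': {'query': 'Who is Ulrich Walter and where does he work?'}}
Action 2: {'function': 'search', 'parameters': {'query': 'Where is the German Aerospace Center headquartered?'}}
Action 3: {'function': 'search', 'parameters': {'query': 'Is Ulrich Walter still working at DLR?'}}
Action 4: {'function': 'finish', 'parameters': {'answer': 'Cologne, Germany'}}
"""
print(count_searches(rearag_actions(trace)))  # → 3
```

`ast.literal_eval` is used rather than `json.loads` because the action dicts use single quotes. On the ReaRAG-9B trace the count is 3, matching the caption.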

| # Case Study 5: Who is the grandmother of Philippe, Duke of Orléans? |
|---|
| Ground truth: Marie de’ Medici |
| ReaRAG-9B |
| Thought 1: So I need to find out who the grandmother of Philippe, Duke of Orléans, is. I don’t know much about French royalty, so I’ll need to look this up. |
| Action 1: {'function': 'search', 'parameters': {'query': 'Who is the grandmother of Philippe, Duke of Orléans?'}} |
| Observation 1: The grandmother of Philippe, Duke of Orléans (Philippe I) is Anne of Austria. *(Wrong answer!)* |
| Thought 2: Wait, let me make sure about this. I have found that his grandmother is Anne of Austria, but I should verify this information from multiple sources to ensure accuracy. |
| Action 2: {'function': 'search', 'parameters': {'query': 'Philippe, Duke of Orléans family tree'}} |
| Observation 2: Philippe, Duke of Orléans, was the younger son of Louis XIII of France and Anne of Austria. His older brother was Louis XIV, famously known as the "Sun King." Philippe became Duke of Orléans upon the death of his uncle Gaston in 1660. In 1661, he also received the dukedoms of Valois and Chartres. |
| Thought 3: So, according to this, his grandmother is Anne of Austria. But let me see if there’s any confusion here. Philippe’s parents were Louis XIII and Anne of Austria, so she would be his mother, not grandmother. Wait, that doesn’t make sense. Maybe I need to clarify the generational relationships. *(ReaRAG notices the mistakes.)* |
| Action 3: {'function': 'search', 'parameters': {'query': 'Genealogy of the House of Orléans '}} |
| Observation 3: The House of Orléans is a cadet branch of the House of Bourbon, which is one of the most prominent royal houses in European history. The Orléans branch originated with Philippe I, Duke of Orléans, who was the younger son of Louis XIII of France and Anne of Austria. Philippe I was the brother of Louis XIV, the "Sun King." The genealogy of the House of Orléans can be traced through various descendants of Philippe I, including: 1. Philippe II, Duke of Orléans, who was the father of Louise Élisabeth d’Orléans mentioned in Document 351. Philippe II was also known as Philippe I’s grandson, as he was the son of Philippe I’s son, Louis XIV. 2. Louis Philippe, Duke of Orléans, who became King of the French after the July Revolution of 1830. He was the father of François d’Orléans, Prince of Joinville, mentioned in Document 393. These are just a few examples of the descendants of Philippe I, Duke of Orléans, and the House of Orléans continues to have living members today. |
| Thought 4: Hmm, I think I’m getting confused here. Philippe I, Duke of Orléans, was the son of Louis XIII and Anne of Austria. So, Anne of Austria would be his mother, not his grandmother. His grandmother would be the mother of his parents, which would be Louis XIII’s mother. Let me check who that was. *(ReaRAG realigns its reasoning direction.)* |
| Action 4: {'function': 'search', 'parameters': {'query': 'Who was the mother of Louis XIII of France? '}} |
| Observation 4: The mother of Louis XIII of France was Marie de’ Medici. |
| Thought 5: So, if Marie de’ Medici was the mother of Louis XIII, and Anne of Austria was his wife and the mother of Philippe I, then Anne of Austria would be Philippe I’s mother, not grandmother. So, his grandmother would be Marie de’ Medici. That makes more sense. But to be thorough, I should confirm the relationships again. Louis XIII married Anne of Austria, and they had children, including Philippe I and Louis XIV. So, Anne of Austria is Philippe I’s mother, and Marie de’ Medici is his grandmother. I think that’s correct, but I’ll double-check with another search. |
| Action 5: {'function': 'search', 'parameters': {'query': 'Philippe, Duke of Orléans family tree '}} |
| Observation 5: Philippe, Duke of Orléans (1640-1701) was the younger son of Louis XIII of France and Anne of Austria. His older brother was Louis XIV, the famous "Sun King." Philippe was styled Duke of Anjou from birth and became Duke of Orléans upon the death of his uncle Gaston in 1660. He also received the dukedoms of Valois and Chartres in 1661. Philippe had several children, including Louise Élisabeth d’Orléans, who was one of his seven daughters. Louise Élisabeth was known as Élisabeth and was a "princesse du sang" as a member of the reigning House of Bourbon. |
| Thought 6: Okay, so this confirms that Anne of Austria was Philippe I’s mother. Therefore, his grandmother would be Marie de’ Medici, who was Louis XIII’s mother. I think that’s the correct answer. But just to be absolutely sure, let’s look up the genealogy again. Louis XIII was the son of Henry IV and Marie de’ Medici, so Marie de’ Medici would be his mother, and Anne of Austria would be his wife and Philippe I’s mother. I think that’s consistent across all the information I’ve found. So, the grandmother of Philippe, Duke of Orléans, is Marie de’ Medici. I’ll go ahead and provide that as the answer. |
| Action 6: {'function': 'finish', 'parameters': {'answer': 'Marie de’ Medici'}} |
| Final Answer: Marie de’ Medici |

🔼 ReaRAG initially makes a mistake, incorrectly identifying Anne of Austria as Philippe’s grandmother. However, it quickly detects this error, reviews its previous reasoning steps, and corrects its approach. Through iterative refinement of its reasoning process, ReaRAG ultimately reaches the correct answer, showcasing its capacity for adaptive and self-correcting reasoning.


Table 9: In the first reasoning step, ReaRAG makes an incorrect deduction, mistakenly identifying Anne of Austria as Philippe’s grandmother instead of his mother. However, it quickly recognizes the error, then verifies its previous reasoning steps, and recalibrates its logic. By refining its approach, ReaRAG ultimately arrives at the correct answer, demonstrating its adaptive reasoning ability.
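The error in Table 9 is a wrong hop count: Anne of Austria is one `mother` hop from Philippe, while "grandmother" needs two hops, `father` then `mother`. A minimal sketch makes the composition explicit, using only the parent links stated in the case study and a hypothetical `follow` helper; it is an illustration of multi-hop composition, not part of ReaRAG.

```python
from typing import Optional

PHILIPPE = "Philippe I, Duke of Orléans"

# Parent links stated in Case Study 5 (Observations 2 and 4, Thought 6).
PARENTS = {
    PHILIPPE: {"father": "Louis XIII", "mother": "Anne of Austria"},
    "Louis XIII": {"father": "Henry IV", "mother": "Marie de’ Medici"},
}


def follow(person: str, path: list) -> Optional[str]:
    """Walk parent links hop by hop; return None if a link is unknown."""
    current: Optional[str] = person
    for relation in path:
        current = PARENTS.get(current, {}).get(relation)
        if current is None:
            return None
    return current


print(follow(PHILIPPE, ["mother"]))            # one hop → Anne of Austria
print(follow(PHILIPPE, ["father", "mother"]))  # two hops → Marie de’ Medici
```

The maternal line (Anne of Austria's own mother) does not appear in the case study, so the sketch leaves it out; the `father`, then `mother` path yields the ground-truth answer.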

| # Case Study 6: who is the younger brother of The episode guest stars of The Hard Easy |
|---|
| Ground truth: Bill Murray |
| ReaRAG-9B |
| Thought 1: I need to find out who the younger brother is of the episode guest stars of "The Hard Easy." First, I should figure out what "The Hard Easy" is. It sounds like a TV show or a movie. |
| Action 1: {'function': 'search', 'parameters': {'query': 'What is The Hard Easy?'}} |
| Observation 1: The Hard Easy is a 2006 American action crime drama film directed by Ari Ryan and written by Jon Lindstrom and Tom Schanley. It stars Henry Thomas, Vera Farmiga, David Boreanaz, Bruce Dern, Peter Weller, Gary Busey, and Nick Lachey. The film was released straight-to-DVD in the United States on May 22, 2007. |
| Thought 2: So, "The Hard Easy" is a 2006 film with several guest stars. I need to identify the guest stars and then find out who the younger brother of one of them is. But the question seems a bit unclear. Maybe it’s asking for the younger brother of one of the guest stars in a specific episode, but since it’s a film, not a TV series, perhaps there are multiple episodes. |
| Action 2: {'function': 'search', 'parameters': {'query': 'Are there multiple episodes of The Hard Easy?'}} |
| Observation 2: No, there is only one episode of "The Hard Easy" as part of the "Adventure Time" series. |
| Thought 3: Wait, now I’m getting confused. Earlier, I thought "The Hard Easy" was a 2006 film, but now it’s saying it’s an episode of "Adventure Time." Maybe there’s some confusion here. I need to clarify what "The Hard Easy" refers to. |
| *ReaRAG actively performs searches to resolve the confusion.* |
| Action 3: {'function': 'search', 'parameters': {'query': 'Is The Hard Easy a film or an episode? '}} |
| Observation 3: The Hard Easy is both a film and an episode. The film version was released straight-to-DVD in 2007, while the episode is part of the animated television series "Adventure Time" and aired on Cartoon Network in 2012. |
| Thought 4: Okay, so there’s a 2006 film called "The Hard Easy" and an episode with the same name in the "Adventure Time" series. The question might be referring to the episode in "Adventure Time." I need to find out the guest stars of that episode and then find out who the younger brother of one of them is. |
| Action 4: {'function': 'search', 'parameters': {'query': 'Who are the guest stars in the Adventure Time episode "The Hard Easy"? '}} |
| Observation 4: The guest stars in the Adventure Time episode "The Hard Easy" are Brian Doyle-Murray as Prince Huge and Jonathan Katz as the Mudscamp elder. |
| Thought 5: So, the guest stars are Brian Doyle-Murray and Jonathan Katz. Now, I need to find out who the younger brother of one of them is. But I don’t know their family relationships off the top of my head. I’ll need to look that up. |
| Action 5: {'function': 'search', 'parameters': {'query': 'Who is the younger brother of Brian Doyle-Murray? '}} |
| Observation 5: The younger brother of Brian Doyle-Murray is Bill Murray. |
| Thought 6: Wait, that can’t be right. Bill Murray is a famous actor, but I don’t think he’s the younger brother of Brian Doyle-Murray. Maybe I need to check their relationship again. |
| *ReaRAG encounters conflicts with its parametric knowledge.* |
| Action 6: {'function': 'search', 'parameters': {'query': 'Are Bill Murray and Brian Doyle-Murray brothers? '}} |
| Observation 6: Yes, Bill Murray and Brian Doyle-Murray are brothers. |
| Thought 7: So, if the question is asking for the younger brother of one of the guest stars in the Adventure Time episode "The Hard Easy," and the guest stars are Brian Doyle-Murray and Jonathan Katz, and the younger brother of Brian Doyle-Murray is Bill Murray, then the answer should be Bill Murray. |
| Action 7: {'function': 'finish', 'parameters': {'answer': 'Bill Murray'}} |
| Final Answer: Bill Murray |

🔼 Table 10 presents a case study demonstrating ReaRAG's ability to handle ambiguity and knowledge conflicts in multi-hop questions. The question is ambiguous because "The Hard Easy" names both a 2006 film and an episode of "Adventure Time", and the first retrieved observations disagree about which one is meant. ReaRAG issues targeted searches to resolve this ambiguity. Later, when a retrieved fact (Bill Murray is Brian Doyle-Murray's younger brother) conflicts with its parametric knowledge, it verifies the fact with another search instead of discarding it. Through this iterative reasoning and self-correction, ReaRAG arrives at the correct answer, highlighting its robustness and reflective capabilities.


Table 10: During the first three iterations, ReaRAG attempts to resolve ambiguity in the original multi-hop question, actively conducting searches to eliminate confusion. Additionally, at the sixth iteration, it encounters knowledge conflicts. Despite these challenges, ReaRAG successfully derives the correct answer, showcasing its robust reasoning abilities.
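The Thought/Action/Observation pattern in Table 10 is a loop: each `search` action is sent to the RAG engine, its result becomes the next observation, and the loop ends when a `finish` action appears. The sketch below is a simplified, hypothetical rendering of that control flow. The LRM is replaced by a scripted policy that replays Case Study 6's queries, the RAG engine by a stub, and `max_steps` is an assumed hard cap on iterations, not a value taken from the paper.

```python
from typing import Callable, Optional

# An action mirrors the trace format: {'function': ..., 'parameters': {...}}.
Action = dict


def run_agent(
    policy: Callable[[list], Action],
    rag: Callable[[str], str],
    max_steps: int = 10,
) -> tuple:
    """Alternate search actions with retrieval until 'finish' or the step cap."""
    history: list = []  # (action, observation) pairs seen so far
    for _ in range(max_steps):
        action = policy(history)  # stands in for the LRM's Thought + Action
        if action["function"] == "finish":
            return action["parameters"]["answer"], history
        observation = rag(action["parameters"]["query"])  # the Observation
        history.append((action, observation))
    return None, history  # cap reached without a 'finish' action


# Queries replayed from Case Study 6 (trailing spaces dropped).
CASE_6_QUERIES = [
    "What is The Hard Easy?",
    "Are there multiple episodes of The Hard Easy?",
    "Is The Hard Easy a film or an episode?",
    'Who are the guest stars in the Adventure Time episode "The Hard Easy"?',
    "Who is the younger brother of Brian Doyle-Murray?",
    "Are Bill Murray and Brian Doyle-Murray brothers?",
]


def scripted_policy(history: list) -> Action:
    """Hypothetical stand-in for ReaRAG: one scripted query per step, then finish."""
    step = len(history)
    if step < len(CASE_6_QUERIES):
        return {"function": "search", "parameters": {"query": CASE_6_QUERIES[step]}}
    return {"function": "finish", "parameters": {"answer": "Bill Murray"}}


def stub_rag(query: str) -> str:
    """Hypothetical RAG engine; a real one would retrieve and summarize passages."""
    return f"(retrieved passages for: {query})"


answer, history = run_agent(scripted_policy, stub_rag, max_steps=10)
print(answer, len(history))  # prints: Bill Murray 6
```

With `max_steps=10` the replay finishes with "Bill Murray" after six searches; with `max_steps=3` the cap stops it before any `finish` action and no answer is returned. That is the trade-off a step limit makes against runaway verification.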

| # Case Study 7: This singer of A Rather Blustery Day also voiced what hedgehog? |
|---|
| Ground truth: Sonic |
| ReaRAG-9B |
| Thought 1: I need to find out which singer performed "A Rather Blustery Day" and then find out what hedgehog they voiced. |
| Action 1: {'function': 'search', 'parameters': {'query': 'Who sang "A Rather Blustery Day"?'}} |
| Observation 1: Jim Cummings sang "A Rather Blustery Day". |
| Thought 2: Now that I know Jim Cummings sang that song, I need to find out what hedgehog he voiced. |
| Action 2: {'function': 'search', 'parameters': {'query': 'What hedgehog did Jim Cummings voice?'}} |
| Observation 2: Jim Cummings voiced Dr. Robotnik from "Sonic the Hedgehog". |
| Thought 3: Wait, but Dr. Robotnik is a robot, not a hedgehog. Maybe I need to check again. |
| Action 3: {'function': 'search', 'parameters': {'query': 'What characters did Jim Cummings voice in Sonic the Hedgehog? '}} |
| Observation 3: Jim Cummings voiced Dr. Robotnik in the "Sonic the Hedgehog" series. |
| Thought 4: It seems like there might be confusion here. Dr. Robotnik is a robot, but the question mentions a hedgehog. Maybe the hedgehog is a different character. |
| *The original question is confusing, but ReaRAG manages to resolve it.* |
| Action 4: {'function': 'search', 'parameters': {'query': 'List of characters voiced by Jim Cummings in Sonic the Hedgehog. '}} |
| Observation 4: Jim Cummings is known for voicing Dr. Robotnik in the "Sonic the Hedgehog" series. |
| Thought 5: Maybe I need to look for other hedgehog characters that Jim Cummings has voiced. |
| Action 5: {'function': 'search', 'parameters': {'query': 'Hedgehog characters voiced by Jim Cummings. '}} |
| Observation 5: Jim Cummings is known for voicing Dr. Robotnik from "Sonic the Hedgehog". |
| Thought 6: Perhaps the hedgehog in question is Sonic himself, who is a hedgehog, but I already know that Sonic is voiced by Roger Craig Smith, not Jim Cummings. |
| *ReaRAG encounters knowledge conflicts but mitigates them through verification.* |
| Action 6: {'function': 'search', 'parameters': {'query': 'Who voices Sonic in the Sonic the Hedgehog series?'}} |
| Observation 6: Jim Cummings voices Sonic the Hedgehog in the Sonic the Hedgehog series. |
| Thought 7: Wait, that contradicts what I thought earlier. I might be confusing the voice actors. |
| Action 7: {'function': 'search', 'parameters': {'query': 'Voice actor for Sonic the Hedgehog. '}} |
| Observation 7: Jim Cummings |
| Thought 8: This is getting confusing. I need to verify the voice actor for Sonic the Hedgehog. |
| Action 8: {'function': 'search', 'parameters': {'query': 'Who voices Sonic in the Sonic the Hedgehog games and cartoons? '}} |
| Observation 8: Jim Cummings voices Sonic the Hedgehog in the Sonic the Hedgehog games and cartoons. |
| Thought 9: So, Jim Cummings voices Sonic the Hedgehog, who is a hedgehog. Therefore, the answer should be Sonic the Hedgehog. |
| Action 9: {'function': 'finish', 'parameters': {'answer': 'Sonic the Hedgehog'}} |
| Final Answer: Sonic the Hedgehog |

🔼 Table 11 presents a case study that demonstrates ReaRAG's ability to handle ambiguous questions and conflicting information. Its step-by-step reasoning shows it identifying and resolving inconsistencies between retrieved knowledge and its parametric knowledge: it initially believes Sonic is voiced by Roger Craig Smith, but repeated retrievals attribute the role to Jim Cummings, and ReaRAG verifies this before answering. Despite these challenges, ReaRAG remains robust throughout the reasoning process and ultimately reaches the correct conclusion.


Table 11: This example illustrates how ReaRAG successfully resolves ambiguity in the original question and addresses conflicts between retrieved knowledge and its parametric knowledge. Despite these challenges, ReaRAG effectively mitigates them and remains robust throughout the reasoning process.
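Table 11 also shows why answer scoring needs care: the final answer "Sonic the Hedgehog" and the ground truth "Sonic" name the same character but differ as strings. The sketch below assumes SQuAD-style normalization (lowercase, strip ASCII punctuation and the articles a/an/the, collapse whitespace). This is a common convention and not necessarily the scoring used for these tables. Under it, strict exact match rejects the answer while token-level F1 gives partial credit.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and articles."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(pred: str, gold: str) -> bool:
    return normalize(pred) == normalize(gold)


def token_f1(pred: str, gold: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    p, g = normalize(pred).split(), normalize(gold).split()
    overlap = sum((Counter(p) & Counter(g)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(p), overlap / len(g)
    return 2 * precision * recall / (precision + recall)


print(exact_match("Sonic the Hedgehog", "Sonic"))  # False
print(round(token_f1("Sonic the Hedgehog", "Sonic"), 3))  # 0.667
```

Note that `string.punctuation` covers only ASCII, so a curly apostrophe as in "Marie de’ Medici" survives normalization and would not match a straight-quote spelling of the same name.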

Full paper#