TL;DR#

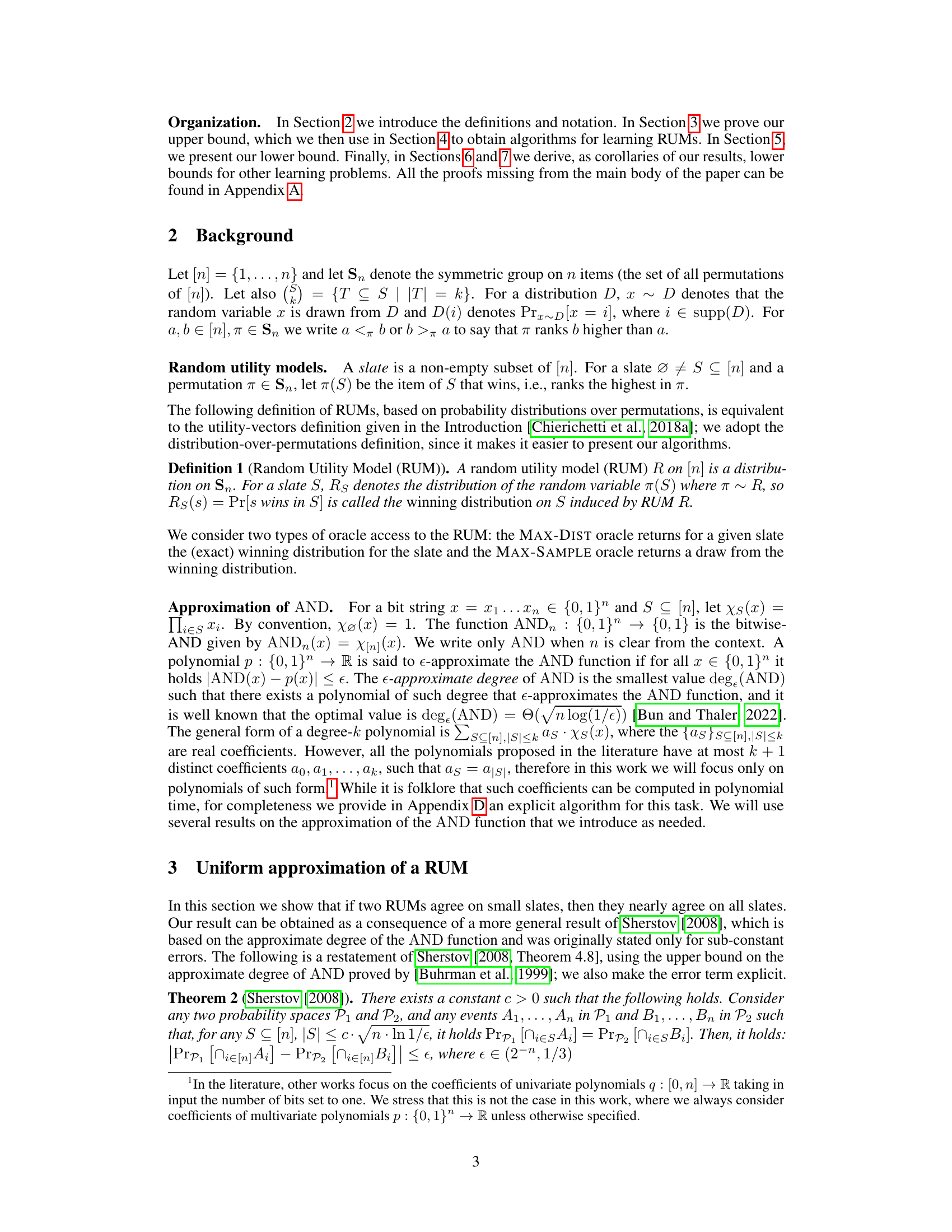

Random Utility Models (RUMs) are commonly used to model user choices, but learning accurate RUMs often requires extensive datasets. This presents a significant challenge for applications where only limited user feedback is available (e.g., web search results). Existing learning algorithms, while providing some solutions, are often inefficient and impractical for real-world settings. This paper addresses the problem of learning RUMs from small slates (subsets of available choices).

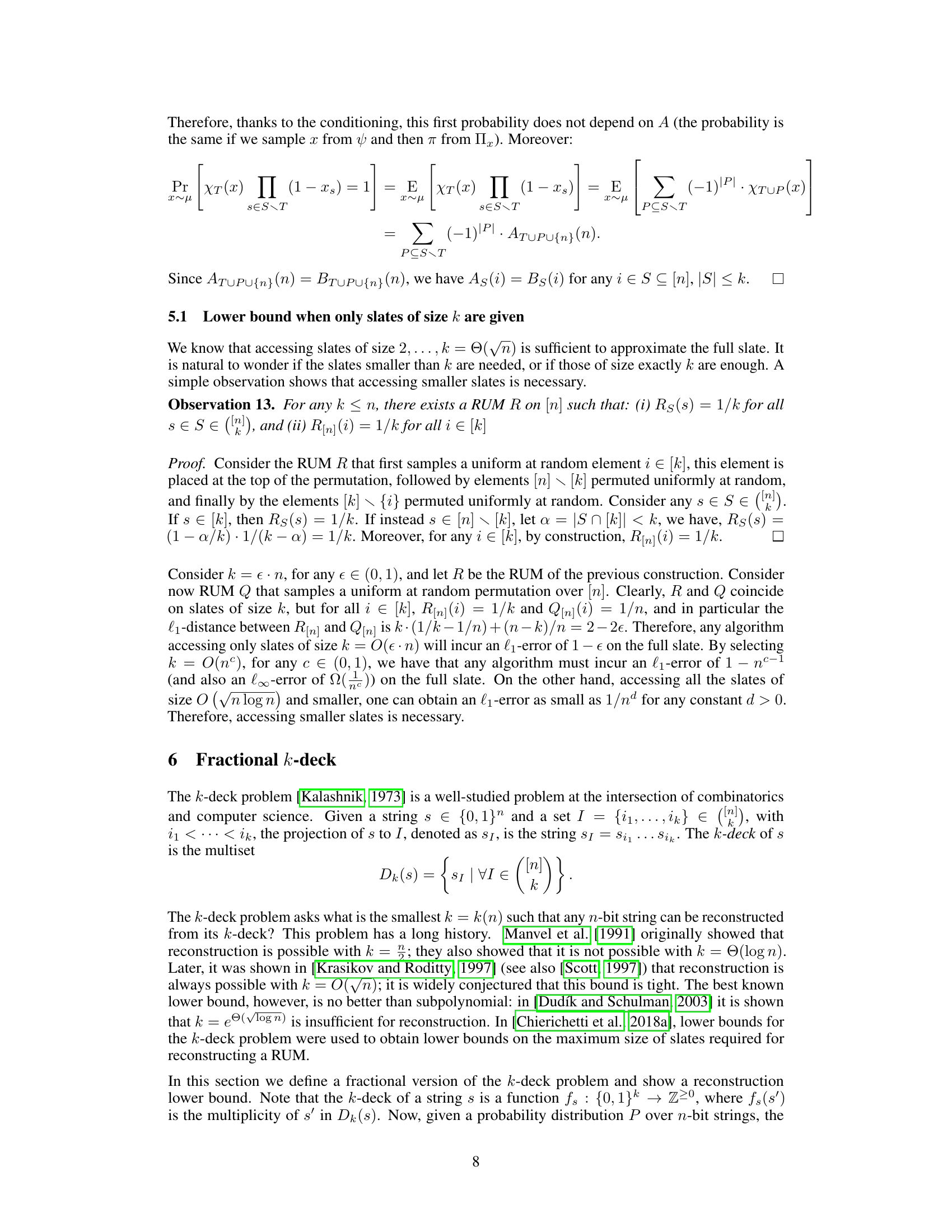

The researchers demonstrate that for accurate predictions, a surprisingly small slate size is sufficient for accurate prediction. They then introduce new algorithms to efficiently learn RUMs from these limited data points and establish matching lower bounds. These lower bounds provide critical insights into the limitations of learning RUMs from small datasets. The work also has implications for related theoretical problems, like fractional k-deck and trace reconstruction. This addresses a fundamental challenge in the field, leading to better predictive models and insights.

Key Takeaways#

Why does it matter?#

This paper is crucial because it bridges the gap between theoretical understanding and practical application in RUM learning. By establishing tight bounds for learning RUMs from small slates, it significantly advances algorithm design and provides insights into related problems like k-deck and trace reconstruction. The results offer new directions for research and improve the efficiency of existing methods.

Visual Insights#

Full paper#