TL;DR#

Traditional online algorithms often struggle with real-world scenarios due to their reliance on worst-case analysis and lack of prediction incorporation. Existing learning-augmented algorithms also have shortcomings because they treat machine learning predictors as “black boxes” without considering their design.This limits their effectiveness, especially when predictions are imperfect.

This research presents a new approach. It proposes integrating the learning process directly into the algorithm design.This enables the algorithm to adapt dynamically based on the data available at each step. The study focuses on caching, load balancing, and scheduling, creating new algorithms specifically tailored to these problems. The results show that this approach significantly outperforms previous methods by producing simpler, more efficient algorithms with better performance bounds.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online algorithms and machine learning. It bridges the gap between theoretical online algorithms and practical machine learning, offering a novel framework for designing algorithms that effectively leverage predictions. This opens exciting new avenues for research, particularly in areas like caching, load balancing, and scheduling, where prediction accuracy is often variable and unpredictable.

Visual Insights#

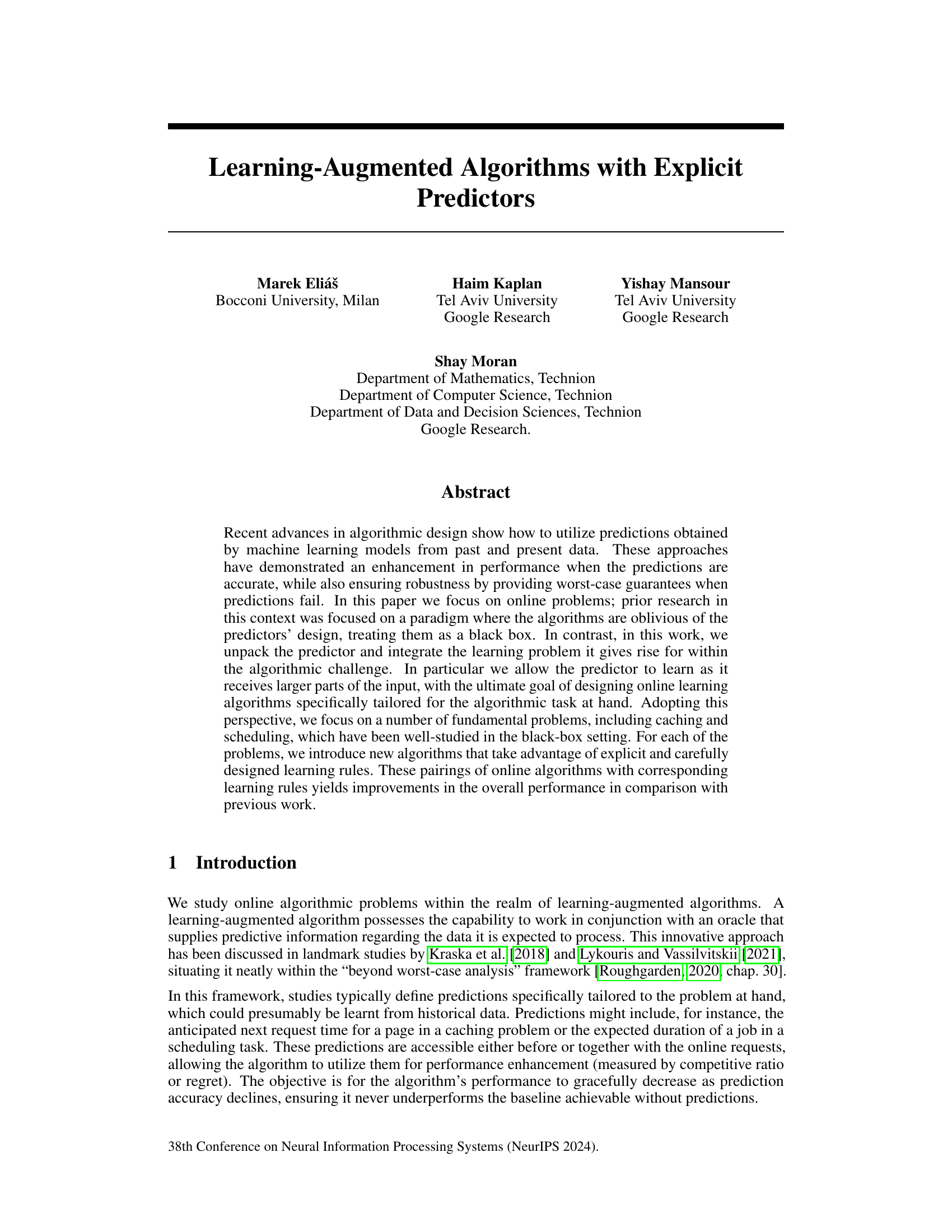

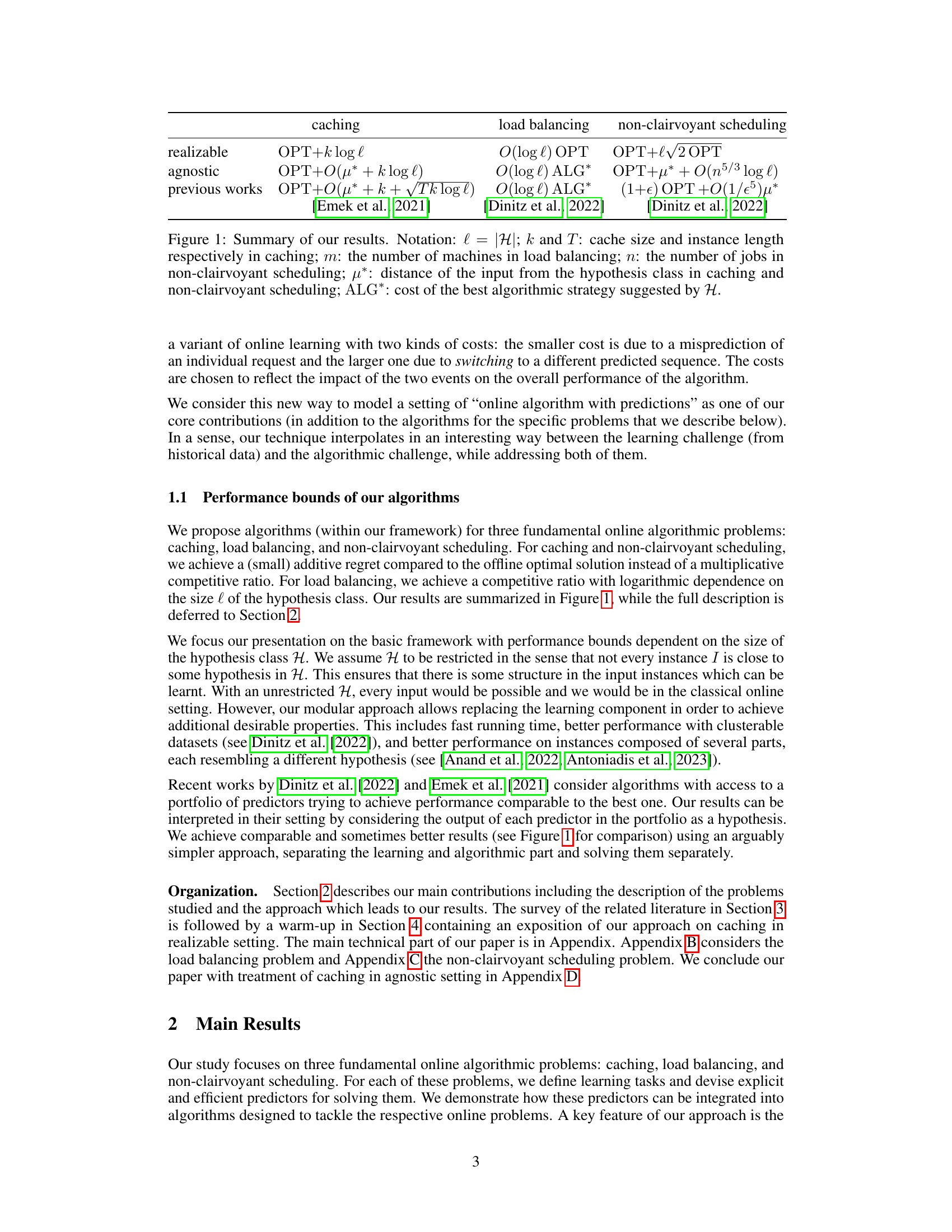

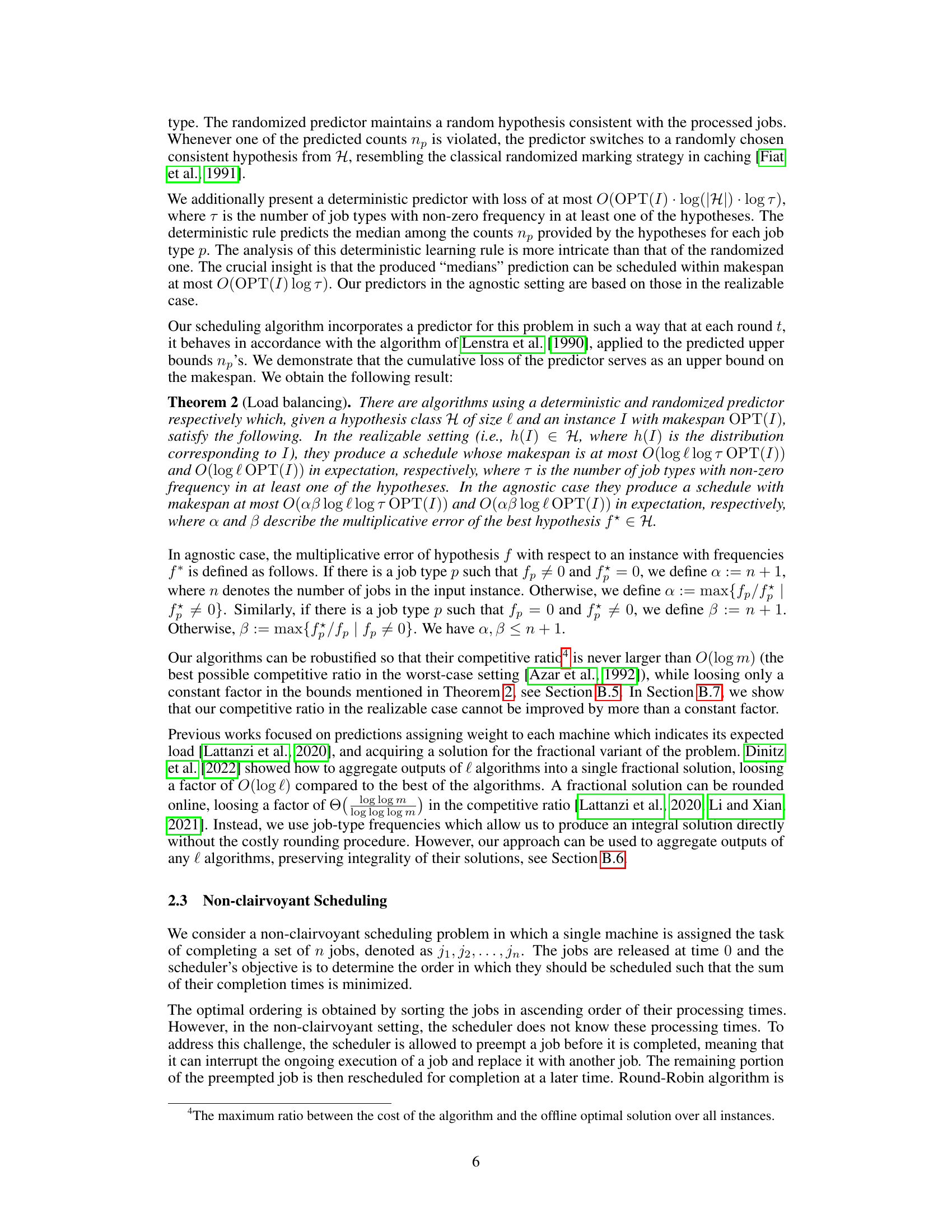

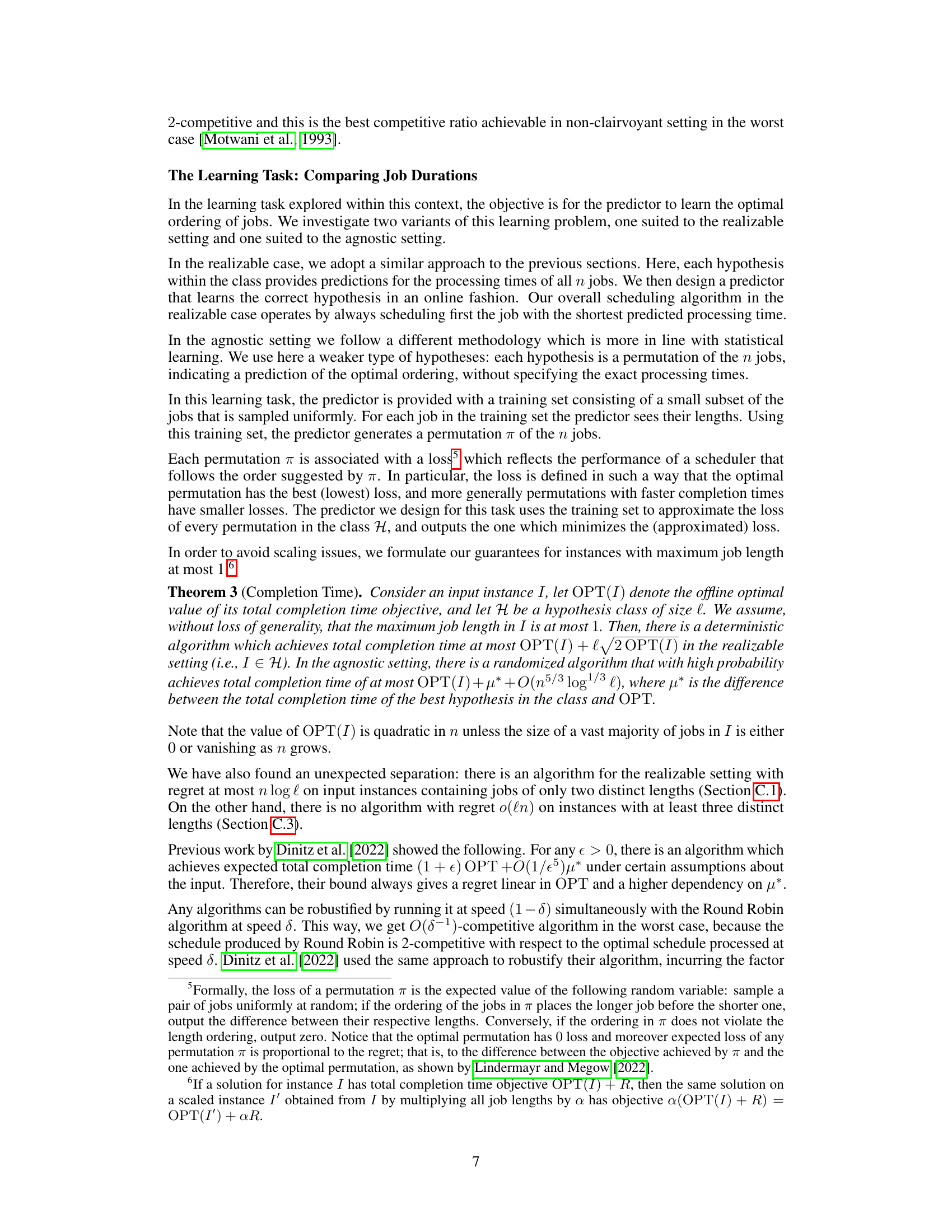

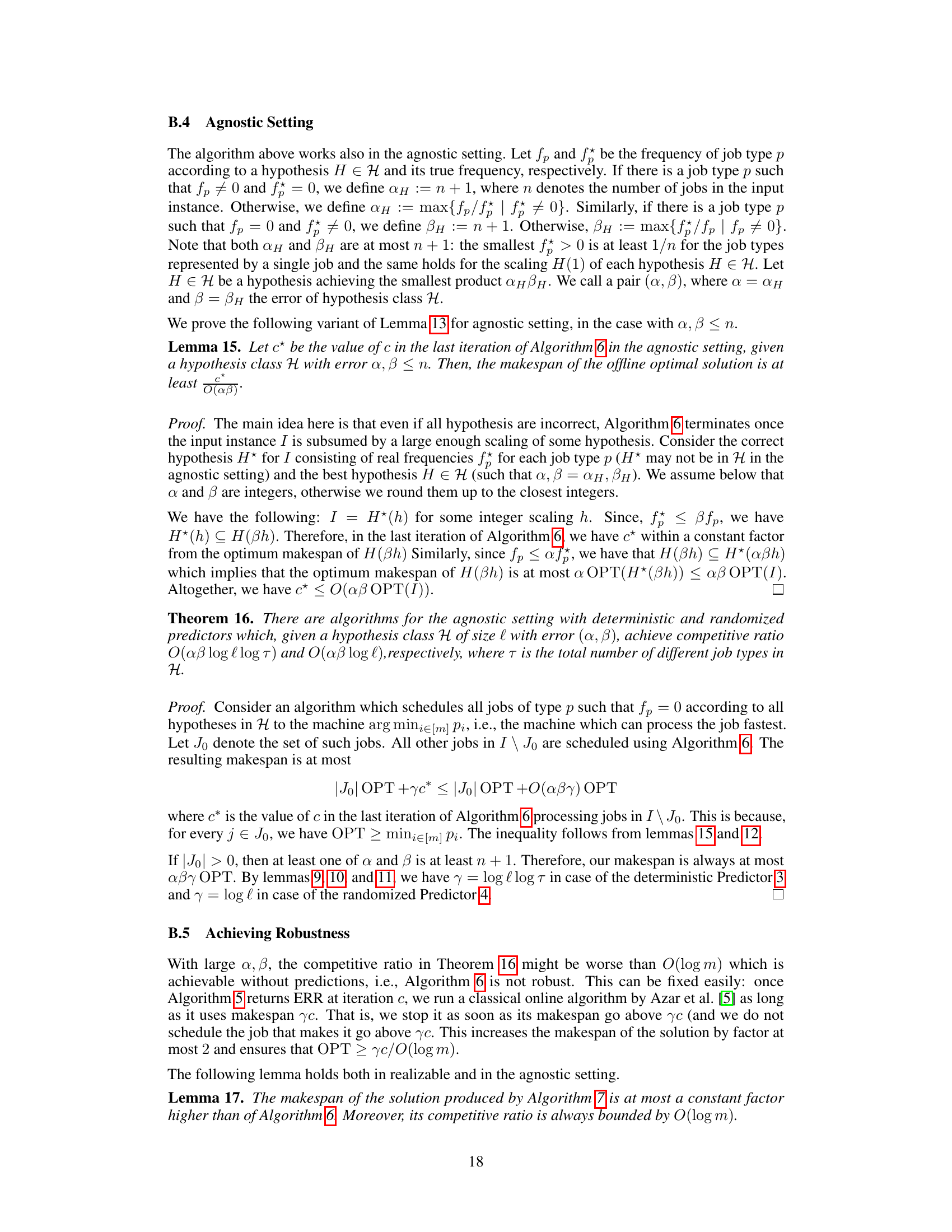

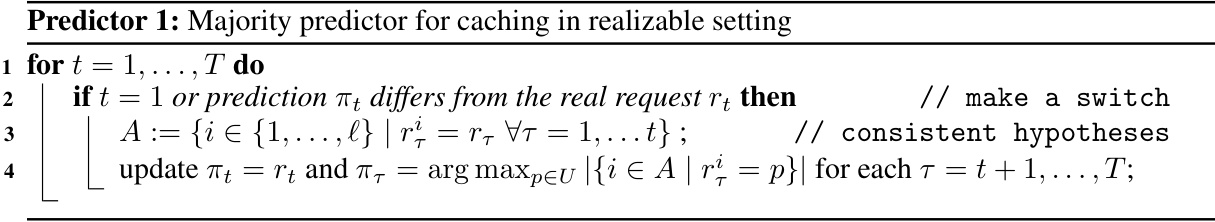

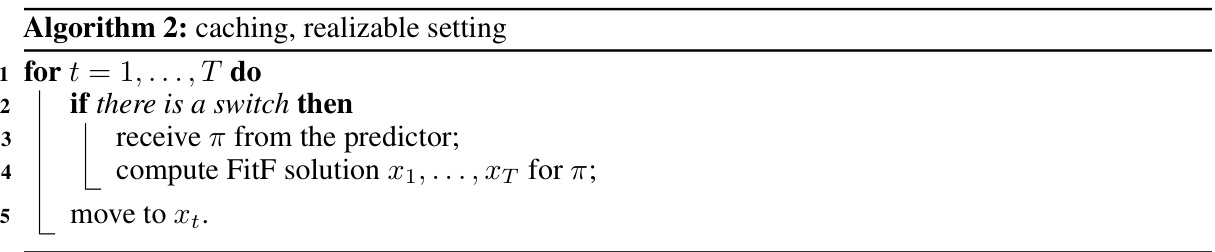

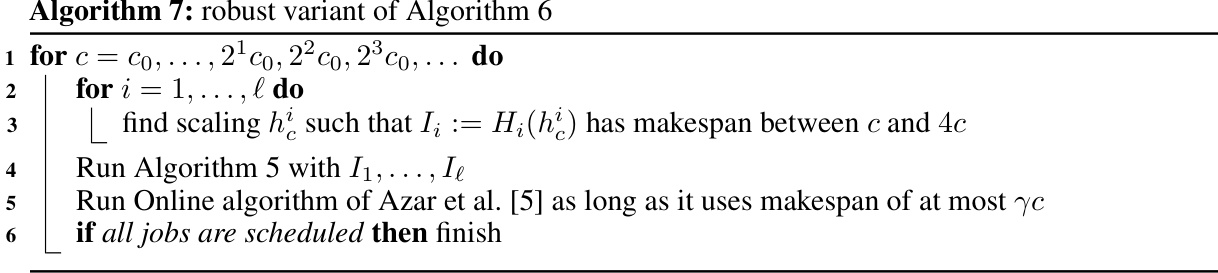

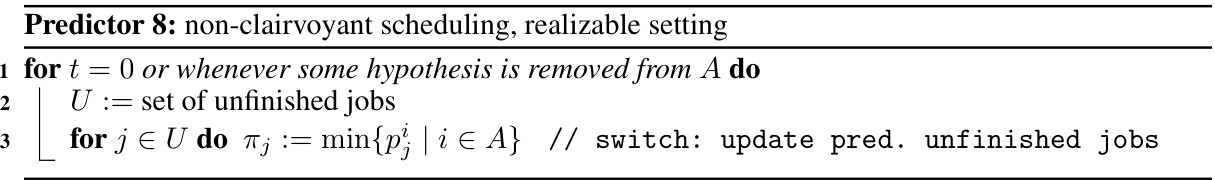

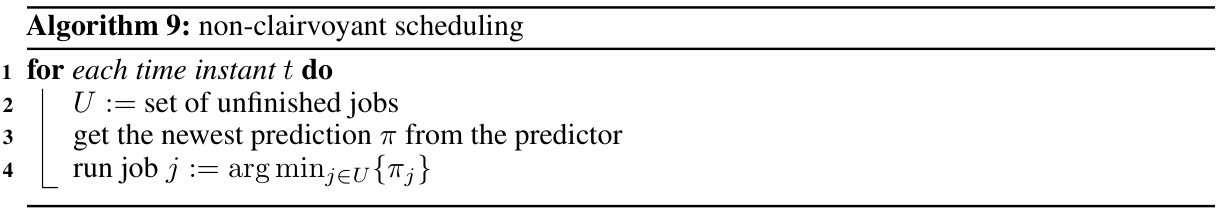

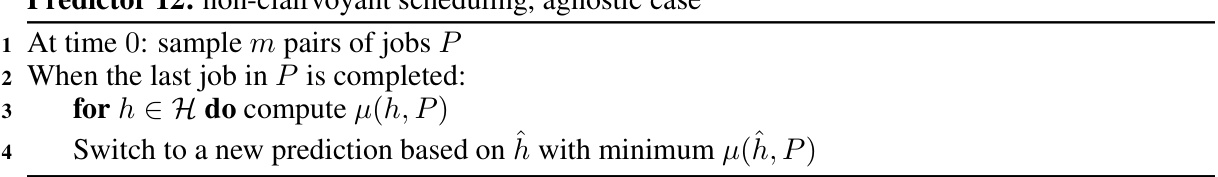

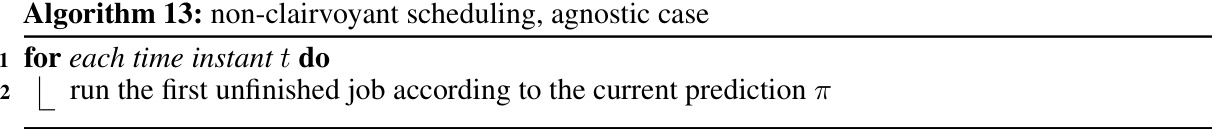

🔼 This figure summarizes the results of the proposed algorithms for three online problems: caching, load balancing, and non-clairvoyant scheduling. It shows the performance bounds achieved by the new algorithms (in terms of competitive ratio or additive regret) in both realizable and agnostic settings, comparing them to previous works. The notation clarifies the meaning of the symbols used in the table to represent different aspects of each problem (e.g., cache size, number of machines, etc.).

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the results of the proposed algorithms for three fundamental online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It compares the performance bounds of the new algorithms (in realizable and agnostic settings) to the best previously known bounds for each problem. The notations used are defined in the caption and clarify the meaning of the results.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

In-depth insights#

Explicit Predictors#

The concept of “Explicit Predictors” in the context of learning-augmented algorithms signifies a paradigm shift from treating machine learning models as “black boxes.” Instead of passively receiving predictions, the algorithm actively incorporates the predictor’s design and learning process. This transparency allows for a deeper integration, tailoring the learning rules to the specific algorithmic task. The approach moves beyond the limitations of ad-hoc predictions, offering the algorithm the ability to discern patterns from data prefixes, improving decision-making before incurring significant costs and potentially identifying beneficial actions overlooked by black-box predictors. This integration enhances performance by leveraging both learning and algorithmic strengths, resulting in improved bounds compared to the black-box approach. The explicit nature allows for the development of learning rules specifically designed to improve performance on specific algorithmic problems, addressing the shortcomings of ad-hoc prediction methods. The carefully designed learning rules used in conjunction with well-suited online algorithms achieve improved performance in caching, scheduling, and load balancing. This approach enhances robustness by gracefully handling prediction inaccuracies while maintaining worst-case guarantees.

Online Algorithm Design#

Online algorithm design tackles problems where input arrives sequentially, demanding immediate decisions without future knowledge. This contrasts with offline algorithms which receive the entire input beforehand. Key challenges in online settings include balancing the need to make good decisions now with the uncertainty of future inputs. Competitive analysis frequently evaluates online algorithms, comparing their performance against an optimal offline solution. Regret minimization provides another framework, focusing on the difference between an online algorithm’s cumulative cost and that of an optimal solution. Prediction and learning play a significant role in modern online algorithm design; incorporating predictions from machine learning models can often improve performance. However, a key challenge remains integrating these predictions seamlessly into robust algorithms with provable guarantees, even when predictions are imperfect, focusing on designing algorithms specifically tailored for the prediction model.

Learning Rules Impact#

A hypothetical section titled ‘Learning Rules Impact’ in a research paper would delve into how the design and implementation of learning rules significantly affect the performance of learning-augmented algorithms. It would likely explore the interplay between different learning rule choices and the overall algorithm’s efficiency and accuracy. Key factors considered could include the learning rate (impact on convergence speed and stability), the choice of loss function (influencing what aspects of the prediction are emphasized), and the learning algorithm itself (e.g., gradient descent, stochastic gradient descent, etc.). The analysis might assess the robustness of the algorithm under different prediction accuracy levels and examine the sensitivity of the learning rules to noise or errors in the input data. Furthermore, the section could investigate whether particular learning rules exhibit superior performance for specific problem types or instances. Comparative studies evaluating different learning rules against each other and against baseline algorithms (without learning) would be critical to demonstrate the impact of the learning rules and to offer guidelines for best practice. The overall goal is to highlight the crucial role learning rules play in achieving optimal performance and highlight directions for future research in learning-augmented algorithm design.

Agnostic Setting#

In the agnostic setting of learning-augmented algorithms, the assumption of perfectly matching real-world data to a hypothesis from a predefined set is removed. This contrasts with the realizable setting, where such a perfect match is assumed. The challenge in the agnostic setting lies in handling scenarios where the predictions are not perfectly aligned with the input, requiring algorithms robust to prediction errors. The algorithms must gracefully degrade in performance as prediction accuracy decreases, never underperforming a baseline without predictions. The paper likely presents novel algorithms specifically designed to address this uncertainty, potentially incorporating techniques such as regularization or error-handling mechanisms to mitigate the effects of imperfect predictions. Evaluating performance in this setting requires nuanced metrics that can capture the tradeoff between the benefits of using predictions when accurate and mitigating the negative impact when predictions fail, possibly involving novel measures of ‘distance’ between the real data and the hypothesis class. Overall, the agnostic setting presents a more realistic and challenging problem that pushes the development of more resilient and adaptive algorithms.

Future Research#

Future research directions stemming from this work on learning-augmented algorithms could explore more sophisticated learning models beyond the simple majority and randomized predictors used here. Investigating the effectiveness of deep learning techniques or other advanced machine learning methods, particularly in the agnostic settings, warrants attention. Another key area is developing robust methods for handling prediction errors, potentially using ensemble techniques or adversarial training. The current work focuses on specific problems (caching, load balancing, scheduling); a broader investigation of its applicability across a wider range of online algorithms is vital. Finally, empirical evaluations are crucial to validate theoretical findings and demonstrate real-world performance improvements against existing state-of-the-art approaches. This would provide concrete evidence of the practical benefits of the proposed framework.

More visual insights#

More on figures

🔼 This figure summarizes the results of the proposed learning-augmented algorithms for three online problems: caching, load balancing, and non-clairvoyant scheduling. It compares the performance bounds (regret or competitive ratio) achieved by the new algorithms to those of previous works. The notation used clarifies the meaning of various parameters like the size of the hypothesis class, cache size, instance length, and the distance between the input instance and the hypothesis class.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This figure summarizes the performance bounds achieved by the proposed algorithms for three online problems: caching, load balancing, and non-clairvoyant scheduling. It compares the results (in terms of additive or multiplicative regret relative to the optimal offline solution) to previous works in the literature, highlighting improvements achieved using the authors’ learning-augmented approach. The notation used in the table is defined for each problem: cache size (k), number of machines (m), number of jobs (n), hypothesis class size (l), instance length (T), and the distance of the input from the hypothesis class (μ*).

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

More on tables

🔼 This table summarizes the performance bounds achieved by the authors’ algorithms for three fundamental online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It compares their results to those of previous works, highlighting improvements achieved through the use of explicit predictors. The notation used in the table is defined to clarify the meaning of the presented results.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the performance bounds achieved by the authors’ algorithms for three fundamental online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It compares their results to those of previous works, highlighting improvements in terms of additive regret or competitive ratio. The notation used in the table is clearly defined for each of the problems. The table shows that the new algorithms offer improvements, particularly when the input instance is close to the hypothesis class.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the results of the proposed algorithms for three online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. For each problem, it shows the performance bounds achieved by the proposed algorithms in both realizable and agnostic settings, comparing them to the results from previous work. The notation clarifies the meaning of various parameters used in the bounds, such as cache size, instance length, number of machines, and the distance of the input from the hypothesis class.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the results of the proposed algorithms for three online problems: caching, load balancing, and non-clairvoyant scheduling. It compares the performance bounds (additive regret or competitive ratio) achieved by the new algorithms with those of previous works. The notation clarifies the meaning of various parameters like cache size, number of machines, number of jobs, and the distance of input from the hypothesis class.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the performance bounds achieved by the proposed algorithms for three online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It compares the results obtained in the realizable and agnostic settings, highlighting the improvements achieved by the new algorithms compared to previous works. The notation used to represent various parameters and costs involved in the analysis is also explained.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the results obtained by the proposed algorithms for three online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It compares the performance of the proposed algorithms against previous works, showing improvements in terms of additive regret or competitive ratio. The notation used in the table is defined to describe the hypothesis class size, cache size, instance length, number of machines, and jobs, and the distance between the input and the hypothesis class. Finally, the cost of the best algorithmic strategy suggested by the hypothesis class is also considered.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the performance bounds achieved by the authors’ proposed algorithms for three fundamental online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It compares these bounds to those achieved by previous works. The notation clarifies the meaning of the symbols used, such as l representing the size of the hypothesis class, k and T representing cache size and instance length (for caching), m for the number of machines (in load balancing), and n for the number of jobs (in non-clairvoyant scheduling). The μ* represents the distance of the input from the hypothesis class (for caching and non-clairvoyant scheduling), and ALG* represents the cost of the best algorithmic strategy suggested by the hypothesis class H.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the results of the proposed algorithms for three online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It compares the performance of the proposed algorithms with previous works, showing improvements in terms of additive regret or competitive ratio. The notations used are defined to help understanding.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the results of the proposed learning-augmented algorithms for three online problems: caching, load balancing, and non-clairvoyant scheduling. It compares the performance bounds achieved by the new algorithms to those of previous works, highlighting improvements obtained by explicitly incorporating the learning process into the algorithm design. The notation used in the table is defined to clarify the meaning of each performance bound.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

🔼 This table summarizes the results of the proposed algorithms for three fundamental online algorithmic problems: caching, load balancing, and non-clairvoyant scheduling. It shows the performance bounds achieved by the new algorithms in both realizable (where the input instance perfectly aligns with one of the hypotheses) and agnostic (where the input may not perfectly align with any hypothesis) settings. The bounds are compared to previous work, highlighting improvements in terms of additive regret and competitive ratio. Notation clarifies the meaning of variables used to represent the size of the hypothesis class, cache size, instance length, number of machines, and distance from the hypothesis class.

read the caption

Figure 1: Summary of our results. Notation: l = |H|; k and T: cache size and instance length respectively in caching; m: the number of machines in load balancing; n: the number of jobs in non-clairvoyant scheduling; μ*: distance of the input from the hypothesis class in caching and non-clairvoyant scheduling; ALG*: cost of the best algorithmic strategy suggested by H.

Full paper#