↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Multi-agent reinforcement learning struggles to create cooperative agents that generalize well to unseen teammates, a problem known as ‘ad-hoc teamwork’. Current state-of-the-art methods for generating diverse training partners often inadvertently create partners that are overfit to their training environment, thus hindering the development of robust generalist agents. This severely limits the potential of such systems for real-world applications.

This paper tackles this challenge head-on. The researchers propose a principled method for measuring partner diversity and specialization, and then introduce two novel approaches, SpecTRL and SpecTRL DAgger, to extract beneficial behaviors from the generated partners while effectively removing overfitting. Experimental results demonstrate that their proposed methods successfully generate more robust generalist agents that outperform those trained with standard techniques. The improved generalizability makes these approaches significantly more promising for the development of reliable, real-world applications for multi-agent systems.

Key Takeaways#

Why does it matter?#

This paper is crucial because it addresses the critical issue of overfitting in multi-agent reinforcement learning, a prevalent challenge hindering the development of robust cooperative agents. By introducing novel methods to improve partner quality, it opens up new avenues for creating more effective and generalizable AI systems for complex collaborative tasks. This is highly relevant to current trends in AI safety and robust AI development, making it important reading for researchers in the field.

Visual Insights#

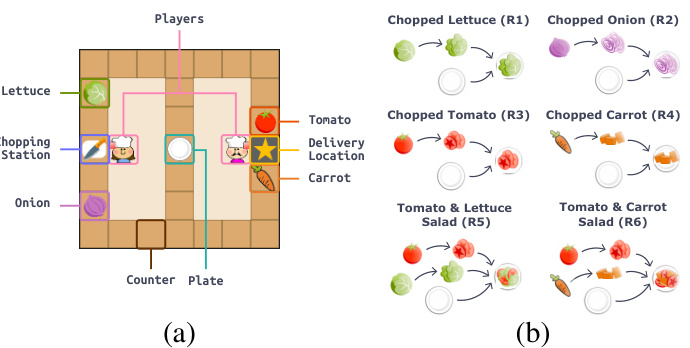

This figure shows the layout of the multi-recipe Overcooked game environment. Panel (a) illustrates the game’s spatial setup, including the positions of the players, the chopping station, the counter, and the plate, as well as the delivery location for completed dishes. The different colored lines connecting the ingredients to the workspaces indicate which ingredients can be used at which workspace. Panel (b) illustrates the six different recipes that can be created in the game, including the single-ingredient recipes (R1-R4) and the double-ingredient recipes (R5-R6). The arrows represent the workflow of creating each recipe.

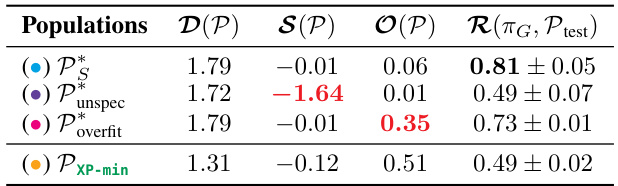

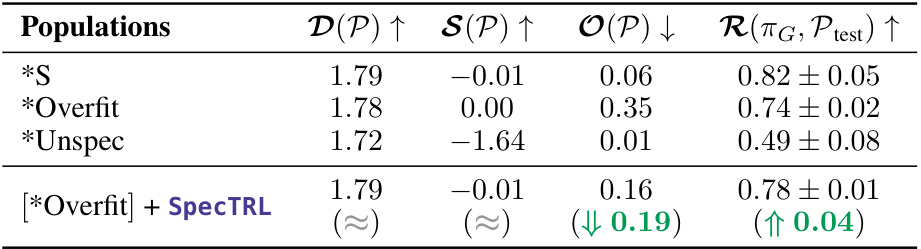

This table shows the diversity, specialization, and overfitness of four different partner populations: Oracle specialists (Ps), Unspecialized partners (Punspec), Overfit partners (Poverfit), and XP-min partners (Pxp-min). The diversity (D) represents how diverse the partners are in their behaviors. The specialization (S) shows how focused each partner is on a particular strategy. The overfitness (O) indicates how much the partners overfit to specific situations or partners during training. Finally, the harmonic mean of success (R(πG, Ptest)) shows the average success rate of generalist agents trained using each partner population, when tested on a set of unseen partners. This table highlights the relationships between these qualities and the robustness of the resulting generalist agents.

In-depth insights#

Robust Coop Agents#

The concept of “Robust Coop Agents” centers on creating multi-agent systems capable of effective collaboration even under unforeseen circumstances. Robustness implies resilience to unexpected situations, such as encountering novel environments or interacting with unfamiliar agents. The research likely explores methods for training these agents, perhaps focusing on techniques that promote generalization beyond the training data. This might involve generating diverse training partners, or using specialized agents to broaden the experience. Overfitting is a key concern, as agents may perform well with known partners but fail with new ones. The research may propose strategies to mitigate overfitting, perhaps through regularization techniques or data augmentation. Ultimately, the goal is to design agents that are not only effective collaborators but also adaptable and dependable in dynamic, real-world scenarios. The research could also evaluate different training methodologies, comparing their relative success in producing robust and cooperative agents.

XP-min Overfitting#

XP-min, while effective at generating diverse cooperative agents, suffers from a critical overfitting issue. Partners trained with XP-min learn to exploit the cross-play (XP) setup, developing “handshaking” behaviors or sabotaging strategies that maximize self-play (SP) returns but hinder generalization to unseen partners. This overfitting undermines the very goal of creating robust generalist agents, rendering the diverse partner population less valuable. The core problem lies in the XP-min objective’s incentive for partners to identify and react to whether they’re in SP or XP interactions, leading to the learning of arbitrary and context-specific strategies, rather than general cooperative behaviors. Addressing this overfitting is crucial for harnessing the potential of XP-min. This necessitates developing techniques that maintain partner diversity and specialization while mitigating this undesirable overfitting to achieve the true objective of robust generalist agent training.

SpecTRL Method#

The proposed SpecTRL method is a novel approach to address the overfitting problem in cooperative multi-agent reinforcement learning. It leverages the diverse and specialized behaviors learned by XP-min agents without inheriting their overfitness. This is achieved through a reinforcement learning-based transfer mechanism that distills the useful knowledge from the XP-min agents, creating a new population of distilled partners. The distillation process is carefully designed to preserve the diversity and specialization while mitigating the handshaking behaviors and other overfit aspects. SpecTRL aims to provide a robust set of training partners that enhance the generalization capabilities of downstream generalist agents. A key strength is its simplicity relative to prior regularization methods, offering a more straightforward and effective means of leveraging XP-min partners. SpecTRL’s performance is further improved by combining it with DAgger, resulting in SpecTRL-DAgger, which enhances the distillation process and stability. This combination effectively reduces the number of incapable distilled partners. Overall, SpecTRL presents a significant step toward creating more robust and versatile cooperative AI agents.

Partner Qualities#

The concept of “Partner Qualities” is crucial for understanding robust cooperative multi-agent systems. The paper highlights that diversity alone is insufficient; specialization and overfitness are equally important. A diverse partner population exposes the generalist agent to various strategies, but specialized partners teach it nuanced solutions. Overfit partners, however, hinder generalization by promoting handshaking and sabotaging behaviors, thus reducing downstream robustness. The authors propose quantifiable metrics for these qualities and demonstrate how managing specialization and overfitness leads to more robust generalist agents. This nuanced understanding of partner qualities suggests that future research should focus on generating diverse yet specialized and appropriately fit partners to optimize cooperative agent learning.

Future Work#

Future research could explore several promising avenues. Investigating alternative methods for reducing overfitness in training partners beyond the proposed SpecTRL and SpecTRL DAgger techniques is crucial. This might involve exploring different regularization strategies or incorporating other learning paradigms. A deeper understanding of the relationship between partner diversity, specialization, and overfitness and its impact on generalist robustness needs further investigation. This requires a more nuanced mathematical framework to quantify these aspects. Additionally, extending the research to other domains beyond the current Overcooked environment will demonstrate generalizability and robustness. Finally, exploring the impact of different training objectives and how they interact with partner qualities and generalist performance, including opponent modeling techniques, would provide further valuable insights.

More visual insights#

More on figures

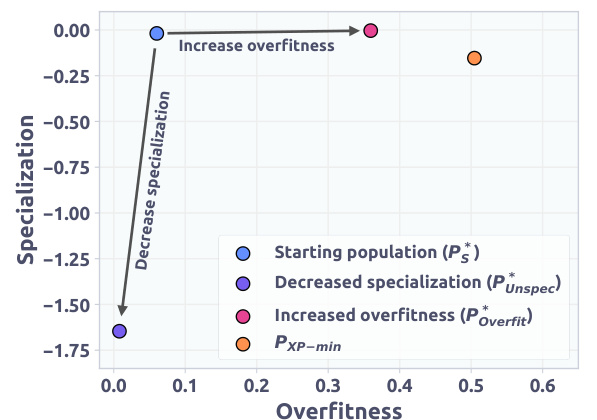

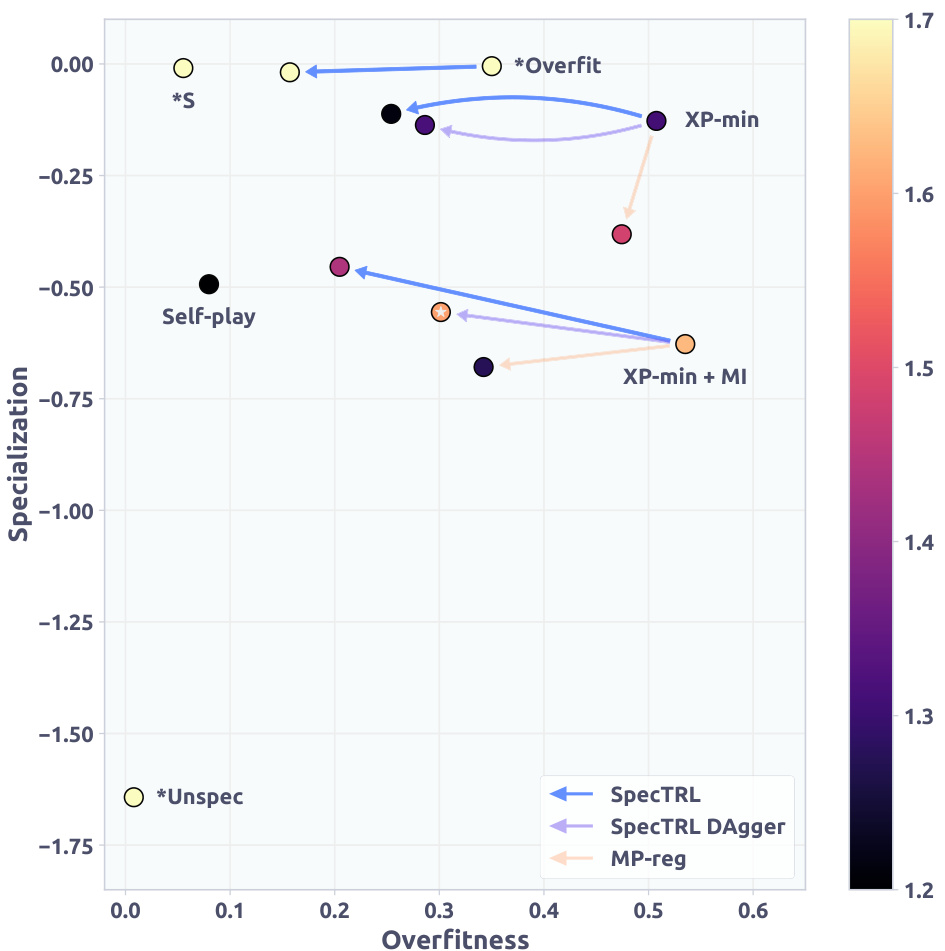

This figure visualizes the relationship between specialization and overfitness of different training populations in a two-dimensional space. The x-axis represents overfitness, and the y-axis represents specialization. Four different populations are plotted: the starting population (PS), a population with decreased specialization (Punspec), a population with increased overfitness (Poverfit), and a population generated using the cross-play minimization (XP-min) technique. Arrows are used to show the direction of change in specialization and overfitness from the starting population to the other populations. This allows a clear visualization of how different training methods impact the key qualities of the training populations.

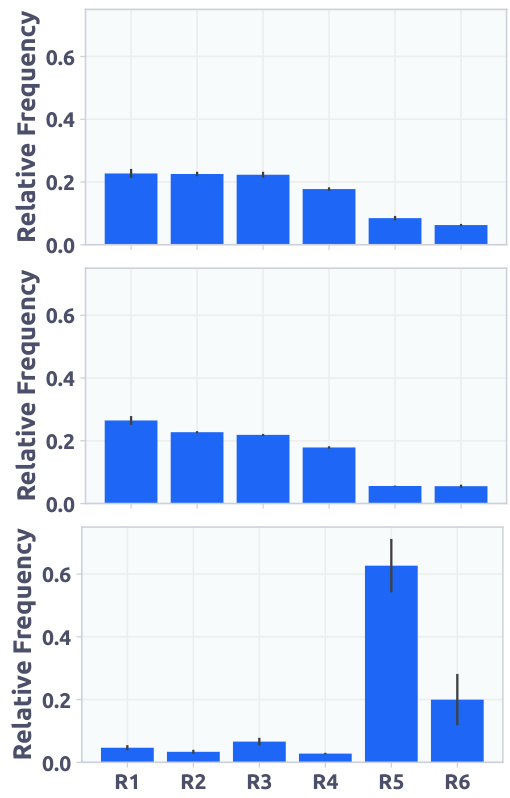

This figure shows the recipe distribution at the end of training for generalist agents trained using three different partner populations: the oracle specialists (Ps), the overfit partners (Poverfit), and the unspecialized partners (Punspec). The top panel displays the distribution for generalists trained with the oracle specialists, showcasing a relatively even distribution across all six recipes. The middle panel shows the distribution for generalists trained with overfit partners, indicating a slightly less diverse distribution compared to the oracle specialists. The bottom panel shows the distribution for generalists trained with unspecialized partners, exhibiting a highly uneven distribution concentrated heavily on recipes R5 and R6. This visualization highlights the impact of partner quality (specialization and overfitness) on the diversity of experiences learned by generalist agents.

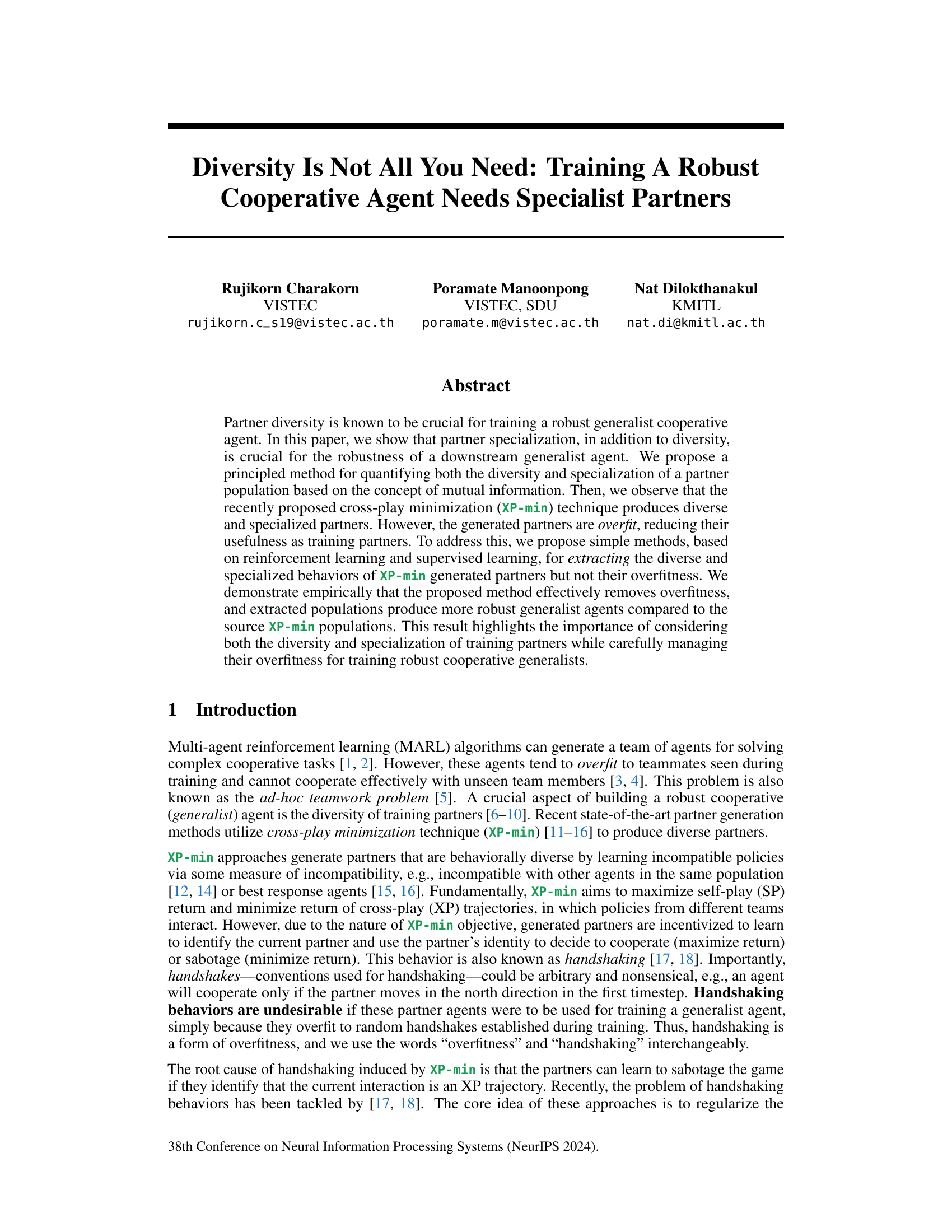

The figure shows the training curves of generalist agents trained with different populations of oracle partners. The x-axis represents the training iteration, and the y-axis represents the average return achieved by the generalist agents. The plot includes separate curves for generalists trained with the original oracle specialist population (P*), a population with increased overfitness (Poverfit), and a population with decreased specialization (Punspec). A dashed line also shows the average return obtained by self-play agents. The figure helps to illustrate the impact of partner specialization and overfitness on the performance of the trained generalist agents.

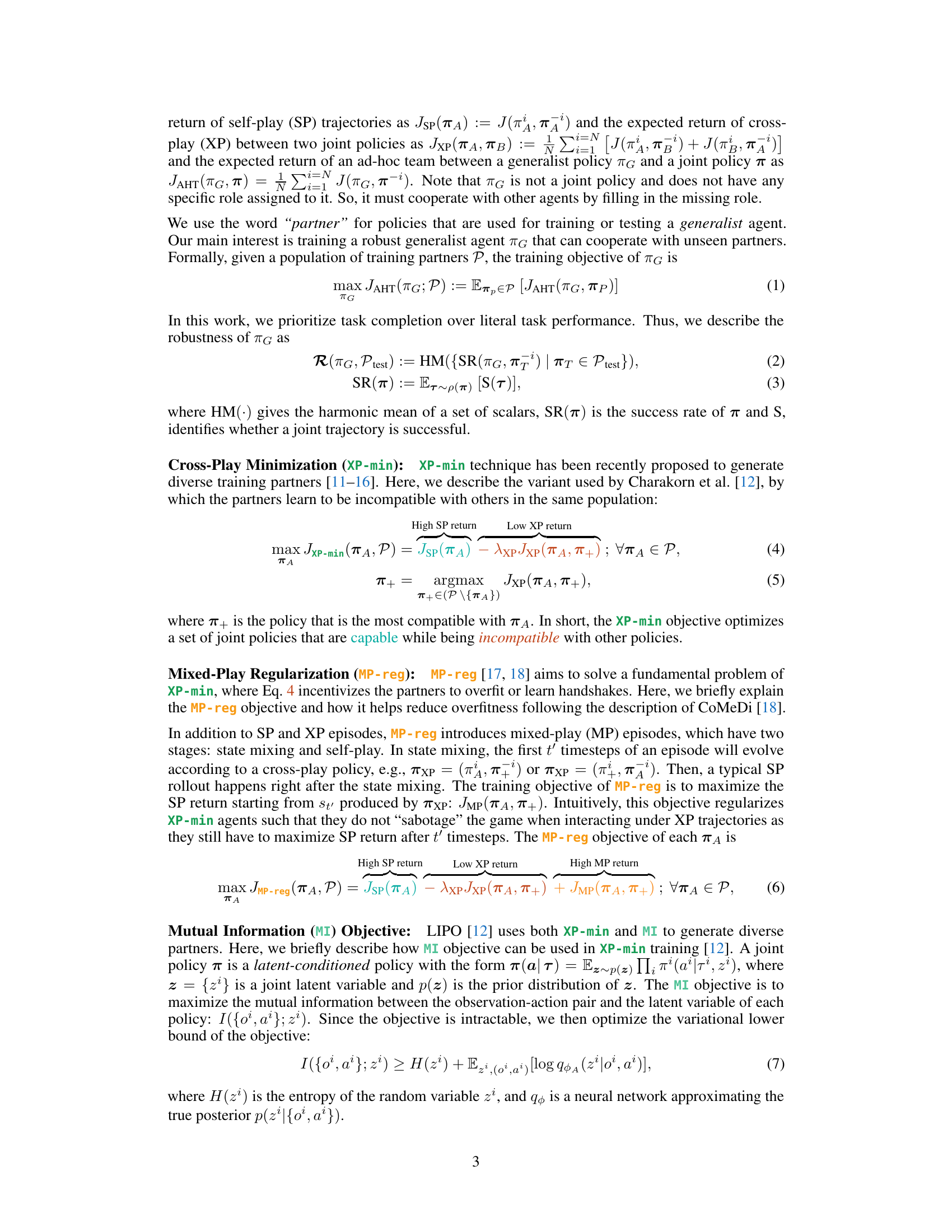

The figure illustrates the training pipeline of a generalist agent. First, XP-min training generates XP-min partners. Then, a specialization transfer process distills the knowledge from these partners into a new set of distilled partners. Finally, these distilled partners are used to train a generalist agent.

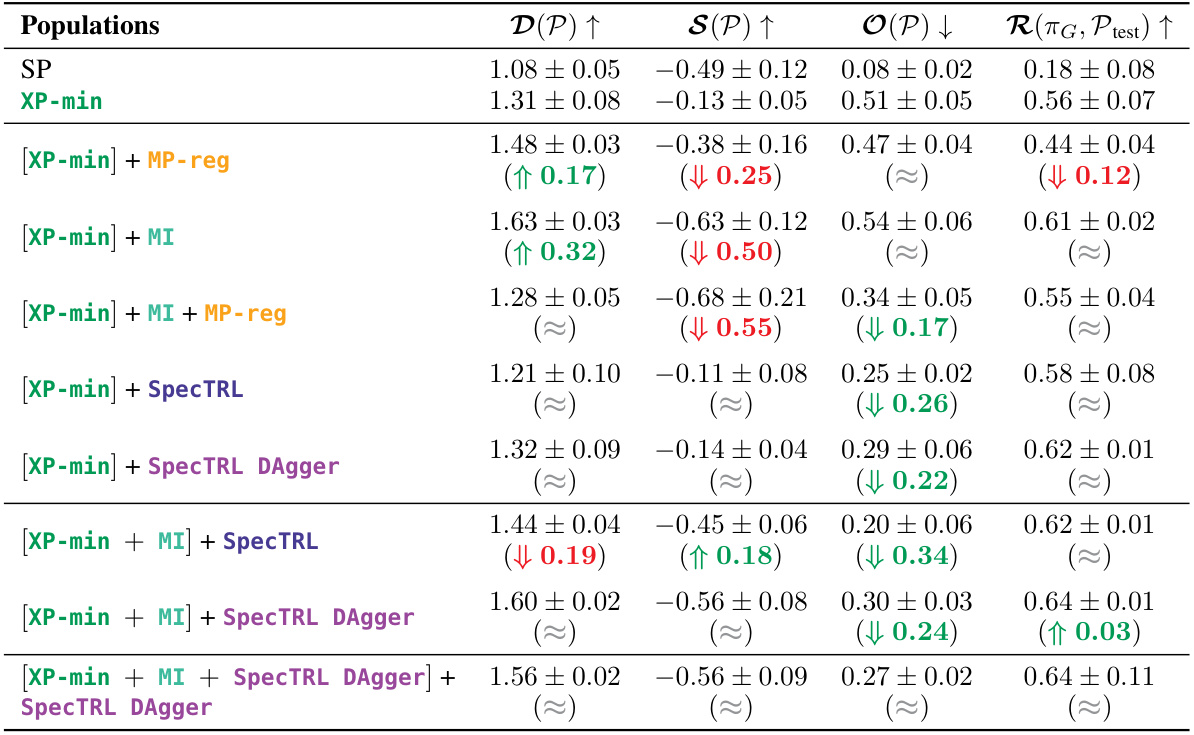

This figure visualizes the diversity, specialization, and overfitness of different partner populations generated using various methods (XP-min, MP-reg, SpecTRL, SpecTRL DAgger, etc.). The x-axis represents overfitness, the y-axis represents specialization, and the color intensity represents diversity. Arrows show how different methods affect these qualities. The results show that SpecTRL DAgger effectively reduces overfitness and maintains a relatively high level of diversity and specialization, resulting in more robust downstream generalist agents.

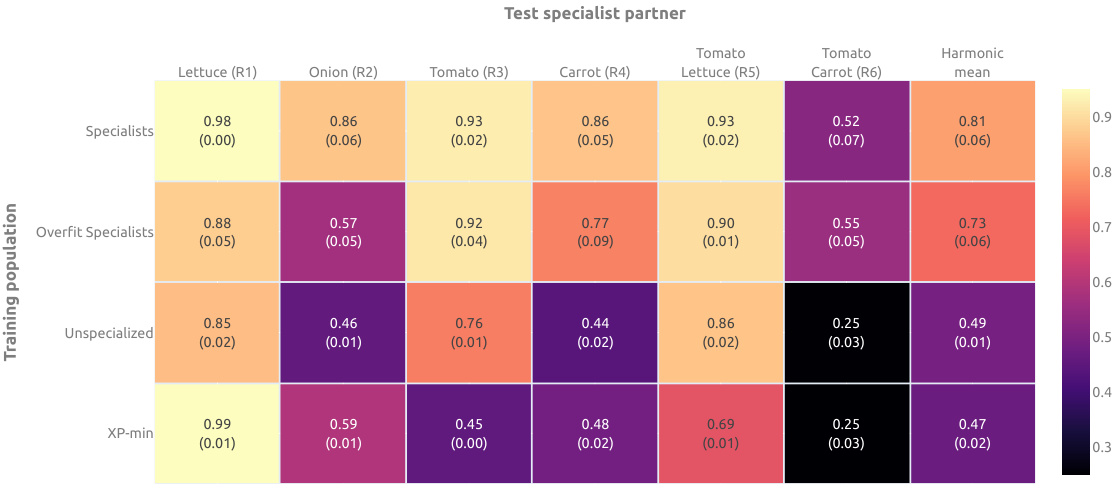

This figure shows a heatmap representing the success rate of generalist agents trained with four different populations (Specialists, Overfit Specialists, Unspecialized, XP-min) when tested against six different specialist partners (Lettuce (R1), Onion (R2), Tomato (R3), Carrot (R4), Tomato Lettuce (R5), Tomato Carrot (R6)). Each cell in the heatmap represents the success rate (with standard deviation in parentheses) of a generalist agent trained on a specific training population when tested on a specific specialist partner. The last column shows the harmonic mean success rates for each training population across all six specialist partners. This illustrates how the robustness of the generalist agents, trained with different populations, varies with different unseen partners.

More on tables

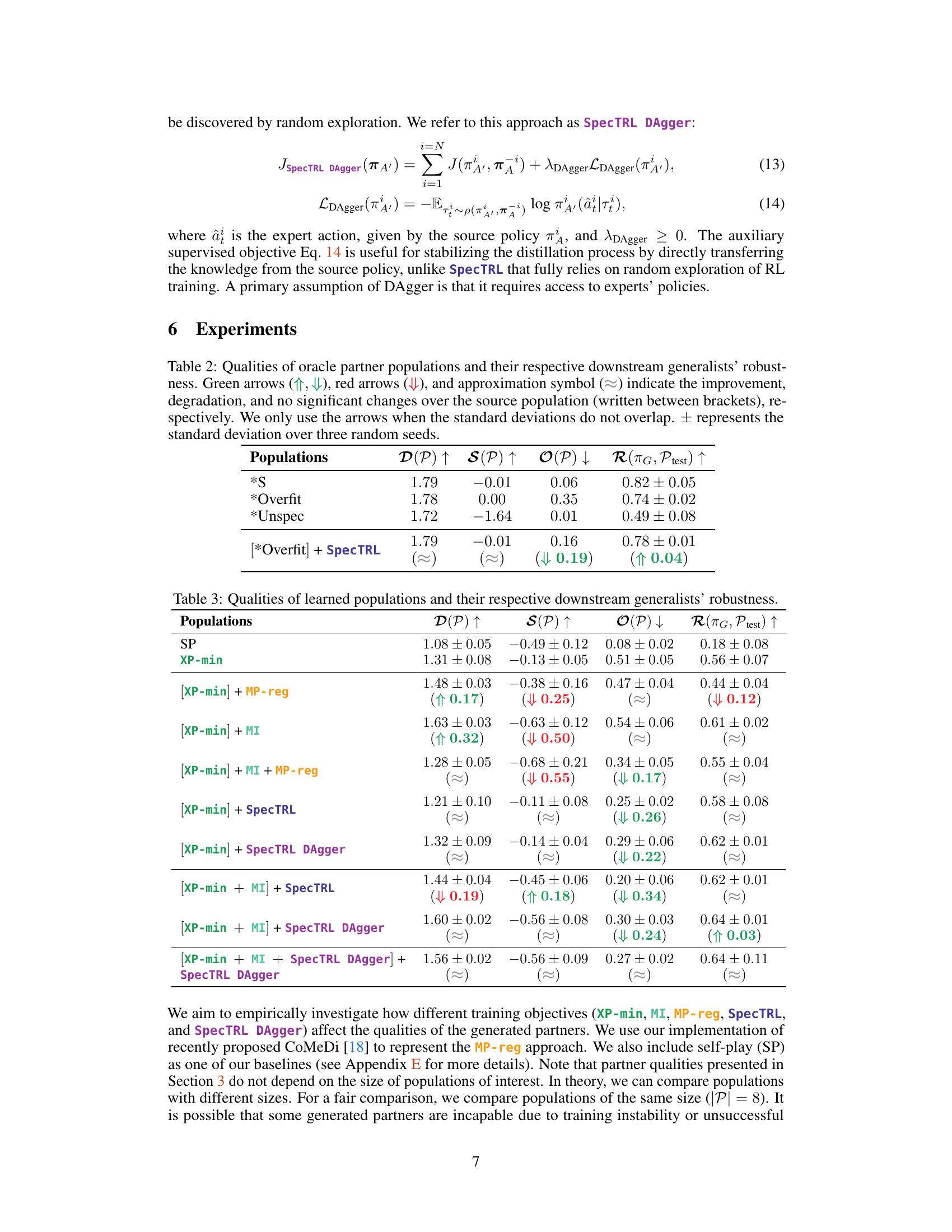

This table presents the diversity, specialization, and overfitness of four different partner populations. The populations include oracle specialists (*S), overfit oracle specialists (*Overfit), unspecialized oracle specialists (*Unspec), and a population generated by applying SpecTRL to the *Overfit population. The harmonic mean of the success rate of downstream generalist agents trained using each population is also included, demonstrating the impact of partner quality on generalist performance. The absence of FCP (Fictitious Co-Play) in this experiment is noted.

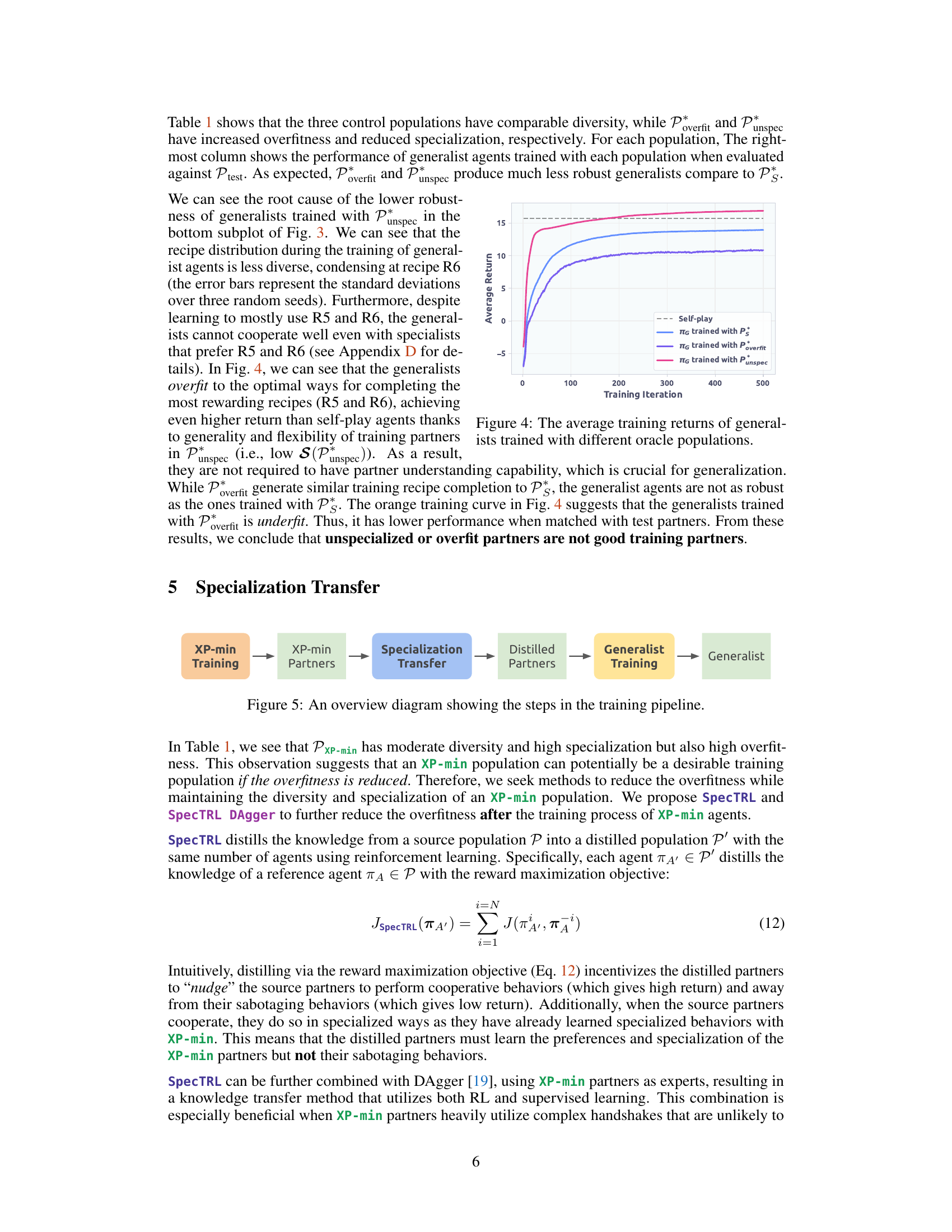

This table presents the diversity, specialization, and overfitness of different partner populations used in the experiments. It compares the performance of downstream generalist agents trained on each population, measured by the harmonic mean of their success rates against a test population (Ptest). The table highlights how these characteristics of the partner populations correlate with the robustness of the resulting generalist agents. Notably, the table shows that the FCP method was not used in this particular set of experiments.

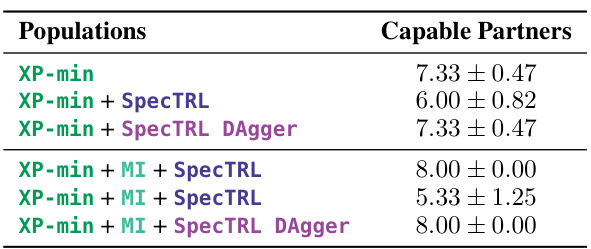

This table shows the number of capable partners (i.e., partners that successfully complete at least 50% of their self-play episodes) resulting from different training methods. It highlights that SpecTRL sometimes produces fewer capable partners than the original XP-min method, but SpecTRL DAgger mitigates this issue. The results suggest that SpecTRL DAgger is more effective at preserving the number of capable partners than SpecTRL alone, especially when combined with the Mutual Information (MI) objective.

Full paper#