↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

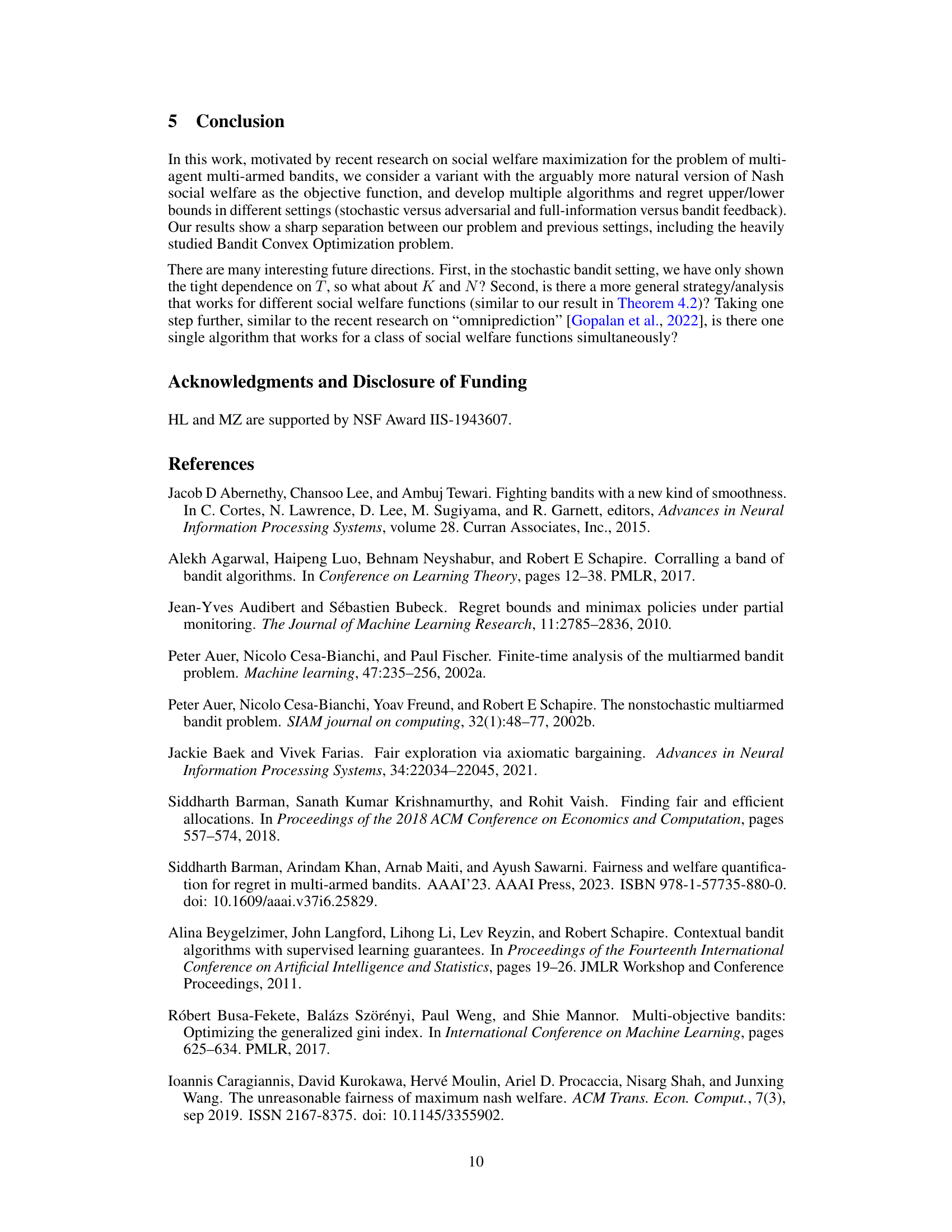

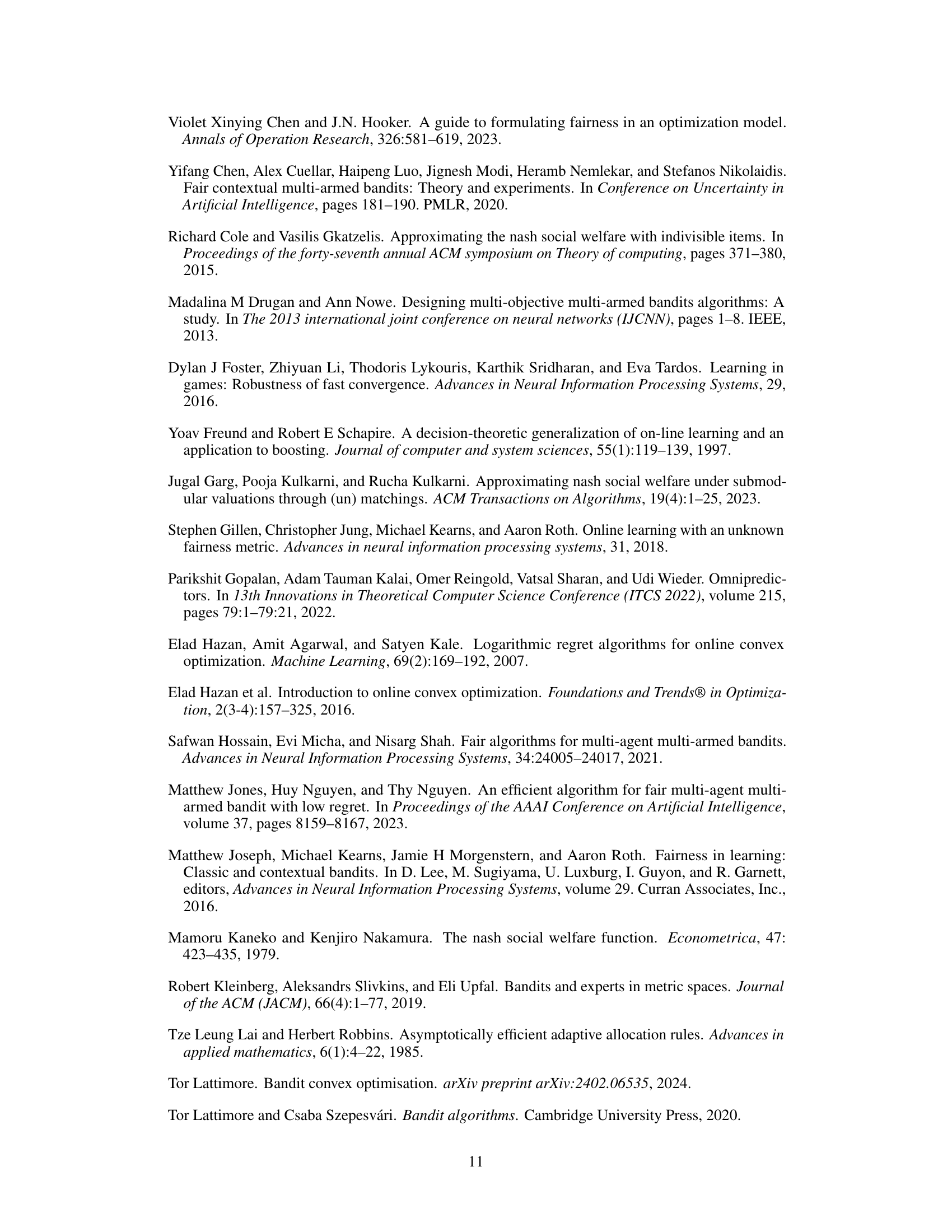

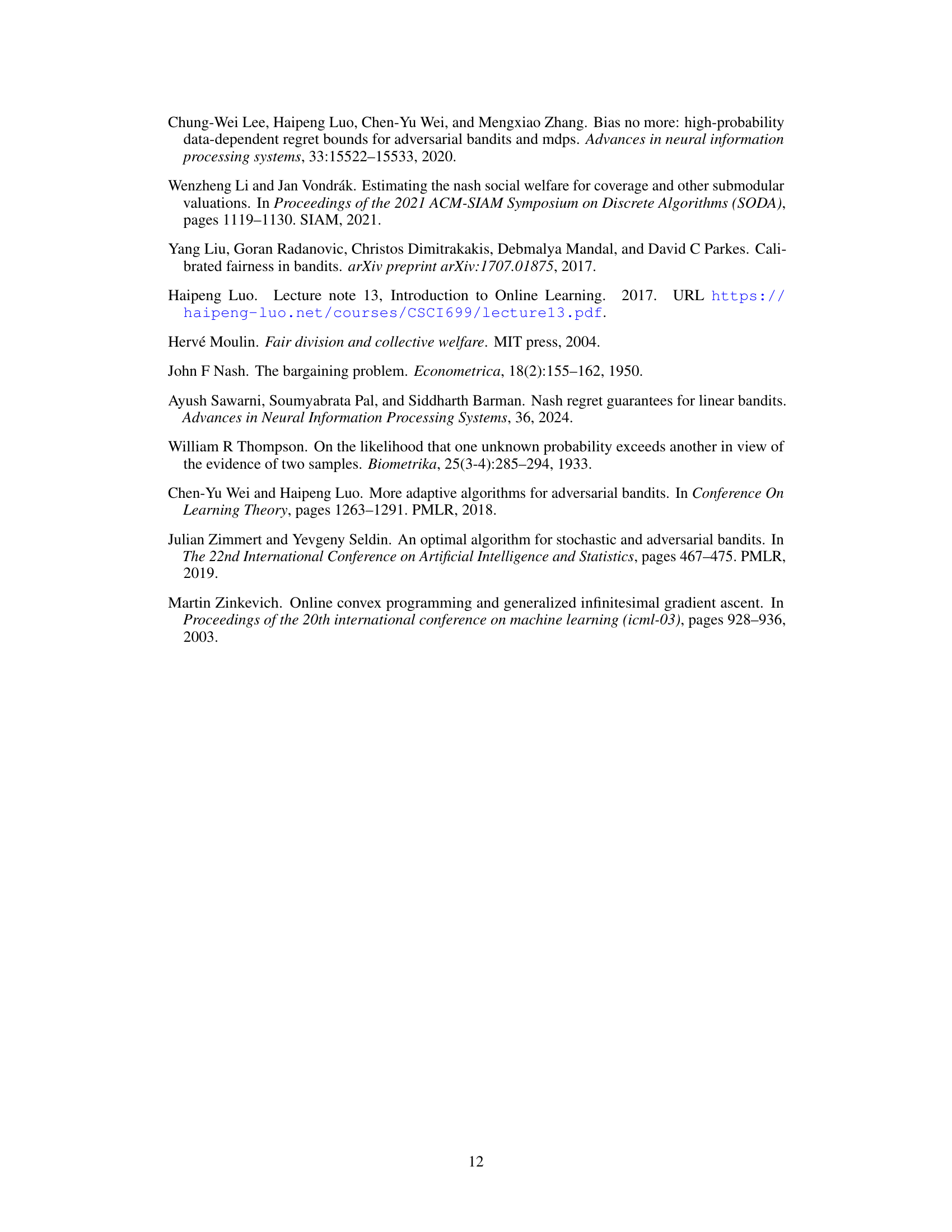

Many real-world problems require fair resource allocation among multiple agents. A common measure of fairness is Nash Social Welfare (NSW), where the goal is to maximize the geometric mean of all agents’ rewards. However, achieving this in online settings, where agents’ rewards are revealed sequentially, poses a significant challenge due to the complexity of NSW. Previous research has mostly studied a simpler product-based fairness metric.

This paper tackles this challenge head-on. The authors study online multi-agent NSW maximization in several settings, including stochastic and adversarial environments with different feedback mechanisms (bandit vs. full information). They develop novel algorithms with sharp regret bounds. Surprisingly, they find that sublinear regret is impossible to achieve in the adversarial setting with only bandit feedback. However, they propose new algorithms that achieve sublinear regret in the full-information adversarial setting. The paper’s theoretical results are significant, offering a better understanding of the challenges and possibilities of fair online learning.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in multi-agent systems and online learning. It addresses a critical gap in fair learning by focusing on the Nash Social Welfare (NSW), a widely used fairness measure. The results challenge existing bounds and provide novel algorithms for various settings, opening new avenues for research in fair optimization. The tight regret bounds offer significant theoretical advancements, while the proposed algorithms offer practical solutions for fair multi-agent decision making.

Visual Insights#

Full paper#