↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

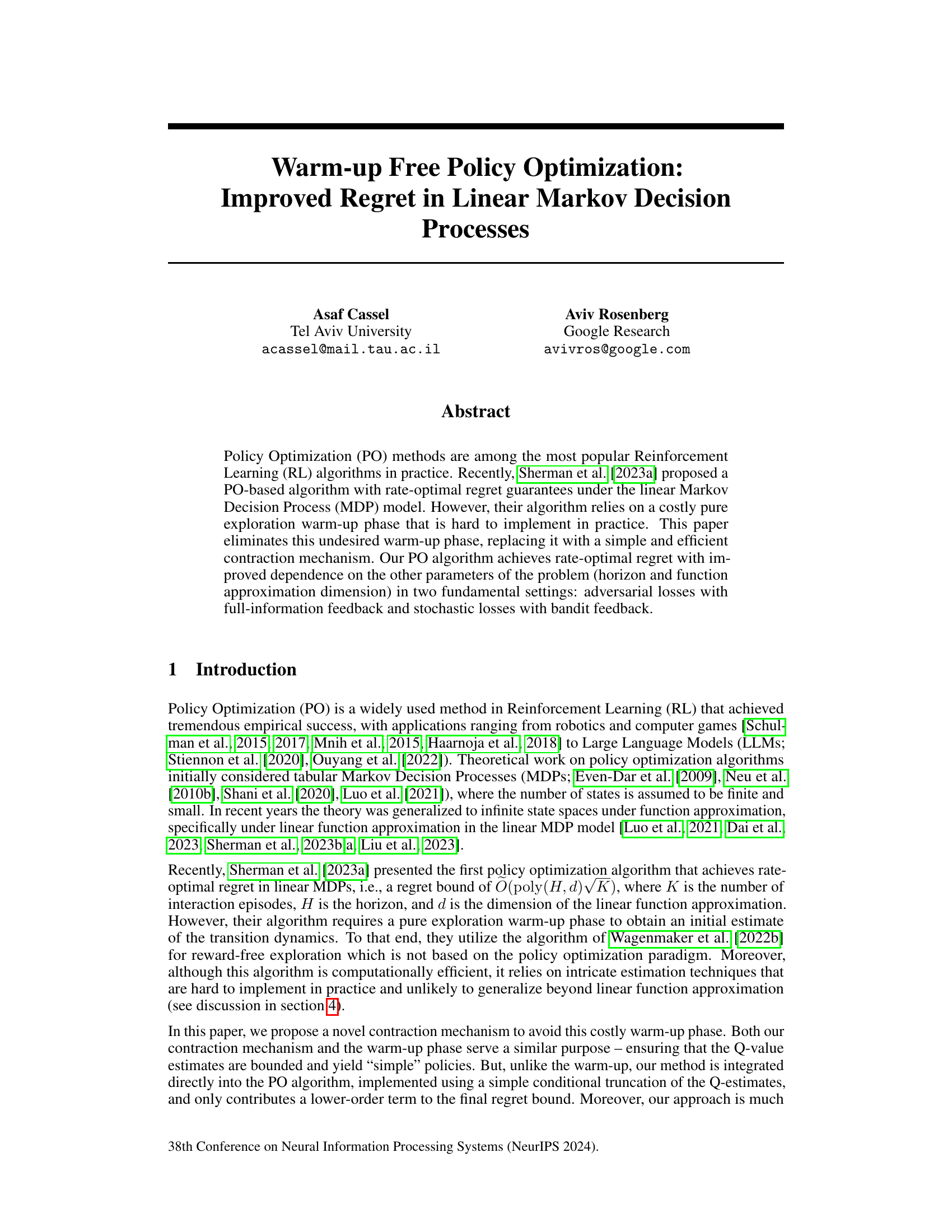

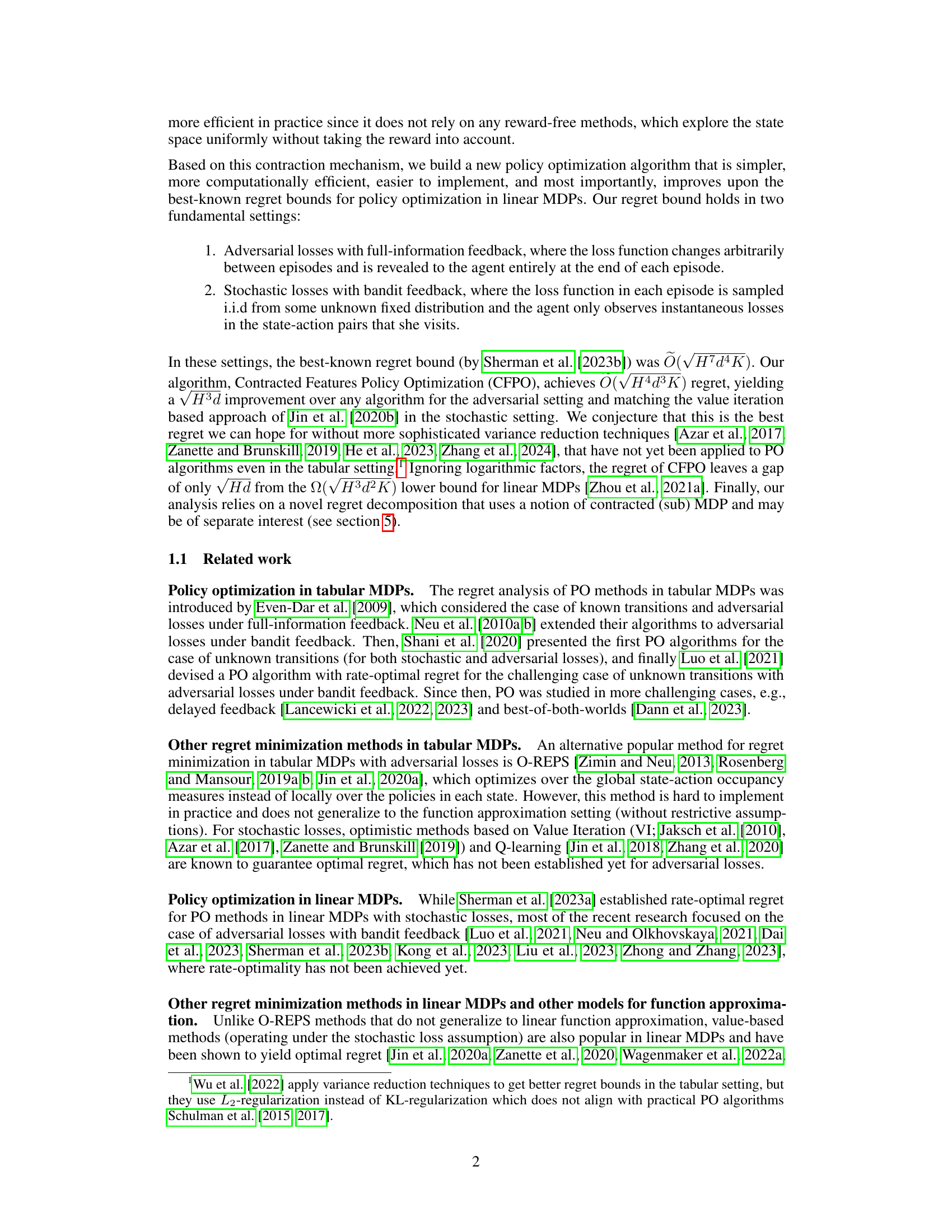

Policy optimization (PO) is a popular Reinforcement Learning (RL) approach, but existing rate-optimal algorithms for linear Markov Decision Processes (MDPs) suffer from a costly ‘warm-up’ phase needed to get initial estimates. This makes them impractical. The existing best-known regret bound is also suboptimal.

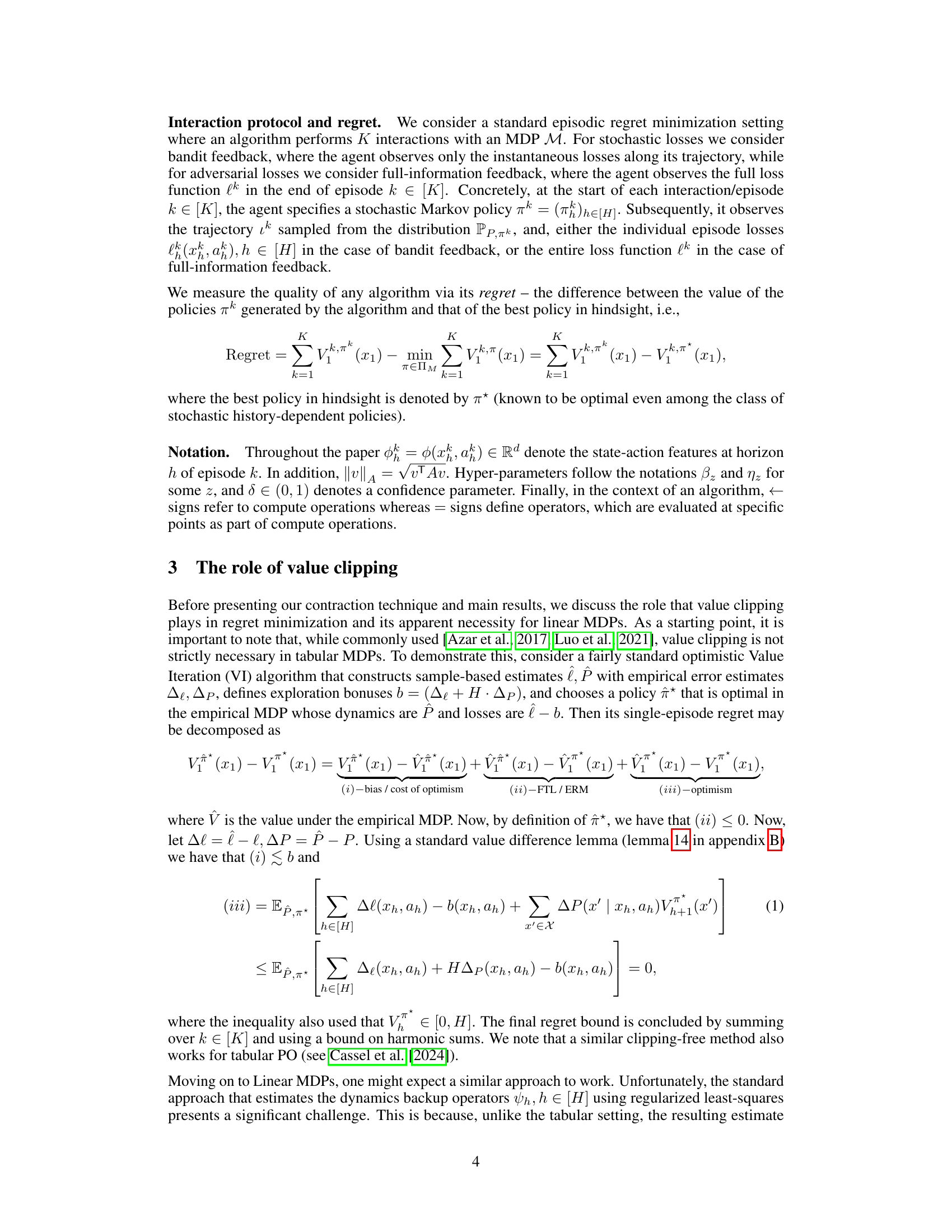

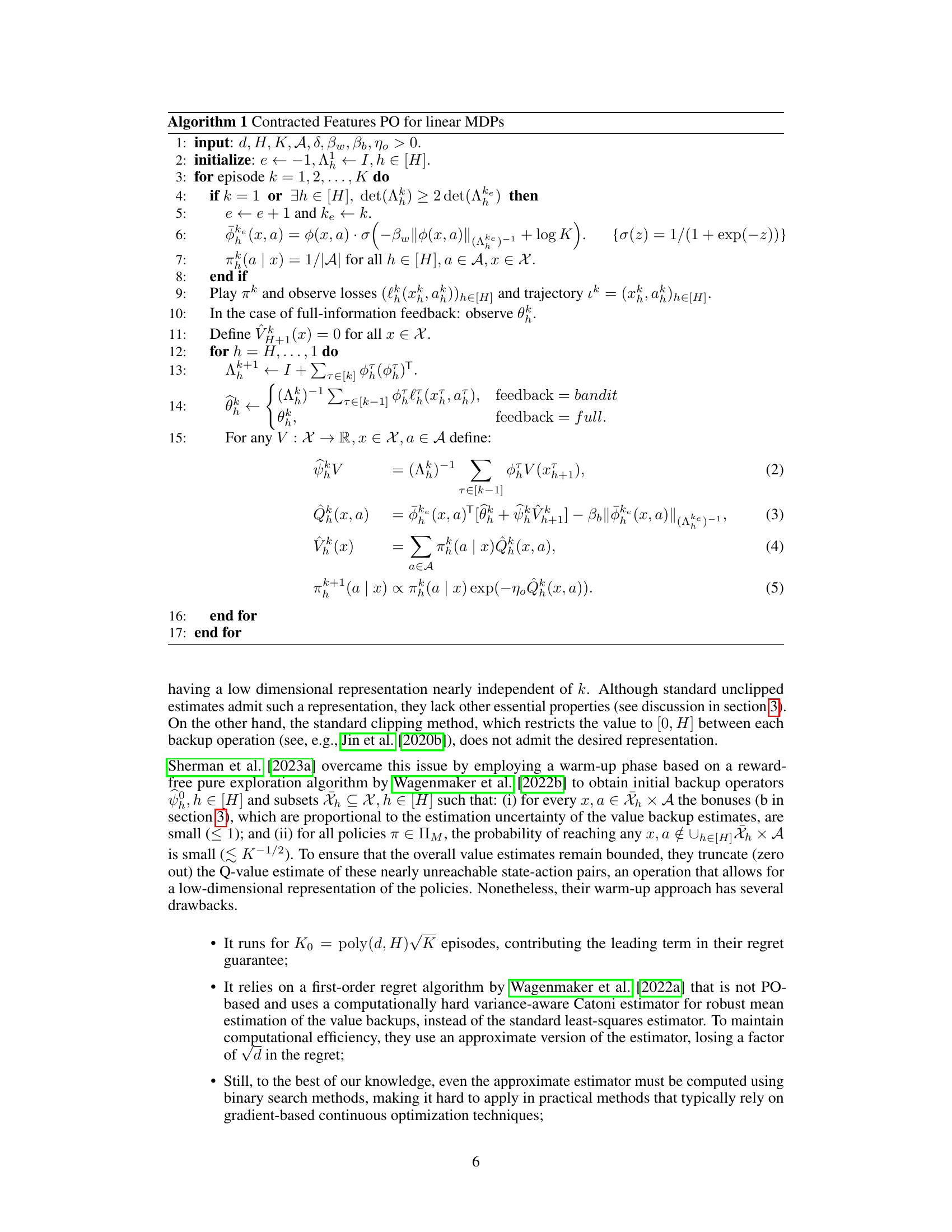

This work introduces a novel algorithm called Contracted Features Policy Optimization (CFPO) which overcomes these limitations. CFPO incorporates a ‘contraction mechanism’ that replaces the warm-up phase, leading to a simpler, more efficient, and rate-optimal algorithm with significantly improved regret bounds. The improved regret and efficiency make it a significant advancement in the field.

Key Takeaways#

Why does it matter?#

This paper is crucial because it presents a novel, efficient policy optimization algorithm that significantly improves upon existing methods. It offers rate-optimal regret guarantees in linear Markov Decision Processes without the computationally expensive warm-up phase, opening new avenues for research in reinforcement learning and function approximation.

Visual Insights#

Full paper#