↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Current drag-based image editing methods rely on iterative latent semantic optimization, which is slow. This limits practical applications, especially in scenarios requiring real-time interaction or high-throughput processing. There is a need for a faster approach that maintains the high precision and quality expected from generative model-based editing.

FastDrag introduces a novel one-step latent warpage function (LWF) to directly adjust pixel locations in latent space, significantly speeding up the editing process. It also incorporates a bilateral nearest neighbor interpolation (BNNI) to address the null regions that may arise from the LWF and a consistency-preserving strategy to maintain the coherence between the edited and original images. The results on the DragBench dataset show that FastDrag is much faster than other state-of-the-art methods while achieving comparable image quality.

Key Takeaways#

Why does it matter?#

This paper is important because it presents FastDrag, a novel one-step drag-based image editing method that significantly accelerates the editing process. This addresses a critical limitation of existing methods, which typically require multiple iterations, thus impacting real-world applications and opening new avenues for research in efficient image manipulation using generative models. The improved speed and efficiency achieved by FastDrag make it particularly relevant for real-time or interactive image editing scenarios.

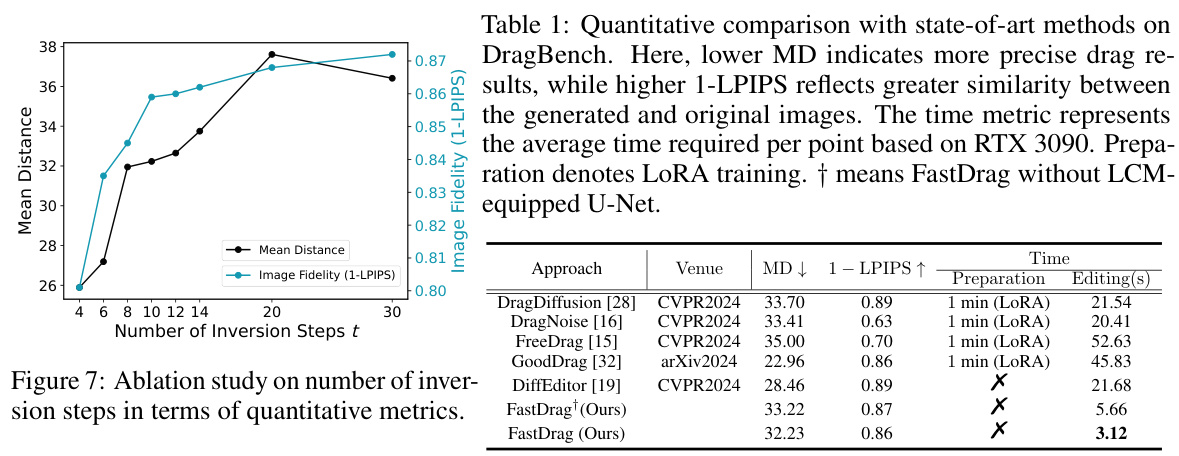

Visual Insights#

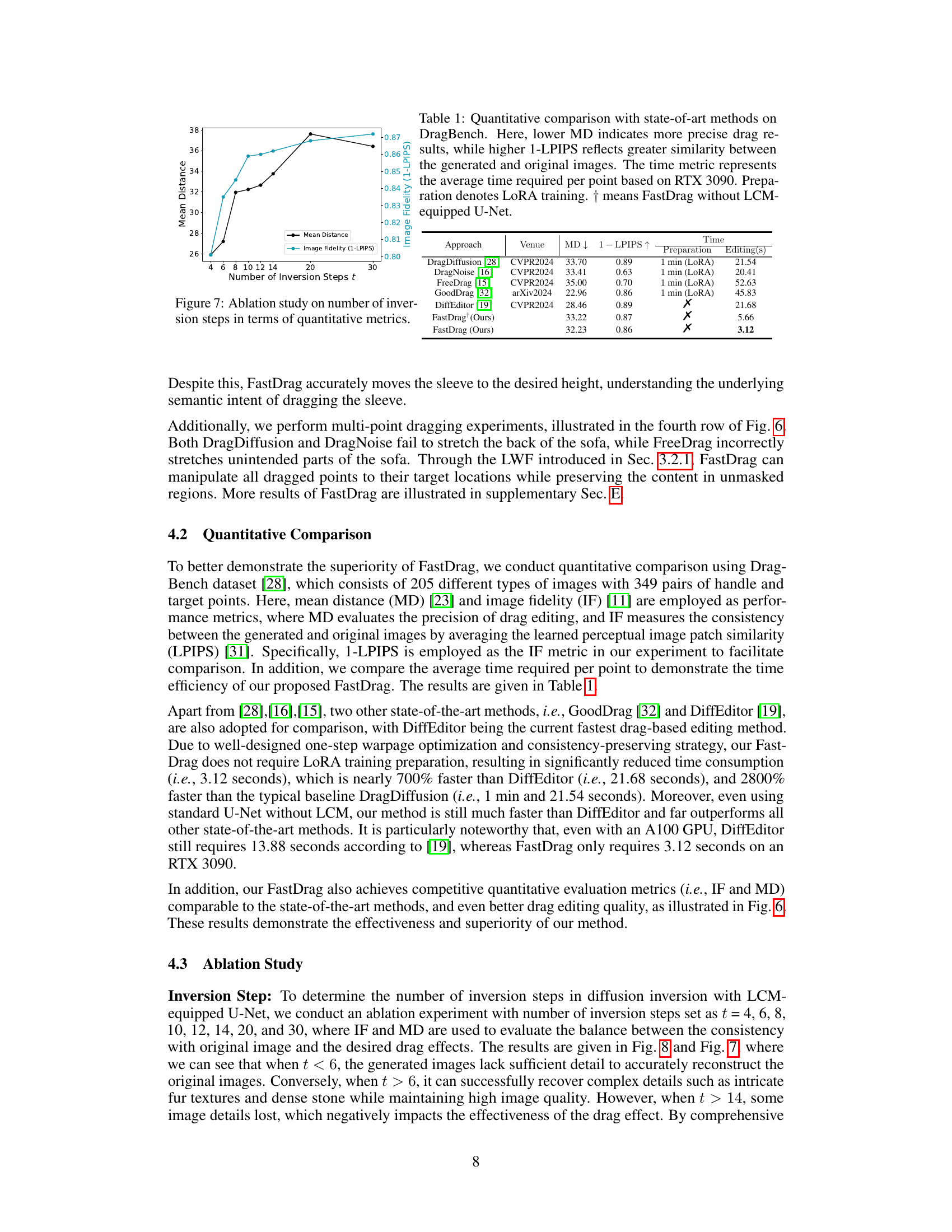

This figure compares existing drag-based image editing methods with the proposed FastDrag method. (a) illustrates how traditional methods typically involve multiple iterative steps (n-step) to achieve semantic optimization, using either motion-based or gradient-based approaches. Each step requires small-scale adjustments. (b) shows that FastDrag uses a novel one-step warpage optimization strategy, making the process significantly faster. It leverages a latent warpage function (LWF) to directly calculate the necessary adjustments in one go.

In-depth insights#

One-Step Drag Editing#

The concept of “One-Step Drag Editing” in image manipulation signifies a paradigm shift from traditional iterative methods. Instead of multiple optimization steps, it aims for instantaneous results, offering significant improvements in processing speed and user experience. This is achieved through innovative techniques such as latent warpage functions that directly manipulate latent space representations, effectively simulating the desired changes in a single step. Addressing the challenges of null regions created by such direct manipulation is crucial, and methods like bilateral nearest neighbor interpolation are essential to maintain image coherence. While promising, limitations remain, especially concerning the preservation of fine details and the handling of extremely long-distance drag operations. The success of this one-step approach hinges on the effectiveness of these latent space manipulation and interpolation techniques, showcasing a trade-off between speed and accuracy. Future research could focus on enhancing the fidelity of one-step editing while maintaining its speed advantage.

Latent Warping Function#

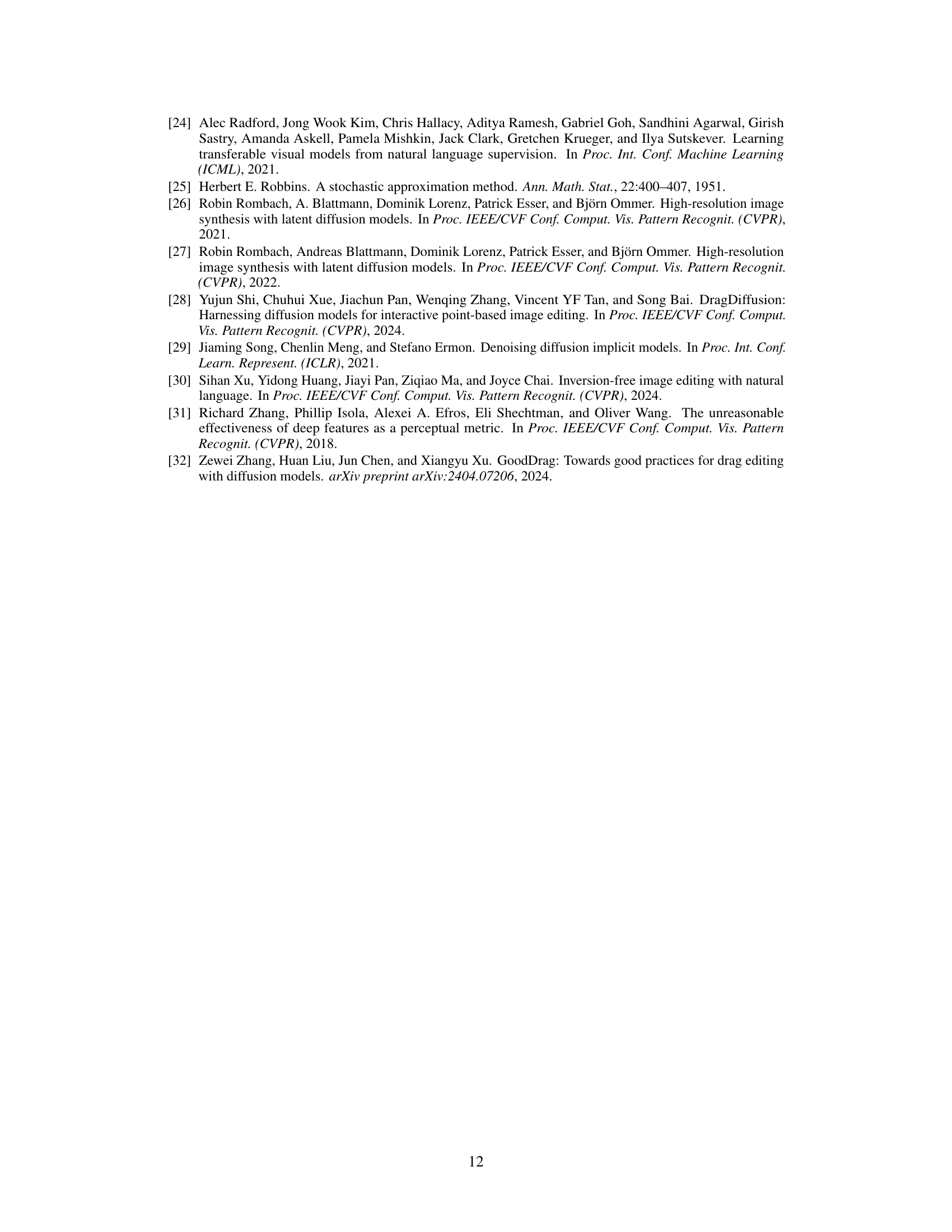

The concept of a “Latent Warping Function” in the context of image editing using generative models is a novel approach to achieve one-step manipulation. It elegantly addresses the limitations of n-step iterative methods by directly manipulating the latent representation of an image. The core idea is to simulate the behavior of a stretched material, where drag instructions act as external forces, and the function calculates the displacement of individual pixels in the latent space. This direct approach leads to a significant speedup compared to iterative refinement. The effectiveness of the LWF hinges on its ability to accurately model the transformation from user input to latent space adjustments. It requires careful design and parameter tuning to balance semantic consistency and prevent artifacts or distortions. A successful LWF would need to consider factors like the type of drag interaction, material properties (simulated via stretch factor), and feature correspondence to maintain image integrity. Ultimately, the Latent Warping Function’s success depends on its ability to produce a visually coherent and semantically consistent edit in a single step, making it a powerful tool for efficient image editing.

BNNI Interpolation#

The proposed BNNI (Bilateral Nearest Neighbor Interpolation) method is a crucial component of FastDrag, addressing the issue of null regions created during the latent warpage process. These null regions arise from the displacement of pixels in latent space and, if left unaddressed, would compromise the semantic integrity of the edited image. BNNI cleverly tackles this by interpolating the missing pixel values using similar features from the neighboring areas. This approach leverages the spatial relationships between pixels to maintain a coherent and natural look, enhancing the realism and visual quality of the edited image. The bilateral aspect of the interpolation likely involves considering both spatial proximity and feature similarity, ensuring a smoother transition at the boundaries of the manipulated region. The effectiveness of BNNI is demonstrated through its significant contribution to improving the overall editing performance and minimizing semantic inconsistencies. By seamlessly filling in the missing data, BNNI effectively bridges the gap between the one-step warpage process and the final output, making the FastDrag system more robust and efficient.

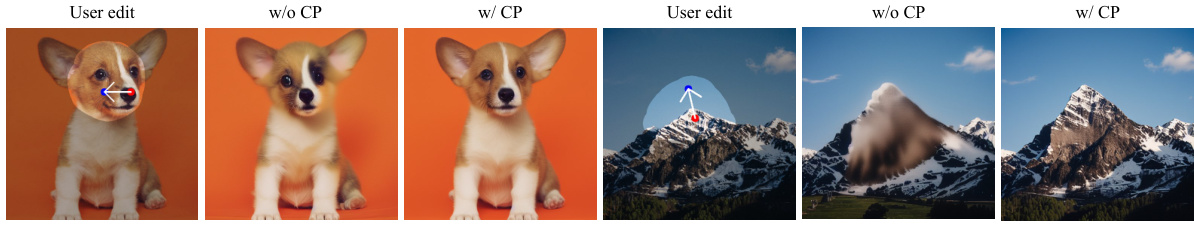

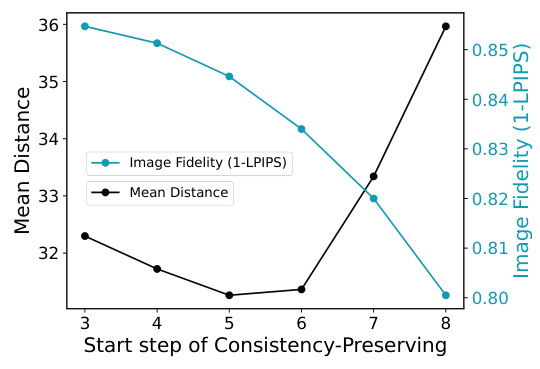

Consistency-Preserving#

The heading ‘Consistency-Preserving’ highlights a crucial aspect of image manipulation techniques. It suggests a method designed to maintain the integrity and coherence of the original image during editing. This is especially important in drag-based image editing, where modifications are made through direct interaction, potentially leading to inconsistencies and semantic errors. The approach likely involves leveraging semantic information from the original image, perhaps extracted during a diffusion inversion process, to guide the editing process and prevent abrupt changes or distortions. This could involve the use of latent space representations to subtly adjust pixel positions while preserving the overall semantic meaning and visual fidelity. The success of such a method would be reflected in edited images that appear natural and consistent with the original, avoiding unnatural artifacts or visually jarring modifications. Preserving consistency is vital for creating realistic and high-quality edits.

Future Work#

The authors could explore enhancing FastDrag’s handling of extremely long-distance manipulations by investigating more sophisticated latent space warping techniques or incorporating additional semantic constraints. Addressing the sensitivity to precise drag instructions is crucial; future work might focus on more robust methods for interpreting user input, potentially using machine learning to predict user intent or employing adaptive masking strategies. Improving the preservation of fine details during editing could involve advanced denoising techniques or exploring alternative latent diffusion models. Finally, exploring the application of FastDrag to other image editing tasks beyond the current drag-based paradigm would be valuable, such as expanding to tasks involving more complex image manipulations or different modalities of user interaction.

More visual insights#

More on figures

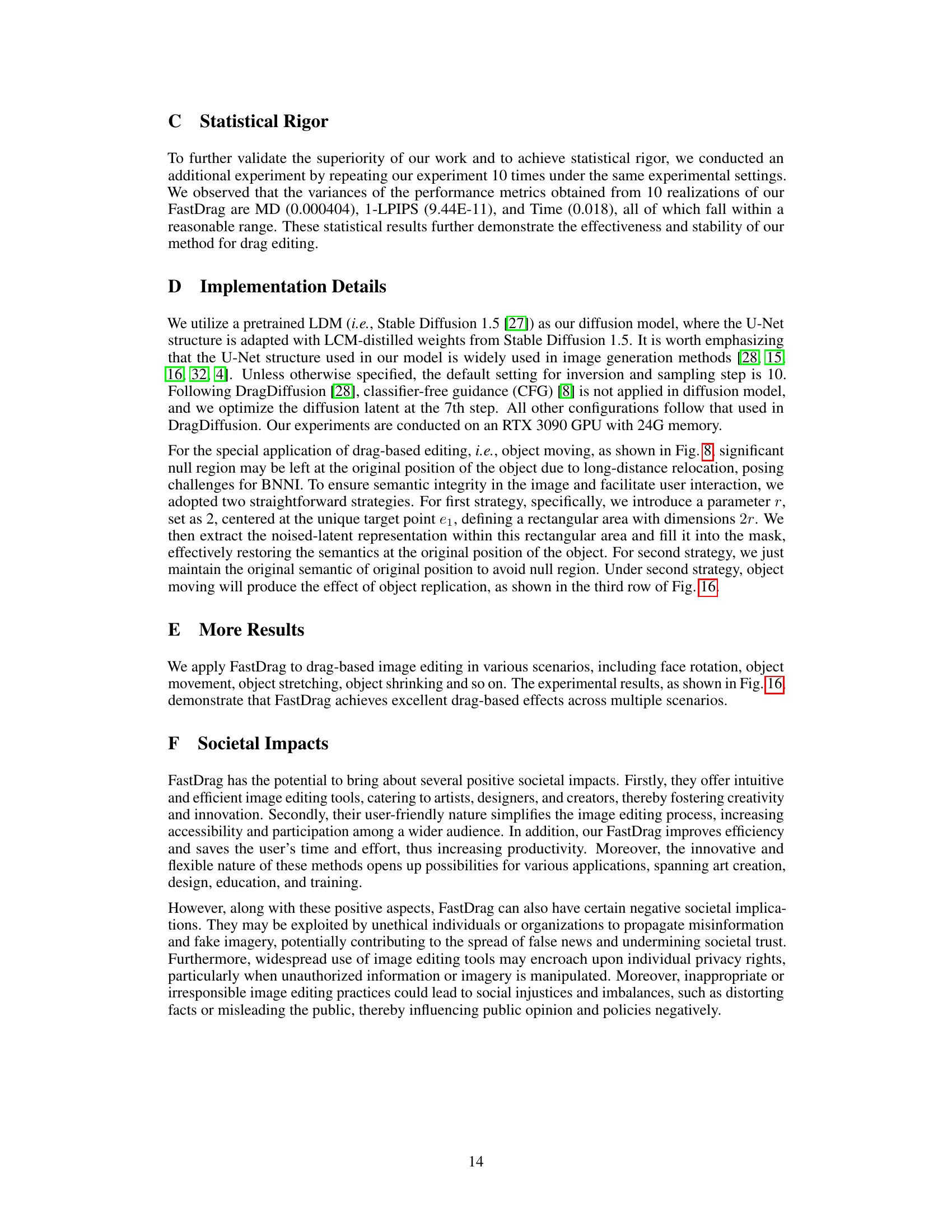

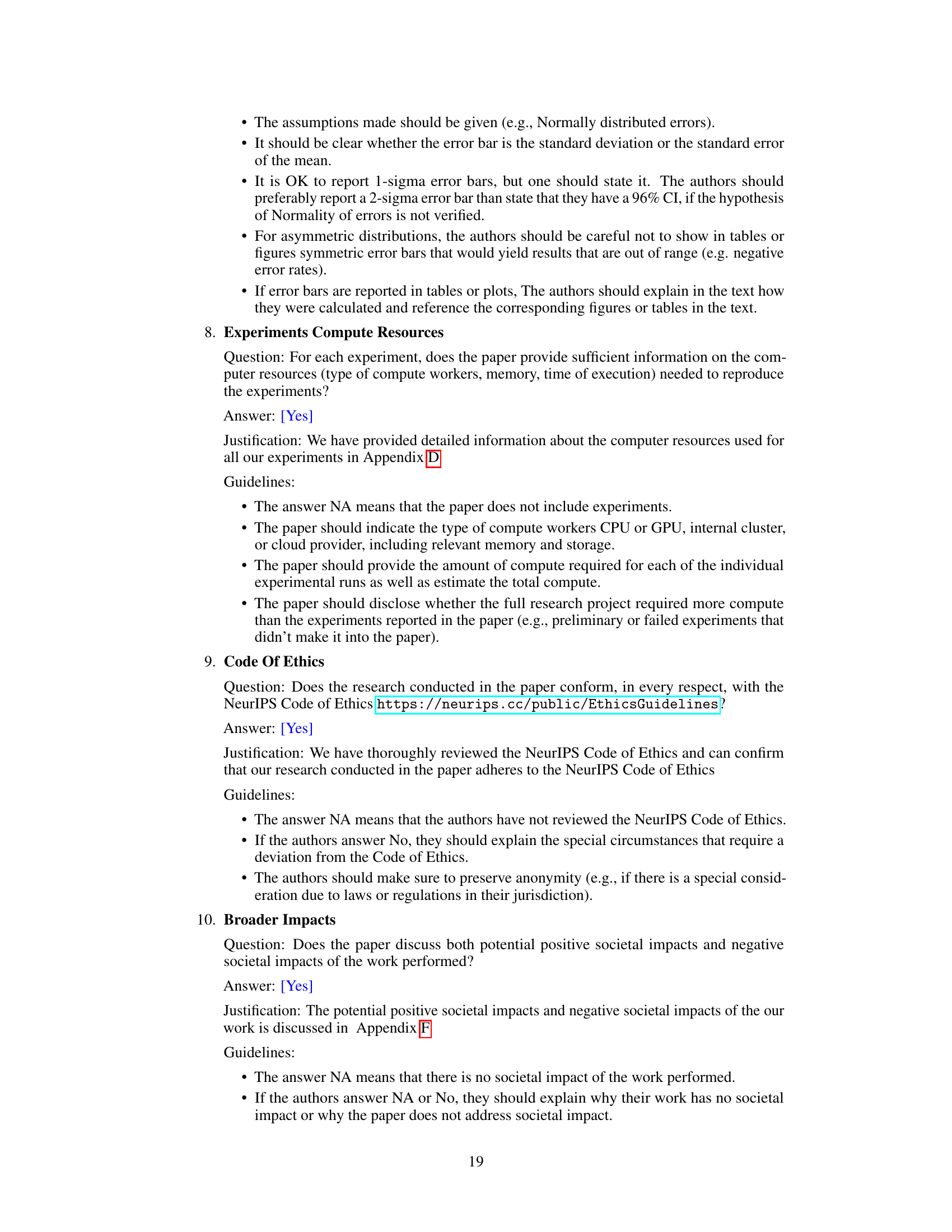

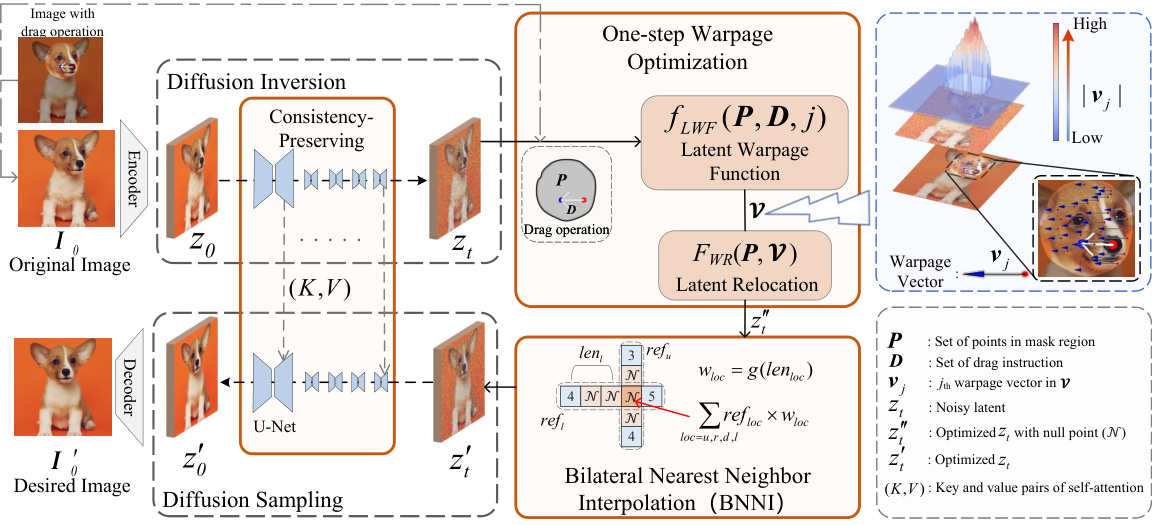

The figure shows the overall framework of the FastDrag method, which consists of four main phases: diffusion inversion, one-step warpage optimization, bilateral nearest neighbor interpolation (BNNI), and diffusion sampling. Diffusion inversion generates a noisy latent representation of the input image. The one-step warpage optimization uses a latent warpage function (LWF) to adjust pixel locations in the latent space based on user-specified drag instructions. BNNI interpolates null regions that may arise from the warpage. Finally, diffusion sampling reconstructs the edited image from the optimized latent representation. The process also incorporates a consistency-preserving strategy to maintain coherence between the original and edited images.

This figure illustrates the geometric interpretation of the component warpage vector v*. A circle O circumscribes the mask region. The vector v* connects a feature point pj to its new position p* after a drag operation. The length of v* is inversely proportional to the distance between the feature point pj and the handle point si of the drag instruction di. The vector v* is parallel to the drag instruction di. The point q is the intersection of the lines extending from si to pj and ei to p*. The length of v* is determined by the distances si to pj, ei to p*, and si to q, showing how the magnitude of the warpage vector decreases as the feature point pj approaches the circle O.

This figure illustrates the Bilateral Nearest Neighbor Interpolation (BNNI) method used in FastDrag to address the issue of null regions in the latent space after applying the latent warpage function. The leftmost image shows the noisy latent with null points represented by the beige color. The image in the middle shows how BNNI finds the four nearest neighboring points (with values) to a null point, denoted as up, right, down, and left, and then calculates the weighted average of their values to interpolate the null point’s value. This weighted average is computed using a weight formula which involves distances to the reference points and numbers of pixels in each direction, as described in the caption. The rightmost image shows the interpolated latent space after BNNI is applied, thus effectively enhancing the semantic integrity of the edited image.

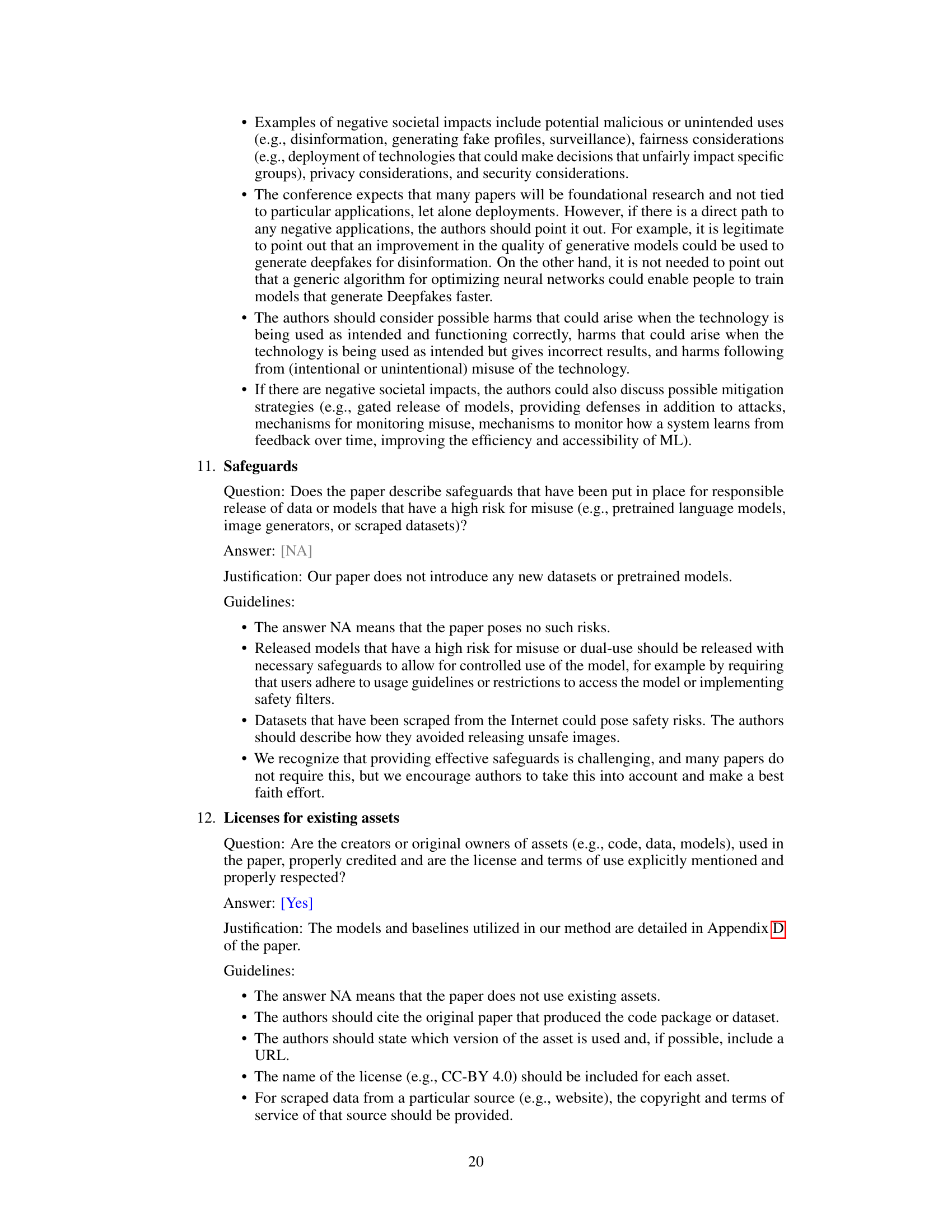

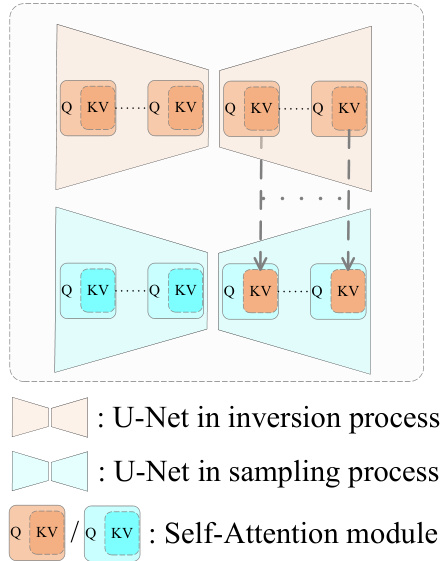

This figure illustrates the consistency-preserving strategy used in FastDrag. The top half shows the self-attention module (Q, K, V) within the U-Net during the inversion process. The bottom half shows the same module during the sampling process. Key and value pairs (K, V) from the inversion process are used to guide the sampling process, ensuring consistency between the edited and original images. The dotted lines indicate the flow of information from the inversion to the sampling stage.

This figure shows a qualitative comparison of FastDrag with three other state-of-the-art drag-based image editing methods: DragDiffusion, FreeDrag, and DragNoise. The results are shown for four different image editing tasks. Each row shows the user edit instruction (leftmost column) and the results obtained by each method. The figure highlights that FastDrag achieves better image quality and more effective drag operations, especially in complex scenarios.

This figure illustrates the overall framework of the FastDrag method, which consists of four main phases: 1. Diffusion inversion: generating a noisy latent representation from the original image. 2. One-step warpage optimization: using a Latent Warpage Function (LWF) and a latent relocation operation to adjust the position of individual pixels based on drag instructions, achieving one-step semantic optimization. 3. Bilateral Nearest Neighbor Interpolation (BNNI): interpolating null regions in the latent space to improve semantic integrity. 4. Diffusion sampling: generating the final edited image from the optimized noisy latent. A consistency-preserving strategy is also employed to ensure consistency between the original and edited images.

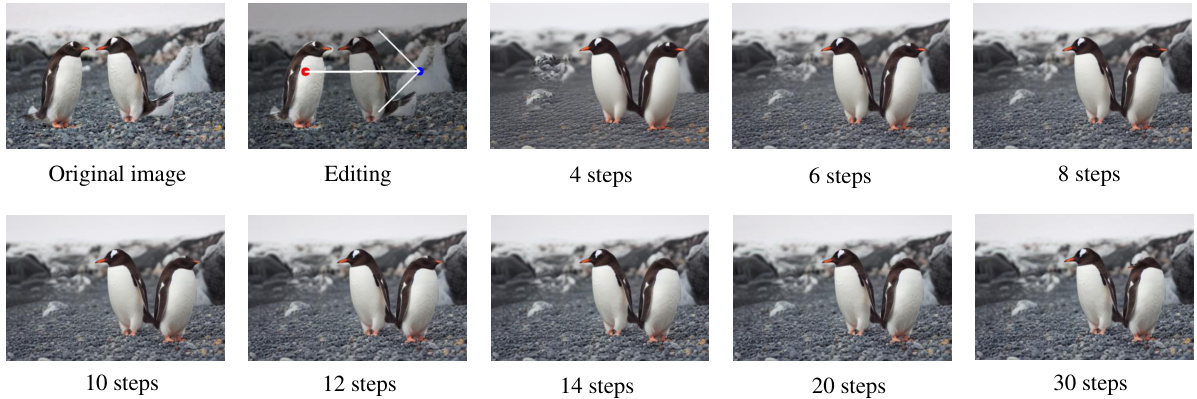

This figure shows the results of an ablation study on the number of inversion steps used in the FastDrag method. The top row shows the original image and the user edit. The subsequent rows show the results of the editing process with different numbers of inversion steps (4, 6, 8, 10, 12, 14, 20, and 30). The goal is to visually demonstrate how the number of inversion steps affects the outcome of the image editing process. The image shows two penguins. The user intends to move the penguin on the right, slightly away from the penguin on the left.

This figure shows an ablation study comparing different interpolation methods for handling null points (missing data) in the latent space during image editing. The methods compared are: maintaining the original value, interpolating with zeros, interpolating with random noise, and using the proposed Bilateral Nearest Neighbor Interpolation (BNNI). The results demonstrate that BNNI effectively addresses semantic losses by using similar features from neighboring areas to improve the quality of drag-based image editing.

This ablation study compares the results of FastDrag with and without the consistency-preserving strategy. The images show that using the consistency-preserving strategy helps maintain the image consistency between the original and the edited image, resulting in better drag editing effects. The consistency-preserving strategy uses information from the original image, saved in the self-attention module during diffusion inversion, to guide the diffusion sampling process.

This figure shows a qualitative comparison of FastDrag with three other state-of-the-art drag-based image editing methods: DragDiffusion, FreeDrag, and DragNoise. Each row represents a different image editing task, and each column shows the results of a different method. The results demonstrate that FastDrag produces more effective drag results while maintaining high image quality, even in images with complex textures, compared to existing methods. FastDrag successfully rotates an animal’s face while preserving intricate fur textures, stretches a sofa’s back while preserving the content in unmasked regions, and moves a sleeve to a higher position accurately. In contrast, other methods may fail to perform the drag operation correctly, or the drag results appear less natural.

This figure shows two examples of drag-based image editing where the success of the editing depends highly on the precision of the user’s drag instruction. The first example (a) shows an attempt to thin the hair while keeping the face size. It shows a failed and a successful result. The failure is likely due to including the face in the mask region. The second example (b) shows an attempt to lengthen the beak of a hummingbird. It also shows a failed and a successful result. Here, the failure is likely due to not placing the handle point precisely on the beak. This figure highlights the importance of precise drag instructions for successful drag-based image editing, especially for fine-grained manipulations.

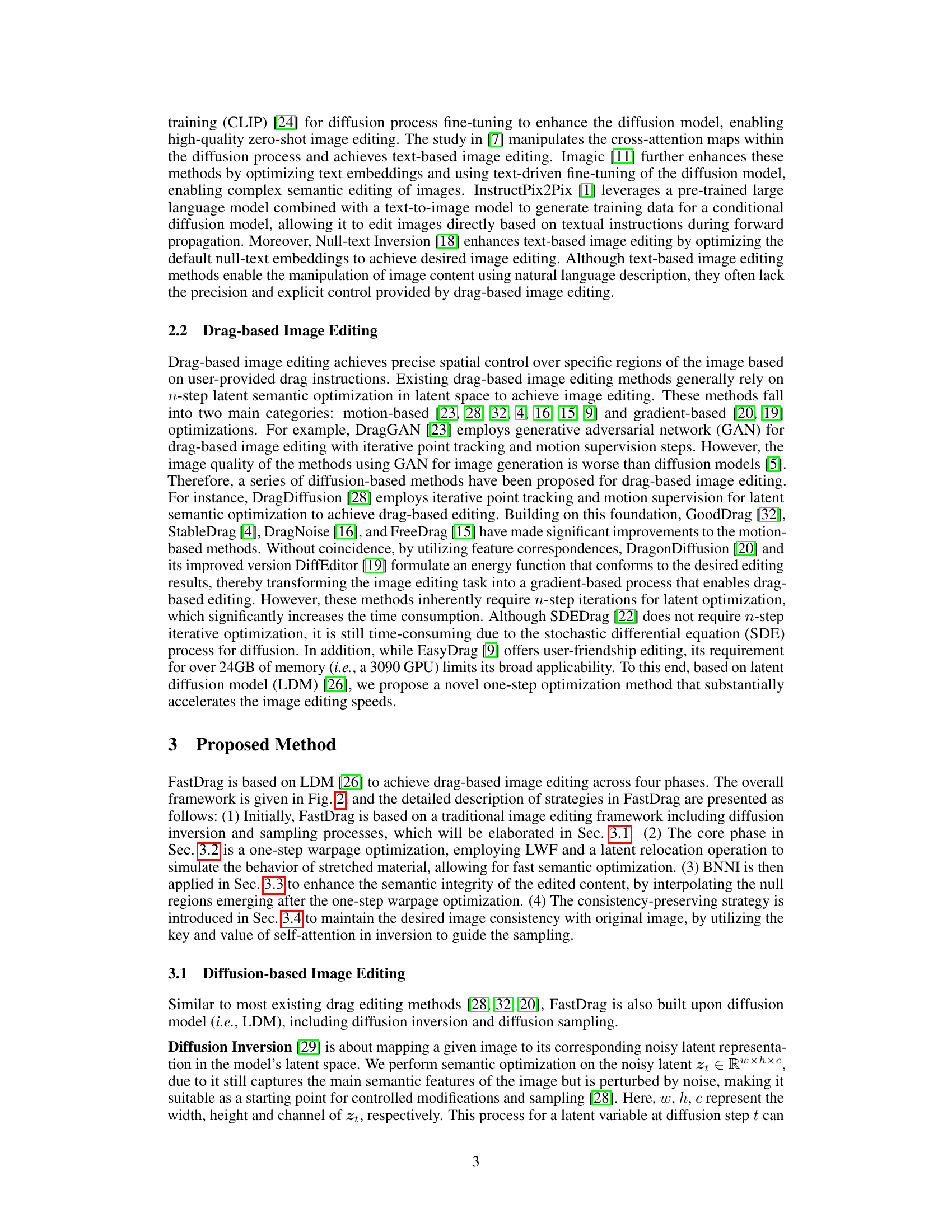

This figure shows the result of an ablation study on the number of inversion steps used in the FastDrag method. The x-axis represents the number of inversion steps, while the y-axis shows the mean distance (MD) and image fidelity (1 - LPIPS) values. The graph illustrates how the number of inversion steps affects the quantitative metrics of the method. The optimal number of steps for this method is shown to be around 6-14 steps.

This figure shows the impact of varying the number of inversion steps during the diffusion process on the quantitative metrics of the FastDrag model. The x-axis represents the number of inversion steps, while the y-axis shows the Mean Distance (MD) and Image Fidelity (1-LPIPS). Lower MD values indicate more precise drag results, and higher 1-LPIPS values reflect better similarity between the generated and original images. The graph helps to determine the optimal number of inversion steps that balance image quality and editing precision. The results show that a sweet spot exists, with too few steps resulting in poor results and too many steps not significantly improving the metrics.

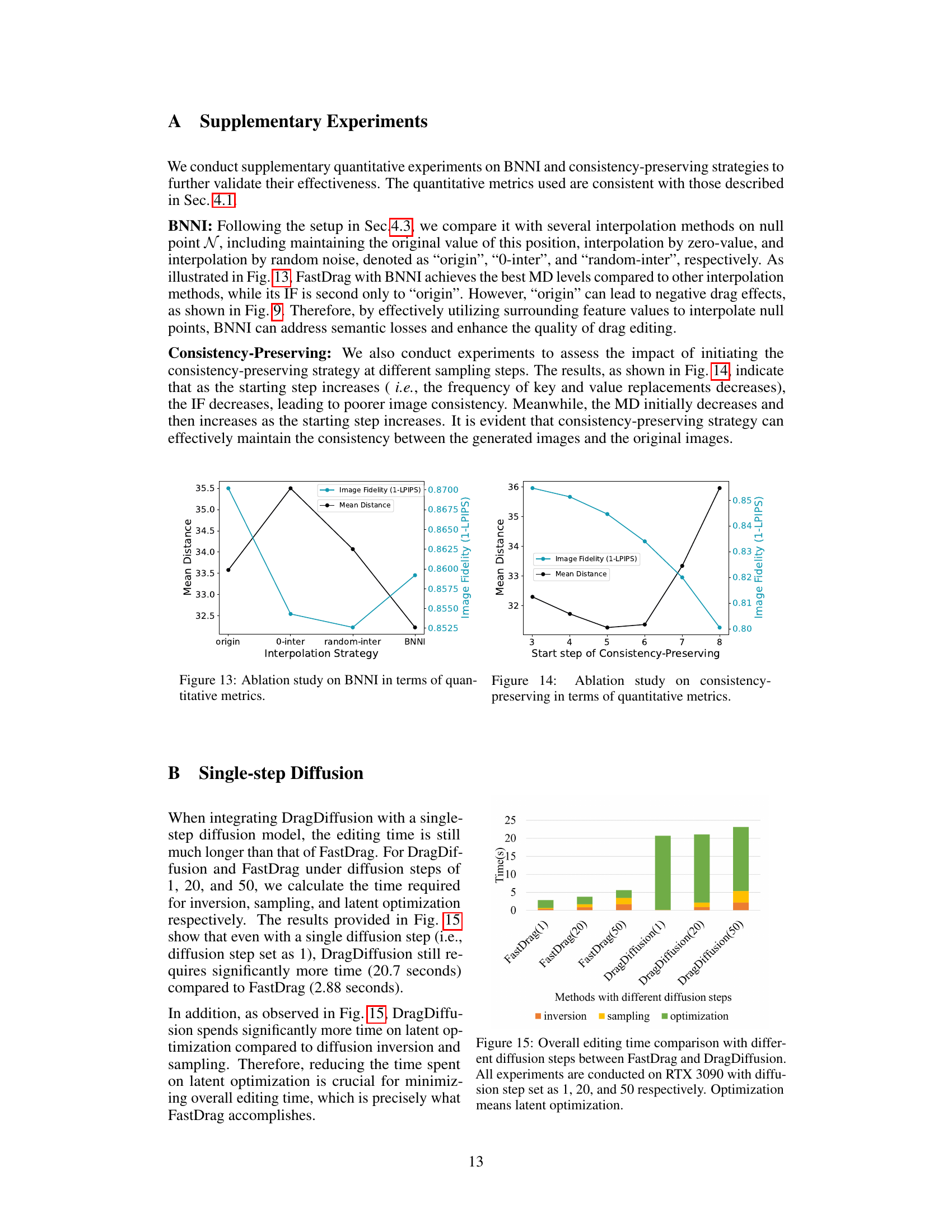

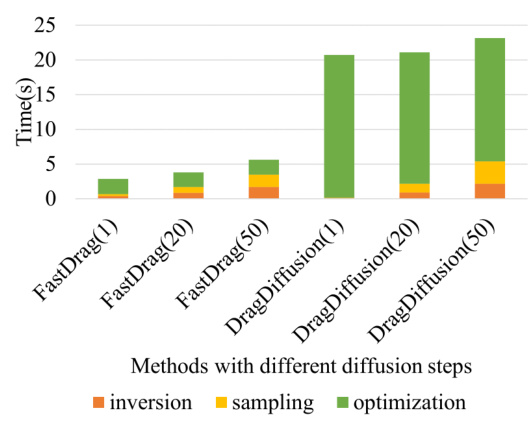

This figure shows a bar chart comparing the time taken for different stages (inversion, sampling, optimization) of image editing using FastDrag and DragDiffusion methods with varying diffusion steps (1, 20, and 50). It highlights FastDrag’s significantly faster processing time across all stages, especially in the optimization phase, which is the most time-consuming part in conventional methods.

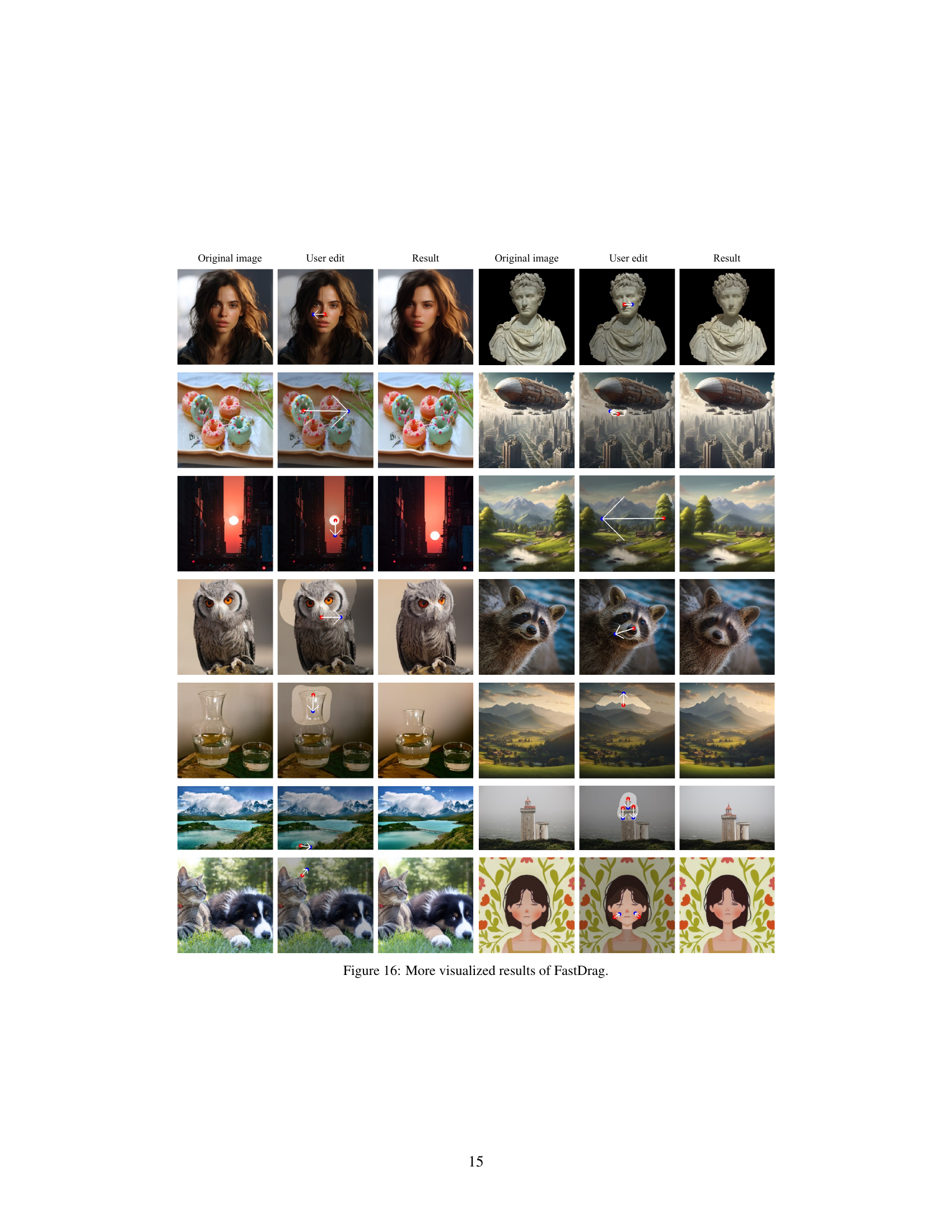

This figure shows additional examples of image manipulations achieved using the FastDrag method. It showcases the versatility of the method across a range of image types and editing tasks, demonstrating its ability to accurately reflect user intentions in manipulating objects or image regions, even those with intricate details or complex textures.

Full paper#