↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Autonomous driving simulation heavily relies on realistic traffic trajectory generation for effective testing. Existing methods primarily focus on individual vehicle trajectories, lacking the complexity of interactive traffic dynamics which significantly limits the controllability and realism of generated scenarios. This makes it hard to test autonomous vehicles’ ability to respond to complex situations and real-world challenges such as traffic jams.

InteractTraj tackles this by directly learning the mapping between abstract language descriptions of traffic scenarios and concrete, formatted interaction-aware numerical codes. It interprets natural language into three types of codes: interaction, vehicle, and map codes, which are then used by the code-to-trajectory decoder to produce the final interactive trajectories. The decoder employs a two-step interaction-aware feature aggregation to effectively integrate code information with environmental map data. Extensive experiments demonstrate InteractTraj’s superior performance over state-of-the-art methods, achieving significant improvements in accuracy and demonstrating high controllability via diverse natural language commands. User studies confirm the model’s improved realism.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces InteractTraj, the first language-driven traffic trajectory generator capable of producing interactive traffic scenarios. This addresses a critical limitation in autonomous driving simulation, paving the way for more realistic and controllable testing environments. The novel interaction-aware encoding strategy and two-step feature aggregation method are significant contributions to the field, opening avenues for more sophisticated and user-friendly trajectory generation techniques.

Visual Insights#

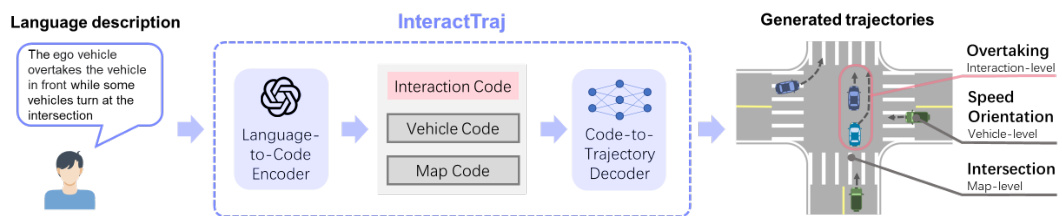

This figure shows the overall architecture of InteractTraj, a language-driven interactive traffic trajectory generation model. It takes a natural language description as input. This description is processed by a Language-to-Code Encoder, which utilizes an LLM to convert the description into three types of numerical codes: Interaction Code, Vehicle Code, and Map Code. These codes are then fed into a Code-to-Trajectory Decoder, which generates the final interactive traffic trajectories. The figure also visually represents an example scenario involving an overtaking maneuver, highlighting the interaction-aware nature of the generated trajectories.

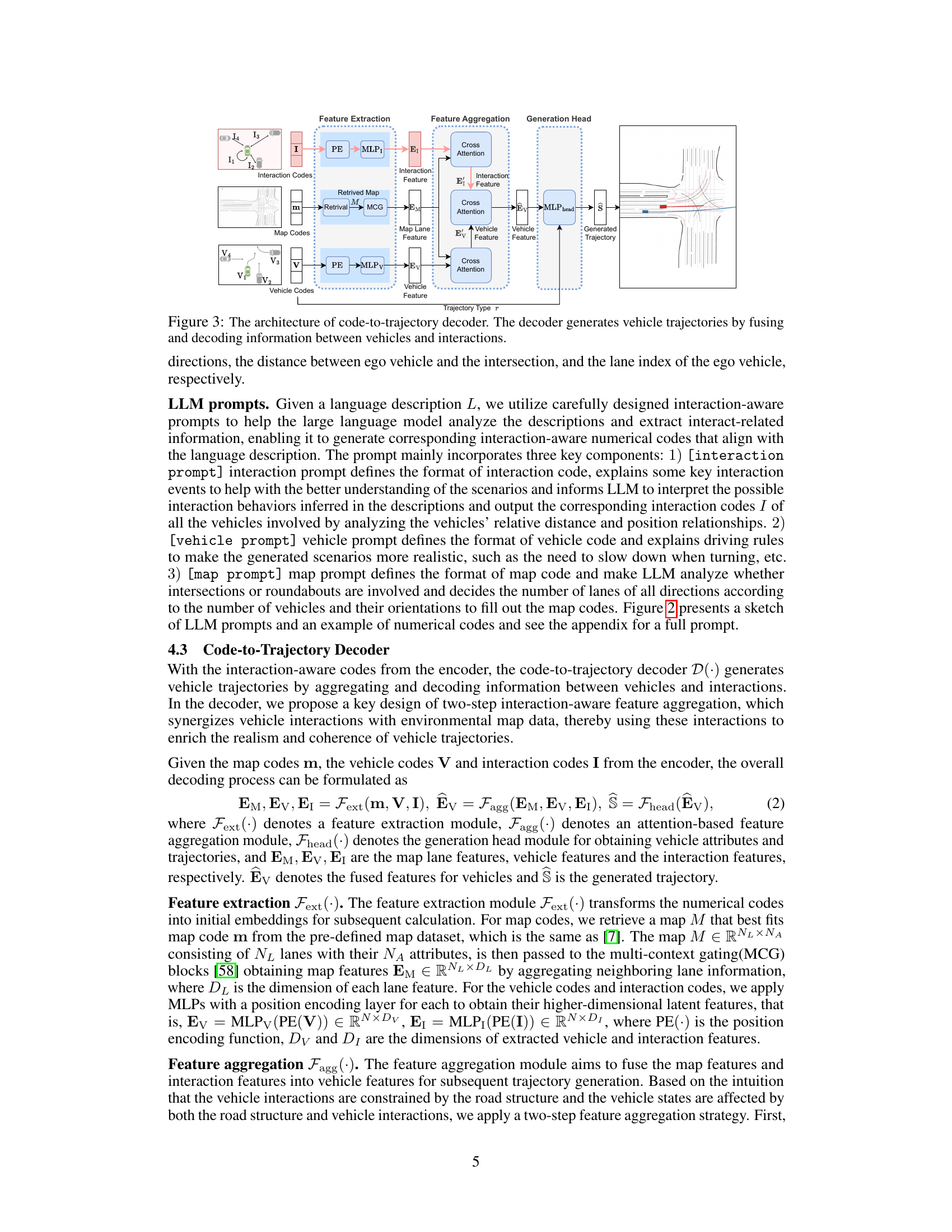

This table presents a quantitative comparison of the proposed InteractTraj model against other state-of-the-art methods for traffic trajectory generation realism. The evaluation is performed on two widely used datasets: Waymo Open Motion Dataset (WOMD) and nuPlan. The metrics used to assess realism include mean average displacement error (mADE), minimum average displacement error (minADE), mean final displacement error (mFDE), minimum final displacement error (minFDE), scenario collision rate (SCR), and Hausdorff distance (HD). Lower values in these metrics generally indicate higher realism. The table shows that InteractTraj achieves significantly better results than the other methods, demonstrating its superior performance in generating realistic traffic trajectories.

In-depth insights#

InteractTraj: Overview#

InteractTraj is presented as a novel language-driven interactive traffic trajectory generator. Its core innovation lies in bridging abstract trajectory descriptions from natural language to concrete, interaction-aware numerical codes. These codes encapsulate key aspects of the traffic scene, including vehicle interactions (relative positions, distances, and directions), individual vehicle states (speed, position, lane), and map features (number of lanes, intersection presence). This multi-faceted code representation allows InteractTraj to synergize interactions, vehicle dynamics, and environmental context. The system leverages a two-module architecture. A language-to-code encoder, using an LLM and novel interaction-aware encoding, translates language commands into these numerical codes. A subsequent code-to-trajectory decoder then utilizes interaction-aware feature aggregation to synthesize the codes into realistic interactive traffic trajectories. This two-step process enables high controllability and superior realism, particularly concerning complex interactions absent in prior methods, like traffic jams. The ultimate goal of InteractTraj is to offer enhanced controllability over traffic trajectory generation via natural language, a significant step towards advancing autonomous driving simulation.

LLM-Based Encoding#

LLM-based encoding leverages the power of large language models to transform abstract descriptions of traffic scenarios into structured numerical representations. This approach offers several advantages. First, it enables the generation of highly realistic and interactive traffic trajectories by incorporating complex relationships between vehicles. Second, it significantly improves controllability, allowing users to fine-tune the generated trajectories through natural language commands. The use of LLMs is crucial because of their ability to understand the nuances and contextual information within natural language descriptions, far surpassing the capabilities of traditional rule-based methods. This results in a more flexible and user-friendly system. However, there are also potential drawbacks, such as the computational cost associated with employing LLMs and the potential for biases in the model’s output. Further research could focus on mitigating these drawbacks and exploring the use of different LLMs or techniques to enhance the encoding process. The development of robust interaction-aware prompts is crucial, as it directly impacts the quality and accuracy of the resulting numerical codes. The success of this approach depends heavily on designing prompts capable of effectively guiding the LLM towards generating codes that accurately reflect the intended traffic scenarios.

Interaction Modeling#

Interaction modeling in traffic trajectory generation is crucial for realism. Accurately representing the complex interplay between vehicles is key to producing believable and useful simulations. Without robust interaction modeling, autonomous vehicle testing and development are severely limited. The challenge lies in capturing not only the spatial relationships between vehicles—distance and relative direction—but also the temporal dynamics, such as the anticipation and response of one vehicle to the actions of others. This requires sophisticated algorithms that go beyond simple rule-based approaches. Methods utilizing deep learning, particularly those leveraging graph neural networks or attention mechanisms, offer promise in handling the inherent complexity of multi-agent interactions. Furthermore, integrating contextual information from the environment, such as lane markings and traffic signals, into the interaction model significantly improves accuracy and predictability. Finally, the ability to generate diverse and controllable interaction scenarios through natural language commands opens up exciting possibilities for advanced driving simulation and autonomous driving development.

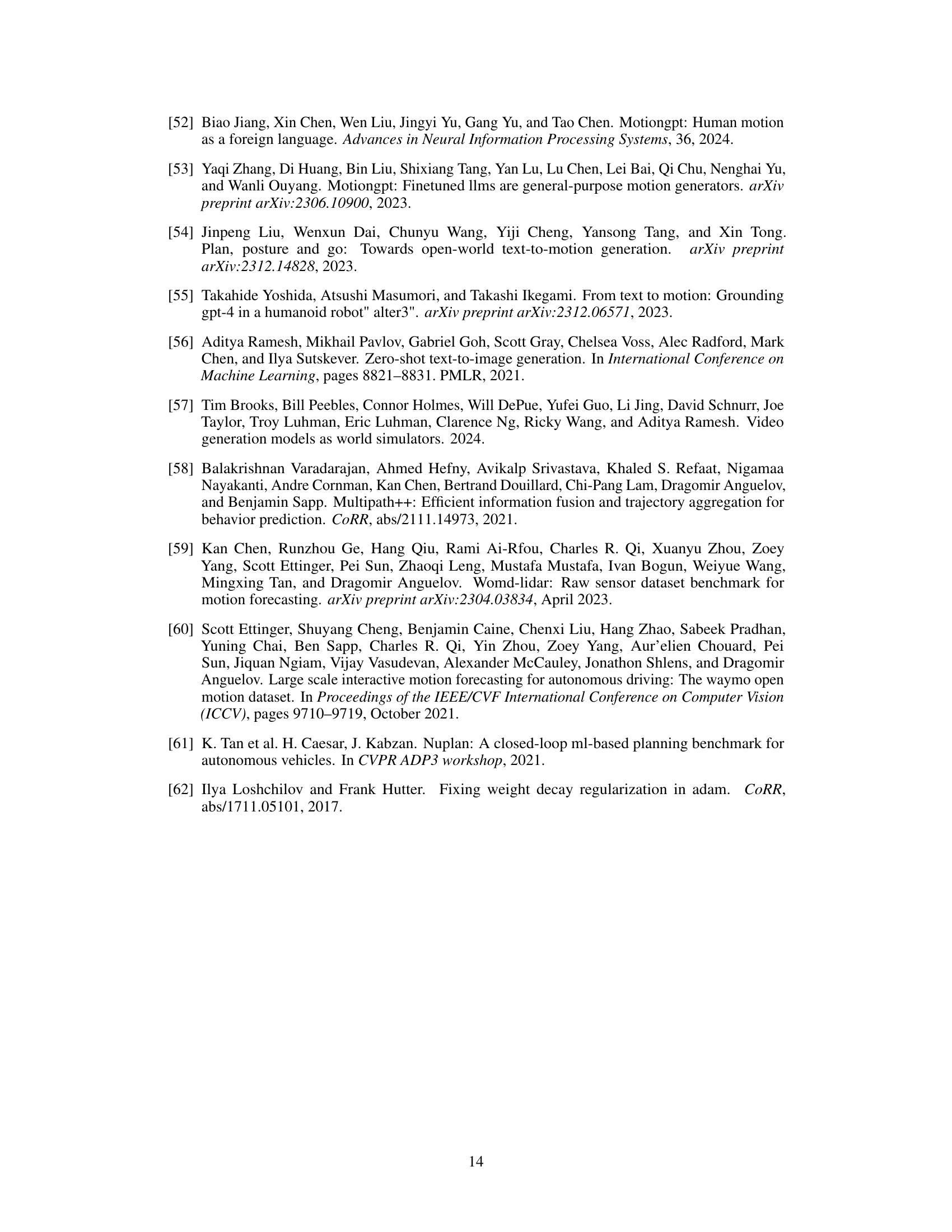

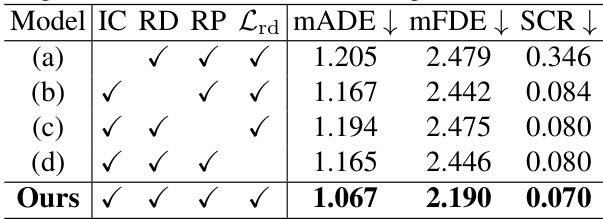

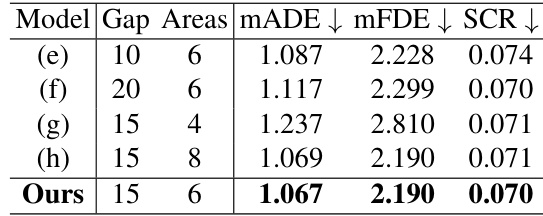

Ablation Study Results#

Ablation studies systematically remove components of a model to assess their individual contributions. In the context of a research paper, an ‘Ablation Study Results’ section would present these findings. A strong ablation study will demonstrate the importance of each component, showing a clear degradation in performance when specific parts are removed. Results should be presented quantitatively, typically using metrics relevant to the model’s task (e.g., accuracy, precision, recall for classification; mean average displacement error (mADE), final displacement error (FDE) for trajectory prediction). The discussion should highlight the relative importance of each component and explain any unexpected results. A well-executed ablation study strengthens the paper’s claims by providing evidence for the design choices made. It helps establish a causal relationship between model components and performance, moving beyond simple correlation.

Future Work#

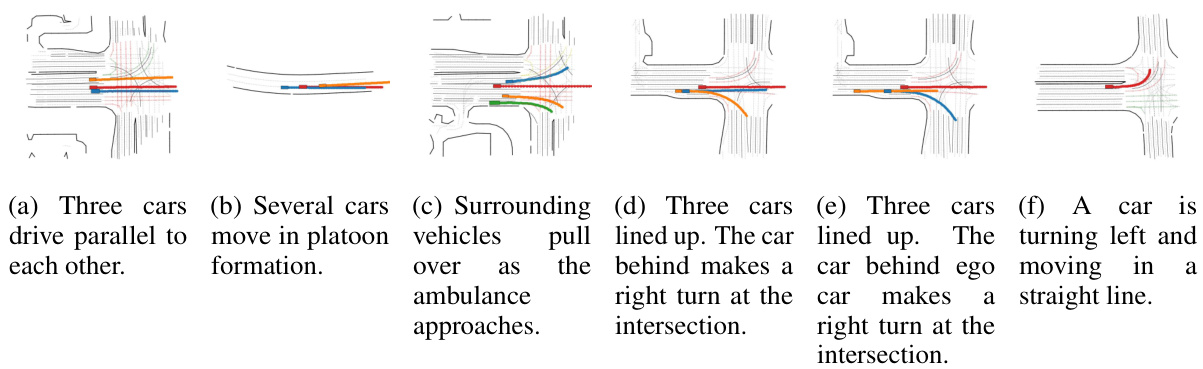

The authors mention several promising avenues for future work. Extending the model to incorporate diverse traffic participants beyond vehicles (e.g., pedestrians, cyclists) would significantly enhance realism and applicability. Furthermore, improving the map generation capabilities beyond a simple lane map to handle more complex road networks and environmental elements is crucial for wider deployment and more realistic simulation. Investigating more sophisticated interaction models that incorporate more nuanced behavioral dynamics and real-world driving patterns is essential to address limitations in current modeling. Lastly, and critically, applying InteractTraj to real-world autonomous driving systems for training and testing purposes could unlock significant advancements and demonstrate the model’s true potential. A thorough investigation into various LLM prompts to achieve more flexible and controllable outputs and addressing limitations in interaction-aware code representation is warranted. Finally, exploring the integration of additional sensory data (e.g., weather, lighting) to generate richer and more comprehensive traffic scenarios is another exciting possibility.

More visual insights#

More on figures

This figure illustrates the overall architecture of InteractTraj, a language-driven interactive traffic trajectory generation system. It shows how language descriptions are first processed by an LLM-based language-to-code encoder which converts the descriptions into interaction-aware numerical codes. These codes then serve as input for a code-to-trajectory decoder, which generates the final interactive trajectories. The process involves mapping abstract language descriptions to concrete numerical representations that capture the complex interactions between various traffic participants.

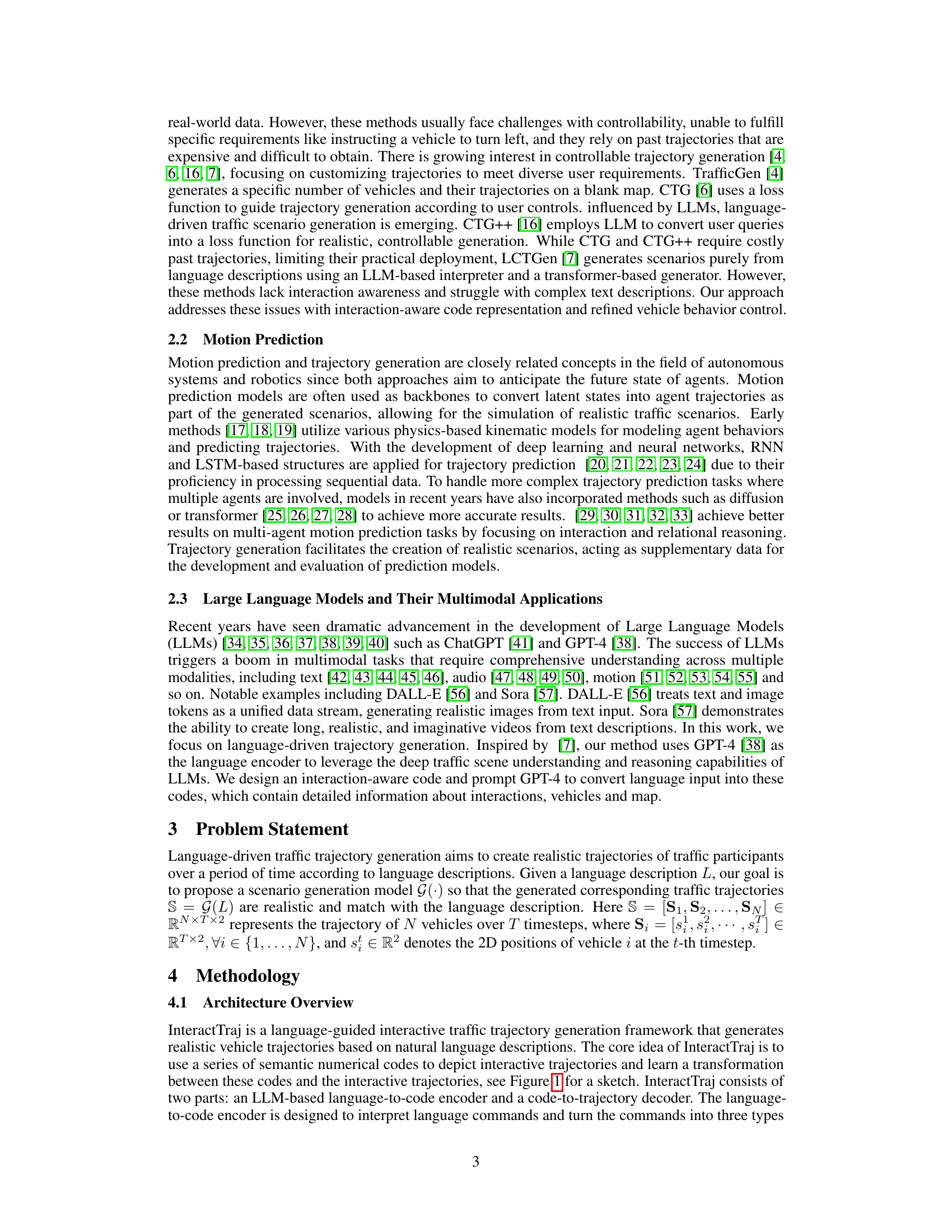

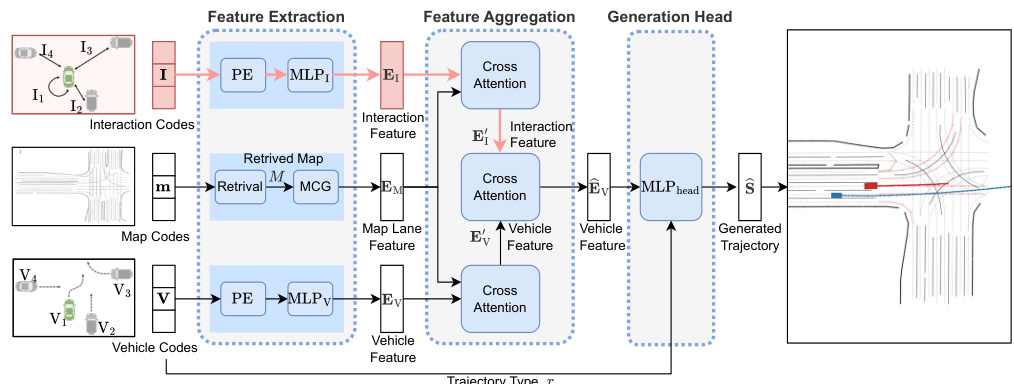

The figure shows the architecture of the code-to-trajectory decoder in InteractTraj. It takes three types of numerical codes (interaction, vehicle, and map codes) as input. These codes are first transformed into features using feature extraction modules (including multi-context gating for map codes and MLPs for interaction and vehicle codes). Then, a two-step feature aggregation strategy is used. First, cross-attention is applied between map features and both interaction and vehicle features. Second, cross-attention fuses interaction features with the resulting vehicle features. Finally, a generation head (MLP) generates the trajectories based on the fused features and trajectory type information from the vehicle codes.

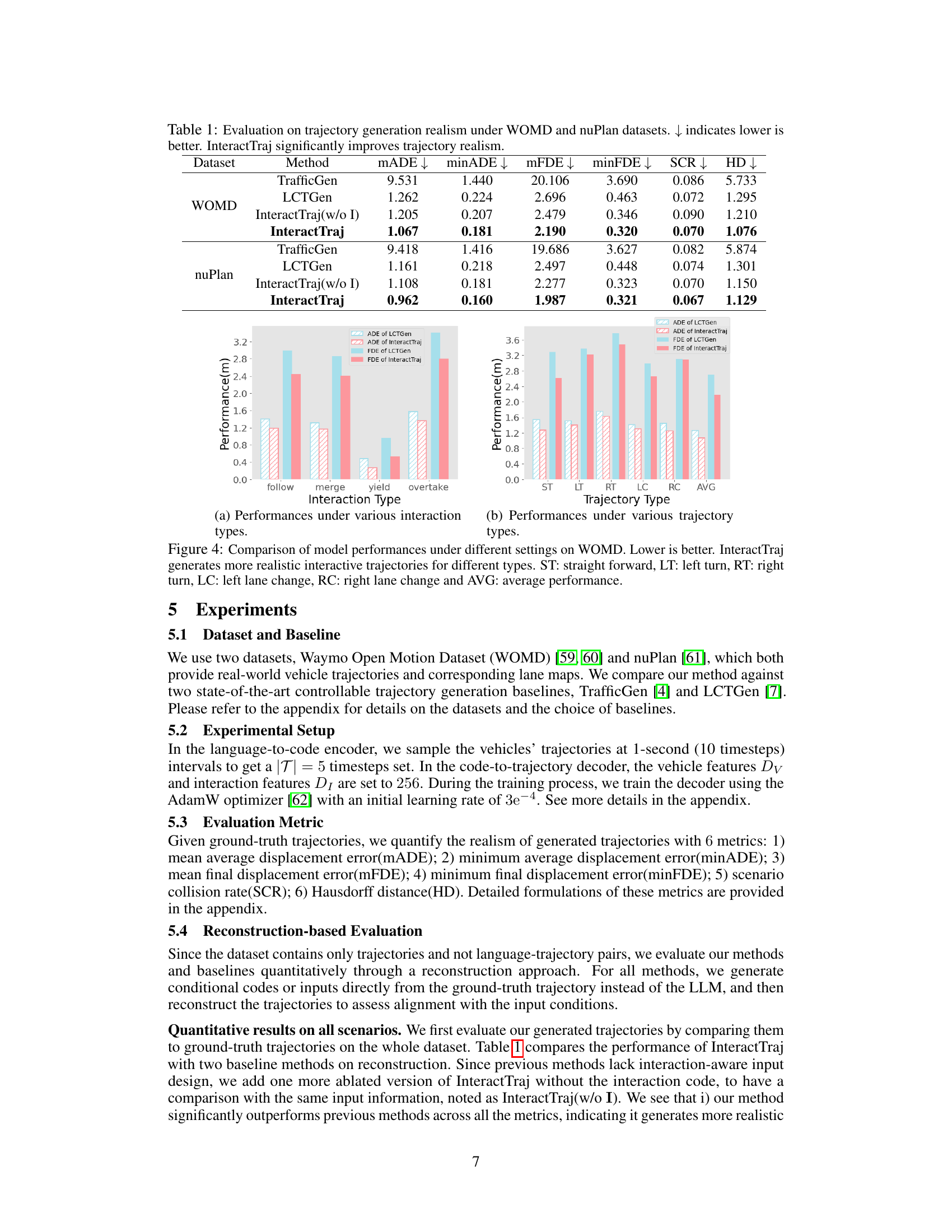

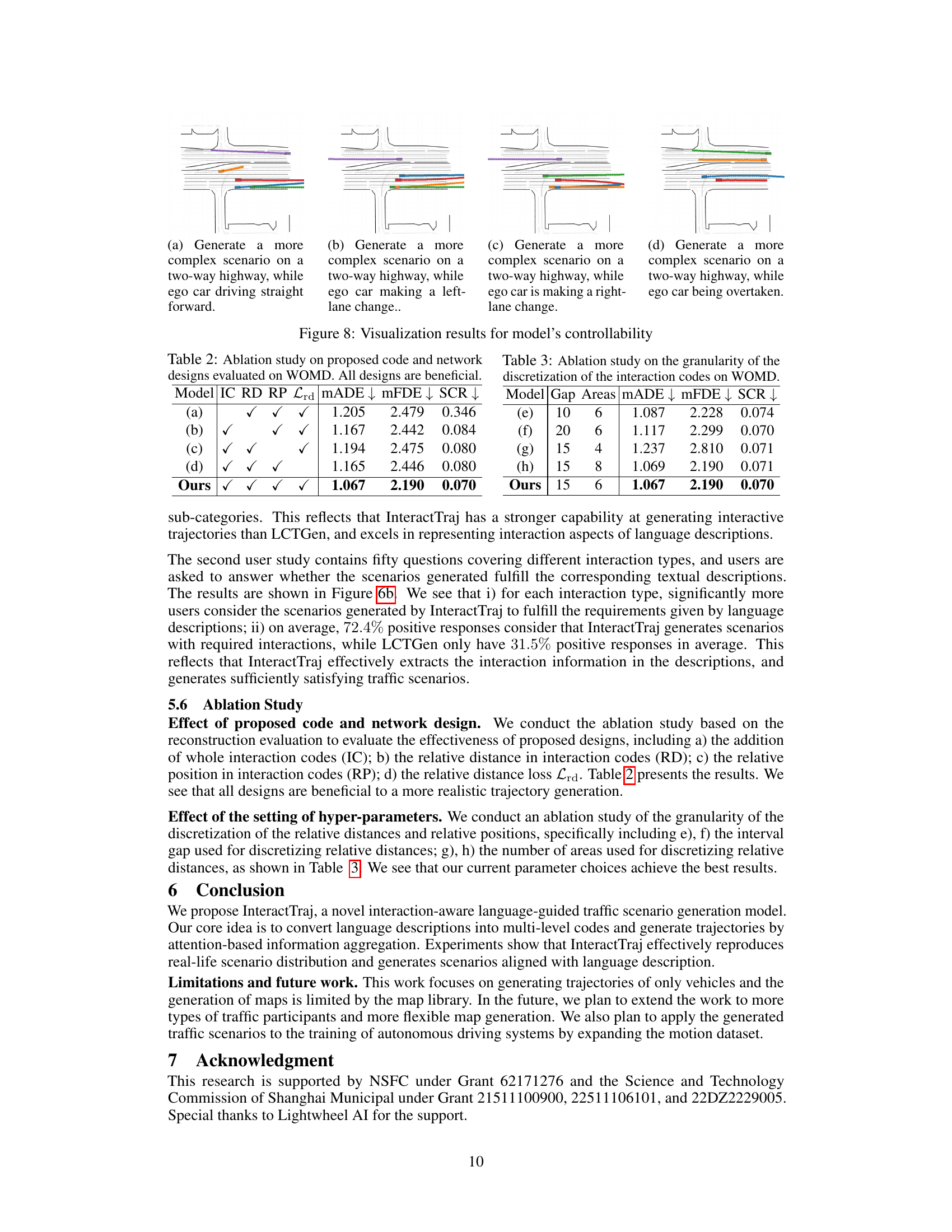

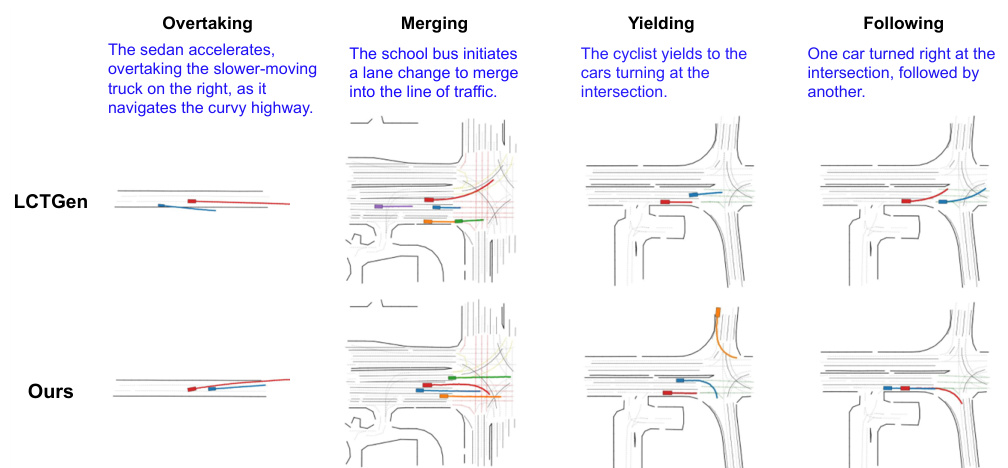

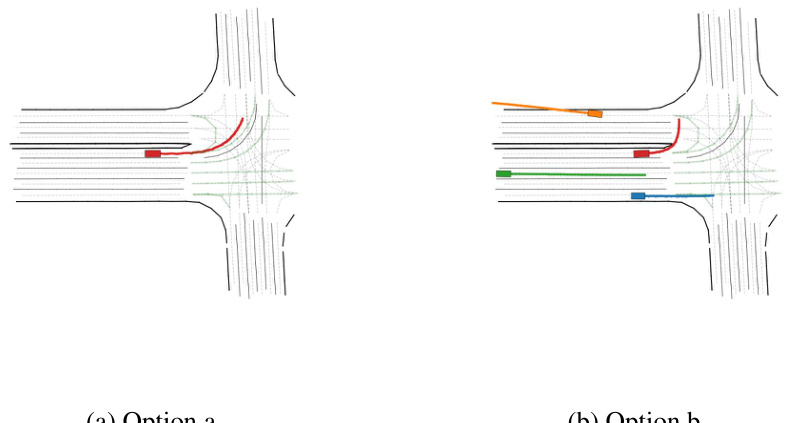

This figure compares the performance of InteractTraj and LCTGen on generating trajectories for different interaction types: overtaking, merging, yielding, and following. For each interaction type, a sample language description is given, and the resulting trajectories generated by both models are visualized. The visualizations show that InteractTraj’s generated trajectories more closely match the intended interaction described in the language prompt compared to LCTGen, which produces less realistic and less interactive trajectories.

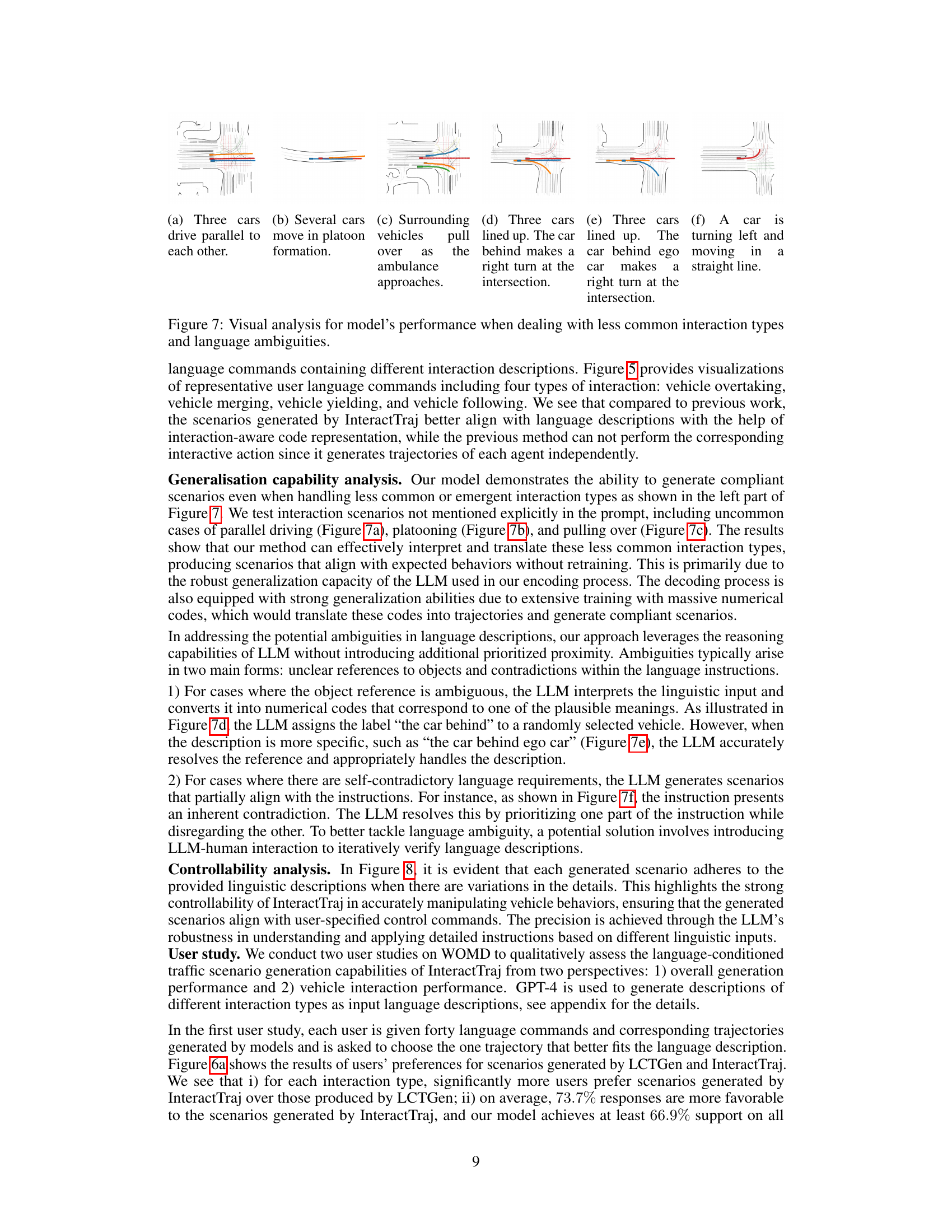

This figure presents the results of a user study comparing the performance of InteractTraj and LCTGen in generating traffic scenarios. The left bar chart shows the percentage of users who preferred the scenarios generated by each model across different interaction types (follow, merge, yield, overtake). The right bar chart displays the percentage of users who found the scenarios generated by each model to accurately reflect the interaction descriptions. InteractTraj consistently outperforms LCTGen in both aspects, highlighting its better performance in generating more realistic and interaction-aware traffic scenarios.

The figure shows the overall architecture of the InteractTraj model. It starts with a natural language description of a traffic scenario. This description is fed into an LLM-based language-to-code encoder, which transforms the description into three types of numerical codes: interaction codes, vehicle codes, and map codes. These codes are then input into a code-to-trajectory decoder, which generates the final interactive traffic trajectories. The figure highlights the key components and data flow within the InteractTraj model.

The figure illustrates the architecture of the code-to-trajectory decoder, a key component of InteractTraj. The decoder takes interaction codes, vehicle codes, and map codes as input. It processes these codes using feature extraction, two-step interaction-aware feature aggregation (incorporating cross-attention mechanisms), and a generation head to produce the final vehicle trajectories. The diagram visually represents the flow of information and the different processing stages within the decoder. This process fuses information about vehicle interactions with environmental map data to improve the realism and coherence of the generated trajectories.

The figure shows the architecture of the code-to-trajectory decoder, a key component of InteractTraj. This decoder takes interaction codes, vehicle codes, and map codes as input. It uses feature extraction to convert these codes into embeddings. A two-step interaction-aware feature aggregation process then fuses the map and interaction features with the vehicle features via cross-attention mechanisms. Finally, a generation head produces the interactive vehicle trajectories based on these fused features. The figure highlights the interplay between different feature types and how they contribute to generating coherent trajectories.

This figure shows the overall architecture of InteractTraj, a language-driven interactive traffic trajectory generation model. It consists of three main components: 1) a Language-to-Code Encoder that converts natural language descriptions of traffic scenarios into interaction-aware numerical codes; 2) an Interaction Code, Vehicle Code, and Map Code that represent the abstract trajectory descriptions in concrete numerical formats; and 3) a Code-to-Trajectory Decoder that takes these codes as input to generate interactive traffic trajectories. The figure highlights the interaction-aware nature of the model, which is a key feature that distinguishes it from previous methods.

This figure illustrates the overall architecture of the InteractTraj model. It shows how language descriptions are processed to generate interactive traffic trajectories. First, an LLM-based encoder transforms natural language into interaction-aware numerical codes (Interaction Code, Vehicle Code, and Map Code). These codes are then fed into a code-to-trajectory decoder, which uses these codes and map information to produce realistic and interactive traffic trajectories that reflect the original language description. The figure highlights the key components of the model and the flow of information between them.

The figure shows the architecture of the code-to-trajectory decoder in the InteractTraj model. The decoder takes interaction codes, vehicle codes, and map codes as input. These codes are processed through feature extraction modules to generate initial embeddings for interaction features, vehicle features, and map lane features. These features are then aggregated using cross-attention mechanisms. Interaction features are fused with vehicle features. The fused features are then passed through a generation head to produce the final vehicle trajectories.

More on tables

This ablation study investigates the impact of different components of InteractTraj on its performance. It evaluates the model’s performance when removing specific parts of the interaction code (Interaction Codes (IC), Relative Distance (RD), Relative Position (RP)), or the relative distance loss (Lrd) from the training process. The results show that including all these components leads to the best performance, suggesting their importance for generating realistic interactive traffic trajectories.

This ablation study investigates the impact of different granularities in discretizing relative distances and positions within interaction codes on the model’s performance. The results demonstrate that using a gap of 15 meters and dividing the space into 6 areas (as in the original model) yields optimal results, balancing the model’s ability to capture fine-grained interaction details without excessively increasing computational complexity.

Full paper#