↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Stochastic Convex Optimization (SCO) studies algorithms minimizing convex functions with noisy data. While some algorithms avoid overfitting even with limited data, the sample complexity of Gradient Descent (GD), a fundamental algorithm, remained unclear. Existing bounds showed either hyperparameter tuning was needed or there was a dimension dependency. This creates a gap in understanding the algorithm’s efficiency and generalization capability.

This research analyzes the sample complexity of GD in SCO. The authors prove a new generalization bound showing that GD’s generalization error is Õ(d/m + 1/√m), where ’d’ is the dimension and ’m’ the sample size. This matches the sample complexity of worst-case ERMs, implying GD has no inherent advantage. The study resolves an open problem by showing that a linear dimension dependence is necessary. The bound highlights the impact of hyperparameters and the need for sufficient iterations to avoid overfitting.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in stochastic convex optimization and machine learning because it resolves a long-standing open problem regarding the sample complexity of gradient descent (GD). It bridges the gap between existing upper and lower bounds, providing a tighter understanding of GD’s generalization capabilities. This work directly impacts the design and analysis of new optimization algorithms and offers new avenues for research in overparameterized learning.

Visual Insights#

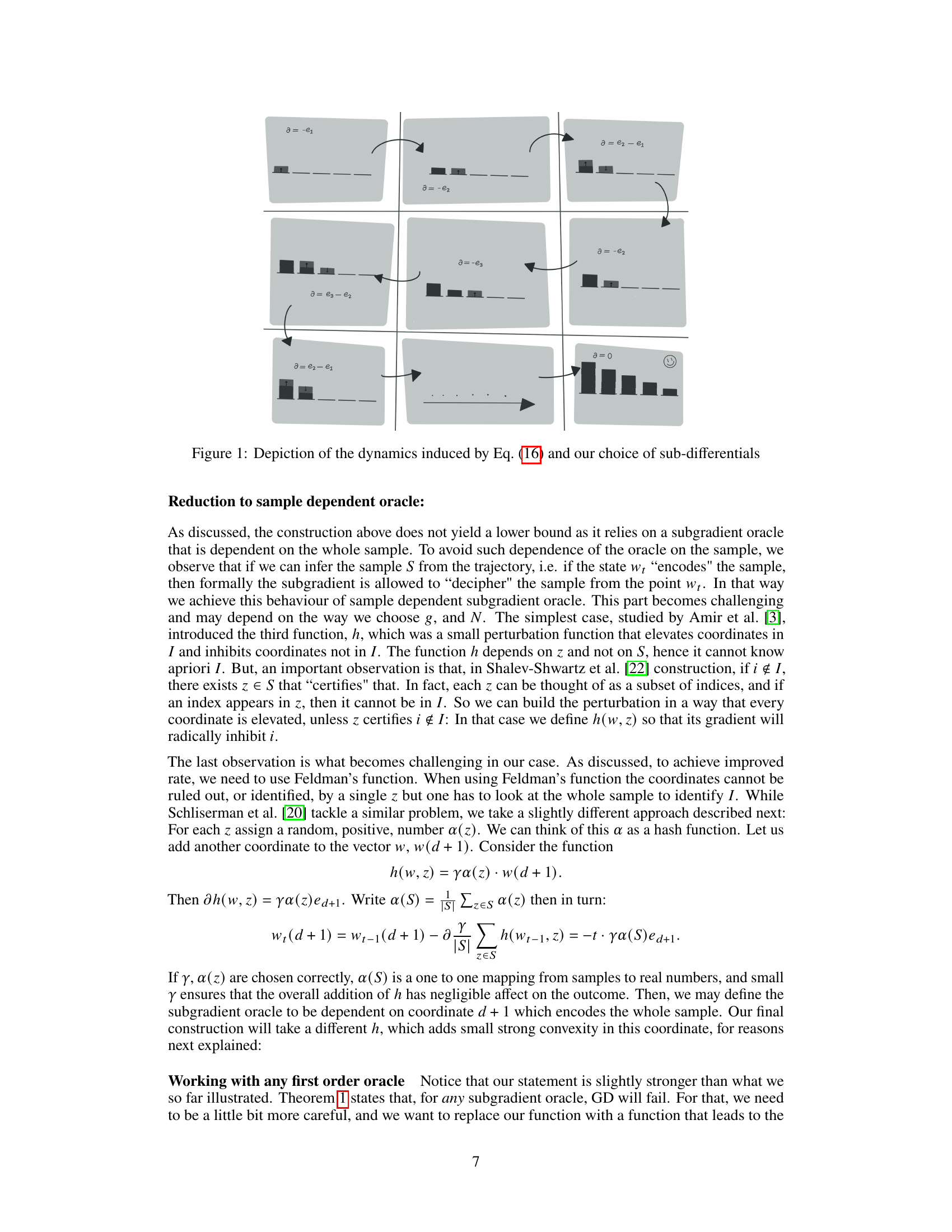

This figure shows a graphical illustration of how the algorithm’s dynamics are influenced by equation 16 and the specific sub-differential choices made. Each cell in the grid represents a step in the algorithm’s execution, visualizing how the coordinate values change in response to the selected sub-gradients. The dark-shaded blocks correspond to activated coordinates and their relative magnitudes. The arrows indicate the transitions between steps. The smiley face in the bottom right corner symbolizes the convergence of the algorithm.

Full paper#