↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Traditional meta-learning struggles with cross-domain few-shot learning due to overfitting. This happens because the source and target tasks have different data distributions. To overcome this issue, many meta-learning methods focus on learning task-specific priors, which are difficult to transfer across different domains. This paper introduces a novel method to address this challenge.

The proposed method, Meta-Exploiting Frequency Prior, uses a new frequency-based framework. It decomposes each image into high and low-frequency components. These components, alongside the original image, are fed into a meta-learning network. The key is that it uses feature reconstruction and prediction consistency priors to enhance the network’s ability to learn features that generalize well to unseen tasks. This method significantly improves the accuracy and efficiency of meta-learning in cross-domain scenarios, achieving state-of-the-art results on multiple benchmarks. This method doesn’t add extra computational cost during inference.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on few-shot learning and cross-domain generalization. It tackles the critical issue of meta-learning overfitting by introducing a novel frequency-based framework, significantly advancing the state-of-the-art. The proposed method’s efficiency and effectiveness pave the way for new research into cross-domain generalization techniques and their applications in various fields. It also inspires further investigation into using frequency domain information to guide meta-learning.

Visual Insights#

The figure illustrates the framework of the proposed method for cross-domain few-shot learning. It’s composed of two main modules: the Image Decomposition Module (IDM) and the Prior Regularization Meta-Network (PRM-Net). The IDM splits input images into low and high-frequency components using FFT. The PRM-Net processes these components and the original image through separate branches, applying prediction consistency and feature reconstruction priors during meta-training to enhance generalization. Only the main branch is used during inference.

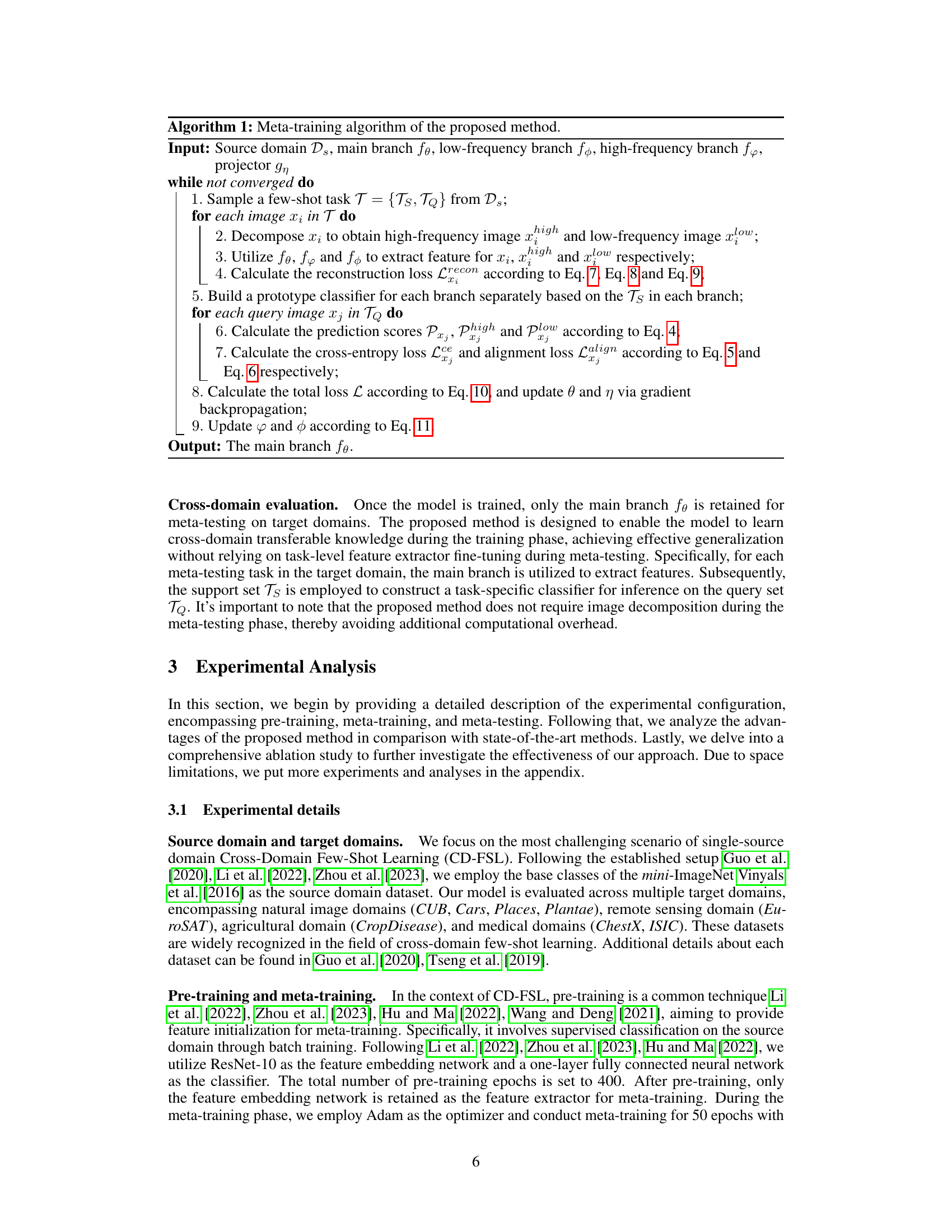

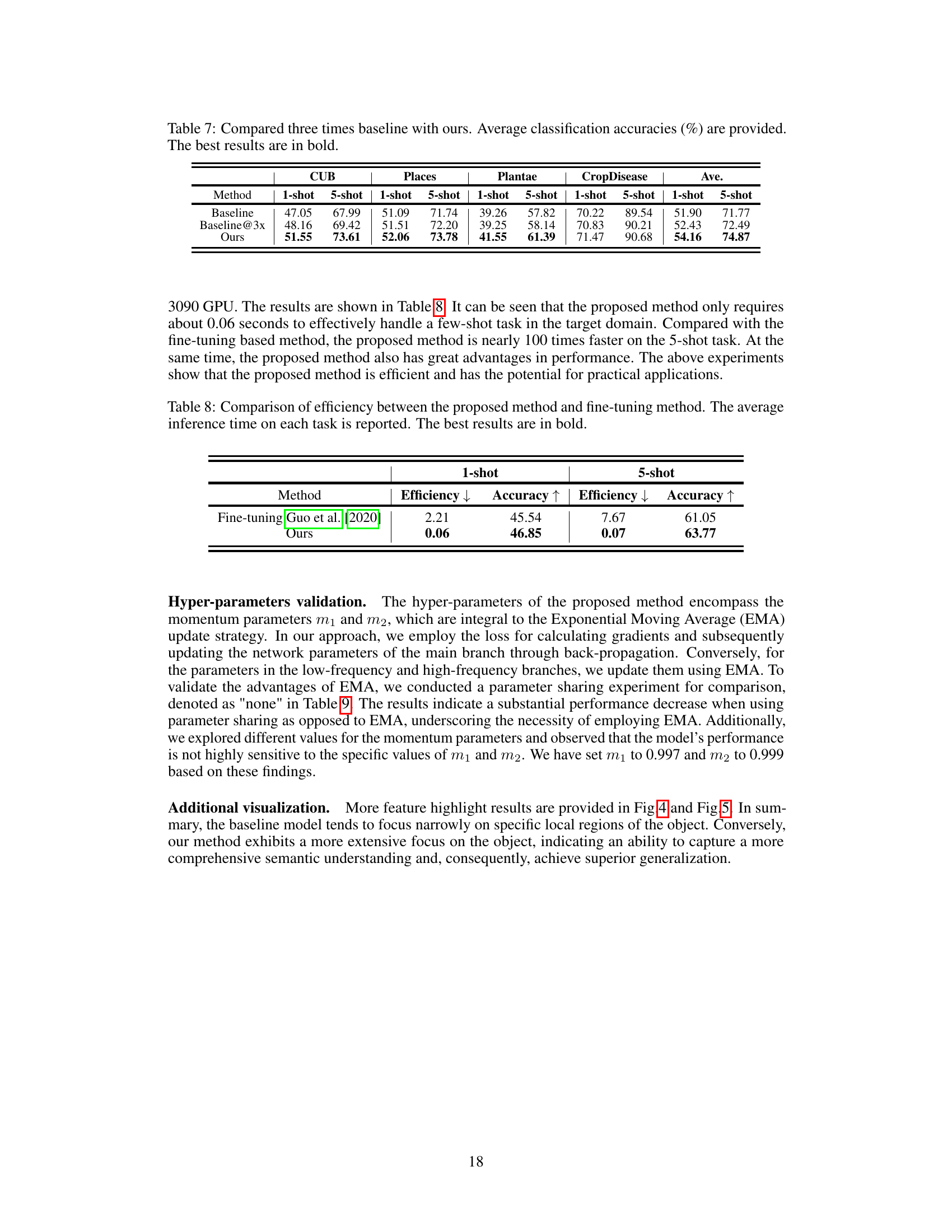

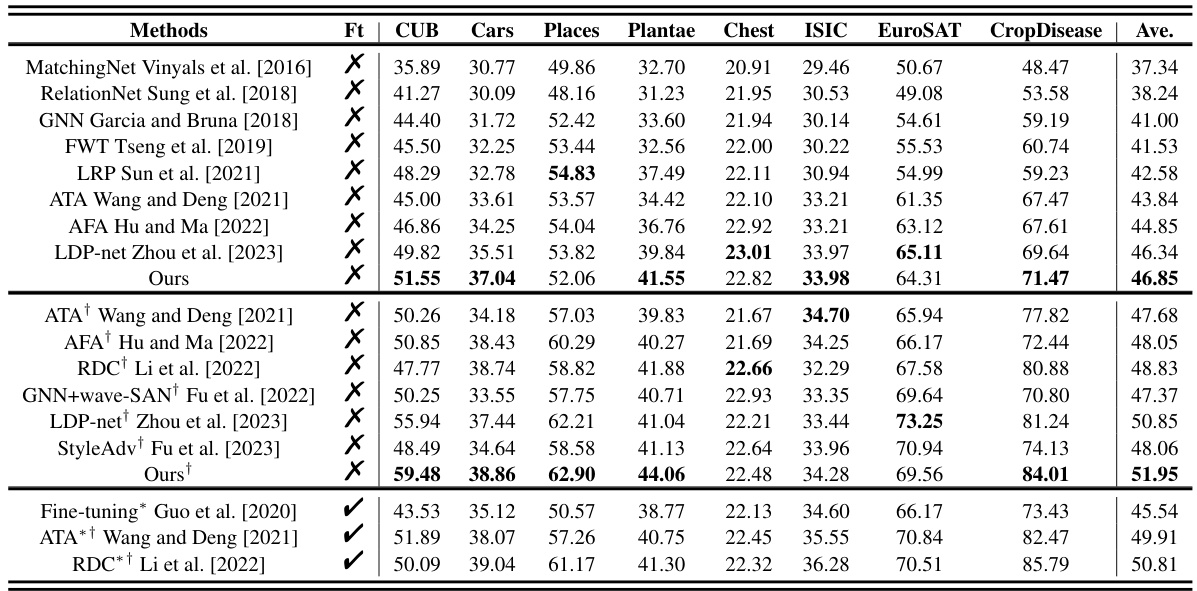

This table compares the proposed method’s performance with several state-of-the-art methods on a 5-way 1-shot cross-domain few-shot learning (FSL) task. It shows the average classification accuracy across multiple target domains (CUB, Cars, Places, Plantae, ChestX, ISIC, EuroSAT, CropDisease). The table indicates whether methods fine-tune their feature embedding networks on each target domain and whether they use the full dataset for training. The best performance for each domain is highlighted in bold.

In-depth insights#

Freq Prior Meta-Learn#

The heading ‘Freq Prior Meta-Learn’ suggests a novel approach to meta-learning that leverages frequency-based image priors. This implies a method where images are decomposed into frequency components (e.g., low and high-frequency bands) before meta-training. The use of frequency priors likely aims to improve the generalizability of the learned features by encoding domain-invariant information present in the frequency representation of images. This would be especially beneficial in cross-domain few-shot learning scenarios, where the distribution of images in the target domain differs significantly from the source domain. The ‘Meta-Learn’ aspect indicates that the model is trained using an episodic meta-learning approach, adapting its parameters to various tasks in the source domain, aiming to learn a generalizable representation. The combination of frequency priors and meta-learning offers a unique way to address challenges of traditional meta-learning, reducing overfitting and increasing robustness to cross-domain variation. This framework is likely designed to extract complementary information from different frequency components of images, enhancing overall feature representation for better classification performance in few-shot settings.

Cross-Domain FSL#

Cross-Domain Few-Shot Learning (FSL) tackles the challenge of training machine learning models effectively using limited labeled data, particularly when the training and testing data come from different domains. This poses a significant hurdle due to the domain shift, where the statistical distributions of features differ substantially between the source (training) and target (testing) domains. Standard FSL techniques often struggle in this context, exhibiting overfitting to the source domain and poor generalization to unseen target domains. Effective cross-domain FSL methods require mechanisms to reduce domain discrepancy and learn domain-invariant features. This might involve techniques like domain adaptation, transfer learning, or utilizing domain-specific image priors (e.g., frequency components). Invariant feature extraction is key—identifying features that are robust across domains and less sensitive to distribution shifts. Addressing the overfitting issue is crucial, and techniques to ensure the model generalizes well from source to target data are vital. The research in this area focuses on developing innovative architectures and training strategies to improve model robustness and adaptability in cross-domain FSL scenarios.

Invariant Priors#

Invariant priors, in the context of cross-domain few-shot learning, represent a powerful concept for enhancing model generalization. They refer to properties of data that remain consistent across different domains, guiding the model to learn domain-invariant features rather than those specific to the training data. Exploiting such priors is crucial for addressing overfitting in cross-domain scenarios, where models trained on one domain often struggle to generalize to another with different characteristics. The effectiveness of invariant priors lies in their ability to bridge the gap between source and target domains, enabling the model to learn transferable knowledge that transcends domain-specific biases. Frequency-based priors, as explored in this paper, offer a particularly promising avenue for developing these invariant features. By decomposing images into low and high-frequency components and incorporating both into the learning process, models can capture robust structural information and content details that are less susceptible to domain shifts. This approach leverages the understanding that high-frequency components often reflect structural properties, while low-frequency components capture content details, leading to more generalizable representations. Consequently, the incorporation of invariant priors allows for the development of more robust and effective few-shot learning models that are capable of handling variations in data distributions.

Frequency Regularization#

Frequency regularization, in the context of cross-domain few-shot learning, is a technique aimed at improving model generalization by leveraging the inherent properties of image frequency components. The core idea is to decompose images into low and high-frequency parts, recognizing that low-frequency components often capture content details and high-frequency components represent robust structural information. This decomposition allows the model to independently learn from these complementary aspects, enhancing its ability to transfer knowledge between domains. To further refine this learning process, prediction consistency and feature reconstruction priors can be introduced. These priors encourage consistent predictions across the different frequency components and the original image, thus preventing overfitting to specific characteristics of the source domain. This approach leads to the learning of more generalizable features, improving performance in cross-domain scenarios. The absence of additional computational costs in inference is a significant advantage of this method, making it more practical for real-world applications.

Future Work#

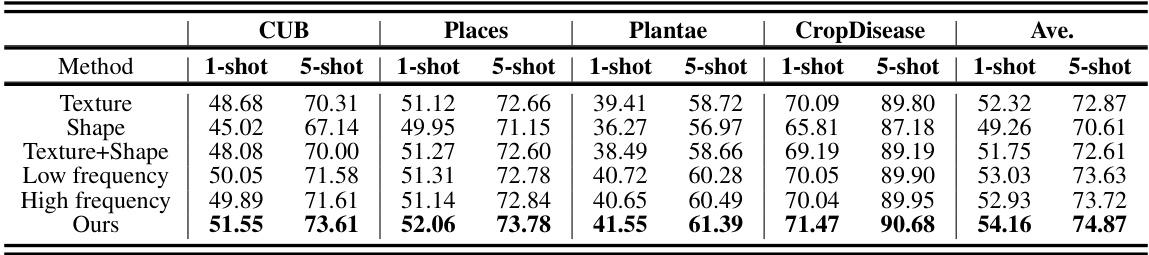

Future research directions stemming from this work could involve exploring alternative frequency decomposition methods beyond FFT, potentially incorporating learnable transformations for improved adaptability across diverse domains. Investigating the impact of different frequency bands on the model’s robustness to various types of noise and distortions would also be valuable. The effectiveness of the proposed frequency priors could be further enhanced by integrating them with other types of priors, such as shape or texture priors, creating a more comprehensive representation learning framework. Finally, applying this framework to other challenging cross-domain few-shot learning tasks, such as medical image analysis or remote sensing, would demonstrate its broader applicability and potentially reveal limitations needing further development. A thorough investigation into the computational efficiency of the method for large-scale datasets is necessary, as is exploring techniques to further minimize computational costs while maintaining performance.

More visual insights#

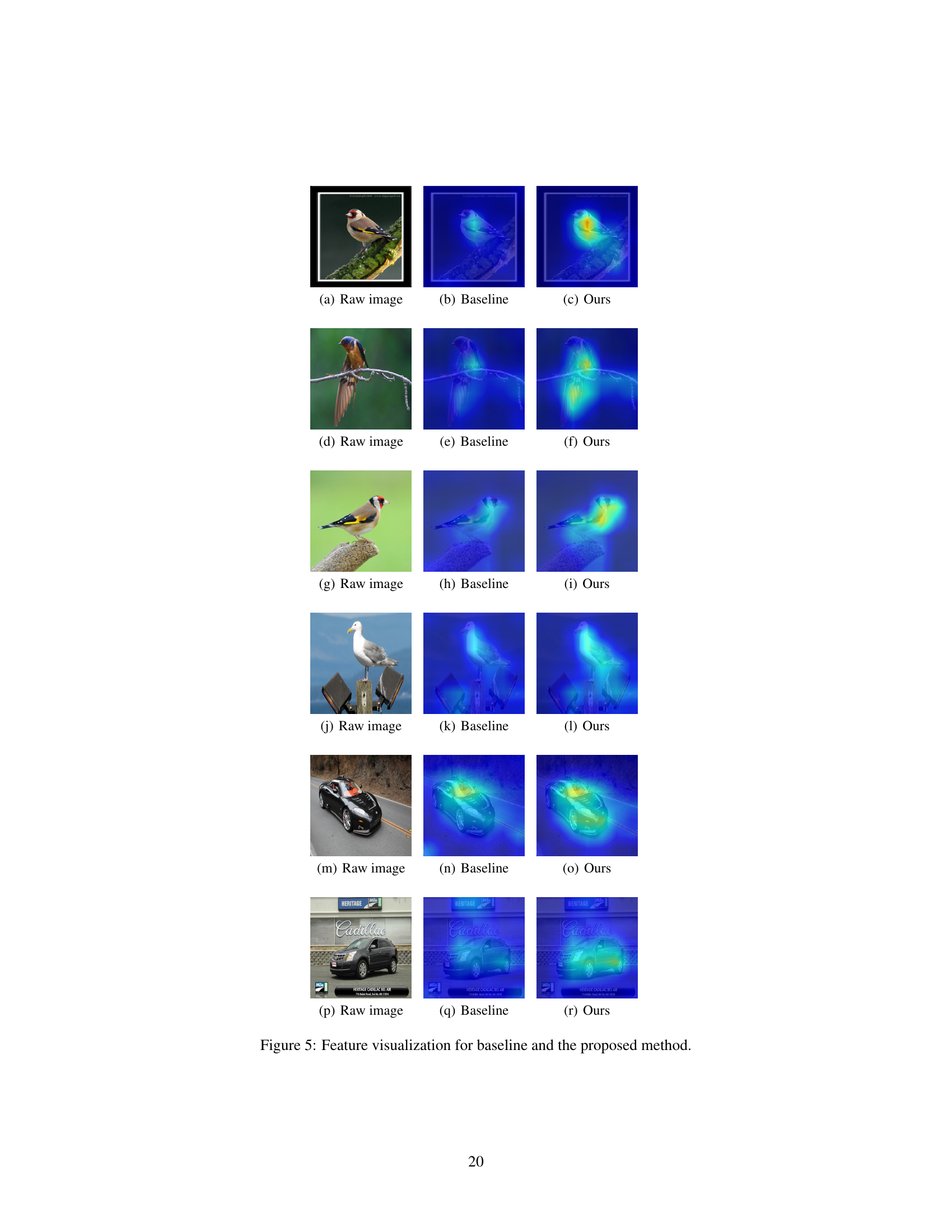

More on figures

This figure shows the framework of the proposed method for cross-domain few-shot learning. It consists of two main modules: an Image Decomposition Module (IDM) which splits images into high and low-frequency components, and a Prior Regularization Meta-Network (PRM-Net) that uses these components to learn generalizable image features. The PRM-Net uses prediction consistency and feature reconstruction priors to guide meta-learning, and only the main branch is used for testing.

The figure illustrates the architecture of the proposed meta-learning framework for cross-domain few-shot learning. It shows two main modules: an Image Decomposition Module (IDM) that separates images into low and high-frequency components, and a Prior Regularization Meta-Network (PRM-Net) that uses these components and two novel priors (prediction consistency and feature reconstruction) to guide the meta-learning process and learn more generalizable features. Only the main branch is used during the inference stage.

This figure shows the overall framework of the proposed method for cross-domain few-shot learning. It uses an image decomposition module to separate high and low-frequency components of images, feeding these into a prior regularization meta-network. This network uses prediction consistency and feature reconstruction priors to regularize the meta-learning process, aiming for better generalization across domains. During inference, only the main branch of the network is used.

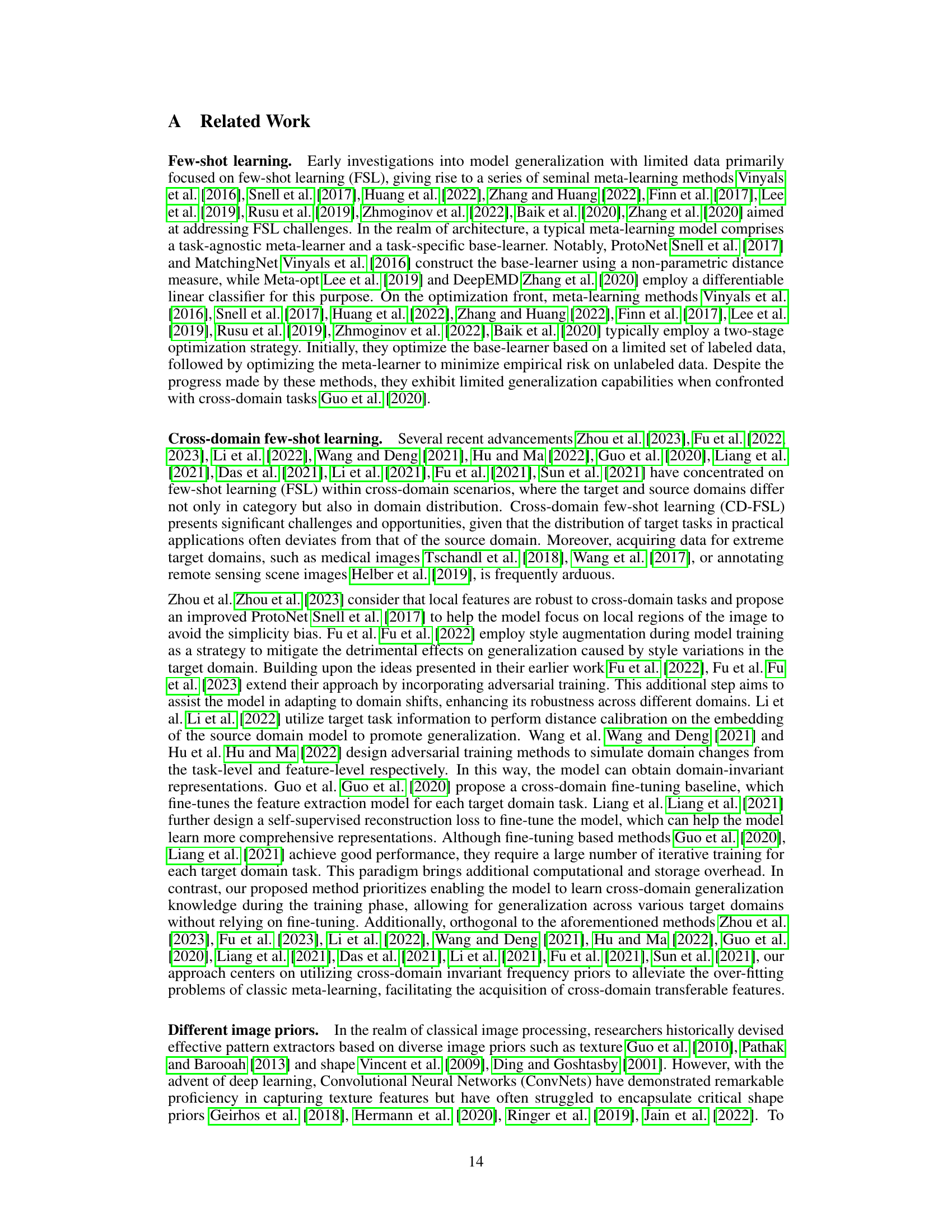

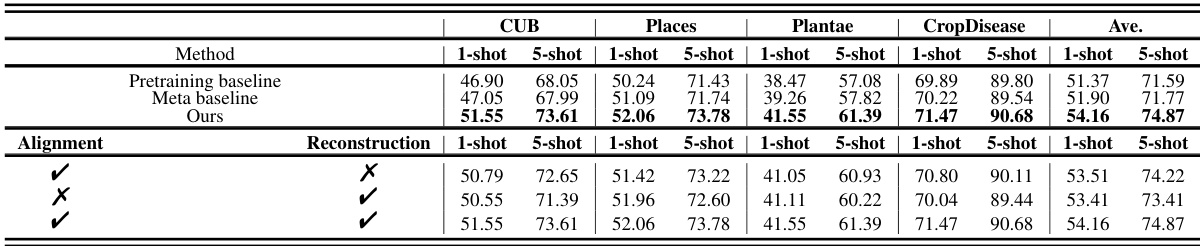

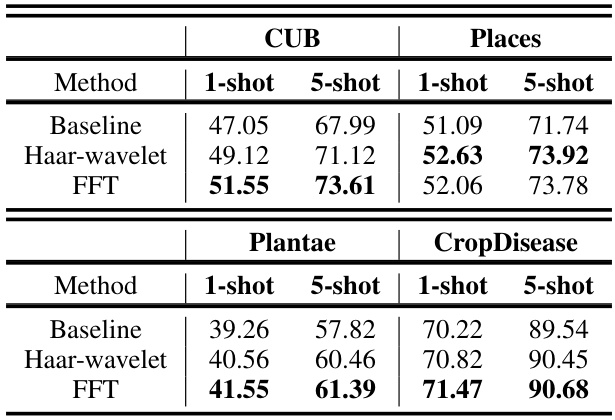

More on tables

This table compares the proposed method’s performance with several state-of-the-art methods on cross-domain few-shot learning (CD-FSL) tasks. It shows the average classification accuracy across eight different target domains (CUB, Cars, Places, Plantae, ChestX, ISIC, EuroSAT, CropDisease) for a 5-way 1-shot setting (5 classes, 1 training example per class, multiple testing examples). The table notes whether methods fine-tune their feature embedding network on each target domain and indicates which methods use the full dataset for training.

This table compares the proposed method’s performance with existing state-of-the-art methods on a 5-way 1-shot cross-domain few-shot learning (FSL) task. It shows the average classification accuracy across multiple target datasets (CUB, Cars, Places, Plantae, ChestX, ISIC, EuroSAT, CropDisease). The table highlights the proposed method’s superior performance, even without fine-tuning the feature embedding network on each target domain, compared to other methods that either use fine-tuning or access to full task data. The best results for each target dataset are marked in bold.

This table compares the proposed method with other state-of-the-art methods on 5-way 1-shot cross-domain few-shot learning (FSL) tasks. It shows the average classification accuracy across various target domains (CUB, Cars, Places, Plantae, ChestX, ISIC, EuroSAT, CropDisease). The table indicates whether methods used fine-tuning (*), exploited full FSL task data(†), and highlights the best performing methods in bold. The results demonstrate the effectiveness of the proposed method compared to existing approaches.

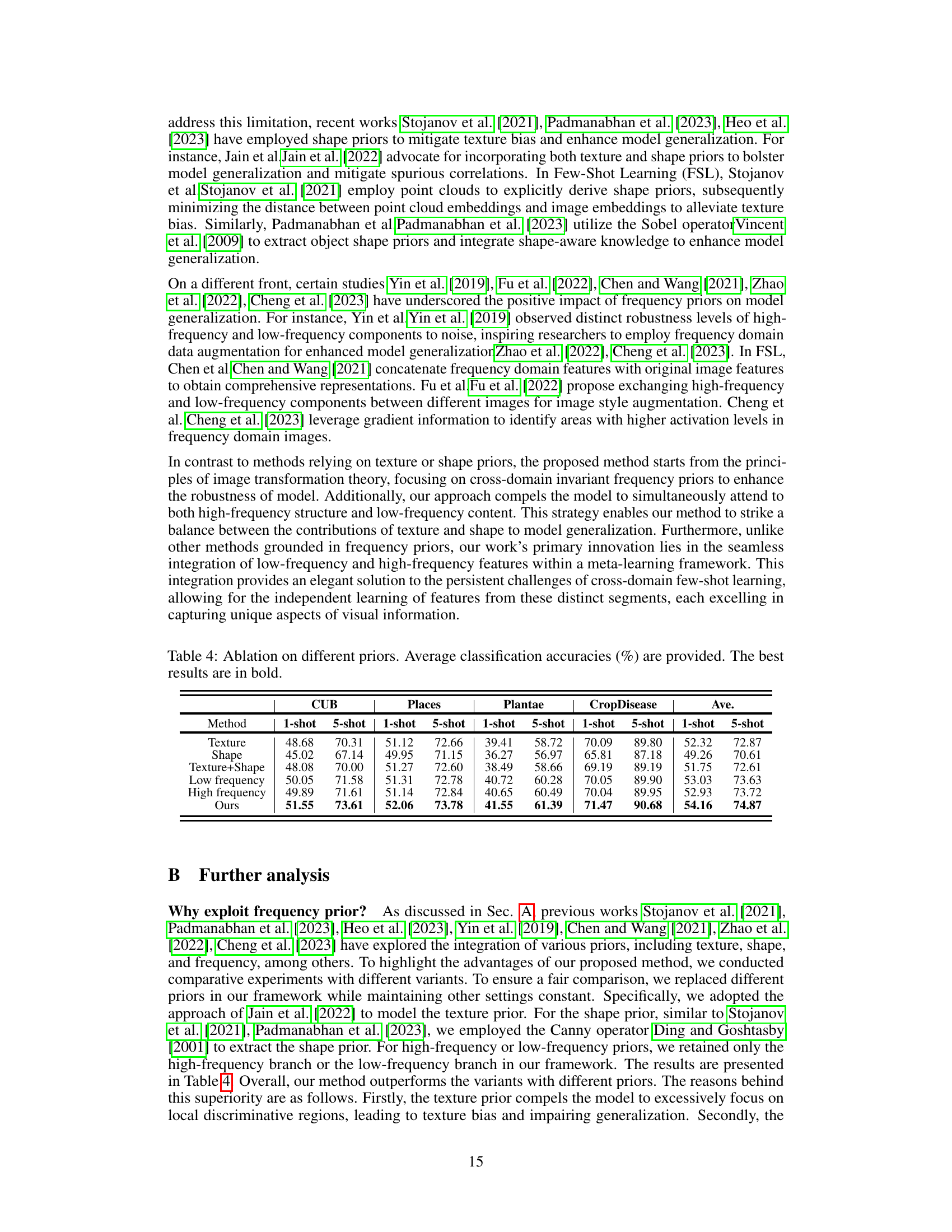

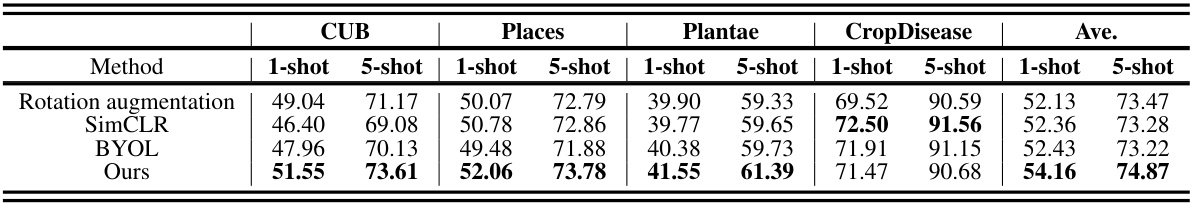

This table compares the proposed method’s performance with other data augmentation techniques (Rotation augmentation) and self-supervised learning approaches (SimCLR, BYOL) on five target domains (CUB, Places, Plantae, CropDisease). The results are presented as average classification accuracies for 1-shot and 5-shot settings, highlighting the superior performance of the proposed method.

This table compares the proposed method’s performance with other state-of-the-art methods on 5-way 5-shot cross-domain few-shot learning (FSL) tasks. It shows the average classification accuracy across eight different target domains (CUB, Cars, Places, Plantae, ChestX, ISIC, EuroSAT, CropDisease). The table indicates whether methods used fine-tuning on each target domain and whether they used the full dataset. The best results for each target domain are highlighted in bold.

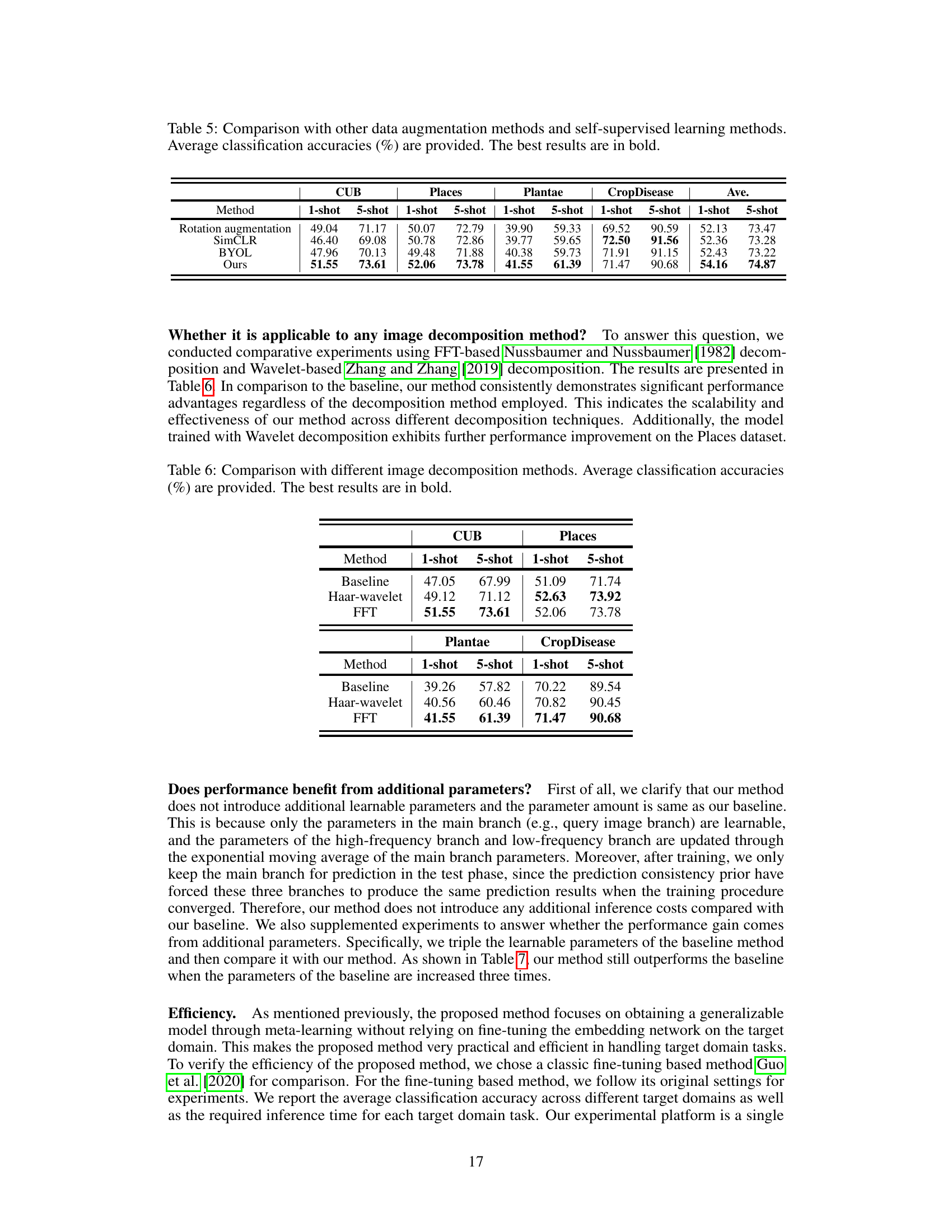

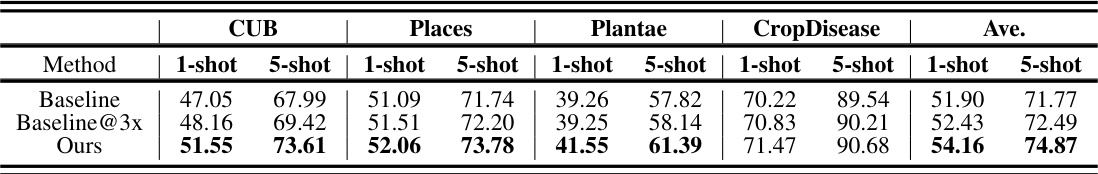

This table compares the performance of the proposed method against a baseline model with three times the number of parameters. The average classification accuracy across eight target domains (CUB, Cars, Places, Plantae, Chest, ISIC, EuroSAT, and CropDisease) is presented for both 1-shot and 5-shot scenarios. The results show that the proposed method achieves higher accuracy even when compared to a significantly larger baseline model.

This table compares the proposed method’s performance with other state-of-the-art methods on 5-way 1-shot cross-domain few-shot learning tasks. It shows the average classification accuracy across eight different target domains (CUB, Cars, Places, Plantae, ChestX, ISIC, EuroSAT, and CropDisease). The table indicates whether each method uses fine-tuning and whether it utilizes the full dataset, and highlights the best results for each domain.

This table compares the proposed method’s performance against several state-of-the-art methods on a 5-way 1-shot cross-domain few-shot learning (FSL) benchmark. It shows the average classification accuracy across multiple target domains (CUB, Cars, Places, Plantae, ChestX, ISIC, EuroSAT, CropDisease) for each method. The table highlights whether the methods used fine-tuning on the target domains and if they used the full dataset for training, providing context for the results. The best-performing method for each dataset is shown in bold.

Full paper#