↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Current 3D occupancy prediction methods heavily rely on LiDAR or cameras, making them vulnerable to bad weather. This limits the deployment of autonomous vehicles in all conditions. Existing radar-based approaches often use sparse point clouds, losing essential scene details.

RadarOcc directly processes the richer 4D radar tensor, addressing the challenges of noise and volume through innovative techniques like Doppler bin descriptors and range-wise self-attention. The spherical feature encoding minimizes interpolation errors, leading to superior performance compared to LiDAR or camera-based methods, especially in challenging weather. The paper presents a novel pipeline and validates its efficacy through extensive experiments and ablation studies on the K-Radar dataset.

Key Takeaways#

Why does it matter?#

This paper is crucial because it pioneers the use of 4D imaging radar for 3D occupancy prediction, a significant advancement over LiDAR and camera-based methods. This opens new avenues for all-weather autonomous driving, addresses limitations of existing techniques, and offers superior performance in adverse conditions. The detailed methodology and benchmark results provide valuable insights for researchers working on radar-based perception.

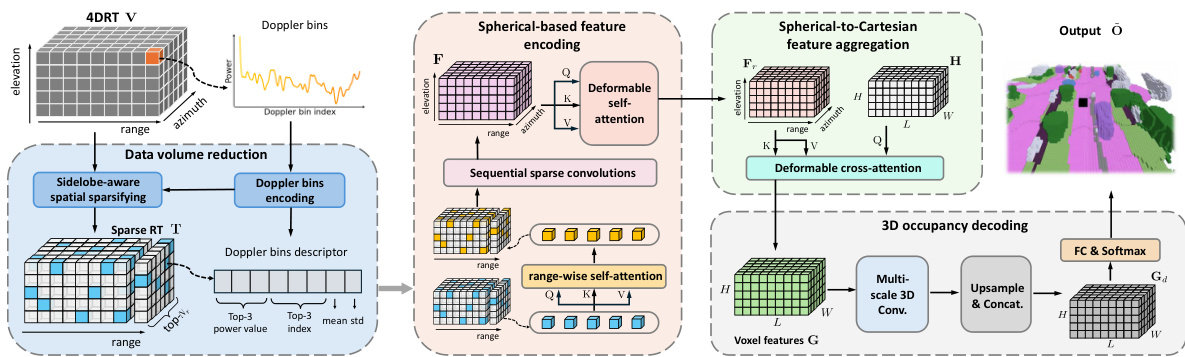

Visual Insights#

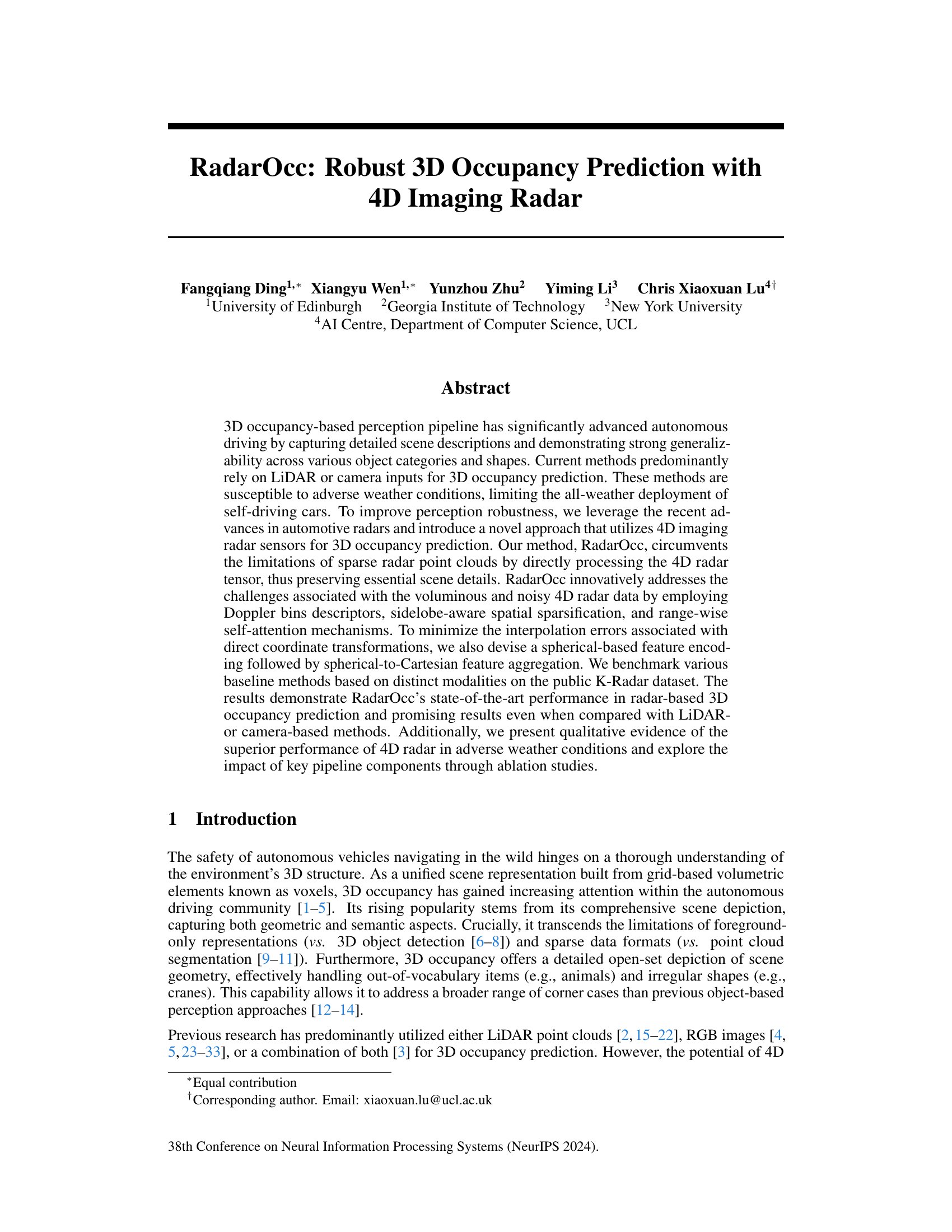

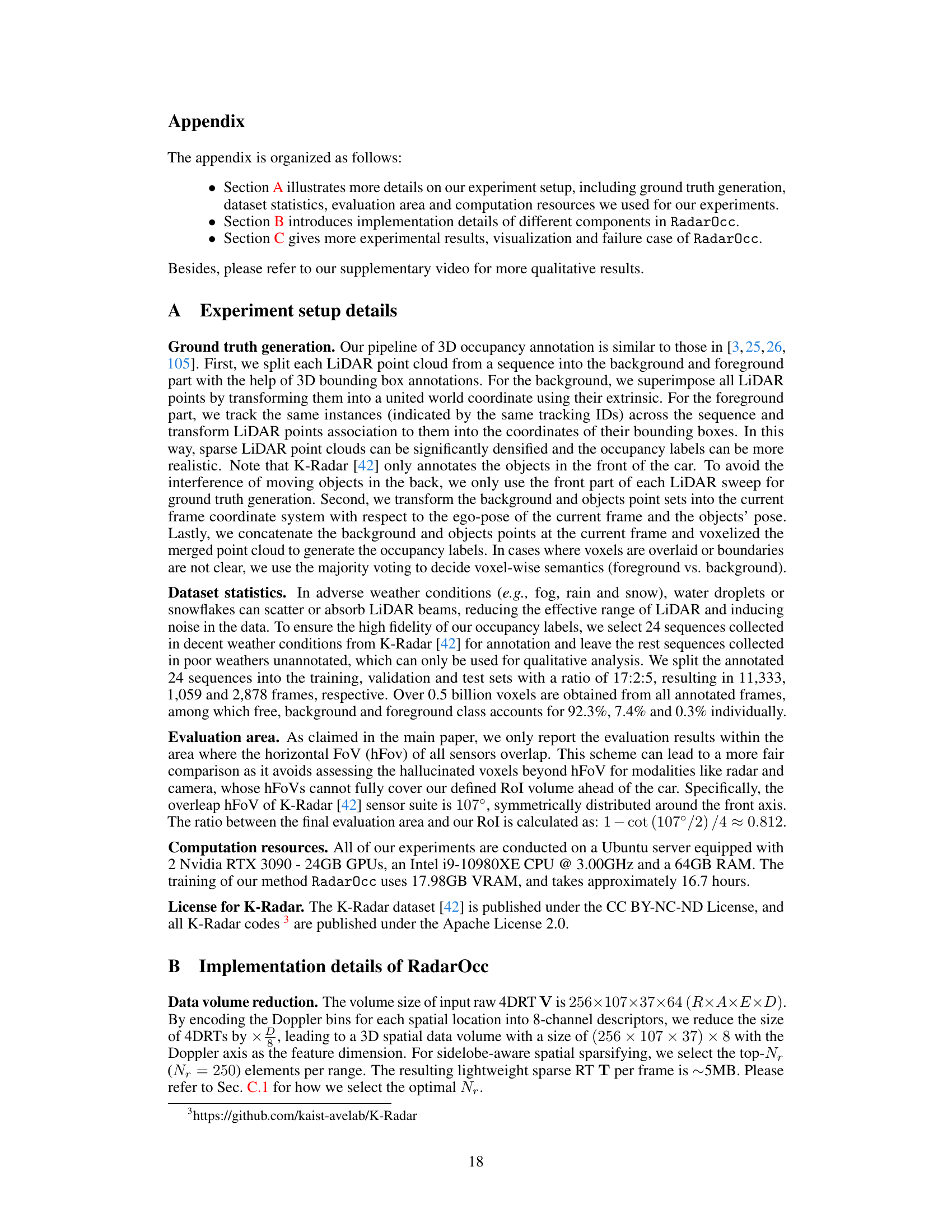

This figure shows the overall pipeline of the proposed RadarOcc model for 3D occupancy prediction using 4D imaging radar data. The pipeline consists of four main stages: 1. Data Volume Reduction: This stage reduces the size of the massive 4D radar tensor (4DRT) by encoding Doppler bins (frequency information) into descriptors and applying a sidelobe-aware spatial sparsification technique. This removes redundant and noisy information while preserving key spatial features. 2. Spherical-based Feature Encoding: The sparsified data is then processed using a spherical-based feature encoding method. This avoids the loss of information often associated with converting spherical radar coordinates to Cartesian coordinates. This process employs sequential sparse convolutions and deformable self-attention to extract relevant features. 3. Spherical-to-Cartesian Feature Aggregation: This stage seamlessly integrates the spherical features with Cartesian voxel queries through deformable cross-attention, enabling the model to predict occupancy in the desired 3D Cartesian grid. 4. 3D Occupancy Decoding: Finally, multi-scale 3D convolutions and upsampling are used to decode the aggregated features and generate the final 3D occupancy prediction.

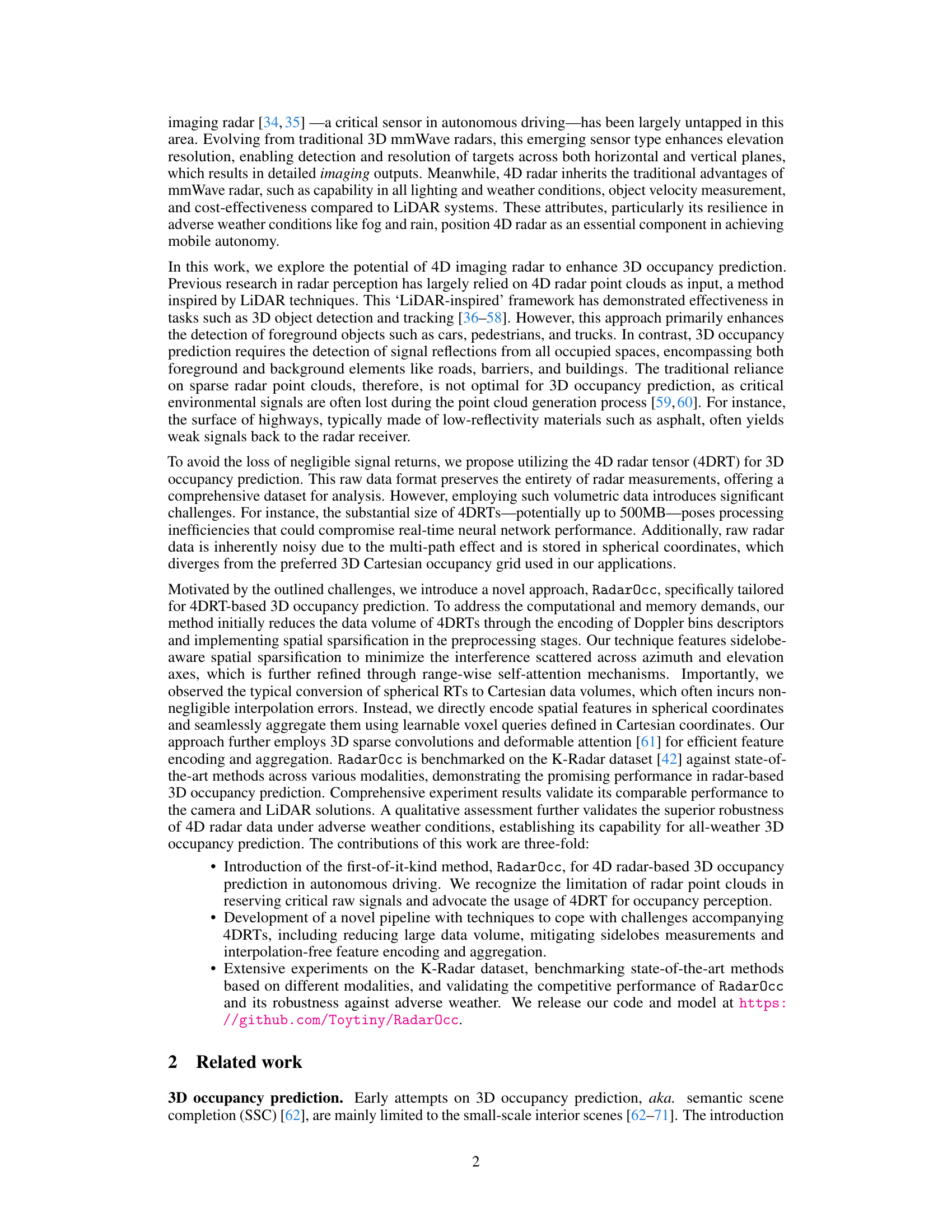

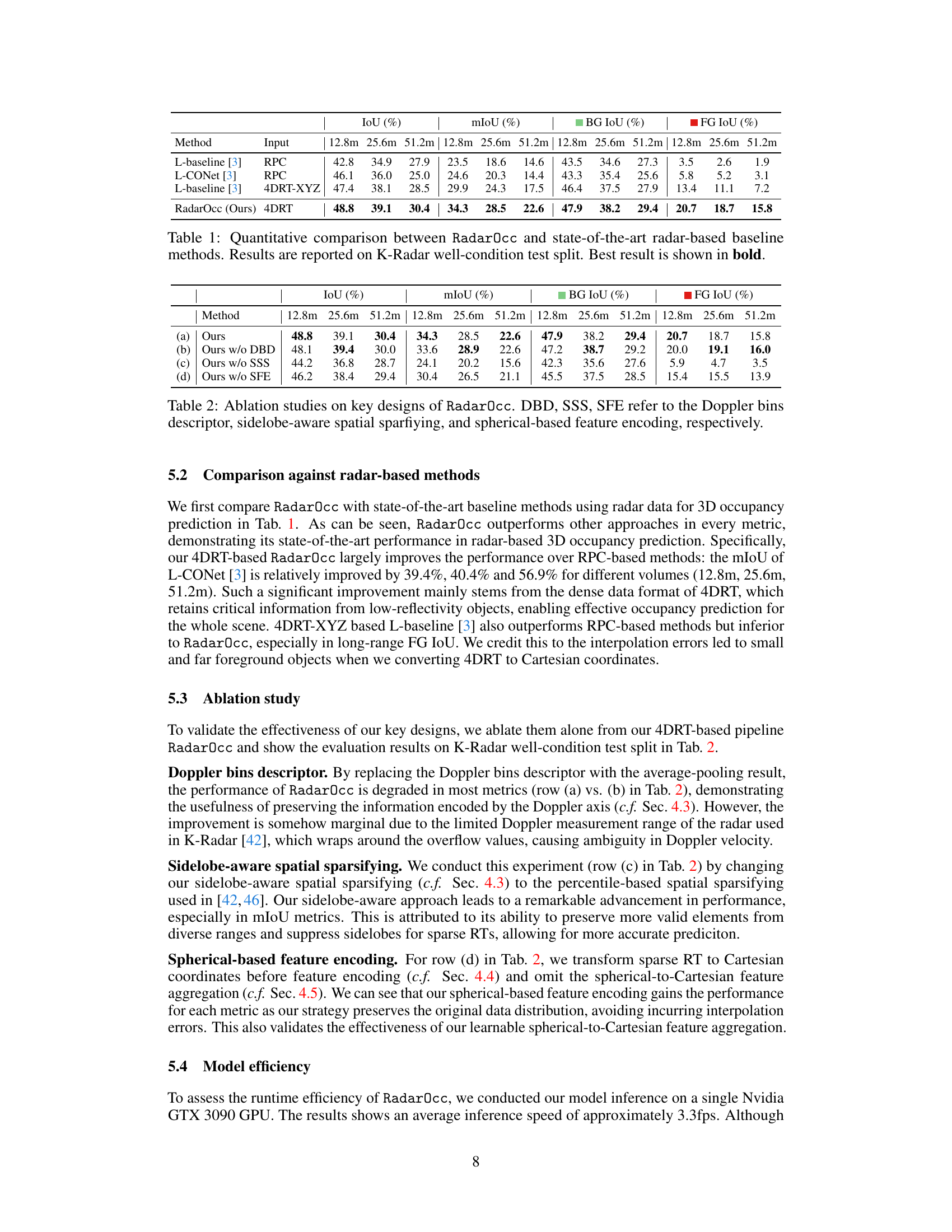

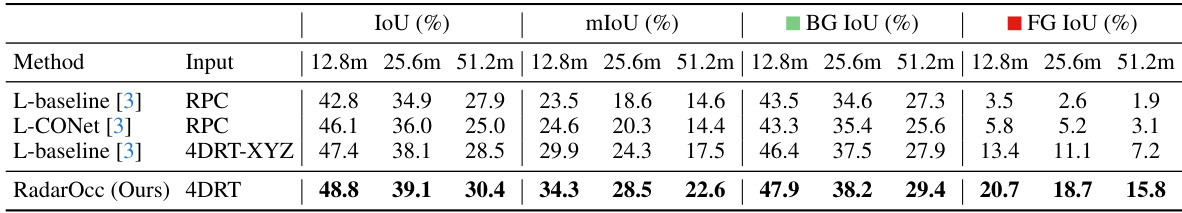

This table presents a quantitative comparison of the proposed RadarOcc method against existing state-of-the-art radar-based methods for 3D occupancy prediction. The results are evaluated using the Intersection over Union (IoU) metric across three different ranges (12.8m, 25.6m, and 51.2m), and broken down into overall IoU, mean IoU (mIoU), background IoU (BG IoU), and foreground IoU (FG IoU). The best performance is highlighted in bold, demonstrating RadarOcc’s superior performance compared to other radar-based methods.

In-depth insights#

4D Radar Sensing#

Four-dimensional (4D) radar sensing represents a significant advancement in automotive perception, offering several key advantages over traditional 3D radar and other sensor modalities like LiDAR and cameras. 4D radar’s enhanced elevation resolution provides a more detailed scene representation, capturing both horizontal and vertical planes, resulting in richer point cloud data. This is particularly beneficial for autonomous driving, enabling more precise object detection and classification. Its all-weather capabilities are a major advantage over LiDAR and cameras, as it performs reliably even in adverse conditions like fog, rain, or snow. Furthermore, 4D radar systems are generally more cost-effective than LiDAR, making them a potentially more accessible and widely deployable technology for autonomous vehicles. However, challenges remain, primarily concerning the processing of large volumes of noisy data. Efficiently extracting meaningful information from 4D radar’s high-dimensional data tensors is crucial for real-time applications. Addressing these computational challenges is a key area of current research, particularly with the rise of neural network-based processing techniques. Thus, while the technology holds great promise, effective computational techniques are crucial to unlocking its full potential in autonomous navigation.

Sparse RT Encoding#

Sparse RT encoding is a crucial preprocessing step for efficient and effective 3D occupancy prediction using 4D imaging radar. The raw 4D radar tensor (RT) is typically massive, posing significant computational challenges. This encoding method directly addresses this issue by reducing the dimensionality and data volume of the raw RT while preserving essential information. Instead of relying on the traditional, sparse point cloud representation which discards important data (e.g., low-reflectivity surface details), a sparse RT encoding approach retains this valuable information, leading to a much richer input representation for the downstream occupancy prediction network. The encoding often involves advanced techniques like Doppler bins descriptors, sidelobe-aware spatial sparsification, and potentially range-wise self-attention, all aimed at filtering noise and prioritizing important signal features. This improved data efficiency enables the use of more complex and capable deep learning models, thus enhancing the accuracy of the occupancy prediction itself. The success of this approach hinges on a balance between information preservation and computational cost reduction, requiring careful selection of the encoding parameters to optimize for accuracy and runtime efficiency.

Occupancy Prediction#

Occupancy prediction, a critical task in autonomous driving, aims to create a 3D representation of the environment, classifying each voxel as occupied or free. Traditional approaches heavily relied on LiDAR or cameras, both susceptible to adverse weather conditions. This paper explores the use of 4D imaging radar, which offers superior robustness in challenging weather, as a primary sensor for occupancy prediction. The innovative use of the 4D radar tensor, a richer data source compared to sparse point clouds, forms the foundation of their method, RadarOcc. RadarOcc addresses challenges related to 4D radar data’s volume and noise through Doppler bin descriptors, sidelobe-aware spatial sparsification, and range-wise self-attention mechanisms. The novel spherical-based feature encoding avoids interpolation errors, leading to improved accuracy. Experimental results showcase RadarOcc’s state-of-the-art performance against other radar-based methods and competitive results compared to LiDAR and camera-based approaches, highlighting the potential of 4D radar for robust and reliable occupancy prediction in all-weather conditions.

Robustness Analysis#

A Robustness Analysis section for this research paper would be crucial. It should thoroughly investigate the model’s performance across diverse conditions beyond the standard evaluation metrics. Sensitivity to noise in the 4D radar data should be a primary focus, exploring the impact of varying noise levels on accuracy and examining techniques to mitigate this vulnerability. Another key aspect is robustness to adverse weather conditions. The paper highlights the all-weather capability of radar, so this section needs to quantitatively show how RadarOcc’s performance degrades (or remains stable) under different weather situations (rain, fog, snow) compared to other sensor modalities (LiDAR, camera). Ablation studies on key components are already mentioned, but the analysis could be extended to consider how those components contribute to overall robustness. The study should also explore generalizability, assessing performance on unseen datasets or scenarios to evaluate the model’s adaptability. Finally, a comparison against adversarial attacks should evaluate the model’s resilience to manipulated inputs, potentially revealing vulnerabilities and guiding future improvements.

Future Radar Work#

Future research in radar technology for autonomous driving should prioritize improving the resolution and accuracy of 4D imaging radars, enabling better detection of small objects and features in complex scenes. Addressing the limitations of current 4D radar data processing techniques, such as efficiently handling the large data volume and mitigating noise, remains crucial. Developing advanced fusion techniques to effectively combine radar data with other sensor modalities (e.g., cameras and LiDAR) will improve overall perception robustness. Research efforts should also focus on developing more sophisticated algorithms for 3D occupancy prediction, potentially utilizing deep learning techniques to improve the accuracy and speed of processing. Finally, exploring novel approaches to handle challenging scenarios like adverse weather conditions and low-reflectivity surfaces is vital for achieving reliable all-weather autonomous driving.

More visual insights#

More on figures

This figure shows the overall pipeline of the RadarOcc model for 3D occupancy prediction using 4D imaging radar data. It illustrates the four main components: data volume reduction (using Doppler bins encoding and sidelobe-aware spatial sparsification), spherical-based feature encoding, spherical-to-Cartesian feature aggregation, and 3D occupancy decoding. The process starts with a large 4DRT, reduces it to a smaller sparse representation, encodes features in spherical coordinates, aggregates them into Cartesian coordinates, and finally outputs the 3D occupancy grid.

This figure shows the overall pipeline of the proposed RadarOcc method. It starts with data volume reduction of the 4D radar tensor (4DRT) using Doppler bins encoding and sidelobe-aware spatial sparsifying. Then, spherical-based feature encoding is performed on the sparse representation, followed by spherical-to-Cartesian feature aggregation using Cartesian voxel queries. Finally, 3D occupancy decoding produces the output 3D occupancy volume.

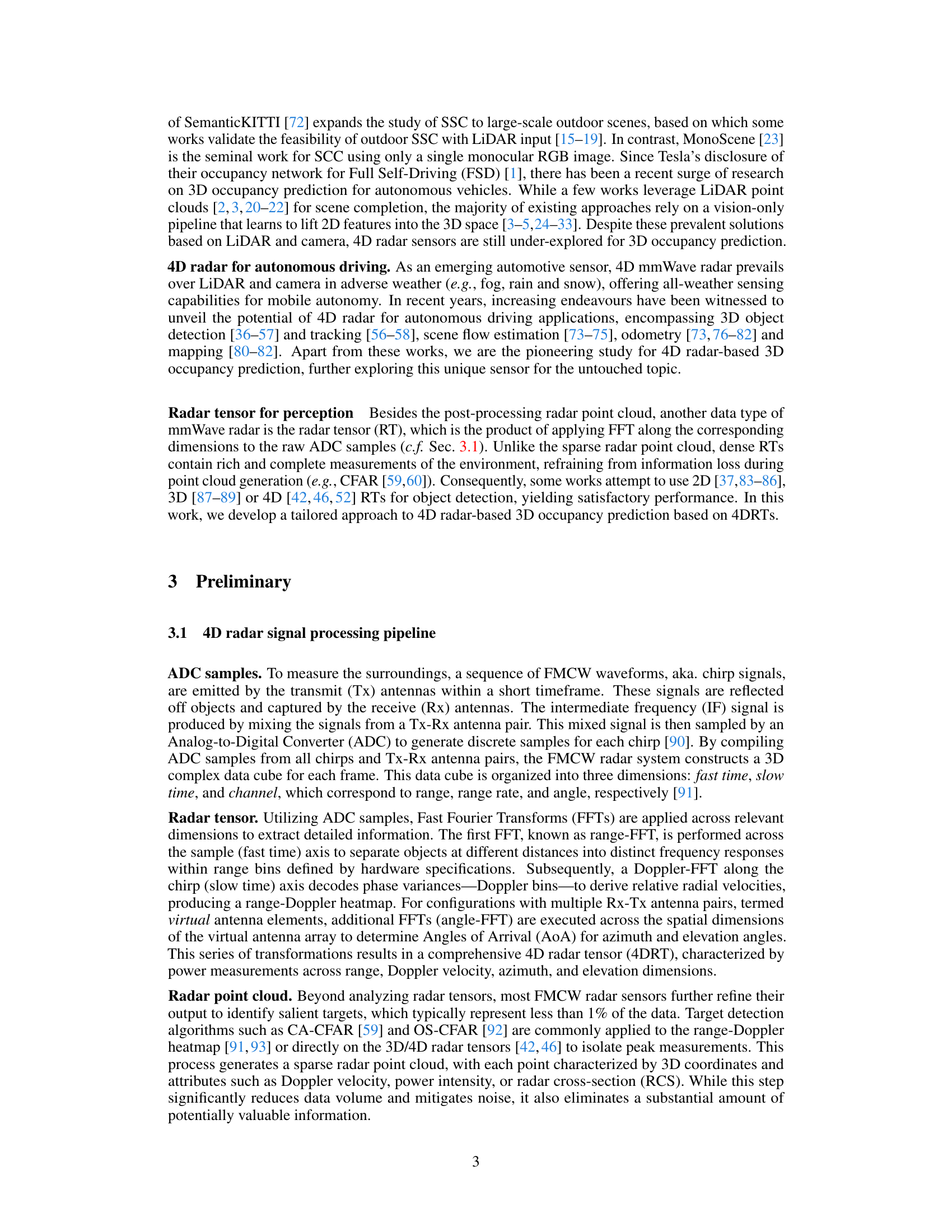

This figure compares the qualitative results of RadarOcc, a LiDAR-based method (L-baseline), and a camera-based method (SurroundOcc) under adverse weather conditions. The top row shows the input data from each modality (4D Radar, LiDAR, and RGB camera). The bottom row shows the corresponding predictions of 3D occupancy, highlighting the superiority of RadarOcc in adverse weather. The RGB images include ground truth bounding boxes for reference.

This figure illustrates the complete pipeline of the RadarOcc model for 3D occupancy prediction using 4D imaging radar data. It begins with data volume reduction techniques to handle the large size of 4D radar tensors (4DRTs), followed by spherical-based feature encoding to process the spatial features directly on the spherical RTs without transforming them to Cartesian coordinates. Finally, a Cartesian occupancy prediction is generated using voxel queries.

More on tables

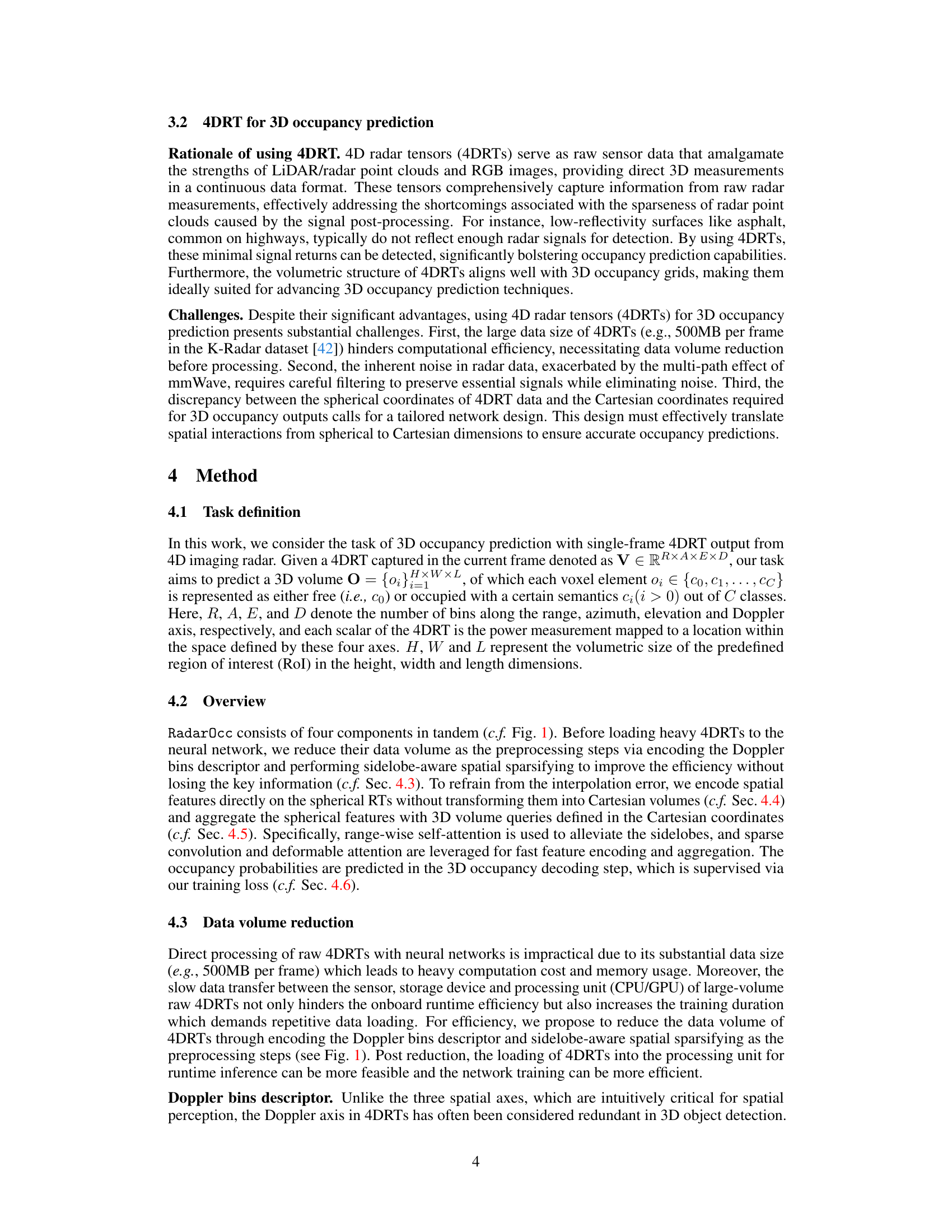

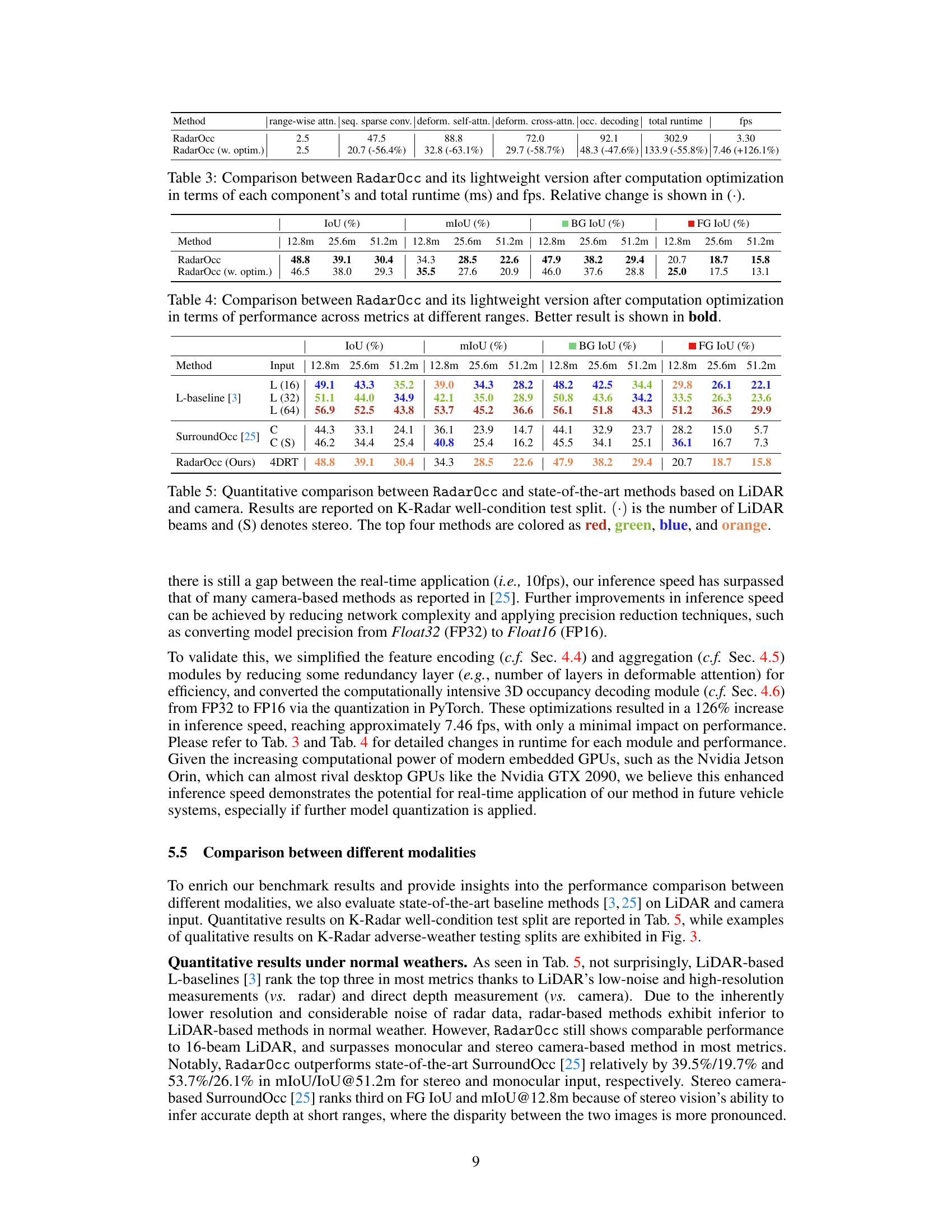

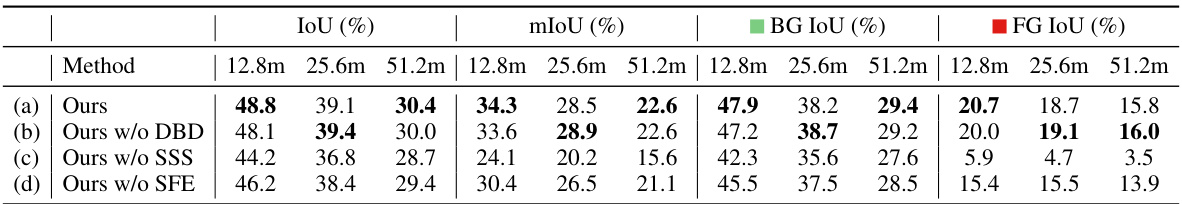

This table presents the ablation study results for RadarOcc, showing the impact of removing each of the three key components: Doppler bins descriptor (DBD), sidelobe-aware spatial sparsifying (SSS), and spherical-based feature encoding (SFE). The performance metrics (IoU, mIoU, BG IoU, FG IoU) are evaluated at different ranges (12.8m, 25.6m, 51.2m). This helps to understand the contribution of each component to the overall performance of the model.

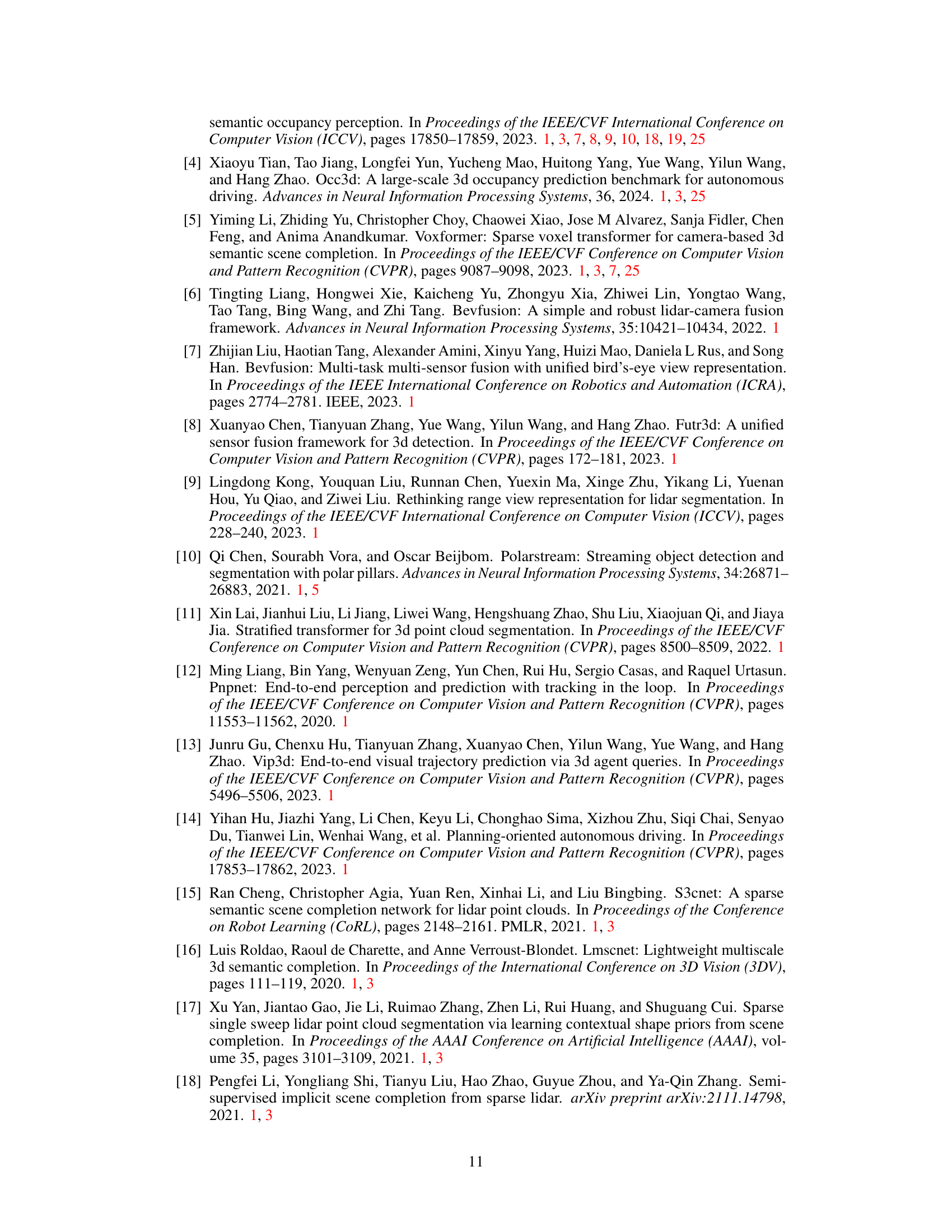

This table compares the runtime performance of the original RadarOcc model and its optimized lightweight version. The table breaks down the runtime of each component (range-wise self-attention, sequential sparse convolution, deformable self-attention, deformable cross-attention, occupancy decoding) in milliseconds (ms), showing the percentage change in runtime for each component in the optimized version. The total runtime (ms) and frames per second (fps) are also shown, indicating a significant increase in fps after optimization.

This table presents a comparison of the performance of the original RadarOcc model and its lightweight version, after various computation optimizations. The comparison focuses on three different metrics (IoU, mIoU, and foreground/background IoU) across three different ranges (12.8m, 25.6m, and 51.2m). The results highlight the impact of the optimization on performance, showing whether performance gains were made at the cost of accuracy. The best performance for each metric and range is shown in bold.

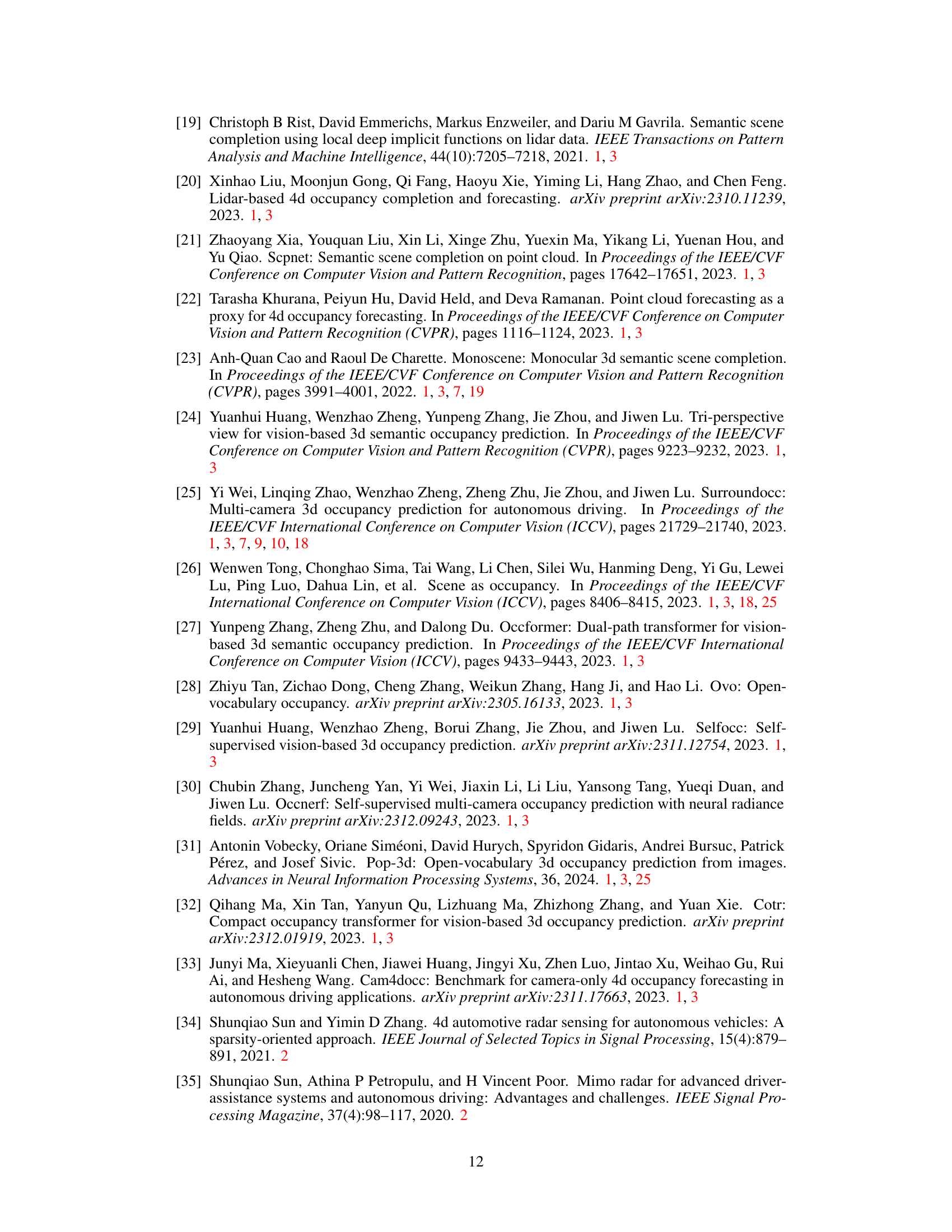

This table presents a quantitative comparison of the proposed RadarOcc method against existing state-of-the-art radar-based baseline methods for 3D occupancy prediction. The comparison is based on the Intersection over Union (IoU) metric, calculated separately for foreground (FG), background (BG), and overall mean IoU (mIoU). The results are reported for three different ranges (12.8m, 25.6m, and 51.2m) on the K-Radar dataset’s well-conditioned test split. The best performing method for each metric and range is highlighted in bold.

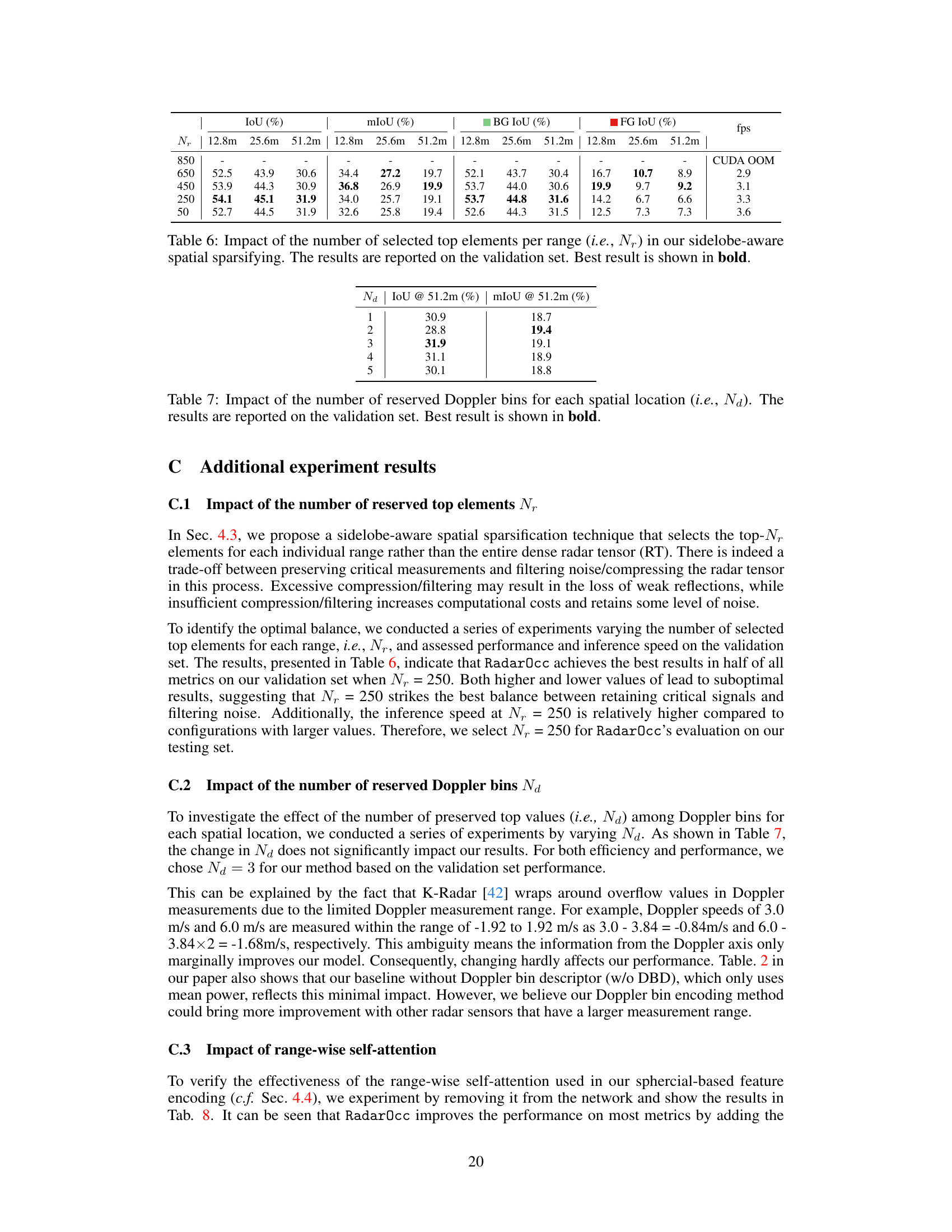

This table presents the ablation study on the impact of the number of reserved top elements (Nr) in the sidelobe-aware spatial sparsification step of the RadarOcc pipeline. It shows how different values of Nr affect various metrics (IoU, mIoU, BG IoU, FG IoU) at different ranges (12.8m, 25.6m, 51.2m) and the inference speed (fps). The best performing Nr value is highlighted in bold, indicating the optimal balance between noise reduction and information preservation.

This ablation study investigates the effect of the number of reserved Doppler bins (Na) on the model’s performance. The table shows the IoU and mIoU at 51.2m for different values of Na (1 to 5), evaluated on the validation set. The best performing Na value is highlighted in bold, indicating an optimal balance between preserving crucial Doppler information and reducing computational cost.

This table presents the ablation study results on the range-wise self-attention mechanism of the Radarocc model. It compares the performance metrics (IoU, mIoU, BG IoU, FG IoU) of the full Radarocc model with a version where the range-wise self-attention is removed. The comparison is done across three different range intervals (12.8m, 25.6m, 51.2m) to show the impact of the range-wise self-attention on the model’s ability to predict occupancy at varying distances.

Full paper#