↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Contextual bandits, a type of online learning problem, aim to minimize cumulative regret, the difference between the rewards received and the optimal rewards. Traditional approaches often focus on minimax regret bounds, assuming the worst-case scenario for reward variance. However, this approach overlooks the potential benefits of lower reward variances.

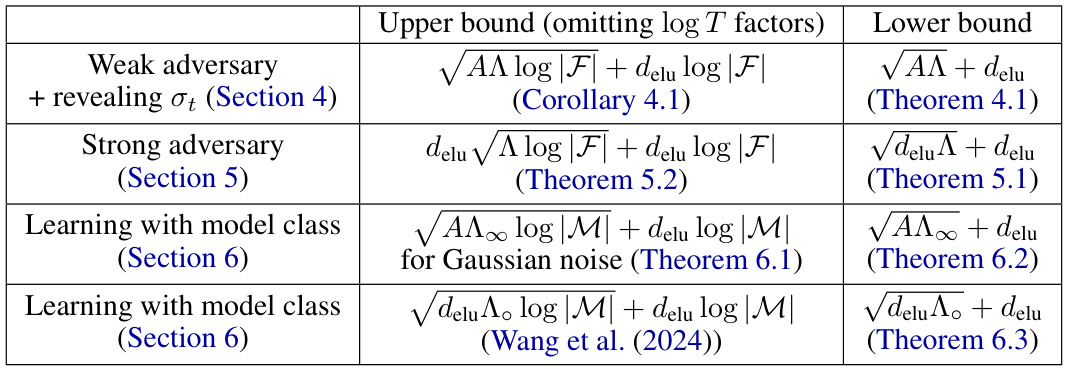

This research delves into the impact of reward variance on contextual bandit regret. The authors consider two types of adversaries: weak (setting variance before observing learner’s action) and strong (setting variance after observing learner’s action). They prove that lower variance can lead to significantly better regret bounds, especially for weak adversaries. Furthermore, the paper explores scenarios where distributional information about the reward is available, refining and extending our knowledge of contextual bandits’ complexity.

Key Takeaways#

Why does it matter?#

This paper is crucial because it challenges the common assumption in contextual bandit research that reward variance is insignificant, thus opening new avenues for algorithm design and theoretical analysis. It demonstrates that low reward variance can lead to significantly improved regret bounds, which is a major step forward in improving efficiency and creating more robust algorithms. The findings also provide novel lower bounds, enhancing our understanding of the fundamental limitations of contextual bandit problems. This research directly impacts the development of more efficient and practical algorithms for various real-world applications.

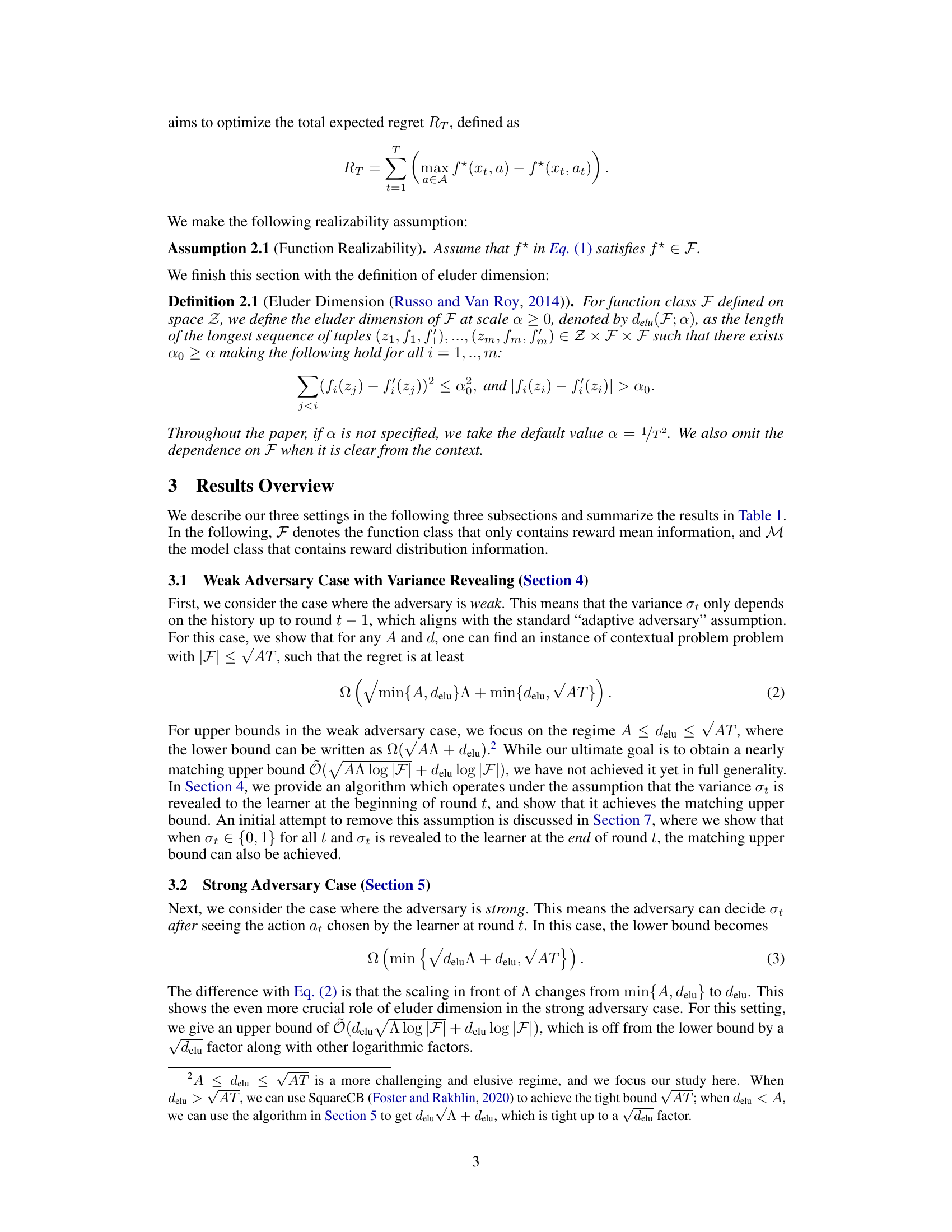

Visual Insights#

This table summarizes the upper and lower bounds on the regret for contextual bandits under different settings. The settings vary based on the adversary’s strength (weak or strong), whether the variance is revealed to the learner, and whether the model class provides information about the reward distribution. The upper and lower bounds are expressed in terms of the eluder dimension (delu), the total variance (A), and the number of actions (A).

Full paper#