↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Medical image analysis using deep learning models often lacks transparency, hindering trust and clinical adoption. Existing interpretable methods have limitations like model dependency and visualization issues. This paper introduces Med-MICN, addressing these challenges by offering multi-dimensional interpretability.

Med-MICN excels by aligning neural symbolic reasoning, concept semantics, and saliency maps. It achieves high accuracy, facilitates understanding from multiple perspectives, and automates concept labeling. The results across benchmark datasets demonstrate Med-MICN’s superior performance and interpretability compared to other methods, promoting trustworthy AI in medical diagnoses.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in medical image analysis and explainable AI. It addresses the critical need for trustworthy and interpretable AI models in healthcare, a major challenge in the field. The proposed Med-MICN framework offers a novel solution with significant potential impact, opening avenues for future research in multi-dimensional explanation alignment and automated concept labeling. Its model-agnostic nature and superior performance on benchmark datasets make it highly relevant to current trends in XAI.

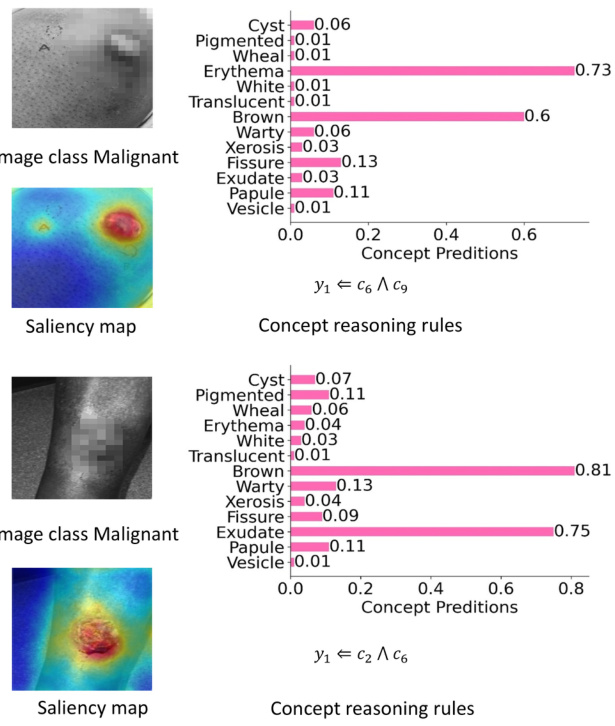

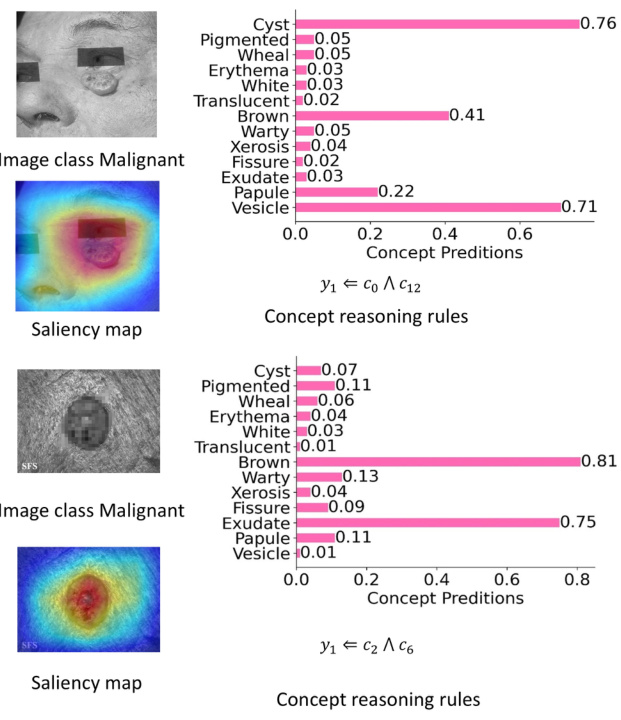

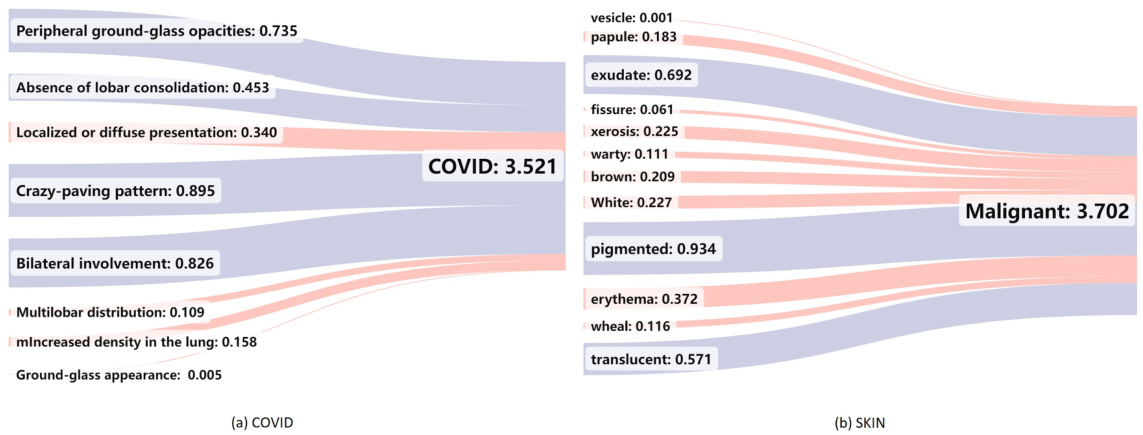

Visual Insights#

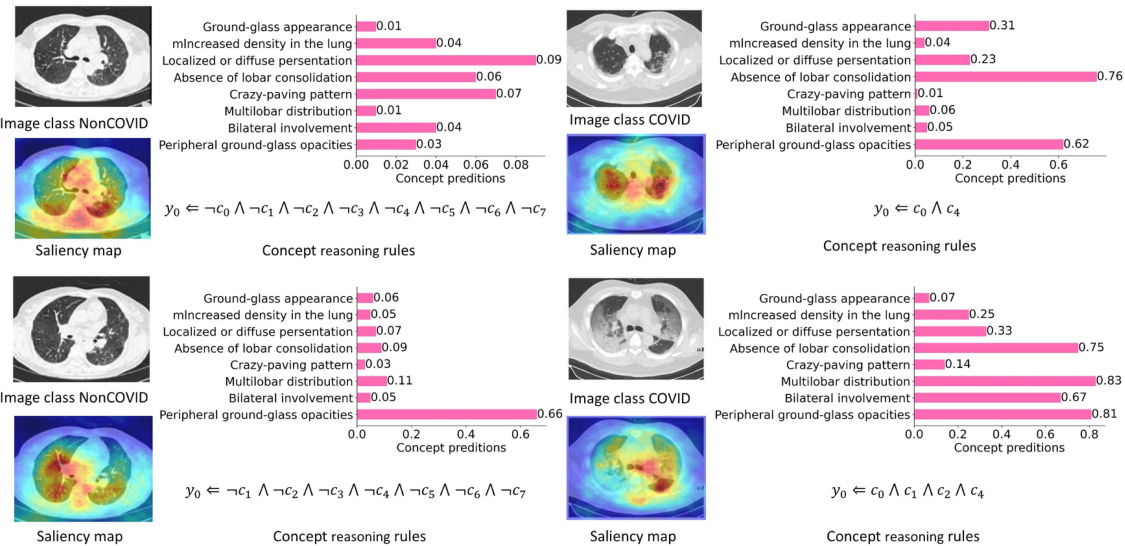

The figure shows four examples of medical image analysis using the Med-MICN model. For each example, the image is displayed along with the concept prediction scores for different concepts (represented as bars), the concept reasoning rules which explain the relationships between concepts and predictions (represented as logical expressions), and a saliency map which highlights the regions of the image that were most important for the model’s decision. The figure demonstrates how the model integrates multiple aspects of interpretability to achieve a comprehensive understanding of the decision-making process.

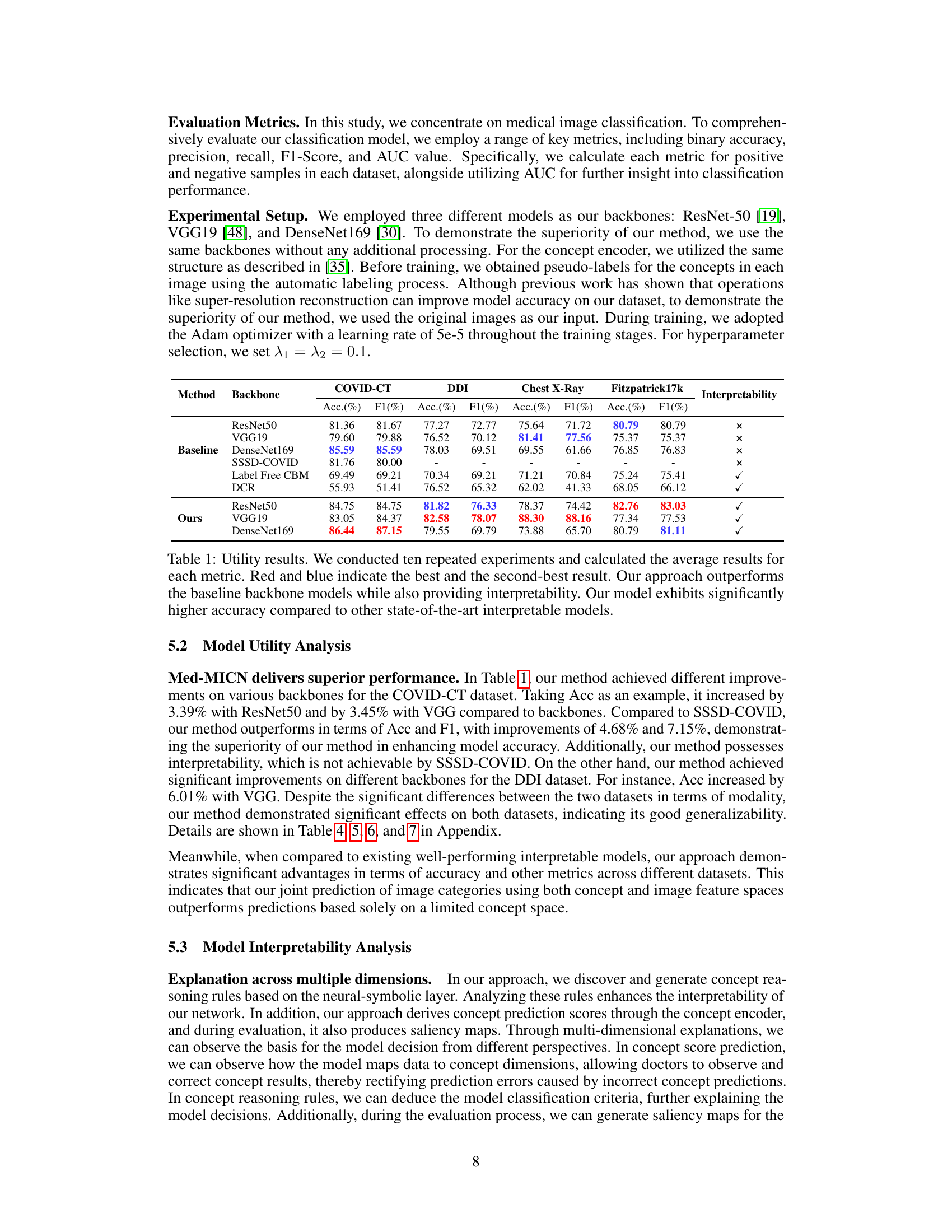

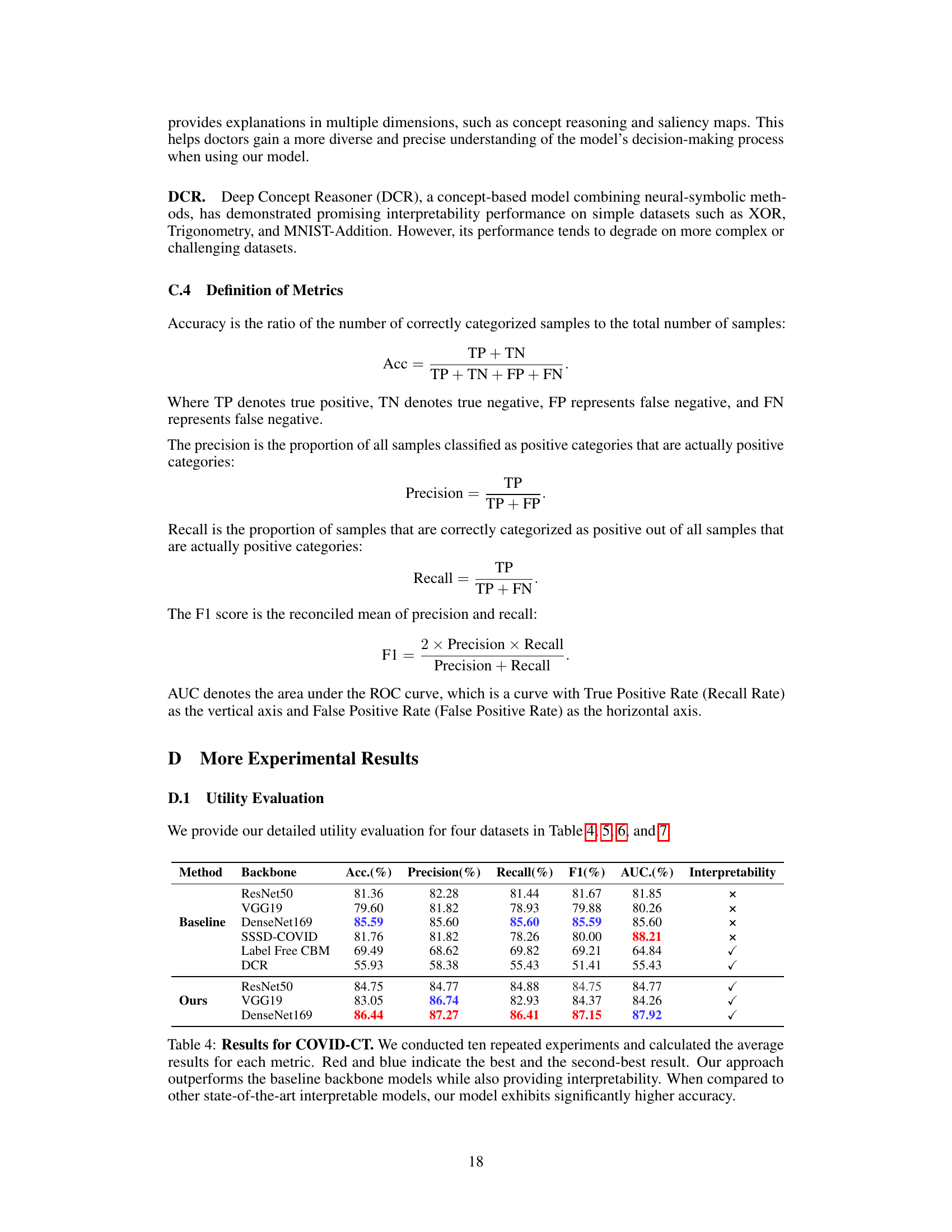

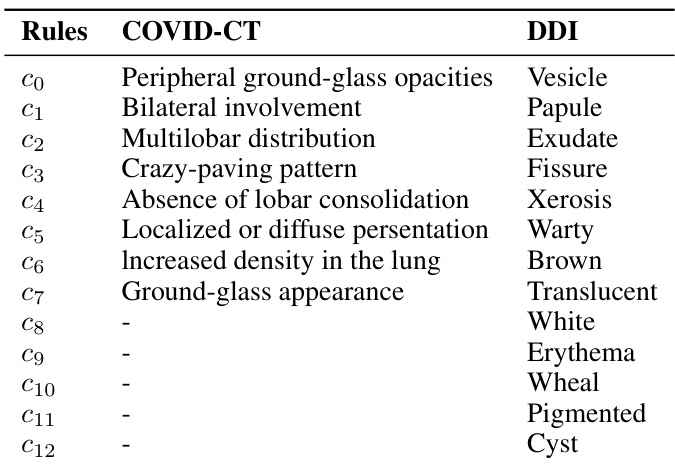

This table presents the performance comparison of the proposed Med-MICN model against several baseline and state-of-the-art interpretable models on four benchmark medical image datasets. The results are evaluated using standard classification metrics (Accuracy, F1-score, etc.) demonstrating Med-MICN’s superior performance and interpretability compared to other methods.

In-depth insights#

Med-MICN Framework#

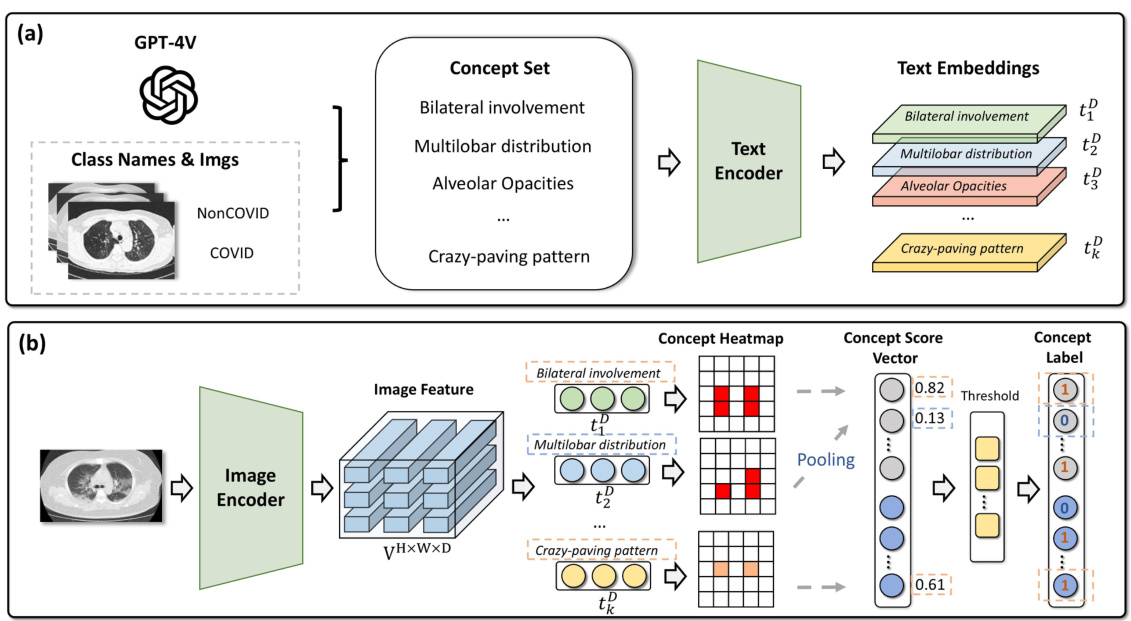

The Med-MICN framework presents a novel, end-to-end approach for medical image classification that prioritizes multi-dimensional interpretability. Unlike traditional methods, Med-MICN integrates concept-based reasoning, neural symbolic methods, and saliency maps for a more comprehensive and trustworthy explanation. Automatic concept labeling and alignment, achieved using Vision-Language Models (VLMs), reduces the need for extensive manual annotation. This automation and integration across multiple interpretative dimensions enable Med-MICN to deliver high prediction accuracy while providing explanations that are readily understandable to medical professionals. The end-to-end architecture streamlines the process and allows for straightforward application to diverse medical datasets, enhancing the reliability and applicability of the model in real-world clinical settings. Furthermore, the framework’s model-agnostic nature facilitates its integration with a variety of existing models, making it a flexible tool for improving trust and transparency in AI-driven medical diagnosis.

Multi-dimensional XAI#

Multi-dimensional XAI signifies a paradigm shift from traditional single-perspective explainability methods in AI. Instead of relying solely on one type of explanation (e.g., saliency maps or concept-based reasoning), a multi-dimensional approach integrates multiple complementary techniques. This allows for a richer, more robust, and trustworthy understanding of a model’s decision-making process. Each dimension offers a unique view, revealing different aspects of the model’s internal workings. Combining these perspectives helps mitigate the limitations of individual methods, improving accuracy and reliability. For instance, while saliency maps highlight regions important to a decision, concept-based explanations provide a higher-level understanding of the model’s reasoning. The alignment of these different explanations becomes crucial for building trust and ensuring the fidelity of the interpretability. This holistic approach is particularly valuable in high-stakes domains like healthcare, where trust and transparency are essential. A crucial aspect of this is the automation of the process, which allows the system to adapt to various datasets without extensive human intervention. This automation significantly reduces human effort while maintaining, if not improving, the accuracy of the interpretation.

Concept Alignment#

Concept alignment, in the context of medical image analysis, is crucial for bridging the gap between human understanding and machine-generated interpretations. Effective concept alignment ensures that the concepts used by the model directly relate to clinically relevant features. This is especially important in high-stakes applications like medical diagnosis where trust and transparency are paramount. The process involves carefully selecting or generating concepts that align with the image data and clinical knowledge. The goal is to create a system where model decisions are not only accurate but also easily interpretable by healthcare professionals. This often requires a multi-modal approach, leveraging the strengths of both image analysis and natural language processing to ensure the concepts are both semantically meaningful and visually grounded in the image features. A well-aligned concept system enhances the explanatory power of the model, aiding clinicians in understanding the reasoning behind diagnoses and facilitating trust in AI-assisted decision-making. This approach is especially beneficial in scenarios where the training data is limited and concept-based interpretability is crucial for clinical acceptance.

Ablation Experiments#

Ablation experiments systematically remove components of a model to assess their individual contributions. Thoughtful ablation studies are crucial for understanding model behavior and isolating the impact of specific design choices. By progressively removing parts (e.g., model layers, data augmentation techniques, or loss functions), researchers can pinpoint which aspects are most critical for performance. The results of ablation experiments inform decisions about model architecture and guide future improvements, leading to better-performing and more robust models. Furthermore, well-designed ablation studies enhance the interpretability of a model by demonstrating the individual impact of each part. This allows for a better understanding of how each component affects overall functionality, paving the way for more explainable AI systems. However, it is important to note that the interpretation of ablation results can be nuanced and context-dependent, requiring careful consideration of the model design and research goals.

Future Directions#

Future research could explore enhancing Med-MICN’s adaptability to diverse medical imaging modalities beyond the four datasets evaluated. Expanding the range of medical conditions addressed would broaden its applicability. Investigating the impact of varying data quantities on model performance and interpretability is crucial. A key area for development involves improving the automation of concept labeling, reducing reliance on human annotation and potentially leveraging techniques like weakly supervised learning or transfer learning from large language models. Exploring novel methods to integrate diverse explanation methods (e.g., incorporating counterfactual explanations) could lead to richer and more holistic interpretations. Finally, a thorough assessment of Med-MICN’s performance in real-world clinical settings with rigorous user testing among medical practitioners is essential to validate its clinical utility and address potential limitations in practical application.

More visual insights#

More on figures

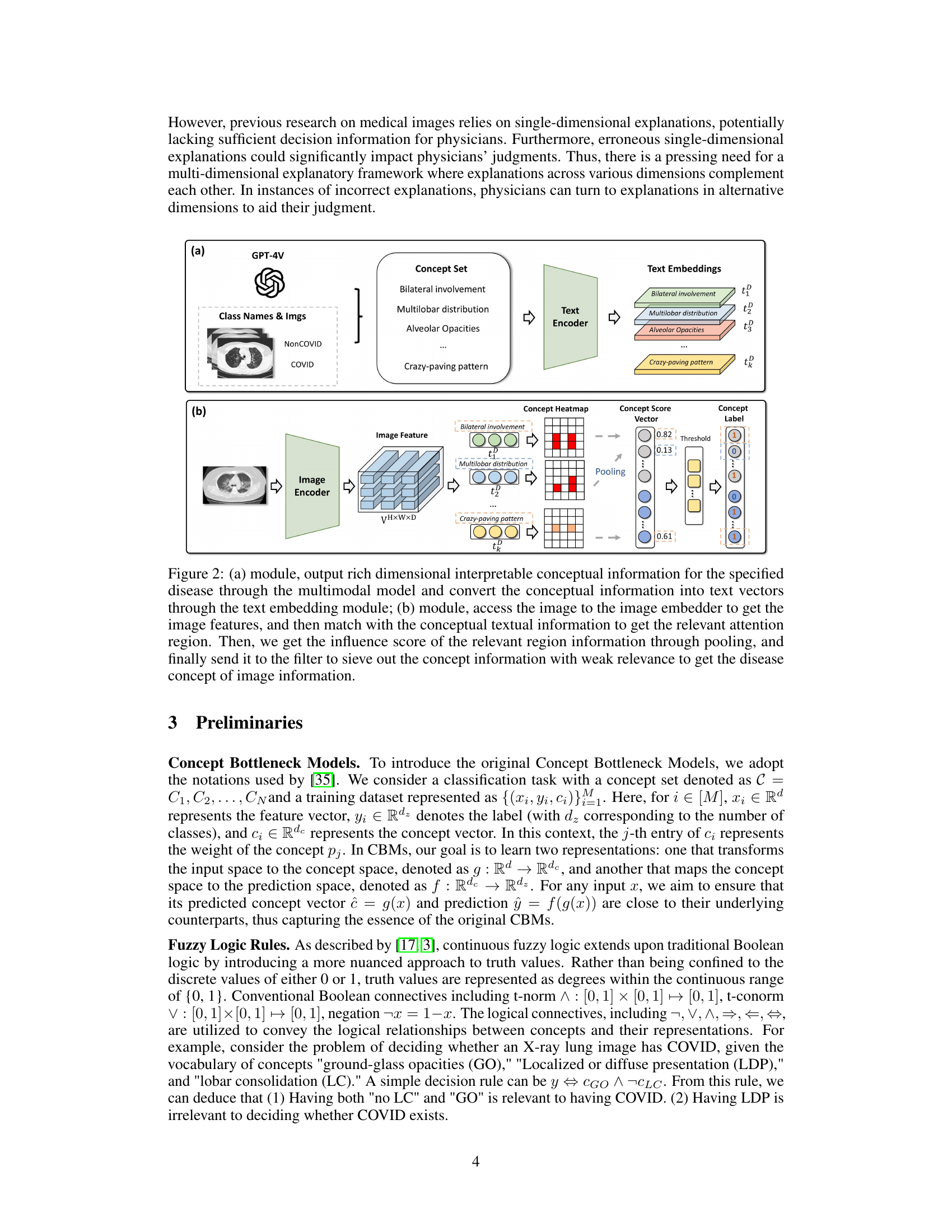

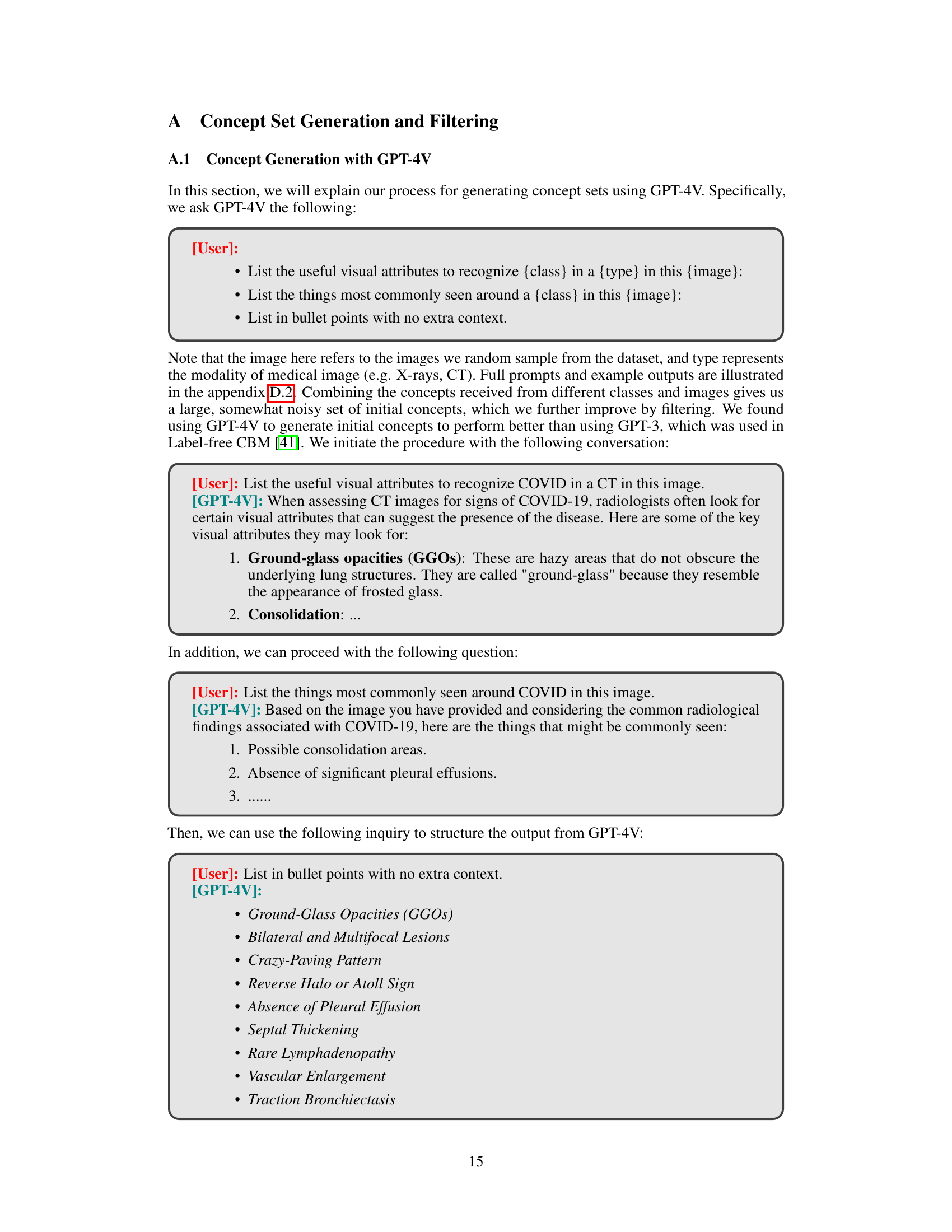

This figure shows the two modules of the Med-MICN framework. Module (a) uses a multimodal model (like GPT-4V) to generate concepts for a given disease, then converts those concepts into text embeddings using a text encoder. Module (b) takes an image as input, uses an image encoder to extract features, and aligns those features with the concept embeddings generated in module (a). This alignment process helps pinpoint relevant image regions related to each concept and filters out weak associations, resulting in a refined set of image-concept relationships.

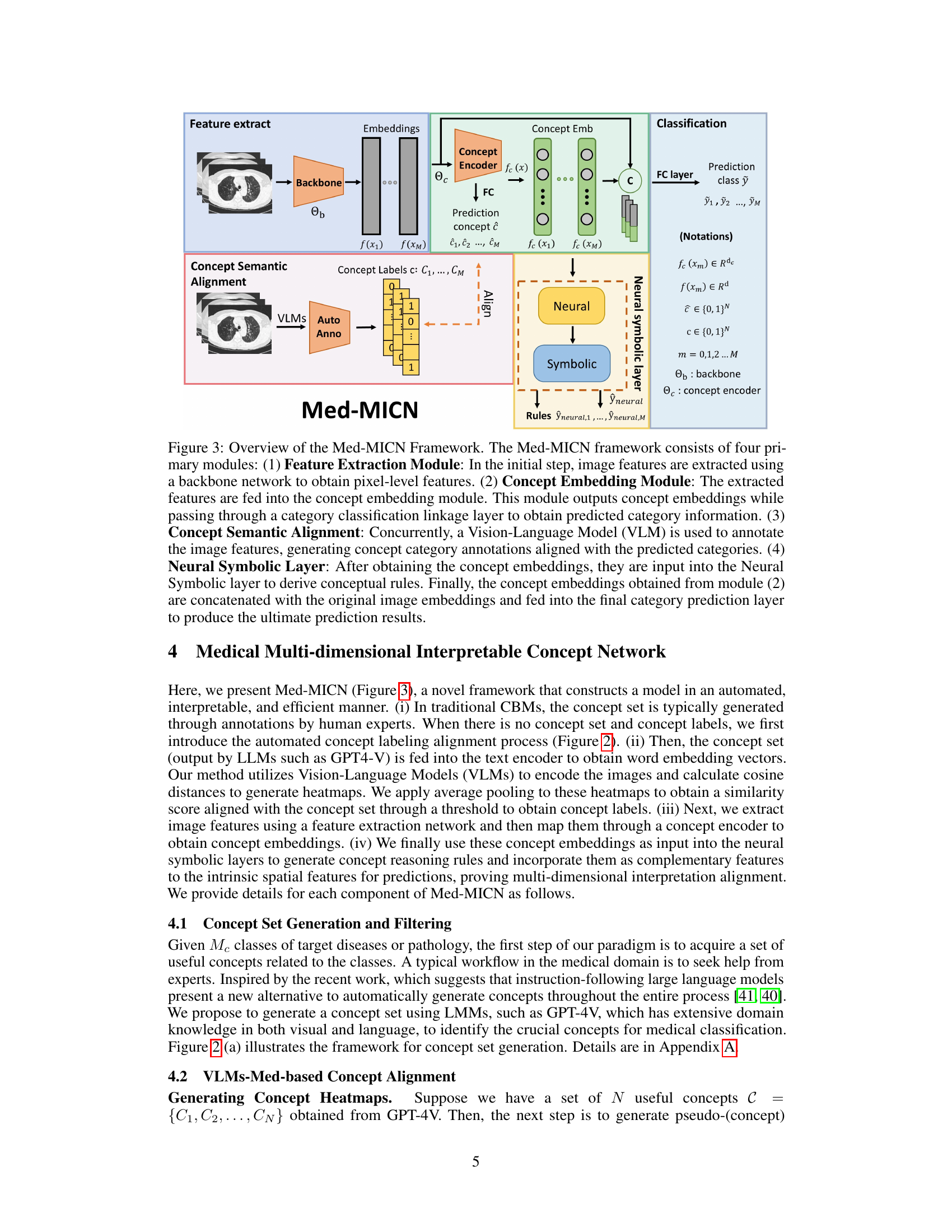

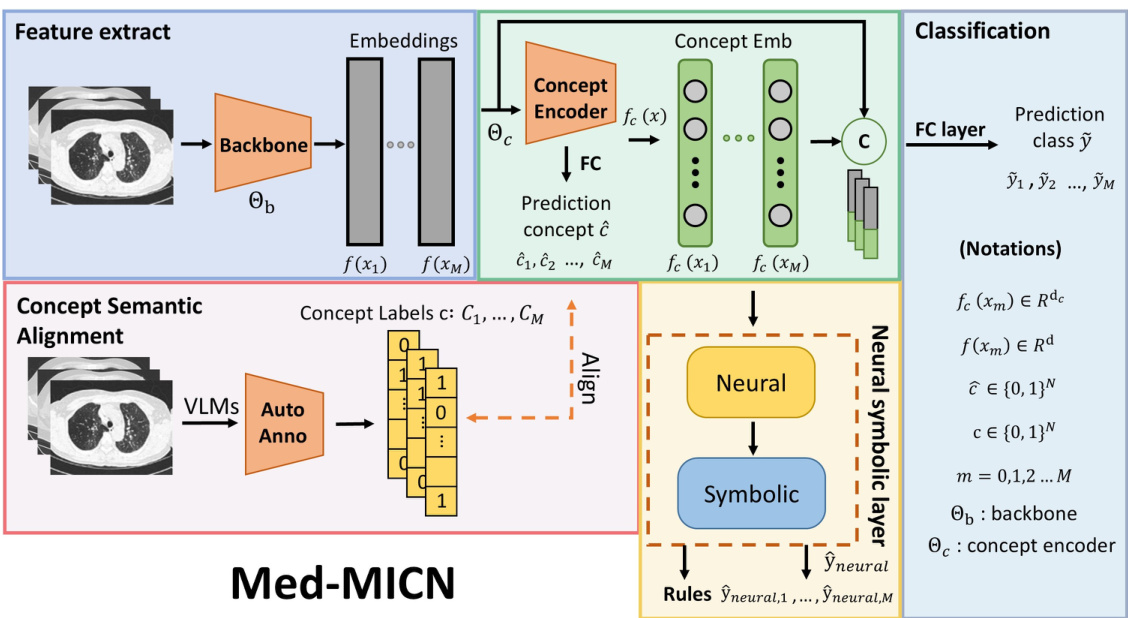

This figure presents a detailed overview of the Med-MICN framework’s architecture, illustrating its four main modules: feature extraction, concept embedding, concept semantic alignment, and a neural symbolic layer. Each module’s function and interconnection are clearly depicted, showing how image features are processed to generate concept embeddings, align with concept labels, and ultimately contribute to the final classification prediction, incorporating both neural and symbolic reasoning.

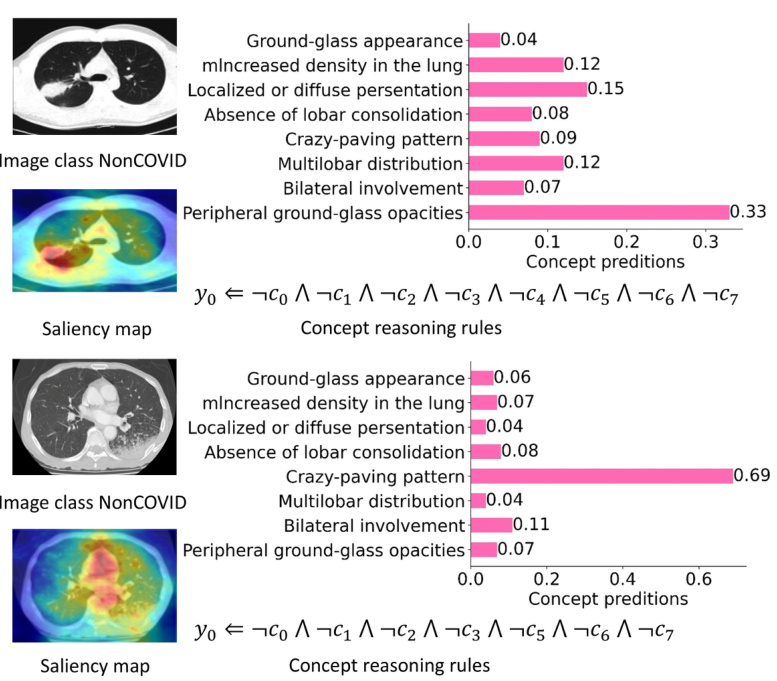

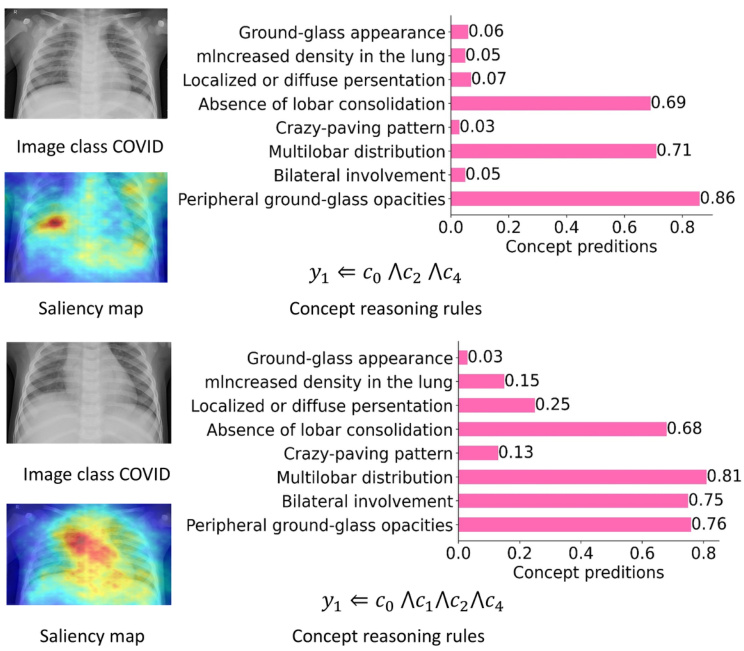

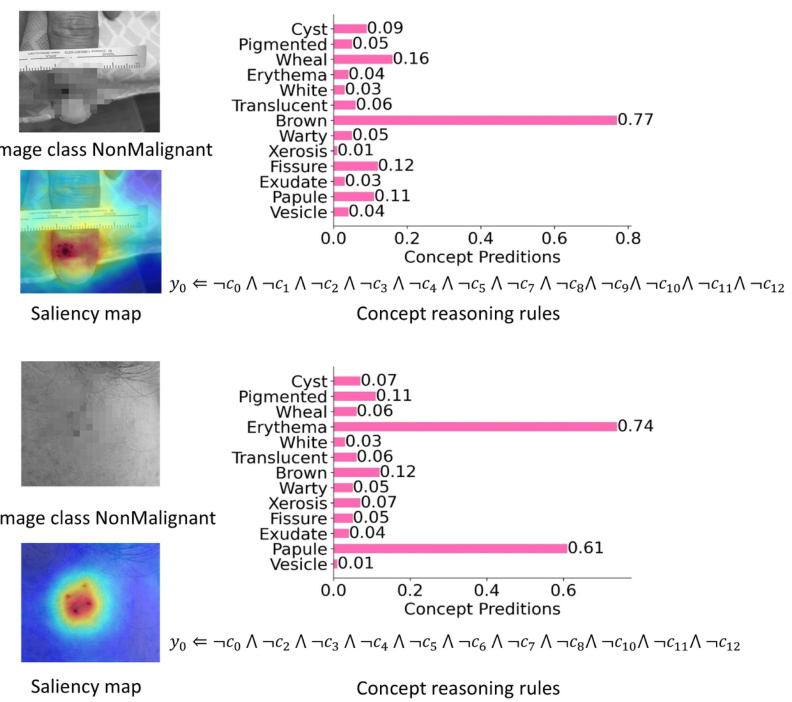

This figure demonstrates the multi-dimensional interpretability of the Med-MICN framework. It shows four example images with their corresponding concept predictions, concept reasoning rules, and saliency maps. Each section visually represents how the model arrives at its classification decision from different perspectives, aligning those perspectives to improve the overall interpretability and trust of the model’s predictions. The y-axis in the concept prediction graphs shows a sequence of concepts, starting with ‘Peripheral ground-glass opacities’ as c0 and increasing sequentially to c7.

The figure showcases the Med-MICN framework’s multidimensional interpretability by visualizing concept predictions, concept reasoning rules, and saliency maps for four medical image samples (two COVID and two NonCOVID). Each row represents a sample, showing its image, saliency map, concept predictions, and concept reasoning rules. The concept predictions indicate the model’s confidence scores for various concepts, and the concept reasoning rules depict the logical rules used by the model for its prediction. The saliency maps highlight the image regions that most significantly influenced the model’s decisions for each concept. The alignment of these different aspects contributes to a more comprehensive and interpretable understanding of the model’s predictions.

This figure showcases the multidimensional interpretability of the Med-MICN framework. It displays four examples of medical image classification, each showing the concept prediction scores, concept reasoning rules, and saliency maps. The alignment of these different explanatory methods is a key feature of Med-MICN, enhancing trust and understanding of the model’s predictions.

This figure showcases Med-MICN’s multi-dimensional interpretability by visualizing four example cases. Each case displays three types of explanations: concept prediction scores, concept reasoning rules, and saliency maps. The alignment of these explanations from different angles provides a more comprehensive and trustworthy interpretation than methods using only one type of explanation. The example shows how Med-MICN combines various interpretative methods to achieve more robust and reliable results.

This figure showcases the multi-dimensional interpretability of the Med-MICN framework. It displays four examples of medical images with their corresponding concept predictions, concept reasoning rules, and saliency maps. Each example demonstrates how Med-MICN aligns different aspects of interpretability, providing a comprehensive understanding of the model’s decision-making process. The concepts are shown on the y-axis, progressing from c0 to c7, representing different features related to the diagnosis.

This figure showcases the multidimensional interpretability of the Med-MICN model. It displays four examples of medical image analysis, each showing a different image class (COVID or NonCOVID) with its corresponding concept predictions, concept reasoning rules, and saliency maps. The concept predictions are visualized as bar charts, illustrating the contribution of individual concepts to the final prediction. The concept reasoning rules provide logical relationships between the concepts, and the saliency maps highlight the relevant regions in the image that contribute most to the classification decision. The alignment of these three dimensions enhances the model’s interpretability, helping clinicians understand the basis for the model’s decisions.

This figure showcases the multi-dimensional interpretability of the Med-MICN model. It displays four examples of medical image analysis. For each image, it shows the concept prediction scores, saliency map highlighting relevant image regions, and concept reasoning rules summarizing how the model arrived at its prediction. The alignment of these different aspects of interpretability is a key feature of Med-MICN.

This figure shows the multidimensional interpretability of Med-MICN, a novel framework for medical image classification. It visualizes how Med-MICN aligns three different aspects of interpretability: concept prediction (probability scores for different concepts), concept reasoning rules (logical rules explaining how concepts relate to the classification outcome), and saliency maps (visualizing the regions of the image that are most influential for the prediction). The alignment of these three aspects helps to ensure that the model’s interpretations are consistent and reliable. Each row in the figure represents a separate medical image, and the concepts are arranged along the y-axis.

This figure showcases the multidimensional interpretability of Med-MICN. It displays four example cases, each showing the model’s prediction, a saliency map highlighting relevant image regions, and concept reasoning rules. The alignment between these different explanatory approaches improves the model’s interpretability and allows for a more comprehensive understanding of its predictions.

This figure shows the multi-dimensional interpretability of the Med-MICN model. It displays how the model aligns different aspects of interpretability, including concept prediction scores (how strongly the model associates specific concepts with the image), concept reasoning rules (logical rules connecting concepts to predictions), and saliency maps (visual highlights indicating important image regions). The alignment of these different interpretability aspects is a key contribution of the proposed model, making it easier for users to understand the model’s reasoning. The example focuses on the concept of ‘Peripheral ground-glass opacities,’ which is shown as a sequence of concepts (C0 to C7) to illustrate the model’s multidimensional reasoning process.

This figure illustrates the multidimensional interpretability of the Med-MICN framework. It shows four examples of medical image analysis, each with a different image class (COVID or NonCOVID). For each image, Med-MICN provides three types of explanations: concept predictions (a bar chart showing the probability of each concept being present), concept reasoning rules (a graphical representation showing the logical relationship between concepts), and saliency maps (a heatmap highlighting the image regions contributing most to the prediction). The alignment of these different explanations is a key feature of Med-MICN, enhancing its interpretability and trustworthiness. The concepts along the y-axis, C1 to C7, sequentially represent increasingly specific aspects of ‘Peripheral ground-glass opacities’.

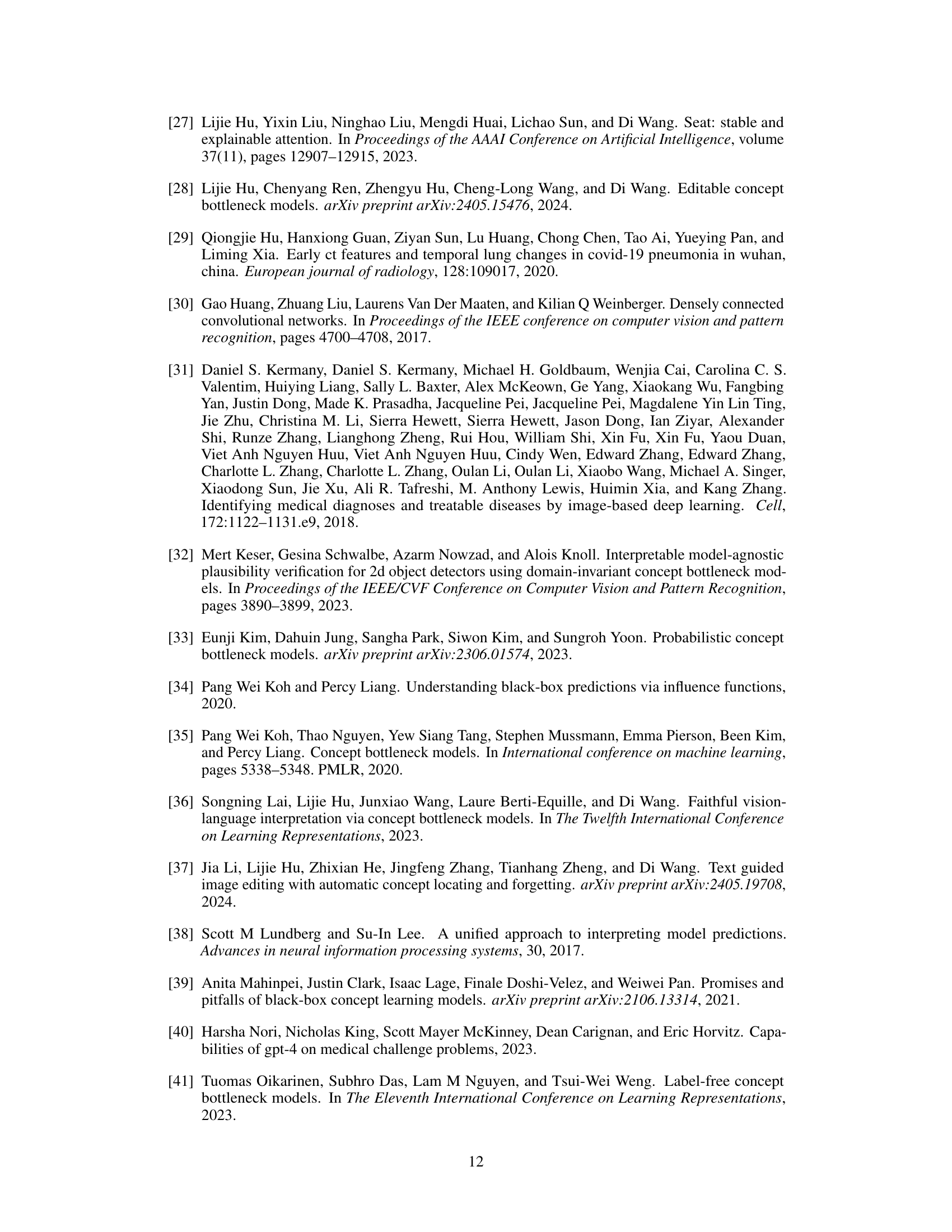

More on tables

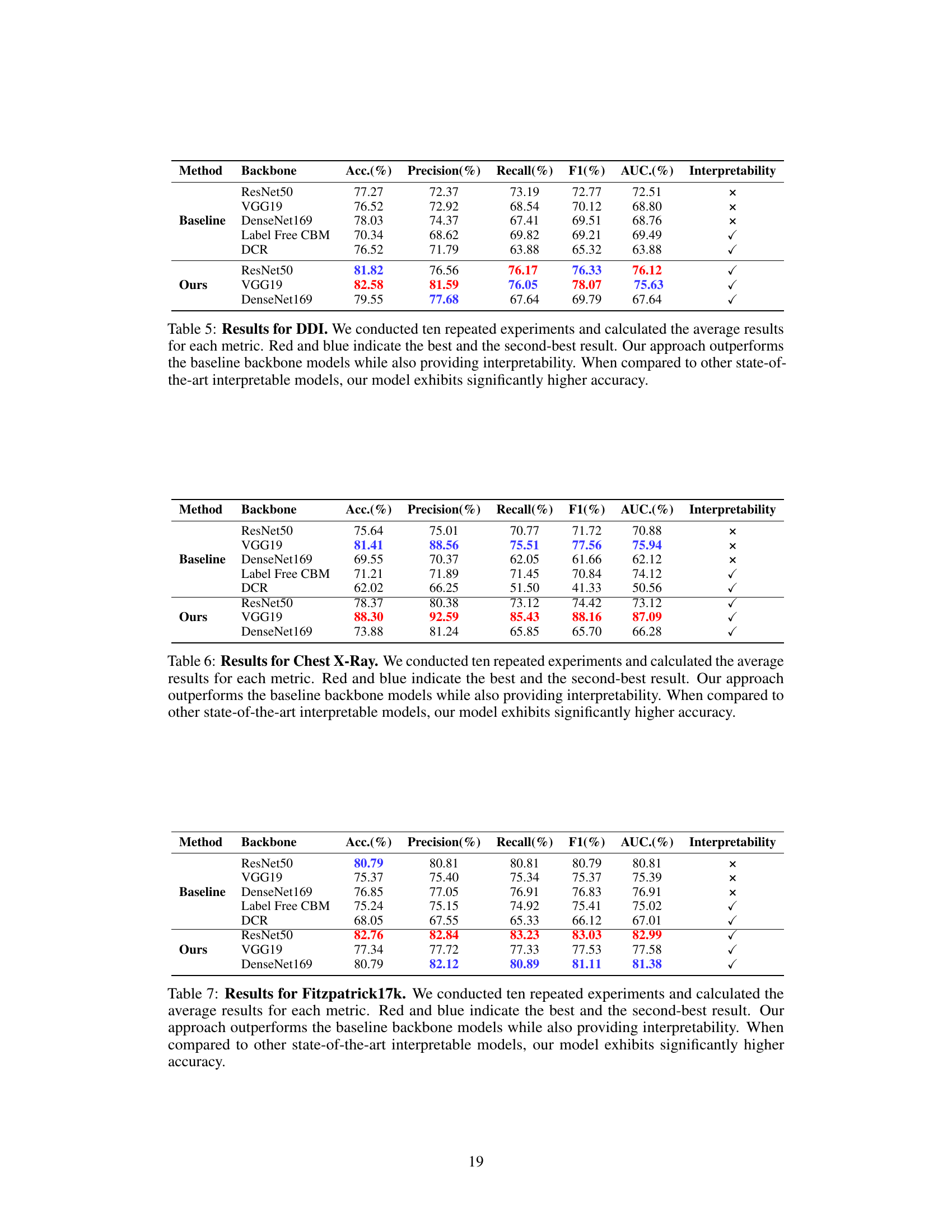

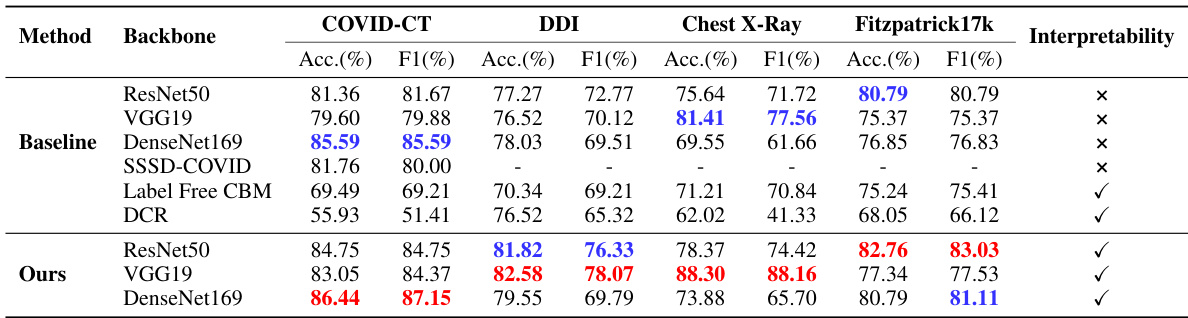

This table presents the performance comparison of the proposed Med-MICN model against several baseline and state-of-the-art interpretable models across four benchmark medical image datasets. The results demonstrate Med-MICN’s superiority in terms of accuracy (ACC), F1-score, and other metrics while also maintaining its interpretability advantages.

This table presents the results of experiments comparing the proposed Med-MICN model against several baseline and state-of-the-art models on four benchmark medical image datasets. Performance is measured across multiple metrics (Accuracy, F1-score, etc.) for different backbone networks (ResNet50, VGG19, DenseNet169). The table highlights Med-MICN’s superior accuracy and its unique combination of high performance and interpretability.

This table presents the performance comparison between the proposed Med-MICN model and several baseline and state-of-the-art interpretable models on four medical image datasets. The metrics used for evaluation include Accuracy, F1-score, and AUC. The table highlights that Med-MICN achieves superior performance and interpretability compared to other methods. Red and blue colors indicate the top two performing models for each metric.

This table presents the performance comparison of the proposed Med-MICN model against several baseline and state-of-the-art interpretable models on four benchmark medical image datasets (COVID-CT, DDI, Chest X-Ray, and Fitzpatrick17k). The results show that Med-MICN achieves superior performance in terms of accuracy (Acc), F1-score, and AUC, while also offering interpretability. Different backbone models (ResNet50, VGG19, DenseNet169) are used to demonstrate Med-MICN’s robustness.

This table presents the performance comparison of the proposed Med-MICN model against various baseline and state-of-the-art interpretable models on four benchmark medical image datasets (COVID-CT, DDI, Chest X-Ray, and Fitzpatrick17k). The results show Med-MICN’s superior accuracy across different backbones (ResNet50, VGG19, DenseNet169) while maintaining interpretability. The metrics used are Accuracy (Acc), F1-Score (F1), and AUC. The interpretability column indicates whether a given model provides interpretable results.

This table presents the performance comparison of the proposed Med-MICN model against various baseline models and other state-of-the-art interpretable models. It shows accuracy, F1 score, and other metrics across four benchmark datasets (COVID-CT, DDI, Chest X-Ray, and Fitzpatrick17k) for different backbones (ResNet50, VGG19, and DenseNet169). The results highlight Med-MICN’s superior performance and interpretability.

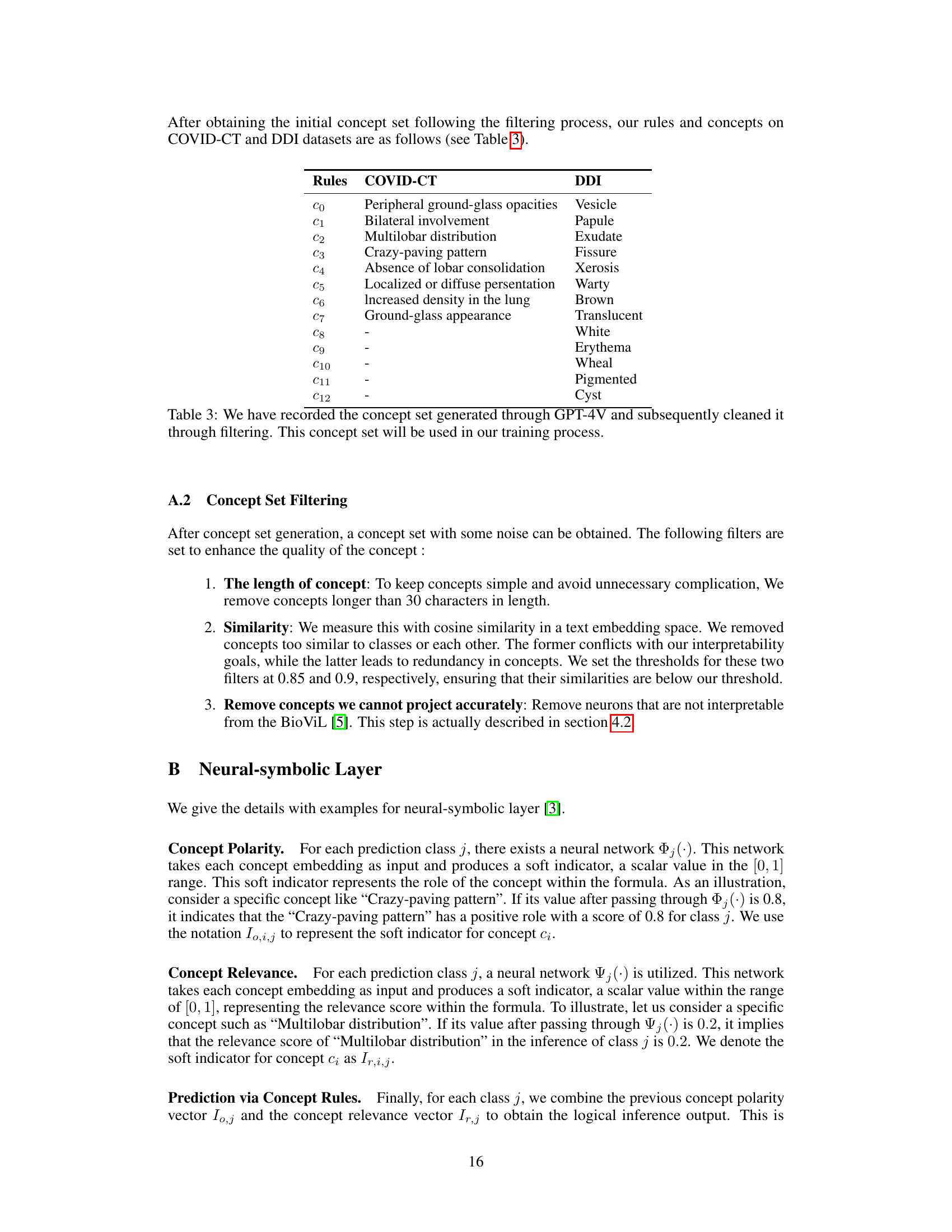

This table presents the results of an ablation study on the impact of concept filters used in the Med-MICN model. It shows the accuracy and number of concepts used for COVID-CT and DDI datasets when applying different combinations of filters. The filters applied are: length (removing concepts longer than 30 characters), similarity (removing similar concepts), and projection (removing concepts that cannot be accurately projected). The comparison shows the model’s performance when all filters are used, when each filter is removed one at a time, and when no filter is applied. This helps to assess the impact of each filter on the overall model performance and interpretability.

The table presents the performance comparison between Med-MICN and other methods (baseline models and other state-of-the-art interpretable models) across four benchmark datasets. The results are given for several evaluation metrics (Accuracy, F1-Score, etc.) and the interpretability of the models is indicated. Med-MICN shows significantly better performance and interpretability than other models.

Full paper#