↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Reinforcement learning (RL) often faces real-world constraints. Infinite horizon average reward settings are crucial for long-term decision-making but pose unique challenges, especially when policies are complex (general parameterization). Existing solutions either use simple policy structures (tabular or linear) or lack rigorous theoretical guarantees for regret (difference from optimal policy) and constraint violations.

This paper introduces a new primal-dual policy gradient algorithm designed to address these challenges. By cleverly managing constraints and optimizing policies using policy gradient methods, the algorithm achieves sublinear regret and constraint violation bounds of Õ(T4/5). This represents a significant advancement, offering the first sublinear regret guarantees for general policy parameterizations in average reward CMDPs and surpassing previous state-of-the-art results.

Key Takeaways#

Why does it matter?#

This paper is crucial because it’s the first to tackle the challenge of average reward Constrained Markov Decision Processes (CMDPs) with general policy parameterization, a common scenario in real-world applications. Its sublinear regret and constraint violation bounds offer significant improvements over existing methods and provide a strong theoretical foundation for future research in constrained reinforcement learning. This opens exciting new avenues for tackling complex decision-making problems under constraints in various domains.

Visual Insights#

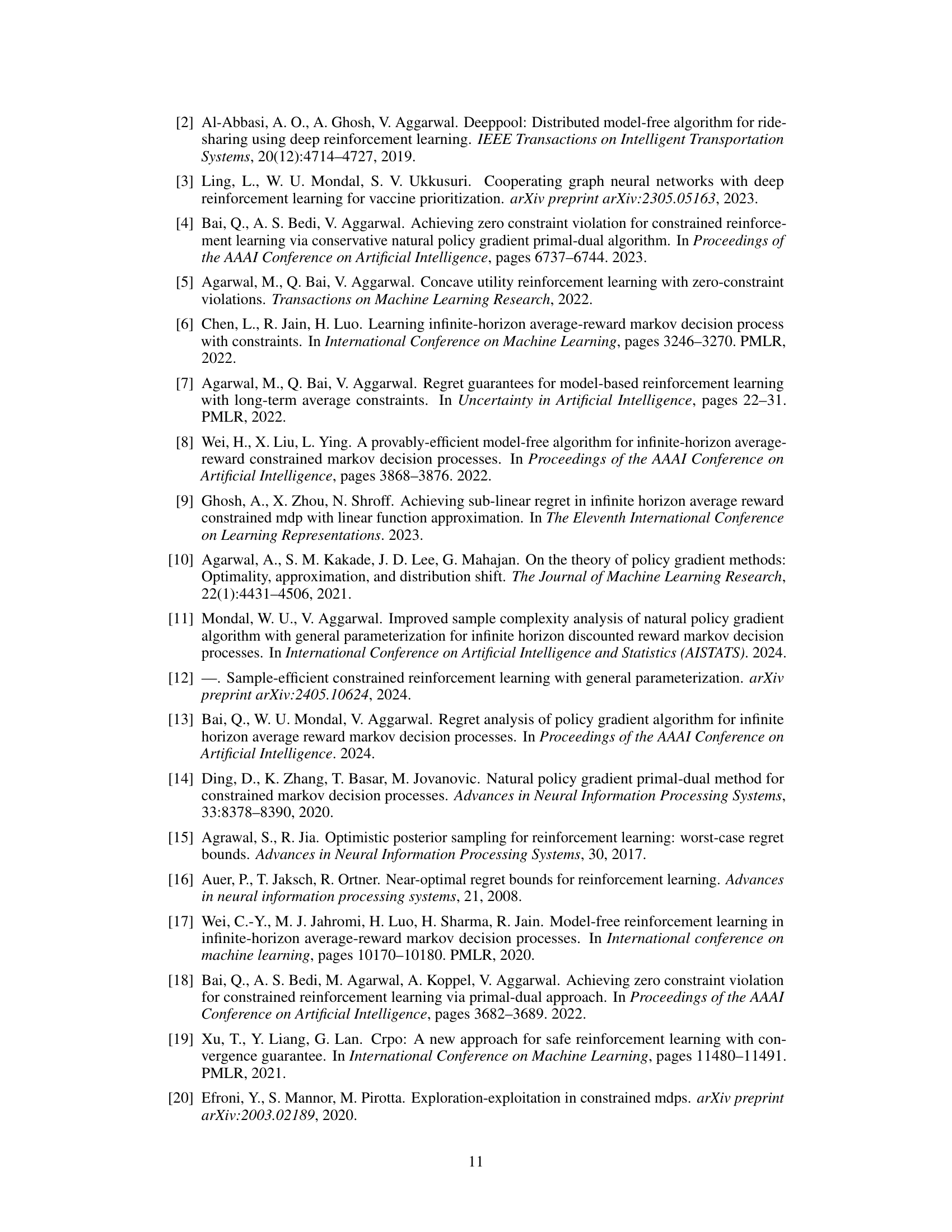

This table compares several state-of-the-art algorithms for solving infinite-horizon average reward constrained Markov Decision Processes (CMDPs). It contrasts their regret (difference between the achieved and optimal average reward) and constraint violation, indicating whether the algorithms are model-free (meaning they do not require an explicit model of the environment) and the type of policy parameterization (tabular, linear, or general). The table highlights that the proposed algorithm in the paper is novel in its analysis of regret and constraint violation for average reward CMDPs with general policy parameterization, which is a more challenging problem setting.

Full paper#