
TL;DR

Attention mechanisms have dominated sequence-to-sequence modeling for several years. Recent state-space models (SSMs) offer a compelling alternative, with competitive performance and scalability advantages. Standard SSMs, however, are limited on tasks that require input-dependent data selection, such as content-based reasoning in text and genetics, and understanding these limitations remains a key challenge. This paper investigates that challenge and seeks to improve the design and performance of such models.

This research addresses the limitations of SSMs by providing a theoretical framework for analyzing generalized selective SSMs. Using tools from rough path theory, the authors fully characterize the expressive power of these models and identify the input-dependent gating mechanism as the design choice responsible for their superior performance. The findings explain the success of recent selective SSMs and provide a foundation for developing future SSM variants, pointing to cross-channel interactions as a key area for improvement. The analysis connects theoretical understanding with practical application, guiding the design of more effective and efficient deep learning models for sequential data.
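To make the idea of input-dependent gating concrete, here is a minimal NumPy sketch contrasting a recurrence with a fixed transition against one whose transition depends on the current input. This is an illustrative toy, not the paper's model or Mamba's actual parameterization: the function names, the sigmoid gate, and all parameter values are assumptions chosen for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fixed_recurrence(x, a, b):
    """h_t = a * h_{t-1} + b * x_t, where the transition `a` ignores the input."""
    h = np.zeros_like(b, dtype=float)
    states = []
    for x_t in x:
        h = a * h + b * x_t
        states.append(h)
    return np.stack(states)

def gated_recurrence(x, w, b):
    """h_t = g(x_t) * h_{t-1} + b * x_t, with a hypothetical gate g(x_t) = sigmoid(w * x_t)."""
    h = np.zeros_like(b, dtype=float)
    states = []
    for x_t in x:
        gate = sigmoid(w * x_t)  # input-dependent: decides how much past state to keep
        h = gate * h + b * x_t
        states.append(h)
    return np.stack(states)

# Toy scalar input sequence and a 2-dimensional hidden state (all values are made up).
x = np.array([1.0, -0.5, 2.0, 0.3])
a = np.array([0.9, 0.5])
w = np.array([1.0, -2.0])
b = np.array([1.0, 0.5])

# The fixed recurrence is linear in its input; the gated one is not.
print(np.allclose(fixed_recurrence(2 * x, a, b), 2 * fixed_recurrence(x, a, b)))
print(np.allclose(gated_recurrence(2 * x, w, b), 2 * gated_recurrence(x, w, b)))
```

The first check holds because a fixed transition makes the state a linear function of the input sequence. The second fails because the gate changes with the input, so the state can depend on products of inputs from different time steps.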

Key Takeaways

Why does it matter?

This paper matters for researchers working with sequential data because it provides a theoretical framework for understanding and improving the expressive power of state-space models (SSMs), an important architecture in deep learning. It informs the design of future SSM variants by highlighting the importance of selectivity mechanisms and identifying the architectural choices that affect expressivity. The work connects theory and practice, with implications for both model design and performance.

Visual Insights

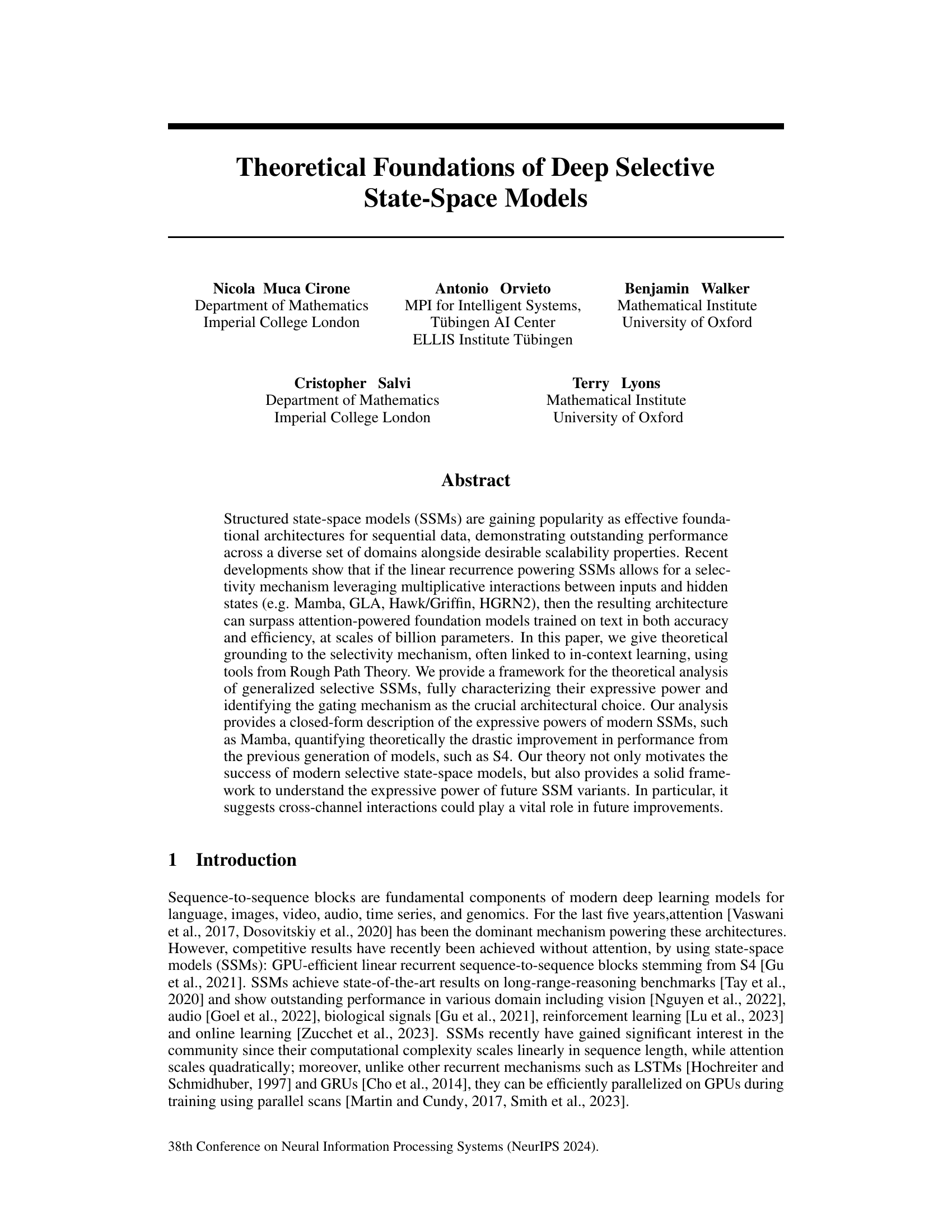

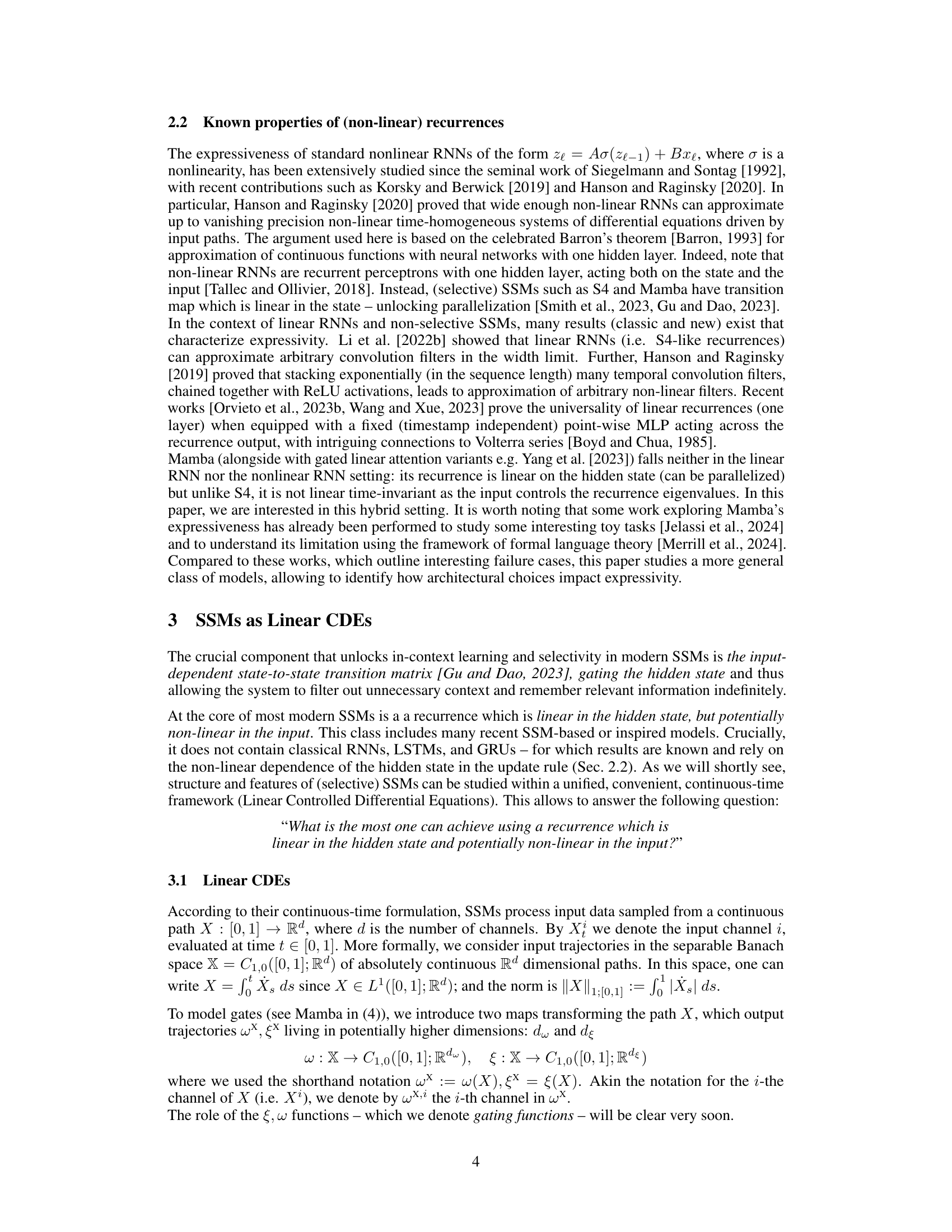

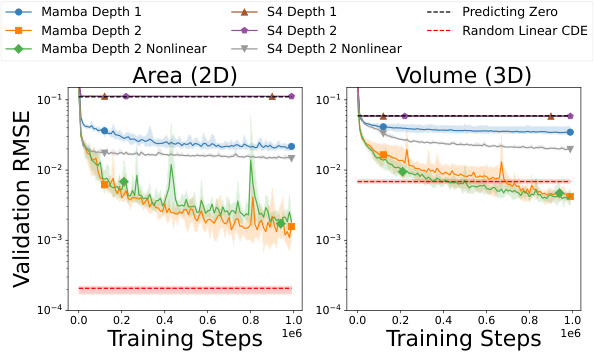

This figure compares three models (Linear CDE, Mamba, and S4) on two anti-symmetric signature prediction tasks (Area and Volume). Each model is trained at different depths (1 layer, 2 layers, and 2 layers with a non-linearity), and training progress is shown as validation RMSE. Error bars represent the range across 5 separate runs. The figure illustrates the differences in performance and efficiency between these models.
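As a rough illustration of what an anti-symmetric signature target can look like, the sketch below computes the Lévy area of a piecewise-linear 2D path, which is the anti-symmetric part of the path's second signature level. Treating the "Area" task as this quantity is an assumption made for illustration; the summary does not specify the exact target, and the function name `levy_area` is hypothetical.

```python
import numpy as np

def levy_area(path):
    """Levy area of a piecewise-linear path given as an array of shape (T, 2).

    Computes 0.5 * sum_k [ (X_k - X_0)^1 * dX_k^2 - (X_k - X_0)^2 * dX_k^1 ],
    where dX_k is the k-th increment and X_k is the start of that segment.
    """
    path = np.asarray(path, dtype=float)
    increments = np.diff(path, axis=0)   # shape (T-1, 2)
    offsets = path[:-1] - path[0]        # segment start points relative to X_0
    return 0.5 * np.sum(
        offsets[:, 0] * increments[:, 1] - offsets[:, 1] * increments[:, 0]
    )

# A unit square traversed counter-clockwise encloses signed area +1.
square = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]
print(levy_area(square))        # 1.0
print(levy_area(square[::-1]))  # -1.0, reversing the path flips the sign
```

The sign flip under path reversal is what makes the quantity anti-symmetric: it depends on the order in which the two channels move, so a model cannot predict it from each channel in isolation.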

Full paper