↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Training next-generation AI models is hampered by the vast size of model outputs and the limitation of human labeling resources. This means that human feedback, critical for ensuring model alignment and safety, becomes time-consuming and expensive to acquire for models producing long-form outputs, such as long-form text and videos. This paper proposes addressing this issue using hierarchical reinforcement learning (HRL), a popular approach that leverages the hierarchical structure often inherent in complex AI model outputs to provide scalable oversight.

The paper focuses on two feedback settings: cardinal (numerical ratings) and ordinal (preference feedback). For the cardinal feedback setting, a novel algorithm, Hier-UCB-VI, is developed, which is proven to learn optimal policies with sub-linear regret. The efficiency comes from using an ‘apt’ sub-MDP reward function and strategic exploration of sub-MDPs. For the ordinal feedback setting, a hierarchical experimental design algorithm, Hier-REGIME, is proposed to efficiently collect high-level and low-level preference feedback. This addresses the difficulty of assessing model outputs at all levels. Both algorithms aim to manage the trade-off between human labeling efficiency and model learning performance, demonstrating the efficacy of hierarchical structures in enabling scalable oversight.

Key Takeaways#

Why does it matter?#

This paper is crucial because it tackles the challenge of scalable oversight in next-generation AI models, a critical issue in responsible AI development. By focusing on hierarchical reinforcement learning, it provides a novel framework for handling the complexity of large model outputs, which is directly applicable to current trends in large language models and other complex AI systems. The proposed methods offer valuable insights into efficient human-in-the-loop training strategies for advanced AI, opening avenues for future research in human-AI interaction, AI safety, and model alignment. This work also demonstrates the potential for efficiently utilizing human feedback in various AI systems. The sub-linear regret guarantees offered by the suggested algorithms are particularly significant for practical applications and address the challenges related to the cost of human labeling.

Visual Insights#

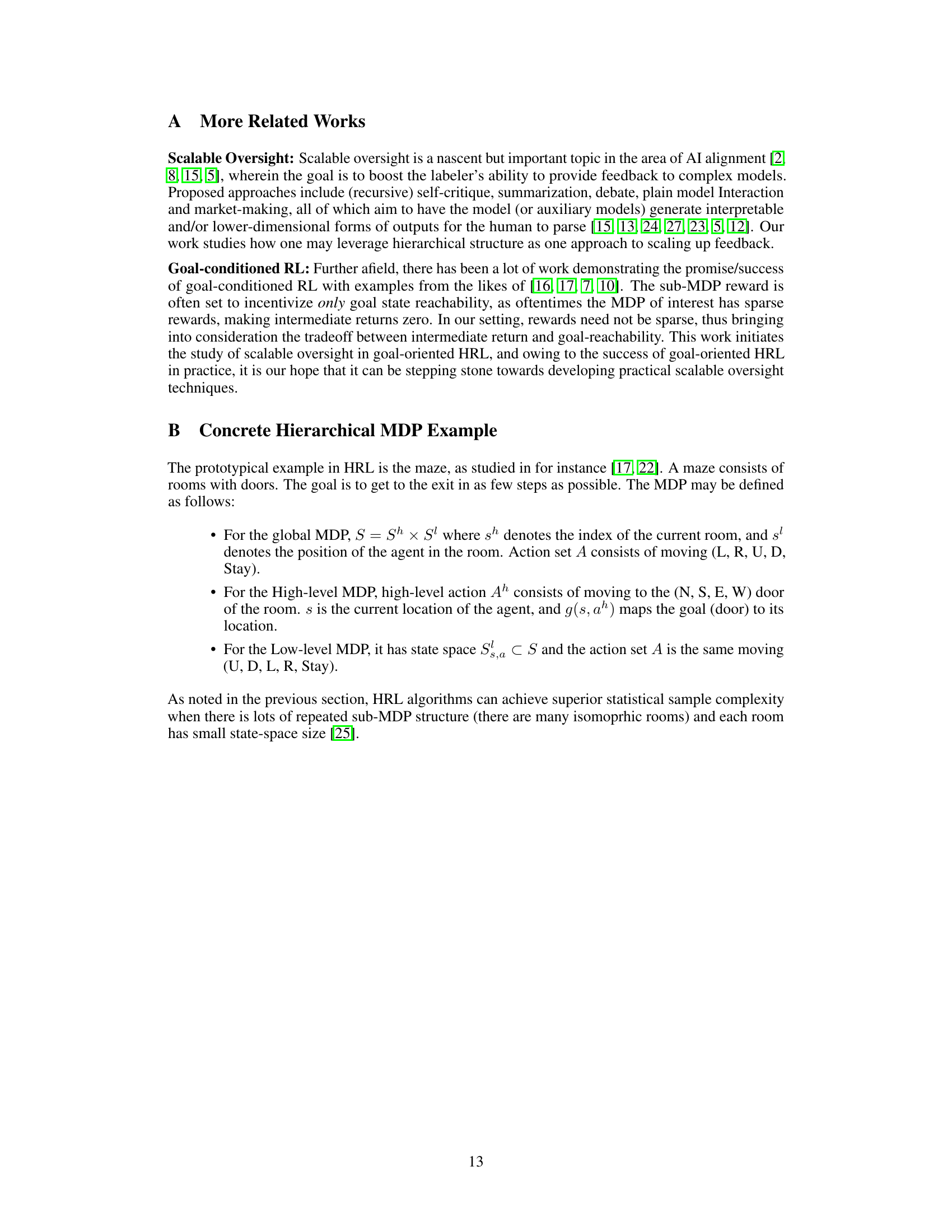

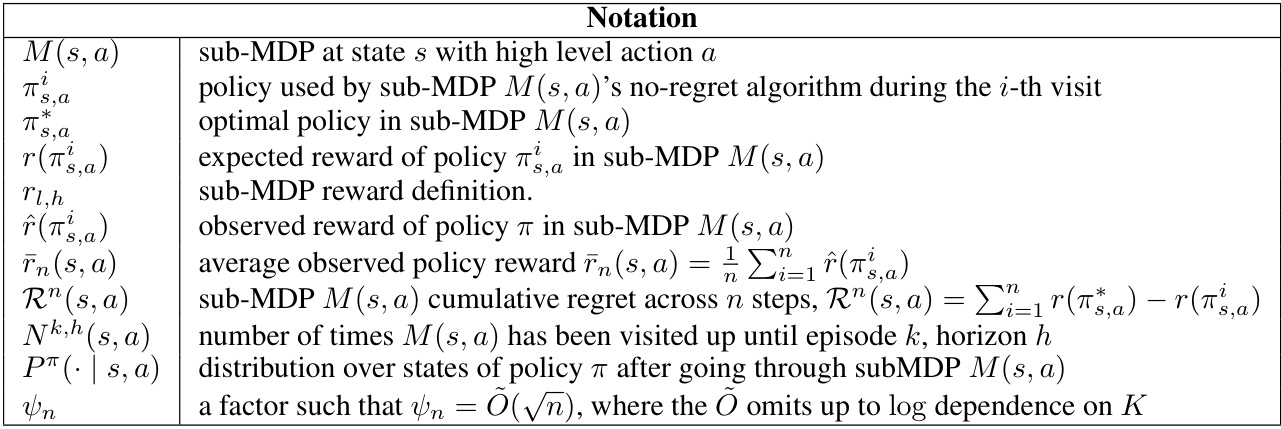

This table lists notations used in Section 3 of the paper, which focuses on learning from cardinal feedback. The notations cover various aspects of the Hierarchical UCB-VI algorithm, including sub-MDPs, policies, rewards, regret, and visit counts. Understanding these notations is crucial for following the mathematical derivations and understanding the algorithm’s workings.

Full paper#