↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Online strategic classification, where agents strategically manipulate their features to receive favorable classifications, poses a significant challenge. Existing complexity measures often fail to provide tight instance-optimal bounds, especially in settings with incomplete information (the learner observes only the manipulated features) and unknown manipulation graphs. This leads to suboptimal learning algorithms and limits our understanding of this crucial learning paradigm.

This research tackles these issues by introducing the Strategic Littlestone Dimension, a new complexity measure that captures the joint complexity of the hypothesis class and the manipulation graph. The authors demonstrate that this dimension characterizes instance-optimal mistake bounds for deterministic learning algorithms in the realizable setting. They also achieve improved regret in agnostic settings through a refined reduction and extend their results to scenarios with incomplete knowledge of the manipulation graph, providing a more comprehensive theoretical understanding of online strategic classification.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online machine learning and game theory. It provides instance-optimal regret bounds in online strategic classification, addressing a critical gap in the field. By introducing the Strategic Littlestone Dimension, it offers a new measure of complexity that accurately captures the difficulty of learning in strategic settings. This work also opens exciting avenues for future research, especially in tackling scenarios with incomplete information and unknown manipulation graphs.

Visual Insights#

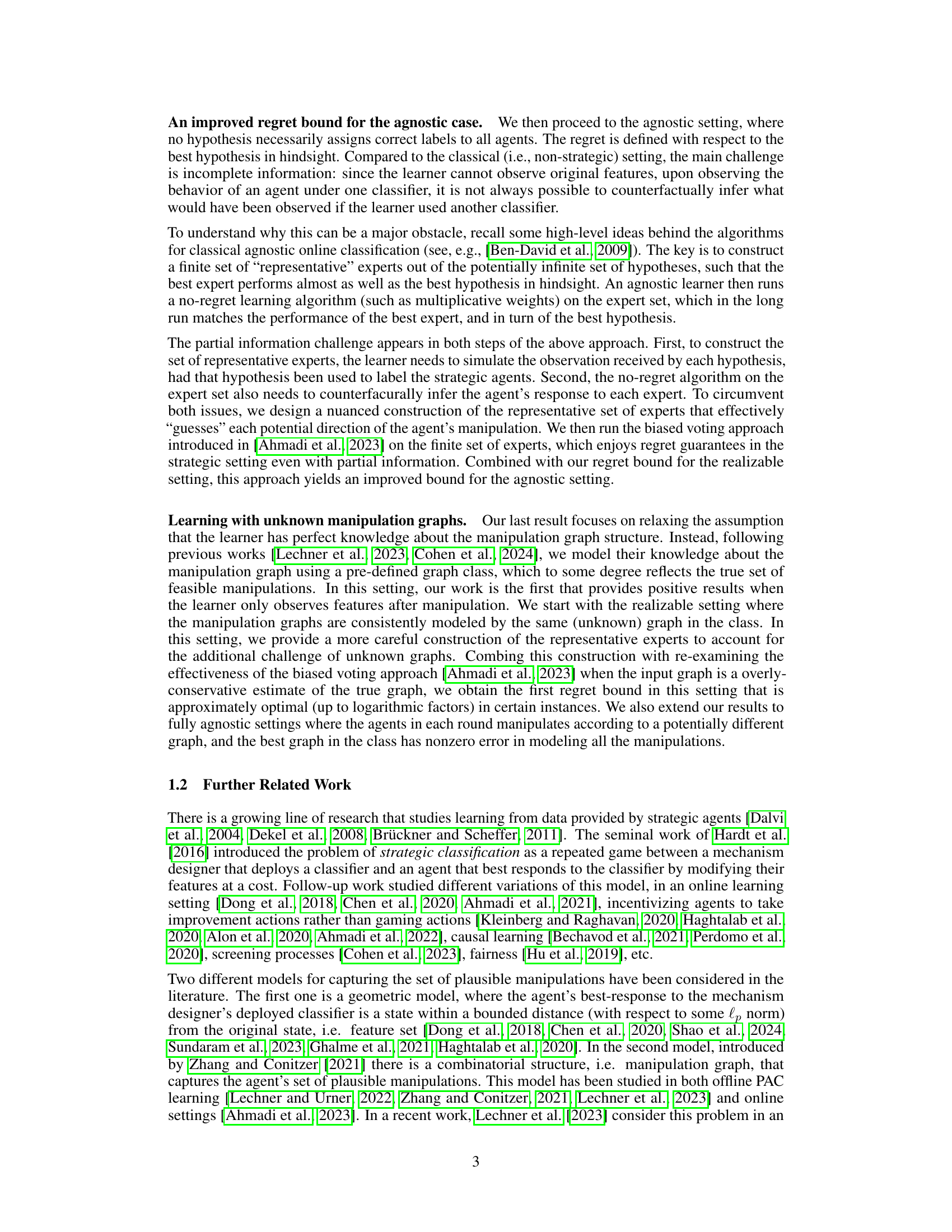

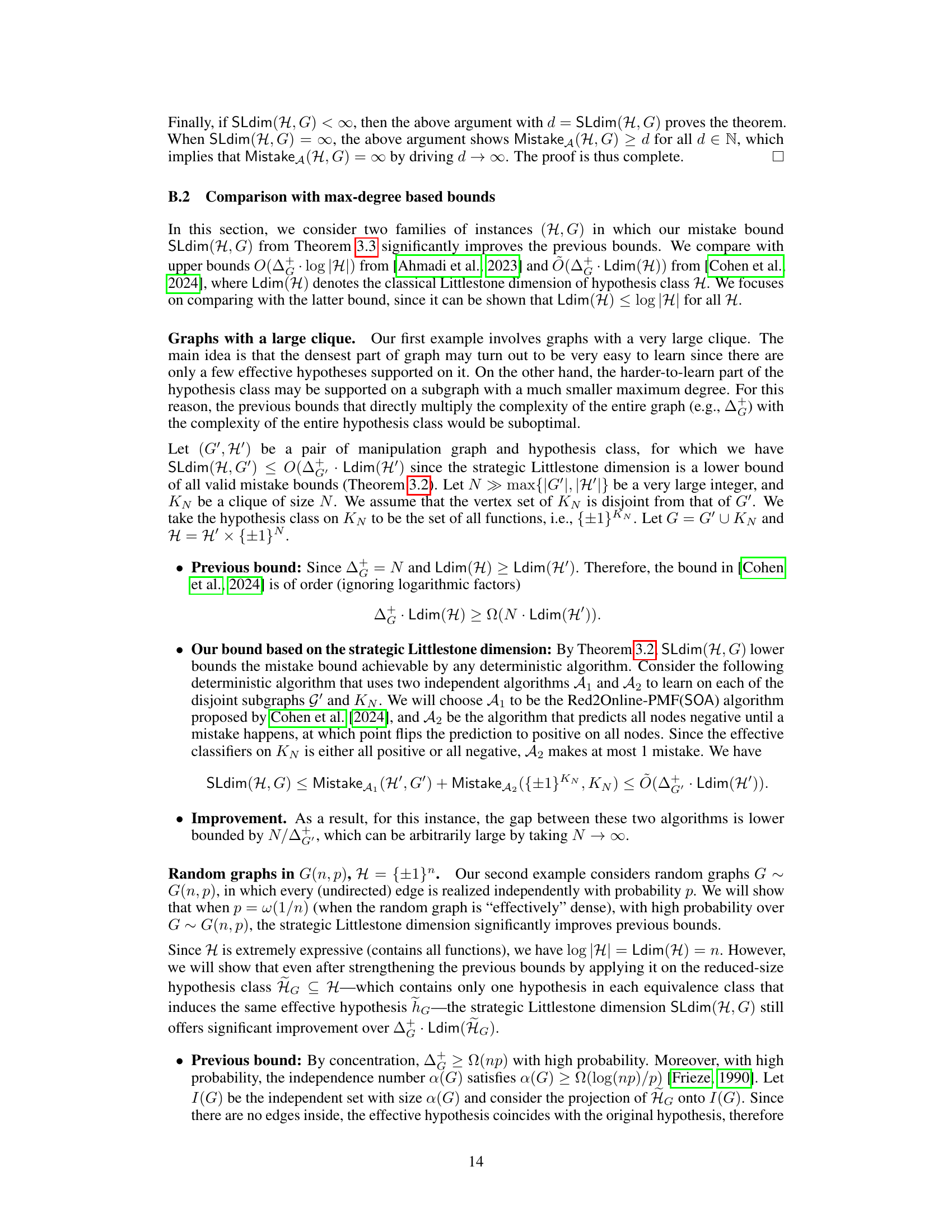

This figure illustrates a Strategic Littlestone Tree, a key concept in the paper. The tree models an adversary’s strategy to maximize the learner’s mistakes in an online strategic classification setting. Nodes represent feature vectors, and edges represent types of mistakes (false positives in blue, false negatives in red). The tree’s structure reflects the asymmetry of information available to the learner and adversary due to strategic manipulation.

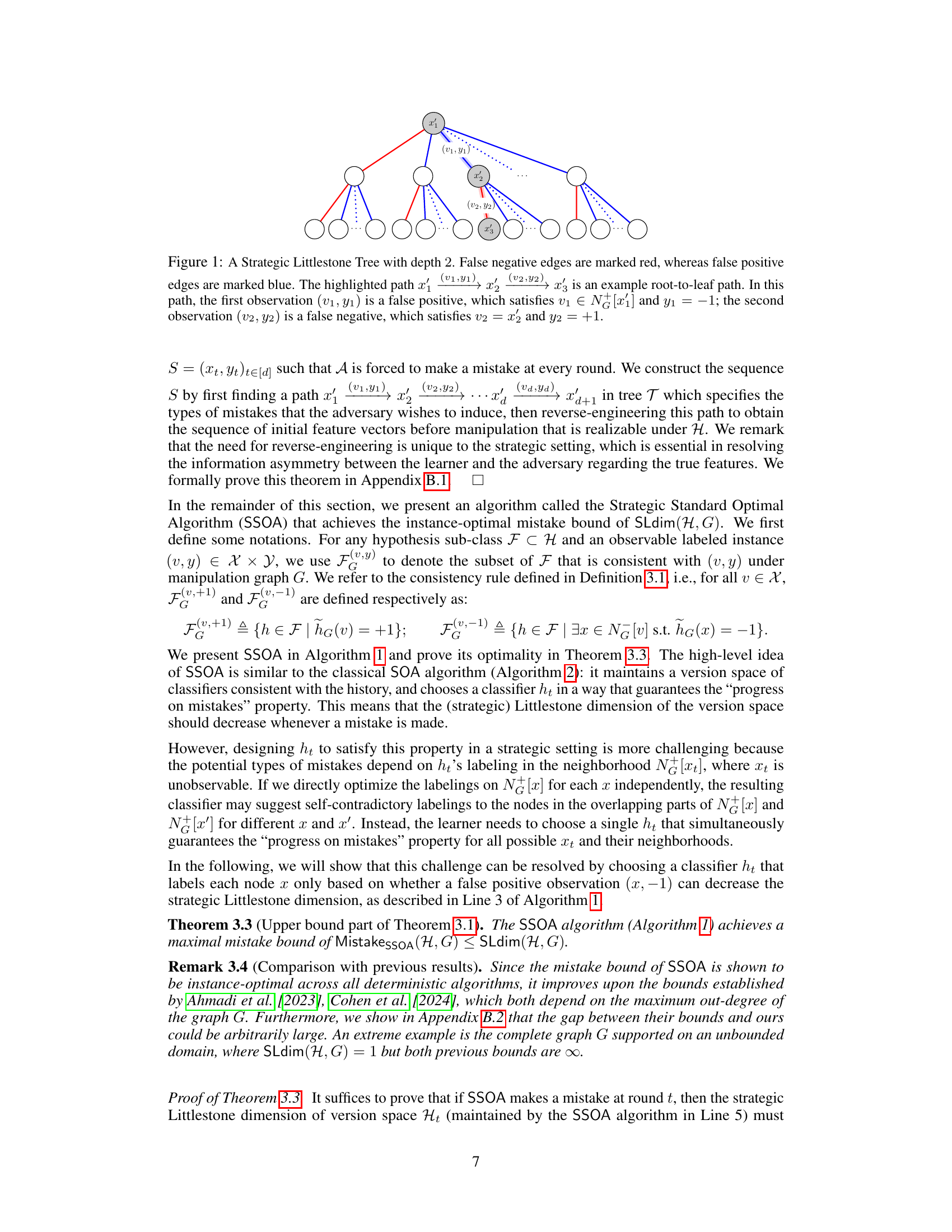

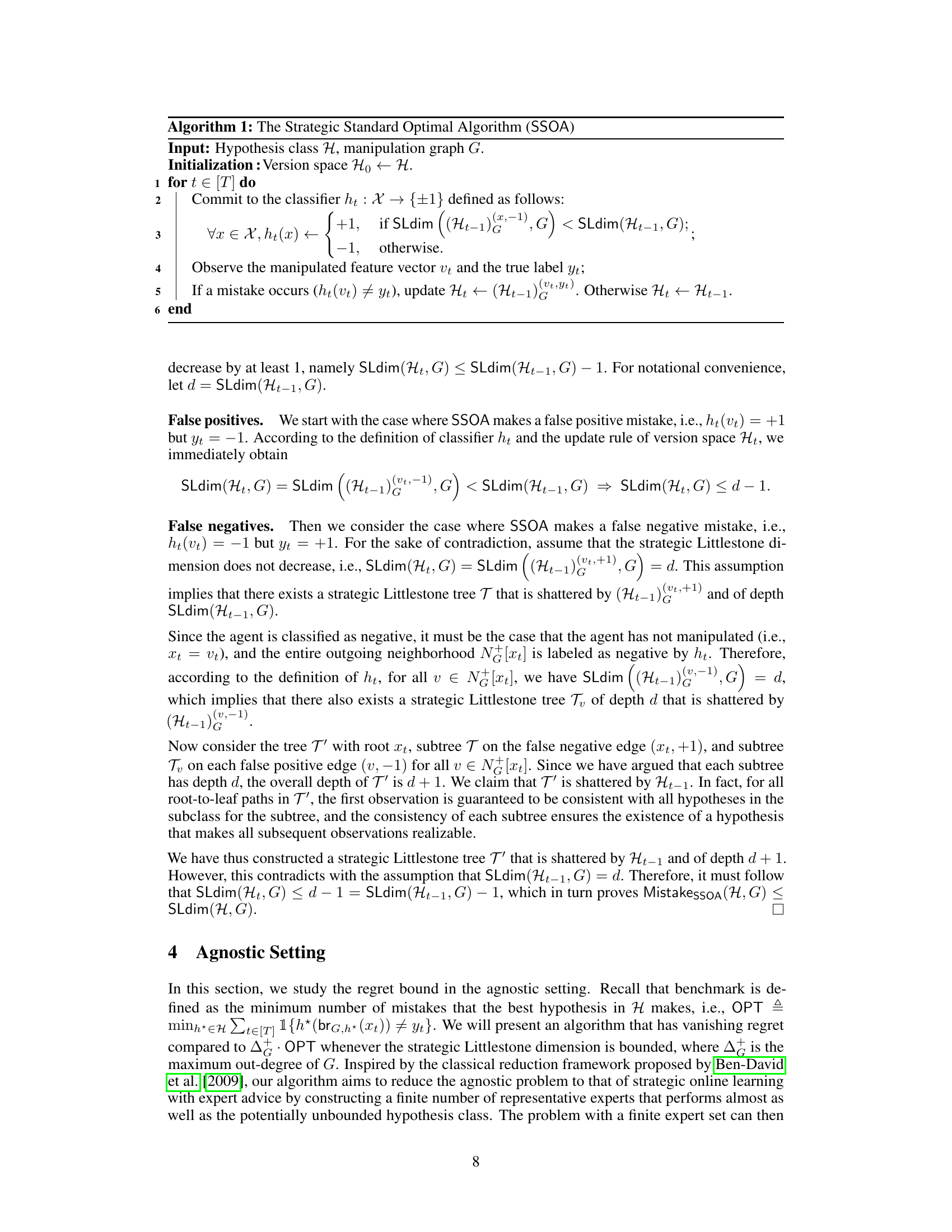

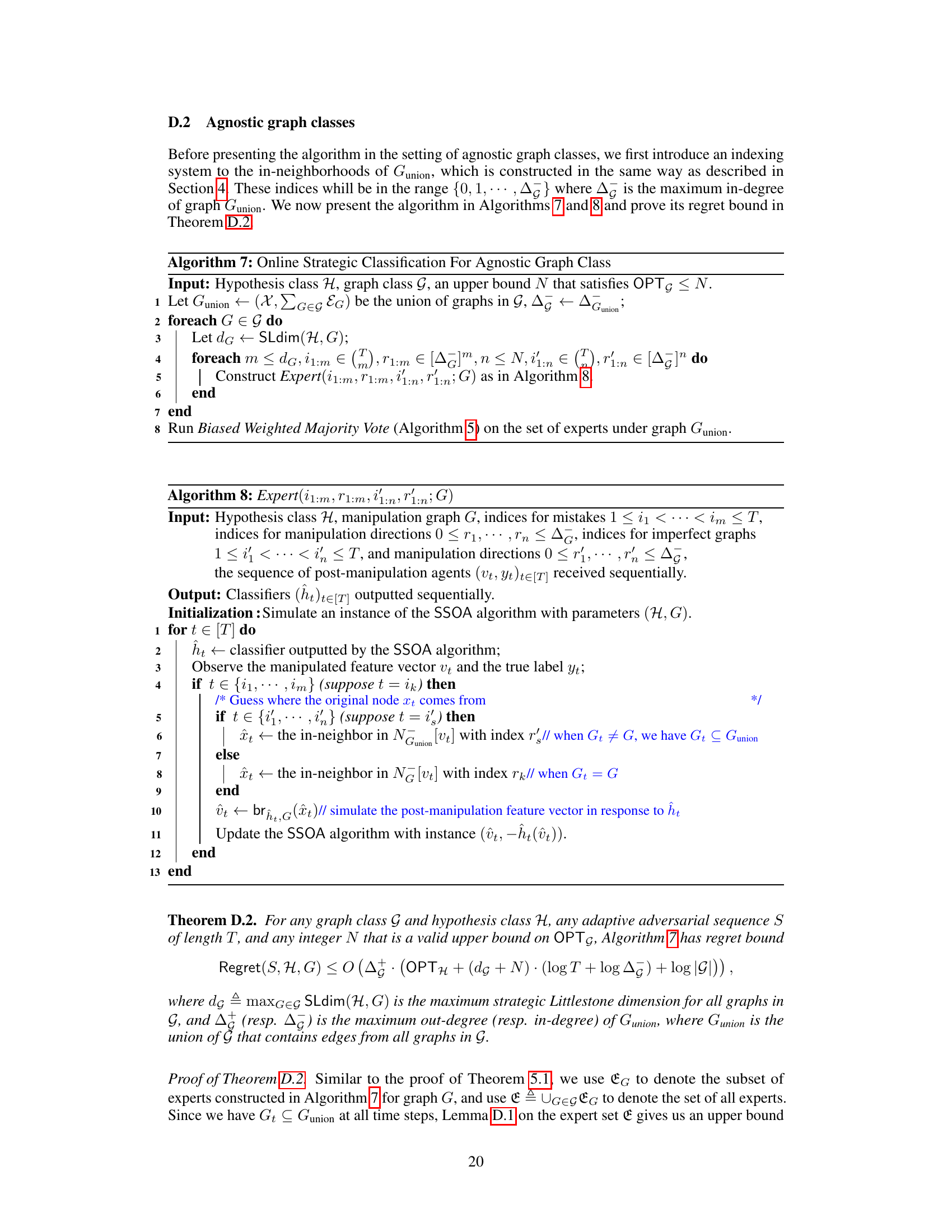

This algorithm is used in the classical online binary classification setting to achieve the minimal mistake bound. It maintains a version space of classifiers consistent with the observed data and selects a classifier that maximizes the Littlestone dimension of the version space. This ensures that the algorithm makes progress in reducing the size of the version space with each mistake.

Full paper#