TL;DR#

Robotic grasp detection struggles with incomplete 3D geometry information in cluttered environments; scanning from multiple viewpoints is time-consuming. Existing methods often treat grasp detection as secondary to 3D reconstruction or directly predict information gain, leading to suboptimal results and inefficiency.

This paper introduces ActiveNGF, an active grasp detection framework that incrementally models scene grasp distribution using a Neural Graspness Field. It addresses the issue of incomplete 3D information by incorporating a novel graspness inconsistency-guided policy for next-best-view selection. The proposed method significantly improves grasping performance and reduces time cost compared to previous methods, demonstrating superior trade-offs in real-world experiments. A neural graspness sampling method further enhances grasp pose detection accuracy.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel approach to active grasp detection, a crucial problem in robotics. It addresses the limitations of existing methods by efficiently modeling the scene’s grasp distribution and using an inconsistency-guided policy for next-best-view planning. This work improves the speed and accuracy of robotic grasping and opens new avenues for research in active perception and robotic manipulation.

Visual Insights#

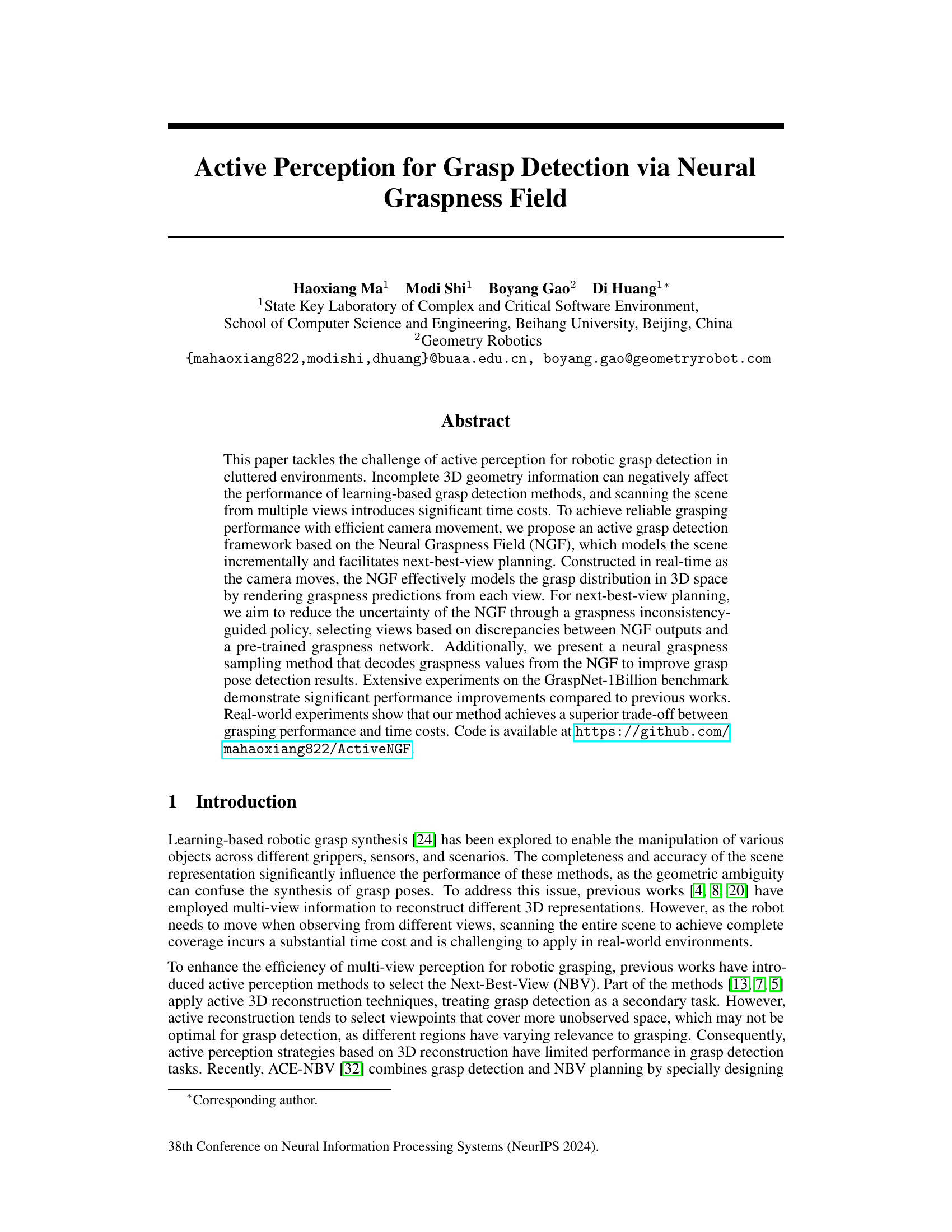

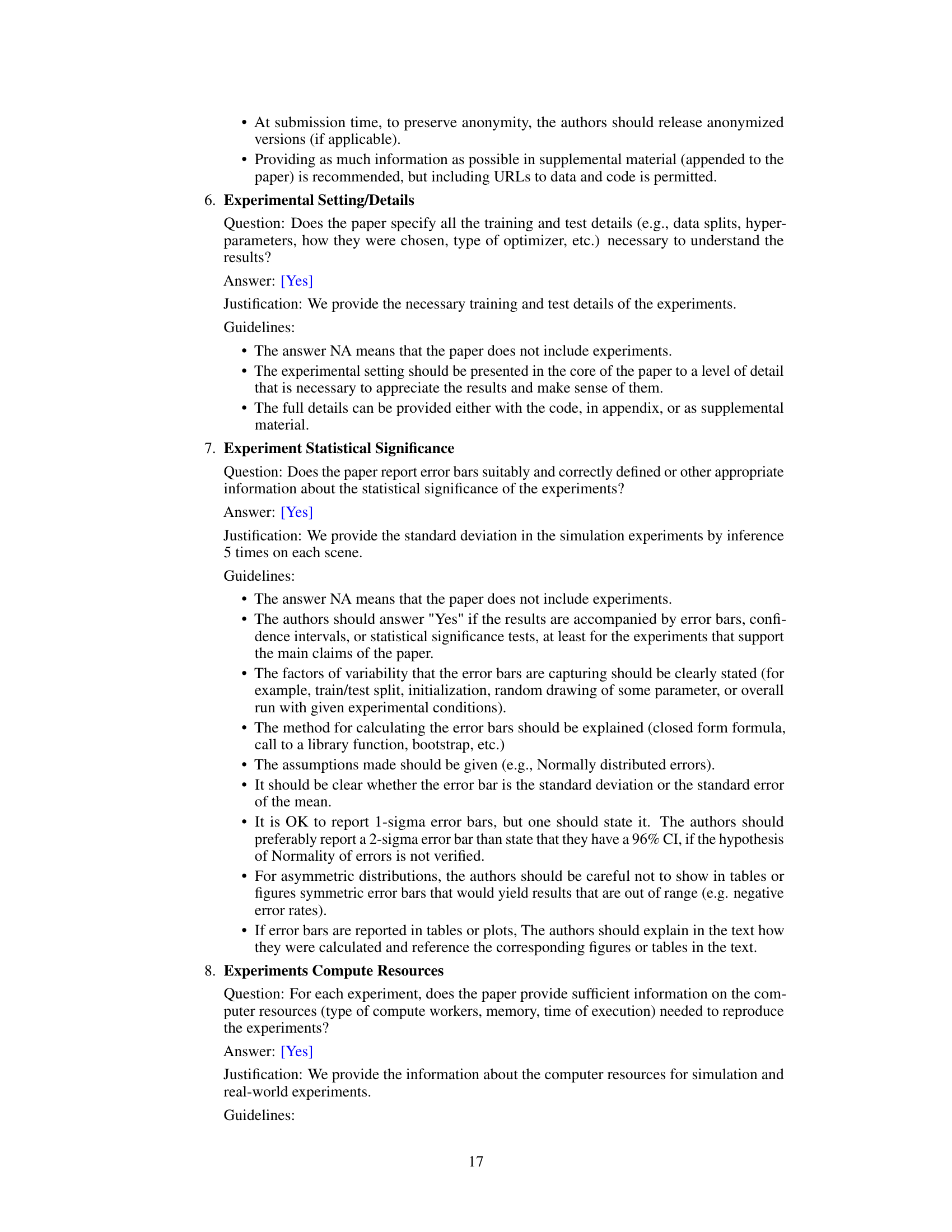

🔼 This figure illustrates the overall workflow of the active grasp detection system. It starts with input RGB and depth data, which are processed through the Neural Graspness Field (NGF) to incrementally build a 3D representation of grasp possibilities. A Next-Best-View (NBV) planner then determines the optimal camera position to reduce uncertainty and improve grasp detection. The system iteratively refines the NGF, samples grasps using neural graspness sampling, and eventually executes a grasp if sufficient high-quality grasps are found or the maximum number of iterations is reached.

read the caption

Figure 1: Overview of the active grasp detection system. The RGB, depth and predicted graspness from a new view are mapped to the Neural Graspness Field (NGF) by rendering loss. After each mapping step, the scene geometry is exported from the neural representation using the marching-cubes algorithm [19] and the candidate positions for grasp synthesis are sampled by neural graspness sampling. If the maximum perception step is reached or a specific result condition is satisfied, e.g., a sufficient number of high-quality grasps are detected, the robot arm is employed to execute the detected grasps. Otherwise, the Next-Best-View (NBV) planner is employed to sample candidate views and select the view with the largest information gain for robot movement.

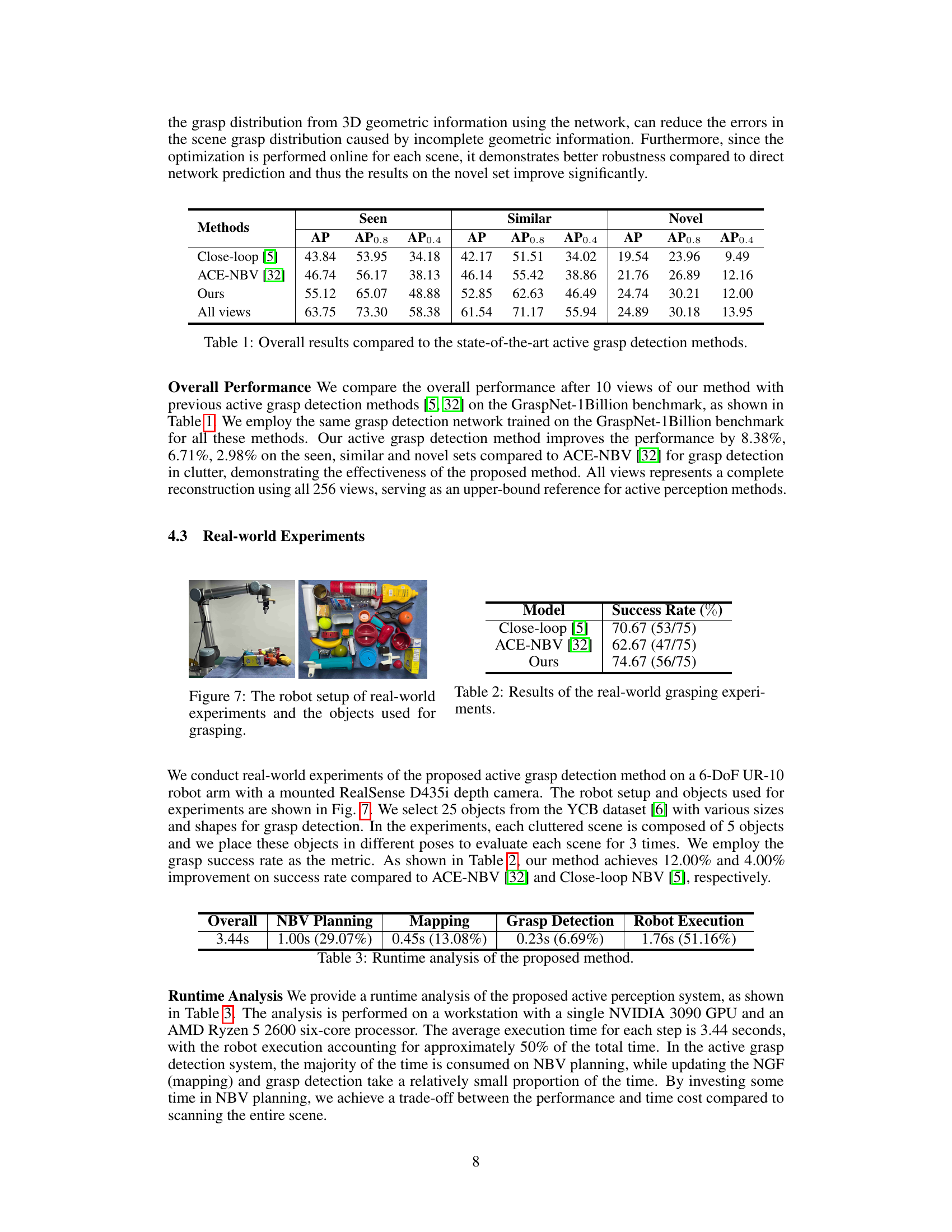

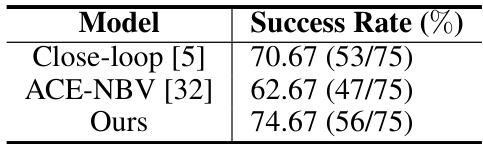

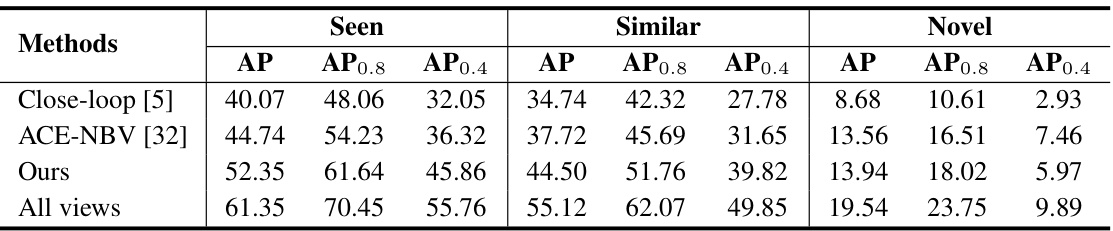

🔼 This table presents a comparison of the proposed active grasp detection method’s performance against existing state-of-the-art methods on the GraspNet-1Billion benchmark. The comparison is done across three subsets of the benchmark: ‘Seen’, ‘Similar’, and ‘Novel’, reflecting the object categories’ visual similarity to the training data. For each subset and overall, the Average Precision (AP) is reported, along with AP at 0.8 and 0.4 success rates (AP0.8 and AP0.4), offering a comprehensive view of the methods’ performance across different grasping difficulty levels. The ‘All views’ row provides an upper bound, representing performance achievable with complete scene information (all 256 views).

read the caption

Table 1: Overall results compared to the state-of-the-art active grasp detection methods.

In-depth insights#

Active Grasp Synthesis#

Active grasp synthesis is a crucial area in robotics research focusing on efficiently and effectively selecting optimal grasps in dynamic environments. Unlike passive approaches that rely on pre-computed grasp maps or exhaustive search, active grasp synthesis leverages sensor feedback and real-time planning to determine the best viewpoints and grasping poses. This necessitates careful consideration of several factors, including uncertainty management, as sensor readings might be noisy or incomplete. Efficient motion planning algorithms are vital to avoid redundant camera movements while ensuring sufficient information gathering for reliable grasp selection. Integrating learning-based methods offers the potential to adapt to diverse object shapes and complex scenes, improving robustness. However, the computational complexity and real-time constraints pose significant challenges. Future research directions could explore novel sensor modalities and enhanced representations to improve accuracy and efficiency. The focus should be on developing systems that can adapt to dynamic situations and exhibit robust performance in cluttered, real-world scenarios.

Neural Graspness Field#

The concept of a “Neural Graspness Field” presents a novel approach to robotic grasp detection by leveraging neural networks to model the probability of successful grasps within a 3D scene. This method is particularly valuable because it addresses limitations of traditional methods, such as relying on complete 3D geometry which is often unavailable in cluttered real-world environments. The neural network learns to represent this grasp distribution incrementally, as it receives information from various camera viewpoints. This incremental learning allows for efficient active perception, where the robot strategically moves its camera to acquire the most informative views for improving the grasp prediction accuracy. The system intelligently selects the next best view based on the current uncertainty of the graspness field, effectively reducing uncertainty and improving grasp success rate without exhaustive camera scanning. The use of a neural graspness sampling method enhances grasp pose detection by generating more accurate grasp candidates. Overall, this approach offers a significant advantage by combining efficient camera planning with accurate grasp prediction, leading to more robust and reliable grasp detection in complex, real-world scenarios.

NBV Planning Strategy#

The NBV (Next-Best-View) planning strategy is crucial for efficient robotic grasp detection in cluttered environments. Active perception, selecting the most informative camera viewpoints, is key to avoiding exhaustive scene scanning. This paper proposes a graspness inconsistency-guided policy, aiming to reduce uncertainty in the Neural Graspness Field (NGF) by prioritizing views that significantly reduce discrepancies between predicted and actual grasp distributions. The NGF incrementally models the scene’s grasp distribution in 3D space using multi-view data. By focusing on reducing uncertainty, the method improves grasp detection efficiency and effectiveness without needing complete scene reconstruction, offering a superior trade-off between performance and time cost compared to previous approaches. The strategy incorporates a neural graspness sampling method to further refine grasp pose detection results. This approach elegantly tackles the challenge of active perception for reliable and efficient robotic grasping.

Graspness Sampling#

The concept of ‘Graspness Sampling’ in robotic grasp detection is crucial for effectively translating the learned grasp distribution into actionable grasp poses. Accurate grasp sampling directly impacts the success rate of grasping, as poorly chosen grasp candidates can lead to failures. The method’s effectiveness hinges on how well it handles incomplete or noisy 3D geometry information, a common challenge in real-world scenarios. A robust sampling strategy must be able to generate diverse and feasible grasp candidates that are consistent with the underlying graspness field. This likely involves carefully considering the trade-off between sampling density and computational cost, possibly employing techniques like furthest point sampling or other efficient sampling methods to select representative grasp locations. Furthermore, the integration with active perception is vital; the sampler must be able to efficiently provide appropriate grasp candidates given partial scene observations from limited viewpoints. The ideal approach would seamlessly integrate sampling with online neural field refinement, ensuring grasp candidates are continuously updated to reflect the progressively more complete scene understanding. This way, the sampling process proactively adapts to the information gain provided by each new viewpoint, leading to a more efficient and effective grasping strategy.

Real-world Evaluation#

A robust real-world evaluation is crucial for validating the claims of any robotic grasp detection system. It assesses the system’s performance beyond simulated environments, considering real-world complexities like varying lighting, object arrangements, and sensor noise. A successful real-world evaluation would involve testing the system on a diverse range of objects, setups, and scenarios, and rigorously documenting the results. This involves specifying the types of objects, their arrangement, the robotic platform, and the metrics used for evaluation. For example, the success rate, time taken for grasping, and the number of grasp attempts are all important factors that should be recorded in detail. Furthermore, the evaluation needs to address the system’s ability to generalize to unseen objects and environments, as this is vital for practical applications. Finally, a good real-world evaluation should also highlight the system’s limitations and potential failure modes and provide insight into areas where future improvements can be made. A strong emphasis on transparency is crucial; details about the experimental setup, methodology, and complete results must be presented to facilitate reproducibility and foster trust in the research.

More visual insights#

More on figures

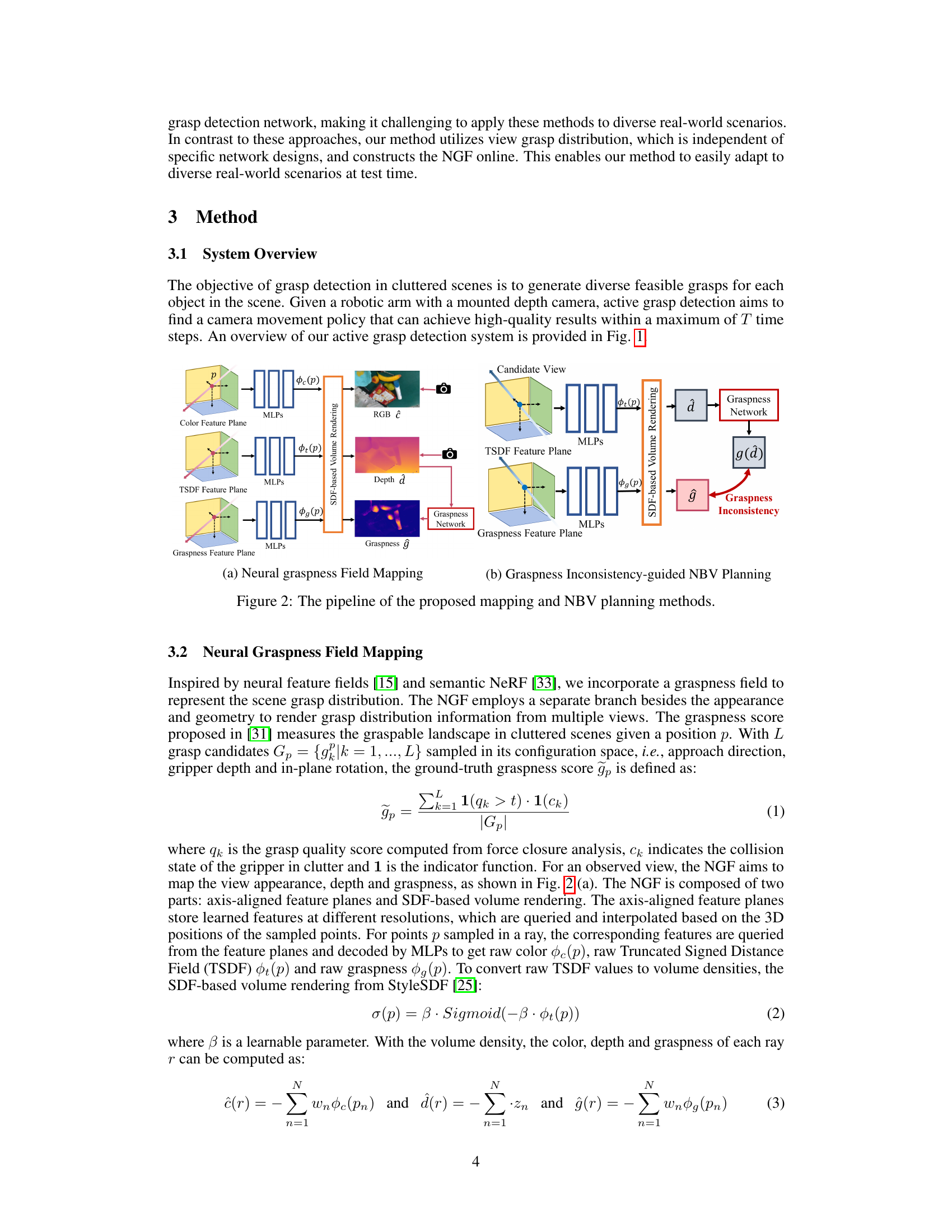

🔼 This figure shows the pipeline of the proposed neural graspness field mapping and next-best-view (NBV) planning methods. (a) Neural Graspness Field Mapping illustrates how the RGB image, depth image and graspness prediction from a graspness network are combined using SDF-based volume rendering to create a Neural Graspness Field (NGF). (b) Graspness Inconsistency-guided NBV Planning shows how the NGF is used to determine the next view by minimizing the inconsistency between the predicted graspness (from the NGF) and the pseudo-graspness (predicted from a depth image via the graspness network) in the candidate views. This inconsistency guides the selection of the next-best-view, aiming to efficiently and incrementally refine the grasp distribution in the NGF.

read the caption

Figure 2: The pipeline of the proposed mapping and NBV planning methods.

🔼 This figure visualizes the performance of the proposed graspness inconsistency-guided next-best-view (NBV) planning strategy. Subfigure (a) shows the pseudo-graspness error and rendered graspness error over multiple steps, indicating how the errors decrease as more views are incorporated. Subfigure (b) displays the pseudo-graspness, rendered graspness, and information gain for different views during the NBV planning process. The yellow boxes highlight areas with higher grasp probability, demonstrating how the NGF improves grasp prediction accuracy with additional views.

read the caption

Figure 3: (a) The pseudo-graspness error Eg(ĝ) and rendered graspness error Eĝ of initial steps. (b) Visualization of the pseudo-graspness, rendered graspness and the corresponding information gain of different views.

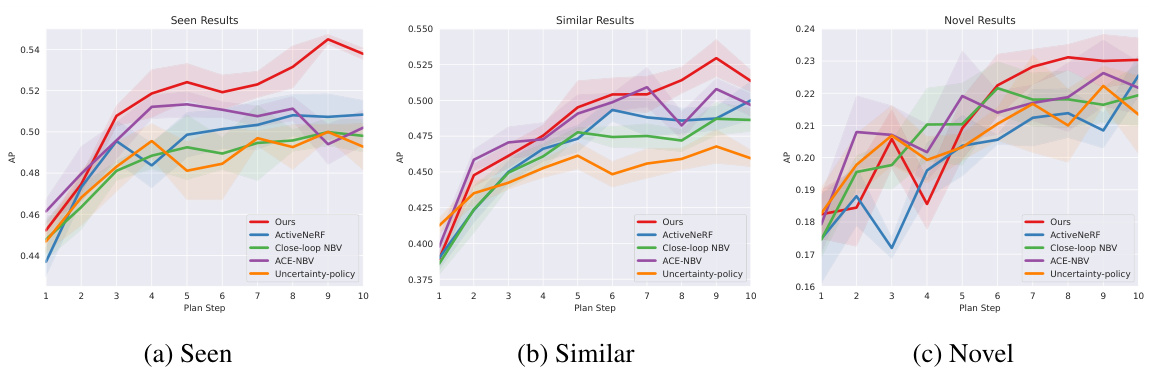

🔼 This figure compares the performance of different Next-Best-View (NBV) policies on the proposed Neural Graspness Field (NGF) for robotic grasp detection. The policies tested are: Ours (the proposed method), ActiveNeRF, Close-loop NBV, ACE-NBV, and Uncertainty-policy. The comparison is made across three object categories (Seen, Similar, Novel) using a variety of performance metrics. The x-axis represents the plan step (number of camera movements), and the y-axis represents the Average Precision (AP). The shaded areas represent confidence intervals. The results show that the proposed method outperforms other methods on all three object categories and across multiple performance measures, particularly in later planning steps.

read the caption

Figure 4: Comparison on different NBV policies based on the proposed NGF.

🔼 This figure compares the grasp detection results obtained using two different methods: one using graspness predicted from a 3D geometric representation, and the other using graspness sampled from a Neural Graspness Field (NGF). The results are shown separately for seen, similar, and novel objects across different planning steps (number of views used for active perception). The shaded area represents the standard deviation across multiple trials. The figure demonstrates the superior performance of the NGF-based sampling method, especially for novel objects.

read the caption

Figure 5: The comparison of grasp detection result generated with the graspness predicted from 3D geometry and sampled from NGF.

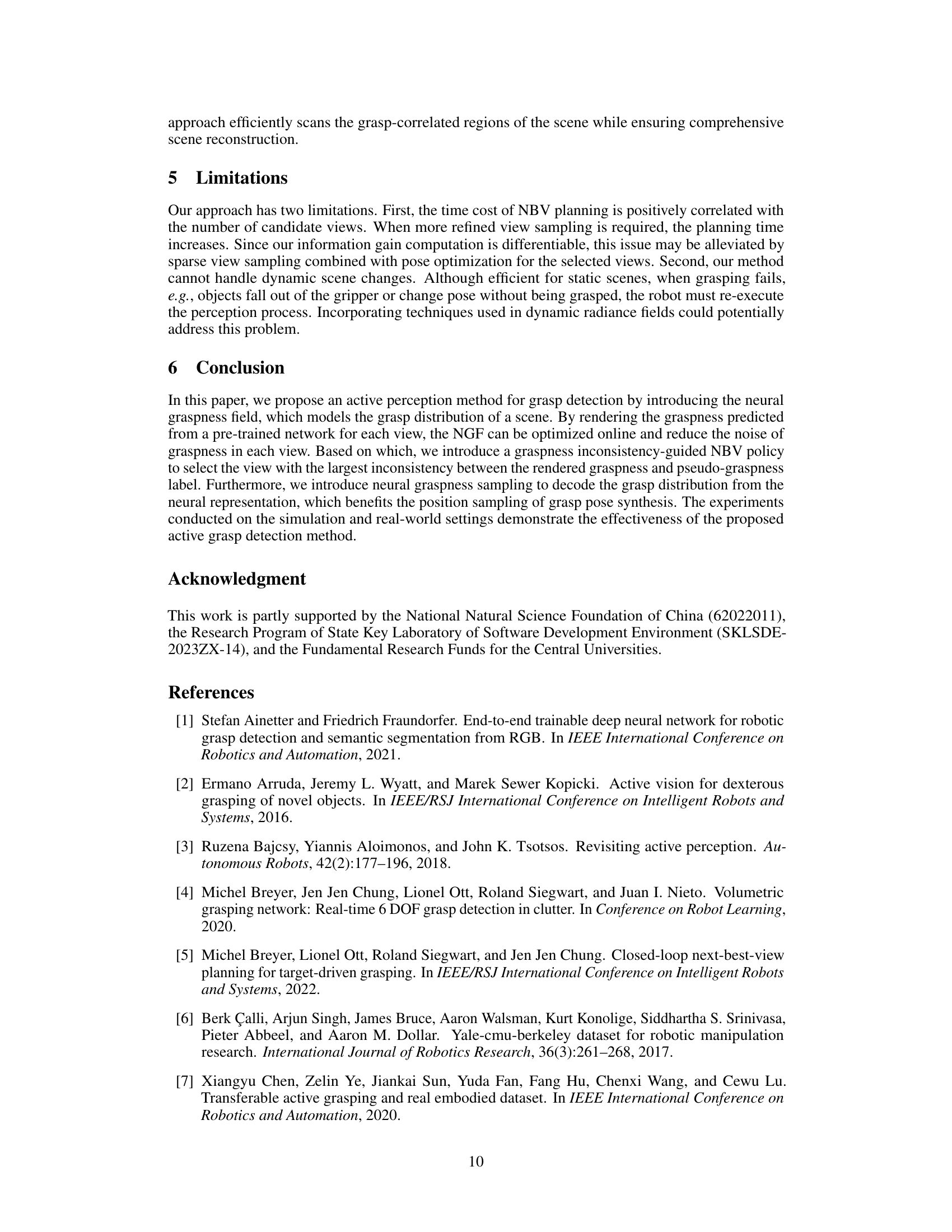

🔼 This figure shows the experimental setup used for real-world grasping experiments. The left image displays a UR-10 robotic arm equipped with a RealSense D435i depth camera, positioned above a table with various objects. The right image shows a collection of the different objects used in the experiments, laid out on a flat surface. These items represent a variety of shapes, sizes, and textures to challenge the grasping system.

read the caption

Figure 7: The robot setup of real-world experiments and the objects used for grasping.

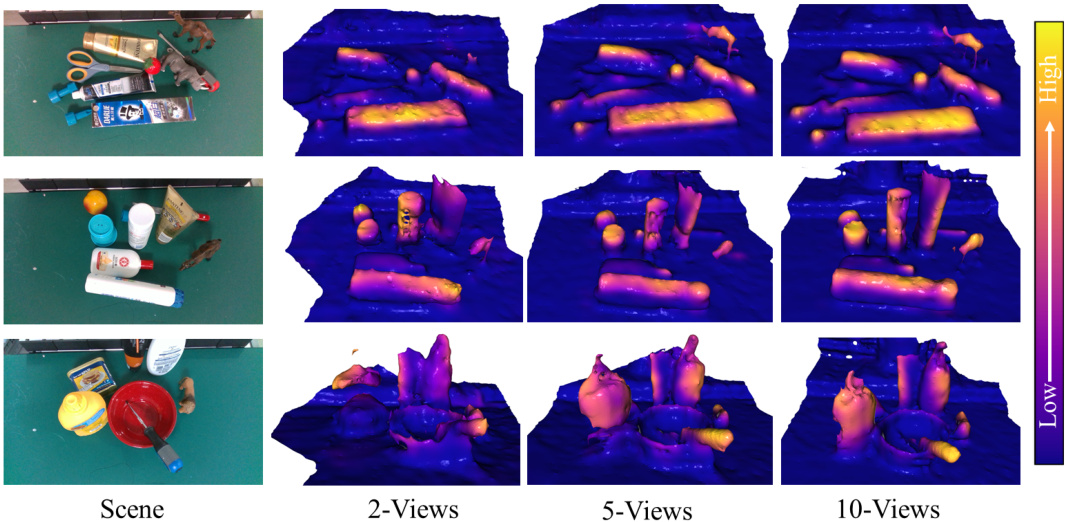

🔼 This figure visualizes the Neural Graspness Field (NGF) with different numbers of perception views (2, 5, and 10 views). The yellow color in the 3D reconstruction represents a high grasp probability. The figure demonstrates that the NGF can reconstruct the scene geometry and model the graspness distribution. With more views added, the details of the geometry and grasp distribution are incrementally refined, showcasing the effectiveness of the proposed active perception method.

read the caption

Figure 8: Visualization of the geometry and graspness extracted from NGF in different planning steps.

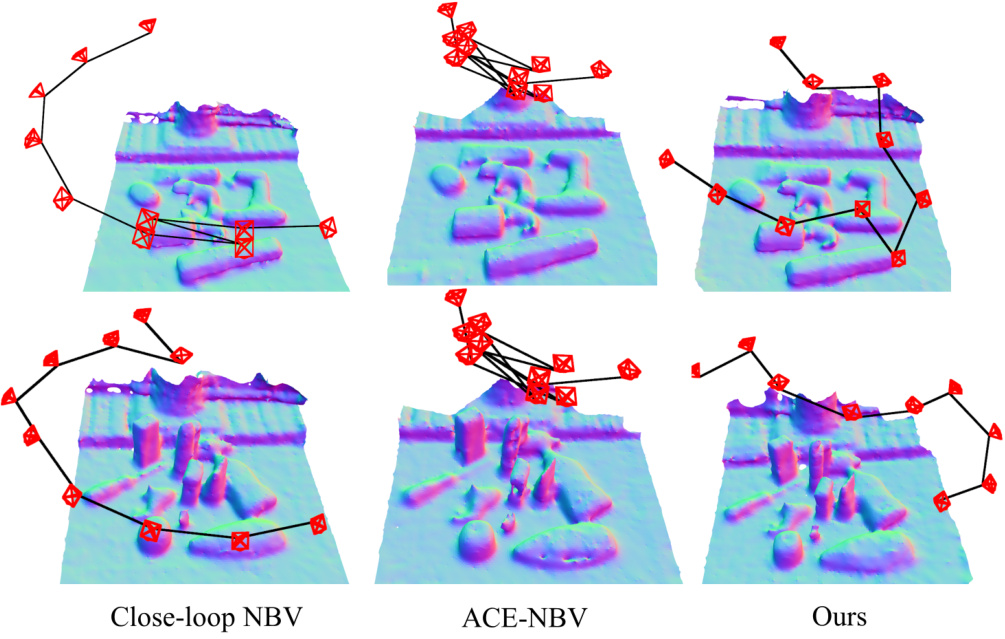

🔼 This figure visualizes the camera trajectories generated by three different active grasp detection methods: Close-loop NBV, ACE-NBV, and the proposed method. Each method’s trajectory is shown on a 3D reconstruction of the scene, illustrating how each strategy plans camera movements to gather information for grasp detection. Close-loop NBV focuses on maximizing scene coverage, while ACE-NBV prioritizes regions with high grasp affordance. The proposed method aims for a balance between comprehensive scene coverage and focusing on grasp-relevant areas, as seen in the more efficient trajectories.

read the caption

Figure 9: Visualization of the camera trajectories generated from different active grasp detection methods.

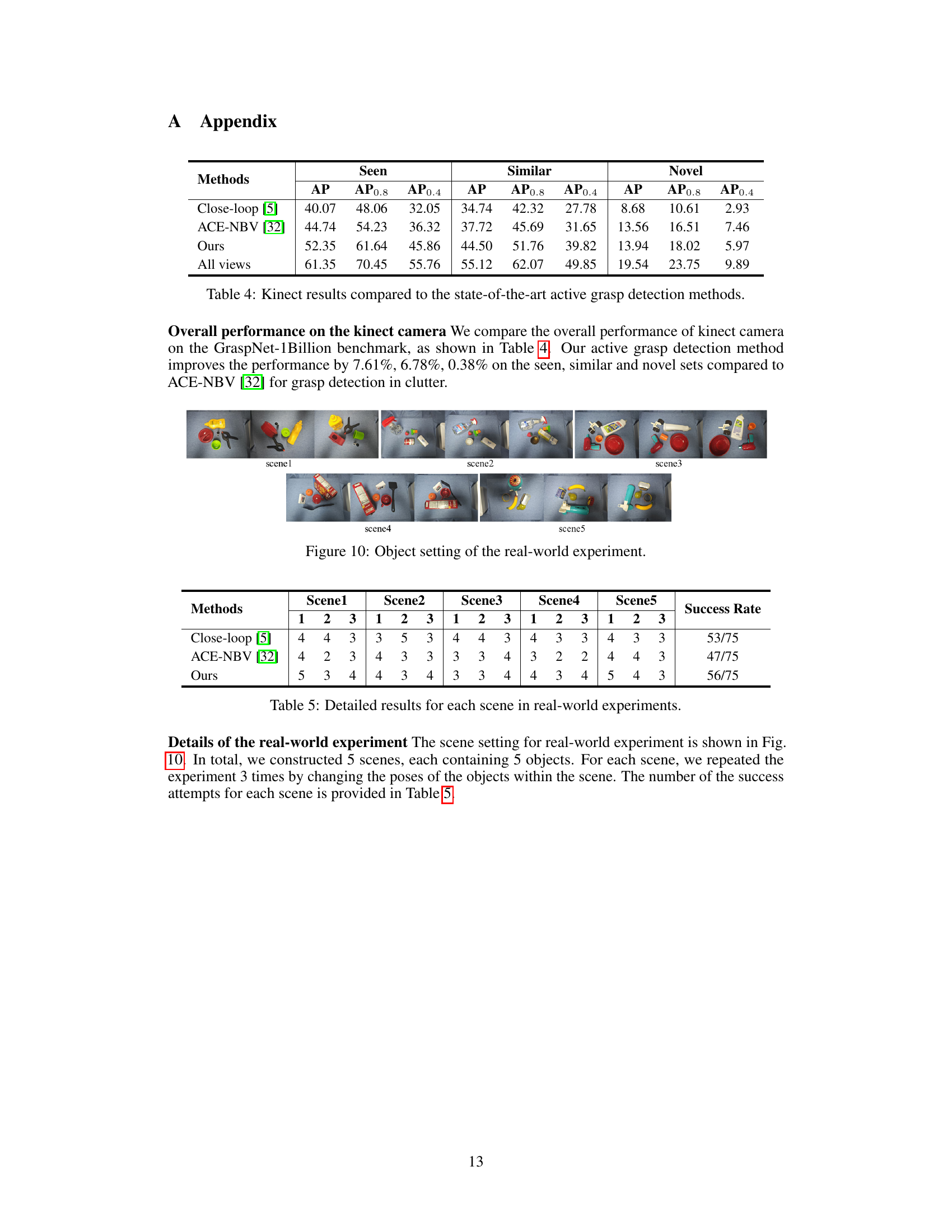

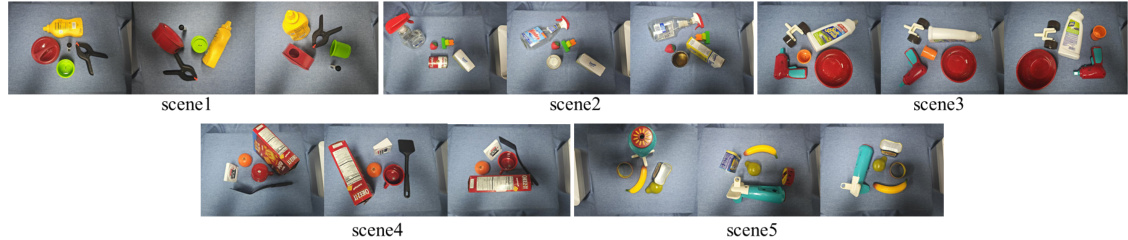

🔼 This figure shows the setup of real-world experiments. There are five scenes in total, each with five objects placed in different poses. The figure visualizes the arrangement of objects in each scene to demonstrate the complexity of the real-world grasping tasks.

read the caption

Figure 10: Object setting of the real-world experiment.

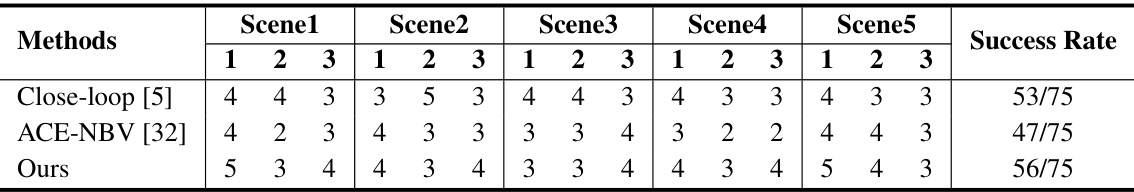

More on tables

🔼 This table presents the success rates of three different methods (Close-loop [5], ACE-NBV [32], and Ours) in real-world grasping experiments. The success rate is calculated as the percentage of successful grasps out of the total number of attempts (56/75 for the proposed method). It shows the performance comparison of the proposed method against two state-of-the-art baselines in a real-world setting, highlighting the improvement achieved by the proposed method.

read the caption

Table 2: Results of the real-world grasping experiments.

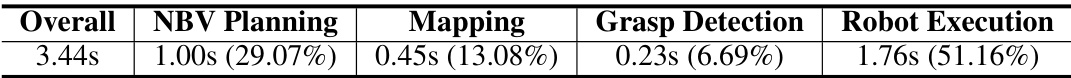

🔼 This table shows a breakdown of the time taken for each step in the active grasp detection system. The total time is 3.44 seconds, with the robot execution taking the longest (51.16%). NBV planning consumes a significant portion of the total time (29.07%). The mapping and grasp detection steps are relatively quick.

read the caption

Table 3: Runtime analysis of the proposed method.

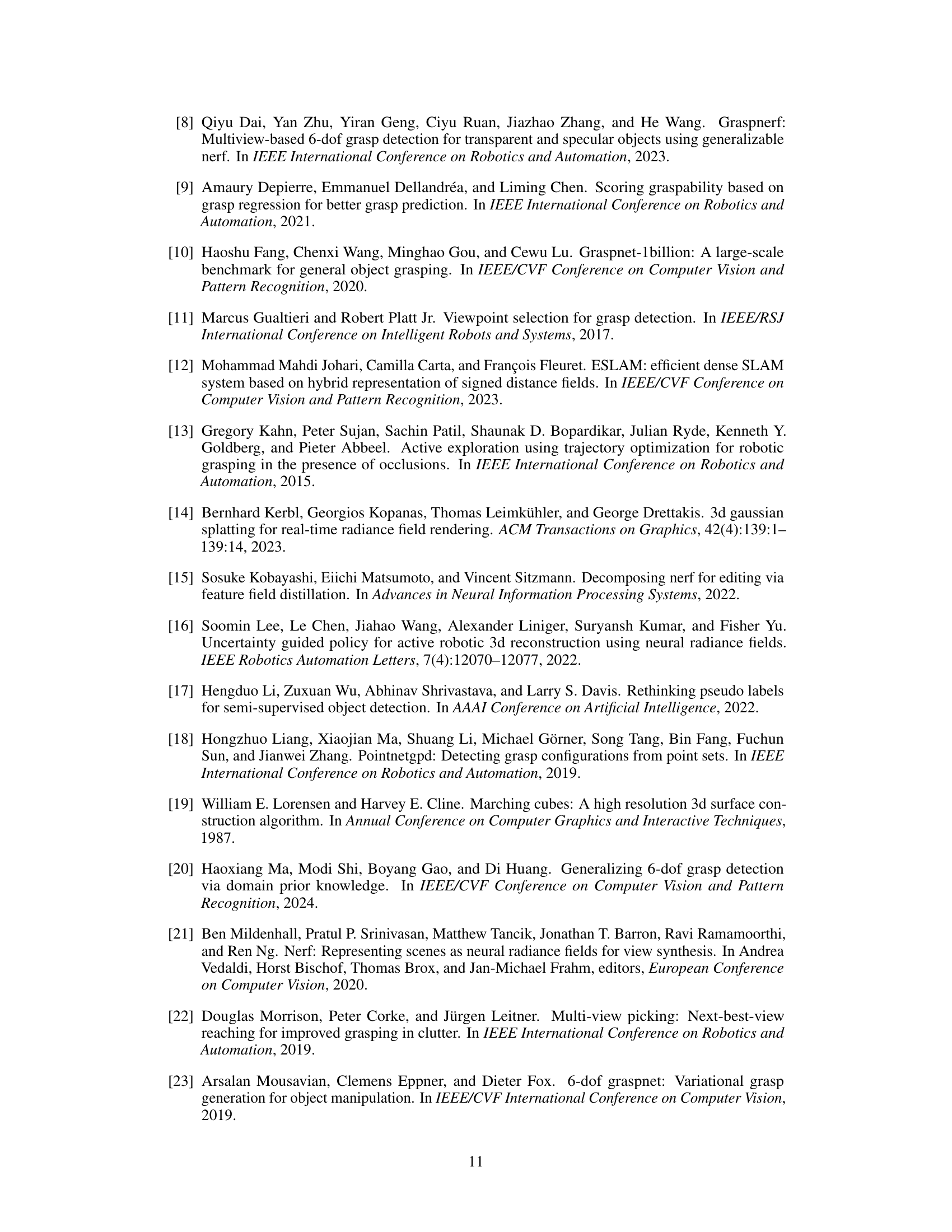

🔼 This table presents a comparison of the performance of the proposed active grasp detection method against state-of-the-art methods using a Kinect camera. It shows the average precision (AP) and AP at different thresholds (AP0.8 and AP0.4) for three object categories: seen, similar, and novel. The ‘All views’ row represents the performance when all available views of the scene are used, serving as an upper bound for comparison.

read the caption

Table 4: Kinect results compared to the state-of-the-art active grasp detection methods.

🔼 This table presents the success rate of the real-world grasping experiments for three different methods: Close-loop [5], ACE-NBV [32], and the proposed method. The success rate is calculated as the number of successful grasps divided by the total number of attempts. Each method was tested on a set of 25 objects from the YCB dataset, with each object tested in five different cluttered scenes, resulting in 75 total trials. The proposed method outperforms the baselines, achieving the highest success rate.

read the caption

Table 2: Results of the real-world grasping experiments.

Full paper#