TL;DR#

Contextual bandit algorithms help machines make optimal decisions based on available information. Neural contextual bandits, using neural networks, perform better than traditional methods but are vulnerable to malicious reward corruptions. These corruptions can significantly impact performance, leading to unreliable AI systems. Existing solutions mostly focus on linear or kernel-based models, which struggle with complex, real-world scenarios.

This work introduces R-NeuralUCB, a new algorithm designed to overcome these limitations. R-NeuralUCB uses a novel training method that focuses on reliable information and effectively minimizes the impact of corrupted rewards. The algorithm’s effectiveness is demonstrated through experiments on real-world datasets, showing superior performance and robustness compared to existing methods. The researchers also provide a theoretical analysis, providing strong evidence supporting the algorithm’s reliability.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on robust reinforcement learning and neural bandits because it addresses the critical challenge of adversarial attacks on rewards. The proposed R-NeuralUCB algorithm is significant for its novel context-aware GD training strategy, improved robustness against reward corruptions, and its theoretical analysis without commonly used restrictive assumptions. This research opens new avenues for developing more reliable and trustworthy AI systems in real-world applications.

Visual Insights#

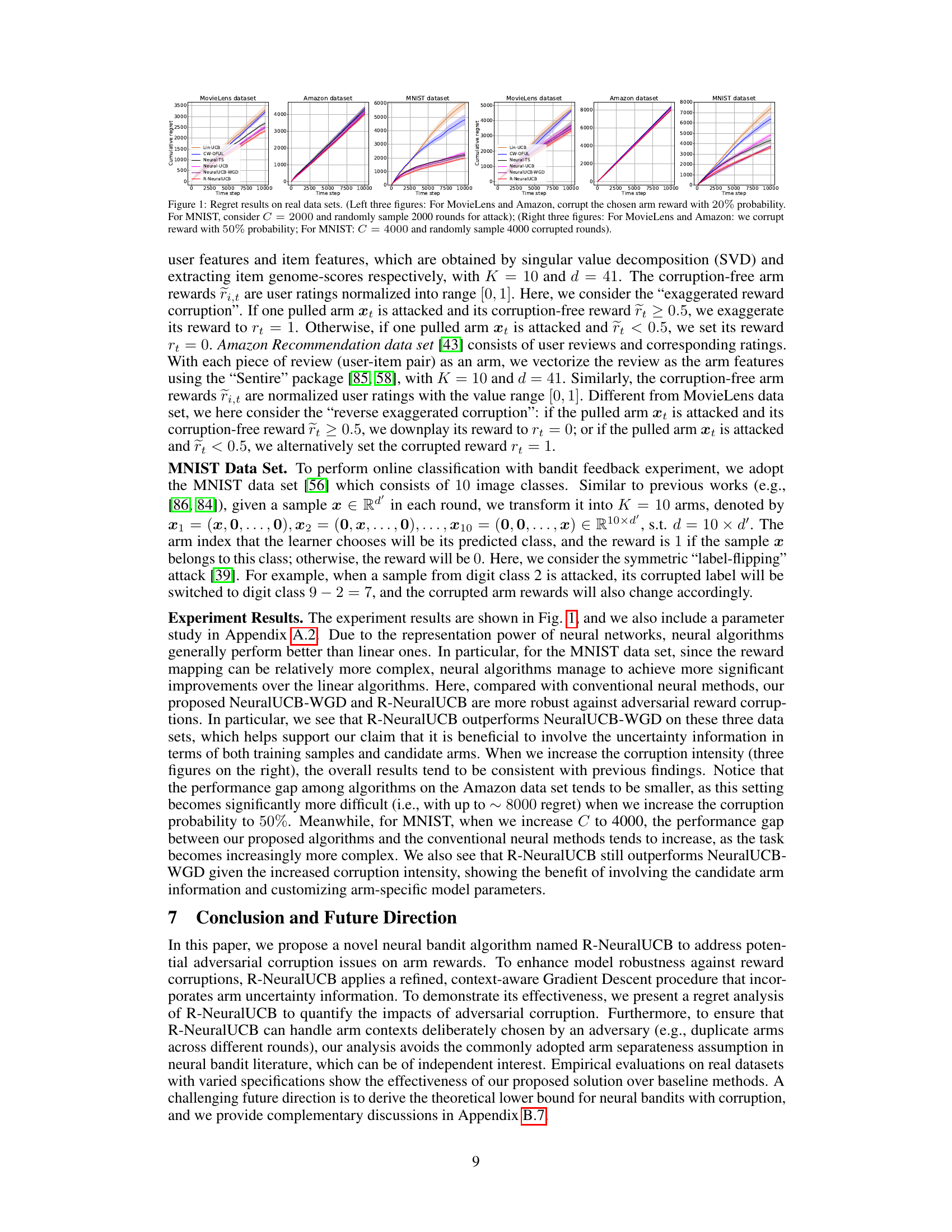

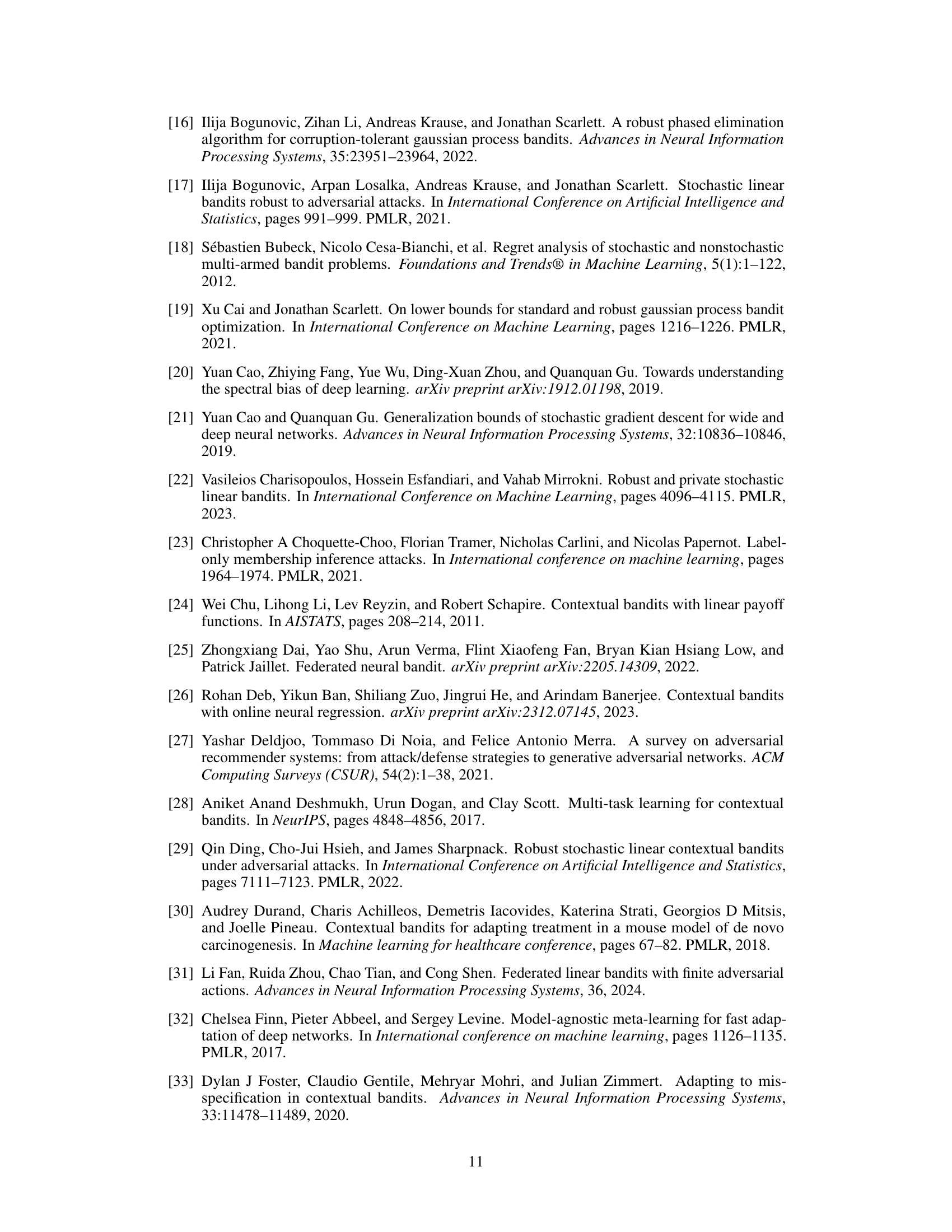

🔼 This figure displays the cumulative regret of different contextual bandit algorithms on three real-world datasets (MovieLens, Amazon, MNIST) under different adversarial corruption scenarios. The left three subfigures show the results when 20% of chosen arm rewards are corrupted (with a specific corruption strategy for MNIST), while the right three subfigures show results when 50% of chosen arm rewards are corrupted. The algorithms compared include linear baselines (LinUCB, CW-OFUL), traditional neural approaches (Neural-UCB, Neural-TS), and the authors’ proposed methods (NeuralUCB-WGD, R-NeuralUCB). The figure demonstrates the performance of different algorithms against adversarial corruptions on various datasets and corruption levels. Error bars might be shown indicating variability in the experiments.

read the caption

Figure 1: Regret results on real data sets. (Left three figures: For MovieLens and Amazon, corrupt the chosen arm reward with 20% probability. For MNIST, consider C = 2000 and randomly sample 2000 rounds for attack); (Right three figures: For MovieLens and Amazon: we corrupt reward with 50% probability; For MNIST: C = 4000 and randomly sample 4000 corrupted rounds).

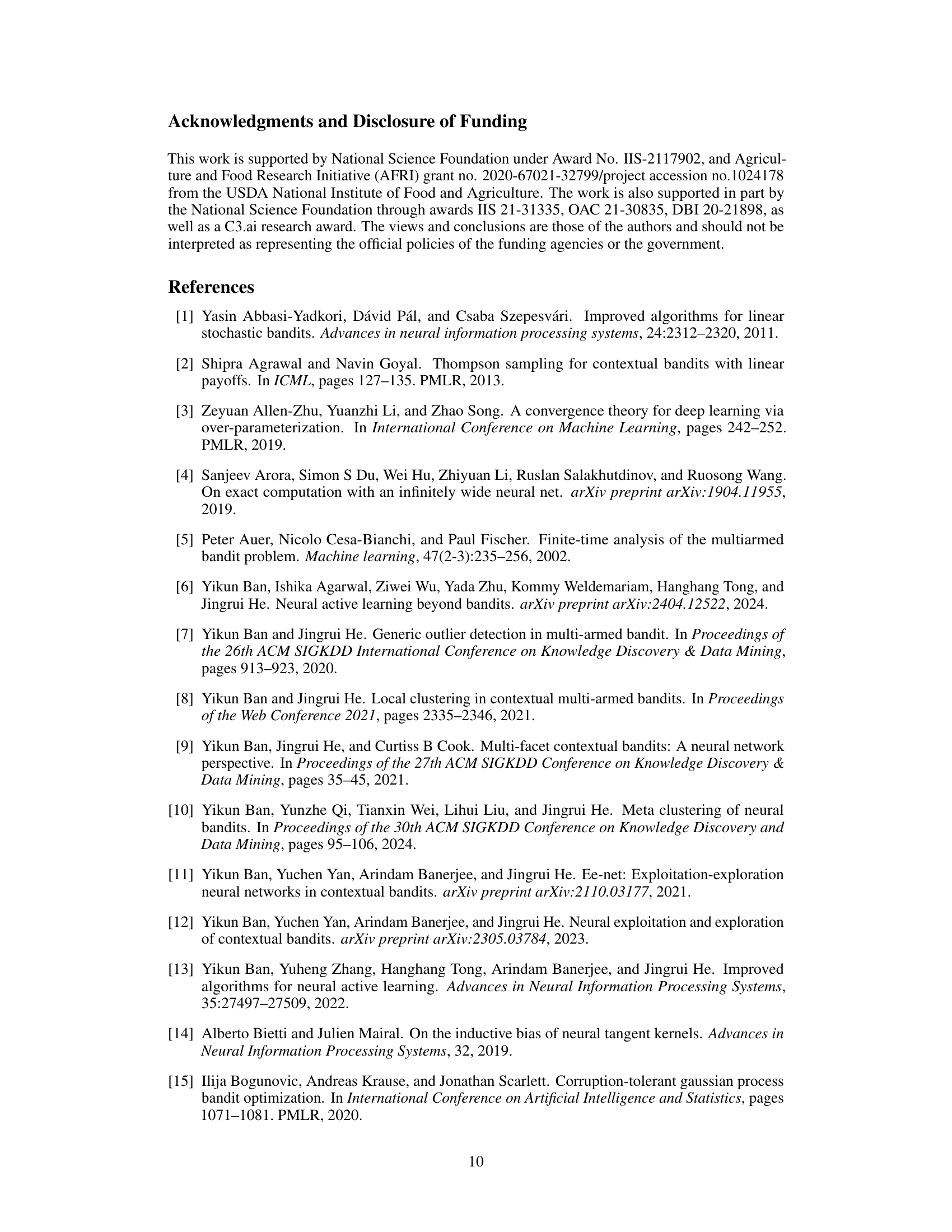

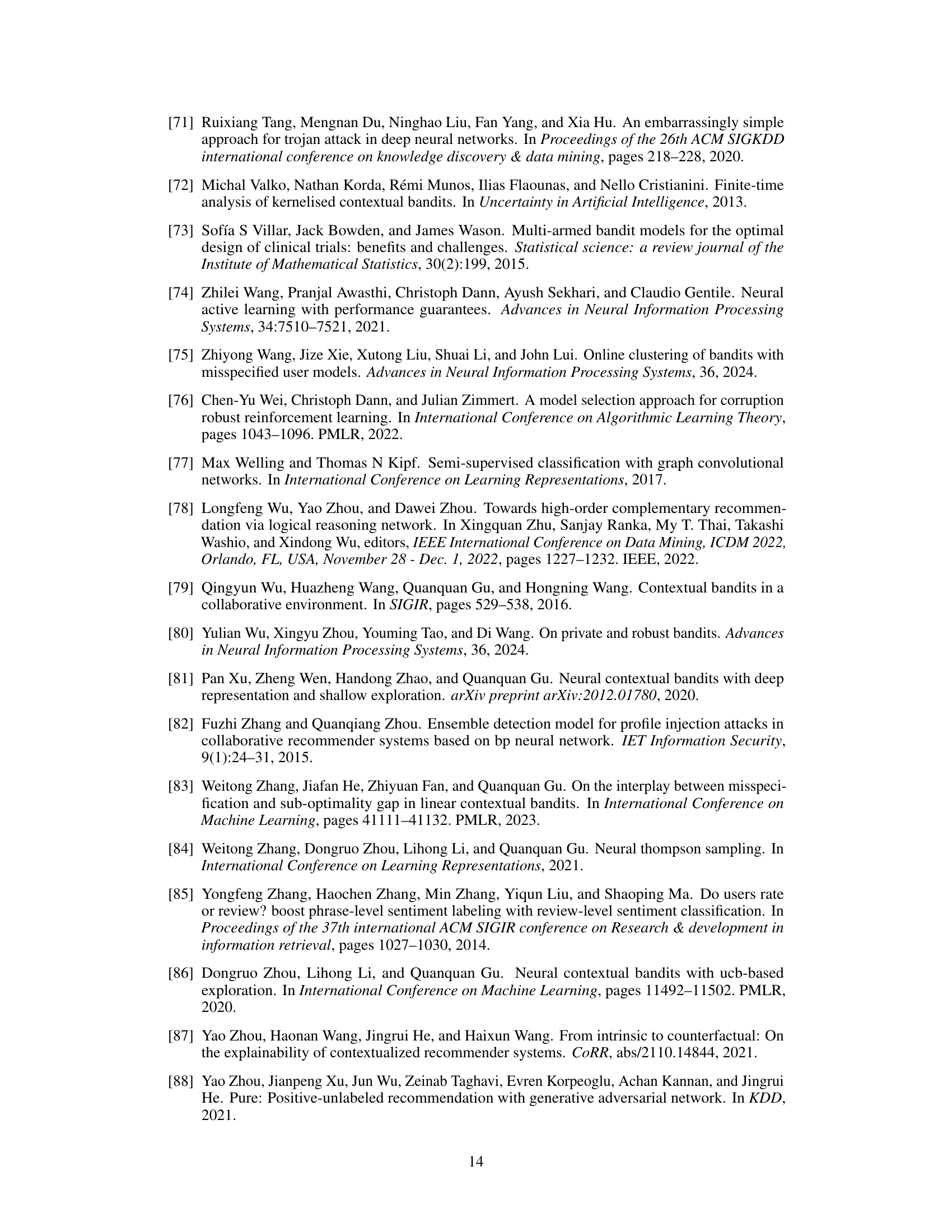

🔼 This table compares the theoretical regret bounds of various contextual bandit algorithms under different reward function assumptions and adversarial corruption levels. The algorithms are categorized by the type of reward function they assume (linear, kernelized, or arbitrary), whether the corruption level C is known or unknown, and their resulting regret bounds. The regret bounds are expressed in terms of the time horizon T, context dimension d, and the corruption level C. The table highlights the trade-off between robustness against adversarial corruption and theoretical guarantees.

read the caption

Table 1: Comparison of T-round regret bounds with adversarial corruption level C.

In-depth insights#

Adversarial Robustness#

The concept of adversarial robustness in machine learning centers on a model’s resilience against malicious attacks or perturbations. In the context of the provided research paper focusing on contextual bandits, adversarial robustness specifically addresses the vulnerability of neural network-based contextual bandit algorithms to corrupted reward data. Corrupted rewards, which can be intentionally manipulated by an adversary, can severely impact the algorithm’s performance and lead to suboptimal decision-making. The paper explores methods to improve model robustness by employing context-aware gradient descent, a strategy that leverages the uncertainty levels of candidate arms to mitigate the influence of corrupted feedback. A key contribution lies in analyzing the impact of reward corruptions without relying on common assumptions (like arm separateness) often used in existing bandit algorithms. This analysis is crucial for demonstrating the algorithm’s effectiveness in real-world adversarial scenarios where such assumptions may not hold. Ultimately, the research focuses on developing more resilient and reliable machine learning systems by enhancing their capacity to function accurately under attack.

R-NeuralUCB Algo#

The Robust Neural Upper Confidence Bound (R-NeuralUCB) algorithm is a novel contextual bandit method designed to enhance robustness against adversarial reward corruptions. It achieves this by employing a context-aware gradient descent training strategy that adaptively weights training samples based on their uncertainty levels. This approach reduces the influence of potentially corrupted rewards. Furthermore, R-NeuralUCB incorporates an informative exploration mechanism (UCB) to balance exploration and exploitation. A key theoretical contribution is its regret analysis under over-parameterized networks without relying on the commonly adopted arm separateness assumption. This makes the algorithm more widely applicable in real-world scenarios. Empirically, R-NeuralUCB demonstrates superior performance compared to baselines, exhibiting enhanced resilience to various types of reward corruption.

Regret Analysis#

A regret analysis in a reinforcement learning or bandit setting is crucial for evaluating algorithm performance. It quantifies the difference between the rewards obtained by an algorithm and those obtained by an optimal strategy. In the context of contextual bandits with adversarial corruptions, a regret analysis must account for the impact of corrupted rewards on the learner’s decisions. A robust algorithm should ideally exhibit a regret bound that scales gracefully with problem parameters, like context dimension, corruption level, and time horizon. Overcoming assumptions, such as arm separateness (distinct contexts for each arm) commonly found in existing neural contextual bandit analysis, is important for establishing theoretical robustness. A rigorous analysis should address the complexities introduced by neural networks, such as non-linearity and the interaction between model parameters, data, and adversarial corruptions, leading to potentially data-dependent regret bounds. Providing a data-dependent bound is more realistic than a worst-case bound. Finally, a thorough analysis must justify the chosen assumptions and highlight their limitations.

Real-world Tests#

A dedicated ‘Real-world Tests’ section would significantly enhance a research paper. It should go beyond simple demonstrations and delve into the practical applicability of the presented model or algorithm. This involves testing against diverse, realistic datasets, possibly obtained from various sources or mimicking real-world conditions. The results should clearly show the algorithm’s performance under varied and noisy inputs, demonstrating its robustness and generalizability. Detailed descriptions of data preprocessing, including any noise handling or standardization techniques, should be provided. Comparison with existing state-of-the-art methods is also crucial, using common evaluation metrics. Furthermore, the analysis of failures is important, not just highlighting successes. Discussion should focus on the model’s limitations when encountering unexpected situations, and how these shortcomings can be addressed. The section should demonstrate not only effectiveness, but also reliability and practical feasibility in scenarios beyond controlled environments.

Future Works#

The ‘Future Works’ section of a research paper on robust neural contextual bandits could explore several promising directions. Extending the theoretical analysis to derive lower bounds on regret under adversarial corruptions would provide a more complete understanding of the algorithm’s optimality. Investigating alternative exploration strategies, beyond the proposed UCB approach, such as Thompson Sampling or other methods, might improve exploration efficiency and robustness. Empirical evaluation on a wider range of datasets and adversarial attack models is crucial to strengthen the algorithm’s generalizability. Furthermore, adapting R-NeuralUCB to different contextual bandit settings, like those with delayed feedback or non-stationary rewards, would broaden its applicability. Finally, researching the impact of network architecture choices on the algorithm’s performance is important to optimize its efficiency and robustness.

More visual insights#

More on tables

🔼 This table compares the theoretical regret bounds of several contextual bandit algorithms under different reward corruption scenarios. The algorithms are categorized based on their reward function (linear, kernelized, or arbitrary) and knowledge of corruption level (known or unknown). The regret bounds are presented in terms of the time horizon T, the effective dimension of the context space d or NTK Gram matrix, and the corruption level C. This table shows that the proposed R-NeuralUCB algorithm achieves a better regret bound by removing assumptions, such as the arm separateness assumption.

read the caption

Table 1: Comparison of T-round regret bounds with adversarial corruption level C.

🔼 This table compares the theoretical regret bounds of several contextual bandit algorithms. The algorithms are categorized by their reward function (linear, kernelized, or arbitrary) and whether the adversarial corruption level (C) is known or unknown. The regret bounds show the algorithm’s performance under different assumptions and adversarial conditions. The table helps to illustrate the robustness and theoretical guarantees of the proposed R-NeuralUCB algorithm.

read the caption

Table 1: Comparison of T-round regret bounds with adversarial corruption level C.

Full paper#