TL;DR#

ADABOOST.MH is a popular multi-class boosting algorithm, but its factorized version lacked a proven convergence rate, hindering its theoretical understanding and practical applications. This open problem stemmed from the difficulty in analyzing the interaction between the input-independent code vector and the label-independent scalar classifier in the factorized structure. This is important because convergence rates determine the algorithm’s efficiency and reliability.

This paper elegantly addresses this issue. The authors provide a novel convergence result for the factorized ADABOOST.MH, demonstrating that the training error can be reduced to zero within a specific number of iterations. Notably, they improved the dependency on the sample size (n) in the convergence bound, making the result more practically relevant, especially when the sample size is large compared to the number of classes. This achievement directly solves the long-standing open problem and significantly advances the theoretical foundation of the algorithm.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in boosting algorithms and multi-class classification. It resolves a long-standing open problem concerning the convergence rate of factorized ADABOOST.MH, offering improved theoretical understanding and potentially guiding the development of more efficient algorithms. It also opens new avenues for research into the theoretical properties of boosting algorithms with factorized classifiers and the relationships between sample complexity and algorithmic design.

Visual Insights#

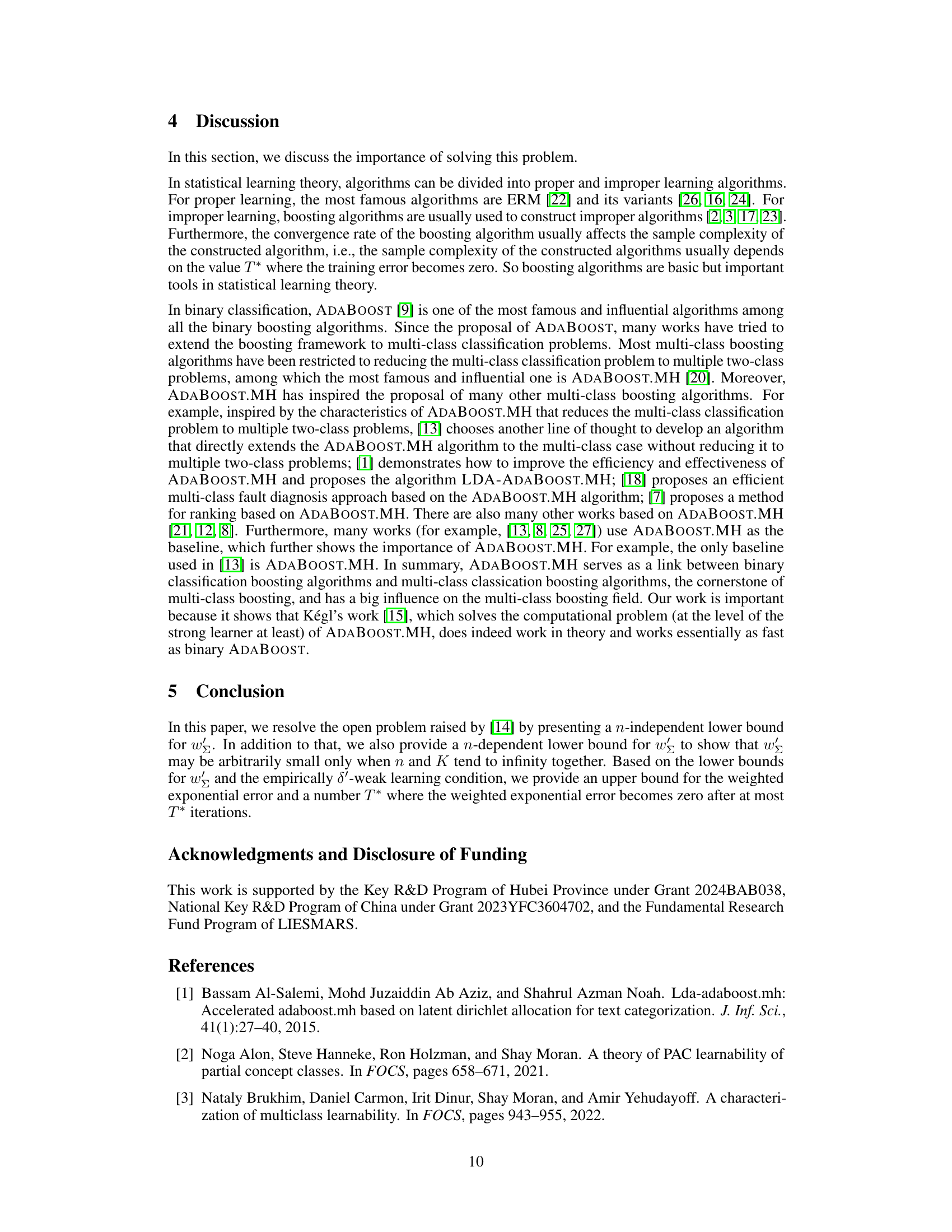

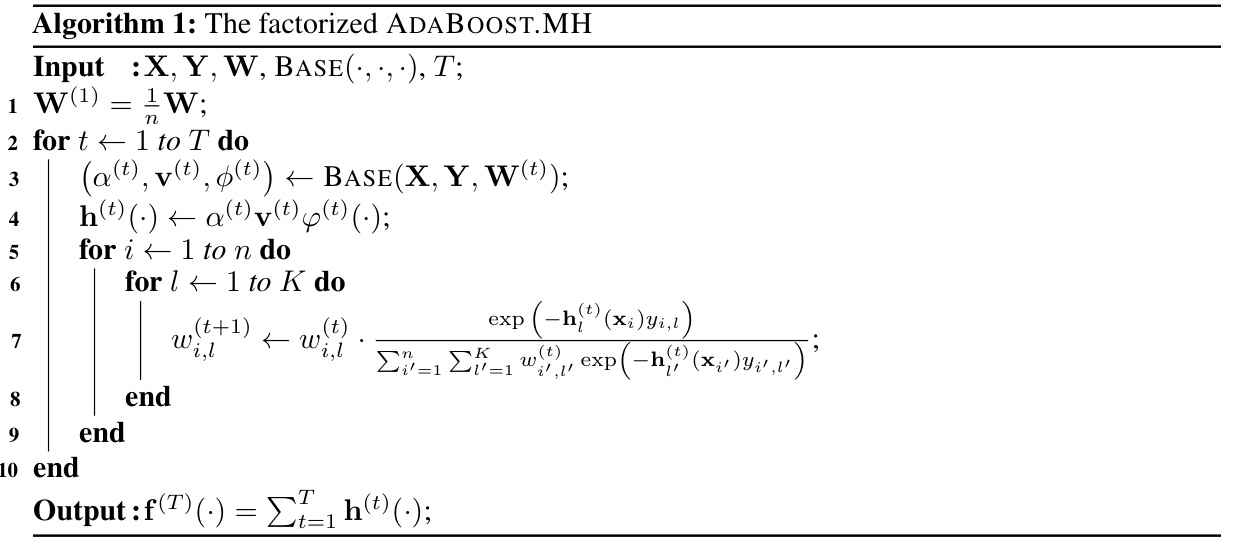

🔼 This algorithm details the steps involved in the factorized version of the ADABOOST.MH algorithm. It begins with an initialization of the weight matrix and iteratively updates weights based on the performance of base classifiers. These classifiers are factorized, meaning they are composed of an input-independent code vector and a label-independent scalar classifier. The algorithm outputs a final discriminant function that combines the results of multiple base classifiers. Each iteration involves selecting a base classifier and adjusting weights to focus more on misclassified data points. The output is a linear combination of base classifiers.

read the caption

Algorithm 1: The factorized ADABOOST.MH

Full paper#