TL;DR#

Contextual Markov Decision Processes (CMDPs) are powerful tools for modeling sequential decision-making problems under uncertainty, but solving them efficiently remains a challenge. Existing methods often require extensive computation or make strong assumptions about the problem structure. This hinders their practical applicability in real-world scenarios. Furthermore, existing algorithms that use offline data often rely on online oracles, which aren’t practical in many offline settings.

This paper introduces LOLIPOP, a novel algorithm that addresses these limitations. LOLIPOP cleverly leverages a layerwise exploration-exploitation tradeoff to achieve near-optimal regret bounds with significantly reduced computational cost. It only requires O(H log T) calls to an offline density estimation oracle, making it highly efficient for offline learning. The algorithm is also versatile enough to be applied to pure exploration tasks, expanding its applicability beyond reward-based settings. The results provide a major advancement in offline reinforcement learning.

Key Takeaways#

Why does it matter?#

This paper is highly important as it presents the first efficient and near-optimal algorithm for offline learning in contextual Markov Decision Processes (CMDPs). It bridges a critical gap in the field by reducing the computational complexity of CMDPs, significantly impacting research in reinforcement learning and related areas. The developed algorithm’s versatility and applicability to pure exploration tasks further broaden its potential impact. This advancement opens up new avenues for research in areas like personalized recommendations, robotics, and healthcare where CMDPs are commonly used.

Visual Insights#

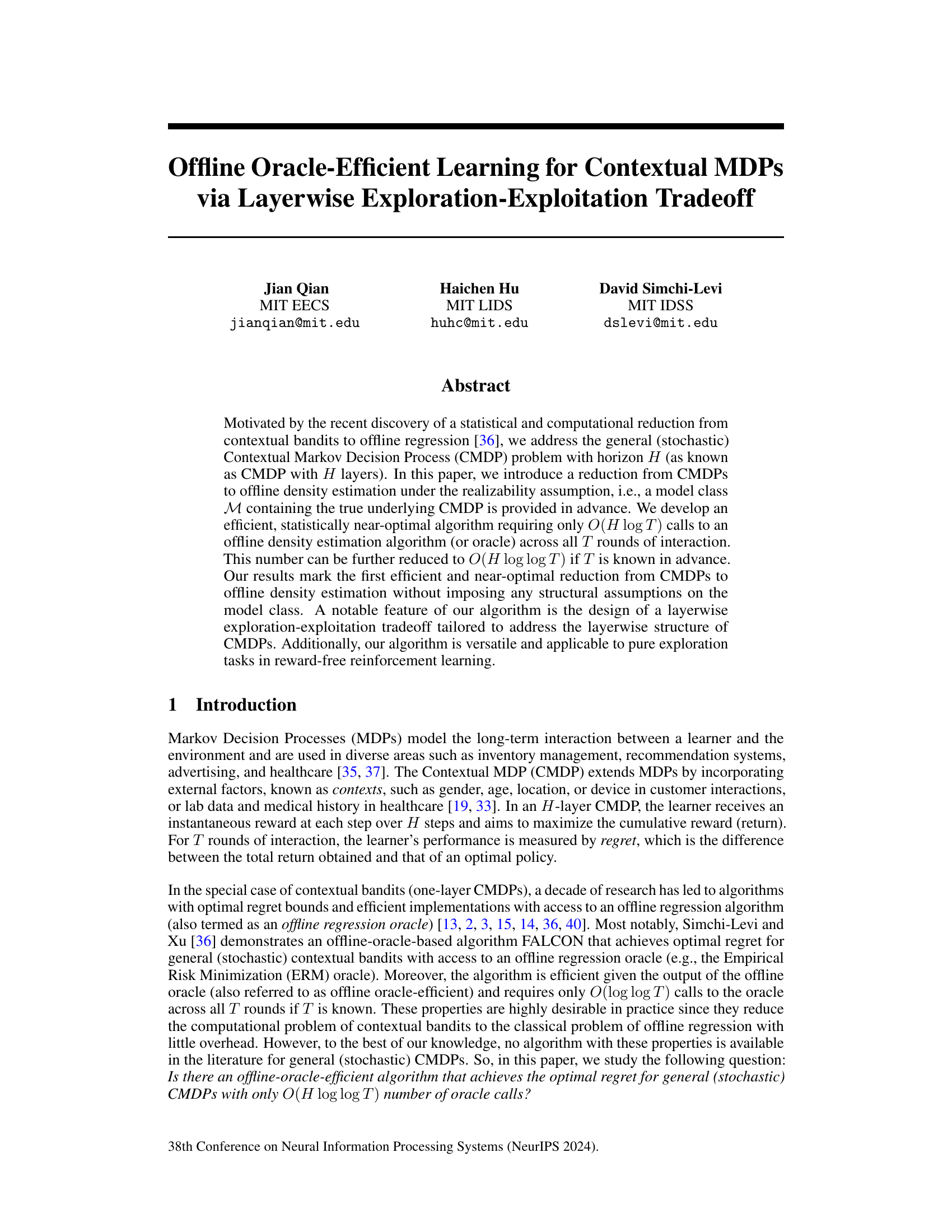

🔼 This figure illustrates the dependencies between the different components of the LOLIPOP algorithm. It shows how the estimations from the previous epoch (Mm−1) are used to construct the policy cover (Πmct) and policy kernel (pmct) for the current epoch. Then, the oracle (OffDEM) is called to get new estimations (Mm) using data collected with the current policy. Finally, it shows how trusted occupancy measures and transitions are computed, feeding back into the next iteration.

read the caption

Figure 1: The dependence graph of the construction. The estimation Mm−1 = {Pm−1h, Rm−1h}h∈[H] from the previous round provides the optimal policy πm−1 (Line 9) and the regret estimation regm−1 (Line 8) for the construction of Πm, pm. The estimation Pmh, Rmh is generated by calling the oracle OffDEM on the trajectories collected with policy kernel pm (Line 12). The trusted transitions and trusted occupancy measures Thm, dhm are computed from amh, Pmh (Eqs. (2) and (3)). The policy cover Πmct is the union of πm−1,ct and the policies {πm,s,act}s,a∈S×A calculated in Line 8 which requires Th−1m, dhm. The policy kernel pmct is the inverse gap weighting on Πmct (Line 10).

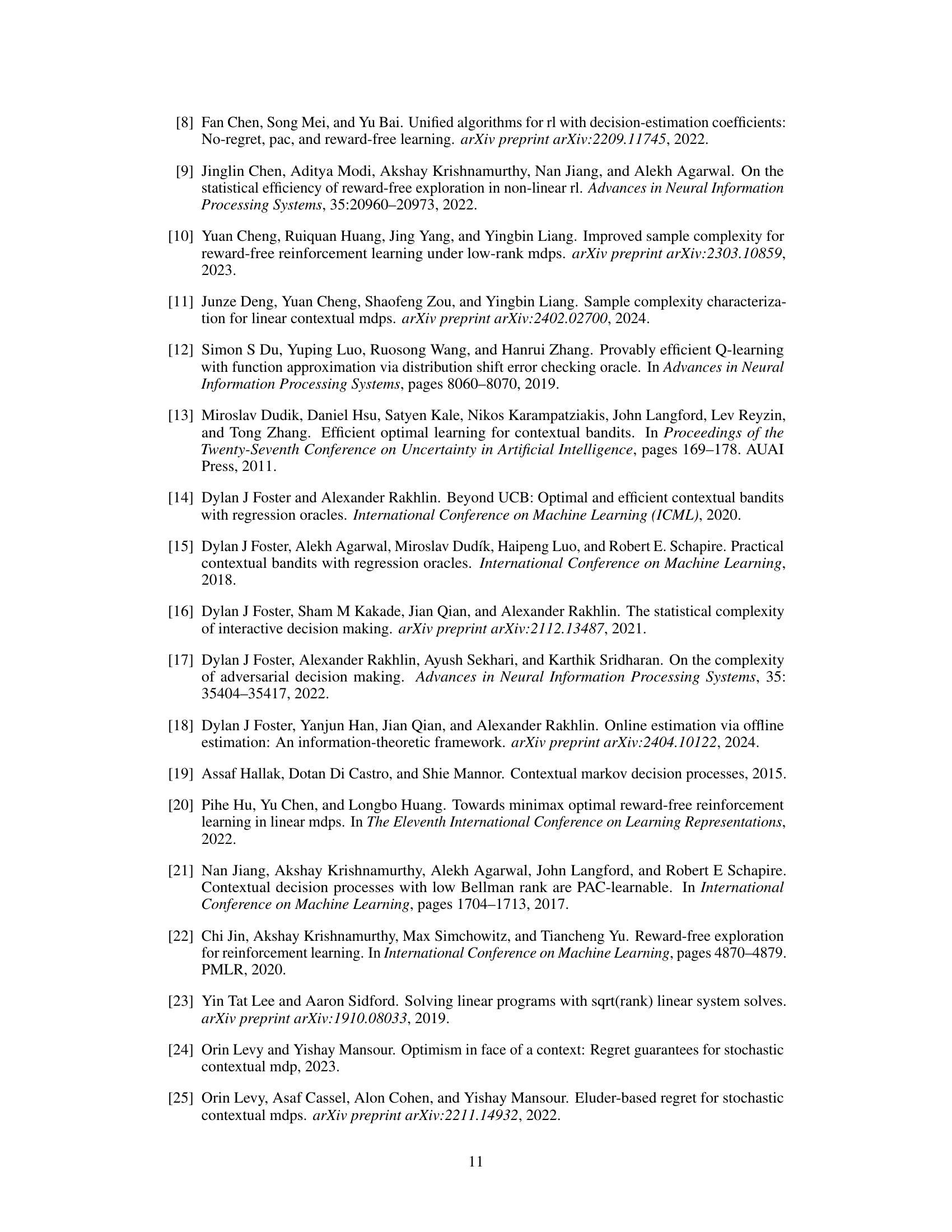

🔼 This table compares the performance of various algorithms for solving contextual Markov Decision Processes (CMDPs). It shows the regret rate (how far from optimal the algorithm’s performance is), the computational complexity (measured by the number of oracle calls), and any assumptions made by each algorithm. The optimal regret rate is given as a benchmark, and the table highlights the efficiency of the proposed LOLIPOP algorithm in terms of oracle calls.

read the caption

Table 1: Algorithms' performance with general finite model class M and i.i.d. contexts. The optimal rate here refers to Õ(poly(H, S, A) √T log |M|). All algorithms assume realizability, so it is omitted from the table. The reachability assumption and the varying representation assumption are very stringent for tabular CMDP, for details we refer to Appendix B.

Full paper#