TL;DR#

Traditional statistical methods often struggle with heavy-tailed data, especially in streaming settings where memory is limited. This creates challenges for high-dimensional data analysis. Existing algorithms, such as Clipped-SGD, have shown promise but don’t reach optimal statistical rates. The limitations stem from the difficulty in handling the large fluctuations caused by the heavy-tailed noise.

This research addresses these challenges by introducing a novel iterative refinement strategy for martingale concentration, significantly improving the accuracy of Clipped-SGD. The refined algorithm achieves near-optimal sub-Gaussian rates for smooth and strongly convex objectives. The improved algorithm shows superior performance in several heavy-tailed statistical estimation tasks, including mean estimation and linear regression in streaming settings. This work bridges a gap in existing research and offers significant improvements for dealing with large, complex datasets in memory-constrained environments.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working with heavy-tailed data in streaming settings. It provides near-optimal statistical estimation rates by refining existing algorithms and offers a novel approach to martingale concentration. This opens new avenues for handling large-scale, high-dimensional data in various applications, especially where memory constraints are significant.

Visual Insights#

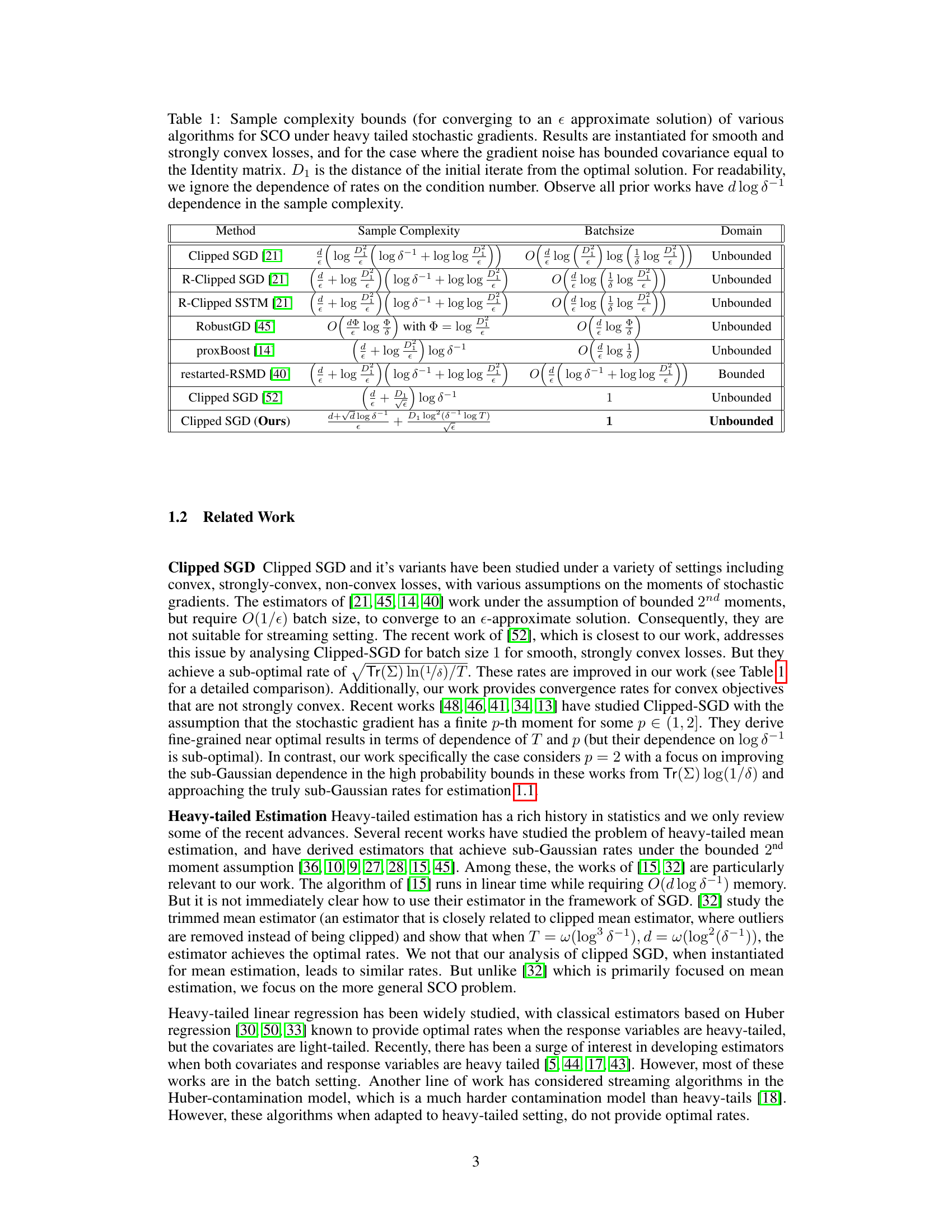

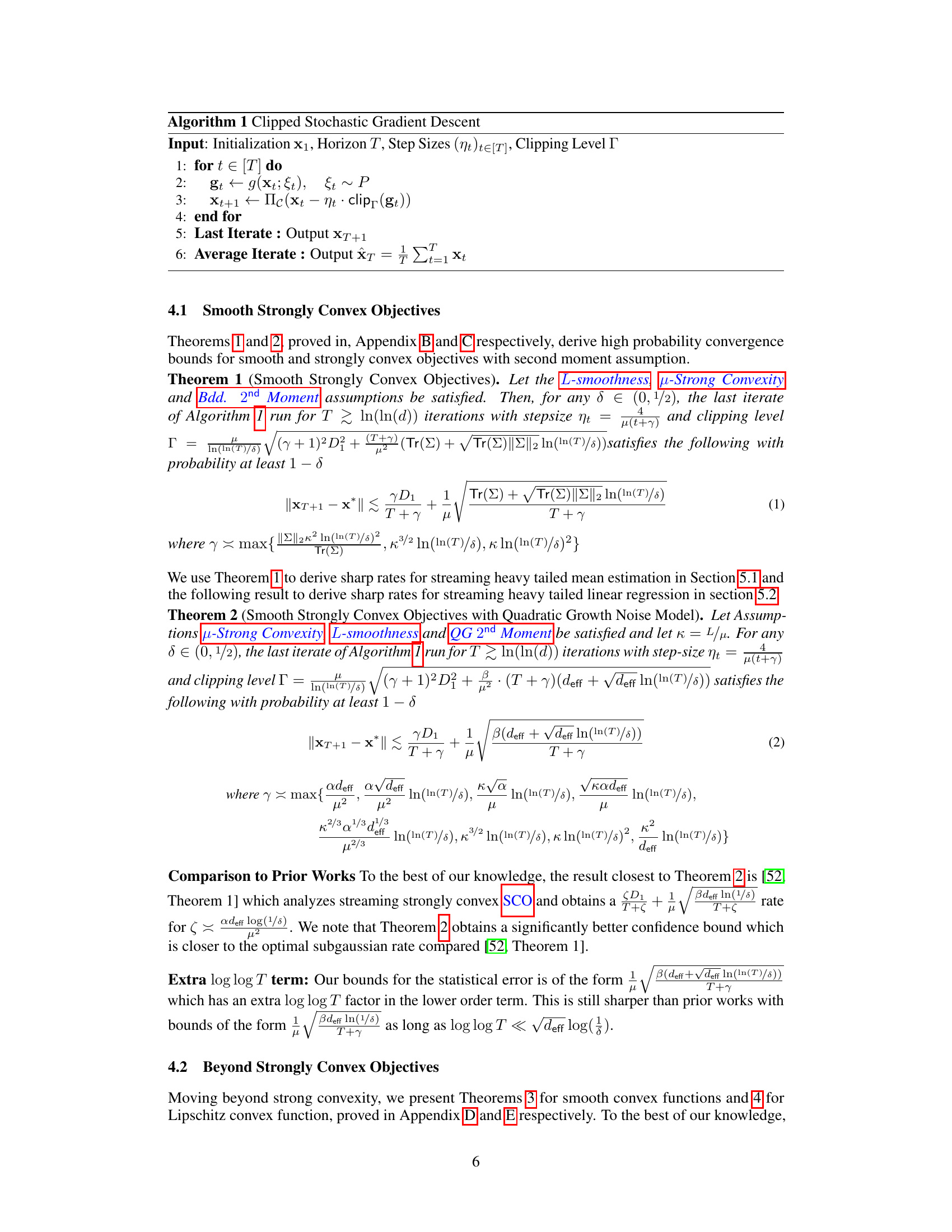

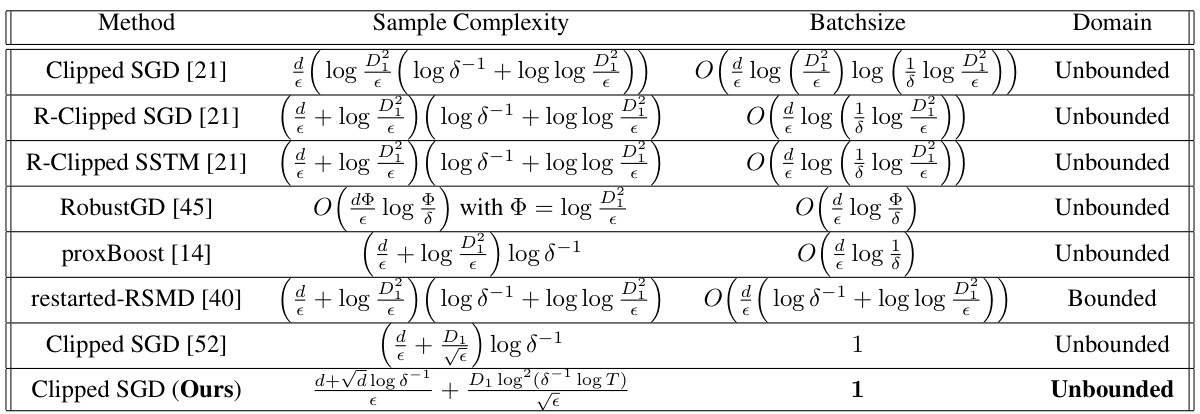

🔼 This table compares the sample complexities of various algorithms for solving stochastic convex optimization (SCO) problems with heavy-tailed stochastic gradients. The complexities are shown for smooth and strongly convex loss functions, assuming the gradient noise has bounded covariance. The table highlights the dependence on the dimension (d), the desired accuracy (ε), the initial distance from the optimum (D₁), and the failure probability (δ). It shows that the proposed Clipped SGD algorithm achieves a significantly improved sample complexity bound compared to existing methods.

read the caption

Table 1: Sample complexity bounds (for converging to an ε approximate solution) of various algorithms for SCO under heavy tailed stochastic gradients. Results are instantiated for smooth and strongly convex losses, and for the case where the gradient noise has bounded covariance equal to the Identity matrix. D₁ is the distance of the initial iterate from the optimal solution. For readability, we ignore the dependence of rates on the condition number. Observe all prior works have d log δ⁻¹ dependence in the sample complexity.

Full paper#