TL;DR

Estimating treatment effects from adaptively collected data is central to causal inference and reinforcement learning, but existing analyses focus primarily on asymptotic properties. This limits their practical value, because in applications the finite-sample behavior is often what matters. Adaptively collected data also poses distinct challenges. The data-collection policy depends on past observations, so the samples are not i.i.d., and standard statistical guarantees do not directly apply.

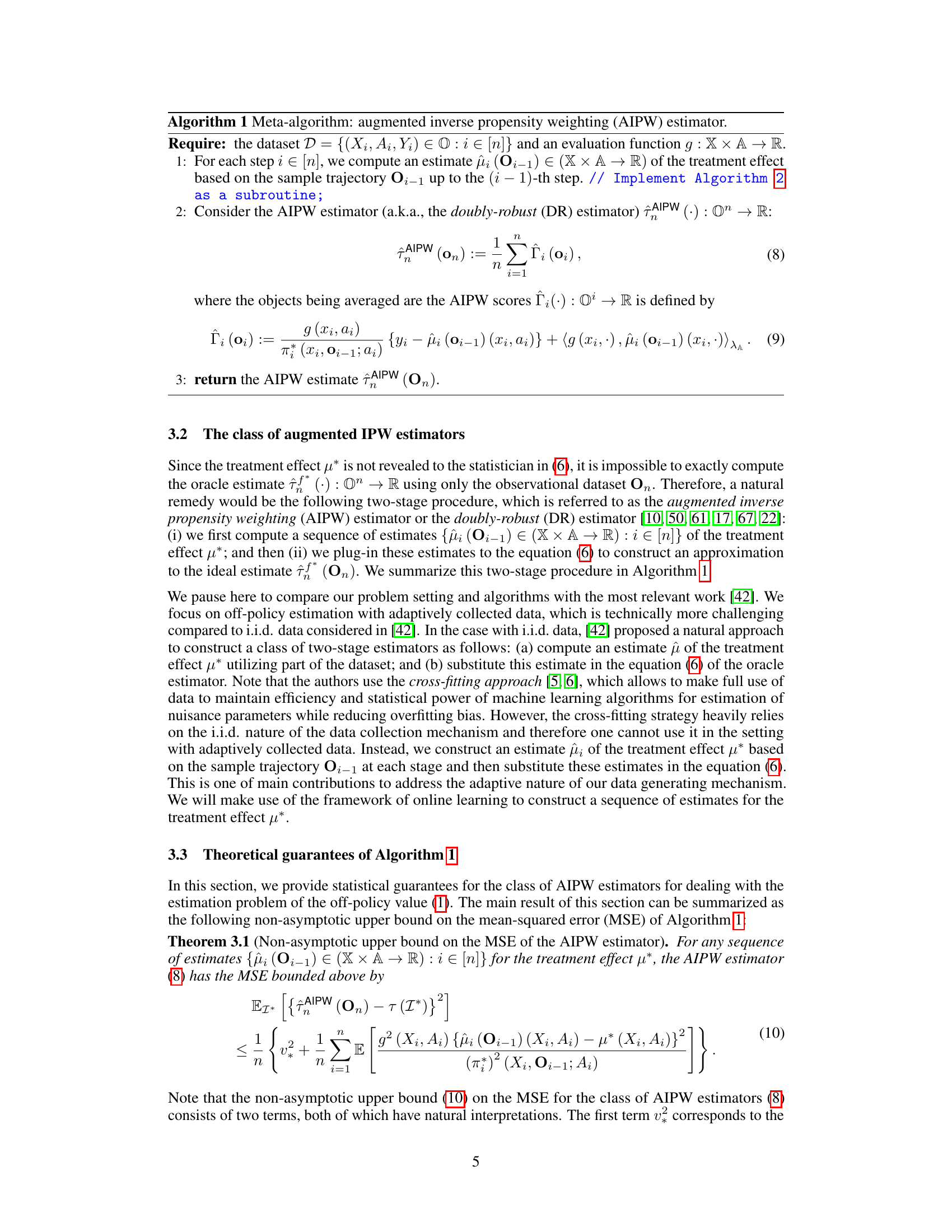

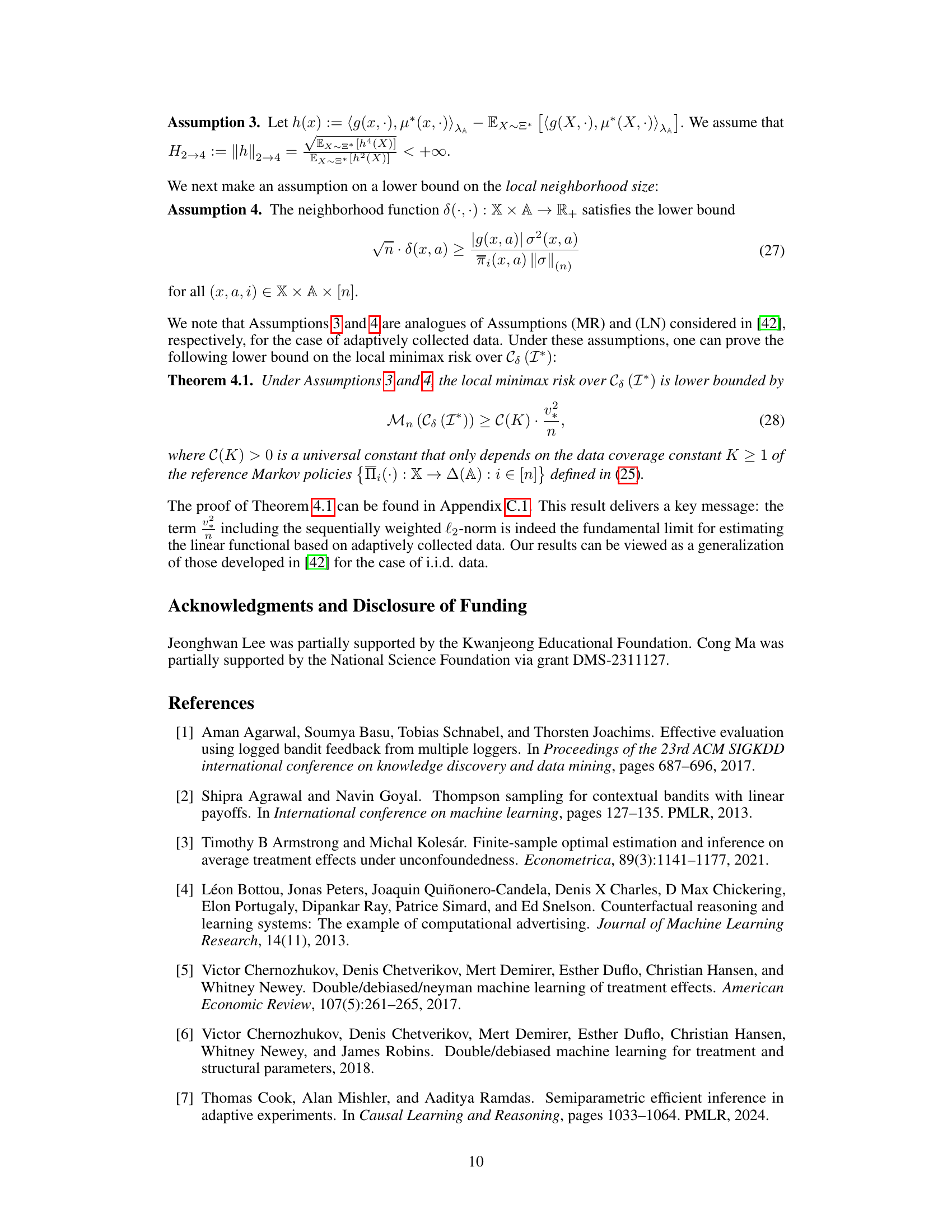

This paper addresses these challenges directly. It establishes new finite-sample upper bounds on the error of a class of augmented inverse propensity weighting (AIPW) estimators. These bounds depend on a sequentially weighted error between the treatment effect and its estimates. To reduce this error, the paper proposes a general reduction scheme that produces the sequence of treatment-effect estimates via online learning, and it gives concrete instantiations for several cases. It also derives a local minimax lower bound, showing that the AIPW estimator combined with online learning is optimal. Together, these results strengthen both the theoretical understanding and the practical applicability of off-policy estimation from adaptively collected data.
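To make the AIPW construction concrete, here is a minimal sketch under stated assumptions. It is not the paper's method. The setting is a hypothetical two-armed bandit whose arms have Bernoulli rewards with assumed means. The log is collected by an epsilon-greedy policy, so each round's action probabilities depend on past data. The goal is to estimate the value of a fixed target policy. The outcome model is a per-arm running mean, a simple stand-in for the paper's online-learning reduction. Two properties matter. First, the logging propensities are known. Second, the outcome estimate used at round t is fit only on rounds before t. Together these make each AIPW term conditionally unbiased even though the data are not i.i.d. The function name `simulate_and_estimate` and all parameters are hypothetical.

```python
import random


def simulate_and_estimate(n=20000, true_means=(0.3, 0.6), target=(0.5, 0.5),
                          eps=0.2, seed=0):
    """Simulate an adaptively collected two-armed bandit log (hypothetical setup)
    and return (aipw_estimate, ipw_estimate, true_value) for a fixed target policy."""
    rng = random.Random(seed)
    k = len(true_means)
    # Online outcome model: running mean of observed rewards per arm.
    # It is updated only after each round's score is computed, so the
    # estimate used at round t depends on rounds 1..t-1 only.
    counts = [0] * k
    mu_hat = [0.0] * k
    aipw_terms, ipw_terms = [], []
    for _ in range(n):
        # Adaptive logging policy: epsilon-greedy on the current estimates.
        # Every arm keeps probability at least eps / k, so weights stay bounded.
        greedy = max(range(k), key=lambda a: mu_hat[a])
        probs = [eps / k + (1 - eps) * (a == greedy) for a in range(k)]
        action = rng.choices(range(k), weights=probs)[0]
        reward = 1.0 if rng.random() < true_means[action] else 0.0
        aipw, ipw = 0.0, 0.0
        for a in range(k):
            # Importance weight 1{A_t = a} / P(A_t = a | history).
            w = (1.0 if a == action else 0.0) / probs[a]
            # AIPW: model prediction plus importance-weighted residual correction.
            aipw += target[a] * (mu_hat[a] + w * (reward - mu_hat[a]))
            # Plain IPW baseline for comparison (no outcome model).
            ipw += target[a] * w * reward
        aipw_terms.append(aipw)
        ipw_terms.append(ipw)
        # Online update of the outcome model for the arm that was pulled.
        counts[action] += 1
        mu_hat[action] += (reward - mu_hat[action]) / counts[action]
    true_value = sum(p * m for p, m in zip(target, true_means))
    return sum(aipw_terms) / n, sum(ipw_terms) / n, true_value


if __name__ == "__main__":
    aipw, ipw, truth = simulate_and_estimate()
    print(f"AIPW={aipw:.4f}  IPW={ipw:.4f}  true={truth:.4f}")
```

Replacing the running mean with a stronger online learner changes only the update step. According to the upper bounds summarized above, the accuracy of these sequential estimates is what governs the estimator's finite-sample error.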

Why does it matter?

This paper matters to researchers working with adaptively collected data because it provides non-asymptotic guarantees for off-policy estimation, an area where such analysis has been scarce. By characterizing finite-sample performance, it narrows the gap between asymptotic theory and practice. The results are directly relevant to causal inference and reinforcement learning, where adaptive data collection is increasingly common.
