TL;DR#

Many machine learning problems are formulated as stochastic saddle point problems (SSPs) or stochastic variational inequalities (SVIs). Solving these problems with differential privacy (DP) guarantees is critical for protecting sensitive data, but existing methods often focus on simple Euclidean spaces. This limits their practical use because many applications exist in non-Euclidean spaces, such as those arising in federated learning and distributionally robust optimization.

This research addresses these issues by developing new differentially private algorithms for SSPs and SVIs. The algorithms leverage a novel technique called recursive regularization, which involves repeatedly solving regularized versions of the problems, with progressively stronger regularization. This innovative method allows for achieving near-optimal accuracy guarantees in lp/lq spaces while maintaining DP. The research also develops new analytical tools for analyzing generalization, leading to optimal convergence rates for solving these problems efficiently in a variety of settings.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in differential privacy and optimization because it provides optimal algorithms for solving stochastic saddle point problems and variational inequalities beyond Euclidean geometry. This advances privacy-preserving machine learning and opens avenues for new research in non-Euclidean settings.

Visual Insights#

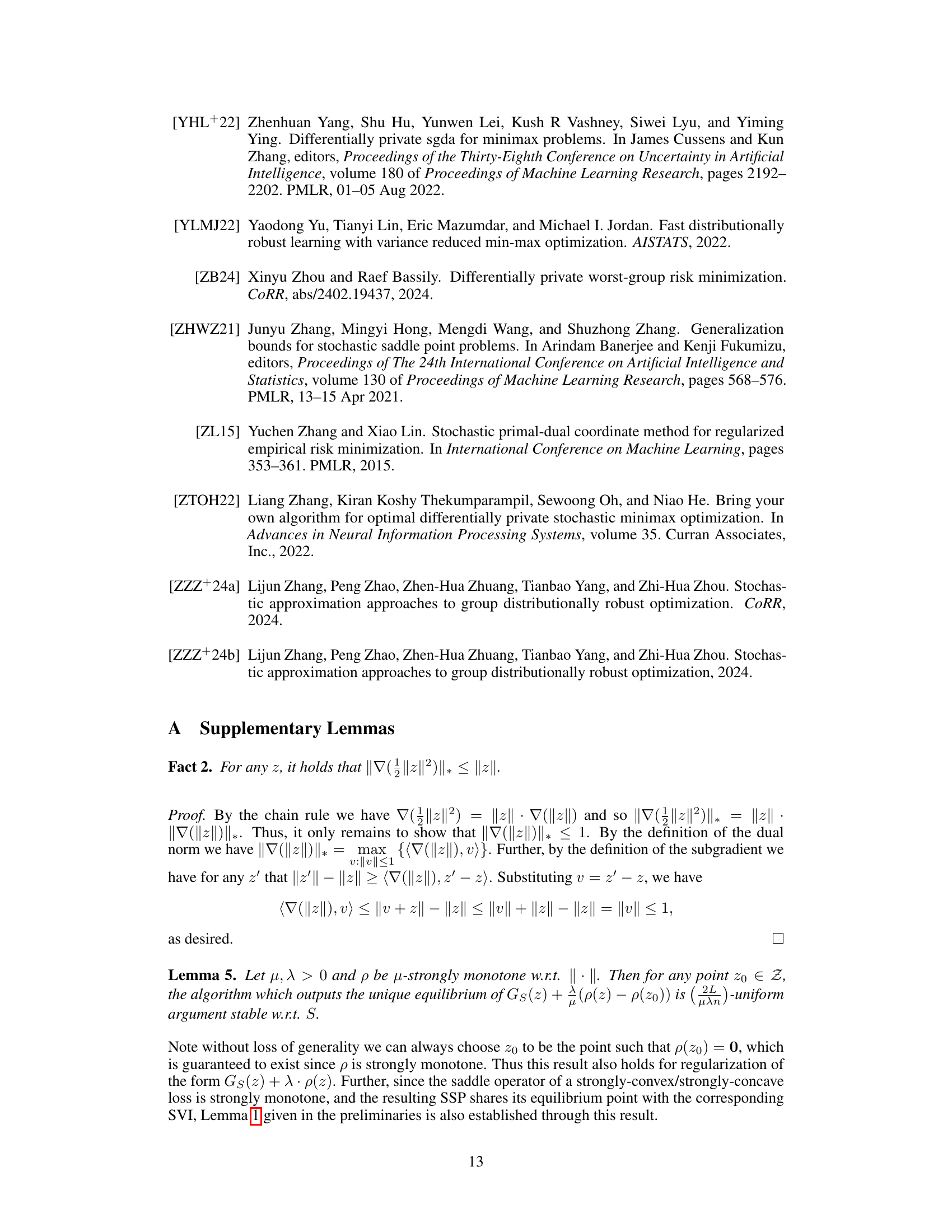

🔼 This table presents the recursive regularization algorithm (Rssp) used for solving stochastic saddle point problems (SSPs). The algorithm iteratively refines an initial point by solving a sequence of regularized saddle point problems using a subroutine (Aemp) on disjoint partitions of the dataset. Each iteration involves updating the regularization parameter and using the output of the previous iteration as the starting point for the next. The algorithm’s output is an approximate solution to the original SSP.

read the caption

Algorithm 1 Recursive Regularization: Rssp

Full paper#