TL;DR#

Current research on diffusion models often separately analyzes training and sampling accuracy. This approach limits the complete understanding of the generation process and optimal design strategies. This paper addresses this gap by providing a comprehensive analysis of both training and sampling.

The study uses a novel method to prove the convergence of gradient descent training dynamics and extends previous sampling error analysis to variance exploding models. By combining these results, the paper offers a unified error analysis to guide the design of training and sampling processes. This includes providing theoretical support for design choices that align with current state-of-the-art models.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in generative modeling because it offers the first comprehensive error analysis of diffusion-based models, bridging the gap between training and sampling processes. It provides theoretical backing for design choices currently used in state-of-the-art models and opens new avenues for optimization and architectural improvements.

Visual Insights#

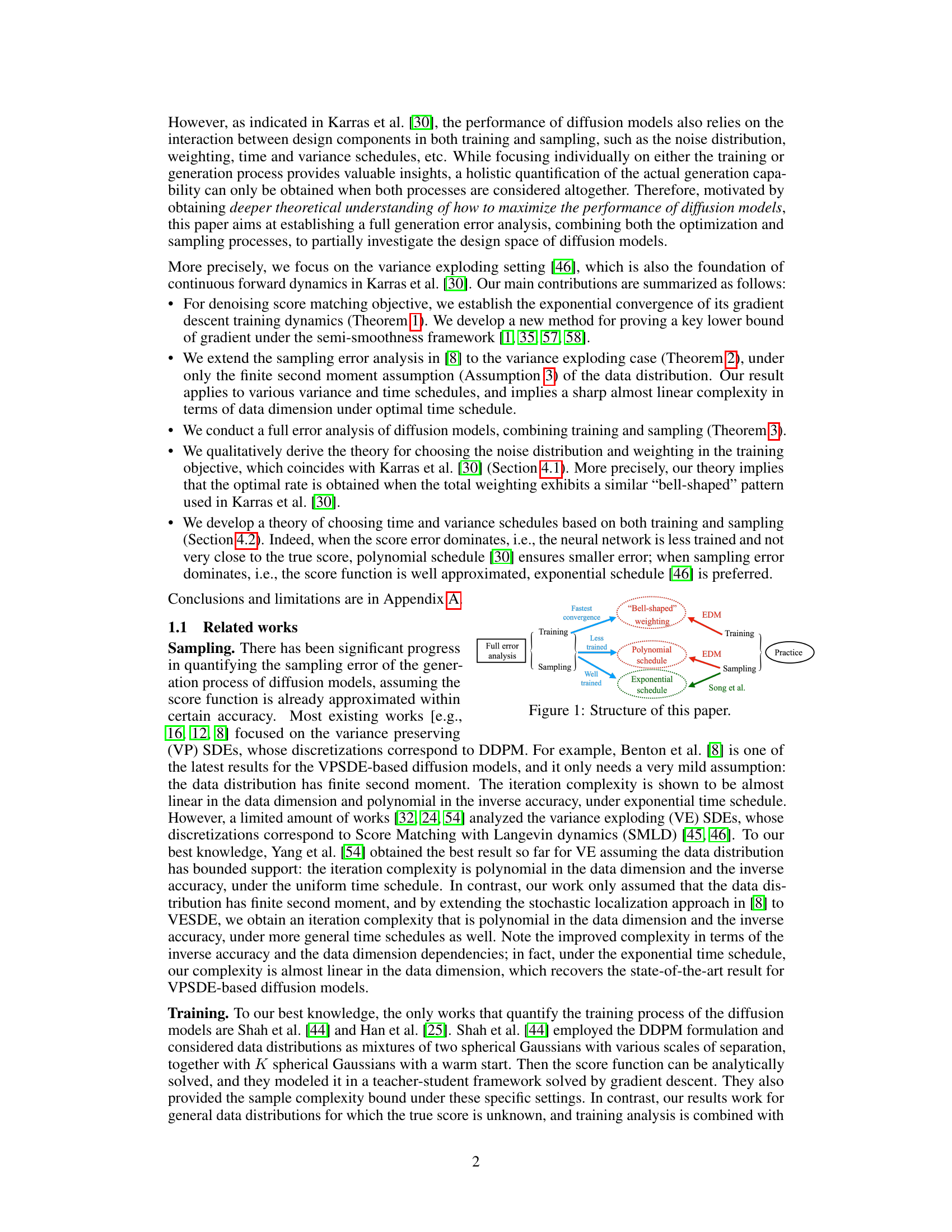

🔼 This figure shows the structure of the paper by illustrating the relationship between different sections and their contributions to the overall error analysis of diffusion models. It demonstrates how the training and sampling processes are combined to provide a full error analysis, highlighting the importance of considering both aspects for optimal model performance. The figure also emphasizes the connections to existing empirical works by Karras et al. and Song et al., showcasing how the theoretical findings complement and extend the existing empirical understanding.

read the caption

Figure 1: Structure of this paper.

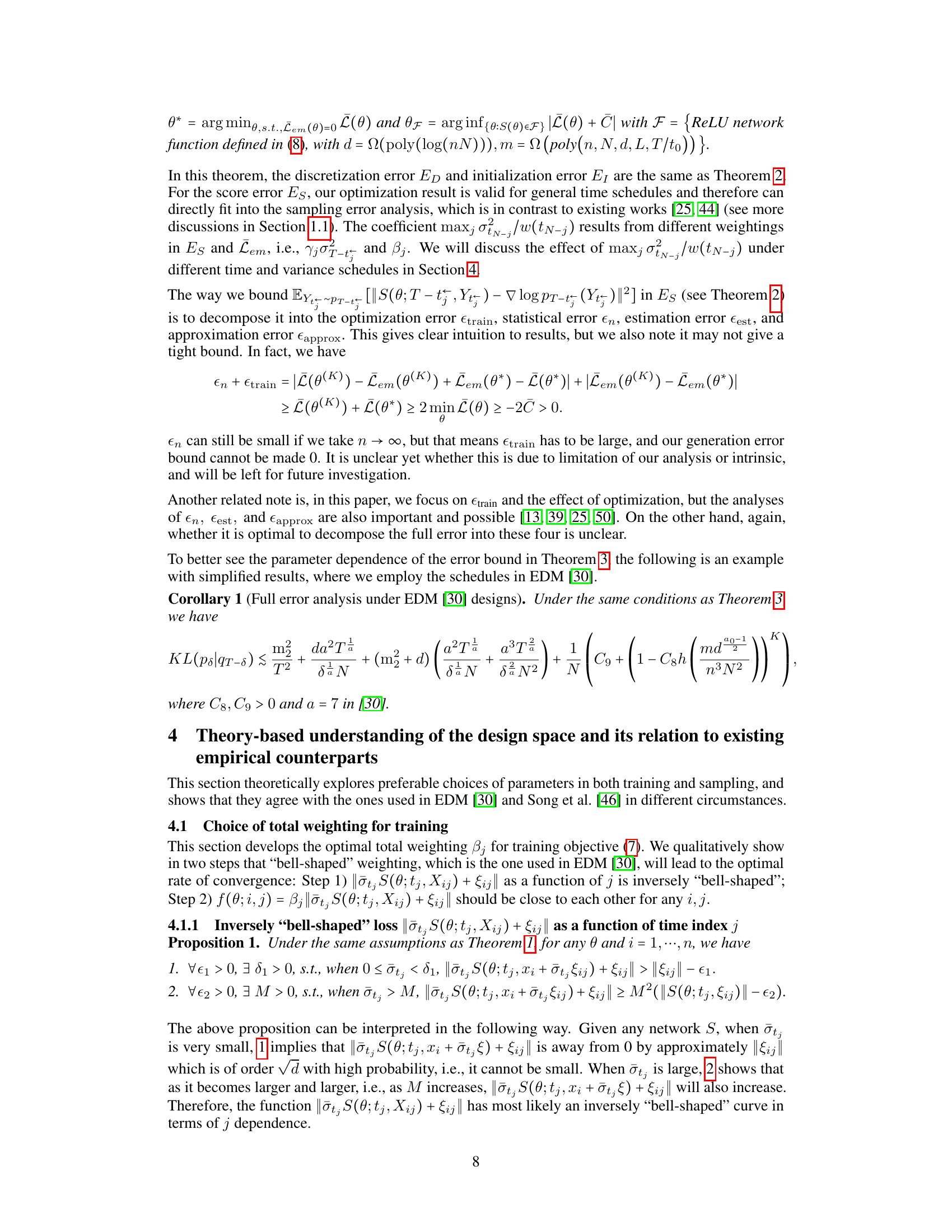

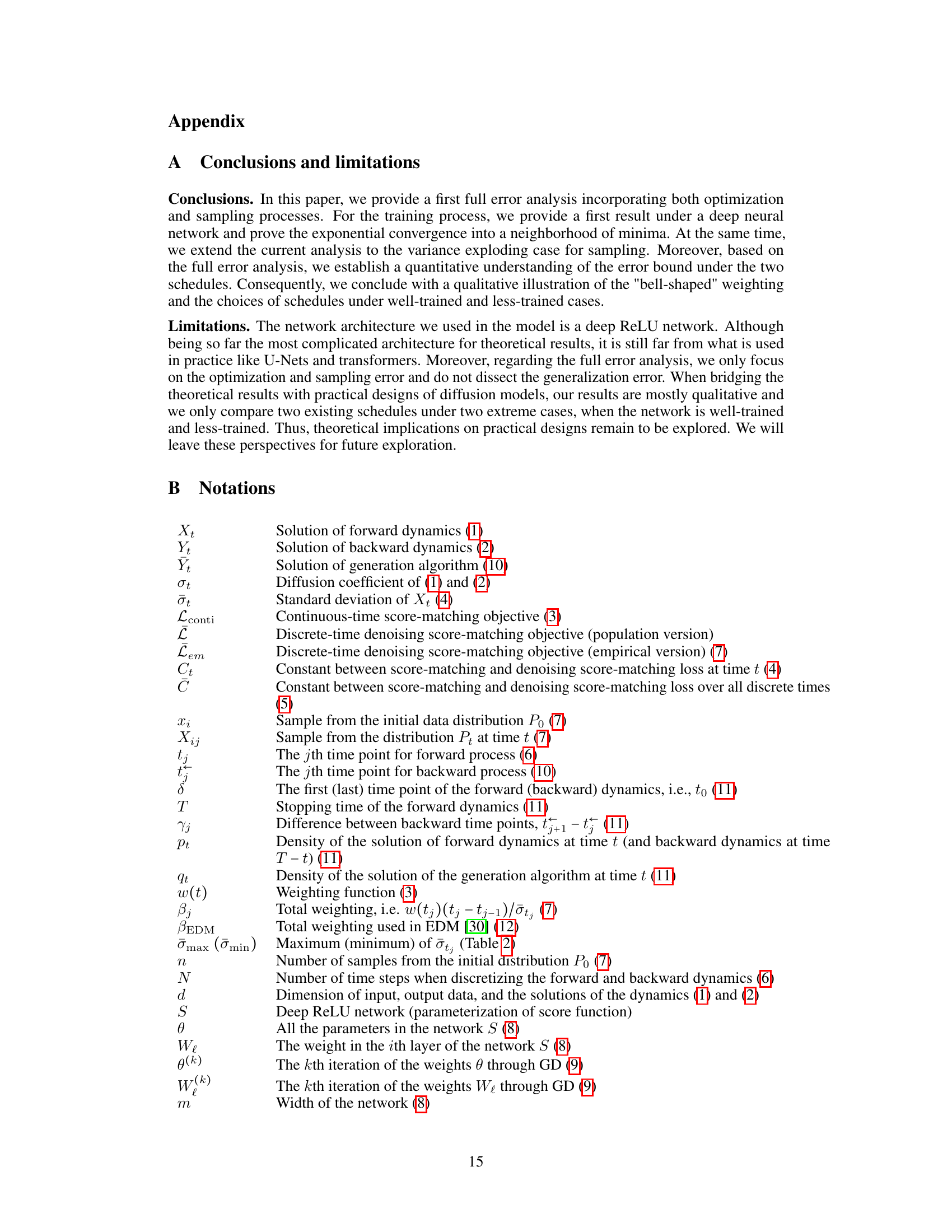

🔼 This table compares the performance of polynomial and exponential time and variance schedules. It shows how the score error (Es), dominated by score function accuracy, and the combined discretization and initialization error (ED + E1), dominated by sampling process, behave differently under each schedule and which schedule is better for each error type and when score function is well or less trained. The choice of optimal schedule is also discussed, based on which type of error dominates.

read the caption

Table 2: Comparisons between different schedules.

Full paper#