TL;DR#

Existing methods to improve Mamba models in computer vision primarily focus on architectural changes, which often require strong prior knowledge and extensive trial-and-error. These methods are often inflexible and not applicable to all Mamba variants. This paper highlights the urgency of addressing performance limitations through a more systematic approach.

The paper introduces “Vision Mamba Mender”, a novel post-hoc method. It analyzes Mamba’s hidden states (internal and external perspectives) through correlation analysis, revealing state flaws. It then proposes targeted repair methods based on these flaws using constraint methods, significantly improving Mamba’s performance across various architectures. The proposed methods demonstrate consistent improvements over existing approaches in visual recognition tasks.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working with the Mamba model, offering a novel post-hoc optimization method. It addresses the limitations of existing pre-defined optimization approaches by systematically analyzing and repairing flaws in the model. This opens new avenues for improving Mamba’s performance and broadens its applicability across various visual tasks.

Visual Insights#

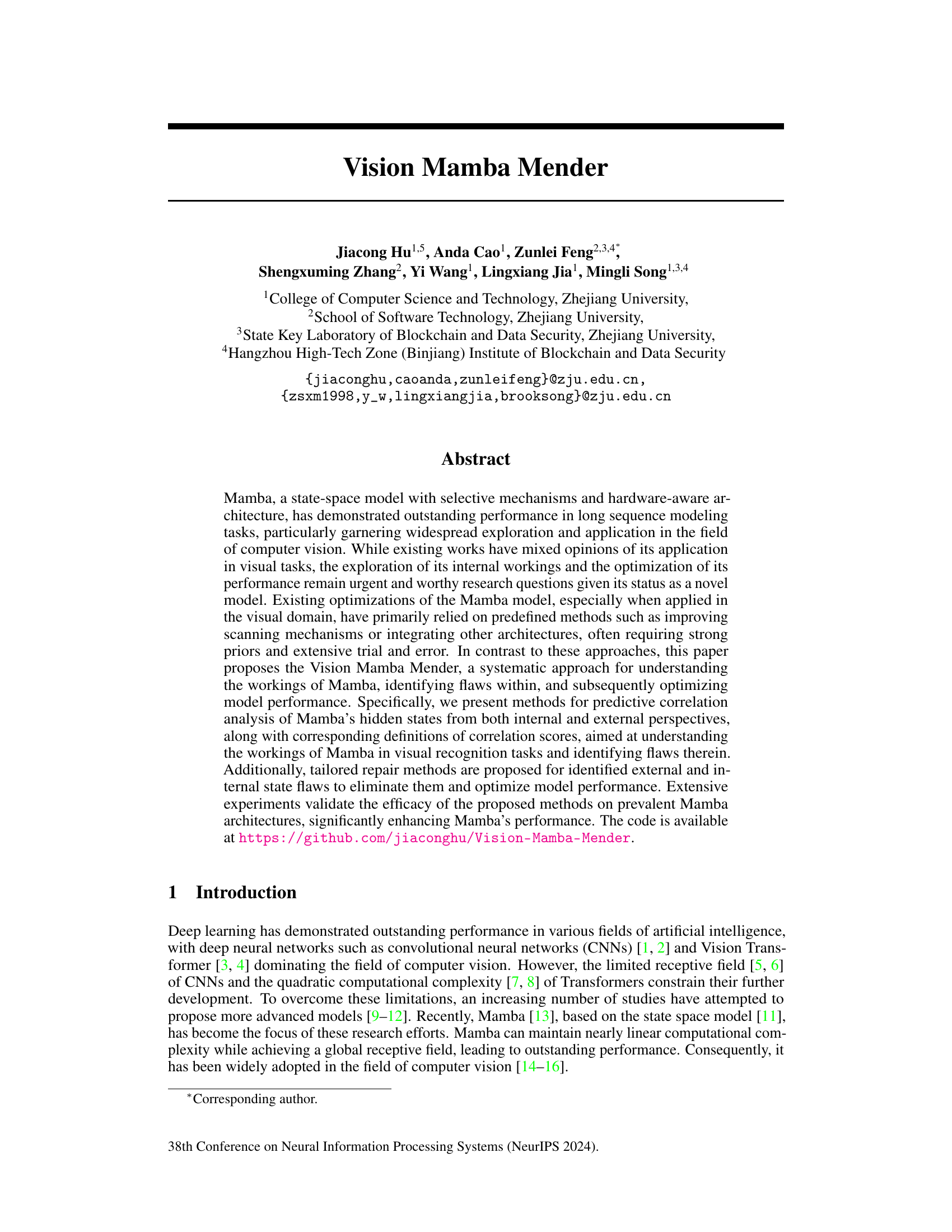

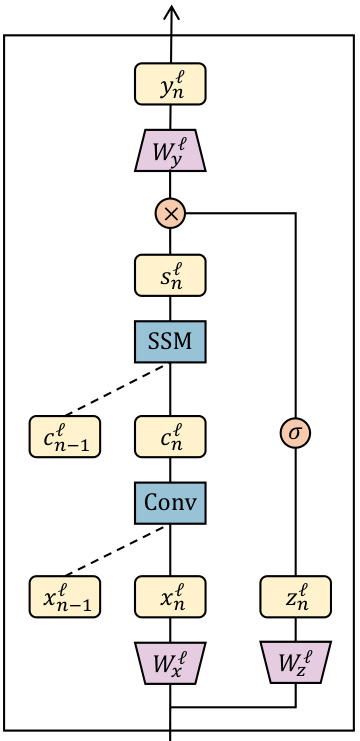

🔼 This figure shows a detailed breakdown of the computational process within a single Mamba block. It illustrates the flow of information through the various modules, including the causal convolution, selective state space model (SSM), and gating mechanism, highlighting the interactions between different intermediate states (x, z, c, s, y) and the final hidden state (h). The figure visually represents the equations provided in the paper detailing the Mamba block’s operations.

read the caption

Figure 1: The computational process of a Mamba block.

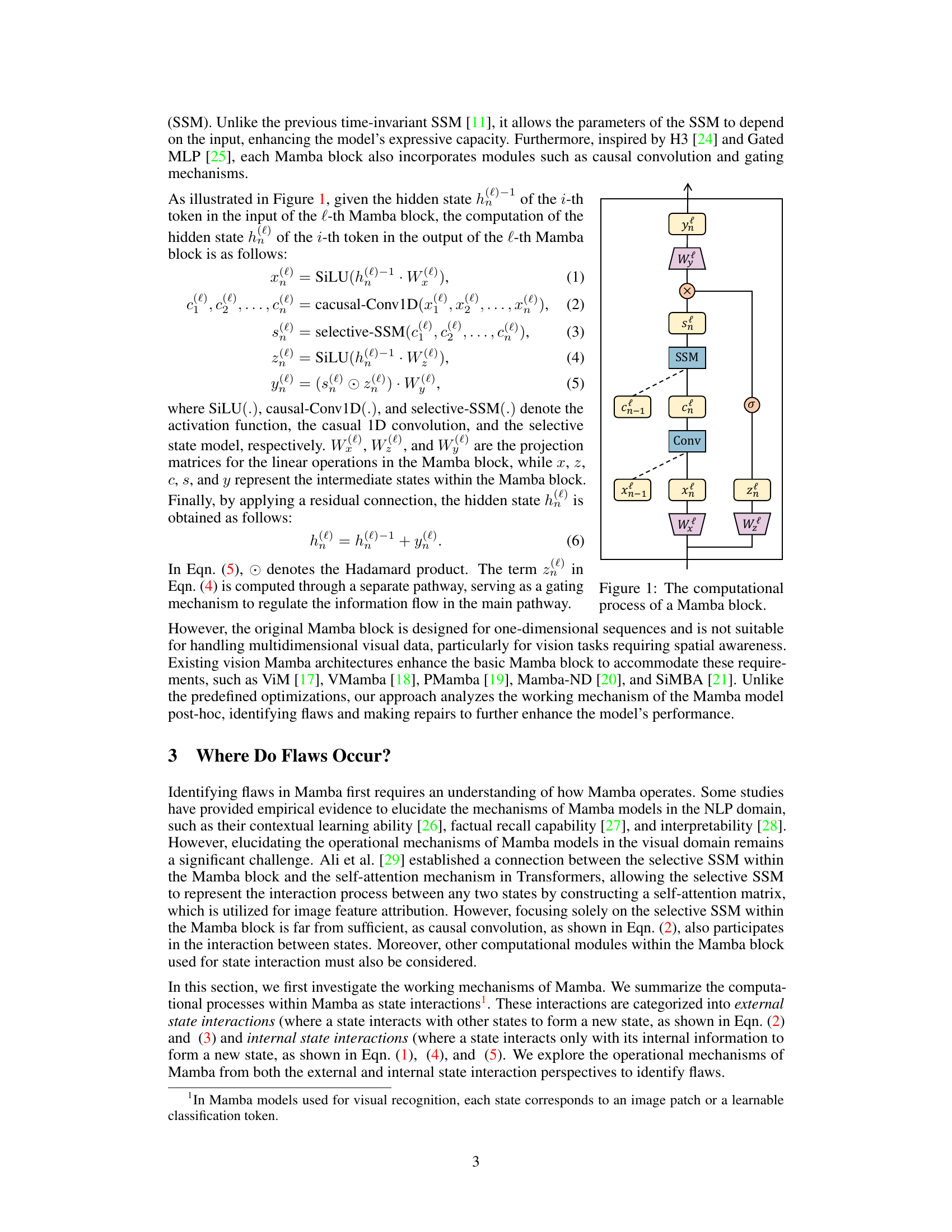

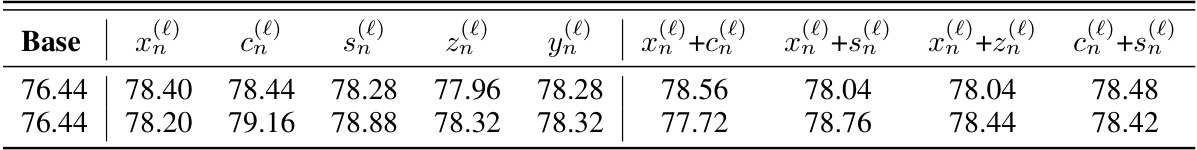

🔼 This table compares the model accuracy before and after applying external and internal flaw repair techniques to different states (xn(l), cn(l), sn(l), zn(l), yn(l)) within the last block of the ViM model. The experiment uses the ImageNet-50 dataset. The ‘Base’ row shows the original model’s accuracy. ‘+Ext’ shows accuracy after external flaw repair, ‘+Int’ after internal flaw repair, and ‘+All’ after both.

read the caption

Table 1: Comparison of model accuracy after external state flaw repair for different states. Taking xn(l) as an example, it represents the external flaw repair of state xn(l). The results in the second row of the table correspond to external flaw repair, while the third row presents the outcomes of internal flaw repair. The experiment was conducted within the last block of the ViM model, using the ImageNet-50 dataset.

In-depth insights#

Mamba’s Visual Flaws#

Analyzing “Mamba’s Visual Flaws” requires a nuanced understanding of the Mamba model’s architecture and its application to computer vision tasks. The core issue appears to be a disconnect between the model’s internal state representations and its ability to accurately interpret visual information. This could stem from several factors: limitations of the selective state-space model in handling complex visual patterns, insufficient contextual information being incorporated into state updates, or an architectural mismatch between the model and the nature of visual data itself. Investigating the predictive correlation between hidden states and model outputs is key; low correlation in specific regions indicates flawed reasoning. The proposed solutions of predictive correlation analysis and targeted repair mechanisms aim to address these flaws directly, improving the Mamba model’s comprehension of visual scenes and leading to enhanced performance in downstream tasks. A critical aspect would be to explore different Mamba variants and analyze if the same kinds of flaws arise, providing insights into possible model-agnostic solutions.

Post-Hoc Mamba Tuning#

Post-Hoc Mamba Tuning presents a novel approach to optimizing the Mamba model, shifting from pre-defined architectural modifications to a data-driven, post-hoc analysis. This method focuses on identifying and rectifying flaws within the model’s internal workings, rather than relying on heuristic improvements. By analyzing correlations between hidden states and predictions, the approach pinpoints areas for targeted intervention. The strength of this technique lies in its systematic nature and adaptability, allowing for optimization across various Mamba-based architectures. Predictive correlation analysis plays a crucial role, enabling a quantitative assessment of the model’s internal flaws and facilitating focused repairs. The result is a more efficient and robust Mamba model, demonstrating the power of post-hoc analysis in model enhancement.

Correlation Analysis#

Correlation analysis in this context likely involves assessing relationships between different variables within a model. It is crucial for understanding the internal mechanisms of the model and its predictive performance. This analysis is particularly important in complex models where direct interpretation is difficult. The authors probably employed quantitative metrics to measure correlation strengths, perhaps using statistical methods like Pearson correlation coefficient or more sophisticated techniques if dealing with non-linear relationships. A key aspect would be identifying strong or weak correlations, which could signal areas of effective or flawed model functioning. By visualizing these correlations (e.g., using heatmaps or other graphical representations), patterns or anomalies could be detected, leading to insights on which aspects of the model deserve more scrutiny. The investigation of correlations between internal states and external outputs may reveal bottlenecks or redundancies in the model’s design or data flow. Ultimately, the results from the correlation analysis serve as a foundational step for further improvements in model architecture or training procedures.

Repair Methodologies#

The effectiveness of the proposed Vision Mamba Mender hinges on its repair methodologies for addressing flaws detected through correlation analysis. Two key strategies are implemented: one for rectifying external state flaws, focusing on improving the correlation between external states and predictions, particularly for difficult samples; and another for internal state flaws, aiming to enhance the consistency and simplicity of internal state correlations. The repair methods use carefully designed loss functions incorporated into the training process, effectively guiding the model to correct its behavior. The external repair method involves constraining external correlations to foreground regions, while the internal repair strategy imposes constraints on internal correlations to improve consistency. This two-pronged approach demonstrates the efficacy of a post-hoc, systematic method to refine a novel state-space model for visual tasks, overcoming the limitations of prior predefined model modifications.

Vision Mamba Future#

A ‘Vision Mamba Future’ section would explore the potential of Mamba models in computer vision. Further research should focus on addressing the limitations of current Mamba architectures, particularly their applicability to high-resolution images and complex visual scenes. Improving the model’s efficiency and scalability is crucial for real-world applications. Investigating the model’s robustness to noise and adversarial attacks is also essential. The exploration of novel applications, such as in medical imaging analysis or autonomous driving systems, would highlight the model’s versatility. Finally, a discussion of potential ethical implications associated with its use in high-stakes visual recognition tasks is vital.

More visual insights#

More on figures

🔼 This figure visualizes the external state correlation of the output from the selective SSM module within different Vision Mamba (ViM) blocks. Each image shows the correlation for a specific state (s(l)) and block (l). The depth (number of blocks) of the ViM model increases from left to right. The heatmaps represent the correlation with the foreground, where brighter regions indicate stronger correlations. The figure aims to illustrate how the external state correlations vary across different blocks and layers of the ViM architecture, which is a key observation in understanding the model’s mechanisms and identifying potential flaws in the external states.

read the caption

Figure 2: Visualization of the external state correlation e(l,s) of the output s(l) from the selective-SSM module in different ViM [17] blocks. The depth of the ViM blocks increases from left to right.

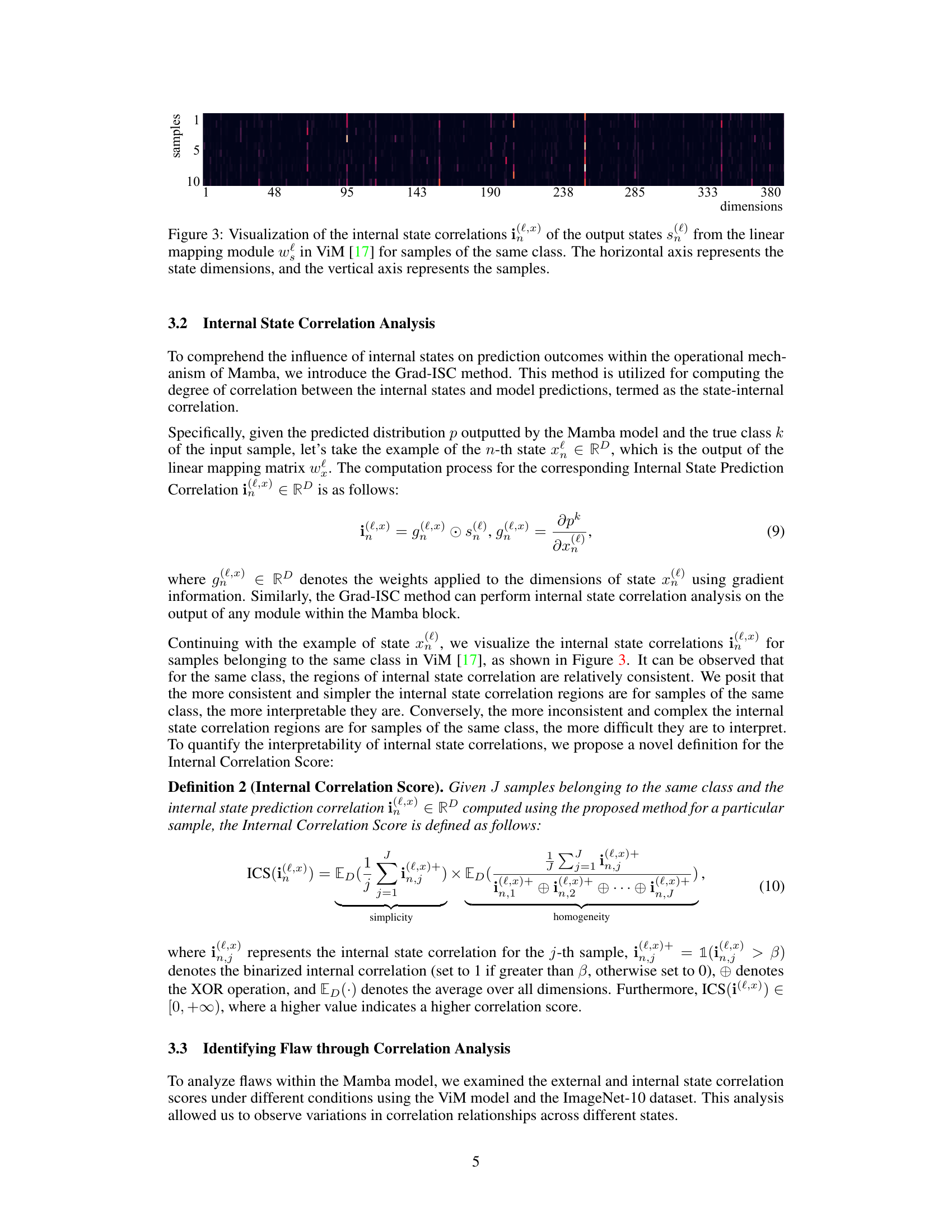

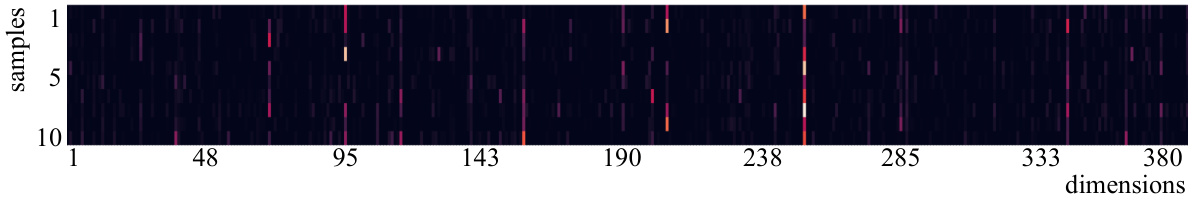

🔼 This figure visualizes internal state correlations in the ViM model for samples belonging to the same class. The heatmap shows the correlation between internal states and model predictions. Each row represents a sample, and each column represents a dimension of the internal state. Warmer colors indicate stronger correlation. This visualization helps to understand how internal states contribute to the model’s predictions for different samples of the same class, revealing patterns of consistency and complexity.

read the caption

Figure 3: Visualization of the internal state correlations i(l,x) of the output states s(l) from the linear mapping module w(l) in ViM [17] for samples of the same class. The horizontal axis represents the state dimensions, and the vertical axis represents the samples.

🔼 This figure compares external and internal state correlation scores for simple and difficult samples across different blocks in the Mamba model. Panels (a) and (b) show the external correlation scores, while (c) and (d) display internal correlation scores. The comparison highlights differences in correlation patterns between easy and hard examples, potentially indicating flaws within the Mamba model’s internal mechanisms.

read the caption

Figure 4: Comparison of external and internal state correlation scores across different blocks in the Mamba model between simple and difficult samples. (a) and (b) show the external state correlation scores for simple and difficult samples, respectively. (c) and (d) present the internal state correlation scores for simple and difficult samples, respectively.

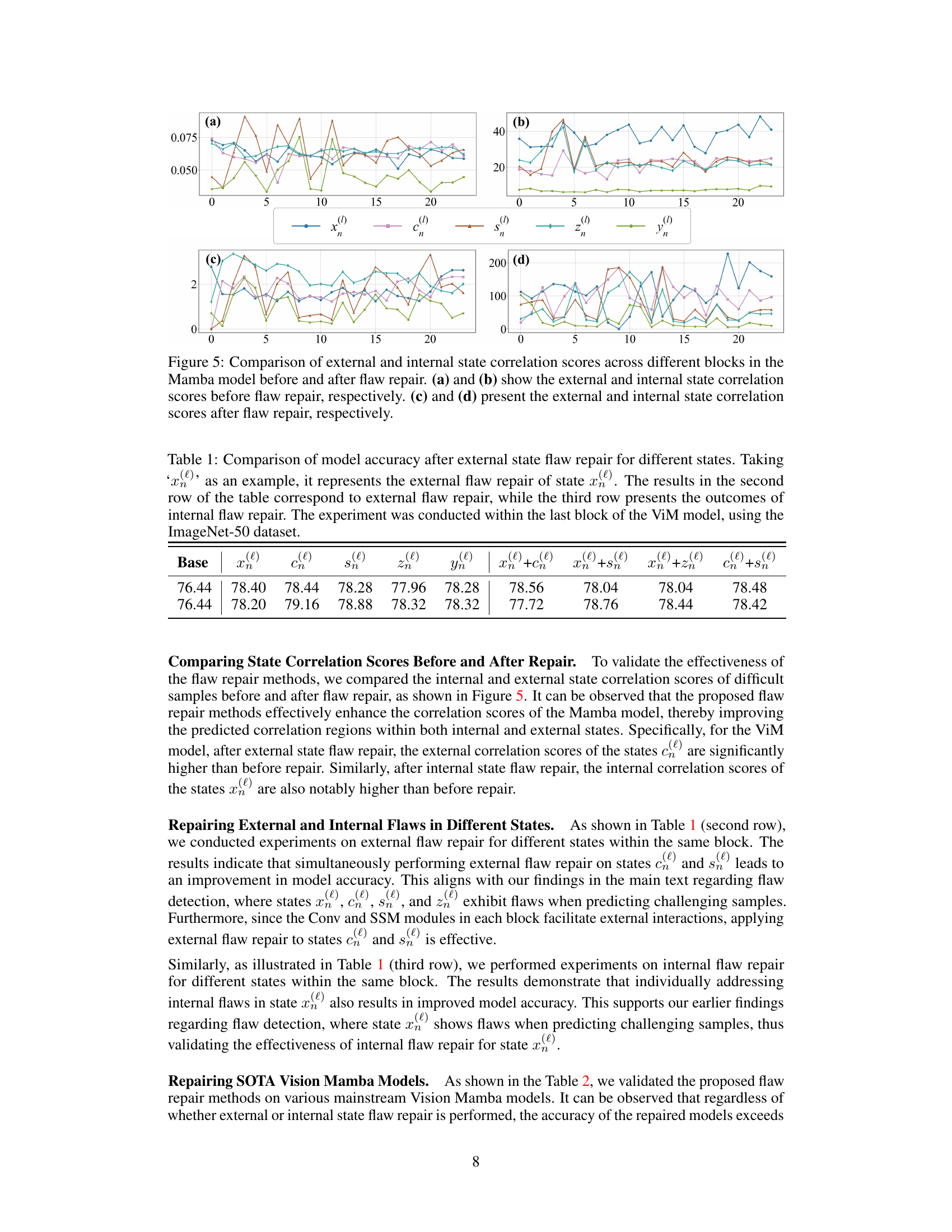

🔼 This figure compares external and internal state correlation scores across different blocks of the Mamba model for both simple and difficult samples. Panels (a) and (b) display the external state correlation scores, while panels (c) and (d) show the internal state correlation scores. The comparison helps identify flaws in the Mamba model’s workings by showing differences in correlation patterns between easy and hard samples, suggesting potential areas for improvement or repair.

read the caption

Figure 4: Comparison of external and internal state correlation scores across different blocks in the Mamba model between simple and difficult samples. (a) and (b) show the external state correlation scores for simple and difficult samples, respectively. (c) and (d) present the internal state correlation scores for simple and difficult samples, respectively.

🔼 The figure visualizes the external state correlation of the output from the selective-SSM module in different Vision Mamba (ViM) blocks. Each image represents the external state correlation for a given block, and the depth of the ViM block increases from left to right. The correlation scores are visualized as heatmaps, indicating the degree of correlation between external states and the model’s prediction outcomes. The figure shows how the external state correlations change across various depths of the ViM blocks, suggesting the impact of external states on the model’s predictive ability.

read the caption

Figure 2: Visualization of the external state correlation e(l,s) of the output s(l) from the selective-SSM module in different ViM [17] blocks. The depth of the ViM blocks increases from left to right.

🔼 This figure shows the impact of different thresholds (α) on external state correlation scores for both simple and difficult samples. The plots visualize how the correlation scores vary across different states (x, c, s, z, y) within the Mamba model for different α values. Comparing the left (simple samples) and right (difficult samples) columns allows for the observation of how the threshold affects the model’s ability to distinguish between foreground and background correlations in easy versus challenging prediction tasks.

read the caption

Figure 6: Comparison of external state correlation scores for simple and difficult samples under different threshold values of α. The left column of images shows the external state correlation scores for simple samples at various α values. The right column of images shows the external state correlation scores for difficult samples at various α values.

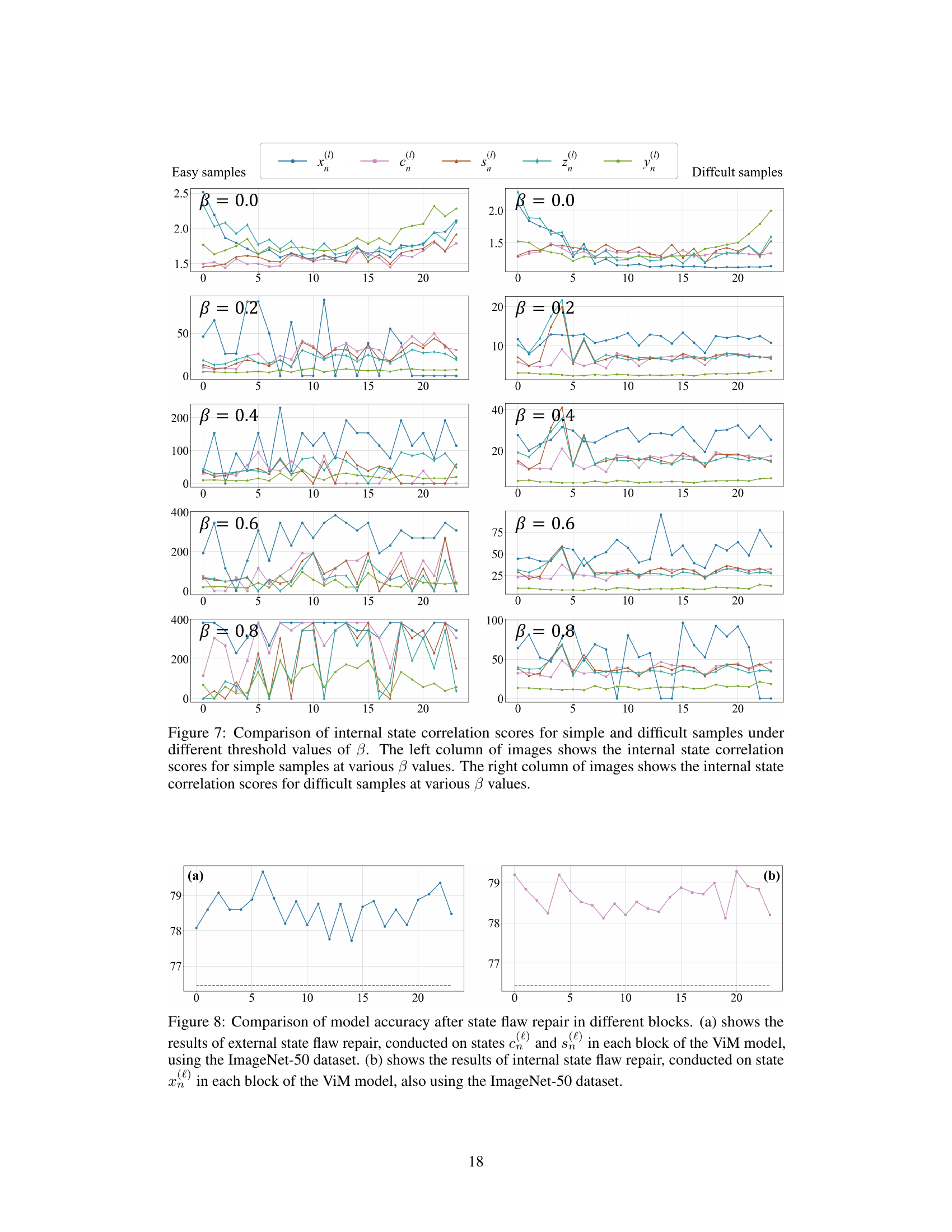

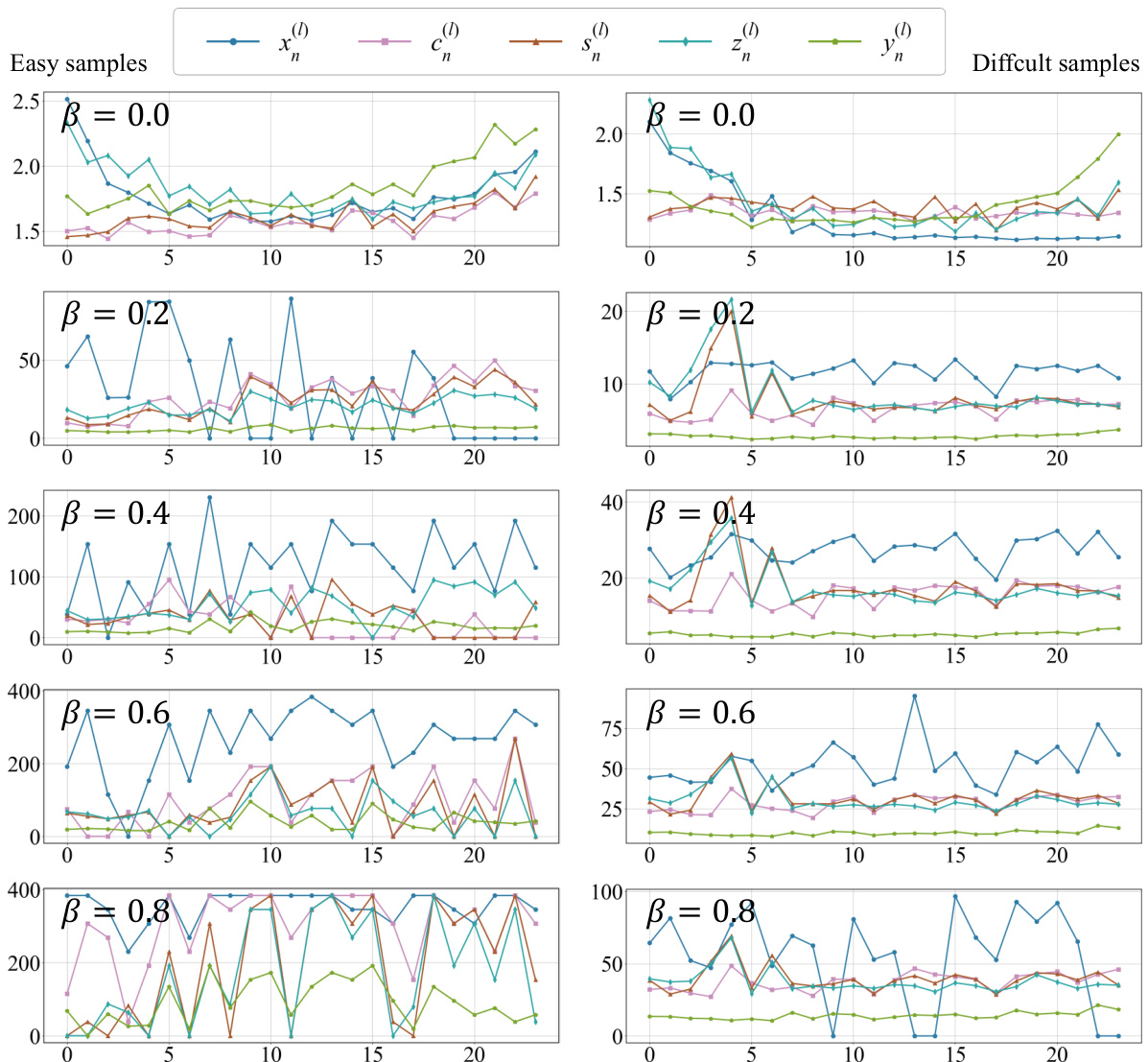

🔼 This figure compares the internal state correlation scores for both simple and difficult samples across different thresholds (β). It visualizes how the internal correlations vary across different states (x, c, s, z, y) within the Mamba model under different threshold settings. By comparing simple and difficult samples, the study aims to identify potential flaws or inconsistencies in the internal state correlations that might contribute to prediction errors for difficult samples.

read the caption

Figure 7: Comparison of internal state correlation scores for simple and difficult samples under different threshold values of β. The left column of images shows the internal state correlation scores for simple samples at various β values. The right column of images shows the internal state correlation scores for difficult samples at various β values.

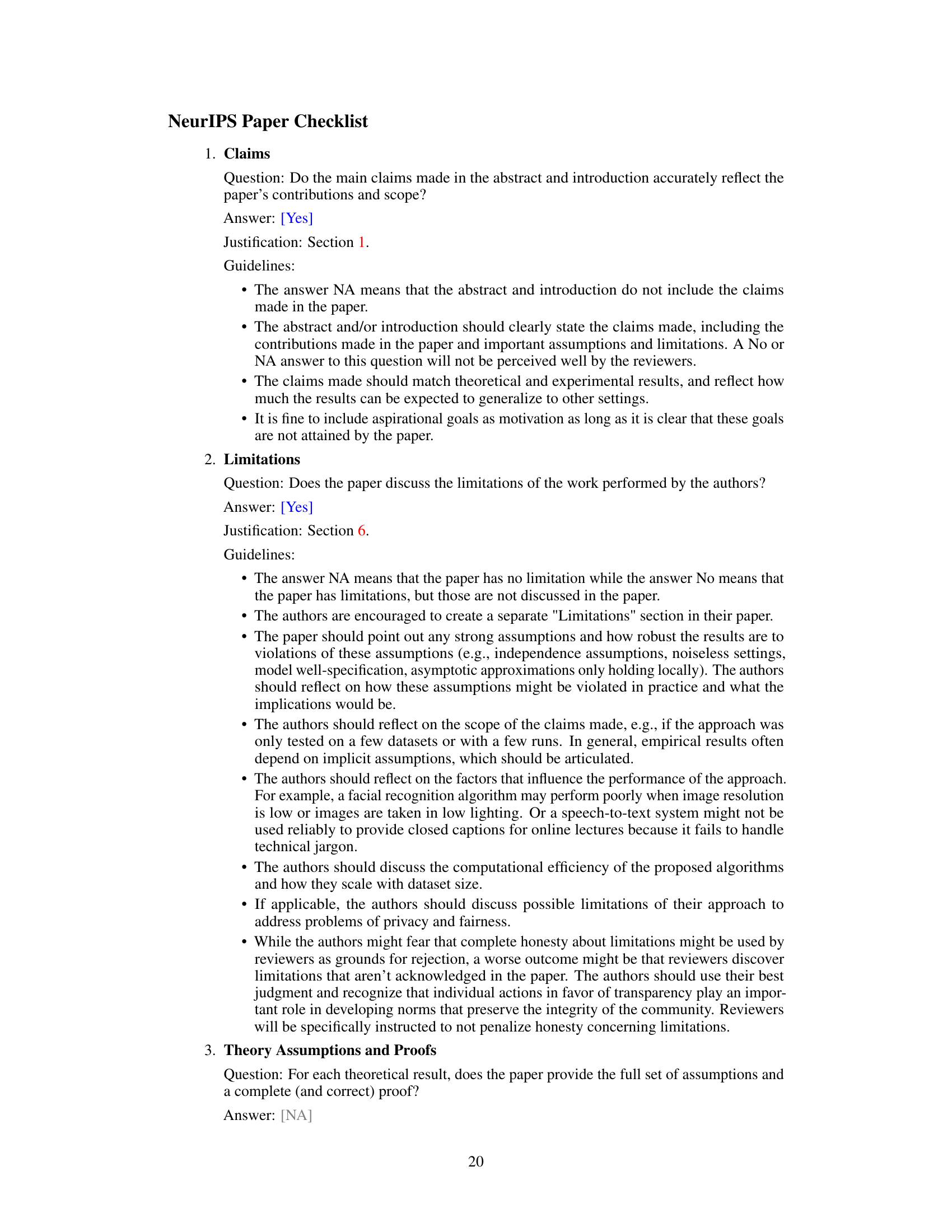

🔼 This figure compares the model accuracy after applying external and internal state flaw repair in different blocks of the ViM model using ImageNet-50 dataset. It shows that fixing these flaws leads to improved accuracy, but the improvement varies across different blocks due to model architecture and the influence of flaws in different blocks.

read the caption

Figure 8: Comparison of model accuracy after state flaw repair in different blocks. (a) shows the results of external state flaw repair, conducted on states c(ℓ)n and s(ℓ)n in each block of the ViM model, using the ImageNet-50 dataset. (b) shows the results of internal state flaw repair, conducted on state x(ℓ)n in each block of the ViM model, also using the ImageNet-50 dataset.

Full paper#