OpenReview · NeurIPS Homepage

TL;DR

Accurate medium-range weather forecasting is crucial but challenging because of the complexity of atmospheric systems and the limitations of existing numerical models. Data-driven deep learning approaches have shown promise, but they often rely on complex architectures without sufficient analysis of which components matter. This makes it hard to understand what drives their success and limits reproducibility.

This paper introduces Stormer, a simple transformer model that achieves state-of-the-art results. Its key components are a weather-specific embedding; a randomized dynamics forecasting objective, which trains the model to predict weather dynamics over multiple time intervals so that forecasts made with different intervals can be combined at inference; and a pressure-weighted loss function. Stormer outperforms existing methods at lead times beyond 7 days while requiring significantly less training data and compute, and its accuracy improves consistently with model size and training data.

Key Takeaways

Why does it matter?

This paper is valuable for researchers in weather forecasting and deep learning because of its state-of-the-art performance, efficient architecture, and scalable design. It points toward foundation models for weather prediction, better accuracy at longer lead times, and lower computational costs. Its emphasis on empirical analysis also helps other researchers understand which factors actually contribute to forecasting accuracy.

Visual Insights

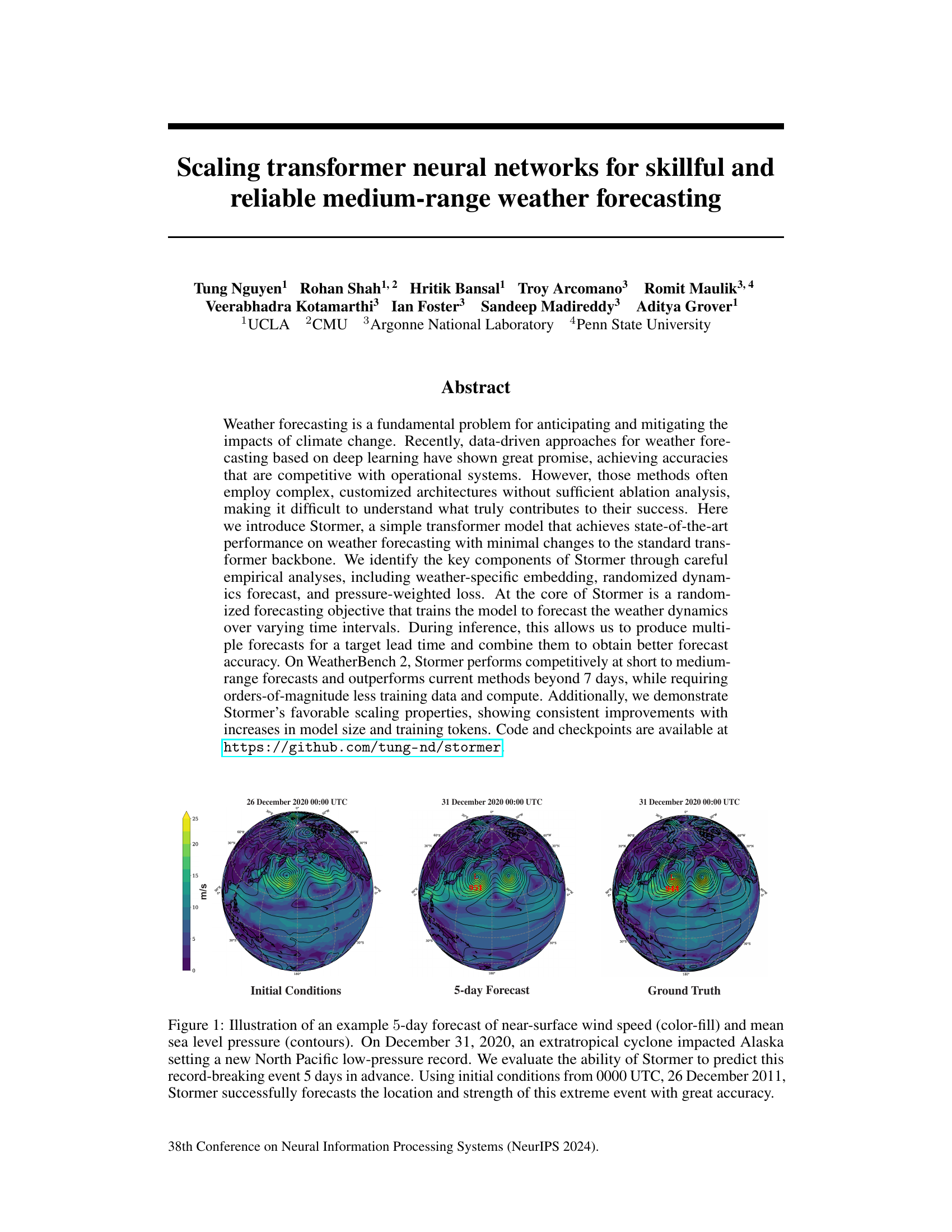

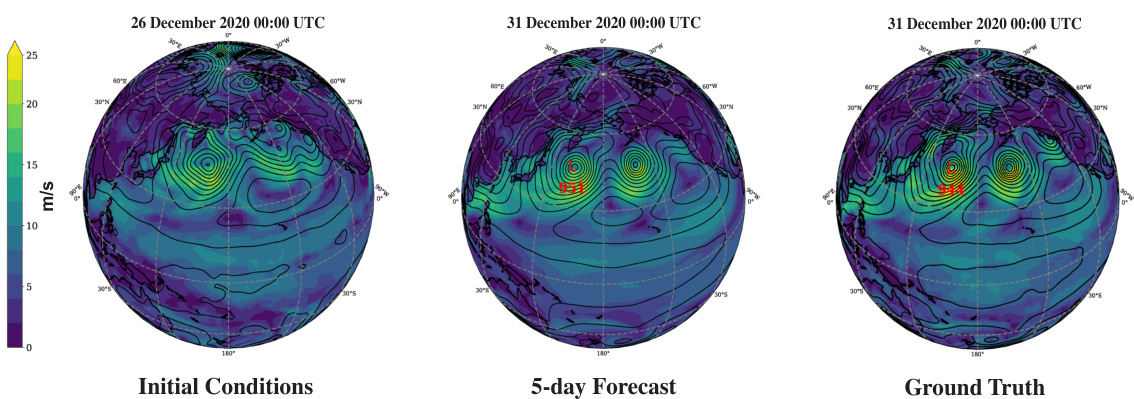

This figure shows a comparison of the initial conditions, a 5-day forecast generated by the Stormer model, and the ground truth for near-surface wind speed and mean sea level pressure. The comparison highlights Stormer’s ability to accurately predict an extratropical cyclone in Alaska that set a new North Pacific low-pressure record.

In-depth insights

Stormer’s Design

Stormer’s design centers on a streamlined transformer architecture that intentionally minimizes complexity for efficiency and interpretability. Its main changes to a standard transformer are as follows. A weather-specific embedding layer uses cross-attention to model interactions between atmospheric variables, improving the input representation. A randomized dynamics forecasting objective trains the model to predict weather dynamics over various time intervals, so Stormer can generate multiple forecasts for the same lead time and aggregate them, which improves accuracy, particularly at longer lead times. A pressure-weighted loss function prioritizes near-surface variables, in line with their meteorological importance and societal impact. Finally, adaptive layer normalization (adaLN) replaces standard layer normalization in the transformer blocks, letting the model condition on the time interval. These design choices, supported by extensive ablation studies, contribute to Stormer’s strong performance and favorable scaling properties.
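A minimal NumPy sketch of a pressure-weighted loss follows. It assumes, as an illustration rather than the paper's exact scheme, that each pressure-level variable is weighted in proportion to its pressure (normalized to mean 1), so levels nearer the surface, which have higher pressure, count more. Surface-variable weights and latitude weighting are left out, and the names `pressure_weights` and `weighted_mse` are hypothetical.

```python
import numpy as np

def pressure_weights(levels_hpa):
    """Per-level weights proportional to pressure, normalized to mean 1 (assumed scheme)."""
    p = np.asarray(levels_hpa, dtype=float)
    return p / p.mean()

def weighted_mse(pred, target, var_weights):
    """pred, target: (V, H, W) fields; var_weights: (V,).

    Computes the MSE of each variable, multiplies it by that variable's weight,
    and averages over variables.
    """
    per_var = ((pred - target) ** 2).mean(axis=(1, 2))  # (V,) unweighted MSE per variable
    return float(np.mean(np.asarray(var_weights) * per_var))
```

For levels of 50, 500, and 850 hPa, the 850 hPa level (closest to the surface) receives the largest weight, so errors there dominate the loss.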

Random Dynamics

The concept of ‘Random Dynamics’ in the context of weather forecasting using neural networks introduces a novel training paradigm. Instead of training the model to predict weather conditions at fixed time intervals, it is trained on randomly selected intervals. This approach has several advantages. First, it increases the effective training dataset size, improving the model’s generalization capabilities. Second, it allows the model to capture the complexities of atmospheric dynamics across a range of timescales, leading to more robust and accurate forecasts, particularly at longer lead times where the chaotic nature of the weather is most prominent. During inference, the model can produce multiple forecasts for a target lead time by combining predictions from various intervals, thus reducing uncertainty and enhancing overall forecast accuracy. This approach is particularly beneficial for medium-range weather forecasting, where achieving skillful predictions remains a significant challenge.
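The randomized training objective can be sketched as follows. This is a minimal illustration assuming snapshots stored every 6 hours and the 6-, 12-, and 24-hour intervals used in the ablations; `sample_training_pair` is a hypothetical helper, and details such as normalizing the target are omitted. Each call draws a random interval and returns the change in state over that interval as the training target.

```python
import random

INTERVALS_H = (6, 12, 24)  # candidate intervals in hours
STEP_H = 6                 # assumption: stored snapshots are 6 hours apart

def sample_training_pair(trajectory, rng):
    """Draw one (x_t, dt, target) example from a sequence of snapshots.

    trajectory: sequence of states (floats or NumPy arrays) spaced STEP_H hours apart.
    The target is the dynamics X[t + dt] - X[t], not the raw future state.
    """
    dt = rng.choice(INTERVALS_H)             # random interval for this example
    k = dt // STEP_H                         # number of stored steps spanned by dt
    t = rng.randrange(len(trajectory) - k)   # random start so that t + k stays in range
    x_t = trajectory[t]
    return x_t, dt, trajectory[t + k] - x_t
```

Because every start time can be paired with several intervals, the same trajectory yields more distinct training examples than a fixed-interval objective would.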

Ablation Studies

Ablation studies are crucial for understanding the contribution of individual components within a complex system. In this paper, ablation studies systematically remove or alter specific aspects of the model (e.g., the randomized dynamics forecasting objective, the pressure-weighted loss, or the embedding technique) to isolate their impact on overall performance. The results reveal which components are essential for state-of-the-art results and which may be redundant or even detrimental. A robust ablation study demonstrates the relative importance of different model features and provides clear evidence for design choices. It also helps confirm that the model’s effectiveness is not merely due to overfitting or other training artifacts, lending greater credibility to the proposed approach. The findings can be used to refine the architecture, tune hyperparameters, and improve generalization. By dissecting the model through controlled experiments, ablation studies illuminate the mechanisms behind its performance and give a more complete picture of its strengths and weaknesses.

Scalability

The study’s scalability analysis is crucial for evaluating the model’s potential for real-world applications. It investigates how Stormer’s performance changes with increasing model size and training data. The results demonstrate consistent improvements in forecast accuracy with larger models and more training tokens, highlighting the model’s favorable scaling properties. This is a particularly valuable finding as it suggests that Stormer can benefit from future advancements in computing resources to achieve even greater forecast accuracy. Smaller patch sizes, leading to a higher number of tokens, also enhance performance, possibly because they capture finer-grained atmospheric details. The study’s focus on scalability underscores the importance of creating models that can effectively leverage larger datasets and more powerful computing infrastructure for improved weather forecasting, which is critical given the complexities and data requirements of this field.

Future Work

The authors propose several avenues for future research. Improving uncertainty quantification is a key goal, since current forecasts are underdispersive. They plan to explore techniques for better probabilistic forecasts that represent uncertainty more accurately, for example by using the multiple forecasts available for each lead time, or by building an ensemble through randomizing other elements of the model or perturbing the initial conditions (ICs). Improving scalability is another priority: they aim to study training Stormer on higher-resolution data and with larger models, building on the favorable scaling properties already demonstrated. Lastly, they suggest applying Stormer beyond weather prediction, for example within a broader climate-modeling framework. By combining the strengths of data-driven and physics-based approaches, future studies could build more comprehensive and accurate Earth system models.

More visual insights

More on figures

The figure illustrates four approaches to weather forecasting: Direct, Continuous, Iterative, and Randomized Iterative forecasting. Direct forecasting predicts the weather at the target lead time from the initial conditions in a single step. Continuous forecasting additionally takes the target lead time as an input. Iterative forecasting predicts over a small time interval and rolls these predictions out to reach longer lead times. Randomized Iterative forecasting, the authors’ proposed approach, trains the model with randomized time intervals and combines forecasts from different intervals to improve accuracy at various lead times. The figure presents the methods in order of increasing sophistication.
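To make the combination step concrete, here is a sketch that rolls out a model with several homogeneous interval schedules (each repeating one interval) and averages the results. It assumes the model predicts the change in state over an interval dt; the helper names and the toy model in the check are hypothetical, not the authors' code.

```python
INTERVALS_H = (6, 12, 24)  # candidate intervals in hours

def homogeneous_schedules(lead_h):
    """Schedules that repeat one interval, e.g. 24 h -> [6]*4, [12]*2, [24]."""
    return [[dt] * (lead_h // dt) for dt in INTERVALS_H if lead_h % dt == 0]

def rollout(model, x0, schedule):
    """Iterative forecast: the model predicts the change over each interval dt."""
    x = x0
    for dt in schedule:
        x = x + model(x, dt)
    return x

def combined_forecast(model, x0, lead_h):
    """Average the forecasts produced by every homogeneous schedule for lead_h."""
    schedules = homogeneous_schedules(lead_h)
    if not schedules:
        raise ValueError("lead time must be a multiple of the smallest interval")
    forecasts = [rollout(model, x0, s) for s in schedules]
    return sum(forecasts) / len(forecasts)
```

For a 24-hour lead time this averages three forecasts: four 6-hour steps, two 12-hour steps, and one 24-hour step.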

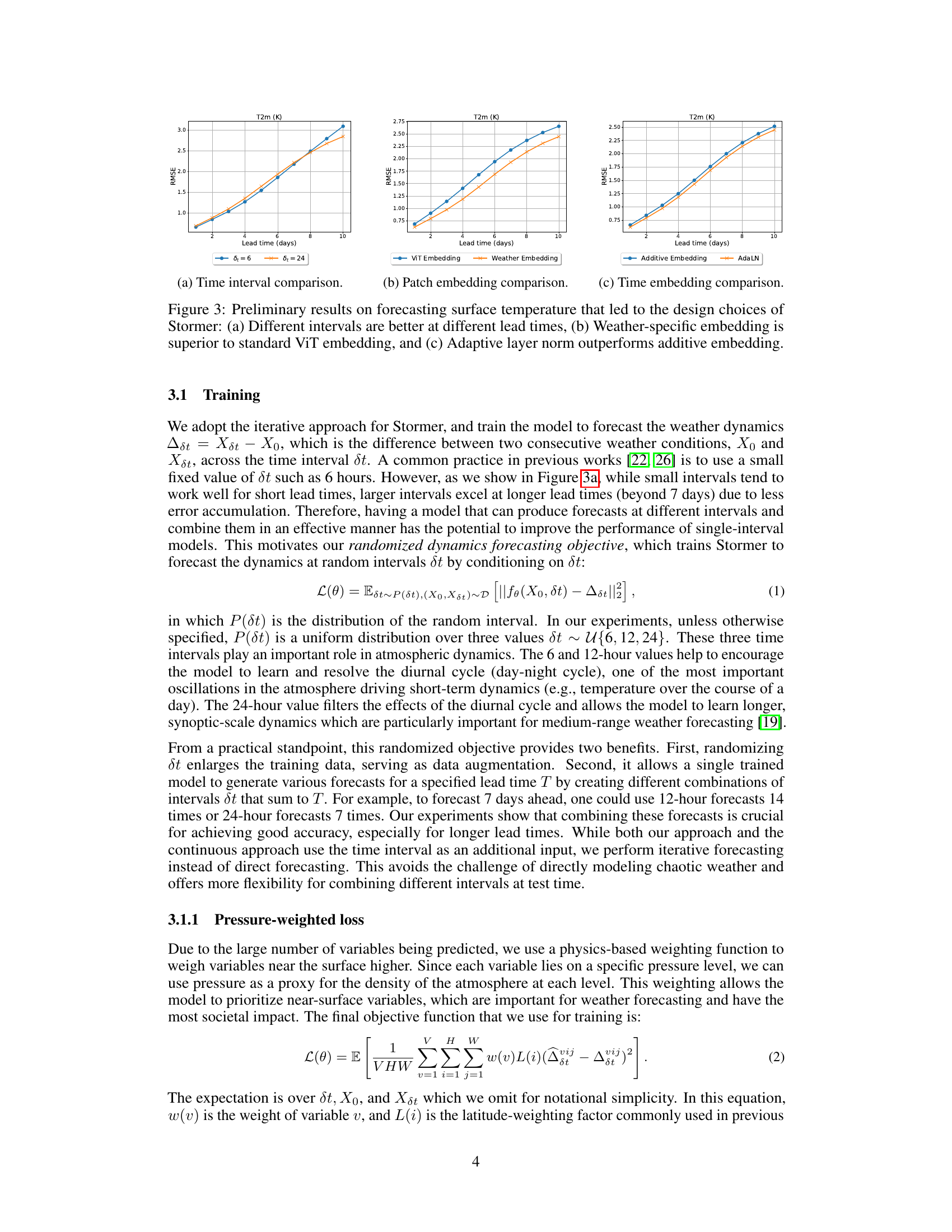

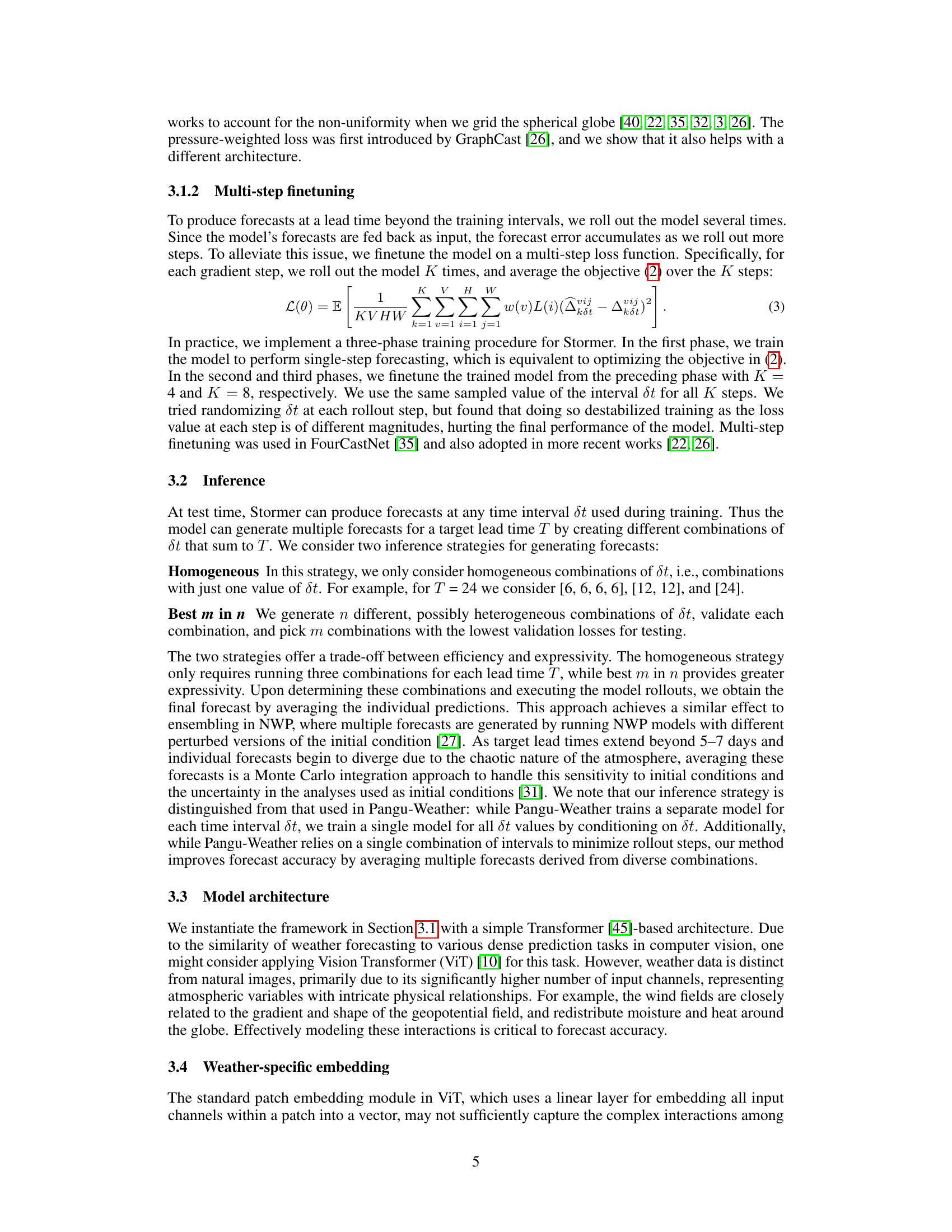

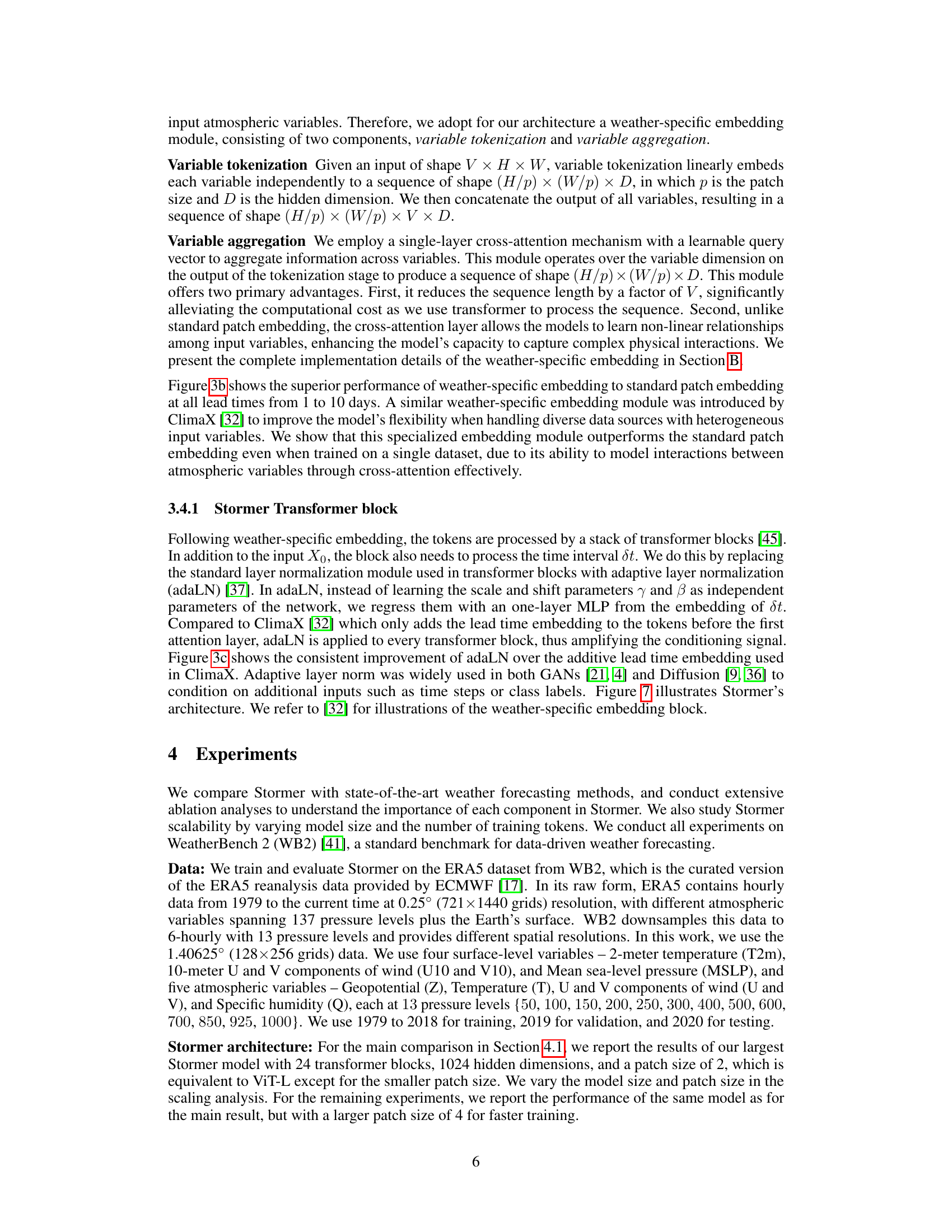

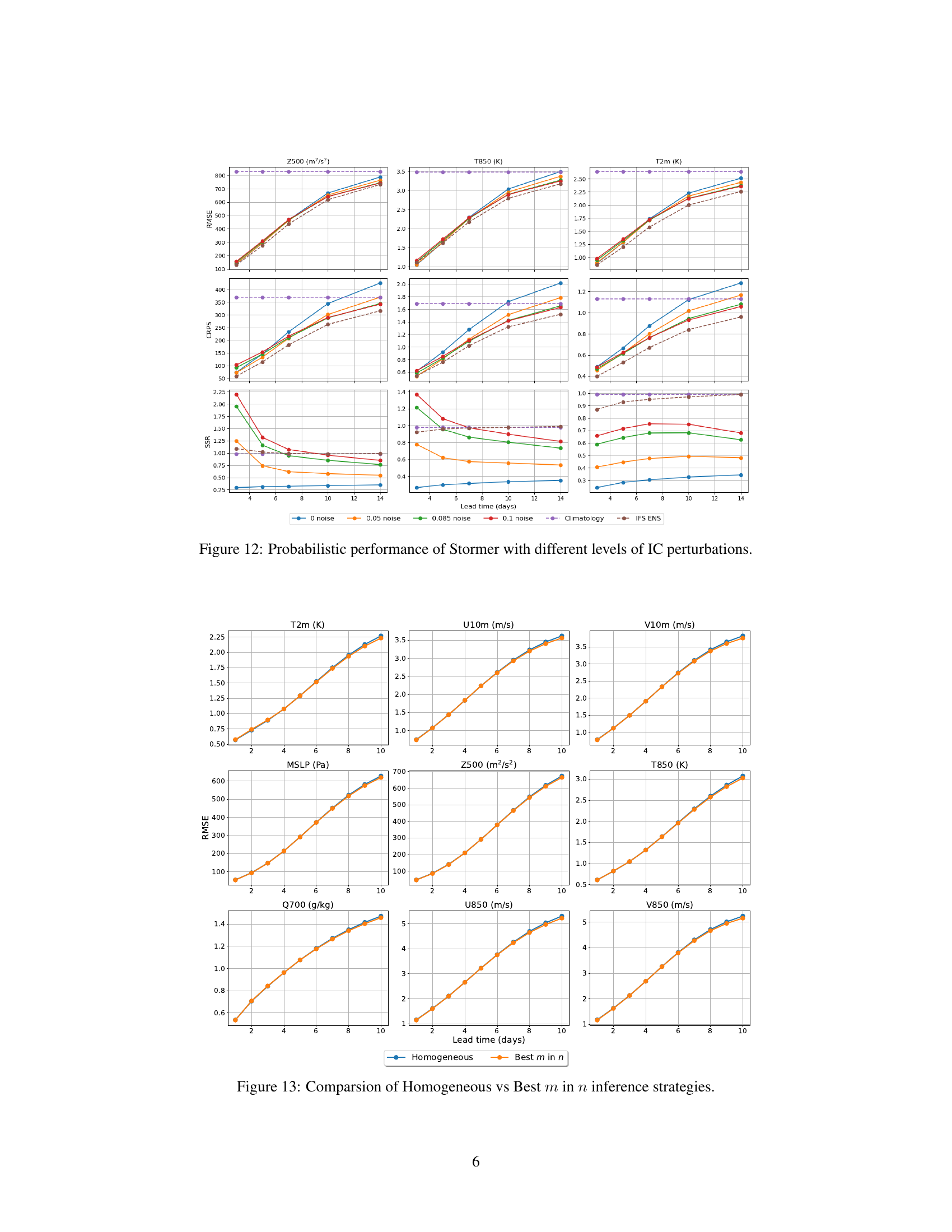

This figure presents ablation study results that guided the design choices in the Stormer model. Specifically, it shows that using different time intervals for forecasting improves accuracy depending on the forecast lead time; a weather-specific embedding layer outperforms the standard ViT embedding; and adaptive layer normalization (AdaLN) is superior to additive embedding for incorporating time information. These findings highlight key design elements of Stormer that contribute to its performance.

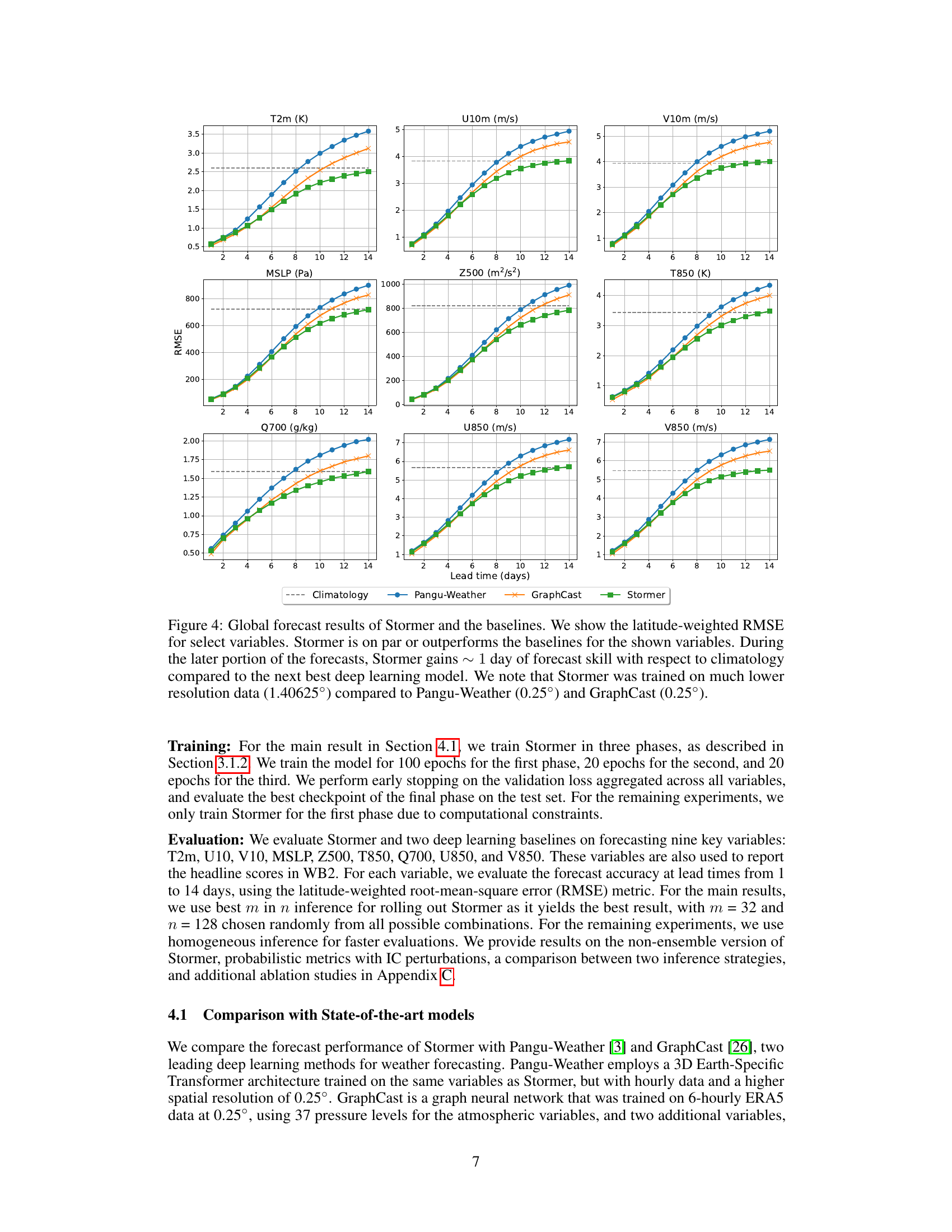

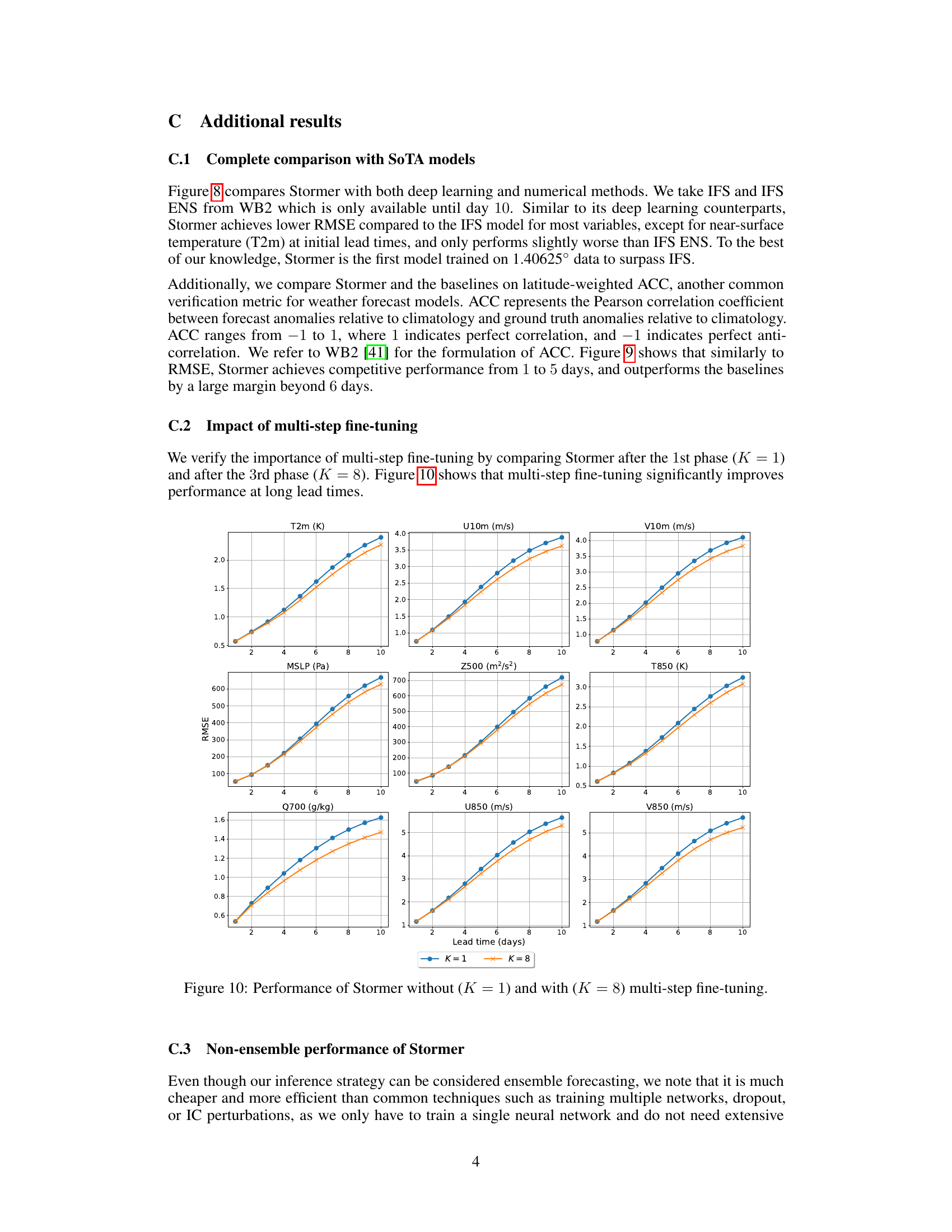

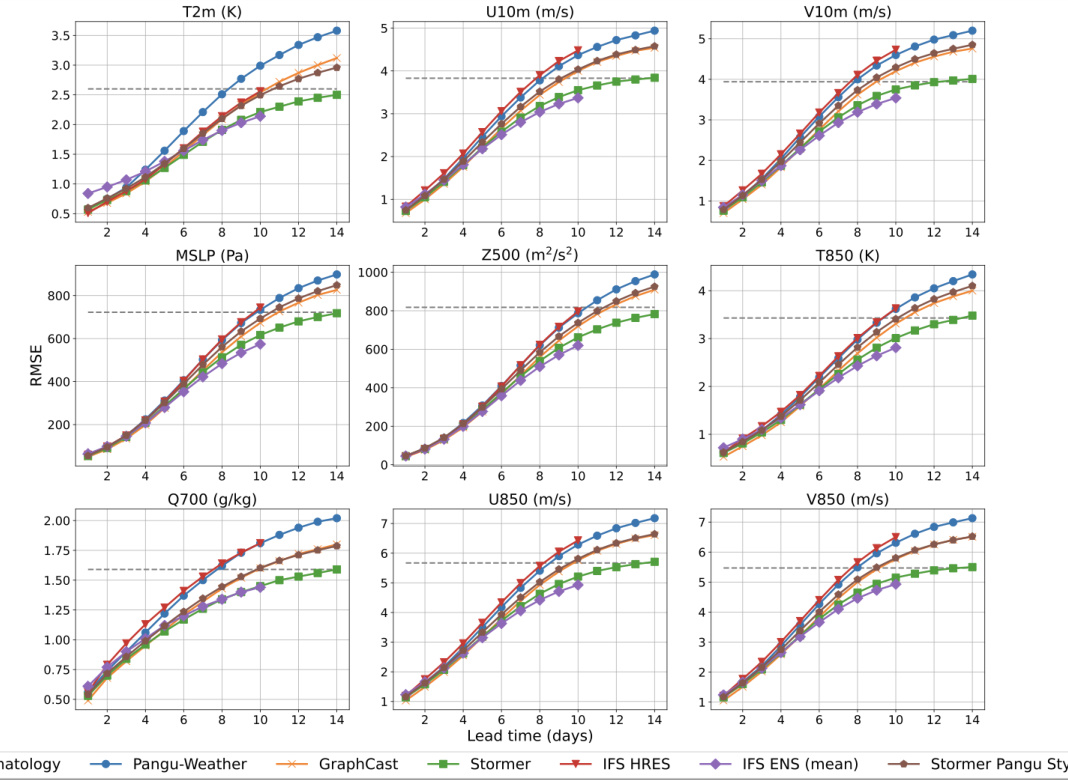

This figure compares the performance of Stormer to other state-of-the-art models (Pangu-Weather and GraphCast) and a climatology baseline in terms of the latitude-weighted root mean square error (RMSE). The results are shown for nine key atmospheric variables over a forecast period of 14 days. Stormer shows competitive or superior performance across most variables, particularly excelling at longer forecast horizons (beyond 7 days). Notably, this performance is achieved despite Stormer being trained on significantly lower resolution data than the other models.
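Latitude weighting compensates for grid cells shrinking toward the poles on a regular latitude-longitude grid. Below is a minimal sketch of latitude-weighted RMSE for a single field, assuming cos(latitude) weights normalized to mean 1; averaging over forecast dates and variables is not shown, and `lat_weighted_rmse` is a hypothetical name.

```python
import numpy as np

def lat_weighted_rmse(pred, truth, lats_deg):
    """pred, truth: (H, W) fields on a regular lat-lon grid; lats_deg: (H,) latitudes."""
    w = np.cos(np.deg2rad(lats_deg))
    w = w / w.mean()                         # normalize so weights average to 1
    sq_err = w[:, None] * (pred - truth) ** 2  # weight each row by its latitude
    return float(np.sqrt(sq_err.mean()))
```

An error near the equator therefore raises the score more than the same error near the poles.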

This figure presents the ablation study results that guided the design decisions for the Stormer model. Panel (a) compares forecasting performance using different time intervals (6, 12, and 24 hours) and shows that the optimal interval varies depending on the forecast lead time. Panel (b) demonstrates that a weather-specific embedding method outperforms the standard Vision Transformer (ViT) embedding. Panel (c) shows that adaptive layer normalization (AdaLN) is superior to additive embedding for incorporating temporal information into the model.
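Below is a simplified sketch of a weather-specific embedding in the spirit described here. Each variable is patch-embedded with its own weights, then a single learnable query attends over the variable tokens at every patch location, producing one token per patch. This is an illustrative assumption, not the paper's implementation: it uses single-head attention without key/value projections and random weights, and `patchify` and `weather_embed` are hypothetical names.

```python
import numpy as np

def patchify(field, p):
    """(H, W) -> (N, p*p) non-overlapping patches, N = (H/p) * (W/p)."""
    H, W = field.shape
    return field.reshape(H // p, p, W // p, p).transpose(0, 2, 1, 3).reshape(-1, p * p)

def weather_embed(x, p, W_var, query):
    """x: (V, H, W) input variables; W_var: (V, p*p, D) per-variable embedding weights;
    query: (D,) learnable aggregation query. Returns (N, D), one token per patch."""
    # 1) Variable tokenization: each variable gets its own linear patch embedding.
    tokens = np.stack([patchify(x[v], p) @ W_var[v] for v in range(x.shape[0])], axis=1)  # (N, V, D)
    # 2) Variable aggregation: the query attends over the V tokens at each patch.
    scores = tokens @ query / np.sqrt(query.shape[0])        # (N, V)
    scores = scores - scores.max(axis=1, keepdims=True)      # numerically stable softmax
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=1, keepdims=True)
    return np.einsum("nv,nvd->nd", attn, tokens)             # attention-weighted sum
```

Unlike a standard ViT embedding, which stacks all variables as channels of one patch, this keeps a separate token per variable until the aggregation step.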

This figure demonstrates Stormer’s scalability in terms of model size and training tokens. The left panel shows the results of training three variants of Stormer with parameter counts similar to ViT-S, ViT-B, and ViT-L, respectively (Stormer-S, Stormer-B, Stormer-L). The consistent improvement in forecast accuracy with larger models is evident, with the performance gap widening as the lead time increases. The right panel illustrates the impact of the number of training tokens by varying the patch size from 16 to 2, quadrupling the tokens each time the size is halved. The results again show a consistent performance improvement with a larger number of training tokens.
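The token counts behind the patch-size experiment follow from simple arithmetic: an H × W grid split into p × p patches yields (H/p)(W/p) tokens, so halving p quadruples the sequence length. The sketch below assumes a 128 × 256 grid (a 1.40625° global grid) purely for illustration; `num_tokens` is a hypothetical helper.

```python
def num_tokens(height, width, patch):
    """Number of non-overlapping patch tokens for a height x width grid."""
    if height % patch or width % patch:
        raise ValueError("grid dimensions must be divisible by the patch size")
    return (height // patch) * (width // patch)

# Assumed grid: 128 x 256 latitude-longitude points.
for p in (16, 8, 4, 2):
    print(p, num_tokens(128, 256, p))
# 16 128
# 8 512
# 4 2048
# 2 8192
```

Because standard self-attention cost grows quadratically with sequence length, the smallest patch sizes are also by far the most expensive to train.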

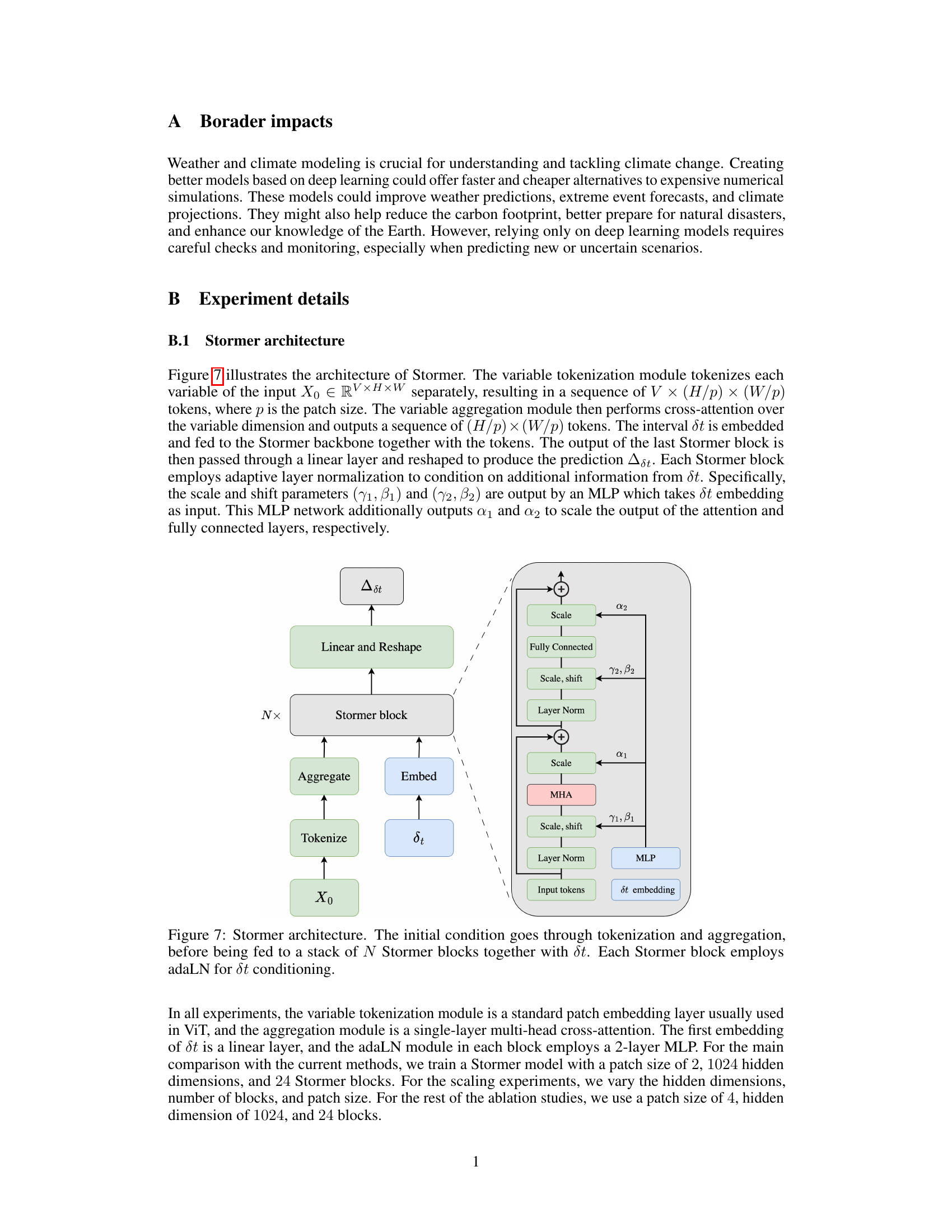

The figure shows the architecture of the Stormer model. The initial weather condition (X0) is first tokenized and aggregated to create a sequence of tokens. These tokens, along with the time interval (δt), are then fed into a stack of Stormer blocks, each incorporating adaptive layer normalization (adaLN) to condition on δt. The output of the final Stormer block is linearly transformed and reshaped to produce a prediction of the weather dynamics (Δδt).
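A minimal sketch of how adaLN can condition tokens on the time interval δt: the interval is embedded, mapped to a per-channel shift and scale, and applied after a parameter-free layer normalization. The sinusoidal interval embedding and the single linear map `W_mod` are simplifying assumptions (adaLN implementations typically use a small MLP and may also produce gating terms), and the function names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """LayerNorm over the last axis without learned affine parameters."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def interval_embedding(dt_hours, dim):
    """Sinusoidal embedding of the interval (illustrative choice; dim must be even)."""
    freqs = np.exp(-np.log(10000.0) * np.arange(dim // 2) / (dim // 2))
    angles = dt_hours * freqs
    return np.concatenate([np.sin(angles), np.cos(angles)])

def ada_ln(x, dt_hours, W_mod):
    """x: (N, D) tokens; W_mod: (D, 2*D) maps the dt embedding to (shift, scale)."""
    D = x.shape[-1]
    shift, scale = np.split(interval_embedding(dt_hours, D) @ W_mod, 2)
    return layer_norm(x) * (1.0 + scale) + shift
```

With a zero `W_mod`, the block reduces to plain layer normalization, which isolates the role of the δt-dependent shift and scale.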

This figure presents a comparison of the forecast performance of Stormer against other state-of-the-art deep learning models (Pangu-Weather and GraphCast) and numerical weather prediction models (IFS HRES and IFS ENS). The results are shown as latitude-weighted RMSE across various lead times (days) for several key atmospheric variables. It demonstrates Stormer’s competitive performance at short lead times and significant gains in skill over other methods at longer lead times (beyond day 6). Importantly, this enhanced performance is achieved despite Stormer being trained on significantly lower-resolution data compared to the other deep learning models.

The figure displays the latitude-weighted root mean square error (RMSE) for nine key atmospheric variables predicted by four different forecasting models: Stormer, Pangu-Weather, GraphCast, and IFS. The results show Stormer’s comparable or superior performance to other models, especially at longer forecast lead times (beyond 7 days). Notably, Stormer’s impressive performance was achieved with significantly less training data and lower resolution compared to the other deep learning models.

This figure presents three subfigures, each showing comparative results of different methods for predicting surface temperature. (a) Compares the effect of using different time intervals (6, 12, and 24 hours) for forecasting, demonstrating that different intervals perform better at different lead times. (b) Compares the performance of a weather-specific embedding versus a standard ViT embedding, showcasing the superiority of the weather-specific method. (c) Shows a comparison between using an adaptive layer normalization (AdaLN) and an additive embedding approach for incorporating time information, illustrating that AdaLN leads to better performance.

This figure presents a comparison of Stormer’s performance against other state-of-the-art deep learning models and traditional numerical weather prediction models for forecasting various atmospheric variables. The comparison is made using the latitude-weighted root mean square error (RMSE) metric across different forecast lead times (days). It highlights Stormer’s competitive performance, particularly its ability to maintain skill for longer lead times, despite using lower resolution data than its competitors. The figure also underscores the relative advantage Stormer gains in forecast accuracy as the prediction window expands beyond 5 days.

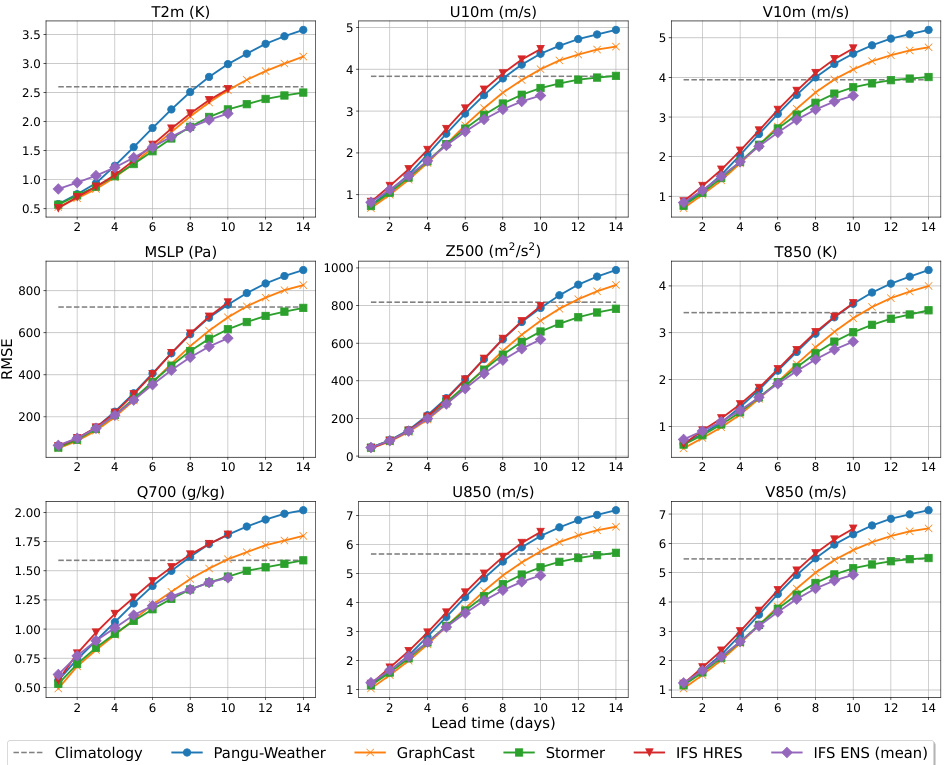

This figure shows the probabilistic performance of Stormer with different levels of initial condition (IC) perturbations. The metrics are RMSE, CRPS, and spread-skill ratio (SSR). The x-axis is the forecast lead time in days, the y-axis is the metric value, and each colored line corresponds to a different level of noise added to the ICs. The figure shows how IC noise affects the model’s probabilistic forecasts and illustrates the trade-off between deterministic accuracy and uncertainty quantification.
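For reference, the two probabilistic metrics can be sketched as follows, assuming an ensemble array of shape (members, ...). CRPS uses the standard ensemble estimator E|X - y| - 0.5 E|X - X'|, and the spread-skill ratio divides the ensemble spread by the RMSE of the ensemble mean, so values below 1 indicate an underdispersive ensemble. Finite-ensemble corrections and latitude weighting are omitted, and the function names are hypothetical.

```python
import numpy as np

def crps_ensemble(members, obs):
    """Ensemble CRPS averaged over grid points.
    members: (M, ...) ensemble forecasts; obs: (...) verifying field."""
    skill = np.abs(members - obs).mean(axis=0)                              # E|X - y|
    spread = np.abs(members[:, None] - members[None, :]).mean(axis=(0, 1))  # E|X - X'|
    return float((skill - 0.5 * spread).mean())

def spread_skill_ratio(members, obs):
    """Ensemble spread (sqrt of mean member variance) over RMSE of the ensemble mean."""
    spread = np.sqrt(members.var(axis=0, ddof=1).mean())
    rmse = np.sqrt(((members.mean(axis=0) - obs) ** 2).mean())
    return float(spread / rmse)
```

With a single member, CRPS reduces to the mean absolute error, which is why it can be compared directly with deterministic scores.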

This figure compares two inference strategies for Stormer: Homogeneous and Best m in n. The Homogeneous strategy combines only forecasts that each repeat a single time interval (dt) all the way to the target lead time, while the Best m in n strategy samples n combinations of intervals and keeps the m combinations with the lowest validation loss. The graph shows the RMSE of each strategy for various meteorological variables across lead times (days), indicating how accurately each strategy predicts these variables over time.
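A sketch of the Best m in n selection, assuming intervals of 6, 12, and 24 hours and a caller-supplied validation-loss function. `random_schedule`, `best_m_in_n`, and `val_loss` are hypothetical names, and candidates are sampled with replacement for simplicity.

```python
import random

INTERVALS_H = (6, 12, 24)  # candidate intervals in hours

def random_schedule(lead_h, rng):
    """Random sequence of intervals from INTERVALS_H that sums exactly to lead_h."""
    if lead_h % min(INTERVALS_H):
        raise ValueError("lead time must be a multiple of the smallest interval")
    schedule, remaining = [], lead_h
    while remaining > 0:
        dt = rng.choice([d for d in INTERVALS_H if d <= remaining])
        schedule.append(dt)
        remaining -= dt
    return schedule

def best_m_in_n(lead_h, m, n, val_loss, seed=0):
    """Sample n schedules (with replacement) and keep the m with the lowest val_loss.
    val_loss: hypothetical callable scoring a schedule on held-out data."""
    rng = random.Random(seed)
    candidates = [random_schedule(lead_h, rng) for _ in range(n)]
    return sorted(candidates, key=val_loss)[:m]
```

The selected schedules would then be rolled out and their forecasts averaged, just as the homogeneous strategy averages its forecasts.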

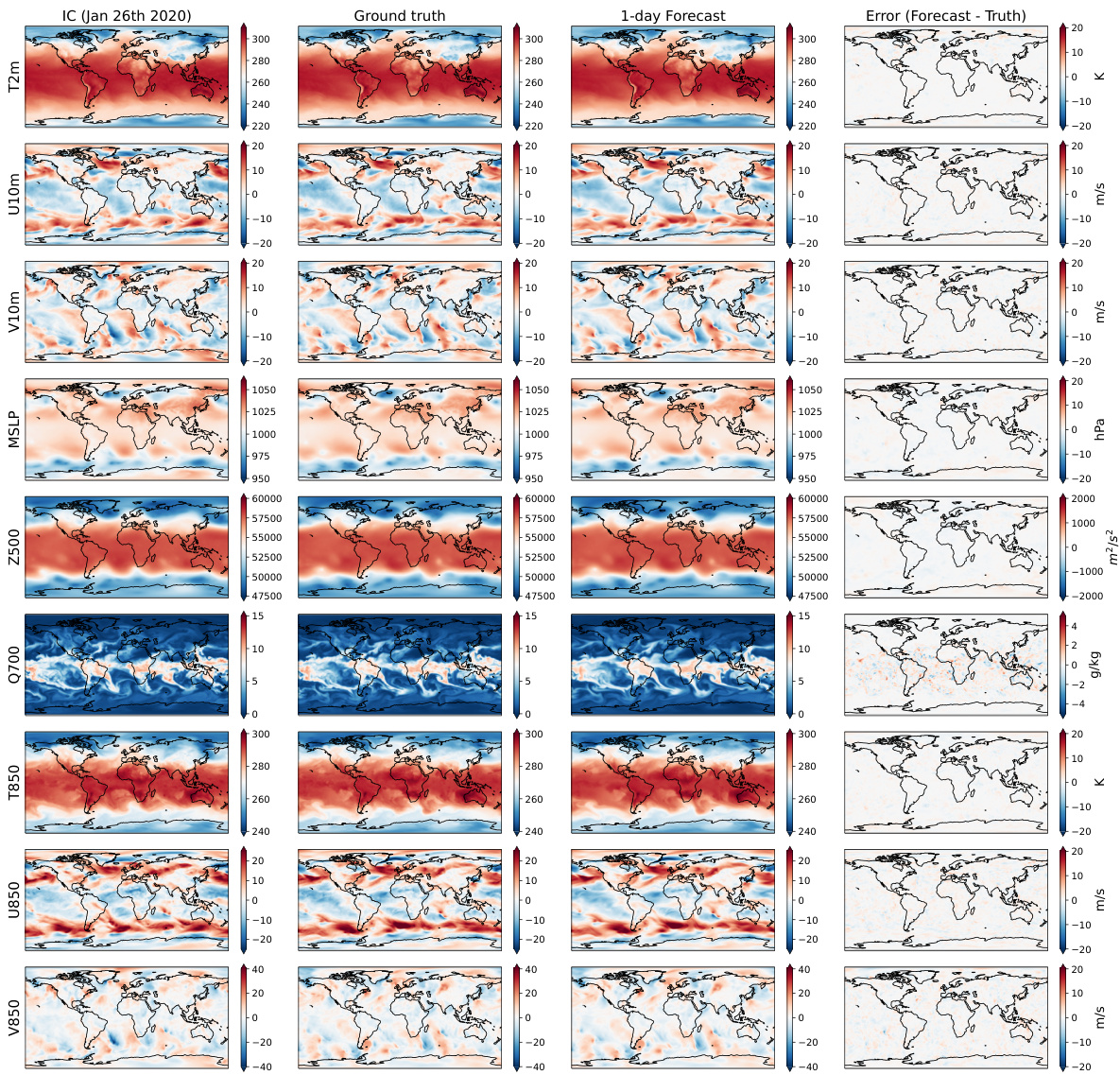

This figure showcases Stormer’s ability to accurately predict a significant weather event five days in advance: an extratropical cyclone that hit Alaska on December 31, 2020, setting a new North Pacific low-pressure record. Its three panels show the initial conditions, the model’s 5-day forecast, and the ground truth, using color fill for near-surface wind speed and contours for mean sea level pressure. The comparison highlights the model’s skill in capturing both the location and the intensity of the cyclone.
