↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Traditional scan-to-CAD methods struggle to create accurate and consistent models from unstructured 3D point cloud data. This is because existing approaches largely ignore the crucial topological information inherent in Boundary Representation (B-Rep), the standard for representing shapes in Computer-Aided Design (CAD). The lack of topological awareness often leads to fragmented or incorrect segmentations of surfaces, making it difficult to build coherent CAD models.

SpelsNet tackles this problem by directly integrating B-Rep topological supervision into a neural network. The core innovation is a novel point-to-BRep adjacency representation that adapts the Linear Algebraic Representation of B-Rep graphs to point cloud data. This allows SpelsNet to learn from both spatial and topological information, resulting in more accurate and consistent surface primitive segmentations. The authors also extend two existing CAD datasets with the necessary annotations, providing valuable resources for future research. The results demonstrate that SpelsNet outperforms state-of-the-art methods in terms of segmentation accuracy and topological consistency.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel approach to 3D shape understanding, bridging the gap between point cloud data and the structured representations used in CAD. Its topological supervision method improves the accuracy and consistency of surface primitive segmentation, which is crucial for various applications like reverse engineering and 3D modeling. The publicly available datasets further advance research in this area.

Visual Insights#

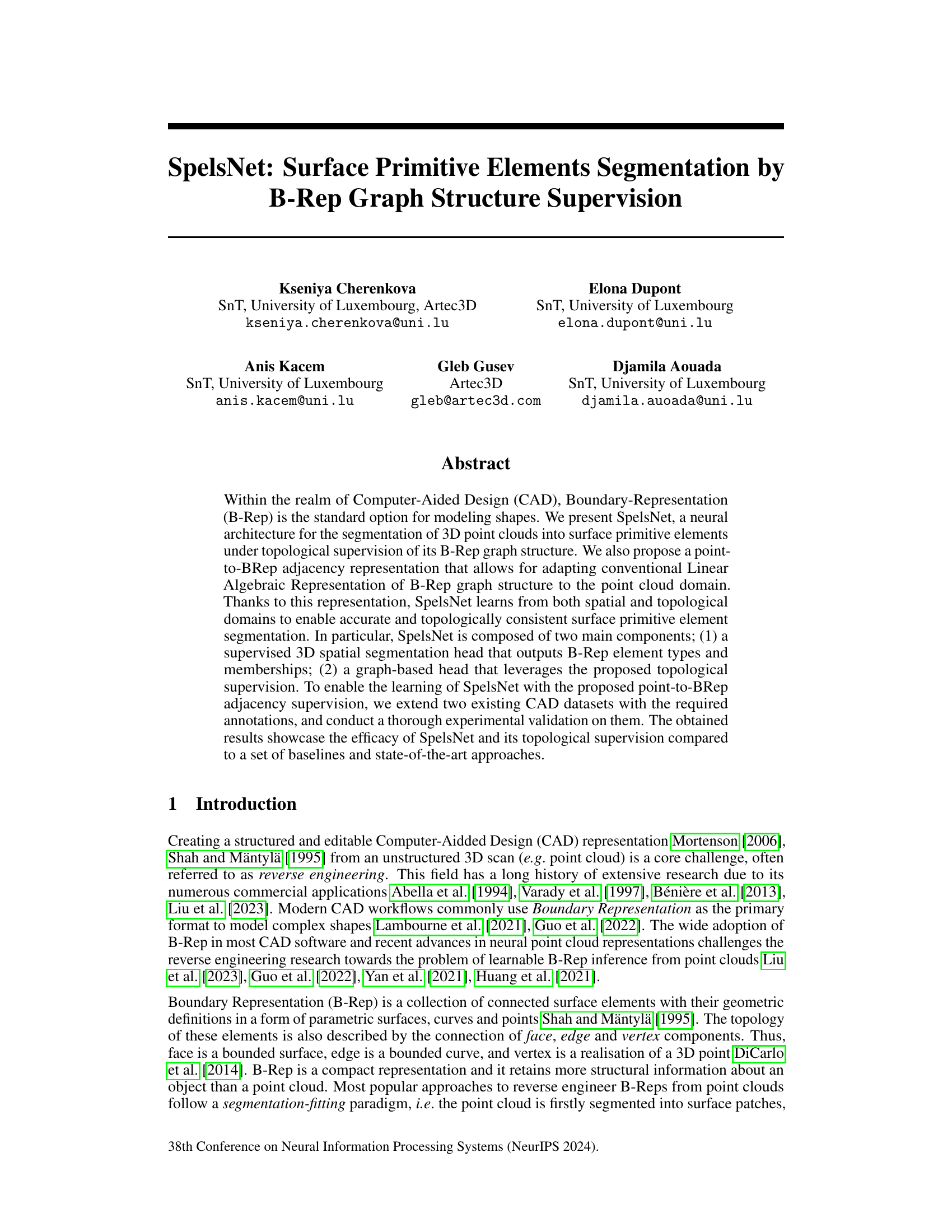

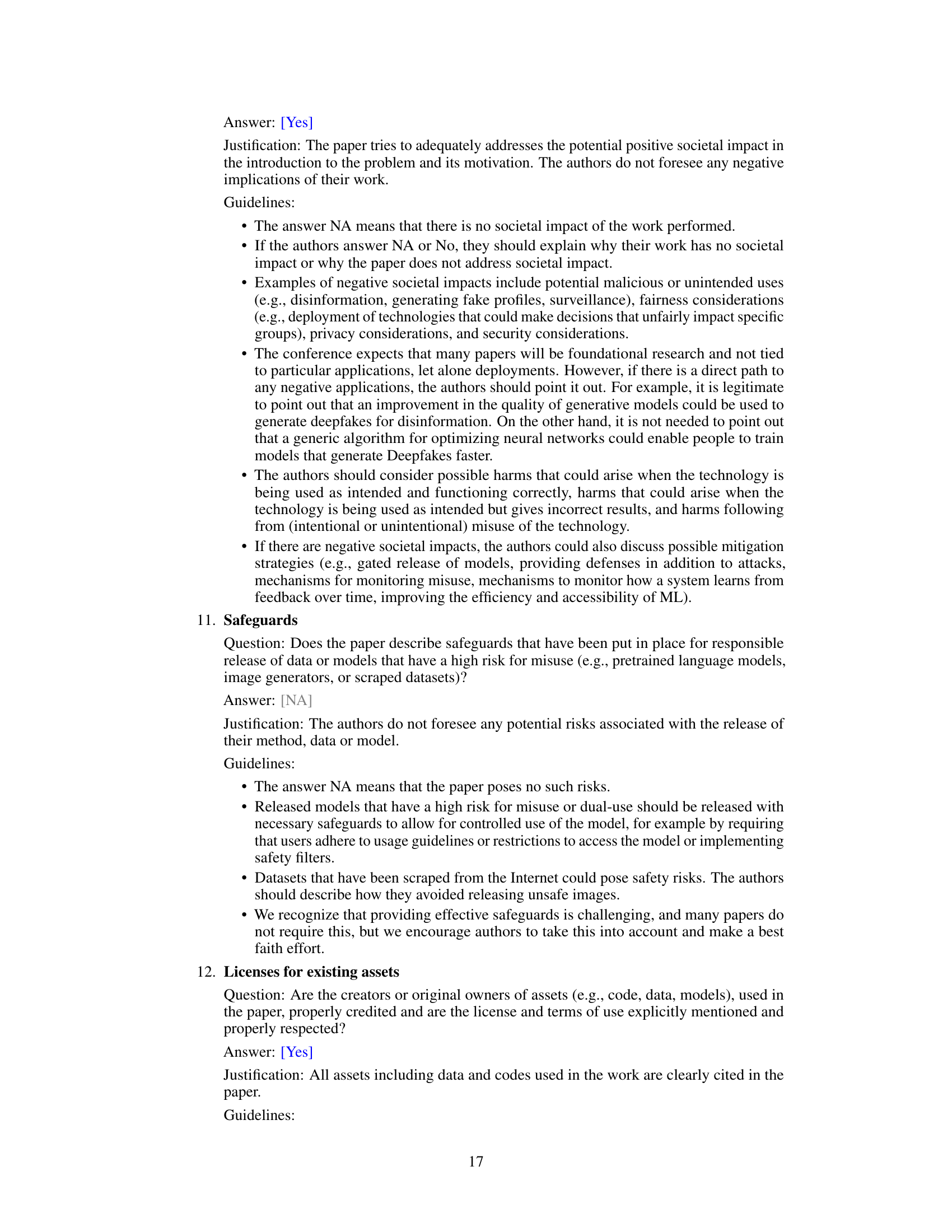

This figure illustrates the core concept of the paper: a novel point-to-BRep adjacency representation. The left panel shows a Boundary Representation (B-Rep) of a pyramid, including its vertices, edges, and faces, and their topological connectivity represented using Linear Algebraic Representation (LAR). The right panel shows how this representation is adapted to a point cloud scenario, enabling the use of B-Rep topological information for direct neural supervision. Key to the adaptation is the mapping of points to their corresponding B-Rep elements, facilitating the use of the LAR representation with point cloud data. Characteristic matrices (M1 and M2) are used to represent the adjacency between elements in both representations.

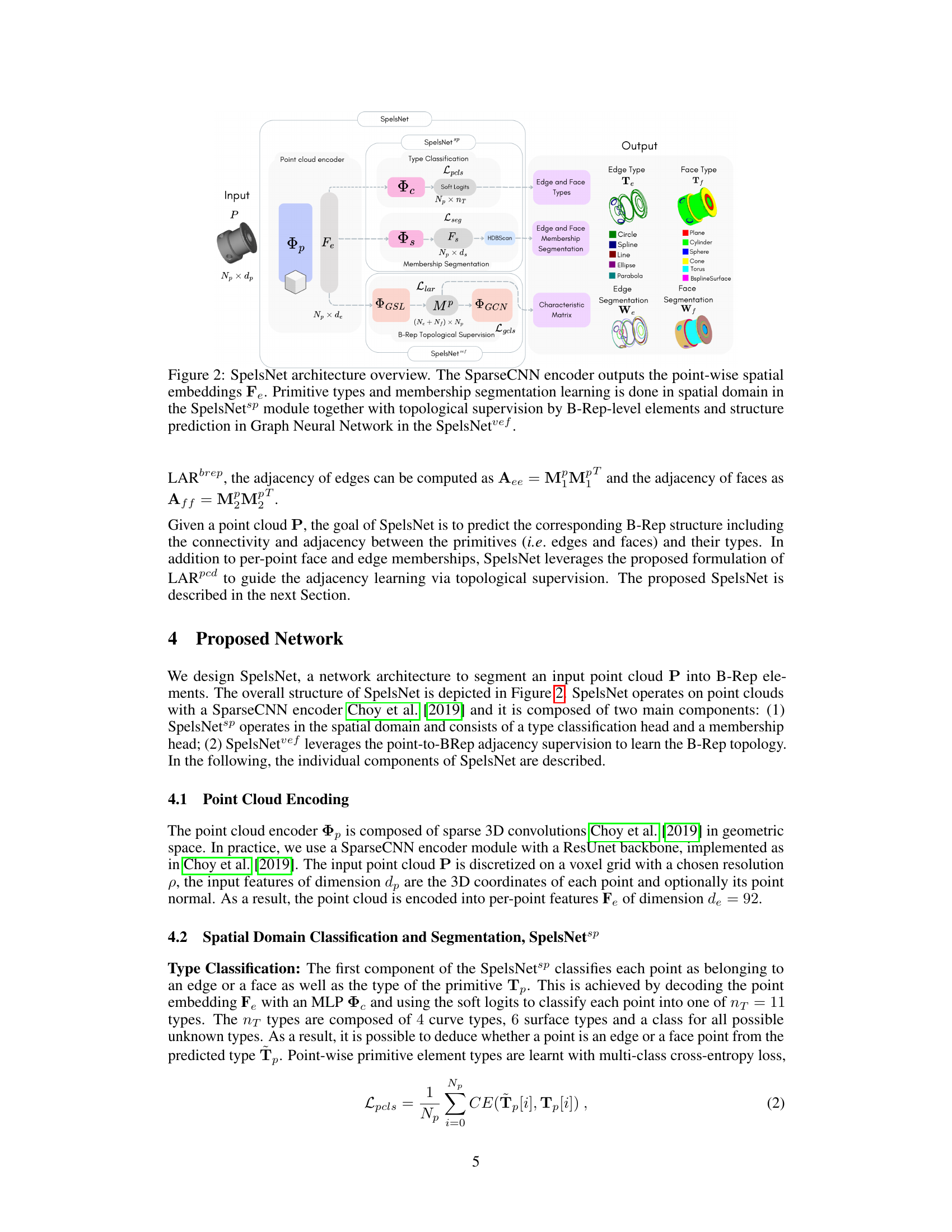

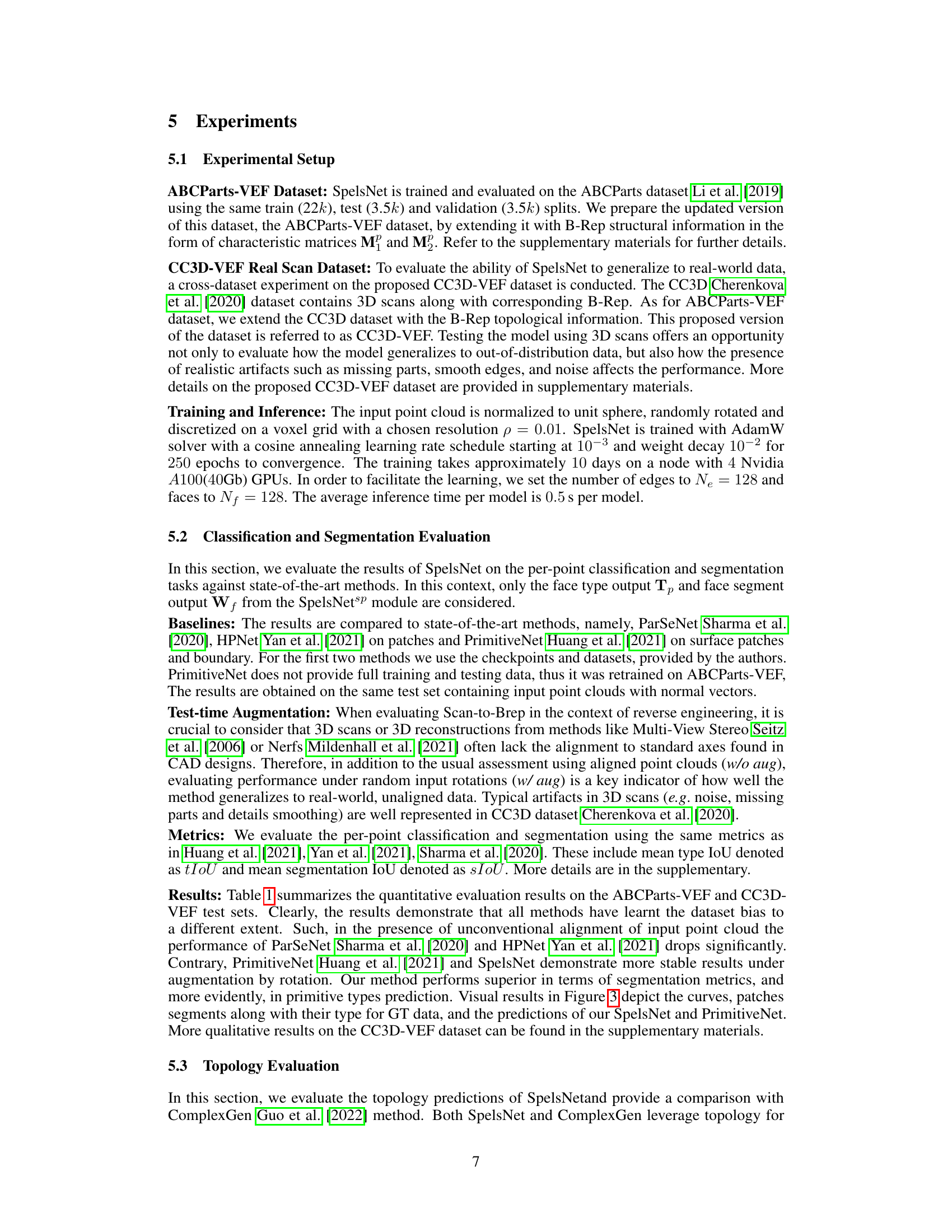

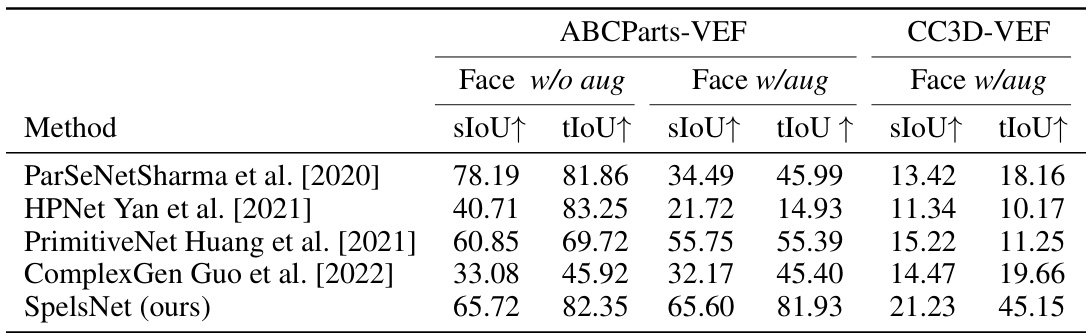

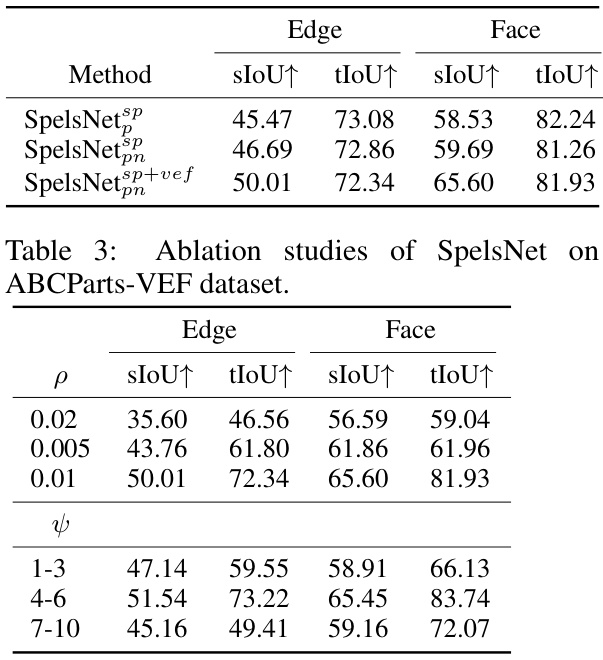

This table presents the quantitative evaluation results of SpelsNet and several baseline methods on two datasets: ABCParts-VEF and CC3D-VEF. The evaluation focuses on face type classification and segmentation, reporting mean Intersection over Union (IoU) scores for both type (’tIoU’) and segmentation (‘sIoU’). Results are shown with and without test-time augmentation (w/o aug and w/ aug respectively) to demonstrate robustness to real-world data variations. Higher scores indicate better performance.

In-depth insights#

B-Rep Supervision#

The concept of ‘B-Rep Supervision’ in the context of 3D point cloud segmentation is a significant advancement. It leverages the topological structure inherent in Boundary Representation (B-Rep) models, moving beyond simple spatial feature learning. Instead of relying solely on point-wise features, this approach incorporates the relationships between faces, edges, and vertices as explicit supervision during the training process. This is crucial because traditional methods often struggle with accurate topology recovery, leading to fragmented or inconsistent segmentation results. The use of a Linear Algebraic Representation (LAR) of the B-Rep chain complex provides a mathematically elegant and computationally efficient means for encoding this topological information. This LAR formulation facilitates the direct integration of topological knowledge into the neural network architecture, enhancing both the accuracy and topological consistency of the output. By supervising the learning process with this richer, topological information, the network can achieve significantly improved segmentation results, particularly in correctly identifying and classifying elements according to their connectivity, overcoming a major limitation of prior art. The effectiveness of this method is demonstrated in the paper through extensive experimental validation on extended CAD datasets. This approach represents a key step towards more robust and reliable 3D shape understanding from point cloud data.

SpelsNet Design#

SpelsNet’s design is a novel architecture for 3D point cloud segmentation into surface primitive elements, supervised by the B-Rep graph structure. Its core innovation lies in a point-to-BRep adjacency representation, adapting the Linear Algebraic Representation (LAR) of B-Rep graphs to the point cloud domain. This allows the network to learn from both spatial (point cloud features) and topological (B-Rep graph structure) information simultaneously. SpelsNet is composed of two main components: a supervised 3D spatial segmentation head that directly predicts B-Rep element types and memberships, and a graph-based head (leveraging a GCN) that refines the segmentation based on the learned topological relations. The design facilitates end-to-end training and directly integrates spatial and topological supervision, which is a key improvement over existing methods that typically treat segmentation and B-Rep inference as separate, sequential steps. The use of LAR as supervision and the unified spatial-topological framework are crucial for achieving accurate and topologically consistent results.

Topology Learning#

Topology learning in the context of 3D shape analysis, particularly within the framework of Boundary Representation (B-Rep), focuses on leveraging the inherent topological structure of shapes for improved segmentation and reconstruction. The core idea is to move beyond purely geometric approaches, which often struggle with noisy or incomplete data, by incorporating explicit knowledge of how elements (vertices, edges, faces) connect and form the overall structure. This requires representing the shape’s topology in a computationally tractable manner, often using linear algebraic representations or graph-based methods. The goal is then to learn a mapping between point cloud data and the underlying topological structure, allowing for more robust and consistent surface segmentation. This is particularly useful in reverse engineering, where the goal is to reconstruct a CAD model from point cloud scans. Challenges lie in designing efficient and effective neural network architectures that can learn both local and global topological features, requiring careful consideration of how to integrate spatial and topological information. Furthermore, the success of topology learning hinges on the availability of datasets with both geometric and topological annotations, a non-trivial challenge for many 3D shape datasets. Ultimately, effective topology learning in this field promises to significantly improve the accuracy and reliability of 3D shape analysis tasks.

Dataset Extension#

Extending existing datasets is crucial for evaluating the performance and generalizability of novel methods in computer vision, especially when dealing with complex tasks like 3D surface primitive segmentation. The paper’s approach to extending two CAD datasets (ABCParts and CC3D) with B-Rep topological information, specifically the Linear Algebraic Representation (LAR) for point-to-BRep adjacency, is commendable. This addition enhances the datasets by providing topological supervision signals. This supervision allows the model to learn not only spatial information (the point cloud itself), but also critical topological relationships between the surface primitives. The inclusion of LAR-based point-to-BRep adjacency is a particularly insightful contribution, as it directly bridges the gap between the point cloud data and the abstract B-Rep structure, enabling direct neural supervision. The extended datasets, termed ABC-VEF and CC3D-VEF, significantly increase the value of the original datasets, making them more suitable for training and testing advanced algorithms focused on accurate and topologically consistent segmentation, representing a substantial contribution to the research community by making these enhanced resources publicly available.

Future Research#

Future research directions stemming from this work on SpelsNet could explore several promising avenues. Improving the robustness of SpelsNet to noisy and incomplete point cloud data is crucial for real-world applications. This might involve incorporating data augmentation techniques or exploring more advanced architectures capable of handling missing data. Extending SpelsNet to handle a wider variety of surface primitives beyond the current set is another key area. This could involve incorporating more complex surface representations or learning to segment surfaces into more granular levels of detail. The current point-to-BRep adjacency representation is quite effective but could be refined and extended further for greater accuracy. Exploring alternative graph neural network architectures for the topological supervision component is worth considering. This might lead to improvements in learning efficiency and accuracy, particularly for large or complex shapes. Finally, applying SpelsNet to other relevant tasks in reverse engineering, such as parameterization, mesh generation, or complete CAD model reconstruction, would greatly enhance the potential of the proposed method. The ability of the framework to scale to handle incredibly large datasets would also need to be assessed.

More visual insights#

More on figures

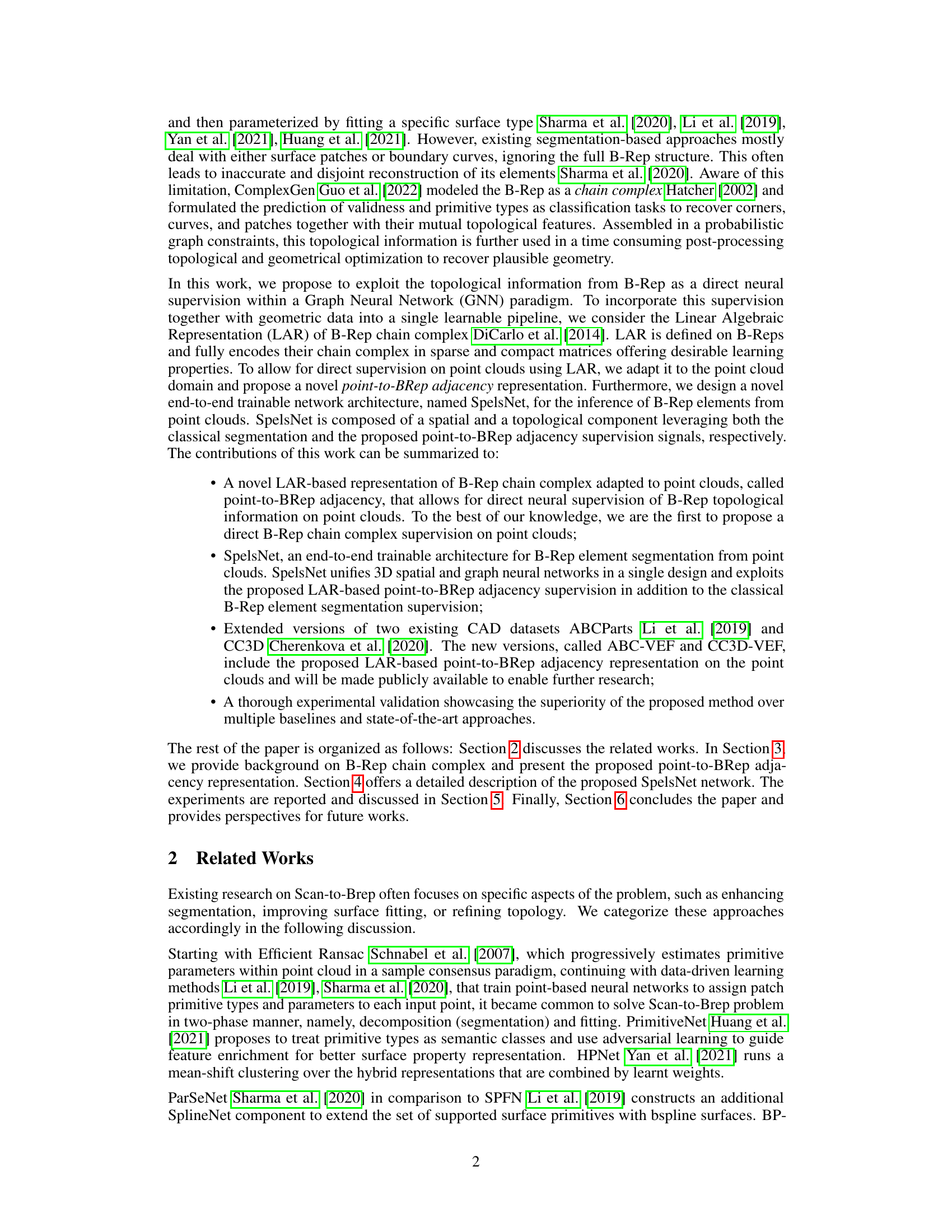

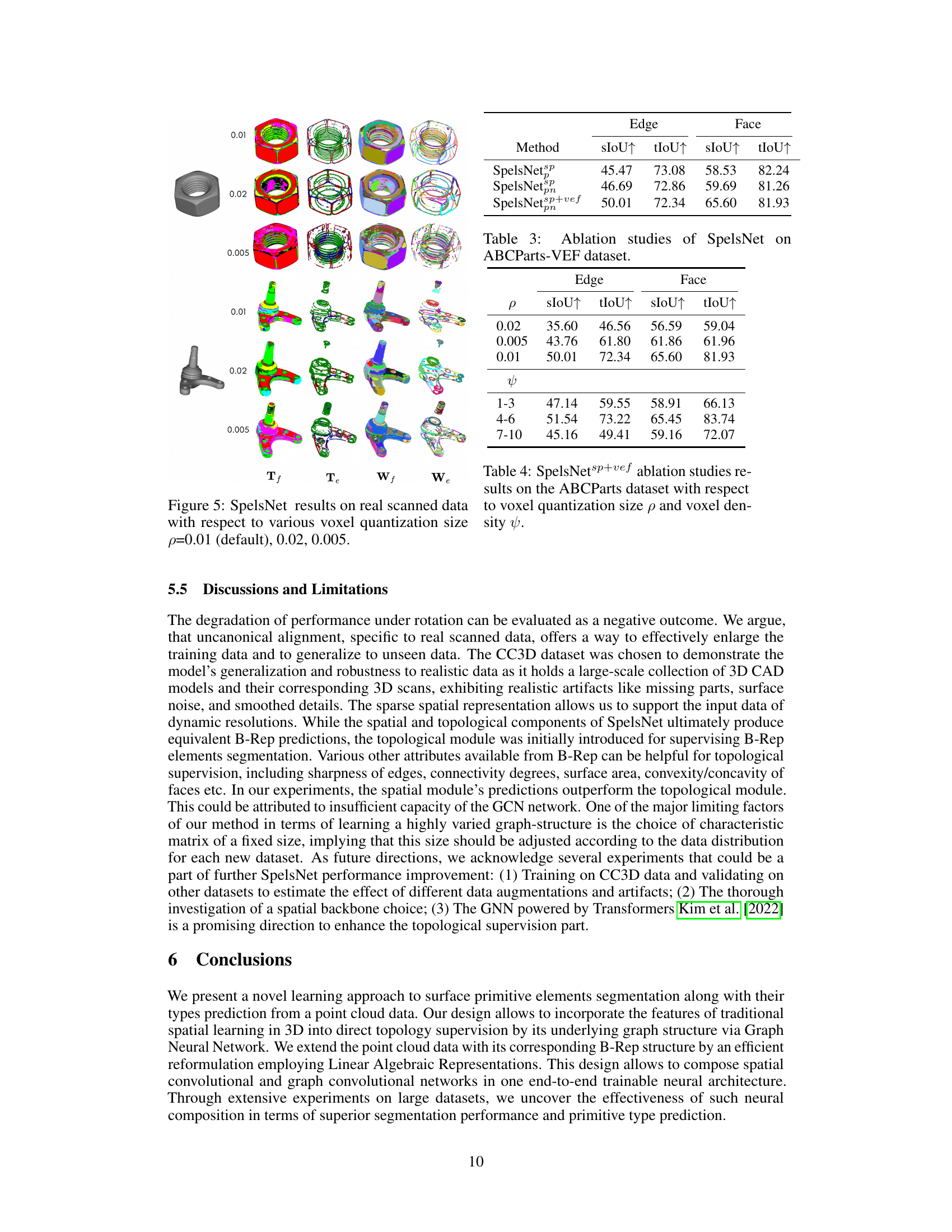

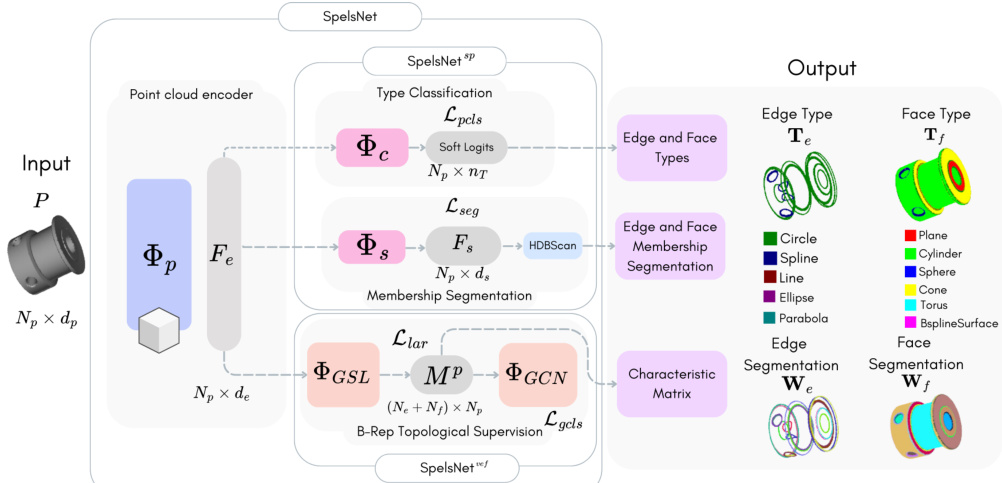

This figure shows the architecture of the SpelsNet model. The model consists of two main components: SpelsNetsp, which handles spatial domain classification and segmentation, and SpelsNetvef, which uses B-Rep topological supervision to improve accuracy. SpelsNet takes a point cloud as input and produces edge and face types (Te and Tf), as well as their corresponding segmentation maps (We and Wf). The spatial component uses a SparseCNN encoder and MLPs to extract features and predict types and memberships. The topological component utilizes a Graph Neural Network and a proposed point-to-BRep adjacency representation to learn the B-Rep structure and integrate topological information into the segmentation process.

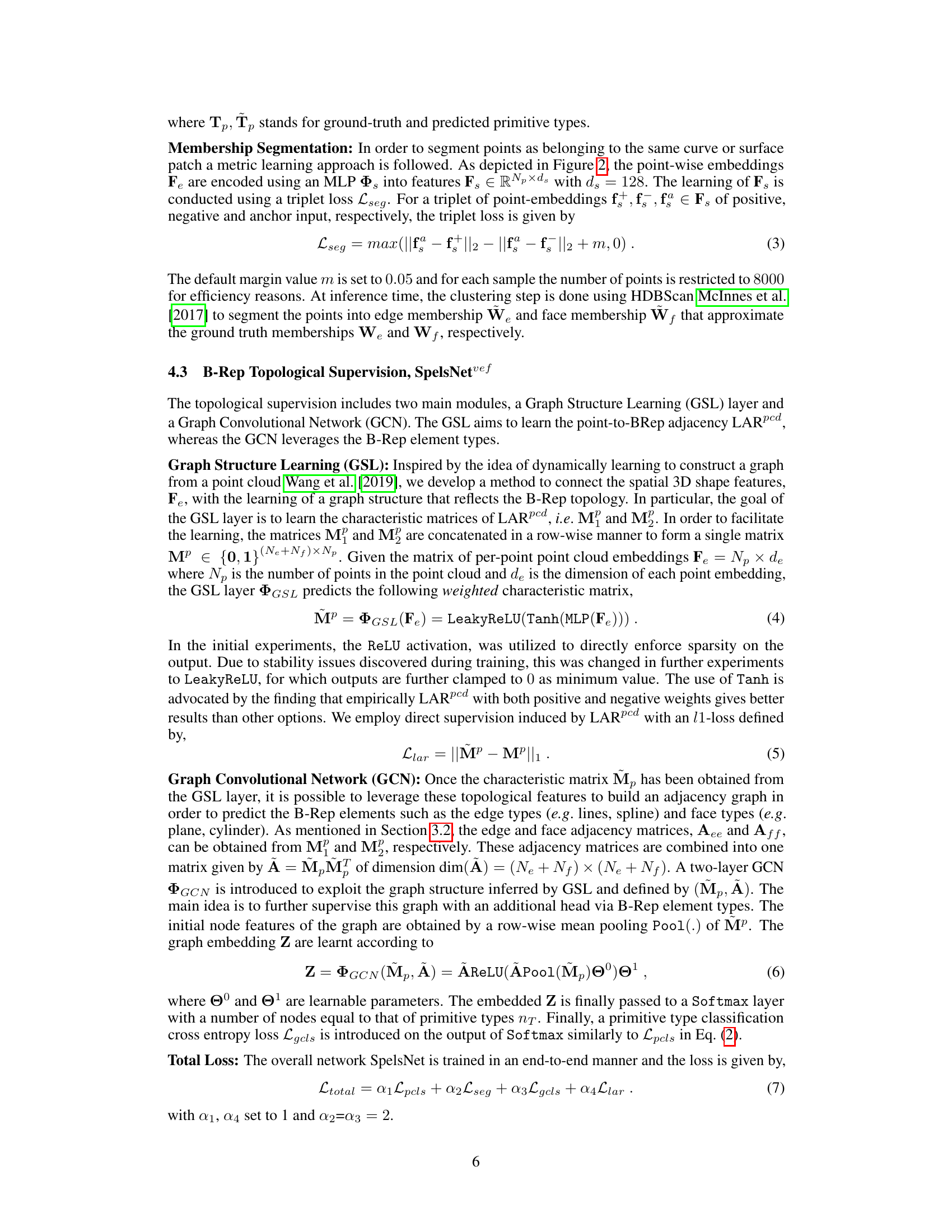

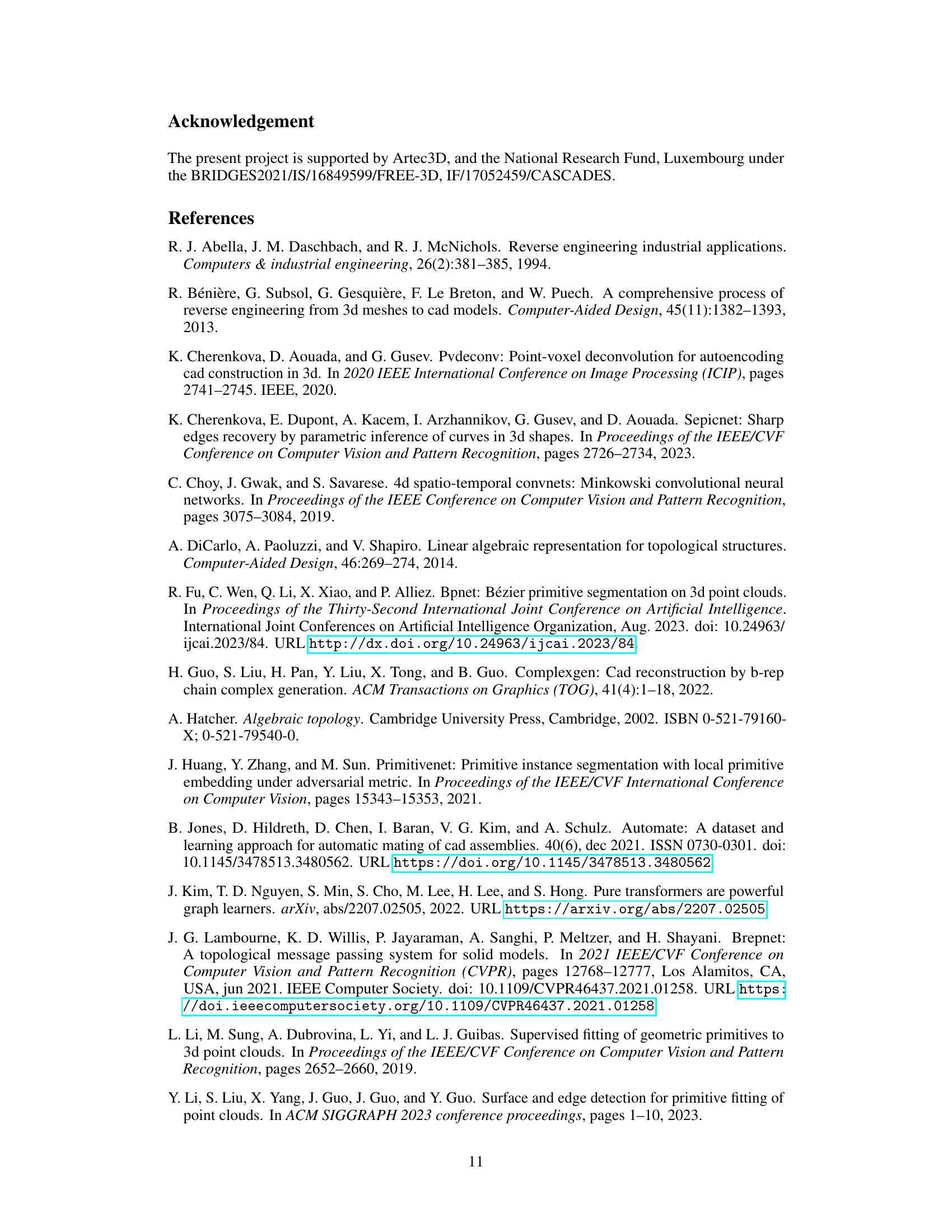

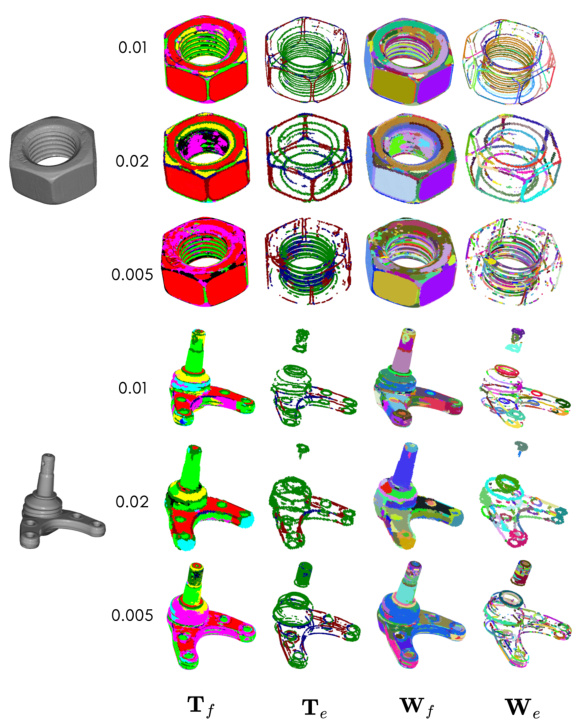

This figure compares the results of three different methods for segmenting point clouds into surface primitives: PrimitiveNet, ComplexGen, and SpelsNet (the authors’ method). Each row shows the same object processed by all three methods. From left to right, we can see the input point cloud, ground truth segmentation (GT) showing the correct surface and edge types, PrimitiveNet’s results, ComplexGen’s results, and finally SpelsNet’s results. Each column shows different aspects of the segmentation: face types (Tf), face segmentations (Wf), edge types (Te), and edge segmentations (We). This allows for a visual comparison of the performance of all three methods in terms of both accuracy of primitive type classification and accuracy of the segmentation itself. The figure highlights SpelsNet’s superior performance.

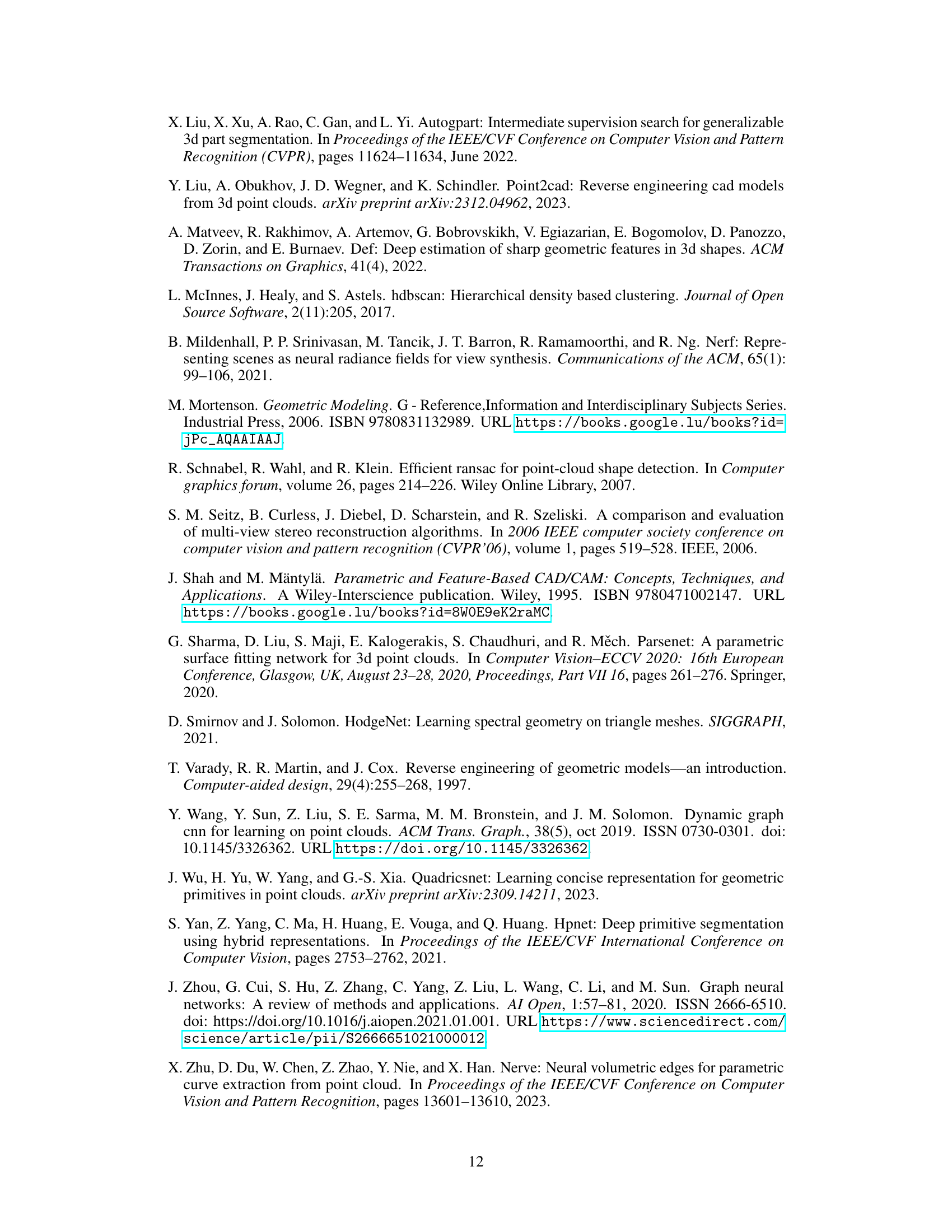

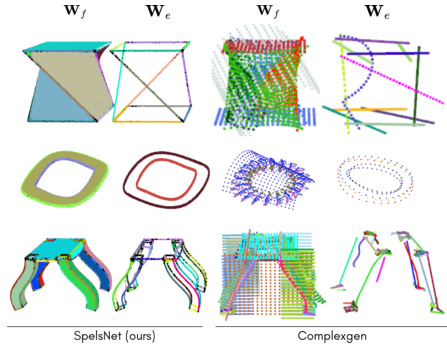

This figure presents a visual comparison of the results obtained by PrimitiveNet and SpelsNet on the ABCParts-VEF dataset. For each model, four columns showcase (from left to right): the input point cloud; the predicted face types and segmentation; the predicted edge types and segmentation; and a combined view of face and edge types and segmentation. The figure highlights the differences in the quality of segmentation and the accuracy of predicted types produced by each method. The superior performance of SpelsNet in both areas is visually evident.

This figure shows the architecture of the SpelsNet model, which is composed of two main components: SpelsNetsp and SpelsNetvef. SpelsNetsp performs spatial domain classification and segmentation, while SpelsNetvef leverages B-Rep topological supervision. The model takes a point cloud as input and processes it through a SparseCNN encoder. This produces spatial embeddings, which are then used for type classification and membership segmentation in the SpelsNetsp module. The results from this module and B-Rep topological supervision are combined in the SpelsNetvef module for final structure prediction using a Graph Neural Network. The output includes the primitive type and the membership segmentation for each point.

This figure shows a comparison of the results obtained by PrimitiveNet and SpelsNet on the ABCParts-VEF dataset. The figure presents visual examples of input point clouds, ground truth (GT), PrimitiveNet predictions, and SpelsNet predictions for both face and edge types and segmentations. It highlights the differences in the quality and accuracy of the predictions for different methods.

This figure provides a detailed overview of the SpelsNet architecture, a neural network designed for segmenting 3D point clouds into boundary representation (B-Rep) elements. It highlights the two main components: SpelsNetsp, which handles spatial domain classification and segmentation, and SpelsNetvef, which incorporates B-Rep topological supervision using a graph neural network. The flow of information from the input point cloud through the SparseCNN encoder, various processing modules, and finally to the output (primitive types and membership segmentation) is clearly illustrated.

Full paper#