↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Current physical adversarial camouflage techniques for vehicle detection often result in unnatural, easily identifiable patterns. This paper introduces Customizable and Natural Camouflage Attack (CNCA), which utilizes a pre-trained diffusion model to generate more realistic and customizable adversarial camouflage. Existing methods struggle to produce visually convincing camouflage while maintaining effectiveness against detectors. The pixel-level optimization process results in attention-grabbing patterns.

CNCA addresses this by leveraging a pre-trained diffusion model and incorporating user-specified text prompts for customization. An adversarial feature is combined with text prompts to guide the diffusion model, while a clipping strategy balances naturalness and attack effectiveness. Experiments demonstrate CNCA produces significantly more natural-looking camouflage than existing methods, achieving competitive attack performance in both digital and physical settings. The ability to generate custom styles, validated through user studies, showcases CNCA’s significant advance in this field.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel approach to generating natural-looking and customizable adversarial camouflage, addressing a key limitation in existing methods. It opens up new avenues for research into physical adversarial attacks, particularly in the context of autonomous driving and surveillance systems, and offers a valuable benchmark for future work on improving the realism and robustness of such attacks. By using diffusion models, this research also contributes to broader advancements in image generation for security applications.

Visual Insights#

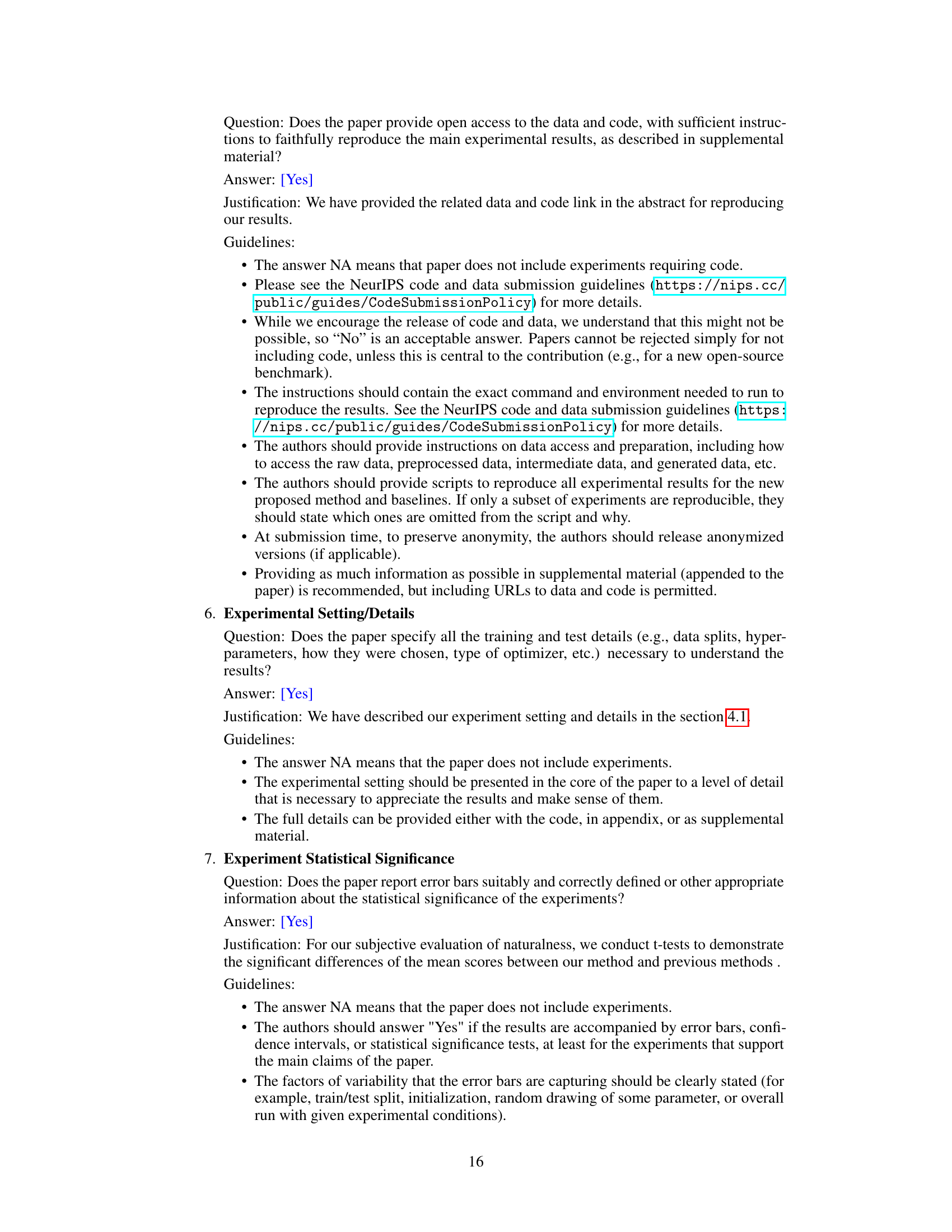

This figure showcases the effectiveness of the proposed CNCA method in generating customizable and natural-looking adversarial camouflage. It presents five images: (a) a normal car, and (b-e) the same car with different camouflage patterns generated using CNCA with corresponding user-provided text prompts. The prompts determine the style of the camouflage, demonstrating the method’s customizability. Each image also shows the detection confidence score of a vehicle detector, illustrating the impact of the camouflage on the detector’s performance.

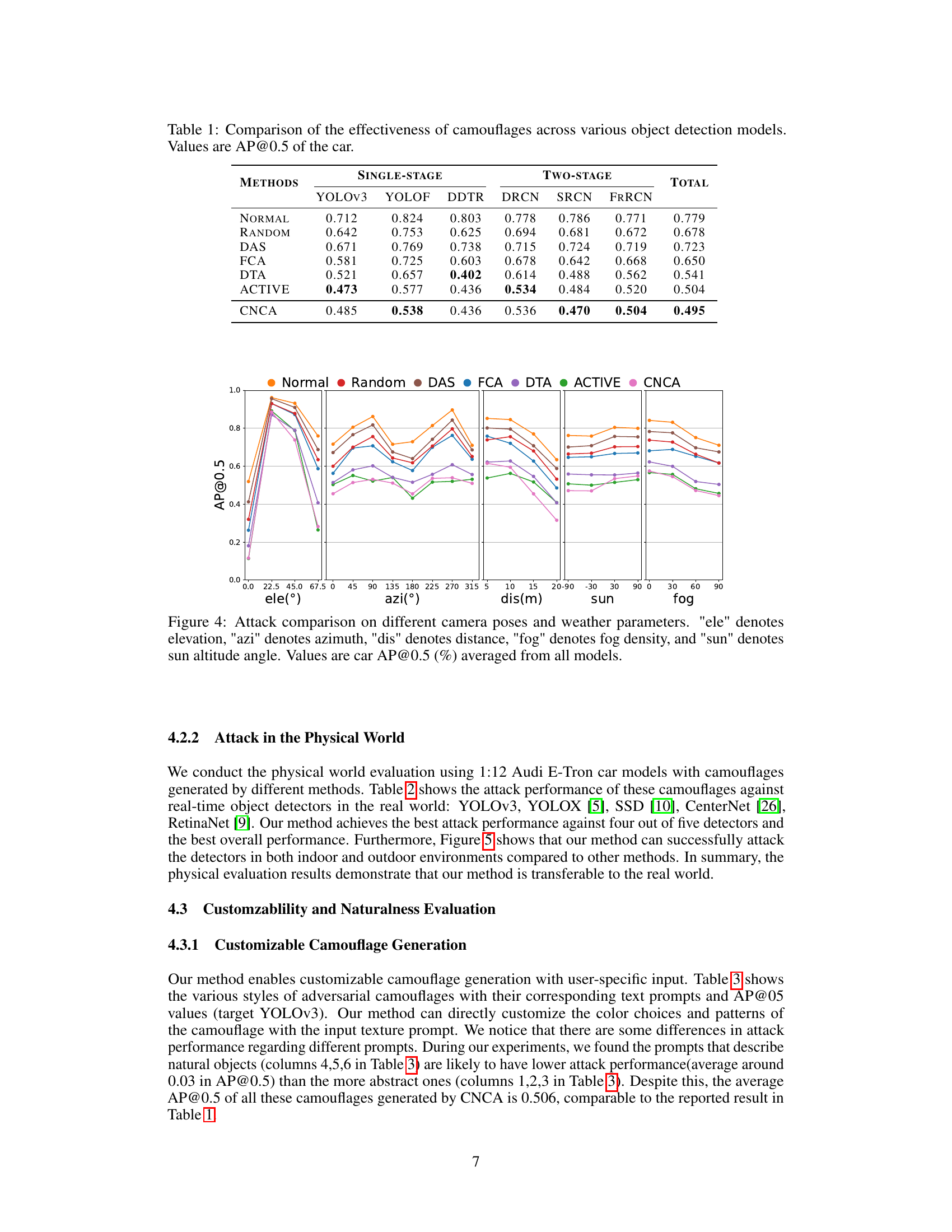

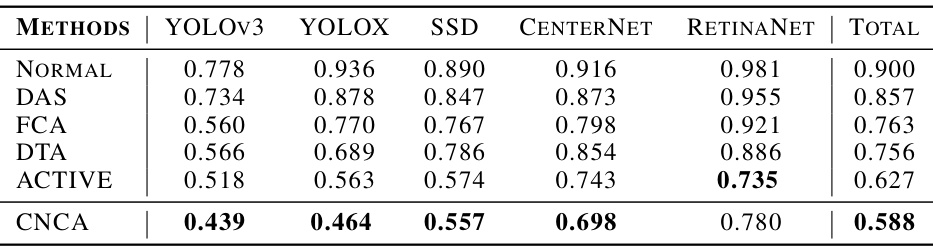

This table presents a comparison of the effectiveness of different adversarial camouflage methods against various object detection models. The effectiveness is measured using the Average Precision (AP) at an Intersection over Union (IoU) threshold of 0.5 (AP@0.5), a common metric in object detection. The table shows the AP@0.5 scores for a car, comparing the performance of different camouflage techniques against six different object detectors: YOLOv3, YOLOF, Deformable DETR (DDTR), Dynamic R-CNN (DRCN), Sparse R-CNN (SRCN), and Faster R-CNN (FRRCN). The ‘NORMAL’ and ‘RANDOM’ rows provide baseline results. The table highlights how well each camouflage method obscures the car from detection by various models.

In-depth insights#

Adv Camouflage Gen#

The heading ‘Adv Camouflage Gen’ suggests a system for generating adversarial camouflage. This likely involves techniques that leverage adversarial machine learning, where the goal is to create visual patterns that deceive a computer vision system (like a vehicle detector) while appearing natural to humans. Deep neural networks are likely used to generate these patterns, possibly employing a generative model (e.g., a GAN or diffusion model) to produce realistic-looking images that are nonetheless imperceptible to the target detection system. The process probably involves optimizing the generated images to maximize the adversarial effect (e.g., causing misclassification or missed detection), possibly through a gradient-based optimization approach. A key challenge would be balancing visual realism with the strength of the adversarial perturbation. Customizability may also be a feature, allowing users to specify aspects of the camouflage (e.g., color palette, texture type) through conditional inputs. Therefore, the ‘Adv Camouflage Gen’ likely focuses on creating robust, visually believable adversarial attacks that are tailored to the specific characteristics of target systems.

Diffusion Model Use#

The research leverages diffusion models for adversarial camouflage generation, marking a significant departure from pixel-level optimization methods. This approach offers two key advantages: enhanced naturalness and customizability. By employing a pre-trained diffusion model, the system generates camouflage textures that appear more realistic and less conspicuous than those produced by previous methods. The user can further customize the camouflage by providing text prompts, allowing for a wider variety of appearances. Integrating adversarial features into the diffusion model’s input allows the model to generate camouflages that are both natural and effective at deceiving vehicle detection systems, highlighting a novel application of diffusion models within an adversarial setting. However, the method necessitates a trade-off between naturalness and attack effectiveness. A clipping strategy is implemented to manage this trade-off, enabling users to control the balance between realism and detection evasion. The study highlights the potential of diffusion models to significantly improve the quality and customizability of physical adversarial attacks.

Physical Attacks#

Physical attacks against AI systems, particularly those involving computer vision, present a unique set of challenges. Unlike digital attacks which manipulate data at a bit level, physical attacks interact with the real world, requiring the adversary to consider factors like lighting, viewing angle, and environmental conditions. Adversarial camouflage, a prominent technique in physical attacks, seeks to deceive AI systems by altering the physical appearance of objects. However, this often leads to conspicuous patterns easily identifiable by humans, hence the work in this paper to achieve more natural-looking camouflage. The effectiveness of physical attacks is closely tied to the robustness of the AI model and the sophistication of the attack. Creating realistic and effective physical attacks requires interdisciplinary expertise, combining knowledge of computer vision, materials science, and even art to create attacks that are both effective and difficult to detect. Successful physical attacks raise significant concerns for AI security in real-world applications like autonomous driving and security surveillance, highlighting the need for more robust and resilient AI systems.

Naturalness Tradeoff#

The concept of “Naturalness Tradeoff” in adversarial camouflage is crucial. It highlights the inherent tension between generating camouflage that effectively deceives object detectors (high attack performance) and creating camouflage that appears visually realistic and blends seamlessly with the surroundings (high naturalness). Simply optimizing for attack performance often leads to unnatural, pixelated, or otherwise conspicuous patterns that are easily detected by humans. This trade-off necessitates a careful balance: overly optimized adversarial textures might be highly effective against algorithms but easily spotted by human observers, undermining the practical application of the technique. Conversely, prioritizing naturalness may reduce the effectiveness of the camouflage. Therefore, successful adversarial camouflage design requires innovative techniques to navigate this trade-off, potentially through the use of generative models to incorporate prior knowledge of natural textures or the incorporation of constraints during the optimization process to favor naturally occurring patterns. The ideal solution would be to find an optimal point on the tradeoff curve, generating camouflage that is both highly effective against detectors and indistinguishable from normal textures to the human eye.

Future Research#

Future research directions stemming from this work on customizable and natural adversarial camouflage could explore several promising avenues. Improving the efficiency of the diffusion model is crucial, as the current approach can be computationally expensive. Investigating alternative generative models or optimization strategies could significantly reduce processing time. Expanding the range of applicable scenarios beyond vehicle detection is another key area. The framework’s adaptability to other object classes and detection systems needs further investigation. Addressing the robustness to various environmental factors such as lighting, weather conditions, and viewing angles should also be prioritized. Real-world deployment requires robust performance under diverse conditions. Developing more sophisticated adversarial features is also important. Current methods might be vulnerable to increasingly robust detection systems. Exploring techniques to dynamically adapt the camouflage to specific detection models in real-time would enhance the attack’s effectiveness. Finally, research into effective countermeasures and defenses is essential to mitigate the potential risks associated with this technology. This includes developing methods for detecting camouflaged objects and enhancing the robustness of detection systems.

More visual insights#

More on figures

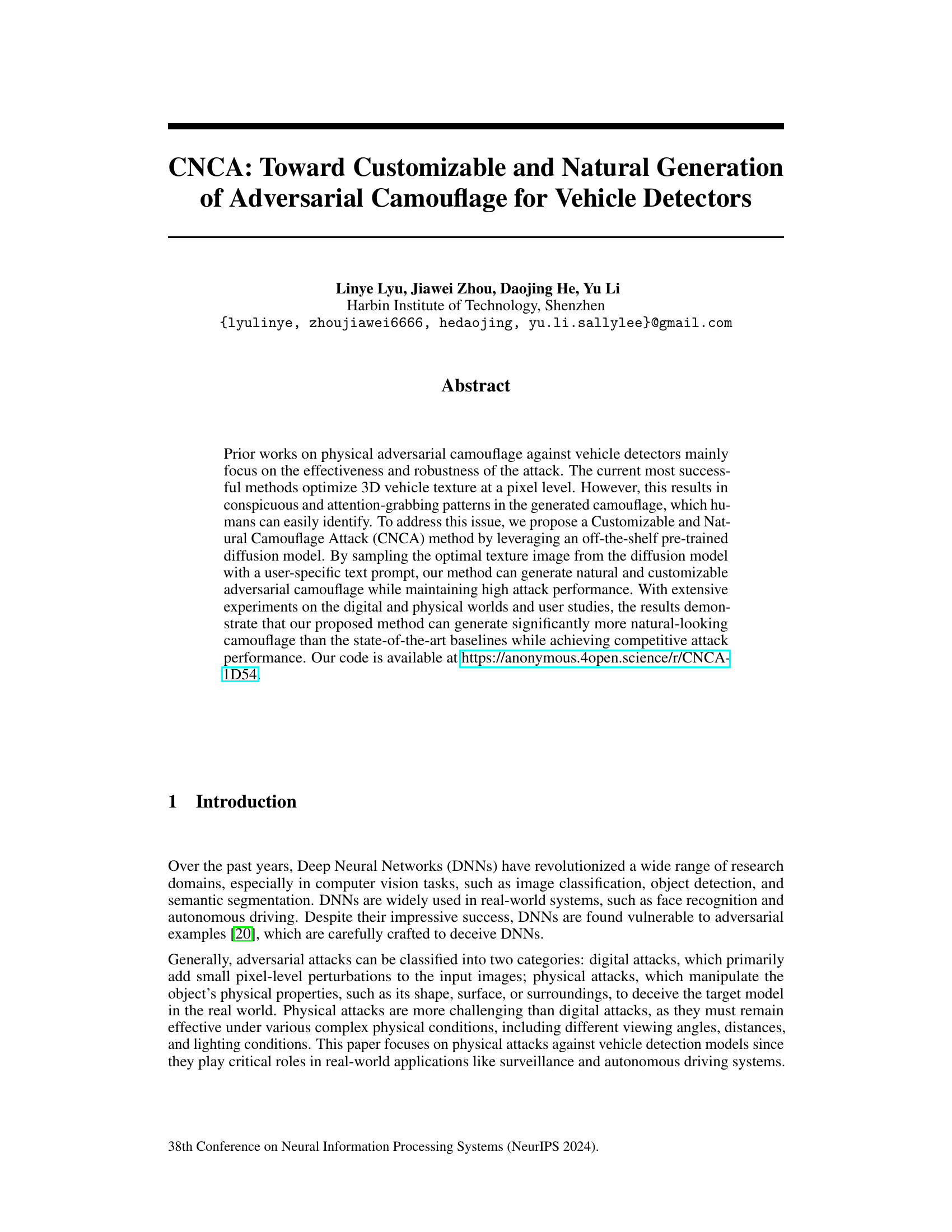

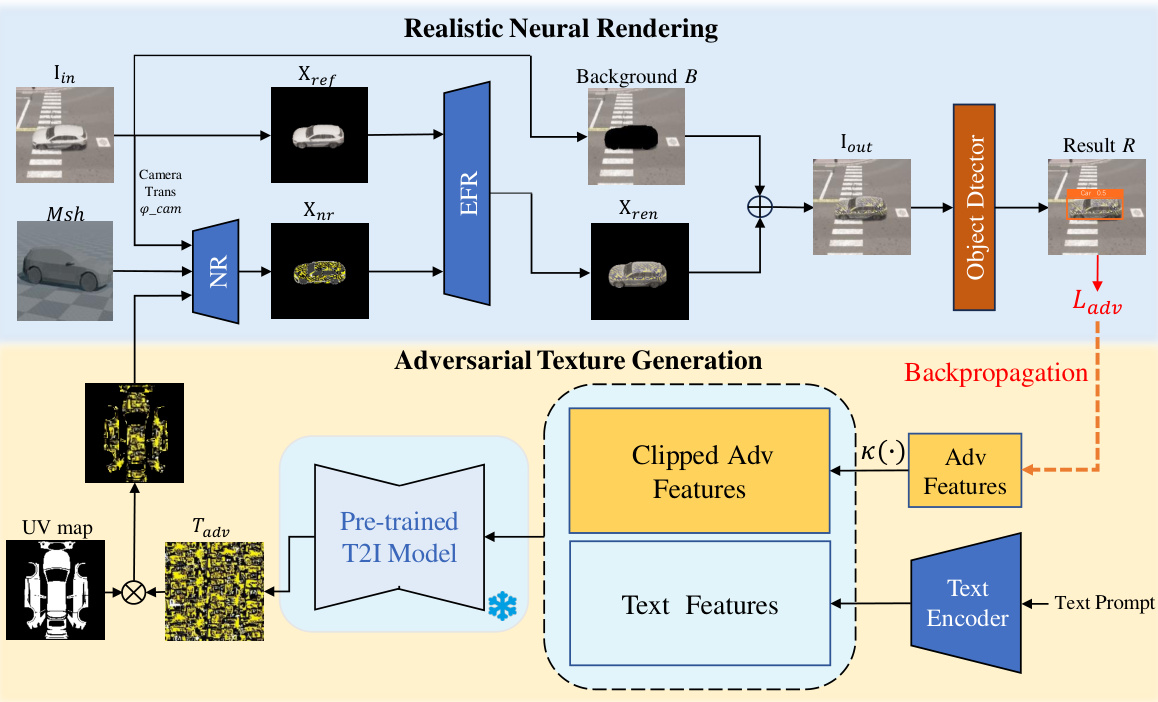

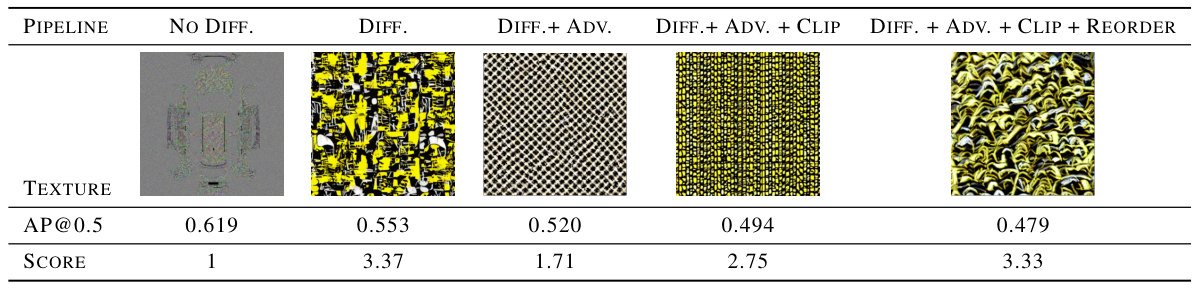

This figure illustrates the CNCA (Customizable and Natural Camouflage Attack) framework, detailing the process of generating customizable and natural adversarial camouflage. It shows how a realistic neural rendering process combines the original vehicle image with an adversarial texture, generated by a diffusion model conditioned on both adversarial and text-based features. The adversarial features are obtained through backpropagation from a target object detector, ensuring the generated camouflage effectively deceives the detector. A clipping strategy is used to balance the trade-off between naturalness and attack performance. The framework integrates various components including a realistic neural renderer (combining environmental features), an adversarial texture generation module (using a pre-trained diffusion model and feature combination/clipping), a backpropagation mechanism for adversarial optimization, and finally an object detector to assess the attack performance.

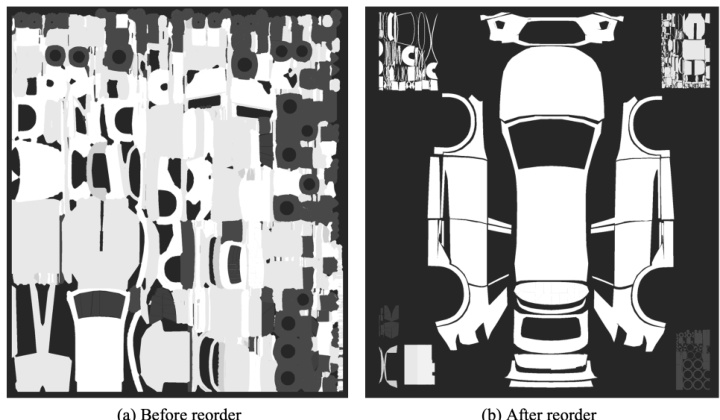

This figure shows the UV map of a vehicle texture before and after reordering. Reordering the UV map improves the naturalness of the generated camouflage by ensuring better connection of the vehicle surface. (a) shows the original UV map before reordering and (b) shows the reordered UV map used to generate more natural looking camouflage.

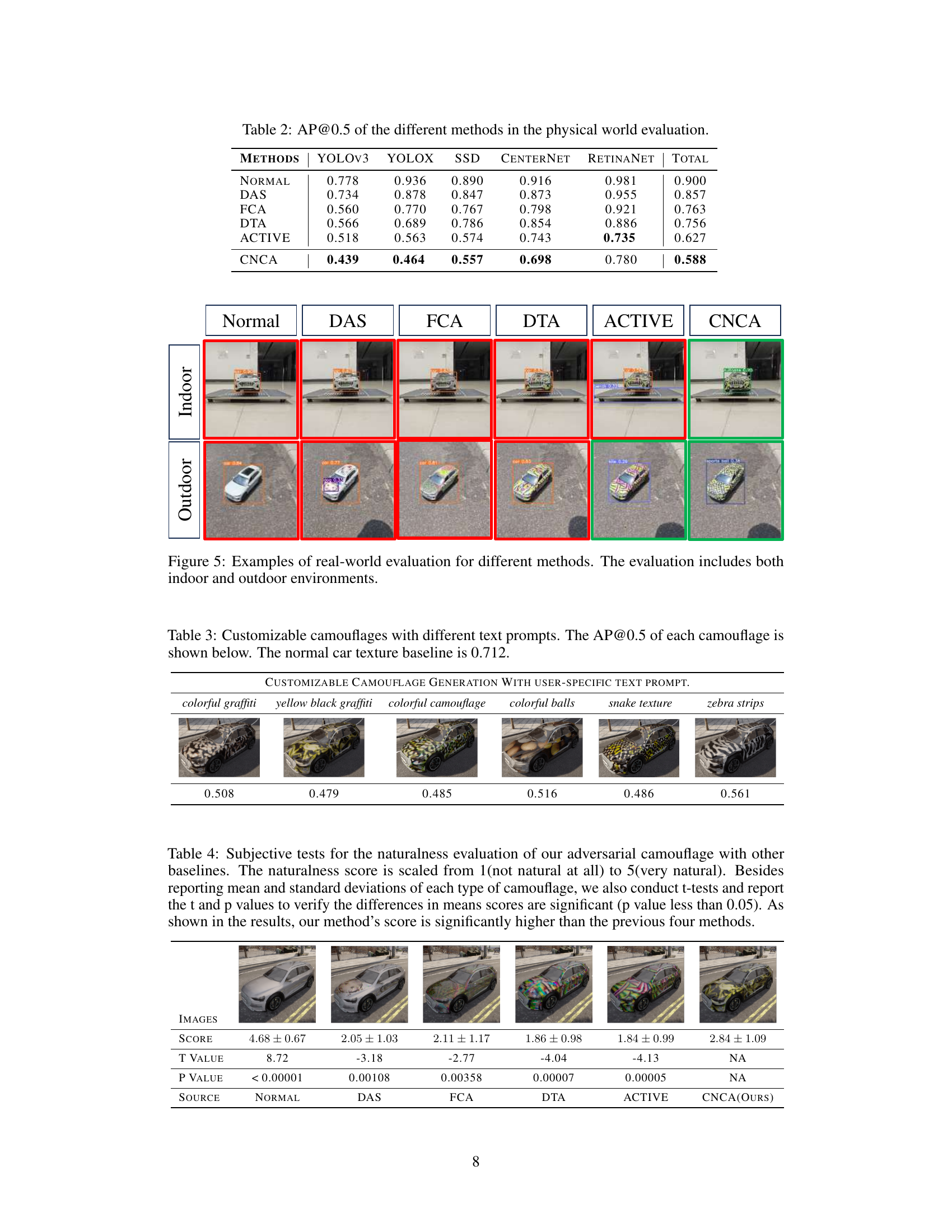

This figure shows a comparison of the attack performance (Average Precision at IoU threshold 0.5, or AP@0.5) of different adversarial camouflage methods under varying conditions. The conditions tested are different camera angles (elevation and azimuth), distances, weather (sun and fog). The graph displays how each method performs across these different viewpoints and weather effects, giving insight into the robustness of the camouflage against changes in environmental conditions and viewing perspectives. It allows one to evaluate the generalizability and effectiveness of the camouflaging techniques.

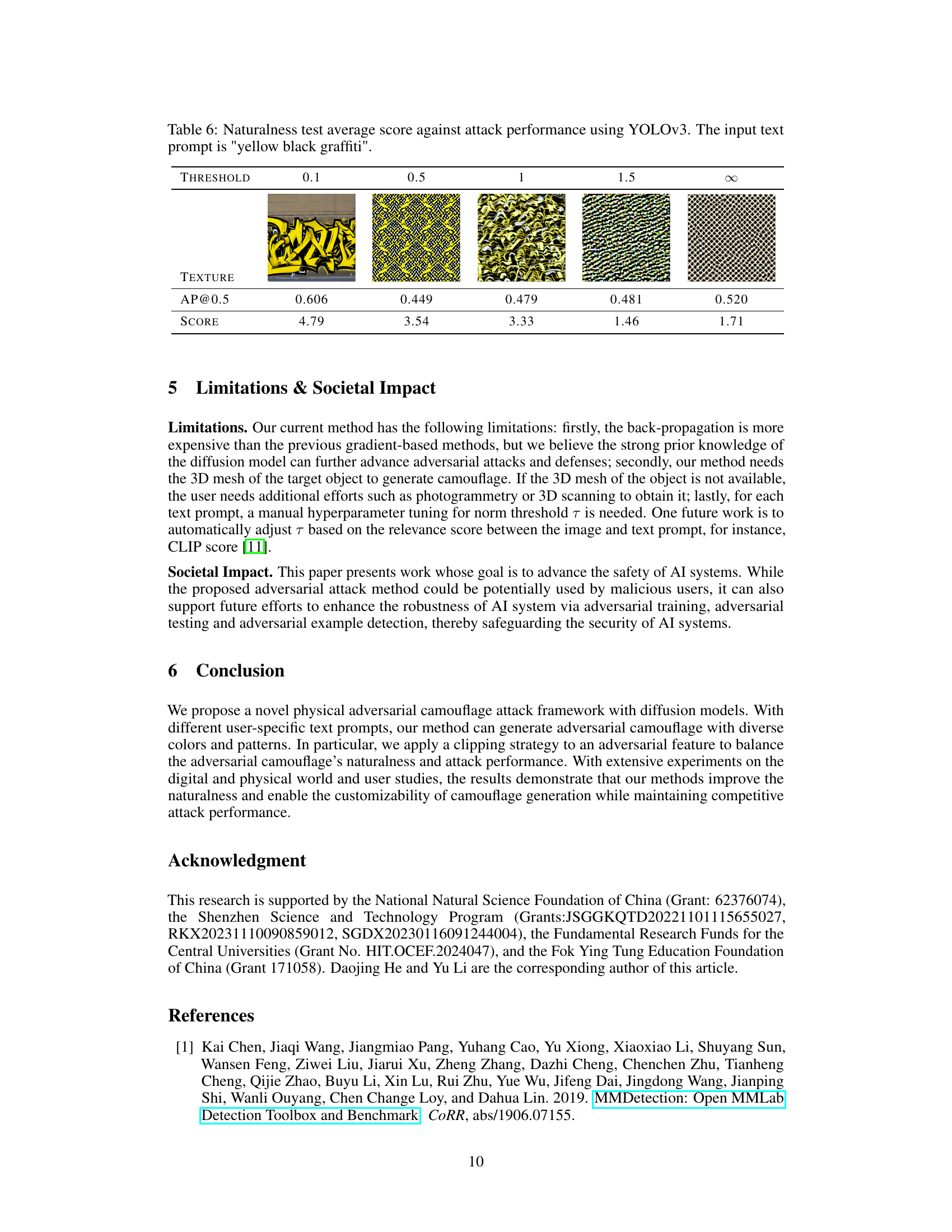

This figure shows the results of real-world evaluations of different adversarial camouflage methods, including DAS, FCA, DTA, ACTIVE, and CNCA. The images compare the detection performance of these methods in both indoor and outdoor settings, highlighting the effectiveness of CNCA in generating natural-looking camouflage that is difficult for object detectors to identify.

This figure showcases the results of the Customizable and Natural Camouflage Attack (CNCA) method. It presents five images: (a) shows a standard car, and (b) through (e) show the same car with different camouflage patterns generated by CNCA using various text prompts as input. The text prompts directly control the visual style of the camouflage, demonstrating the method’s ability to create customized and natural-looking adversarial camouflage.

This figure shows the results of a physical-world evaluation of different adversarial camouflage methods. The top row displays images taken indoors, while the bottom row displays images taken outdoors. Each column represents a different method: Normal (no camouflage), DAS, FCA, DTA, ACTIVE, and CNCA (the proposed method). The green boxes indicate successful detection of vehicles while the red boxes indicate failed detections (camouflage was effective). The image visually demonstrates the effectiveness of each method in different conditions, highlighting the CNCA method’s performance.

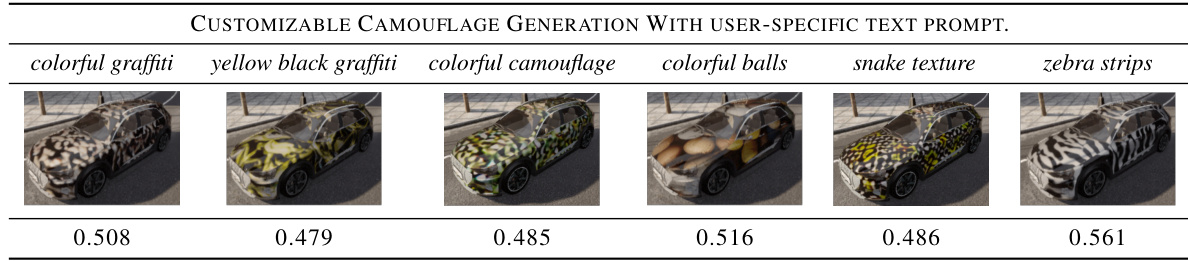

This figure showcases the effectiveness of the proposed CNCA method in generating customizable and natural-looking adversarial camouflage. It displays five images: (a) a normal car, and (b) through (e) the same car with different camouflage patterns generated by CNCA using different user-specified text prompts as input (e.g., colorful graffiti, zebra stripes). The figure demonstrates the ability of CNCA to produce diverse and realistic camouflage styles, unlike previous methods that often result in unnatural patterns.

More on tables

This table presents the Average Precision (AP) at an Intersection over Union (IoU) threshold of 0.5 for different object detection models evaluated in a physical world setting. The models used are YOLOv3, YOLOX, SSD, CenterNet, and RetinaNet. The table compares the performance of the proposed CNCA method against four baseline adversarial camouflage methods (DAS, FCA, DTA, ACTIVE) and a normal (uncamouflaged) vehicle. The ‘TOTAL’ column shows the average AP@0.5 across all five detectors. Lower numbers indicate better performance of the camouflage in evading detection.

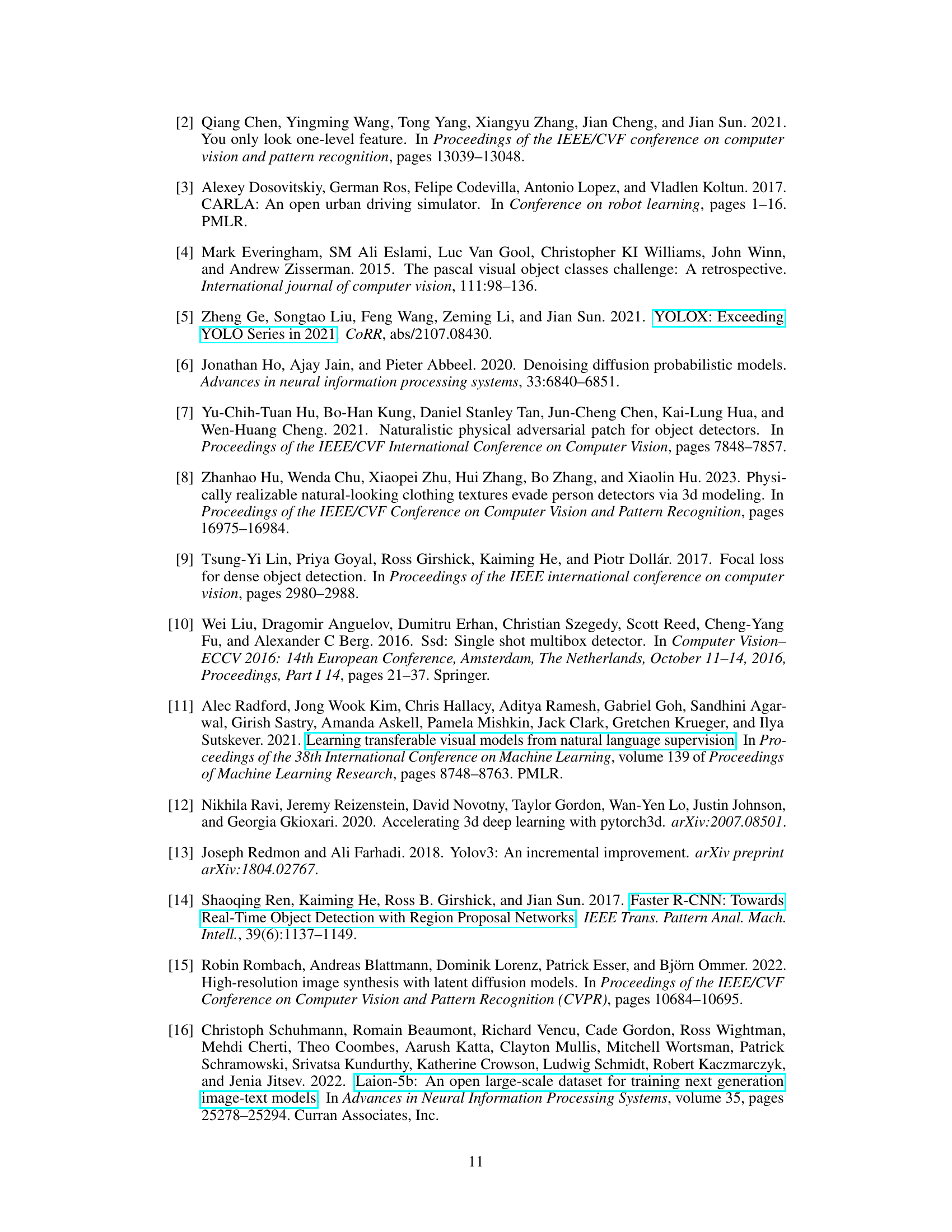

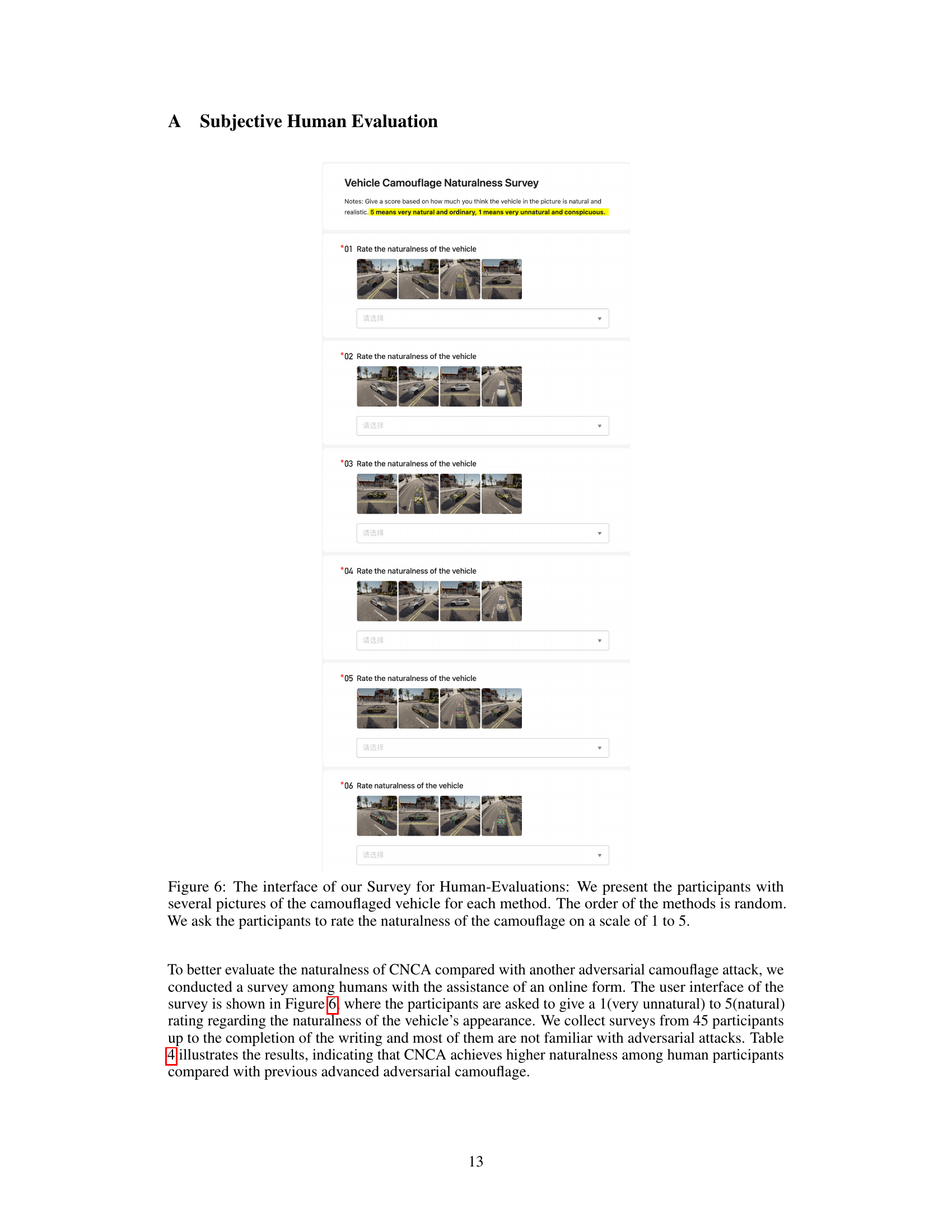

This table presents the results of a subjective human evaluation assessing the naturalness of different adversarial camouflage methods. Human participants rated the naturalness of the camouflage on a scale of 1 to 5, with 5 being the most natural. The table shows the mean and standard deviation of the ratings for each method, along with t-test results comparing CNCA to the baseline methods. The results demonstrate that CNCA generates significantly more natural-looking camouflage than existing methods.

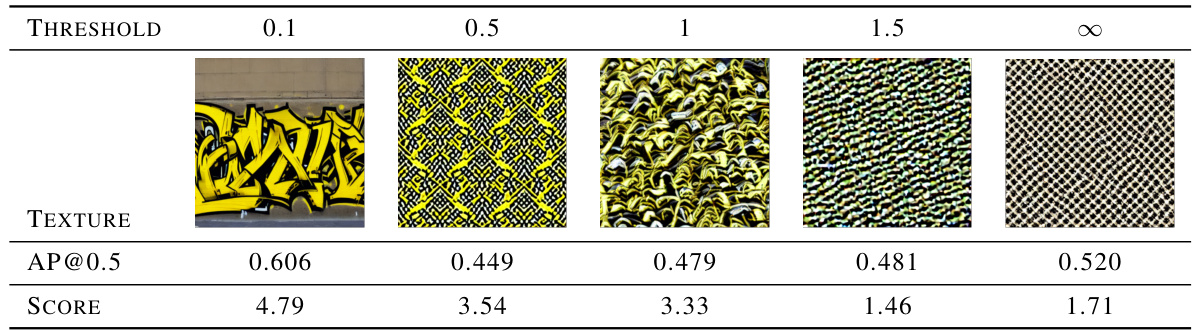

This table presents the results of a subjective evaluation of the naturalness of adversarial camouflage generated with different norm thresholds, along with their corresponding attack performance (AP@0.5) against the YOLOv3 object detector. The input text prompt used for all camouflage generation was ‘yellow black graffiti’. The threshold value controls the strength of the adversarial features, influencing the trade-off between naturalness and attack performance. Lower thresholds generally lead to more natural-looking textures but lower attack success, while higher thresholds result in less natural but more effective camouflage.

Full paper#