↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Private learning algorithms struggle with high computational costs and limited applicability due to stringent privacy requirements. Previous attempts to use public data for improvement were computationally expensive, hindering practical use. The challenge is to develop algorithms guaranteeing differential privacy while maintaining accuracy and efficiency.

This research presents new, computationally efficient algorithms to effectively leverage public data for private learning, overcoming previous limitations. These algorithms are provably efficient, offering significantly improved sample complexities compared to existing methods, especially for convex and binary classification scenarios. This work addresses a crucial gap in private learning by enabling the use of auxiliary public data, paving the way for more practical and scalable private machine learning applications.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in differential privacy and machine learning. It presents the first computationally efficient algorithms that leverage public data for private learning, addressing a major bottleneck in the field. This opens new avenues for research focusing on improving sample complexity and handling various learning settings.

Visual Insights#

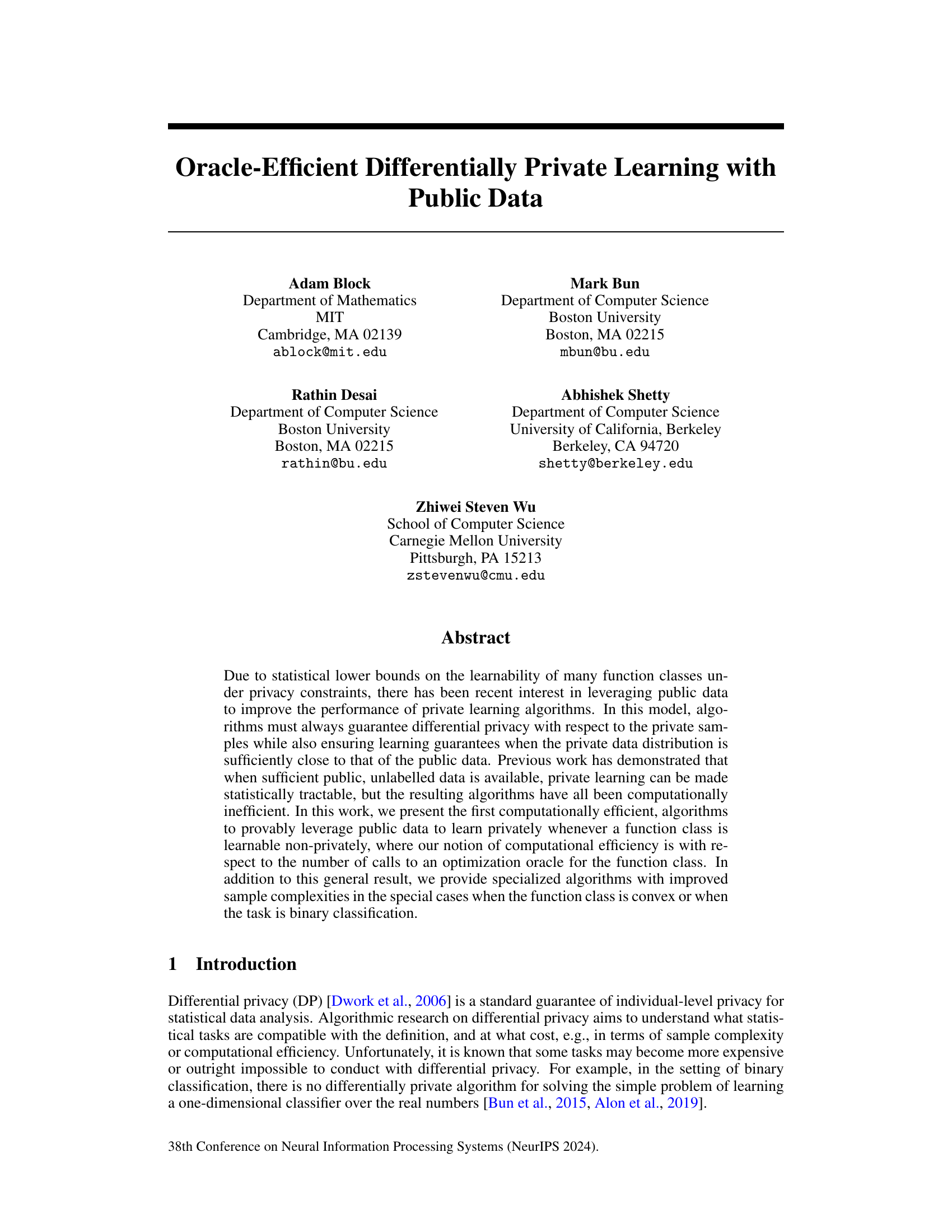

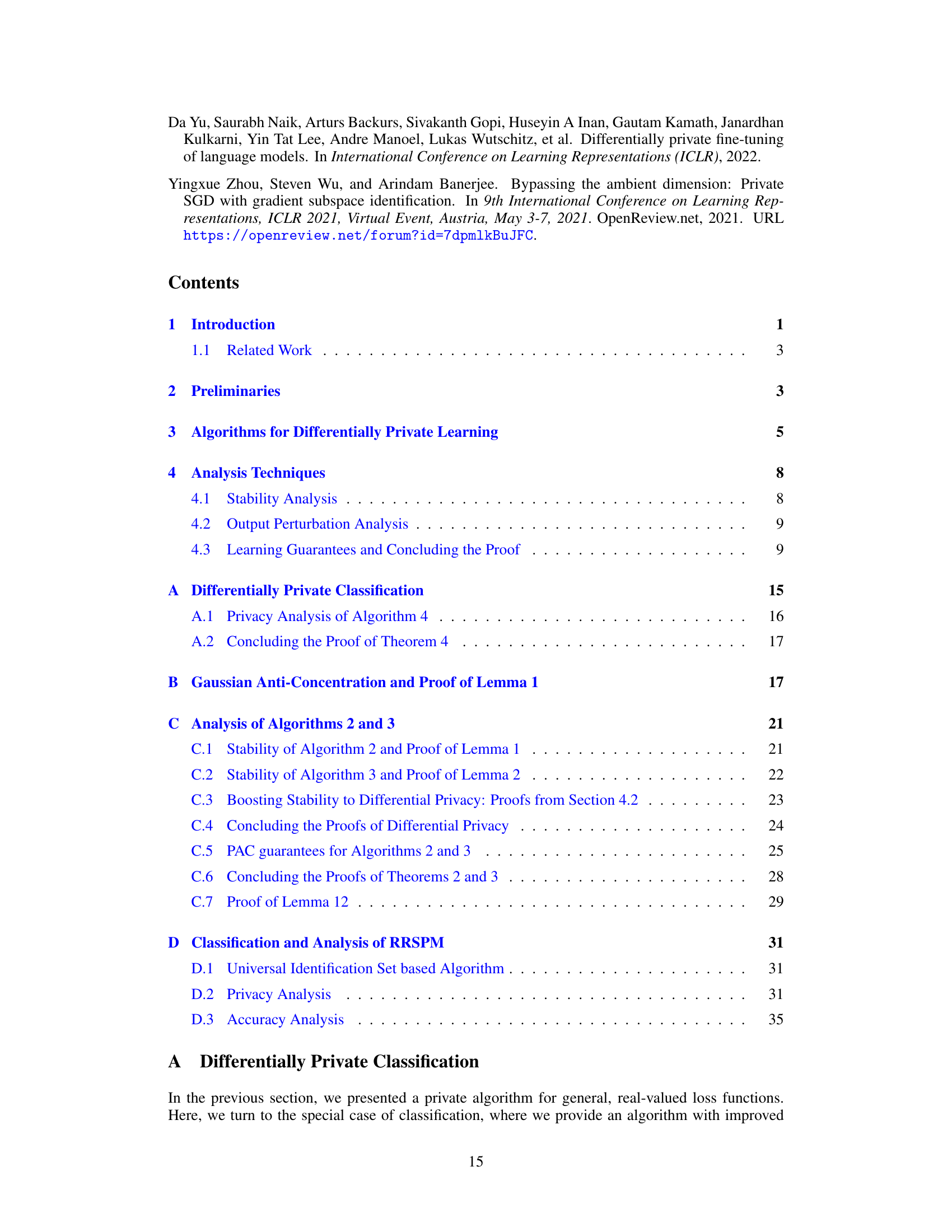

Algorithm 1 describes a method for adding noise to a function using a noise distribution Q and a scaling factor γ. The input is a function f, a noise distribution Q, a scale γ, and public data Dx = {Z1, …, Zm}. The algorithm samples noise from Q and then uses an empirical risk minimization (ERM) oracle to find a function f that minimizes the distance between f and f - γ · ζ, where ζ is the sampled noise vector.

Full paper#