↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many real-world decision-making problems are modeled as Markov Decision Processes (MDPs). Solving MDPs efficiently is challenging, especially when the transition probabilities are unknown or the state space is large. Existing algorithms often have high computational costs, especially for achieving high accuracy. Model-free methods are more memory-efficient than model-based methods, however, prior methods suffer from a sample complexity gap.

This paper introduces faster randomized algorithms for computing near-optimal policies in discounted MDPs. The key innovation lies in a novel variant of stochastic variance-reduced value iteration that carefully truncates the progress of its iterates to improve variance. This new method achieves an improved sample and time complexity, especially when given access to a generative model. This work also closes the sample complexity gap between model-free and model-based methods, representing a substantial advancement in the field.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in reinforcement learning and Markov Decision Processes (MDPs). It significantly advances the efficiency of solving MDPs, bridging the gap between model-free and model-based approaches. The faster algorithms, especially the sample-optimal one for a wide range of error tolerances, are directly applicable to numerous real-world problems. The introduction of recursive variance reduction and truncated value iteration offers novel techniques for improving the efficiency of value iteration methods. These contributions will stimulate further research into more efficient and sample-optimal MDP solving methods.

Visual Insights#

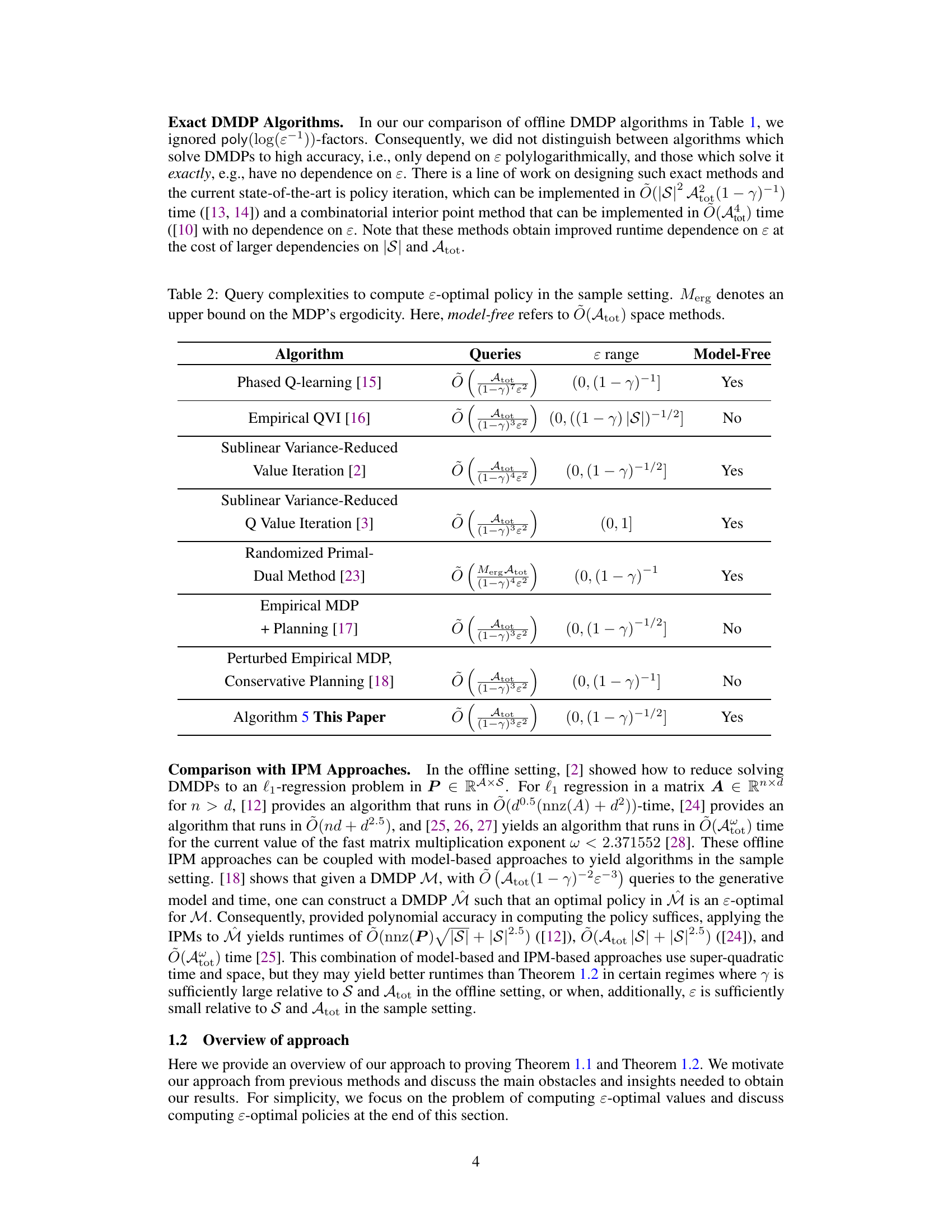

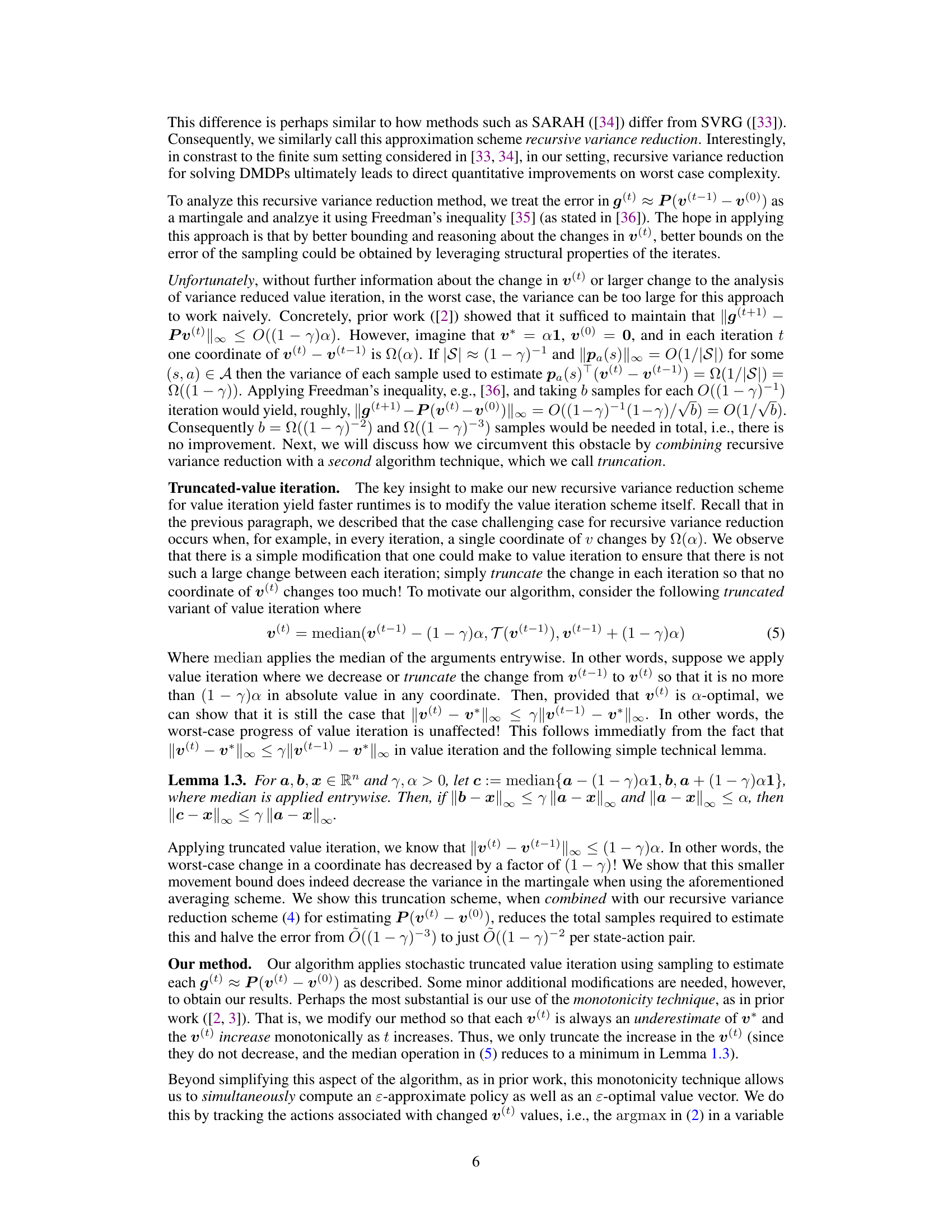

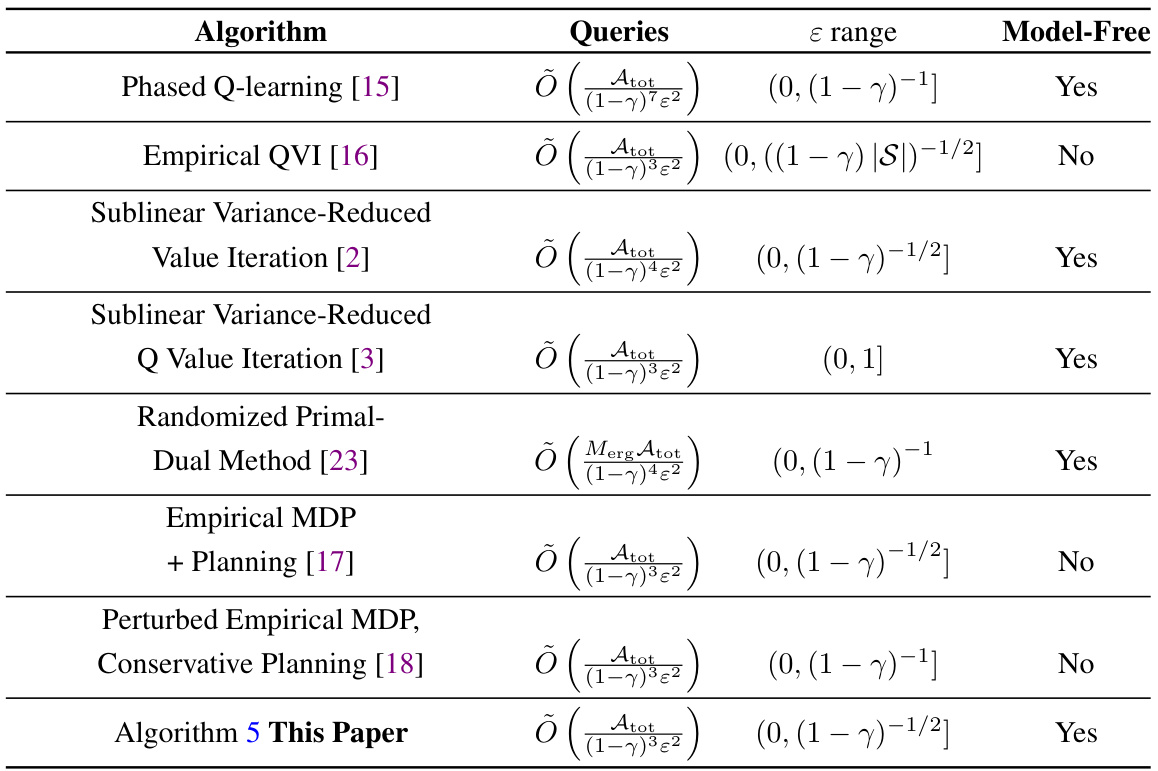

The table compares the running time and space complexity of different algorithms for computing ɛ-optimal policies in the offline setting of discounted Markov Decision Processes (DMDPs). The algorithms compared include Value Iteration, Empirical QVI, Randomized Primal-Dual Method, High Precision Variance-Reduced Value Iteration, and the algorithm presented in this paper. The table highlights the improvements achieved by the new algorithm in terms of runtime complexity, particularly when the discount factor is not too small relative to the average sparsity of rows of the transition matrix. E represents an upper bound on the ergodicity of the MDP, which affects runtime for some algorithms.

This table compares the runtime and space complexity of different algorithms for computing an ɛ-optimal policy in the offline setting of discounted Markov Decision Processes (DMDPs). The algorithms are compared based on their dependence on the number of non-zero entries in the transition matrix (nnz(P)), the total number of state-action pairs (Atot), and the discount factor (γ). The table highlights the improvement achieved by the algorithm proposed in this paper (Algorithm 4).

In-depth insights#

VRVI Enhancements#

The heading ‘VRVI Enhancements’ suggests improvements to Variance-Reduced Value Iteration (VRVI), a core algorithm for solving Markov Decision Processes (MDPs). Key enhancements could involve variance reduction techniques, improving sampling efficiency, and potentially incorporating novel truncation methods to manage the variance of iterates more effectively. Such enhancements could focus on refining existing stochastic value iteration frameworks by carefully truncating iterates’ progress, thus reducing the variance of new sampling procedures. This might involve a recursive variance reduction scheme, cleverly updating the estimates of the utilities. An important aspect would be the trade-off between computational complexity and sample efficiency. The improved algorithm could lead to faster convergence, potentially achieving near-optimal runtime in specific settings while maintaining model-free characteristics and space efficiency. This is a critical point as it addresses the sample complexity gap between model-free and model-based methods. Ultimately, these advancements could contribute to significantly improved algorithms for solving MDPs, particularly in large-scale applications.

Truncated Iteration#

Truncated iteration methods, in the context of reinforcement learning and Markov Decision Processes (MDPs), aim to improve the efficiency and stability of value iteration algorithms. Standard value iteration can be slow to converge, especially in large state spaces. Truncation strategically limits the updates to value estimates in each iteration, preventing drastic changes that could hinder convergence or increase variance. This controlled update process, by limiting the magnitude of value function changes, reduces the variance of stochastic approximations and potentially accelerates convergence. By carefully managing the trade-off between the size of updates and the convergence rate, truncated methods offer an advantageous way to enhance the performance of value and policy iteration algorithms, particularly in stochastic settings where sampling introduces noise. The truncation mechanism helps bound the error accumulation, leading to improved sample complexity and computational efficiency. Recursive variance reduction techniques, often coupled with truncated iteration, further boost performance by reusing previously computed values to reduce the amount of new computation needed in subsequent iterations.

Sample Complexity#

The paper significantly advances our understanding of sample complexity in reinforcement learning, particularly for discounted Markov decision processes (DMDPs). It bridges the gap between model-free and model-based approaches, showing that model-free methods can achieve near-optimal sample complexity for a wider range of accuracy parameters (ε) than previously thought. This is achieved through novel variance reduction and truncation techniques applied to value iteration, resulting in improved runtime and sample efficiency. The analysis carefully considers the interplay between the discount factor (γ), the desired accuracy, and the structure of the MDP. The results highlight the importance of recursive variance reduction and demonstrate how smart sampling strategies can yield considerable improvements over the naive approaches. The authors further demonstrate problem-dependent bounds for sample complexity, showing that in specific scenarios (highly-mixing MDPs, deterministic MDPs) even tighter results are possible. This work offers valuable insights and improved algorithms for practical applications of reinforcement learning where data is scarce or expensive to acquire.

Offline Algorithm#

The heading ‘Offline Algorithm’ suggests a section detailing algorithms that operate using a pre-existing, complete model of the Markov Decision Process (MDP). Unlike online algorithms which learn and adapt as they go, an offline approach leverages all available data upfront. This allows for potentially faster computation since the algorithm doesn’t need to continuously update its understanding of the environment. However, the effectiveness hinges on the accuracy and completeness of the initial MDP model; inaccuracies will directly impact the quality of the solution. The section likely covers specific techniques optimized for this offline setting, possibly including modifications to classical value iteration or other dynamic programming methods. Computational efficiency is likely a key focus, aiming to minimize runtime complexity, perhaps by exploiting the structure of the provided MDP data, such as sparsity in the transition matrix. The discussion likely contrasts offline algorithms with their online counterparts, highlighting the trade-offs between computational speed, data requirements, and the potential for adaptation to changing environments.

Future Directions#

The paper’s core contribution lies in improving the efficiency of solving discounted Markov Decision Processes (DMDPs), particularly in sample-efficient settings. Future research directions could focus on refining the constants within the algorithms to further reduce the sample complexity, potentially achieving near-optimal runtime for a broader range of epsilon values. Investigating alternative truncation techniques beyond the median method could unlock additional performance improvements. Bridging the gap between model-free and model-based methods more completely represents a significant avenue for future work, potentially by designing hybrid approaches that leverage the strengths of both. Additionally, exploring the theoretical limits of sample-efficient DMDP solving and establishing tighter lower bounds would strengthen the field’s understanding of fundamental limitations. Finally, applying these advancements to real-world problems within reinforcement learning presents a compelling opportunity for practical impact, including but not limited to optimization in large-scale systems and robotics.

More visual insights#

More on tables

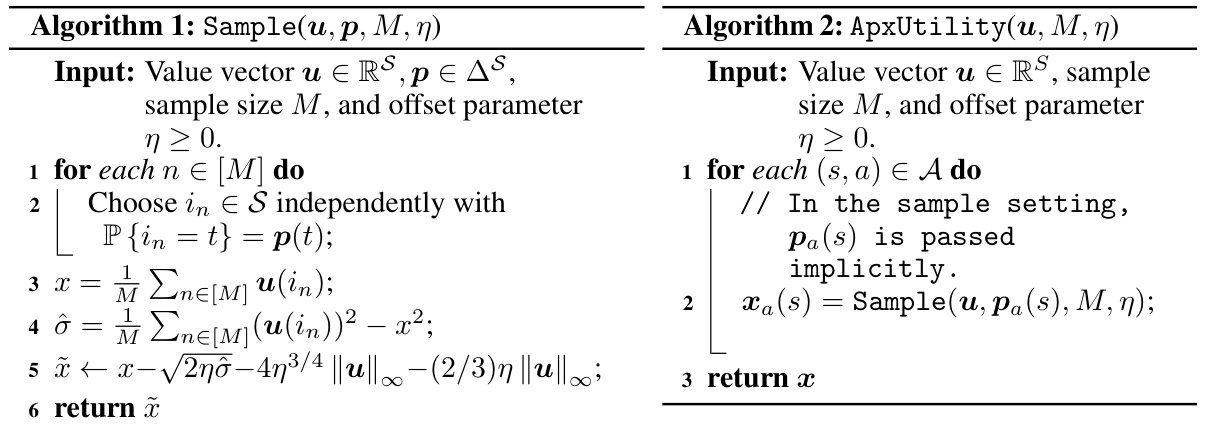

This table compares the query complexities of different algorithms for solving discounted Markov Decision Processes (DMDPs) in a sample setting where the transition probabilities are unknown but accessible through a generative model. The algorithms are categorized by their ɛ range (accuracy) and whether they are model-free (using Õ(Atot) space). The table highlights the improved query complexity achieved by the authors’ algorithm (Algorithm 5).

This table compares the runtime and space complexity of different algorithms for computing ε-optimal policies in the offline setting of discounted Markov Decision Processes (DMDPs). The algorithms include classic Value Iteration, several randomized methods like Empirical QVI and Randomized Primal-Dual, and the High Precision Variance-Reduced Value Iteration. The table highlights the improvement achieved by the proposed algorithm (Algorithm 4 in the paper) in terms of runtime complexity, achieving Õ(nnz(P) + Atot(1 − γ)−2) compared to previous state-of-the-art methods, especially when considering the sparsity of the transition matrix (nnz(P)). E represents an upper bound on the ergodicity of the MDP, and its inclusion in some runtime complexities signifies the algorithm’s performance dependence on the MDP’s mixing properties.

Full paper#