↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Current benchmarks for garment manipulation lack diversity and realism, hindering progress in developing robust AI systems. This limitation stems from the complexity of garment dynamics and the challenges of transferring learned skills from simulation to the real world. The sim-to-real gap poses a significant challenge for researchers.

GarmentLab directly addresses these issues by providing a unified, content-rich simulation environment and benchmark. It features multiple simulation methods (FEM and PBD), a large-scale dataset of diverse garment models, robotic systems, and other objects. This allows researchers to evaluate state-of-the-art algorithms across a wide range of tasks, highlighting their limitations and promoting research in areas such as sim-to-real transfer and long-horizon planning. The real-world benchmark further validates the effectiveness of the proposed approaches.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in robotics and computer vision due to its introduction of GarmentLab, a unified simulation and benchmark for garment manipulation. It addresses the sim-to-real gap, a significant challenge in the field, by offering diverse and realistic simulations, abundant assets, and a comprehensive benchmark across various tasks. This opens new avenues for developing and evaluating algorithms for garment manipulation, advancing the field of embodied AI.

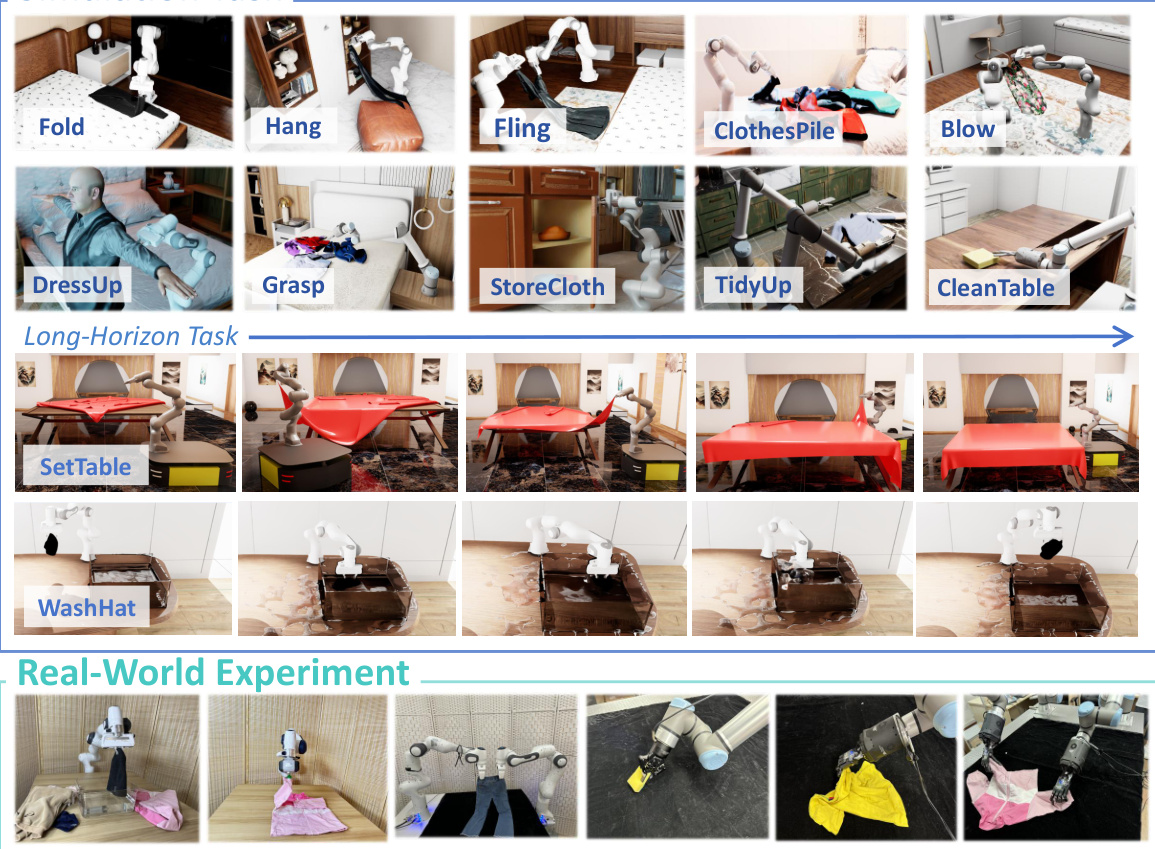

Visual Insights#

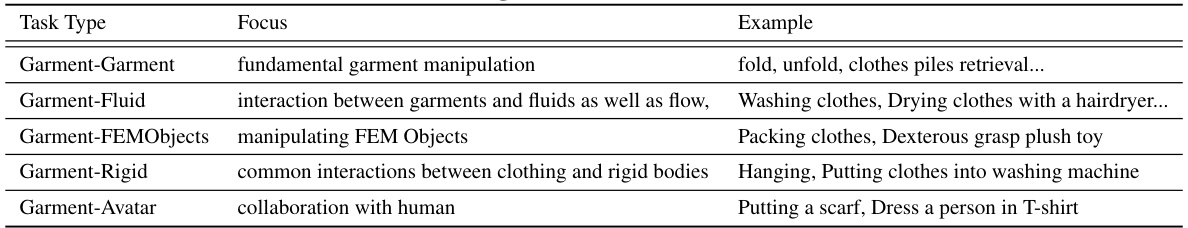

This figure shows various tasks included in the GarmentLab benchmark. The tasks are categorized into five groups based on physical interactions: Garment-Garment, Garment-Fluid, Garment-FEMObjects, Garment-Rigid, and Garment-Avatar. Examples of tasks include folding, hanging, flinging, clothes piles, blowing, dress up, grasping, storing cloth, tidying up, and cleaning tables. The figure also shows three long-horizon tasks: organizing clothes, washing clothes, and setting a table. The bottom row of the image shows the real-world application of these tasks.

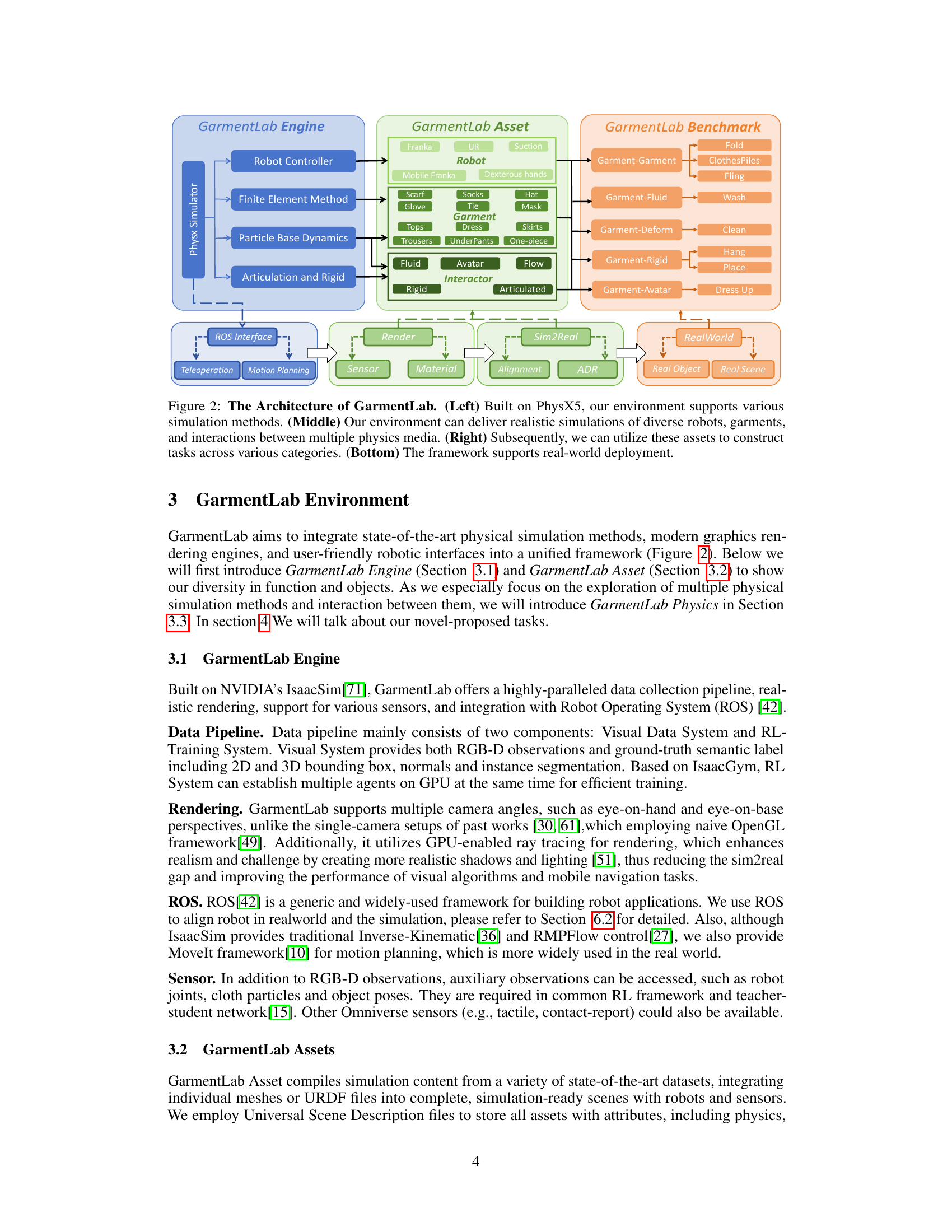

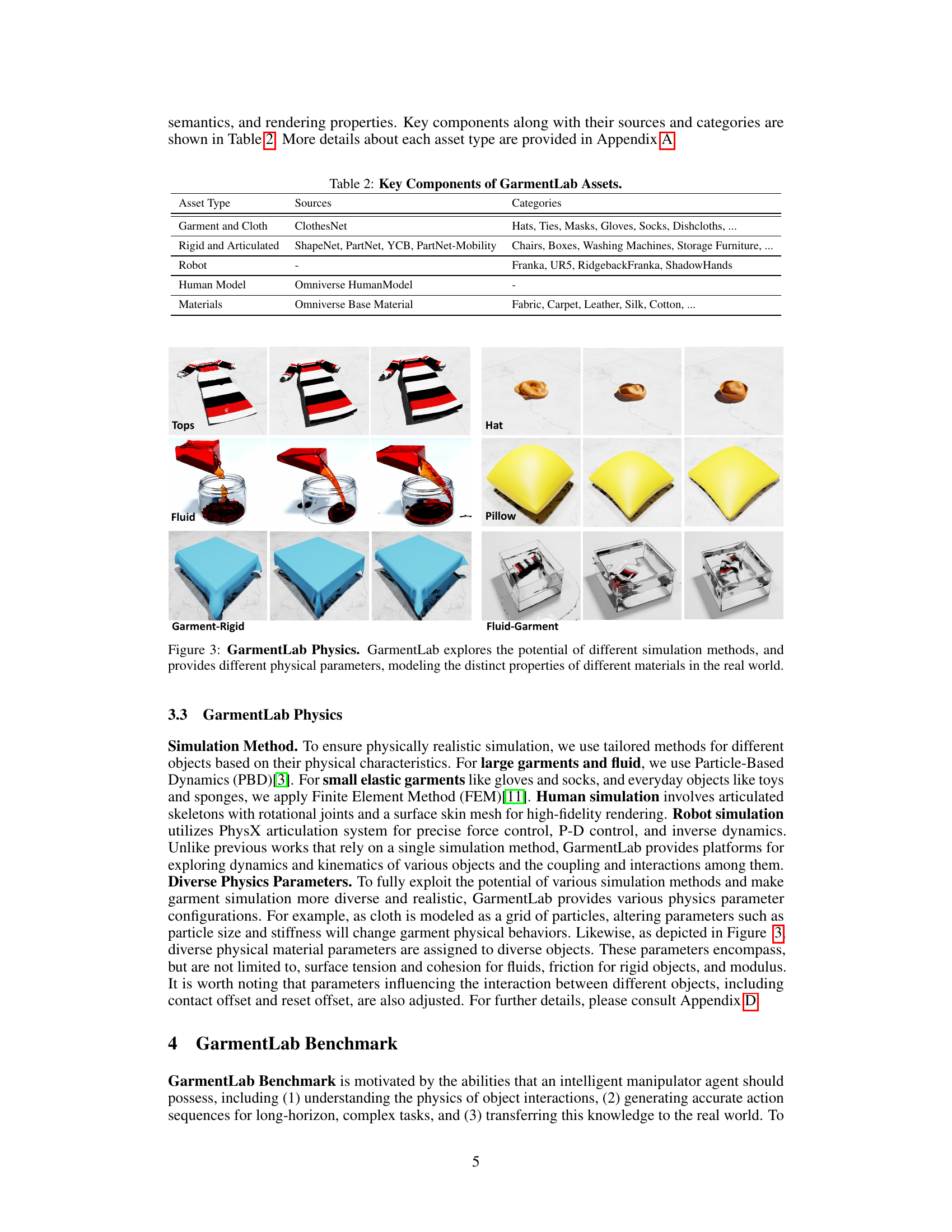

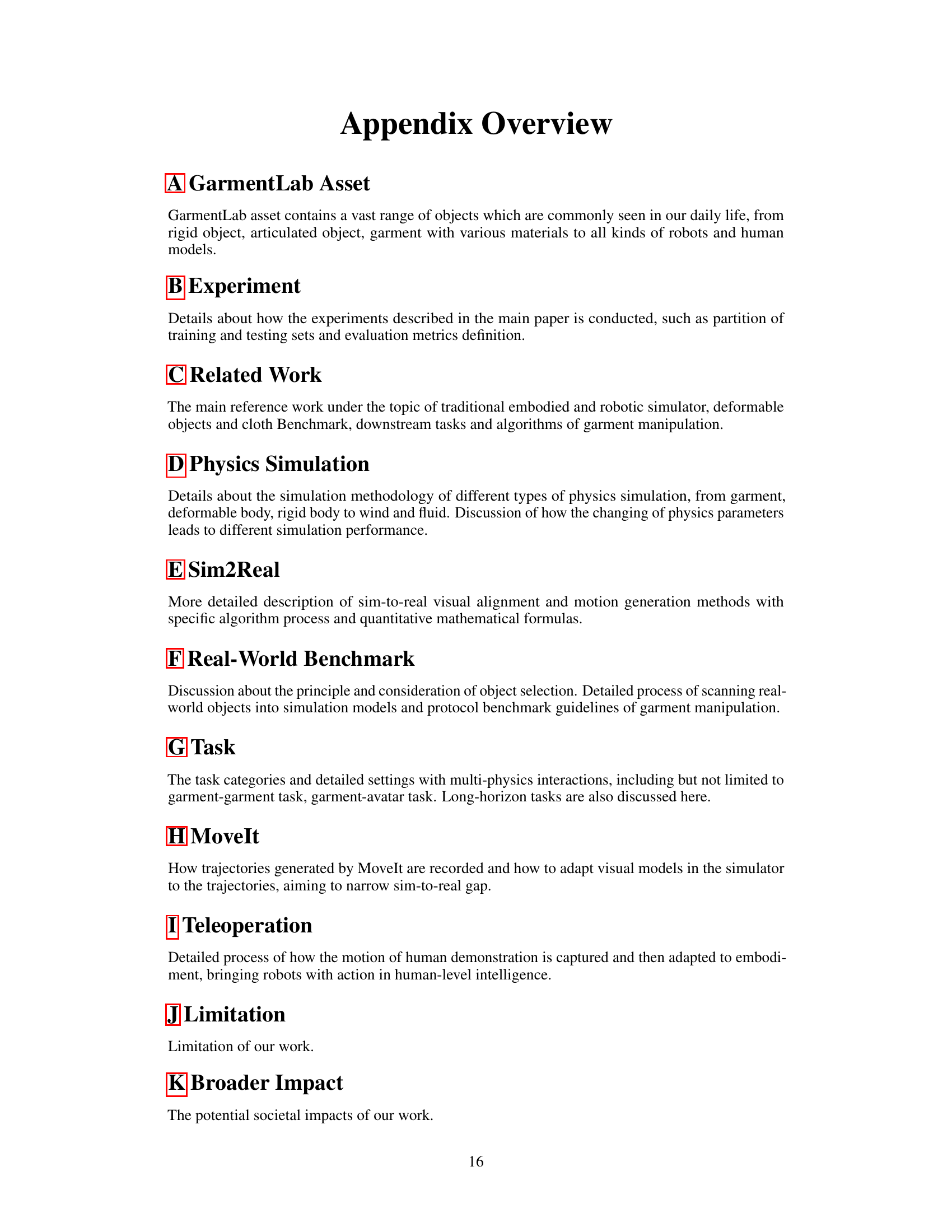

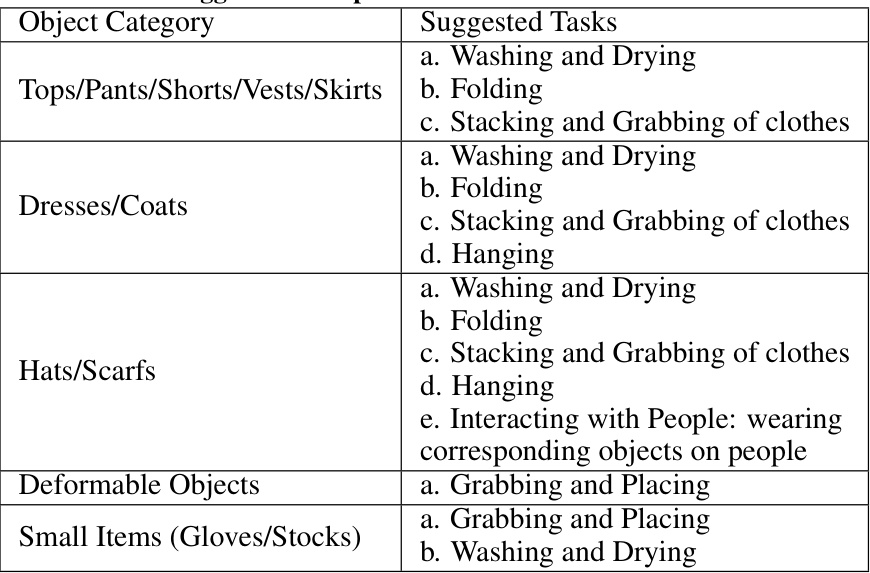

This table lists the key components of GarmentLab Assets, categorized by asset type (Garment and Cloth, Rigid and Articulated, Robot, Human Model, Materials). For each category, it specifies the sources of the assets (e.g., ClothesNet, ShapeNet) and provides examples of the categories included (e.g., Hats, Ties, Masks for garments; Franka, UR5 for robots). This table gives a high-level overview of the diverse range of assets available in the GarmentLab environment for simulation and benchmarking.

In-depth insights#

Garment Manipulation#

Garment manipulation, a subfield of robotics, presents unique challenges due to the inherent flexibility and complex physics of fabrics. Current research is limited by a lack of diverse and realistic benchmarks, hindering progress in developing robust and generalizable algorithms. Significant advancements are needed in both simulation and real-world data collection to address the sim-to-real gap. The development of physically accurate garment simulation engines that can model various fabrics and interactions with other objects (rigid bodies, fluids, human hands) is crucial. Improved vision algorithms are essential for accurate perception and state estimation in the presence of complex deformations and occlusions. The exploration of reinforcement learning and imitation learning approaches offers potential for learning complex manipulation skills, but requires substantial training data and careful consideration of reward functions. Future work should focus on developing more comprehensive benchmarks, improving simulation fidelity, and integrating advanced perception and control strategies to achieve truly dexterous and adaptable garment manipulation capabilities.

Sim-to-Real Gap#

The Sim-to-Real gap, a pervasive challenge in robotics, is a core focus in the paper. The authors acknowledge that successful algorithms in simulation often fail to translate directly to real-world scenarios due to differences in environmental factors, sensor noise, and actuator limitations. The paper directly addresses this by presenting a benchmark that includes real-world data collection, emphasizing the need for algorithms to generalize effectively. They also detail sim-to-real algorithms to help bridge this gap, highlighting the importance of data alignment and robust methods for handling discrepancies between simulated and real data. The incorporation of multiple simulation methods (FEM and PBD) further attempts to capture the nuances of real-world physics, reducing the reliance on potentially unrealistic simulations. Ultimately, the work’s focus on sim-to-real challenges underscores the necessity of comprehensive testing and robust algorithm design to achieve true generalization and practical application in real-world robotic manipulation.

Benchmark Analysis#

A robust benchmark analysis is crucial for evaluating the effectiveness of garment manipulation algorithms. It should involve a diverse range of tasks, encompassing various garment types, robot systems, and physical interactions (e.g., garment-garment, garment-fluid, garment-rigid). Quantitative metrics, such as success rates and task completion times, are essential for objective comparison. Qualitative analysis, including visual inspection of the manipulation process, is equally important for identifying subtle issues and potential areas of improvement. Sim-to-real gap analysis is critical, comparing algorithm performance in simulation versus the real world to highlight challenges and guide future research. Generalization capability testing is also vital, assessing algorithm performance on unseen garments or situations. The analysis should detail the strengths and weaknesses of different algorithms, identifying the limitations and challenges that hinder progress towards robust garment manipulation capabilities. A thorough benchmark analysis provides valuable insights for guiding future research and development in the field.

Physics Engines#

Physics engines are crucial for simulating realistic garment behavior in virtual environments. A robust physics engine should accurately model the complex interactions between different materials such as fabrics, rigid bodies, and fluids. Key considerations include choosing a simulation method (e.g., finite element method (FEM), mass-spring system, or particle-based dynamics (PBD)) that balances accuracy with computational efficiency. Furthermore, the engine needs to handle a wide range of physical properties like elasticity, friction, and collision detection for diverse garment types and environmental interactions. Accurate parameterization is crucial, allowing researchers to fine-tune the simulation’s behavior to match real-world observations. The choice of engine significantly impacts the realism and efficiency of simulations, affecting both the training and testing of AI agents designed to manipulate garments. A high-quality physics engine is critical for bridging the gap between simulated and real-world garment manipulation.

Future Directions#

Future research directions in garment manipulation should prioritize bridging the sim-to-real gap more effectively. This includes developing more robust and versatile simulation environments that accurately capture the complex physics of fabric deformation, including factors like drape, shear, and friction. Improved sim-to-real transfer techniques are crucial, potentially leveraging advancements in domain adaptation and self-supervised learning to enhance the generalization capabilities of algorithms trained in simulation. The development of more diverse and challenging benchmarks is also essential for evaluating progress. This necessitates incorporating a wider array of garment types, manipulation tasks (including long-horizon sequences), and robotic systems. Finally, exploration of advanced sensor modalities, beyond RGB-D, for capturing nuanced garment properties is important, along with research into human-robot collaborative approaches that enable effective interaction and intuitive garment manipulation.

More visual insights#

More on figures

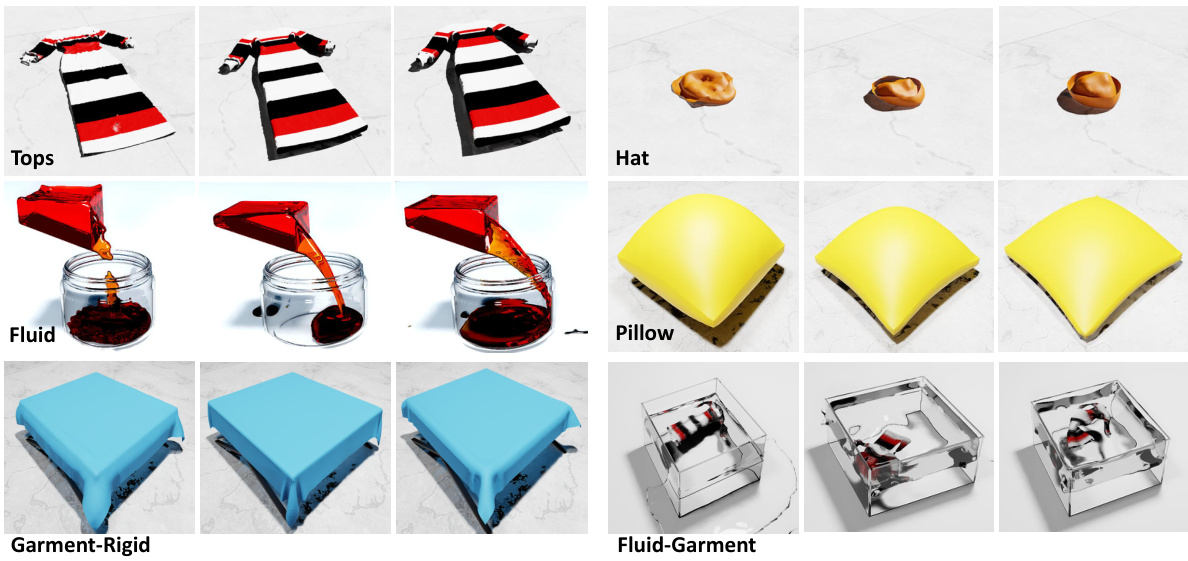

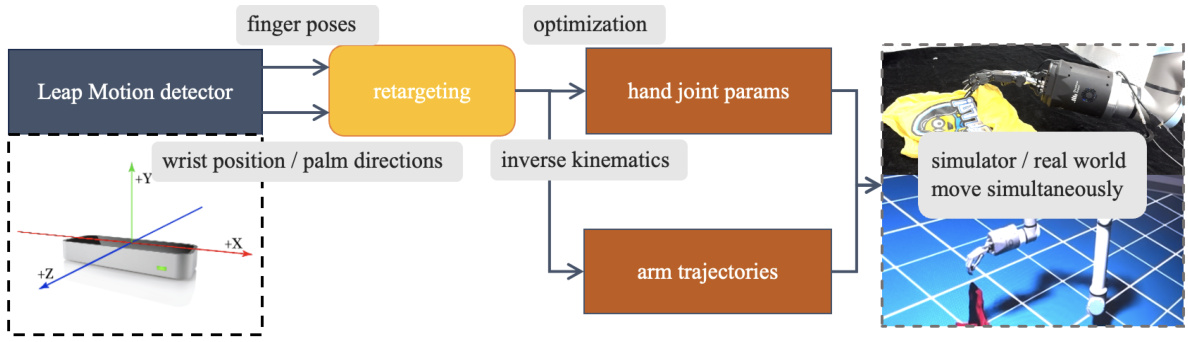

GarmentLab is a unified simulation and benchmark environment for garment manipulation. It provides realistic simulations of various garment types with different physical properties (multi-physics), using methods such as FEM and PBD. The benchmark includes a wide range of tasks performed by different robotic systems and manipulators in both simulated and real-world settings, allowing for evaluation of state-of-the-art vision methods, reinforcement learning, and imitation learning approaches. The figure visually depicts the three key aspects of GarmentLab: MultiPhysics simulation, Teleoperation capabilities for data collection, and a Real-World Benchmark for sim-to-real transfer.

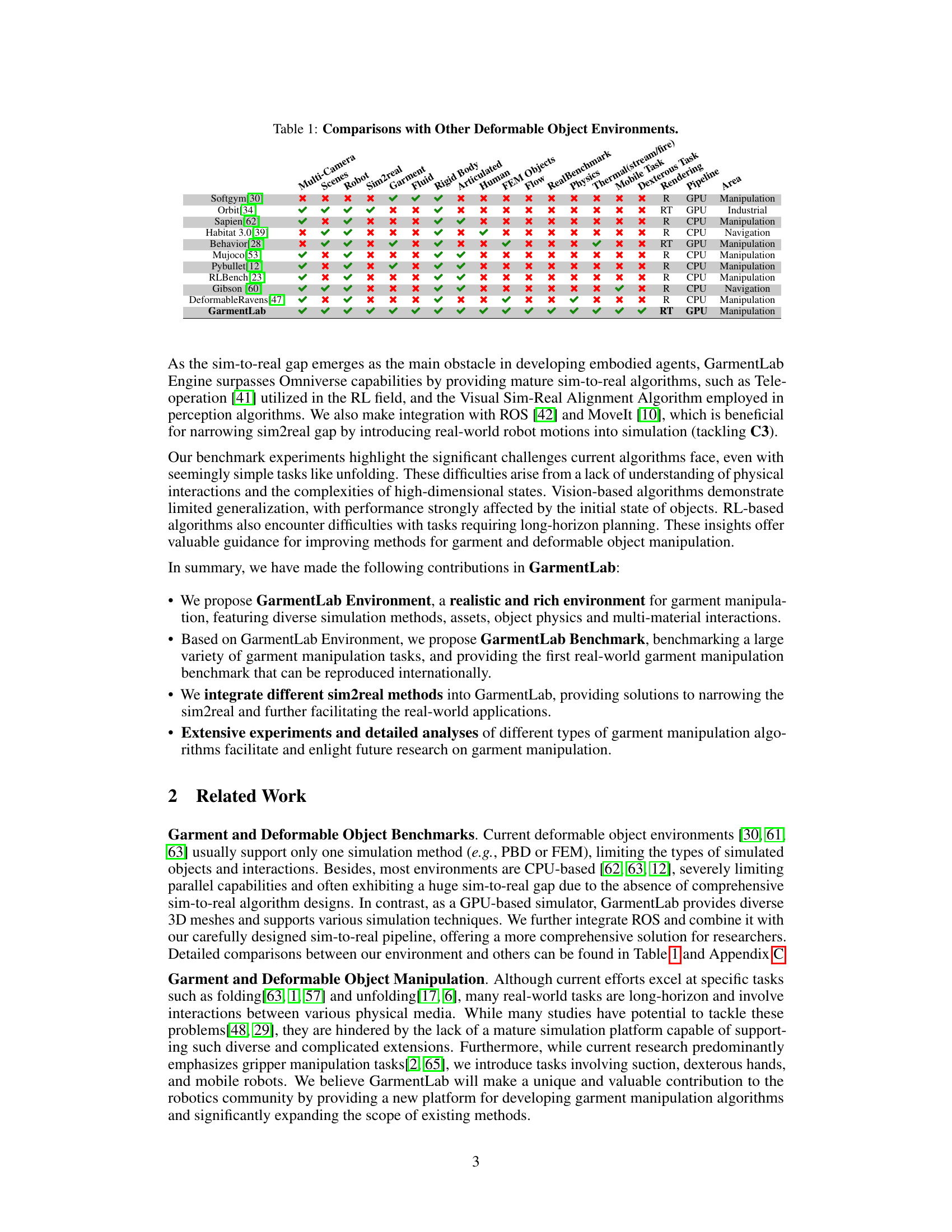

The figure shows the overall architecture of GarmentLab, a unified simulation and benchmark for garment manipulation. It highlights the three main components: the GarmentLab Engine (left), which uses various simulation methods (PhysX5, FEM, PBD) and integrates with ROS for real-world robot control; the GarmentLab Asset (middle), which includes a large-scale dataset of garments, robots, and other objects; and the GarmentLab Benchmark (right), which defines a set of tasks for evaluating garment manipulation algorithms in both simulation and real-world settings. The bottom part shows the sim-to-real pipeline.

This figure shows various tasks included in the GarmentLab benchmark. These tasks are categorized into five groups based on physical interactions: Garment-Garment, Garment-Fluid, Garment-FEMObjects, Garment-Rigid, and Garment-Avatar. The tasks range in complexity from simple manipulation actions (e.g., folding, hanging) to complex, long-horizon tasks requiring planning and integration of multiple skills (e.g., organizing clothes, washing clothes, setting a table, dressing up). The bottom row displays the real-world execution of these complex tasks, highlighting the sim-to-real transfer capabilities of GarmentLab.

This figure shows various tasks included in the GarmentLab benchmark. The top two rows illustrate different simulation tasks categorized by the type of interaction (Garment-Garment, Garment-Fluid, Garment-FEMObjects, Garment-Rigid, and Garment-Avatar). The bottom row displays real-world experiments showcasing the transfer of simulated learning to real-world scenarios.

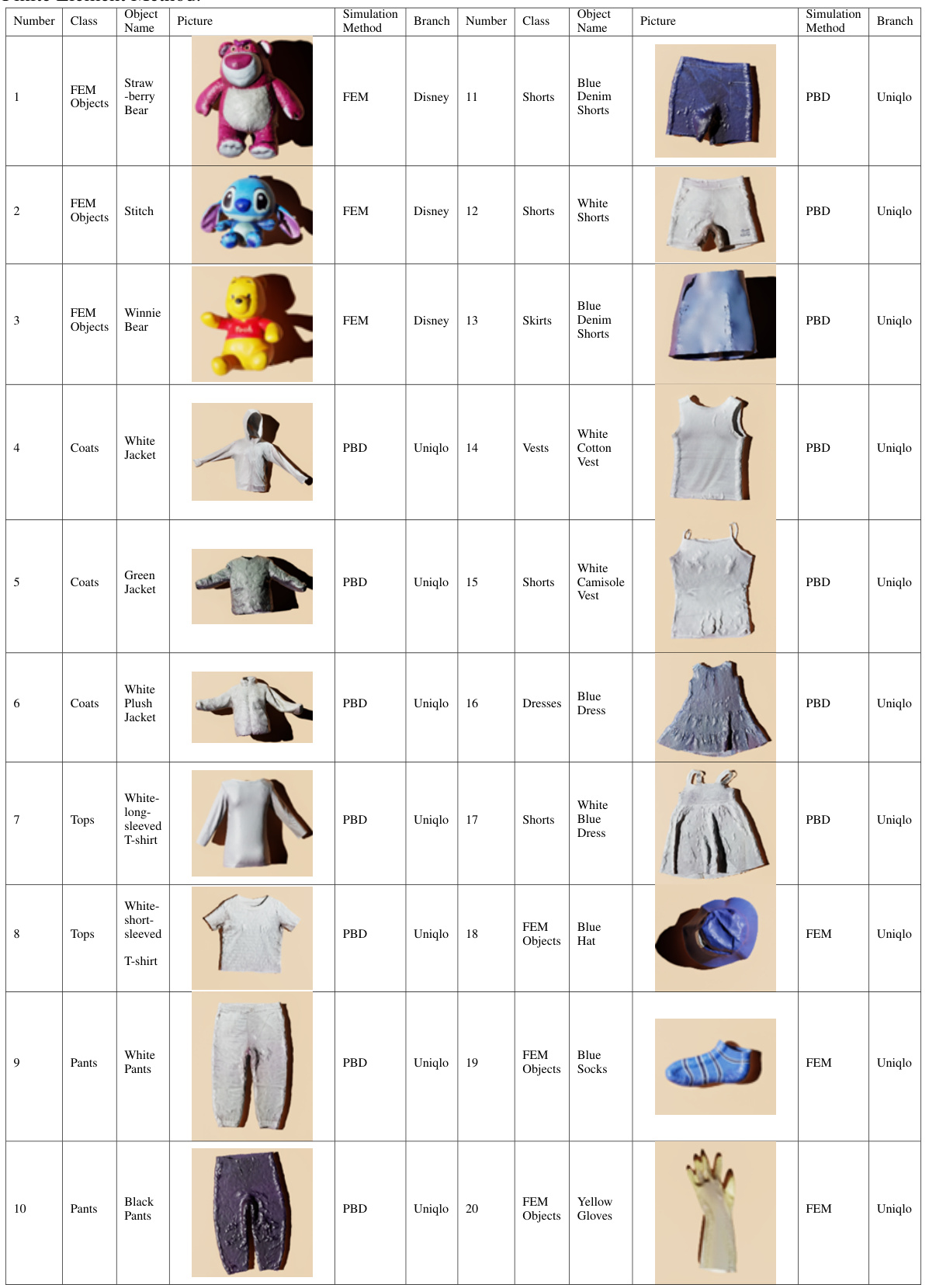

This figure shows the process of creating a real-world benchmark for deformable objects. Part (a) illustrates the steps involved in converting real-world objects into simulation assets, including 3D scanning and post-processing. Part (b) showcases examples of various objects (garments, toys, household items) in both the simulation environment and real-world scenarios, along with the results of robot manipulation on those objects.

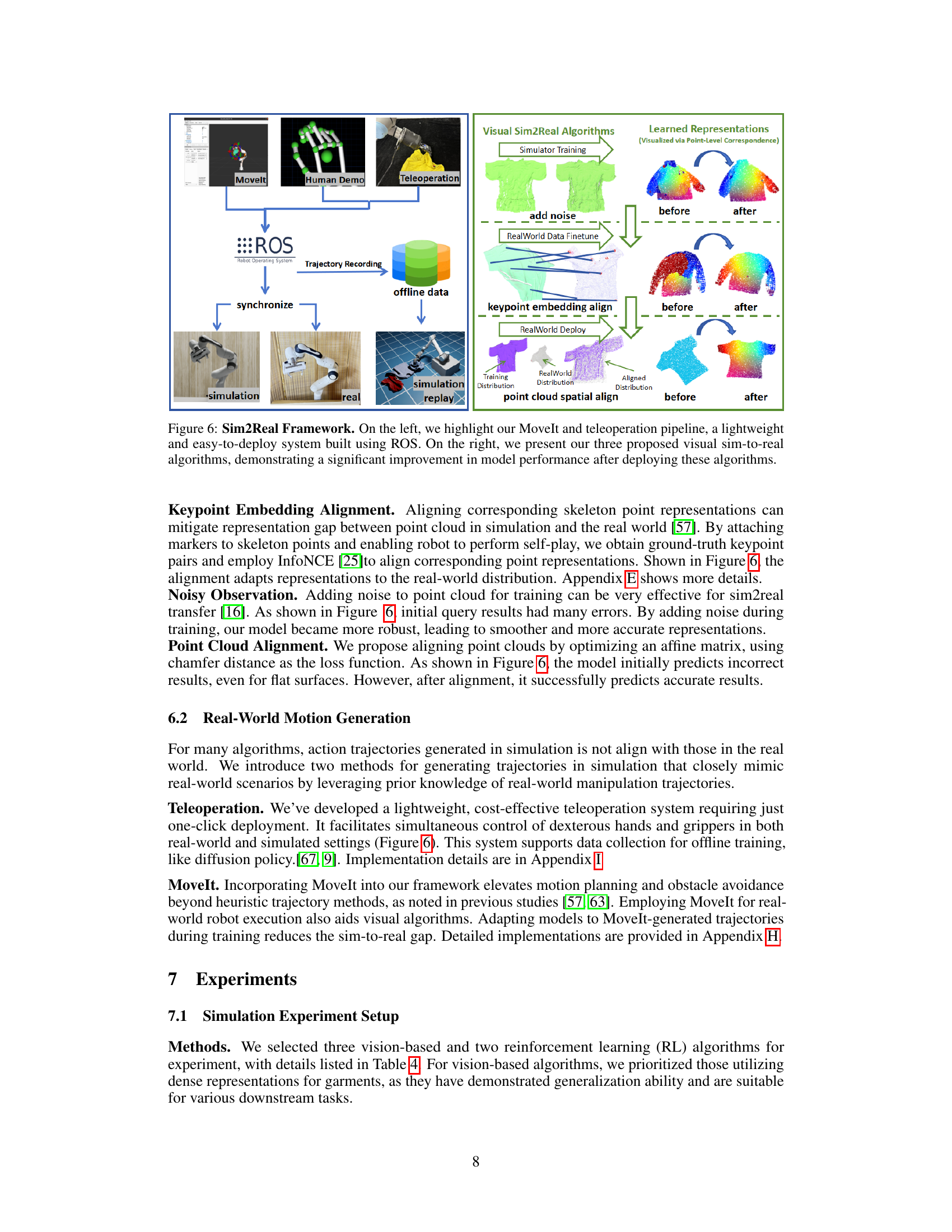

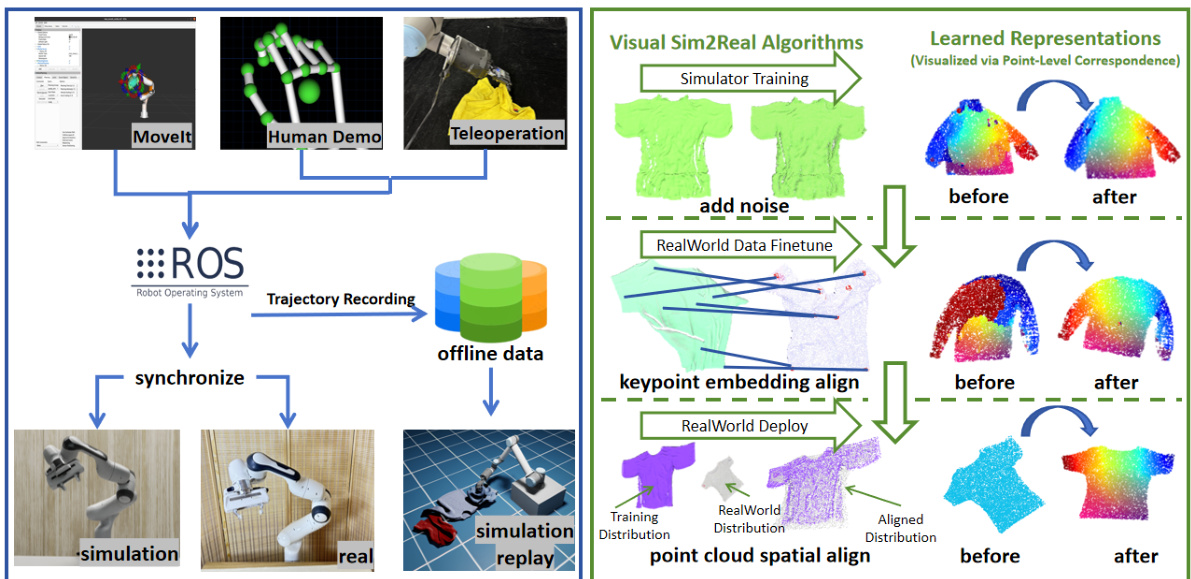

This figure illustrates the GarmentLab’s Sim2Real framework. The left side shows the teleoperation and MoveIt pipelines, utilizing ROS for a lightweight and easily deployable system. The right side showcases three visual Sim2Real algorithms: Keypoint Embedding Alignment, Noisy Observation, and Point Cloud Alignment. Each algorithm’s effect on learned representations (visualized through point-level correspondence) is shown, highlighting improved performance after deploying these algorithms in a real-world setting.

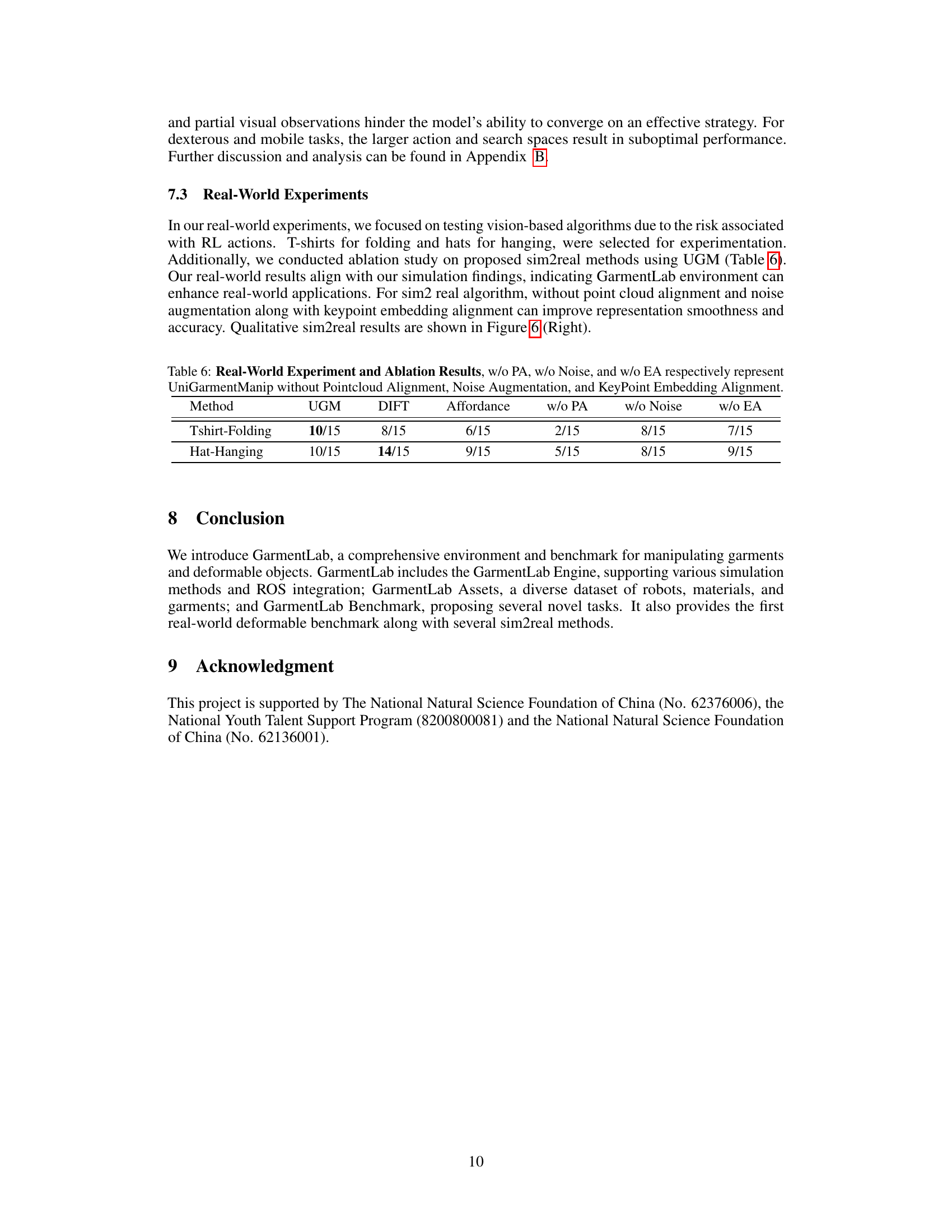

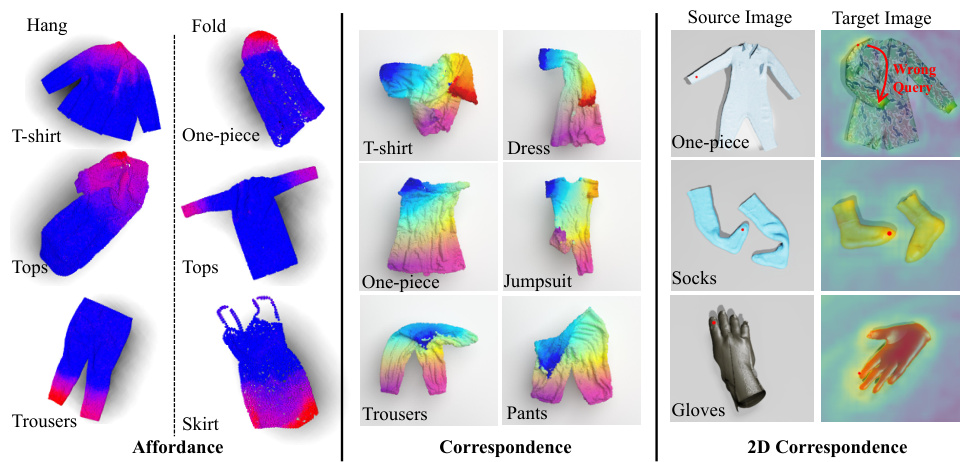

This figure shows a qualitative comparison of three vision-based algorithms (Affordance, Correspondence, and DIFT) on garment manipulation tasks. The left column displays results from the Affordance algorithm, the middle column shows results from the Correspondence algorithm, and the right column shows results from the DIFT algorithm. The images show the predicted manipulation results overlaid on the source images of the garments. The caption notes that the DIFT algorithm shows some errors in its predictions.

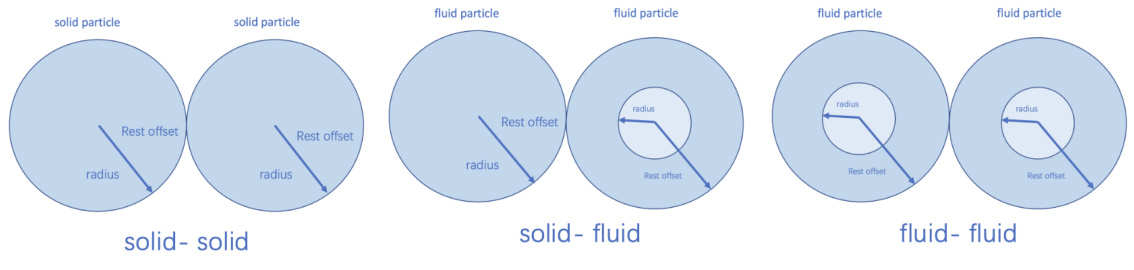

This figure illustrates the different types of particle interactions considered in the GarmentLab simulation. It shows how the simulation handles interactions between solid particles (e.g., parts of a garment), fluid particles (e.g., water), and combinations of solid and fluid particles. The diagrams depict the various scenarios, including the ‘rest offset’ parameter which influences the way particles interact, particularly at boundaries between different material types.

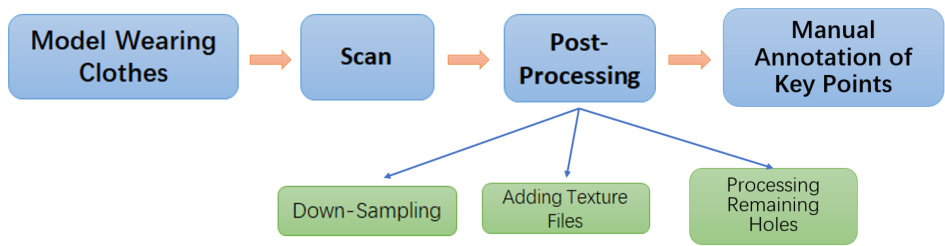

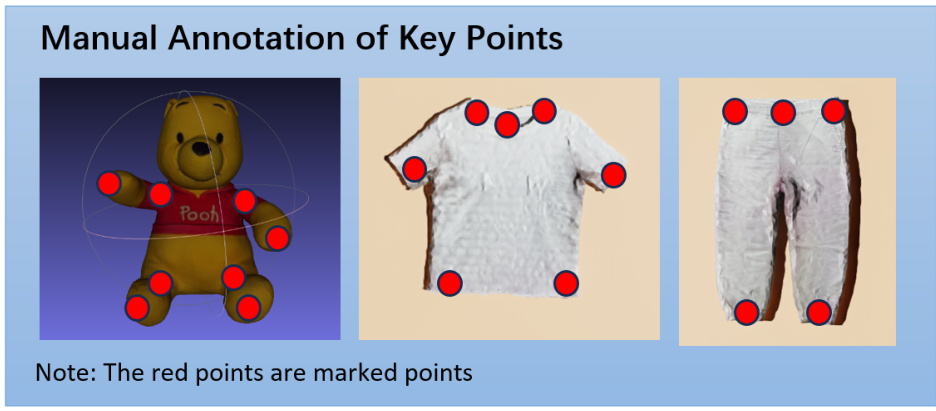

The figure illustrates the four-stage process of scanning real-world objects for the GarmentLab benchmark. First, a model wears the clothes to create natural-looking wrinkles. Next, a 3D scanner captures the model’s shape. The data is then post-processed to reduce the number of points (down-sampling), add texture details, and fill in any gaps in the point cloud. Finally, key points on the garment are manually annotated.

This figure shows a variety of tasks included in the GarmentLab benchmark. The tasks are categorized into five groups based on the physical interactions involved. There are short-horizon tasks such as folding, hanging, flinging, and placing, but also long-horizon, complex tasks which are more challenging and require planning, such as organizing clothes, washing clothes, setting a table, and dressing up. The bottom row of images shows the same tasks performed in the real world, demonstrating the ability of the benchmark to transfer knowledge from simulation to real-world scenarios.

This figure illustrates the Sim2Real framework used in the GarmentLab. The left side showcases the teleoperation and MoveIt pipelines which are lightweight and easy to deploy using ROS. The right side displays the three proposed visual sim-to-real algorithms. Each algorithm’s effect on visual representations before and after implementation is visually demonstrated, illustrating significant performance improvements after algorithm deployment.

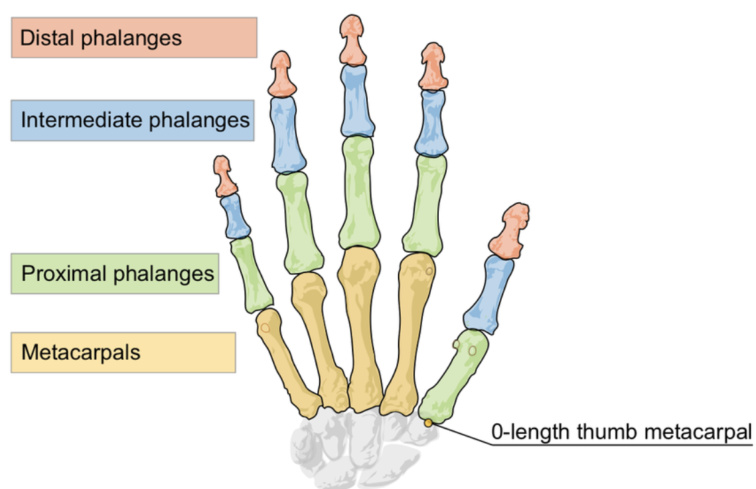

This figure shows a diagram of a hand model used in the Leap Motion system for teleoperation. It illustrates the various parts of the hand, including the distal, intermediate, and proximal phalanges, as well as the metacarpals and the 0-length thumb metacarpal. Each knuckle joint’s spatial position is calculated using a retargeting algorithm and transmitted via ROS (Robot Operating System) messages. This is a crucial component of the system’s ability to accurately track human hand movements and translate those movements into control signals for the robot arm in both simulation and the real world.

More on tables

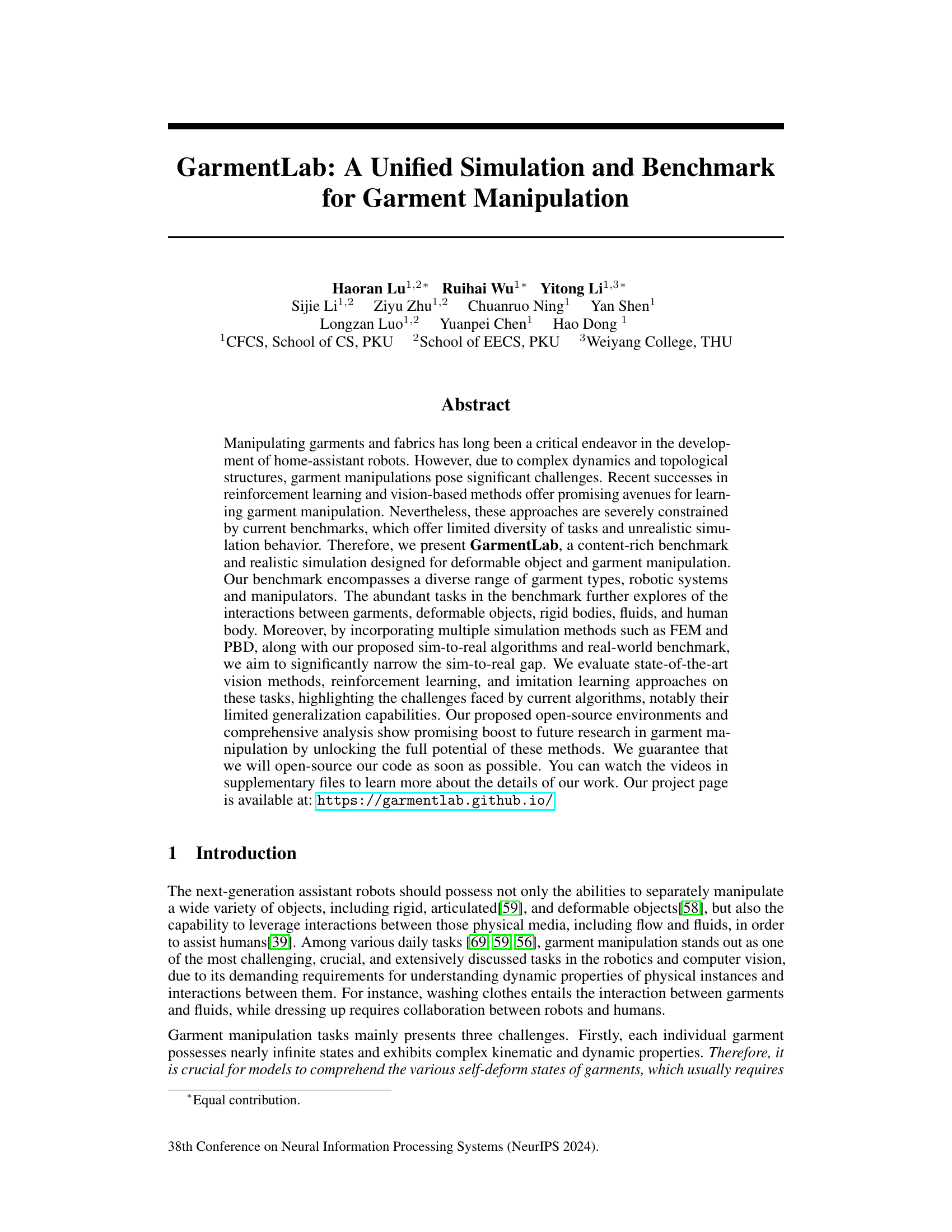

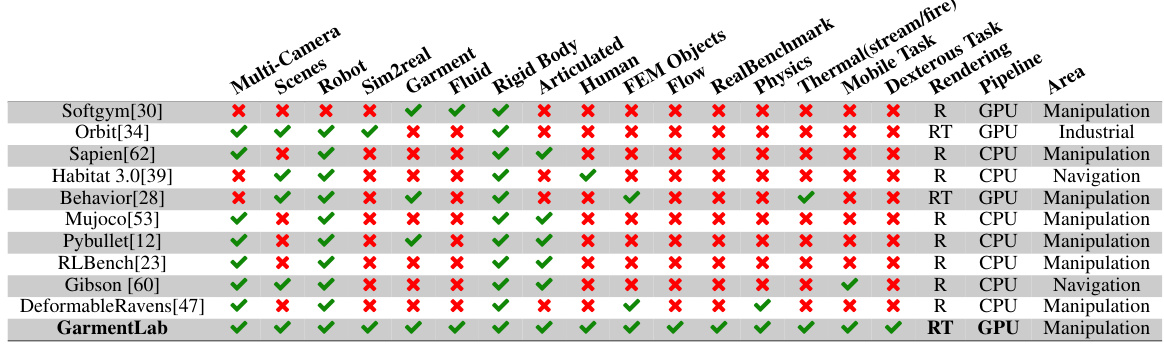

This table compares GarmentLab with other deformable object environments across various features, including support for multi-camera scenes, different types of objects (garments, soft objects, rigid bodies, articulated objects, and fluids), sim-to-real capabilities, and the type of physics engine used. It highlights GarmentLab’s unique strengths in offering a comprehensive and realistic simulation environment for garment manipulation.

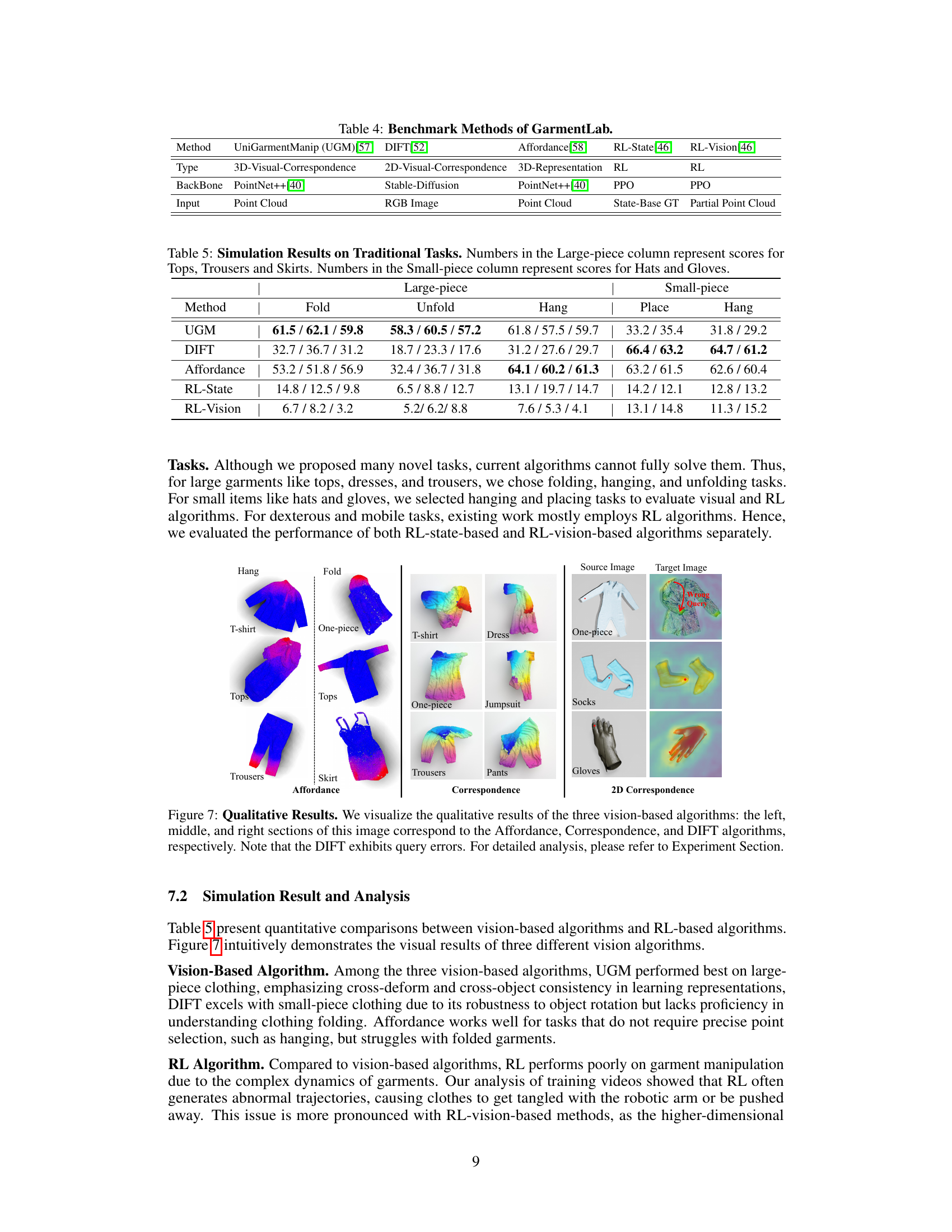

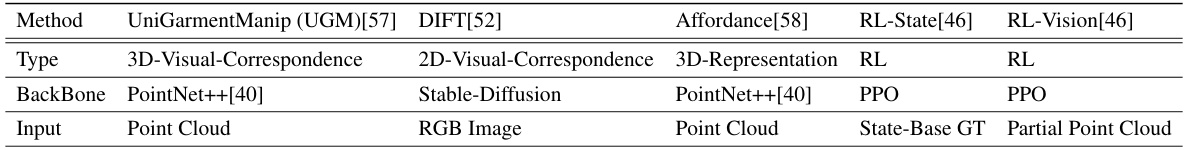

This table provides a summary of the five different methods used in GarmentLab benchmark experiments. It lists the method name, its type (3D/2D visual correspondence, 3D representation, or reinforcement learning), its backbone (the underlying architecture or model), and the input type (point cloud, RGB image, or state-based ground truth). The methods are compared based on their performance in handling various garment manipulation tasks within the GarmentLab environment.

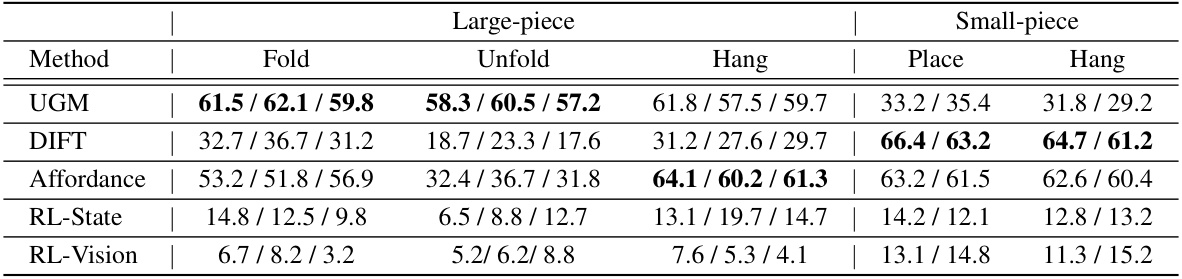

This table presents the quantitative results of five different algorithms (UGM, DIFT, Affordance, RL-State, RL-Vision) on five traditional garment manipulation tasks (Fold, Unfold, Hang, Place, Hang) categorized by Large-piece (Tops, Trousers, Skirts) and Small-piece (Hats, Gloves). Each cell shows the average success rate across multiple trials for a given algorithm and task. The results highlight the varying performance of different methods across different task types and garment sizes, offering insights into the strengths and weaknesses of each algorithm.

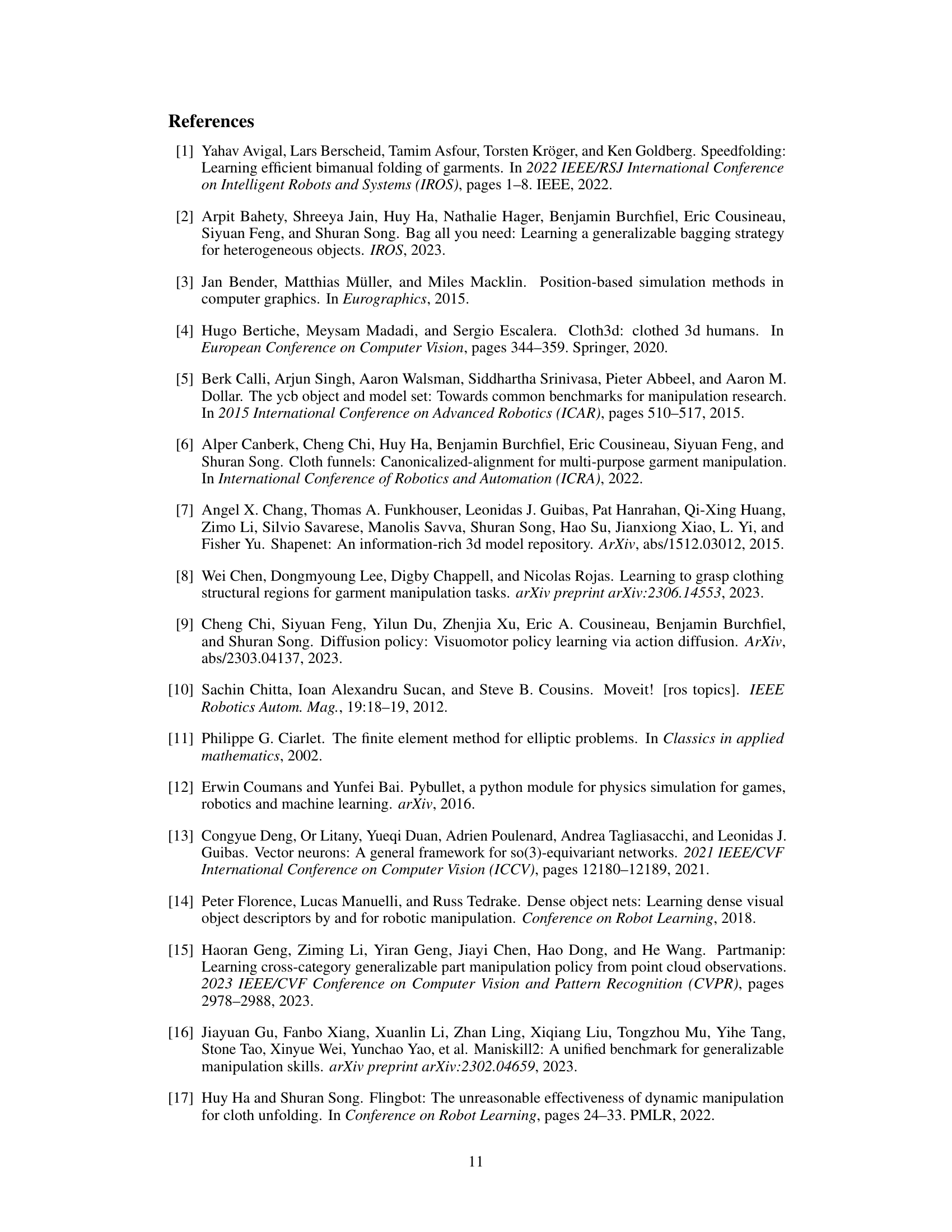

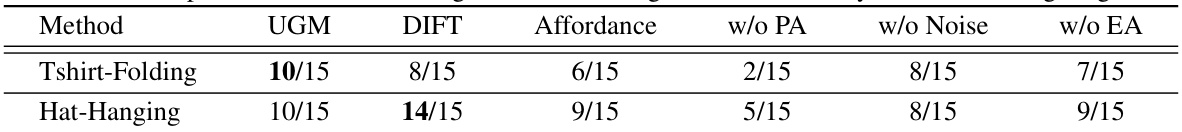

This table presents the results of real-world experiments evaluating the performance of the UniGarmentManip algorithm on two garment manipulation tasks: T-shirt folding and hat hanging. It also shows an ablation study demonstrating the impact of three key components of the UniGarmentManip sim-to-real approach: Pointcloud Alignment (PA), Noise Augmentation (Noise), and Keypoint Embedding Alignment (EA). Each entry represents the number of successful trials out of 15 attempts for each task and algorithm configuration.

This table compares GarmentLab with other deformable object environments across various features, including the types of objects supported (garments, soft objects, rigid bodies, fluids, articulated objects, and humans), simulation methods (FEM and PBD), sim-to-real capabilities, the availability of multi-camera setups, the presence of realistic scenes, and the types of tasks supported (dexterous manipulation, navigation, etc.). GarmentLab stands out due to its support for diverse object types and physics engines, comprehensive sim-to-real capabilities, the inclusion of real-world benchmarks, and its suitability for a wide range of manipulation tasks.

This table compares GarmentLab with other deformable object environments across various features, including the support for multiple cameras, different types of objects (garments, rigid bodies, articulated objects, humans, fluids), physics engines, sim-to-real capabilities, and the type of tasks supported. It highlights GarmentLab’s advantages in terms of its comprehensive features and realistic simulation capabilities.

This table compares GarmentLab with other deformable object environments across various aspects such as the types of objects supported (garments, soft objects, rigid bodies, articulated objects, humans, fluids), simulation methods (FEM, PBD), sim-to-real capabilities, the presence of multi-camera setups, the availability of real-world benchmarks, and the type of tasks supported (dexterous manipulation, mobile manipulation, navigation tasks, etc.). The table highlights GarmentLab’s unique combination of features, particularly its support for multi-physics simulation, diverse garment types, a real-world benchmark, and robust sim-to-real techniques.

Full paper#