↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

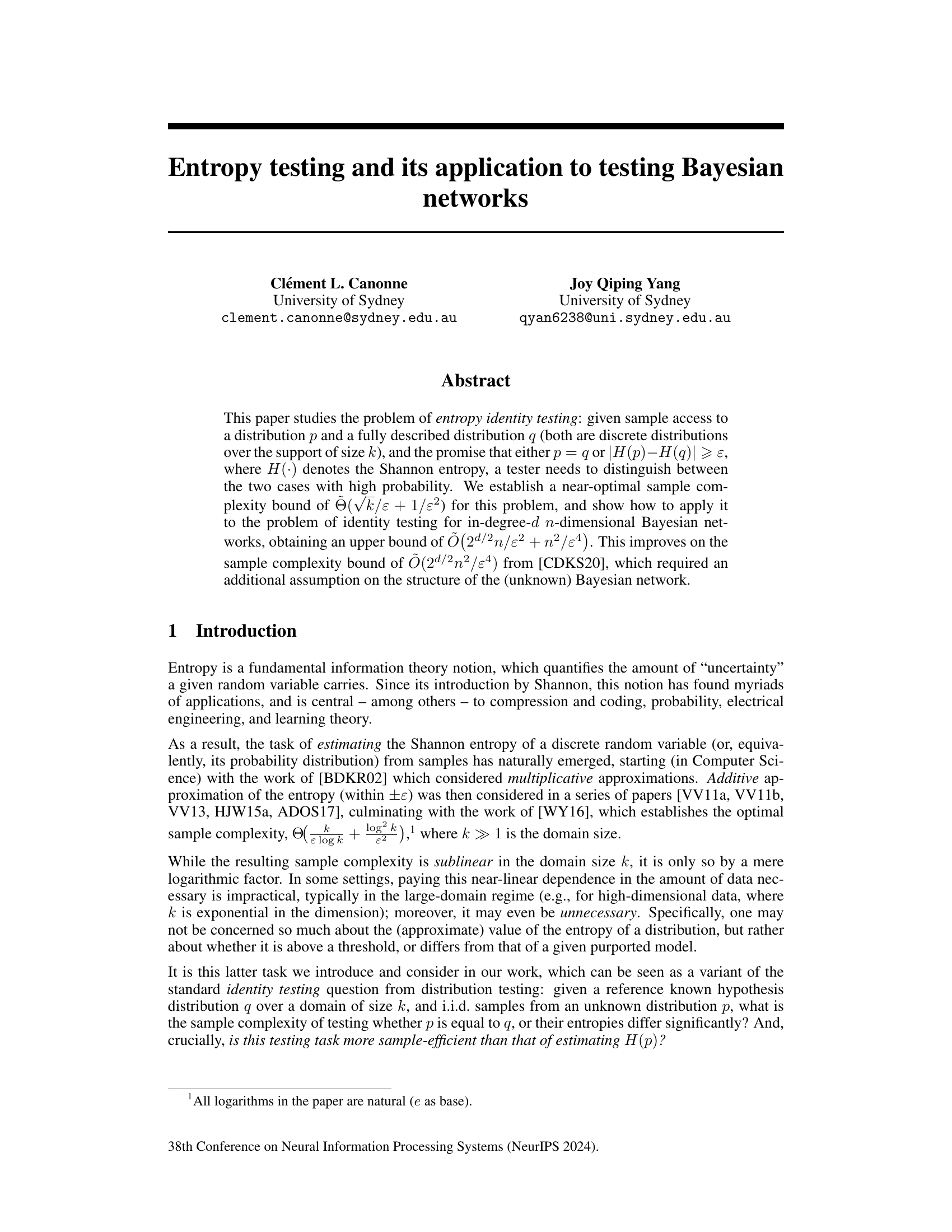

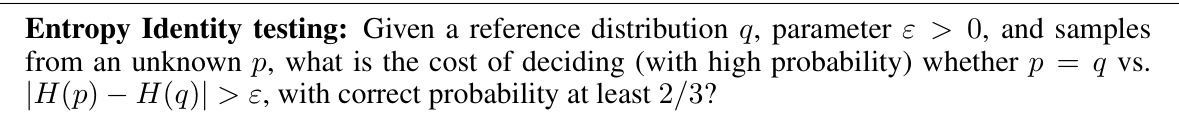

Estimating the Shannon entropy of a distribution is crucial in many fields. However, existing methods often require a near-linear number of samples, making them impractical for high-dimensional data. This paper focuses on a related but more efficient problem: determining if two distributions have the same entropy or significantly different entropies. This problem is called entropy identity testing, and it can be solved more efficiently than directly estimating the entropy.

The authors developed a near-optimal algorithm for entropy identity testing, requiring far fewer samples than previously needed. This is particularly important for testing the equality of Bayesian networks, a common model in many fields. Their method improves upon previous work by removing strict assumptions about the structure of the networks. This represents a substantial advance in the efficiency and applicability of testing Bayesian networks.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in distribution testing and Bayesian networks. It provides near-optimal algorithms for a fundamental problem—entropy identity testing—and applies it to improve Bayesian network testing. This opens new avenues for efficient algorithms in high-dimensional settings and advances our understanding of these complex systems.

Visual Insights#

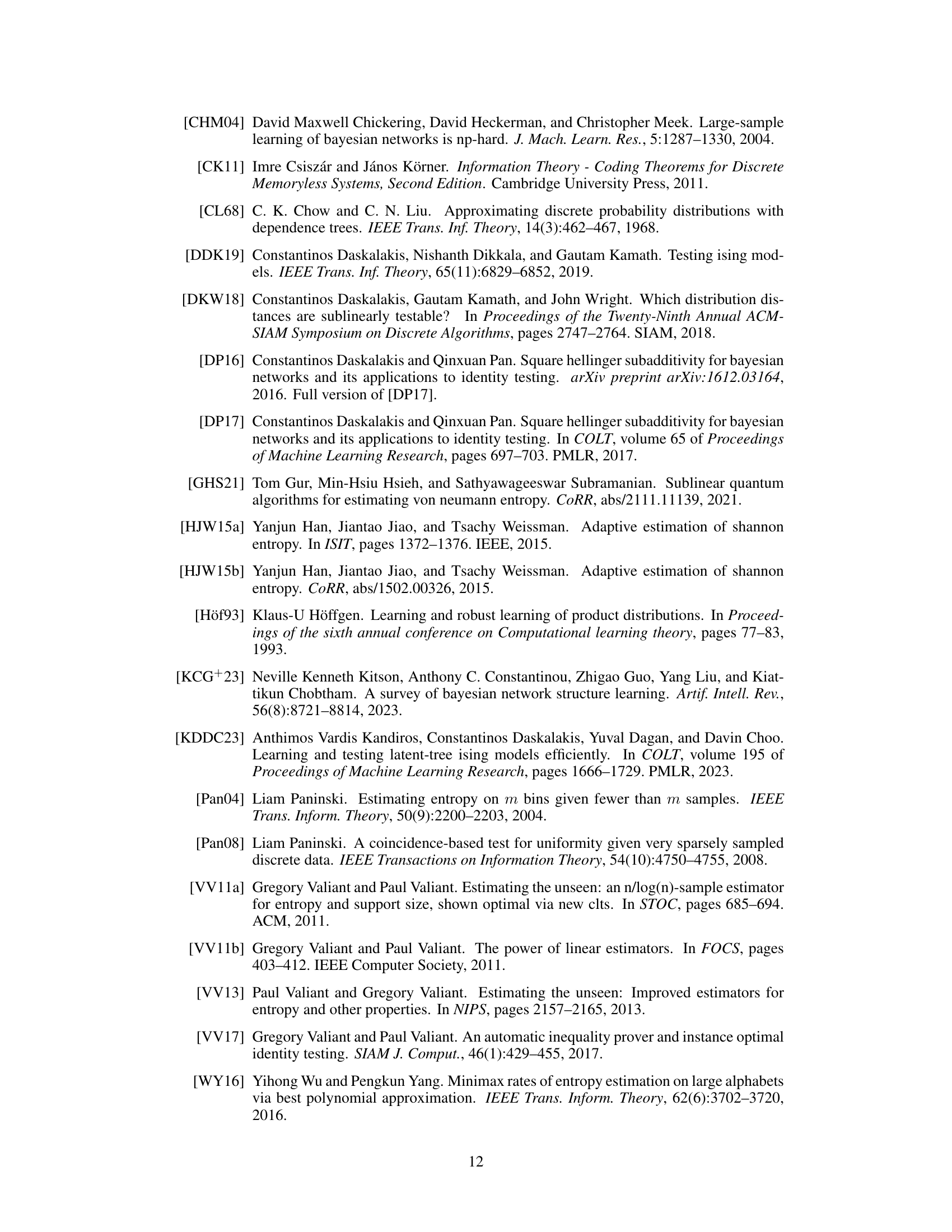

This table summarizes the upper and lower bounds for the sample complexity of entropy identity testing and Bayesian network identity testing. For entropy identity testing, it provides the upper and lower bounds in terms of the domain size k and the parameter epsilon. For Bayesian network identity testing, it shows the upper bound in terms of the number of nodes n, the in-degree d, and epsilon, comparing it to the previous best-known bound. The table highlights the near-optimal sample complexity achieved for entropy identity testing and the improved sample complexity obtained for Bayesian network identity testing.

Full paper#