↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Current 3D reconstruction methods using omnidirectional images often suffer from slow processing times or unsatisfactory quality. Neural radiance fields (NeRF) based approaches, while providing high quality, are computationally expensive. Existing methods using 3D Gaussian splatting show promise for faster processing, but their direct application to omnidirectional images leads to distortion.

This paper introduces ODGS, a novel rasterization pipeline specifically designed for omnidirectional images. ODGS uses a geometric interpretation to project Gaussians onto tangent planes, addressing the distortion issue. By leveraging CUDA parallelization, ODGS achieves a substantial 100x speed improvement over NeRF-based methods while maintaining or even improving reconstruction quality. The method effectively handles various datasets, demonstrating its robustness and versatility.

Key Takeaways#

Why does it matter?#

This paper is important because it presents ODGS, a novel and efficient 3D scene reconstruction framework for omnidirectional images. It significantly improves speed and quality compared to existing methods, opening new avenues for VR/MR, robotics, and other 3D applications. Its high speed and superior quality make it particularly valuable for resource-constrained environments and real-time applications.

Visual Insights#

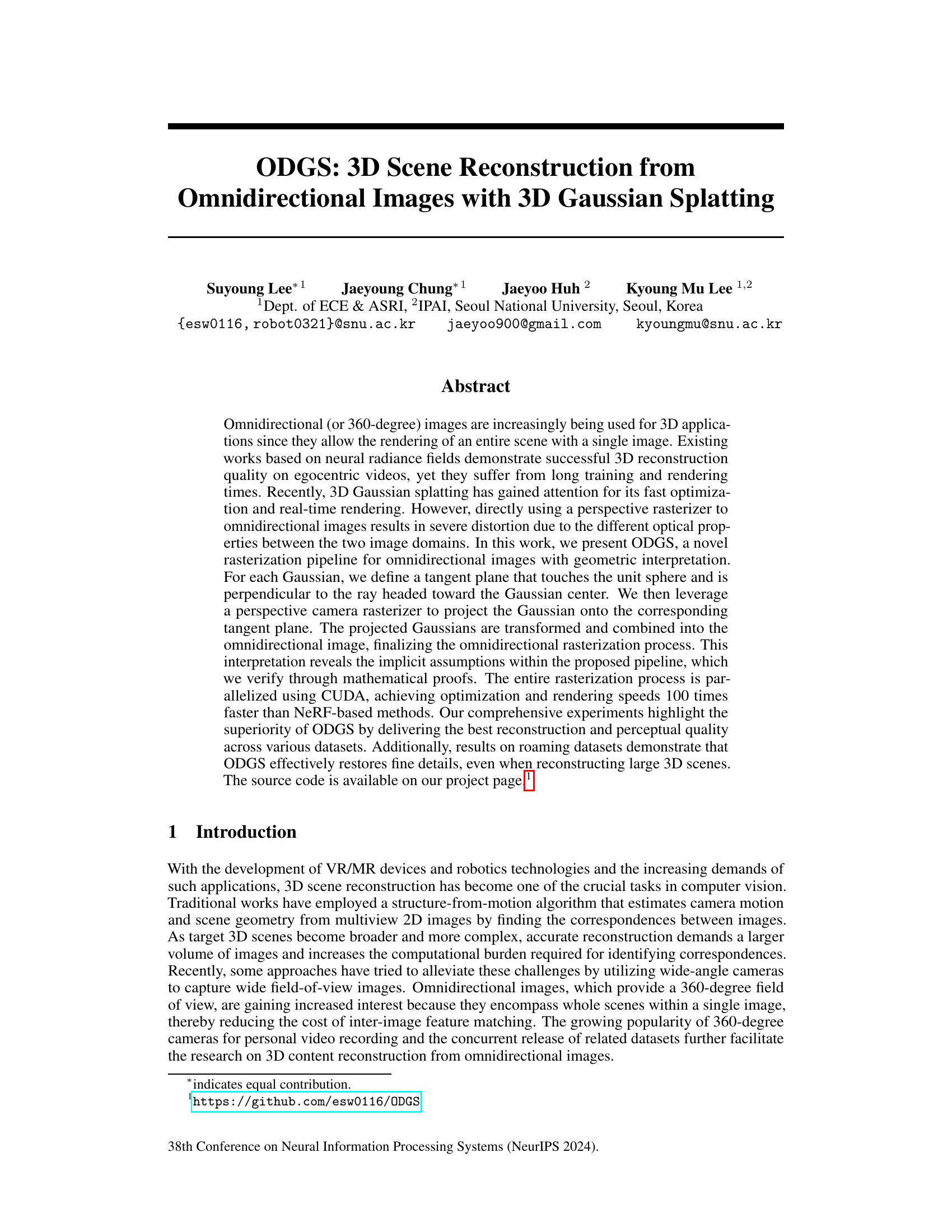

This figure illustrates the rasterization process of the ODGS method for omnidirectional images. It breaks down the process into four steps: coordinate transformation, tangent plane projection, horizontal stretching, and rescaling and combination. Each step is shown visually with a diagram and explained concisely. The figure provides a visual understanding of how a 3D Gaussian is projected onto a 2D omnidirectional image.

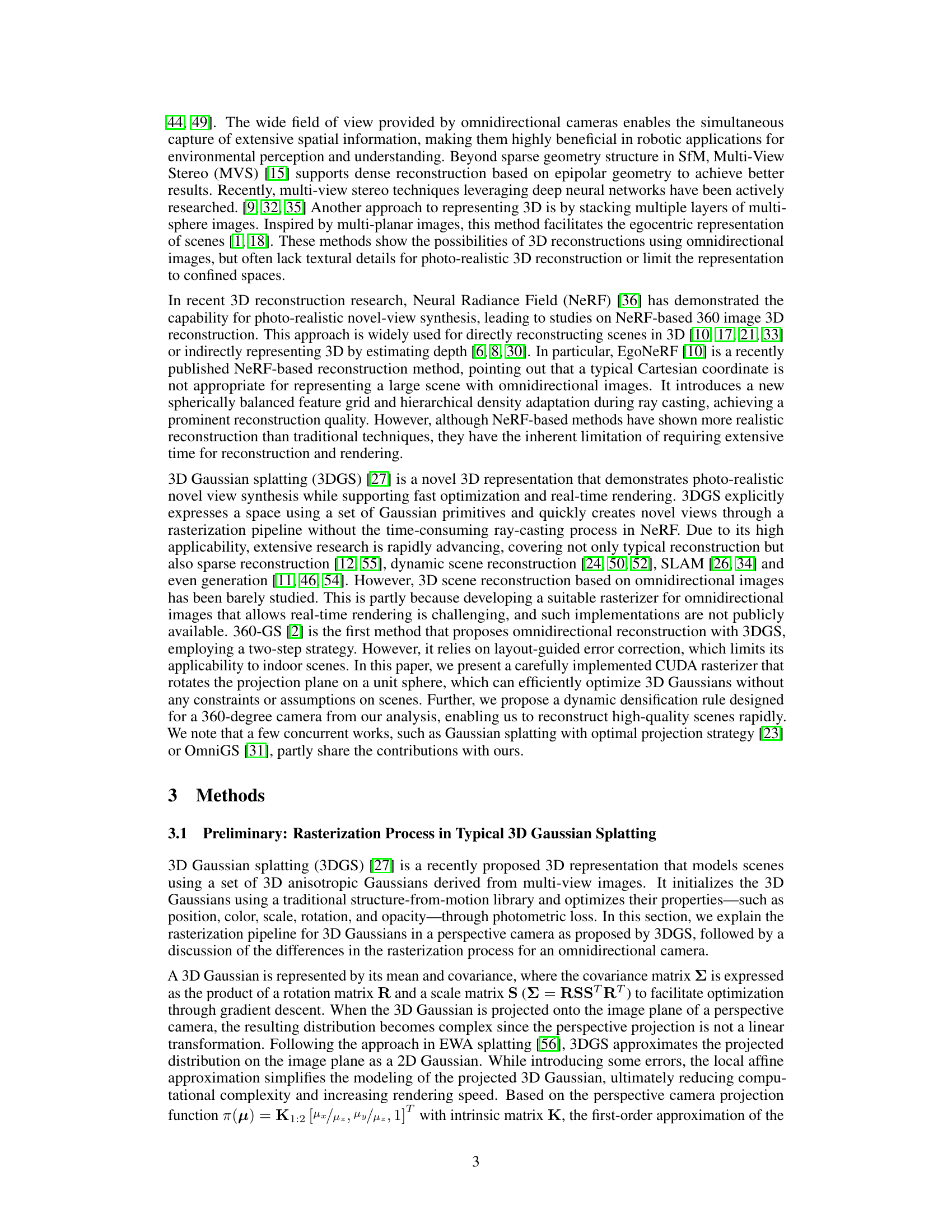

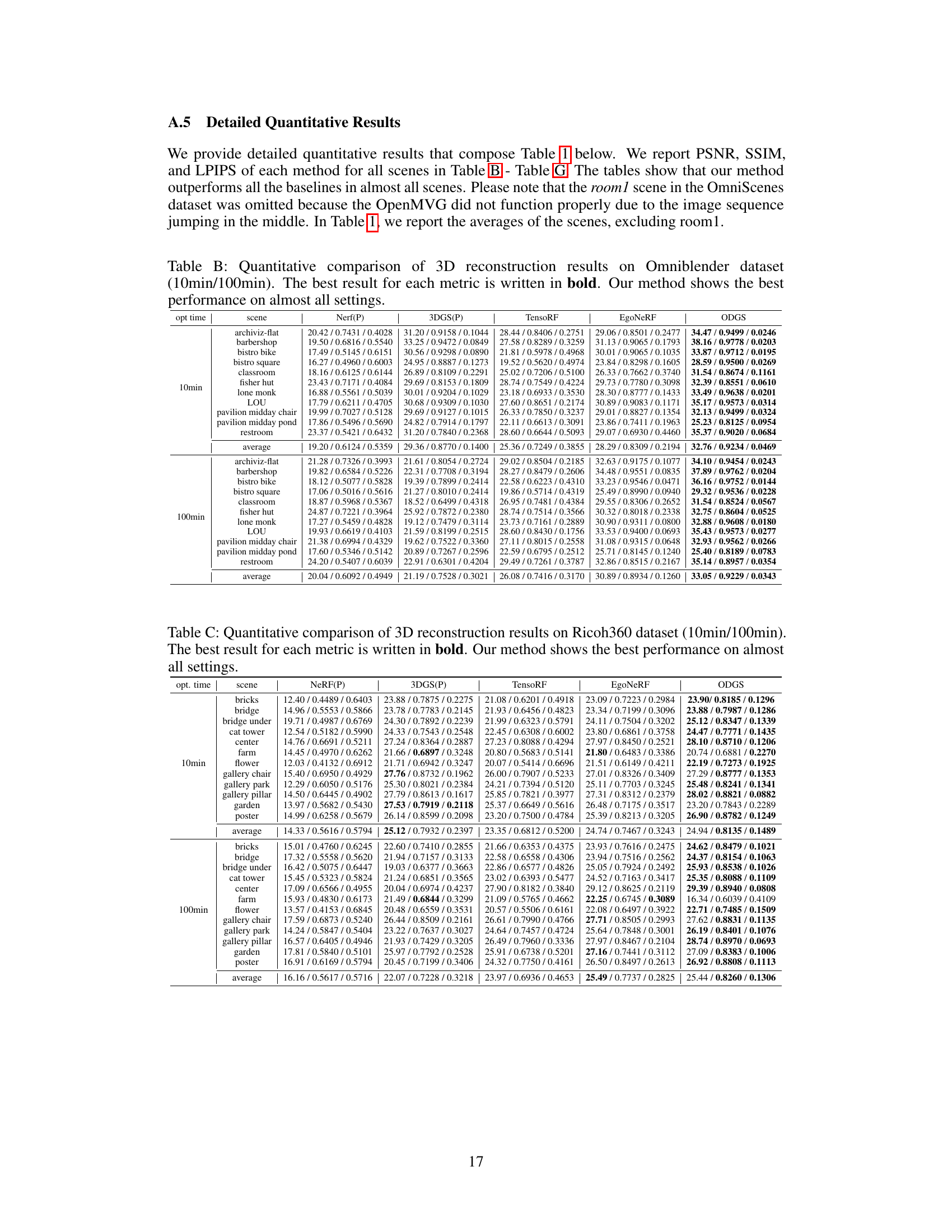

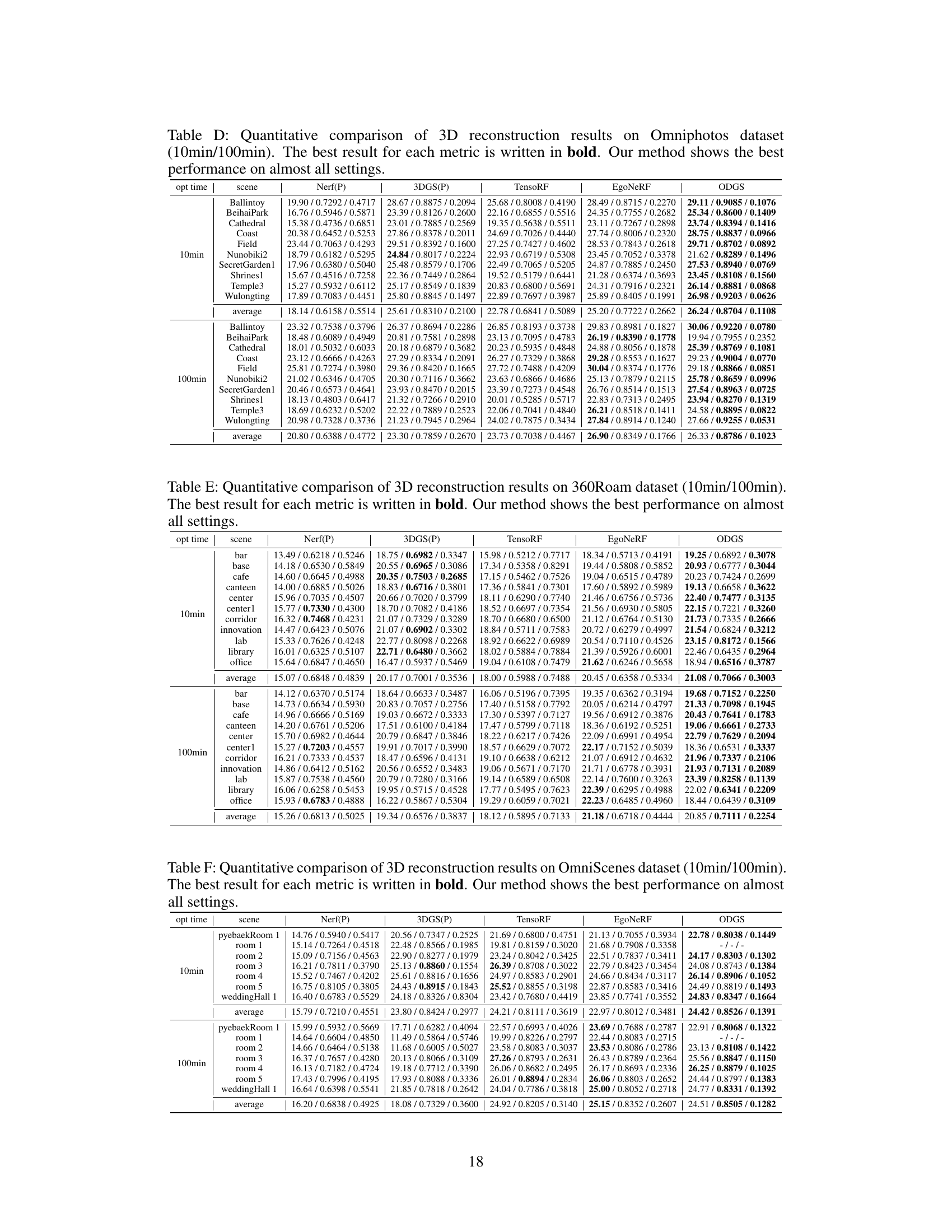

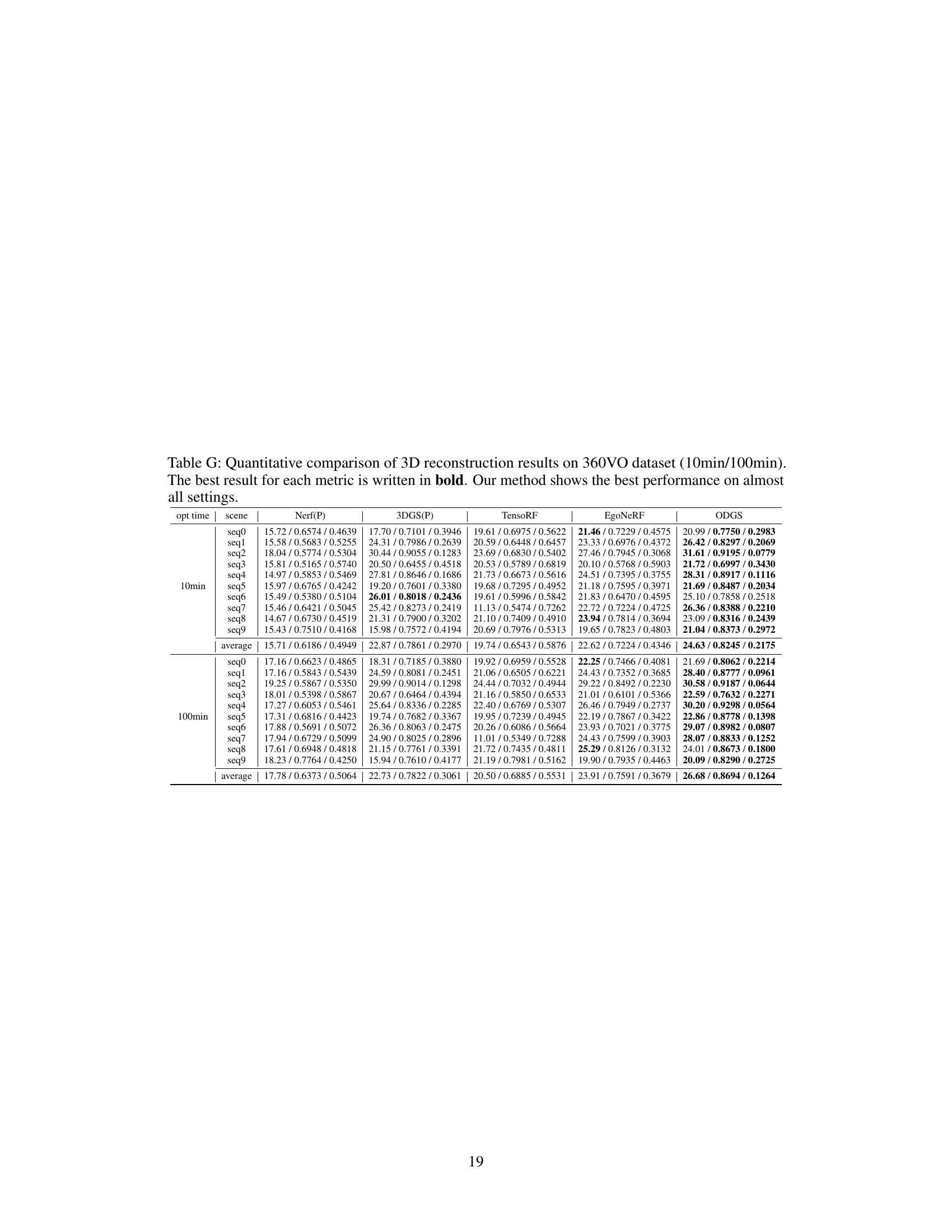

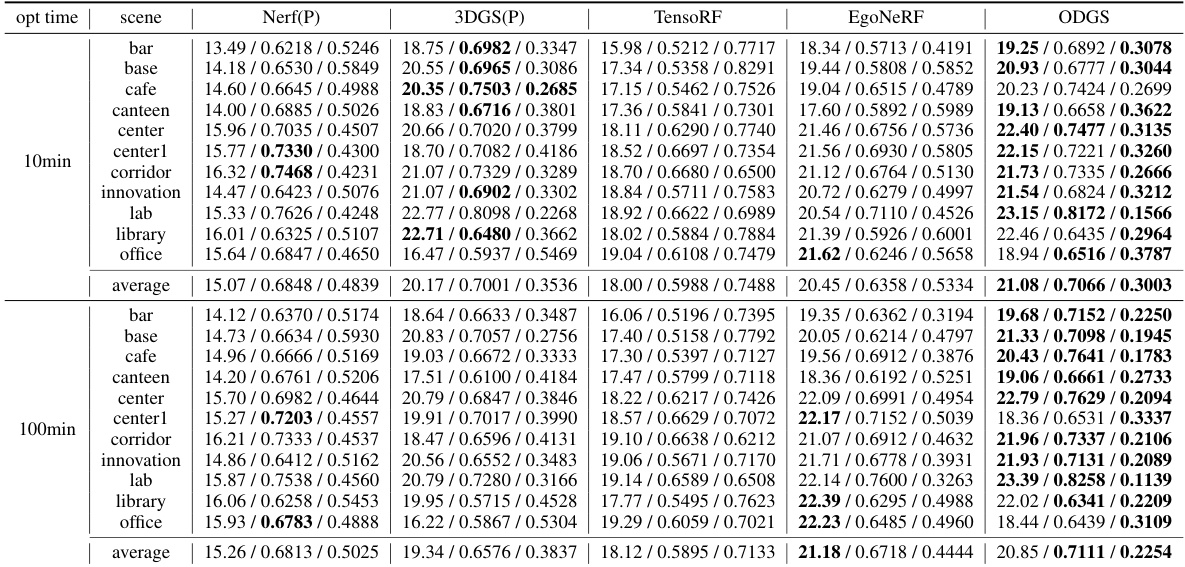

This table presents a quantitative comparison of different 3D reconstruction methods across six datasets. The methods are evaluated based on PSNR, SSIM, and LPIPS metrics. The results show ODGS outperforms other methods in most cases and is significantly faster.

In-depth insights#

ODGS: Omni-Rasterization#

ODGS: Omni-Rasterization presents a novel approach to 3D scene reconstruction from omnidirectional images, addressing limitations of existing methods. The core innovation lies in its novel rasterization pipeline, which cleverly handles the distortion inherent in projecting a 3D scene onto a spherical image. Instead of directly rasterizing onto the equirectangular projection, ODGS projects Gaussians onto tangent planes, minimizing distortion. This geometrically-sound approach is further enhanced by a CUDA-parallelized implementation, resulting in significant speed improvements over neural radiance field (NeRF) based methods. Mathematical proofs validate the assumptions within the pipeline, adding rigor to the approach. The resulting method, ODGS, demonstrates superior reconstruction quality and efficiency, especially in handling large-scale scenes with fine details. This technique represents a significant advancement in 3D reconstruction from omnidirectional images.

3DGS for Omni Images#

Adapting 3D Gaussian splatting (3DGS) for omnidirectional images presents a unique challenge due to the inherent differences in projection geometry between perspective and spherical camera models. A naive application of a perspective rasterizer leads to severe distortions. To overcome this, a novel rasterization pipeline is crucial, involving a geometrically-sound projection method onto tangent planes defined for each Gaussian. This ensures accurate representation of 3D Gaussian splats in equirectangular space and minimizes distortion, unlike existing naive adaptations. Efficient parallel processing using CUDA is vital for achieving the real-time rendering speeds that are characteristic of 3DGS. This optimized approach also requires consideration of dynamic Gaussian densification based on their elevation to compensate for distortion in equirectangular projection, maintaining high-quality reconstruction. Thus, the effective adaptation of 3DGS to omnidirectional images demands a thorough understanding of geometric projection and efficient parallel computation to achieve fast and high-quality 3D scene reconstruction.

Omnidirectional 3D#

Omnidirectional 3D scene reconstruction presents unique challenges and opportunities. Capturing an entire 360-degree view within a single image offers efficiency, but processing this data requires specialized techniques to overcome distortions inherent in projecting a spherical view onto a planar image. Existing methods based on neural radiance fields (NeRFs) struggle with the computational cost of training and rendering, while traditional structure-from-motion approaches lack the detail and speed of newer methods. This area of research is rapidly evolving, focusing on techniques like 3D Gaussian splatting to balance reconstruction quality with real-time performance. The development of efficient rasterization pipelines for omnidirectional images, optimized for parallel processing, is crucial. Geometric interpretation and mathematical verification of these pipelines are also important for ensuring accuracy and stability. Research in this field also aims to solve problems associated with handling a high volume of data and recovering fine details, especially in large, complex scenes.

ODGS: Speed & Quality#

The heading ‘ODGS: Speed & Quality’ suggests a focus on evaluating the performance of the ODGS method across two critical dimensions. Speed likely refers to the computational efficiency of the algorithm, including training time and rendering speed for novel view synthesis. Faster processing is crucial for real-time applications and scalability to large-scale datasets. Quality, on the other hand, would likely encompass metrics such as PSNR, SSIM, and LPIPS, evaluating visual fidelity and perceptual similarity to ground truth images. A high-quality reconstruction would accurately capture scene details, textures, and geometry, delivering photorealistic results. The core of this analysis would involve comparing ODGS’s speed and quality against existing state-of-the-art techniques for 3D scene reconstruction from omnidirectional images, demonstrating its superiority by achieving a better balance or exceeding performance in both aspects. The results section would likely provide quantitative and qualitative evidence to support these claims, bolstering the method’s contribution to the field.

Future of ODGS#

The future of ODGS (Omnidirectional Gaussian Splatting) is promising, given its demonstrated speed and quality advantages in 3D scene reconstruction from omnidirectional images. Further research could focus on improving the handling of extreme viewpoints near the poles, where distortion is significant, perhaps through adaptive sampling or more sophisticated projection techniques. Addressing the limitations of the local affine approximation used in projecting 3D Gaussians onto 2D images is crucial for enhancing accuracy and reducing artifacts. Integrating ODGS with other techniques, such as neural implicit representations or deep learning based methods for feature extraction and geometry refinement, would enhance its capabilities. Exploring applications in fields like virtual and augmented reality, robotics, and autonomous driving presents significant opportunities. Finally, developing more robust and efficient densification strategies will be crucial for scaling ODGS to even larger and more complex scenes, making real-time rendering of highly detailed, vast 3D environments a reality.

More visual insights#

More on figures

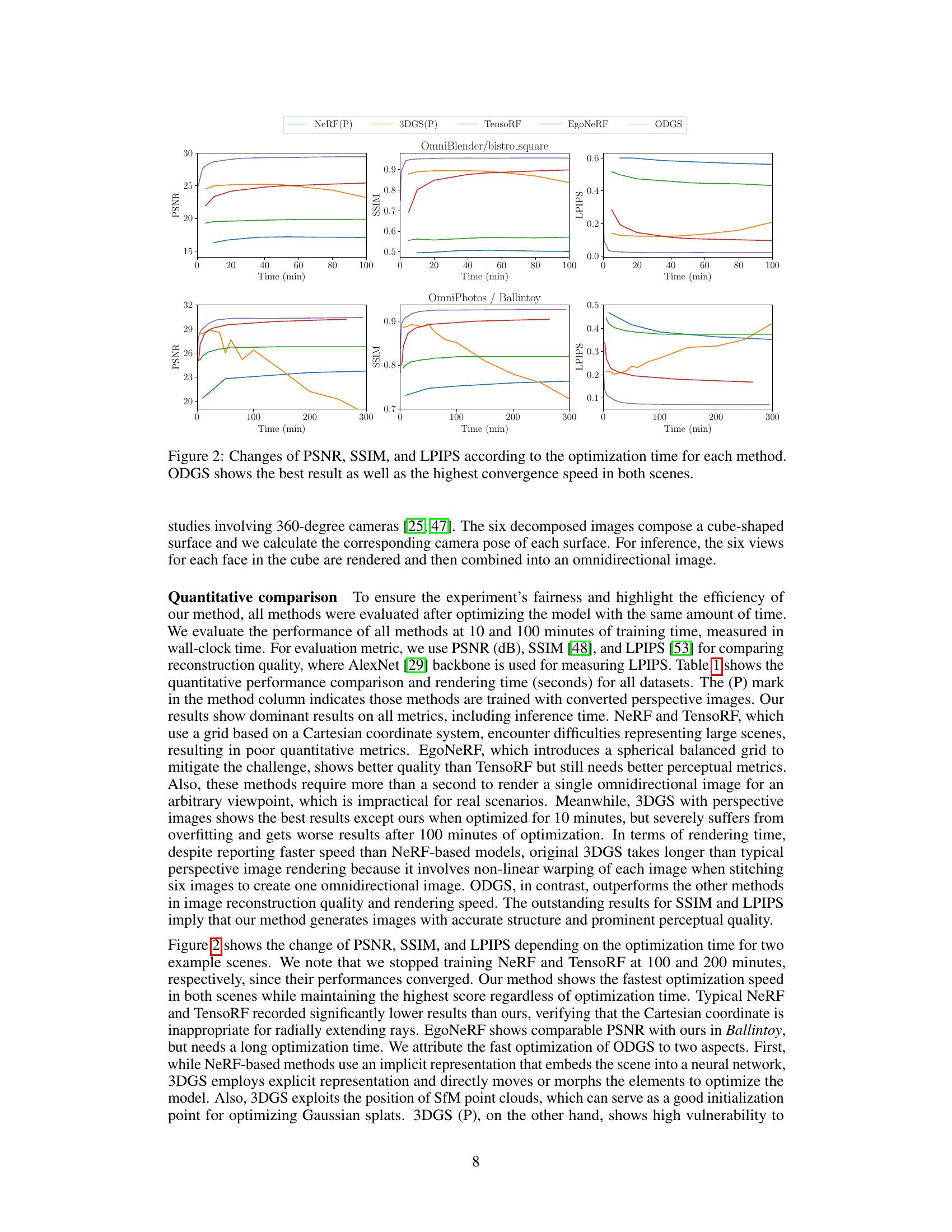

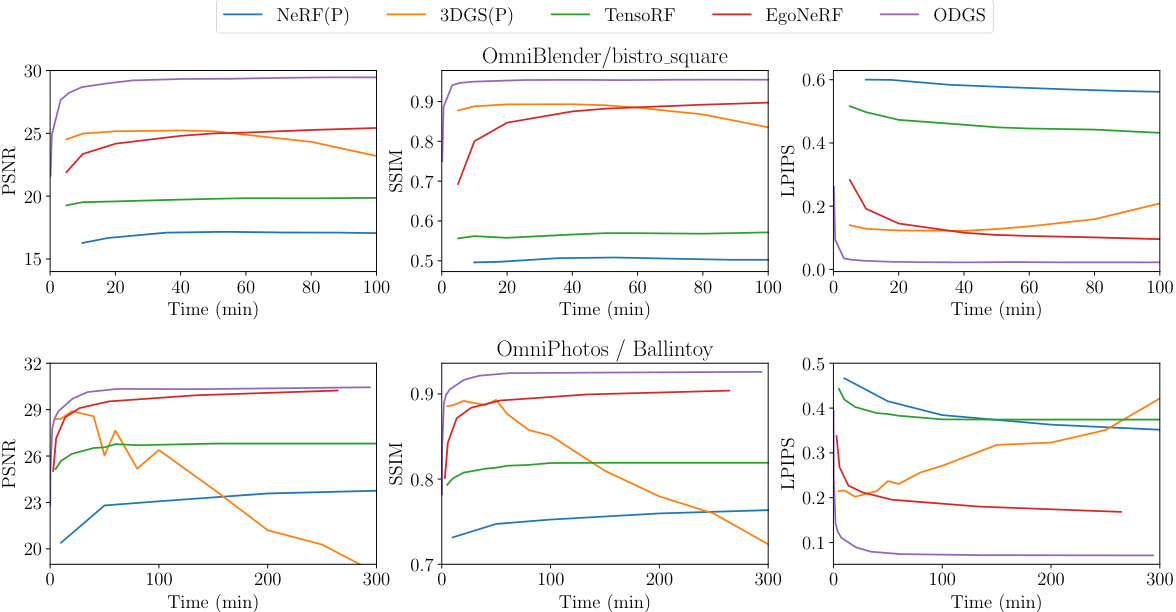

This figure shows the PSNR, SSIM, and LPIPS scores over optimization time for different methods on two example scenes (OmniBlender/bistro_square and OmniPhotos/Ballintoy). It visually demonstrates the superior performance and faster convergence speed of ODGS compared to NeRF(P), 3DGS(P), TensoRF, and EgoNeRF. The plots show how each metric changes as the optimization process continues, highlighting ODGS’s quicker improvement and better final results.

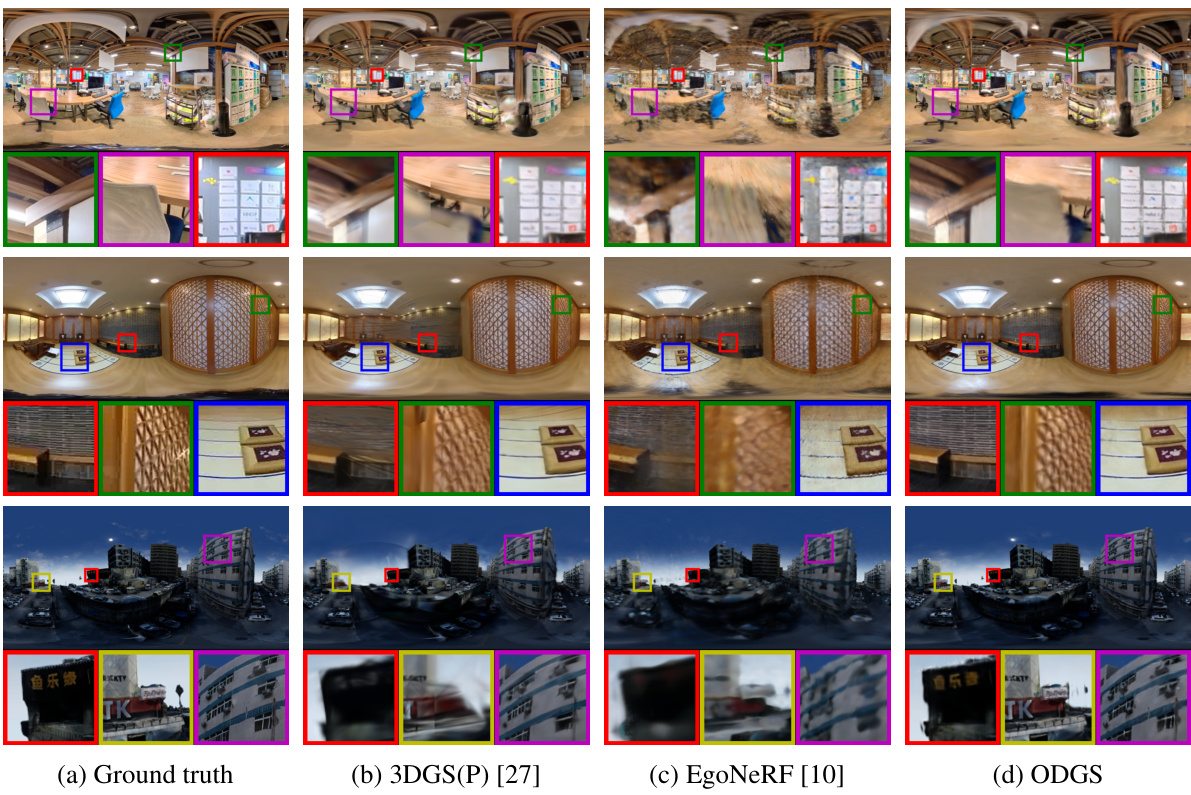

This figure presents a qualitative comparison of 3D reconstruction results between different methods (3DGS(P), EgoNeRF, and ODGS) and the ground truth for egocentric scenes. The comparison highlights the visual differences in reconstruction quality, showing how ODGS achieves a superior reconstruction in terms of sharpness and detail preservation compared to the other methods. The images show details and textures are much better reconstructed by ODGS than the other two methods. The images are from three datasets: Ricoh360, OmniBlender, and OmniPhotos, and all methods were trained for 10 minutes before inference.

This figure shows a qualitative comparison of 3D reconstruction results on three egocentric datasets (Ricoh360, OmniBlender, and OmniPhotos) using four different methods: ground truth, 3DGS(P), EgoNeRF, and ODGS. Each row represents a different scene, and each column represents a different method. The images are cropped to highlight the differences in reconstruction quality, particularly in terms of detail and accuracy. The ODGS method shows better reconstruction quality, with sharper details and fewer artifacts compared to the other methods.

This figure shows a qualitative comparison of rendered images using different Gaussian densification policies. (a) shows the full ground truth image, and (b) shows a cropped version of the ground truth used as a reference. (c) presents results obtained using a static threshold, highlighting artifacts and splits in the lanes. In contrast, (d) shows results obtained using the proposed dynamic threshold, demonstrating markedly more accurate and clean lane representation.

More on tables

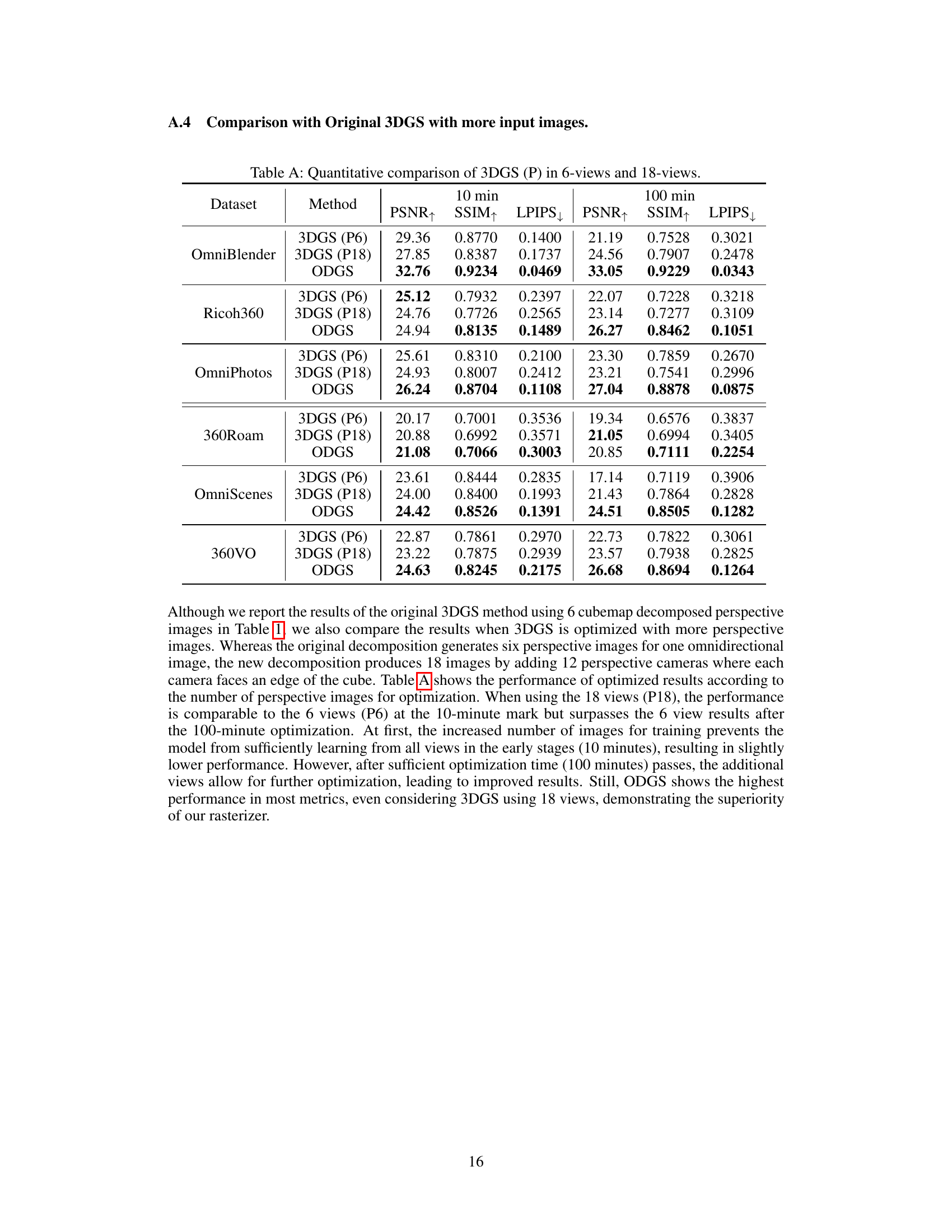

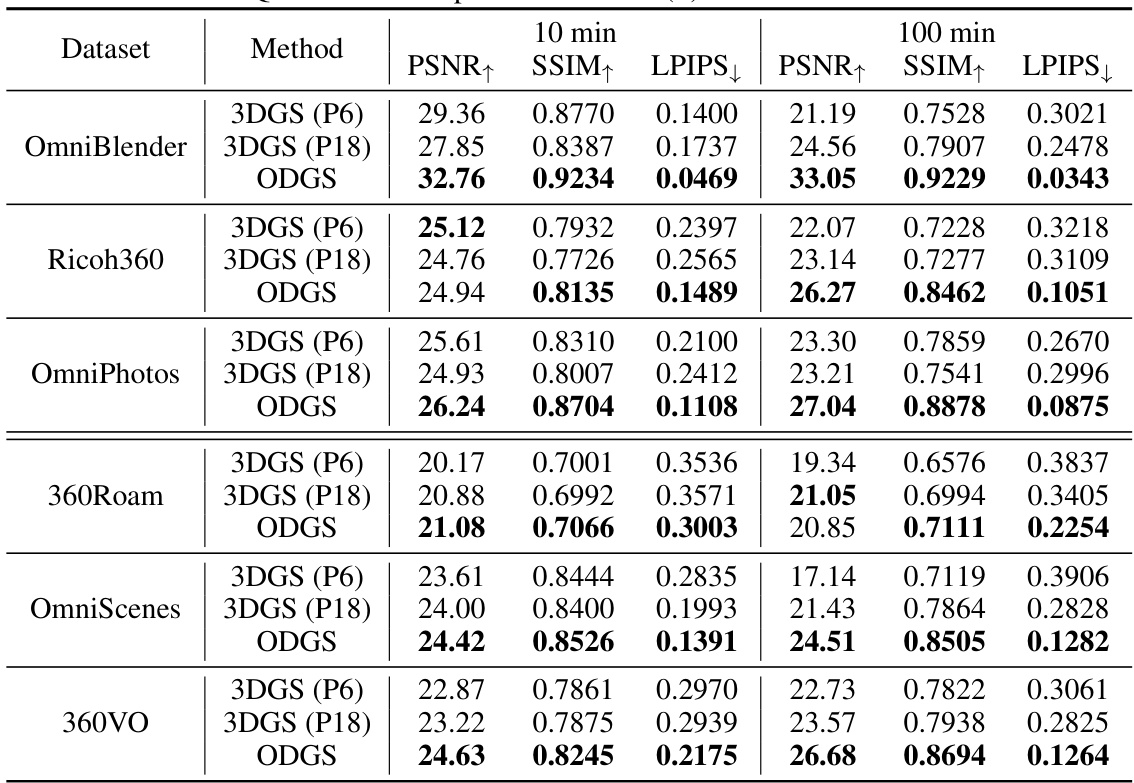

This table presents a quantitative comparison of different 3D reconstruction methods across six datasets. The methods compared are NeRF(P), 3DGS(P), TensoRF, EgoNeRF, and ODGS. The datasets are categorized into egocentric (OmniBlender, Ricoh360, OmniPhotos) and roaming (360Roam, OmniScenes, 360VO) types. For each dataset and method, the table shows the PSNR, SSIM, and LPIPS scores for both 10 minutes and 100 minutes of optimization time. The best result for each metric in each dataset is highlighted in bold. The final column indicates the optimization time in seconds. The results demonstrate that ODGS achieves superior performance across most metrics and the fastest rendering speed.

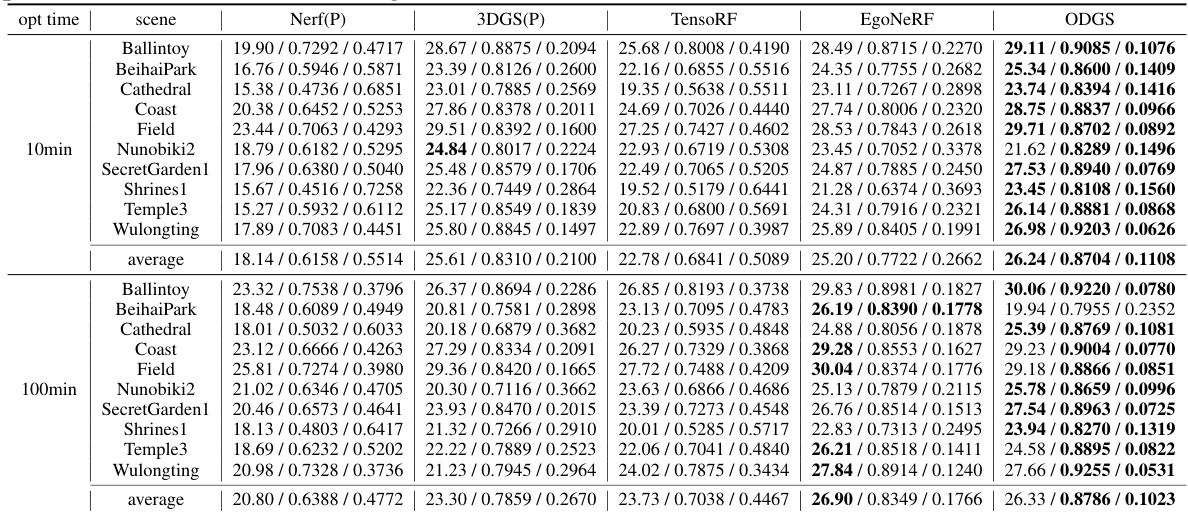

This table presents a quantitative comparison of different 3D reconstruction methods across various datasets. The metrics used for comparison include PSNR, SSIM, and LPIPS, all common image quality metrics. The table also shows the optimization time (in seconds) for each method. The ‘best’ metric for each dataset is highlighted in bold. The results demonstrate that ODGS, the proposed method, outperforms other methods in terms of reconstruction quality and rendering speed.

This table presents a quantitative comparison of different 3D reconstruction methods on six datasets (three egocentric and three roaming). The methods compared include NeRF, 3DGS (with perspective images), TensoRF, EgoNeRF and the proposed method ODGS. For each dataset and method, the table shows the PSNR, SSIM, and LPIPS scores for 10 and 100 minutes of optimization time, along with the total optimization time in seconds. The best metric for each dataset is highlighted in bold. The results demonstrate the superior performance of ODGS in terms of both reconstruction quality and speed.

This table presents a quantitative comparison of different 3D reconstruction methods across various datasets. The metrics used for comparison include PSNR, SSIM, and LPIPS, which assess various aspects of image quality. The table is organized to show the performance of each method at 10 and 100 minutes of training, along with the optimization and rendering times. The best performance for each metric on each dataset is highlighted in bold, clearly illustrating the superiority of the proposed ODGS method in terms of both reconstruction quality and speed.

This table presents a quantitative comparison of several 3D reconstruction methods across six different datasets. The methods compared include NeRF (perspective), 3DGS (perspective), TensoRF, EgoNeRF, and the proposed ODGS method. For each dataset and method, the PSNR, SSIM, and LPIPS metrics are reported for both 10 and 100 minutes of optimization time. The best performing method for each metric in each dataset is highlighted in bold. The table also includes the optimization time in seconds for each method.

This table presents a quantitative comparison of different 3D reconstruction methods on six datasets: three egocentric datasets (OmniBlender, Ricoh360, OmniPhotos) and three roaming datasets (360Roam, OmniScenes, 360VO). For each dataset and method, it shows the PSNR, SSIM, and LPIPS scores after 10 and 100 minutes of training. It also includes the optimization time in seconds. The best metric for each dataset is highlighted in bold. The table demonstrates the superiority of ODGS, the proposed method, in terms of both reconstruction quality and rendering speed.

This table presents a quantitative comparison of different 3D reconstruction methods on various datasets. Metrics such as PSNR, SSIM, and LPIPS are used to evaluate the reconstruction quality of each method. The table includes both short (10 minutes) and long (100 minutes) optimization times for a comprehensive comparison. The best performing method for each dataset and metric is highlighted in bold. The table also shows the rendering speed for each method. Overall, this table demonstrates the superior performance of ODGS (the proposed method) in terms of both reconstruction quality and speed.

Full paper#