↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Online machine learning, while powerful, raises serious privacy concerns when training on sensitive data. Differential Privacy (DP) aims to address this by mathematically quantifying data leakage; however, achieving strong privacy often comes at the cost of reduced accuracy. This paper explores the inherent trade-offs in online learning under DP, focusing on three distinct types of constraints: no DP, pure DP, and approximate DP. Prior research primarily focused on offline settings, but online learning presents unique challenges due to the sequential nature of data arrival and the potential for adaptive adversaries.

The researchers investigate the fundamental limits of DP in online learning algorithms using various hypothesis classes. Their analysis reveals a significant difference between pure and approximate DP, particularly when dealing with adaptive adversaries who can tailor attacks based on the learning algorithm’s past predictions. They prove that under pure DP, adaptive adversaries can force online learners to make infinitely many mistakes. In contrast, approximate DP enables online learning under adaptive attacks, showcasing the importance of adopting approximate DP methods in practice. This work contributes a deeper understanding of the cost of privacy in online learning and provides valuable guidelines for designing more effective privacy-preserving machine learning models.

Key Takeaways#

Why does it matter?#

This paper is crucial because it reveals fundamental limitations of differential privacy (DP) in online machine learning, a rapidly growing field with significant privacy concerns. The findings challenge existing assumptions and highlight the need for more robust privacy-preserving algorithms, particularly for adaptive adversarial settings. This opens avenues for further research into alternative privacy mechanisms and more efficient learning strategies under privacy constraints.

Visual Insights#

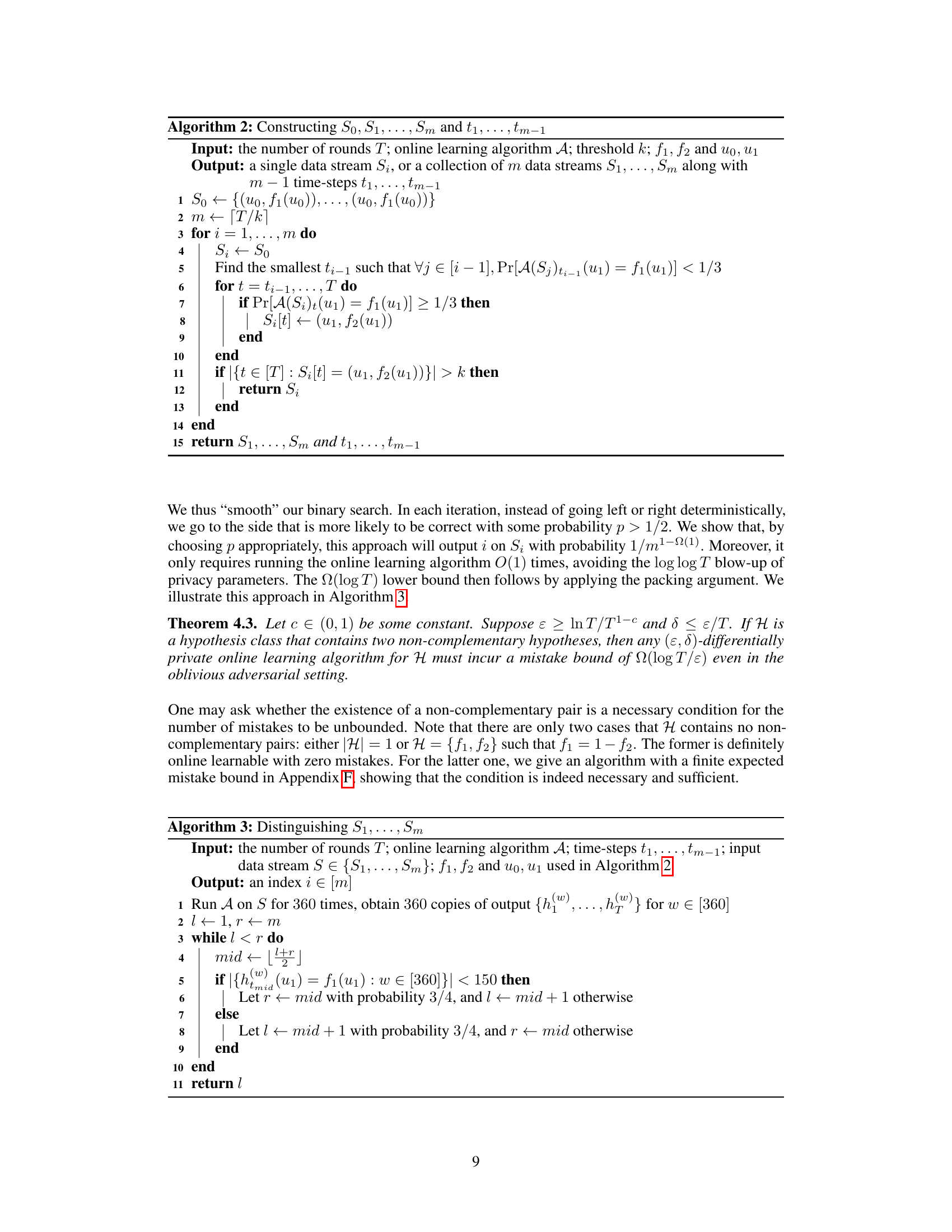

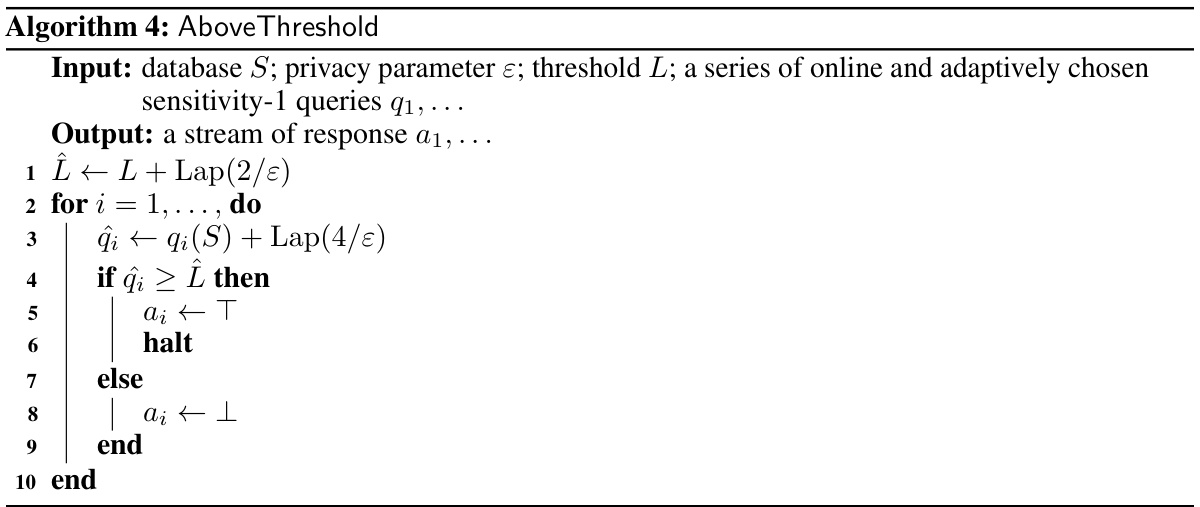

This algorithm takes a database, privacy parameter, threshold, and a series of online adaptively chosen sensitivity-1 queries as inputs. It adds Laplace noise to each query and checks if it exceeds the noisy threshold. If so, it outputs T and halts; otherwise, it outputs 1 and continues to the next query. This algorithm is used to ensure differential privacy.

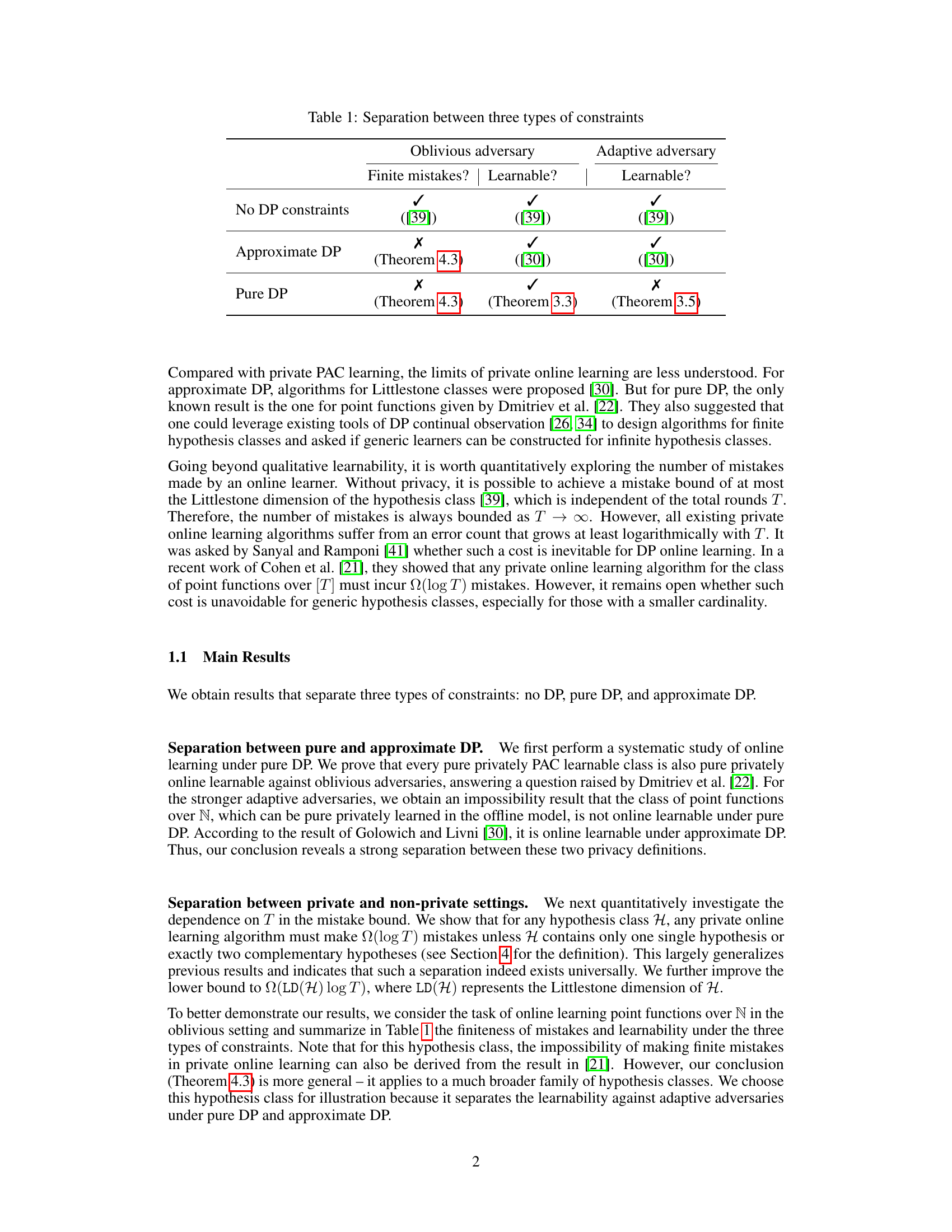

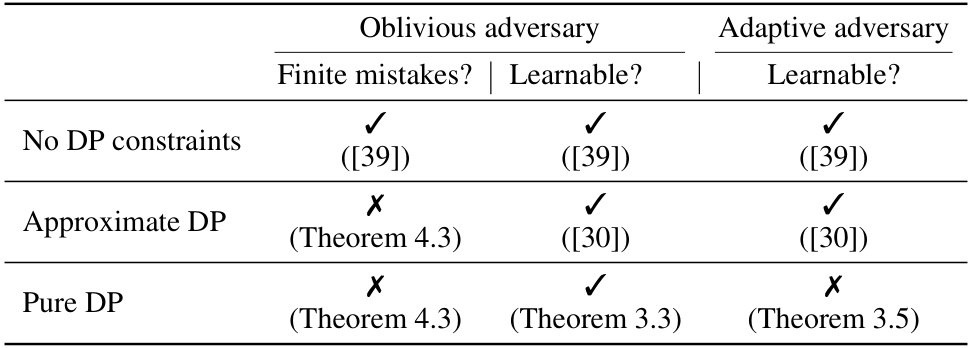

The table summarizes the learnability results under three types of constraints: no differential privacy (DP), pure DP, and approximate DP. It shows whether a finite number of mistakes is achievable and whether the hypothesis class is learnable against both oblivious and adaptive adversaries. The results highlight the differences in learnability between pure DP and approximate DP, especially when facing adaptive adversaries, and the significant gap between private and non-private online learning.

Full paper#