↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Multispectral and hyperspectral image fusion (MHIF) aims to combine high spatial resolution multispectral images with high spectral resolution hyperspectral images to generate high-quality, high-resolution hyperspectral images. Existing methods, including those based on implicit neural representations (INR), often struggle with preserving high-frequency details and achieving global perceptual consistency. This leads to suboptimal fusion results with loss of important information.

To address these issues, the researchers introduce FeINFN. This innovative framework leverages Fourier analysis to capture and enhance high-frequency information and a novel spatial-frequency interactive decoder to combine information effectively. The results demonstrate significant improvements over state-of-the-art methods in objective metrics and visual quality on benchmark datasets, proving the effectiveness of their proposed approach. The theoretical proof of the Gabor wavelet activation’s time-frequency tightness property further strengthens the method’s foundation.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in image fusion and computer vision due to its novel approach to hyperspectral and multispectral image fusion. FeINFN offers significant improvements in image quality, addresses limitations of existing methods, and opens new avenues for research in implicit neural representations and Fourier analysis within the image processing domain. Its findings are relevant to current trends in high-resolution image generation and computer vision applications.

Visual Insights#

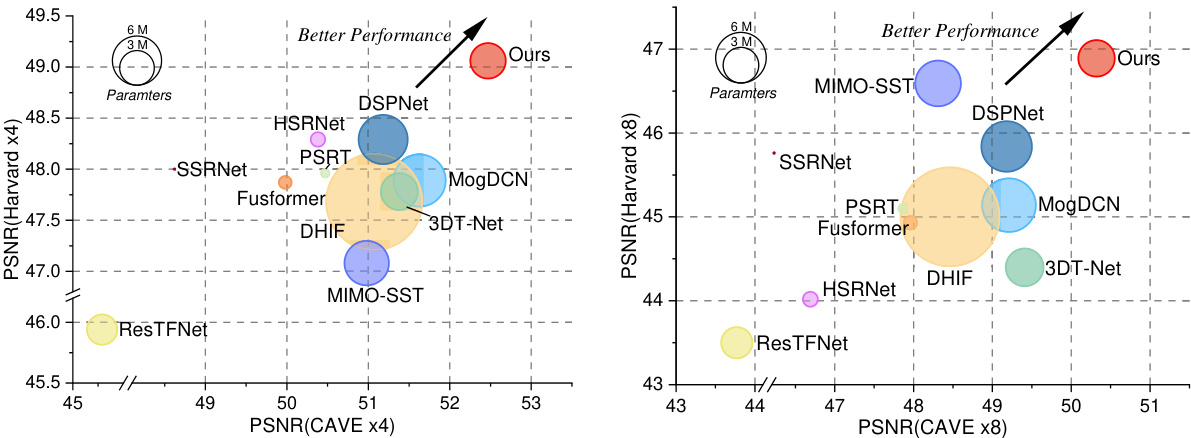

This figure compares the performance of the proposed FeINFN model with other state-of-the-art methods on two benchmark datasets, CAVE and Harvard, at different upscaling factors (×4 and ×8). The x and y axes represent PSNR values for the CAVE and Harvard datasets respectively. The size of each circle corresponds to the number of parameters in each model, and the position of the circle represents the model’s performance in terms of PSNR. The figure shows that FeINFN achieves superior performance with fewer parameters compared to other models.

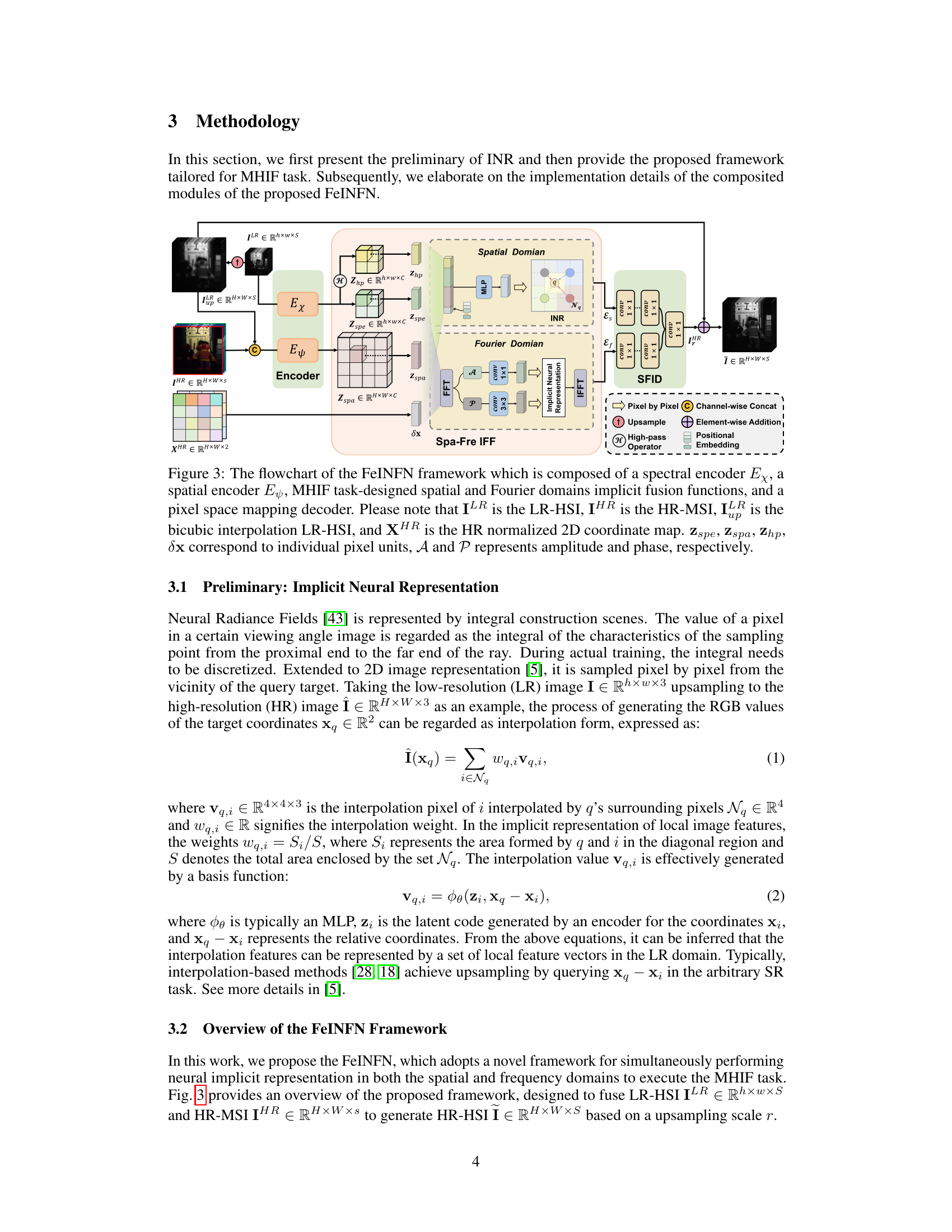

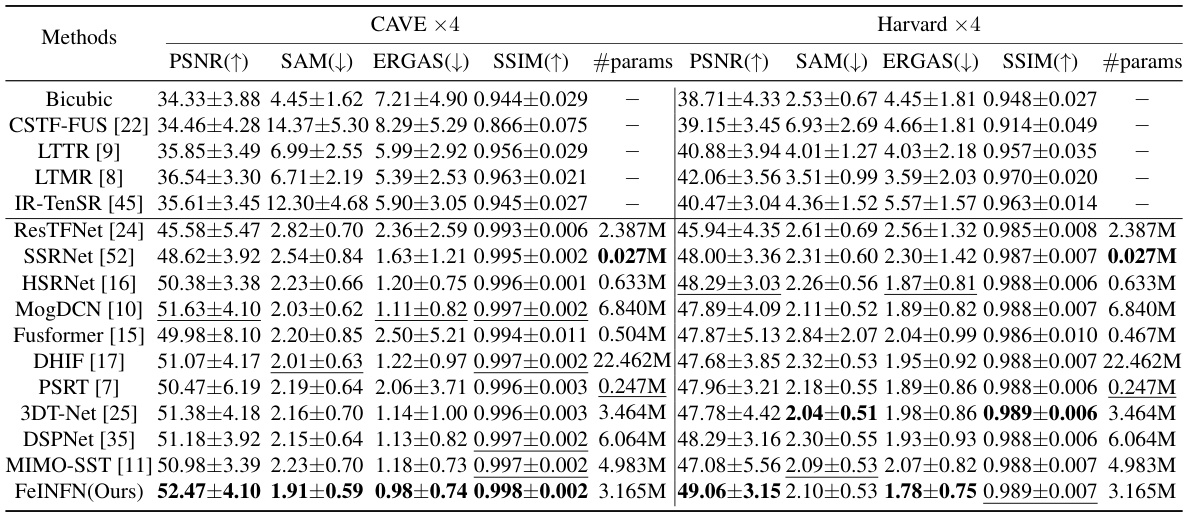

This table presents a quantitative comparison of different image fusion methods on two benchmark datasets (CAVE and Harvard) with a scaling factor of 4. For each method, the average and standard deviation of PSNR, SAM, ERGAS, and SSIM are reported, along with the number of parameters (in millions). The best performing method in each metric is highlighted in bold, and the second-best is underlined.

In-depth insights#

FeINFN Framework#

The FeINFN framework, a novel approach for multispectral and hyperspectral image fusion, cleverly integrates implicit neural representations with Fourier transforms to overcome limitations of traditional INR methods. It leverages the unique property that Fourier amplitudes of high and low-resolution images are similar, while phases differ significantly. This characteristic is exploited by using separate spatial and frequency implicit fusion functions, allowing FeINFN to capture high-frequency information and enhance global perceptual capabilities. The framework’s core is a spatial-frequency interactive decoder employing Gabor wavelet activation, theoretically shown to possess optimal bandwidths for feature learning, improving the quality of the fused images. This unique combination of spatial and frequency domain processing, along with the time-frequency tightness property of the Gabor wavelet activation, leads to state-of-the-art performance. The design of FeINFN directly addresses the issues of high-frequency loss and limited receptive field in standard implicit neural representations, resulting in a significant advancement in hyperspectral image fusion.

Fourier Fusion#

The concept of “Fourier Fusion” in the context of multispectral and hyperspectral image fusion is a novel approach that leverages the power of the Fourier transform to enhance the fusion process. The core idea is that while the amplitude of the spectral information from low- and high-resolution images may be similar, their phase information differs significantly. This difference contains crucial high-frequency details which are often lost in traditional fusion methods. By performing fusion in the frequency domain, specifically targeting the phase component, this method aims to better preserve and integrate the high-frequency information. This technique not only enhances the fusion process by capturing global spatial properties but also effectively addresses the limitations of implicit neural representation networks (INRs) which are prone to neglecting high-frequency information. The use of a spatial-frequency interactive decoder with Gabor wavelet activation function further supports this improved integration, ensuring that the spatial and frequency components work harmoniously to produce a high-quality fused image. This approach showcases a clear advantage over traditional methods, yielding state-of-the-art performance and demonstrating a potentially significant advancement in hyperspectral imaging applications.

Gabor Wavelet Decoder#

A Gabor Wavelet Decoder, in the context of hyperspectral image fusion, is a particularly interesting choice for reconstructing high-resolution images from latent feature maps. The core idea is leveraging the time-frequency localization properties of Gabor wavelets to effectively combine spatial and frequency information. Unlike simpler activation functions, Gabor wavelets are complex-valued, offering a richer representation of signals. This is particularly useful for hyperspectral data, which contains intricate spectral details and subtle frequency variations. This decoder design implies a more sophisticated fusion process, going beyond simple addition of spatial and frequency information. It is likely that the decoder’s architecture incorporates mechanisms that harness the tightness property of the Gabor wavelet transform to maintain optimal bandwidths throughout the decoding process, which can result in more accurate and precise reconstruction of the hyperspectral image. This approach theoretically leads to better preservation of high-frequency details that are often lost during standard downsampling and fusion processes, resulting in improved sharpness and visual quality of the final fused image. The use of a complex-valued Gabor wavelet also implies the potential for modeling phase information which can be crucial for accurate reconstruction of the spectral details.

MHIF Experiments#

A hypothetical ‘MHIF Experiments’ section would delve into the empirical evaluation of a novel multispectral and hyperspectral image fusion (MHIF) method. It would likely begin by describing the datasets used, highlighting their characteristics (spatial and spectral resolutions, number of bands, etc.) and suitability for the task. Benchmark datasets, like CAVE and Harvard, are commonly used and would be expected. The experimental setup would detail the evaluation metrics employed (e.g., PSNR, SSIM, SAM, ERGAS), explaining their relevance to MHIF. The results would then be presented, possibly using tables and figures to compare the performance of the proposed method against state-of-the-art techniques. Ablation studies would likely be included to assess the impact of individual components of the method. Finally, the discussion would interpret the results, acknowledging limitations and suggesting future research directions. A well-structured ‘MHIF Experiments’ section would provide strong evidence of the method’s efficacy and robustness.

INR Limitations#

Implicit neural representations (INRs) demonstrate significant potential in image fusion tasks, but their inherent limitations warrant attention. A core weakness is the tendency of INRs to struggle with high-frequency information, potentially leading to blurry or less detailed results. This limitation stems from INRs’ reliance on smooth, continuous representations that may not effectively capture sharp edges or fine details. Another important issue is the limited receptive field inherent in standard INRs, which restricts their ability to capture global context and contextual relationships within images. INRs predominantly focus on local interactions, hindering their perception of broader spatial structures crucial for a coherent representation of fused images. Furthermore, training instability can be problematic as INRs often involve complex optimization landscapes that make convergence challenging. Addressing these limitations is crucial for improving the quality and reliability of INR-based image fusion, and this is specifically tackled by the novel method FeINFN presented in this paper.

More visual insights#

More on figures

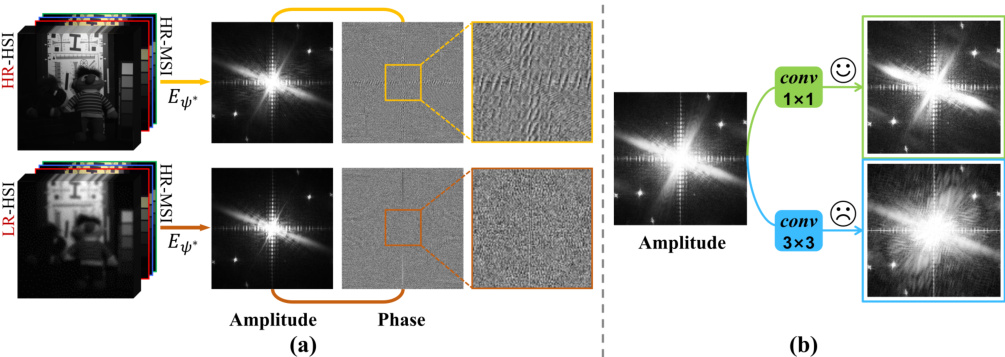

This figure shows that the amplitudes of latent codes from the encoder fed by HR-HSI and LR-HSI (combined with HR-MSI) are similar, but their phases are different. The authors explain that this is because the high-frequency information is mainly contained in the phase component. The figure also compares the effect of 3x3 and 1x1 convolutions on spectrum leakage, illustrating that 1x1 convolution is preferred to avoid such issues. This supports the design choice of the proposed method, which processes the amplitude and phase components separately in the frequency domain.

This figure illustrates the architecture of the proposed Fourier-enhanced Implicit Neural Fusion Network (FeINFN). It shows the flow of data through the network, starting with the low-resolution hyperspectral image (LR-HSI) and high-resolution multispectral image (HR-MSI) as inputs. The inputs are processed by spectral and spatial encoders, and then fed into a spatial-frequency implicit fusion function (Spa-Fre IFF) which operates in both spatial and frequency domains. The output of the Spa-Fre IFF is then decoded using a spatial-frequency interactive decoder (SFID) to produce the final high-resolution hyperspectral image (HR-HSI). Key components of the architecture are highlighted, including the use of FFT and IFFT for frequency domain processing, and the use of a Gabor wavelet activation function in the decoder.

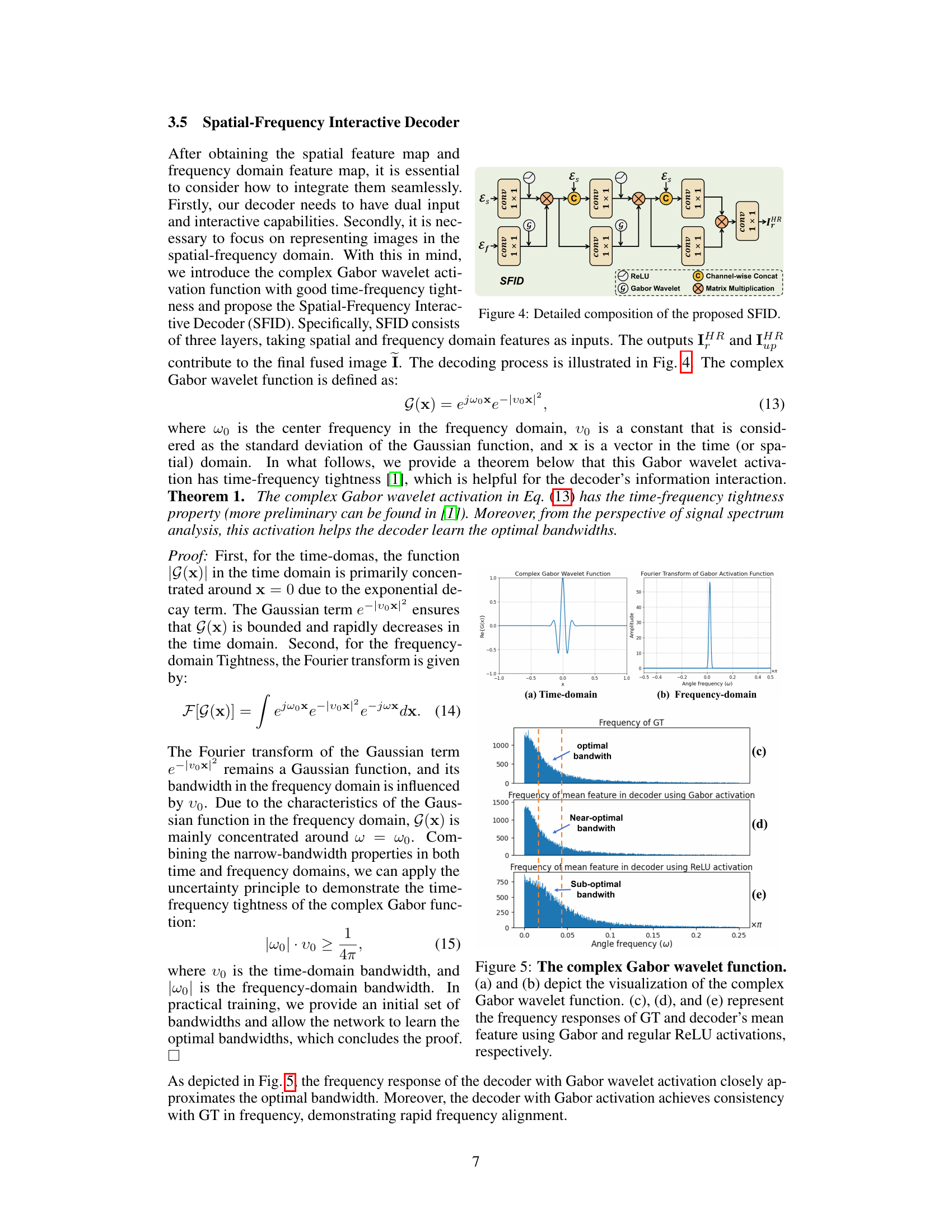

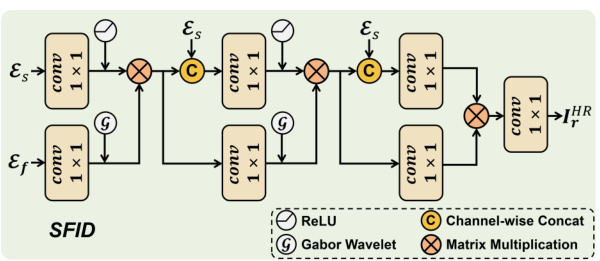

The figure shows the architecture of the Spatial-Frequency Interactive Decoder (SFID), a crucial component of the FeINFN framework. The SFID takes spatial features (Es) and frequency features (Ef) as inputs. It processes these features through three layers. Each layer employs 1x1 convolutions, Gabor wavelet activation functions, channel-wise concatenation (C), and matrix multiplications (⊗) to integrate spatial and frequency information. The final output of the SFID is a refined residual image (IHR) that is added to the upsampled low-resolution image to obtain the final high-resolution hyperspectral image. The diagram clearly depicts the flow of information and the operations performed at each stage.

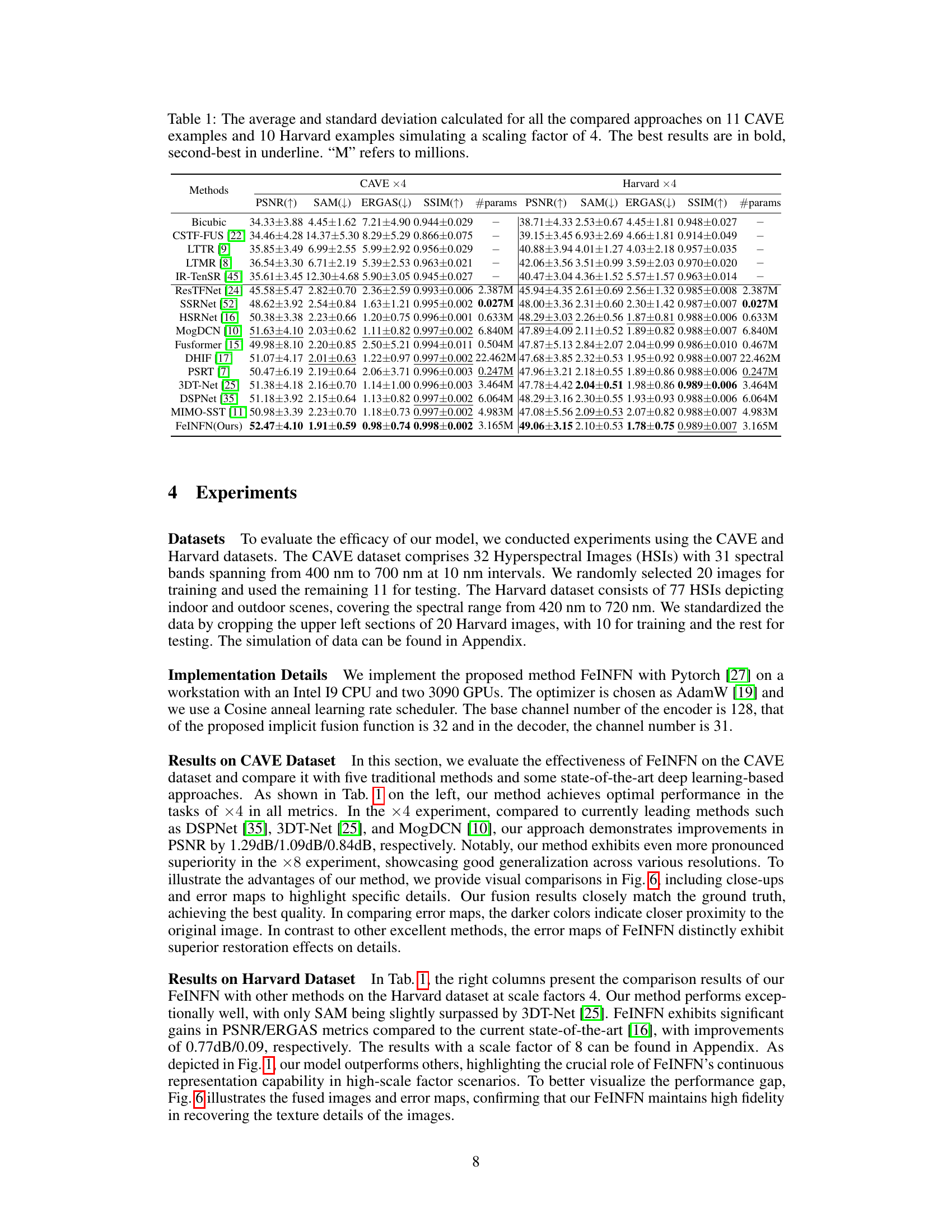

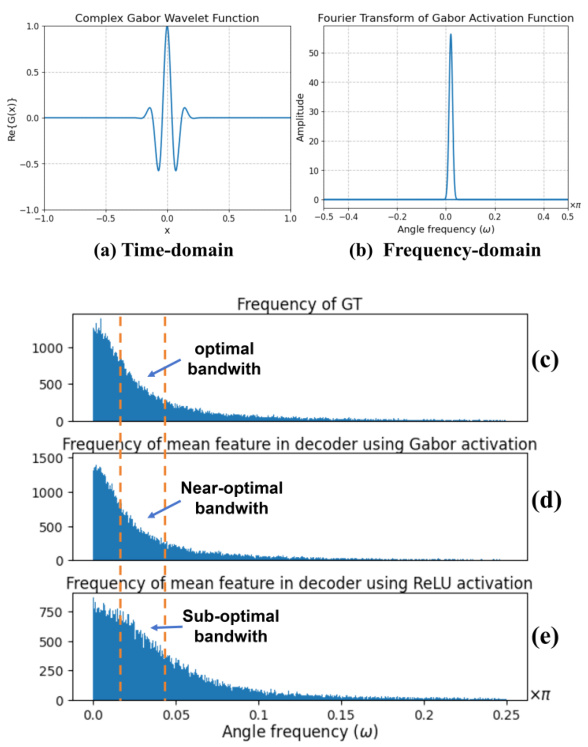

This figure visualizes the complex Gabor wavelet function in both time and frequency domains, highlighting its time-frequency tightness property. It compares the frequency responses of the ground truth (GT) and decoder features when using Gabor and ReLU activations, demonstrating the Gabor wavelet’s superior ability to capture optimal bandwidths.

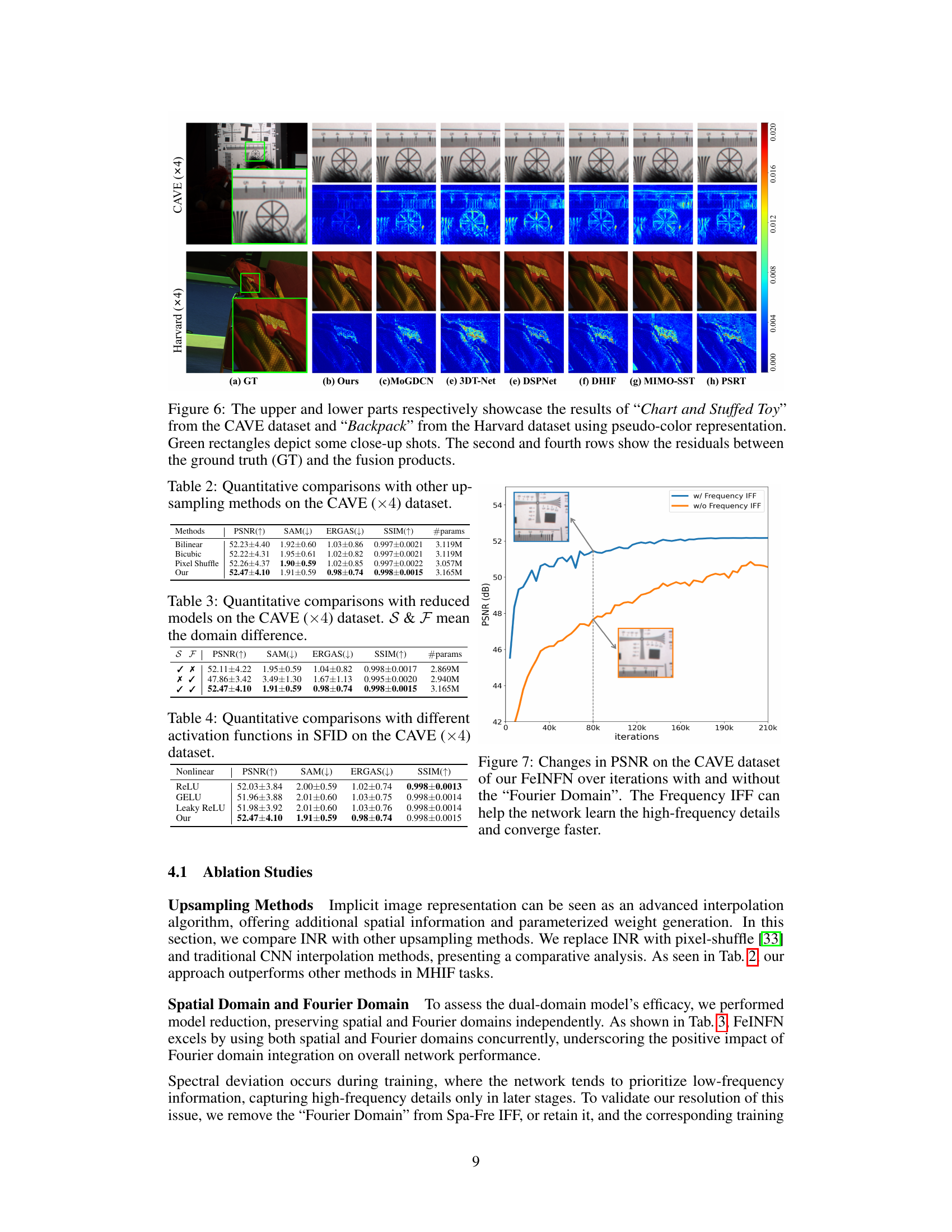

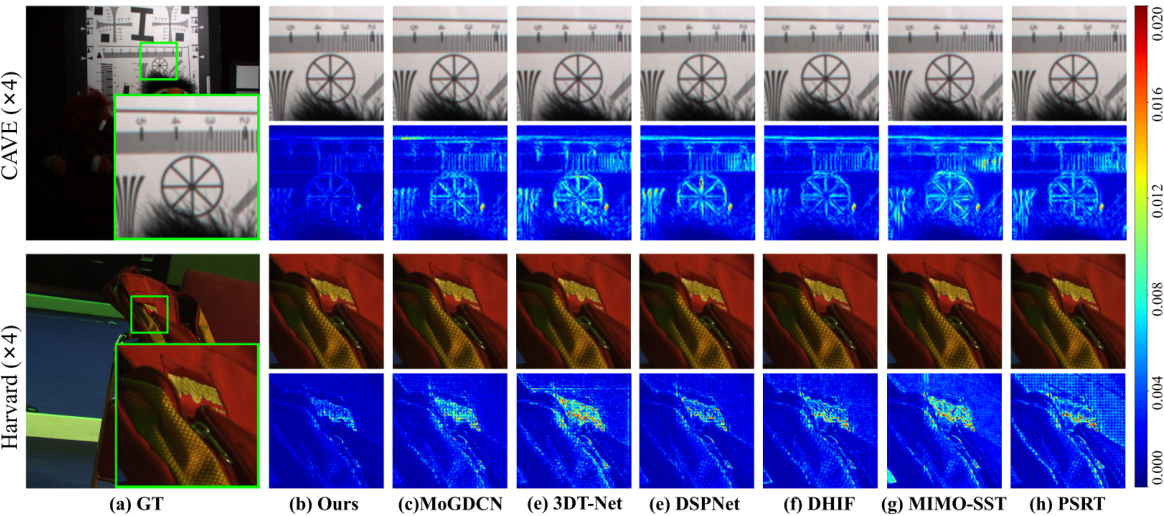

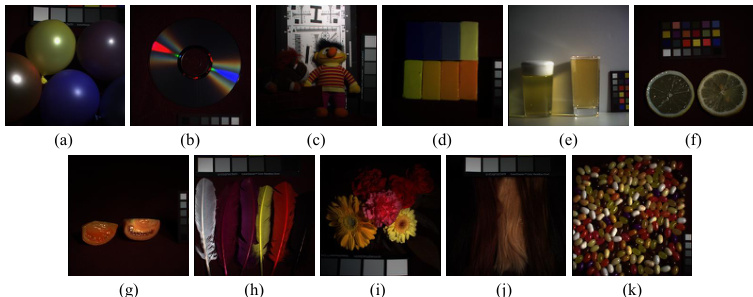

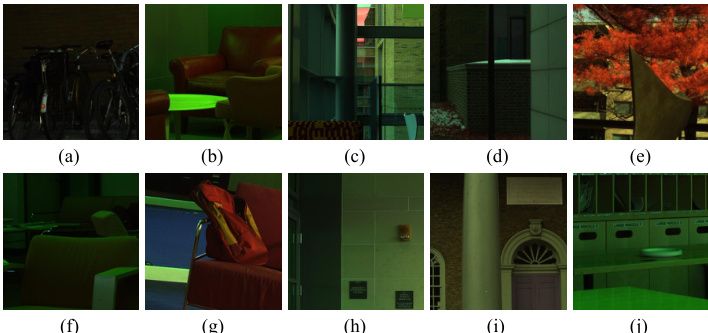

This figure compares the visual results of the proposed FeINFN method against other state-of-the-art methods for hyperspectral image fusion. The top row shows the fusion results for the ‘Chart and Stuffed Toy’ image from the CAVE dataset, while the bottom row shows the fusion results for the ‘Backpack’ image from the Harvard dataset. The images are displayed using a pseudo-color representation for better visualization of spectral information. The green boxes highlight specific areas for a closer comparison. The second and fourth rows show the error maps, which represent the difference between the fused images and the ground truth. Darker colors in the error maps indicate a smaller difference and better fusion performance.

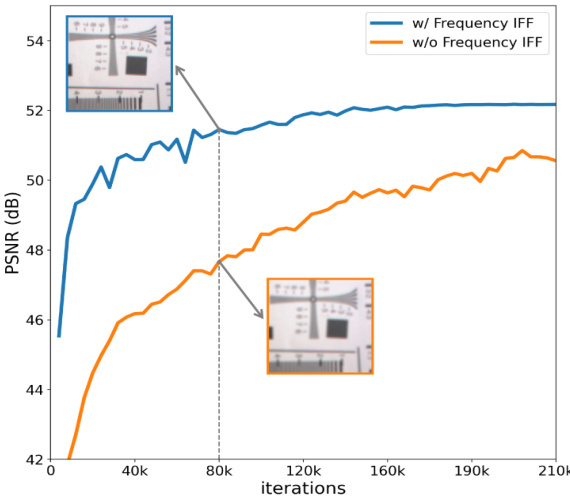

This figure shows the PSNR (Peak Signal-to-Noise Ratio) values over training iterations for the proposed FeINFN model with and without the Fourier domain component. The blue line represents the model incorporating the spatial and frequency implicit fusion function (Spa-Fre IFF) which includes the Fourier transform for frequency feature fusion. The orange line represents the model without the frequency domain component. The figure demonstrates that incorporating the Fourier domain component significantly improves PSNR, implying better performance in capturing high-frequency details and faster convergence during training.

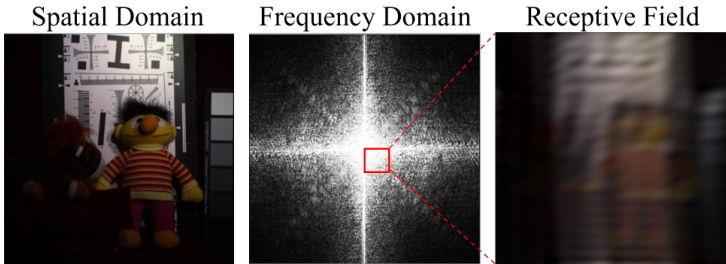

This figure demonstrates the global impact of convolution in the frequency domain on the spatial domain. It shows a spatial domain image, its corresponding frequency domain representation (a spectrogram), and the result of a localized convolution in the frequency domain. The localized convolution in the frequency domain results in a global change to the spatial domain image, highlighting how operations in the frequency domain affect the entire spatial image, expanding the receptive field of the implicit neural representation.

This figure compares the visual results of the proposed FeINFN method with other state-of-the-art methods on two benchmark datasets: CAVE and Harvard. It shows both the fused images and the residual error maps (differences between the fused image and the ground truth) for several sample images. The close-up shots highlight the details and differences between different fusion methods.

This figure compares the visual results of the proposed FeINFN model against other state-of-the-art methods on the CAVE and Harvard datasets. It shows the fused images, alongside close-up views highlighting details, and the residual images which demonstrate the differences between the model’s output and the ground truth. The pseudo-color representation aids in the visualization of spectral details.

This figure compares the error maps produced by the proposed FeINFN model using Gabor wavelet activation against those using ReLU and GELU activations. The Gabor wavelet method shows significantly lower error and better spatial compactness, indicating its effectiveness in representing high-frequency details and edges.

More on tables

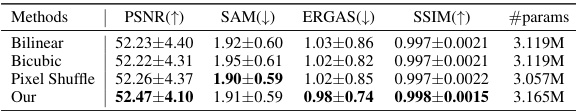

This table compares the performance of different upsampling methods on the CAVE dataset with a scaling factor of 4. The metrics used are PSNR (higher is better), SAM (lower is better), ERGAS (lower is better), and SSIM (higher is better). The number of parameters (#params) for each method is also listed. It shows that the proposed FeINFN method outperforms the other techniques.

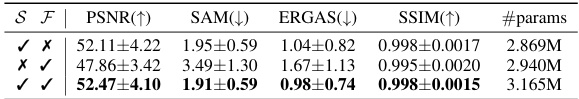

This table presents a quantitative comparison of different model variations of the FeINFN on the CAVE dataset with a scaling factor of 4. It shows the impact of removing either the spatial or frequency component from the fusion function on the overall performance, measured by PSNR, SAM, ERGAS, and SSIM. The results highlight the importance of using both components for optimal performance.

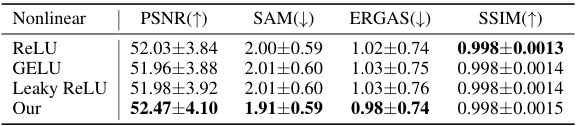

This table presents a quantitative comparison of different activation functions used in the Spatial-Frequency Interactive Decoder (SFID) component of the proposed FeINFN model. The comparison is performed on the CAVE dataset with a scaling factor of 4. The metrics used for comparison include PSNR (Peak Signal-to-Noise Ratio), SAM (Spectral Angle Mapper), ERGAS (Erreur Relative Globale Adimensionnelle de Synthèse), and SSIM (Structural SIMilarity). The ‘Our’ row represents the results obtained using the proposed Gabor wavelet activation function, while the other rows show the results for ReLU, GELU, and Leaky ReLU activation functions.

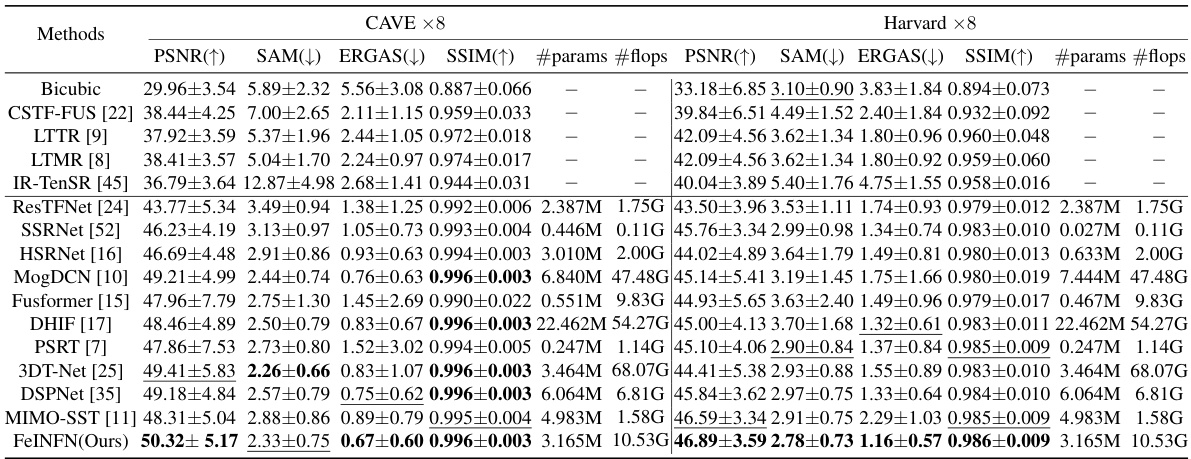

This table presents a quantitative comparison of different image fusion methods on two benchmark datasets (CAVE and Harvard). The methods are evaluated using four metrics: PSNR, SAM, ERGAS, and SSIM. The results are shown for a scaling factor of 4 (meaning the low-resolution input was upscaled to four times its original size). The best and second-best results for each metric are highlighted.

This table presents the ablation study results, comparing the performance of the FeINFN model with different combinations of spatial and frequency domains. The metrics used are PSNR, SAM, ERGAS, and SSIM. The results show the impact of including the frequency domain in the fusion process.

Full paper#