↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Temporal Knowledge Graph Reasoning (TKGR) is crucial for understanding how real-world relationships evolve over time. However, existing methods either lack interpretability (deep learning) or struggle to learn complex temporal patterns (rule-based). Large Language Models (LLMs) show promise but are resource-intensive to update and function as ‘black boxes’.

The proposed method, LLM-DA, leverages LLMs to generate interpretable temporal rules from historical data. A dynamic adaptation strategy updates these rules with the latest events. This makes reasoning more accurate and adaptable to evolving knowledge. Experimental results show that LLM-DA significantly outperforms existing methods on several datasets, without requiring LLM fine-tuning.

Key Takeaways#

Why does it matter?#

This paper is important because it presents LLM-DA, a novel and robust framework for temporal knowledge graph reasoning (TKGR). It addresses the limitations of existing deep learning and rule-based approaches by leveraging the strengths of LLMs while mitigating their weaknesses, such as lack of interpretability and difficulty in updating knowledge. This opens new avenues for research in interpretable reasoning and dynamic knowledge adaptation in various knowledge-rich applications. The proposed method also significantly improves accuracy without fine-tuning, saving valuable computational resources.

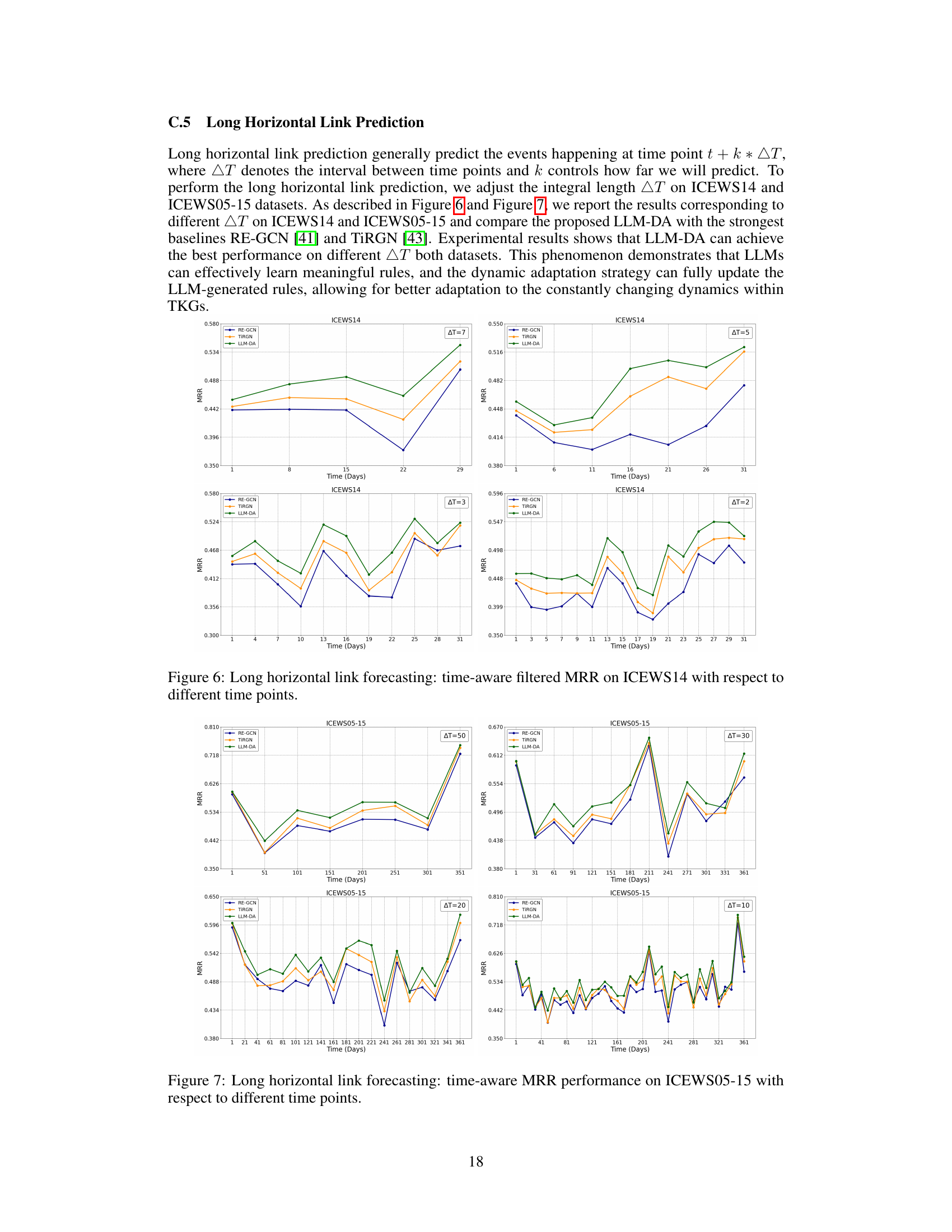

Visual Insights#

The figure illustrates the LLM-DA framework. It shows how LLM-DA uses LLMs to generate rules from historical data. These rules are then dynamically updated using current data, aiming to better match the actual distribution of relations in the Temporal Knowledge Graph (TKG). The diagram visually represents the shift in data distributions from historical to current data, demonstrating how the dynamically updated rules better model the current relations.

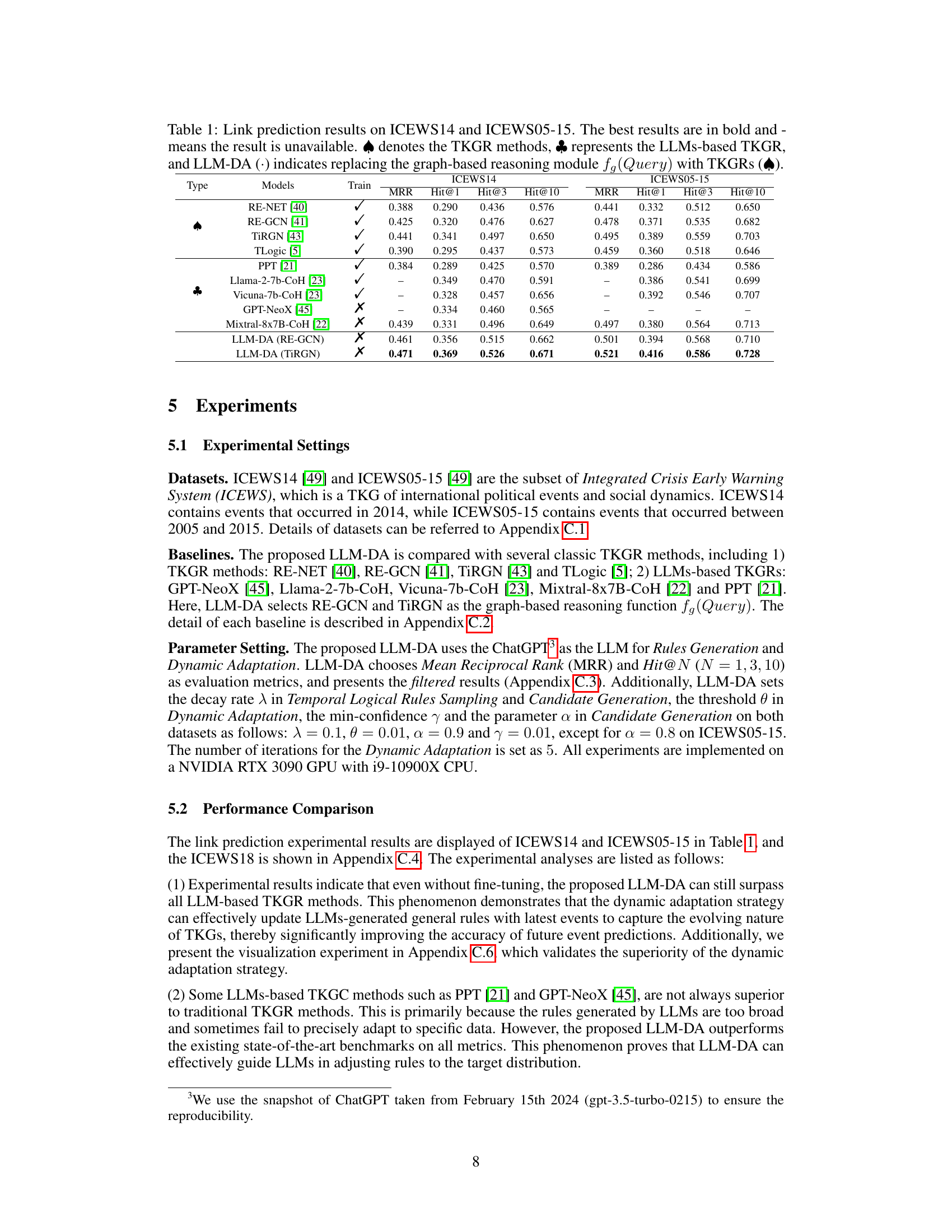

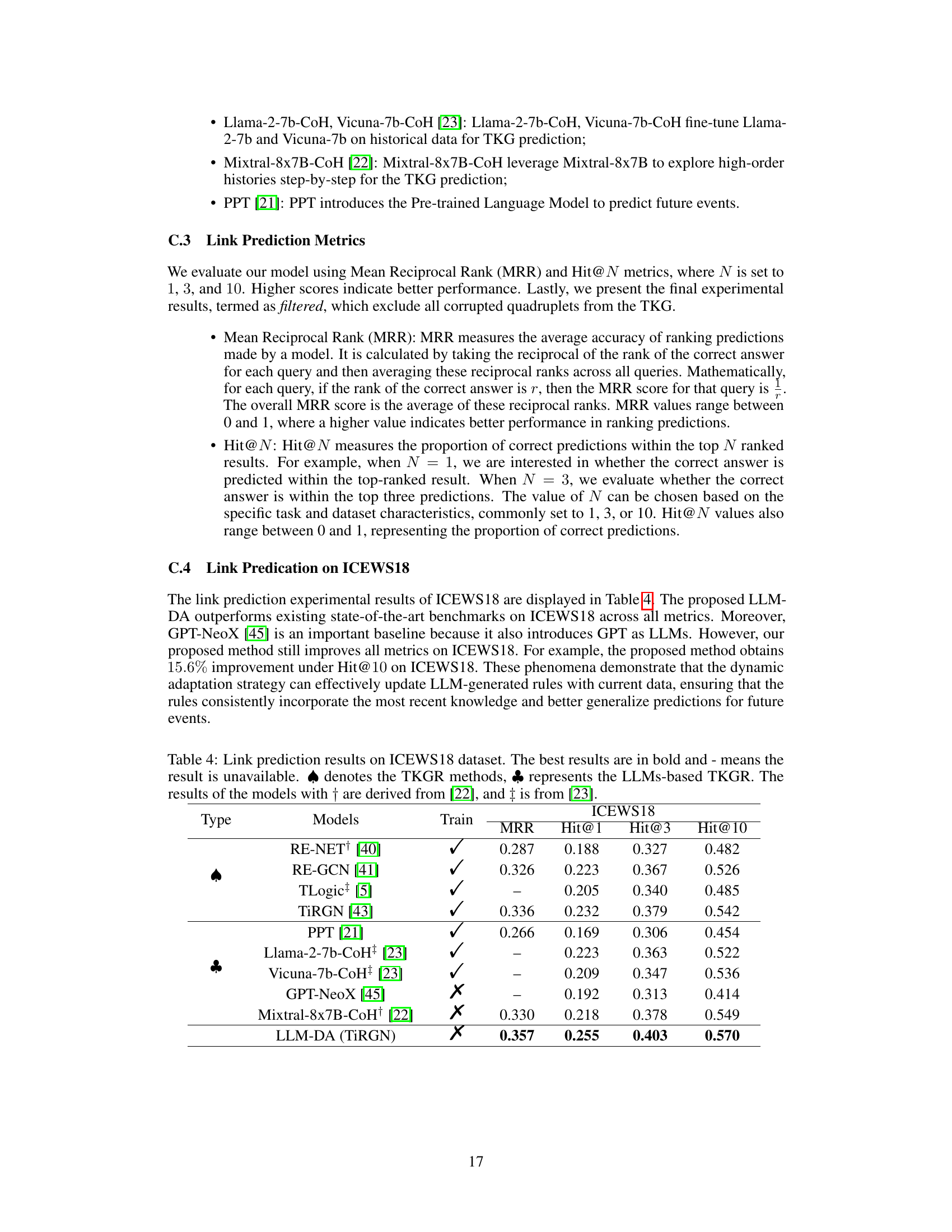

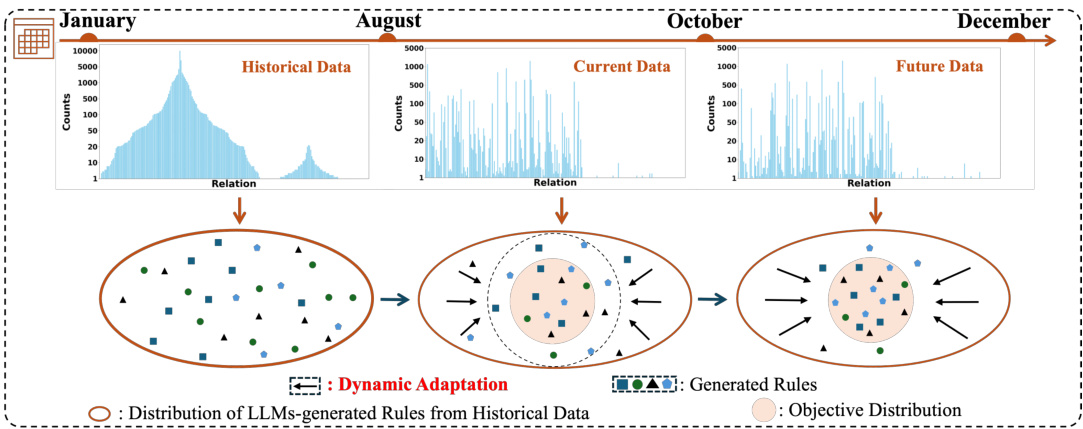

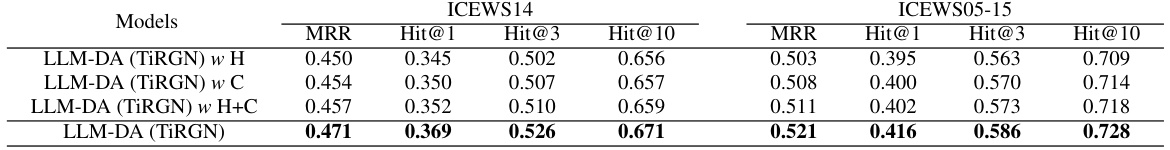

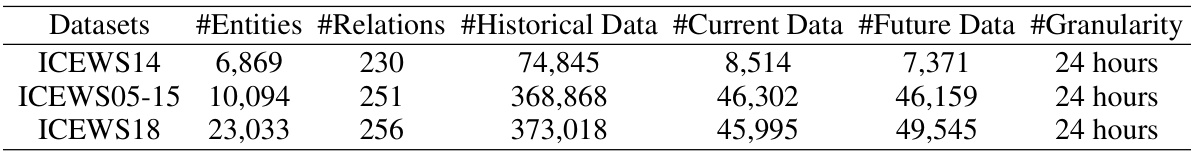

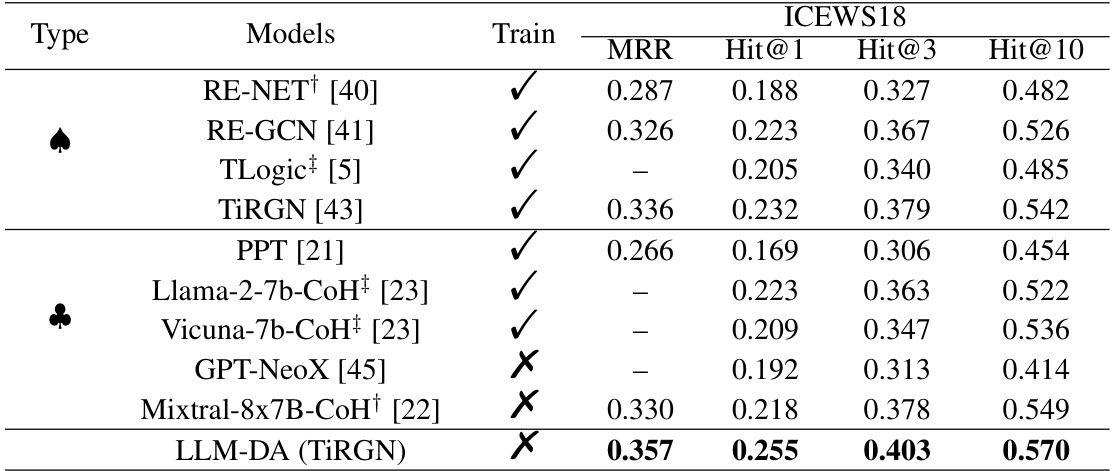

This table presents the link prediction results of several TKGR methods and LLMs-based TKGR methods on two datasets, ICEWS14 and ICEWS05-15. The performance is evaluated using Mean Reciprocal Rank (MRR) and Hit@N (N=1,3,10) metrics. LLM-DA, a novel method proposed in the paper, is compared with traditional methods and other LLM-based methods. The table highlights the performance improvements of LLM-DA by showing that it achieves the best results in most cases, even without fine-tuning the LLMs.

In-depth insights#

LLM-DA: Dynamic TKGR#

LLM-DA, a dynamic approach to Temporal Knowledge Graph Reasoning (TKGR), leverages the power of Large Language Models (LLMs) to extract and dynamically adapt temporal logical rules. Instead of relying solely on static rules or deep learning models, which often suffer from interpretability issues, LLM-DA uses LLMs to analyze historical data, uncovering temporal patterns that inform rule generation. The dynamic adaptation strategy is key; it continuously updates these rules with the latest information from the evolving TKG, ensuring the model’s predictions reflect the most current knowledge. This approach aims to improve reasoning accuracy and interpretability while reducing the need for resource-intensive LLM fine-tuning, thereby creating a more robust and adaptable TKGR framework. The core of LLM-DA’s innovation is its ability to combine the power of LLMs with the efficiency and interpretability of rule-based reasoning. By dynamically updating rules rather than the LLM itself, LLM-DA addresses the challenges of updating LLMs for the constantly changing TKGs.

Rule-Based Reasoning#

Rule-based reasoning, within the context of temporal knowledge graph reasoning (TKGR), leverages explicitly defined rules to perform inference. Unlike deep learning approaches that often function as black boxes, rule-based methods offer enhanced interpretability, making the reasoning process transparent and understandable. This characteristic is particularly valuable for TKGR, where understanding the temporal dependencies and logical connections driving predictions is crucial. However, a key challenge lies in the effective learning and adaptation of these rules. Manually crafting comprehensive and accurate rules is labor-intensive and often infeasible for large-scale TKGs. Therefore, efficient methods for automatically extracting or learning temporal logical rules from historical data are essential, and further, mechanisms for dynamically updating the rule-base to account for evolving knowledge in the TKG are necessary for maintaining accuracy over time. The effectiveness of rule-based reasoning hinges on the quality of the extracted rules and the ability to adapt to new information. Consequently, methods combining rule-based reasoning with other techniques such as graph-based reasoning are often more robust and accurate.

Dynamic Adaptation#

The concept of ‘Dynamic Adaptation’ in the context of a research paper likely revolves around the system’s ability to adjust and learn from new data without extensive retraining. This is especially crucial when dealing with constantly evolving information, such as in temporal knowledge graphs where new events and relationships are continuously added. A successful dynamic adaptation mechanism would be efficient, requiring minimal computational resources, and robust, capable of handling noisy or incomplete data. Interpretability is another key aspect; the method should allow for an understanding of how the system adapts and why certain changes are made. The effectiveness of dynamic adaptation is ultimately evaluated on its ability to improve the system’s performance on future predictions by incorporating the most up-to-date knowledge. A potential approach might involve incrementally updating a model’s parameters or rules based on the incoming data, rather than completely retraining the entire model. This allows for a continuous learning process while keeping the computational cost manageable.

Interpretable Reasoning#

Interpretable reasoning, in the context of Temporal Knowledge Graph Reasoning (TKGR), is a crucial aspect often overlooked in favor of accuracy. Many deep learning approaches excel at prediction but lack transparency, making it difficult to understand the underlying logic. This lack of interpretability hinders trust and limits the potential for human-in-the-loop refinement and debugging. The use of Large Language Models (LLMs) offers a potential path toward more interpretable TKGR. LLMs excel at natural language processing and can potentially extract and express the reasoning process used to derive inferences in a human-understandable form. By translating the complex relationships within a Temporal Knowledge Graph into a sequence of logical rules, LLMs can significantly improve the transparency of the system. However, challenges remain. LLMs themselves function as “black boxes,” and the rules they generate must be carefully vetted for accuracy and consistency to ensure reliability. The dynamic adaptation strategy implemented to update rules based on current data is critical for maintaining relevance and interpretability over time. Finally, careful selection of the most salient relations within the TKG is crucial to focus the LLM’s attention and improve the quality of the generated rules. Therefore, the combination of LLMs with a robust rule extraction and update mechanism is promising for building trustworthy, high-performing, and interpretable TKGR systems.

Future of LLMs in TKGR#

The future of LLMs in Temporal Knowledge Graph Reasoning (TKGR) is exceptionally promising, driven by LLMs’ inherent strengths in understanding complex temporal relationships and vast knowledge stores. Improved interpretability will be crucial; current “black box” nature hinders trust and adoption. Future research should focus on developing methods to extract and explain LLM reasoning, possibly through rule extraction or attention mechanism analysis. Dynamic adaptation strategies are vital given the constantly evolving nature of TKGs; integrating real-time updates seamlessly into LLM reasoning will be key. Efficient rule generation and refinement techniques are needed to avoid the computational cost of full LLM retraining. Furthermore, exploration of hybrid approaches, combining LLMs’ strengths with existing TKGR methods, will unlock novel capabilities, leading to more robust and accurate temporal reasoning.

More visual insights#

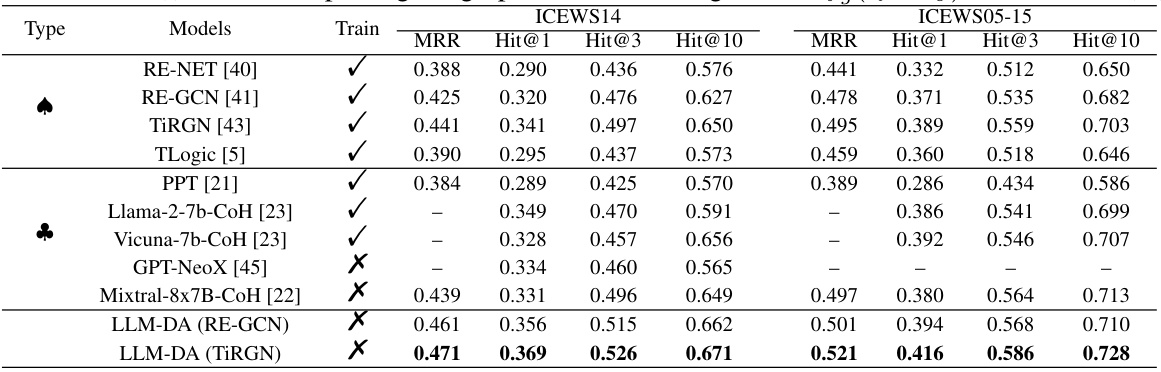

More on figures

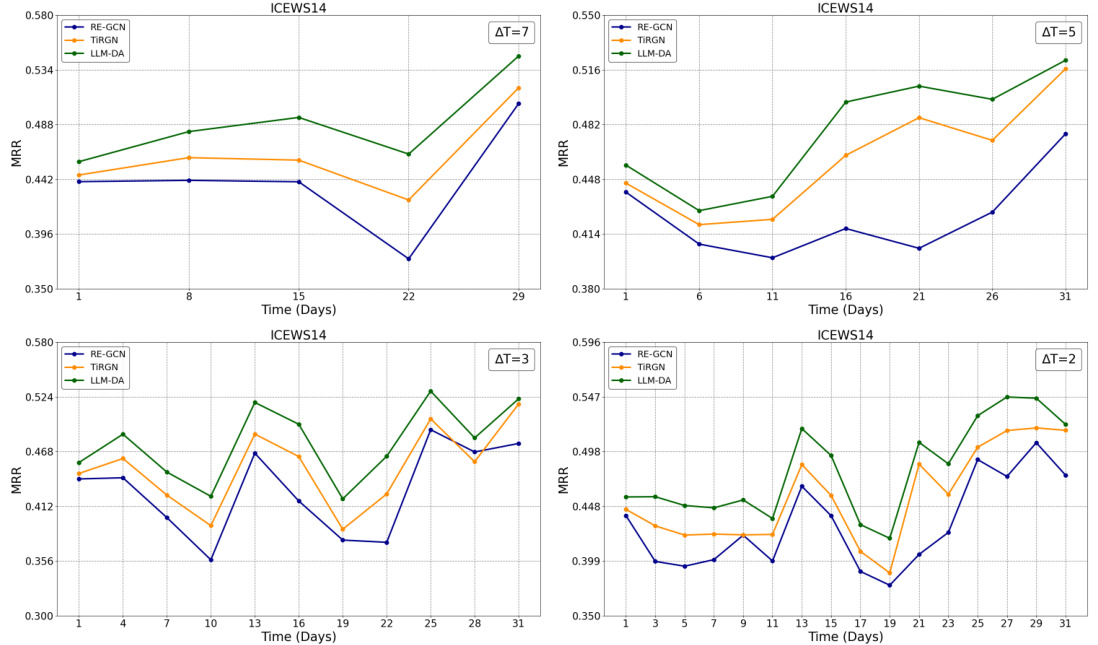

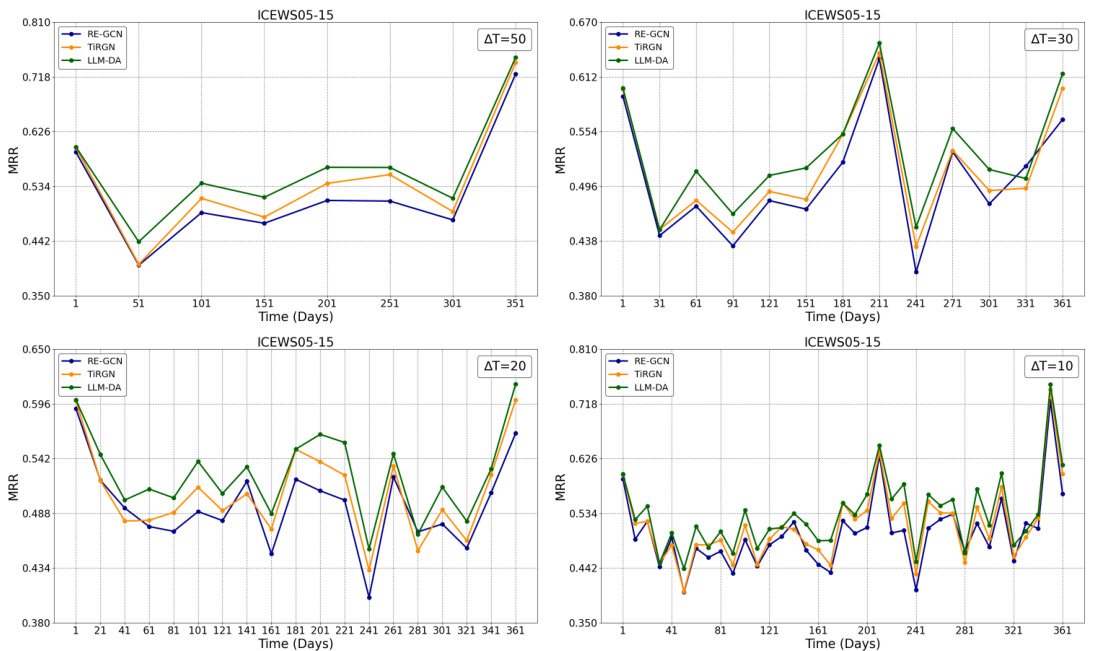

The figure illustrates the framework of the LLM-DA method. It shows four main stages: 1) Temporal Logical Rules Sampling, where constrained Markovian random walks are used to extract temporal logical rules from historical data; 2) Rule Generation, where LLMs generate general rules based on historical data and top-k relevant relations selected by a contextual selector; 3) Dynamic Adaptation, where LLMs update the generated rules using current data; and 4) Candidate Reasoning, where rule-based and graph-based reasoning are combined to generate candidates for the final answer. The entire process is illustrated with an example query and answer.

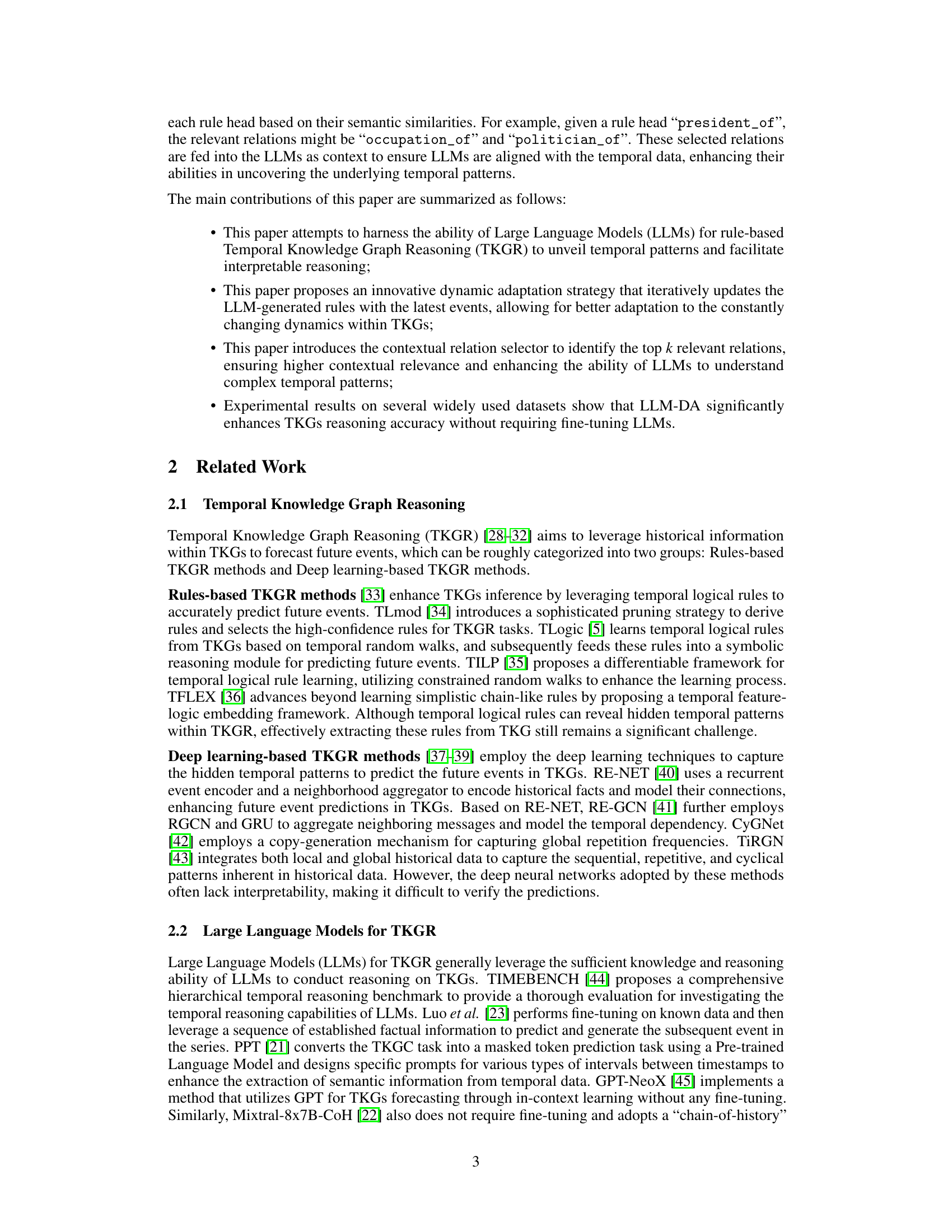

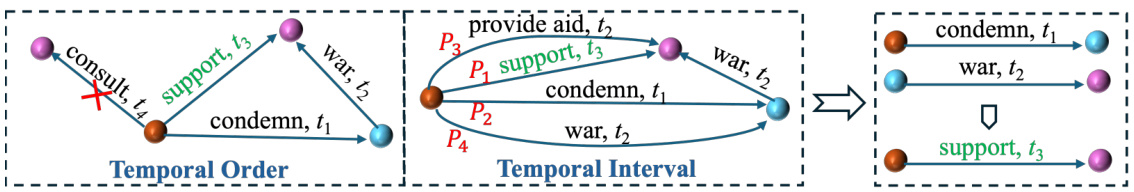

This figure illustrates the constraints applied during the constrained Markovian random walks process used in the LLM-DA method for temporal knowledge graph reasoning. It shows how the temporal order and temporal intervals between events influence the selection of the next node during the random walk. The ‘X’ symbol indicates paths that are disallowed due to violating these constraints, while ‘P’ represents the transition probabilities, highlighting that paths with shorter temporal intervals receive higher weights. This ensures the random walk prioritizes temporally closer nodes, reflecting the evolving nature of the data.

The figure illustrates the LLM-DA framework. LLM-DA uses LLMs to generate rules from historical data, then dynamically updates those rules with current data to improve accuracy. The diagram shows the process, including the distribution of LLM-generated rules from historical data, how these rules are updated based on current data, and the objective distribution of relations in the TKG (Temporal Knowledge Graph).

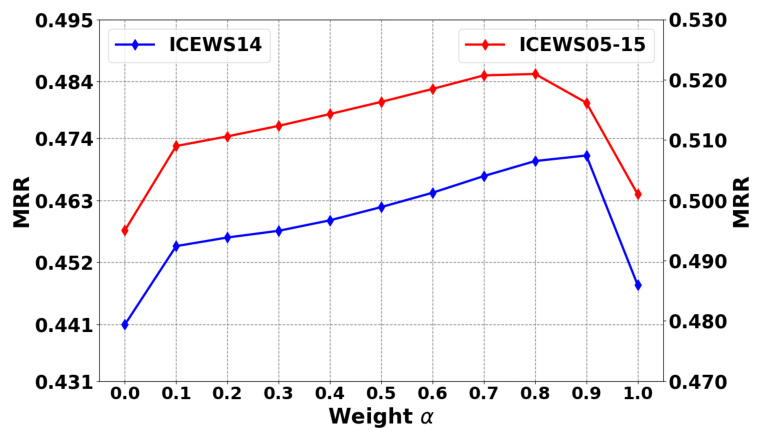

This figure shows the impact of varying the number of iterations in the dynamic adaptation module of the LLM-DA model on the Mean Reciprocal Rank (MRR) metric for two datasets, ICEWS14 and ICEWS05-15. The x-axis represents the number of iterations, while the y-axis shows the MRR values. The graph indicates that increasing the number of iterations generally leads to improved performance on both datasets, although the rate of improvement diminishes with additional iterations.

This figure presents the framework of the LLM-DA method proposed in the paper. It shows the four main stages involved in the process: 1. Temporal Logical Rules Sampling: extracting rules from historical data; 2. Rule Generation: using LLMs to generate general rules based on historical data; 3. Dynamic Adaptation: updating these rules with current data; and 4. Candidate Reasoning: using the updated rules to predict future events. The diagram visually depicts the flow of data and the interactions between different components of the framework.

This figure illustrates the framework of the Large Language Models-guided Dynamic Adaptation (LLM-DA) method. The process begins with extracting temporal logical rules from historical data using constrained Markovian random walks. A contextual relation selector filters the relations, providing context for LLMs to generate high-quality general rules. These rules are then dynamically adapted using current data, ensuring they incorporate the most recent knowledge. Finally, the updated rules are applied for reasoning and prediction on future events.

This figure illustrates the LLM-DA framework, showing the four main stages: 1) Temporal Logical Rules Sampling extracts rules from historical data using constrained Markovian random walks. 2) Rule Generation uses LLMs to generate general rules from the sampled rules and top-k relevant relations selected by the contextual relation selector. 3) Dynamic Adaptation updates these general rules using current data and LLMs. 4) Candidate Reasoning combines rule-based and graph-based reasoning using the updated rules and a graph neural network to generate candidate answers.

This figure illustrates the framework of the LLM-DA model. The model begins by extracting temporal rules from historical data using constrained Markovian random walks. Then it uses LLMs to generate general rules from these extracted rules, incorporating the top-k most important relations identified by a contextual relation selector. These rules are then dynamically updated using current data via another LLM process. Finally, the updated rules are applied to predict future events through a combination of rule-based and graph-based reasoning.

More on tables

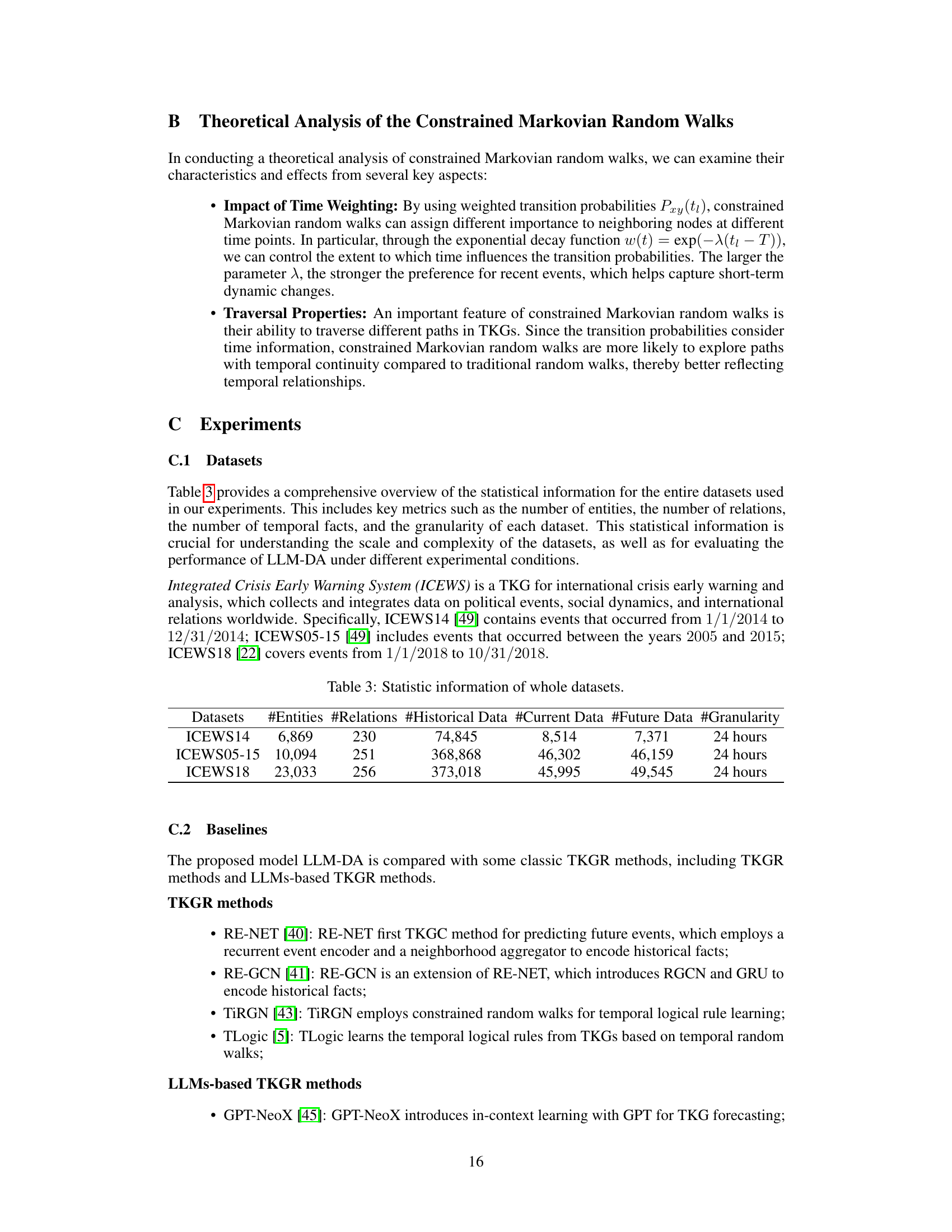

This table presents the results of link prediction experiments on two datasets, ICEWS14 and ICEWS05-15, comparing various methods including traditional TKGR methods and LLMs-based TKGR methods. The best performing methods for each metric are highlighted in bold. LLM-DA results are shown both with and without a replacement graph-based reasoning module from traditional TKGR methods.

This table presents the link prediction results on two datasets, ICEWS14 and ICEWS05-15, comparing various TKGR (Temporal Knowledge Graph Reasoning) methods. It shows the Mean Reciprocal Rank (MRR) and Hit@N (N=1,3,10) metrics for each method. The methods are categorized into traditional TKGR approaches and those using LLMs (Large Language Models). LLM-DA results are shown with different underlying graph reasoning methods (RE-GCN and TiRGN) substituted in for comparison. The best results for each metric on each dataset are highlighted in bold.

This table presents the link prediction results on two datasets, ICEWS14 and ICEWS05-15, comparing various TKGR methods including rule-based and deep learning-based approaches, as well as LLM-based methods. The best performing method for each metric is highlighted in bold. The table shows the performance of different models in terms of Mean Reciprocal Rank (MRR) and Hit@N (where N=1,3,10), indicating the ability of each method to accurately predict links in the temporal knowledge graphs. LLM-DA variants using RE-GCN and TiRGN as baselines are also shown.

This table presents the link prediction results on two datasets, ICEWS14 and ICEWS05-15, comparing various TKGR (Temporal Knowledge Graph Reasoning) methods. The methods are categorized into traditional TKGR approaches and LLM-based methods. LLM-DA, the proposed method, is shown with two variations, replacing its graph reasoning module with different established TKGR techniques. The table highlights the best-performing methods for each metric (MRR, Hit@1, Hit@3, Hit@10) and indicates when a result is not available. The purpose is to demonstrate the improved performance of LLM-DA compared to existing methods.

Full paper#